Installing HBase on Windows

Download HBase

Official site: https://hbase.apache.org/downloads.html

Archive of all versions: https://archive.apache.org/dist/hbase/

Configure HBase

Edit conf\hbase-env.cmd

set JAVA_HOME=D:\Development\Java\jdk1.8

rem HBase ships with a bundled ZooKeeper. Set this to true to use it and let HBase manage ZooKeeper itself.

set HBASE_MANAGES_ZK=true

set HADOOP_HOME=D:\Development\Hadoop

set HBASE_LOG_DIR=D:\Development\HBase\logs
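A wrong path in hbase-env.cmd is an easy mistake to make, so it can help to confirm that the configured directories exist before starting HBase. The following is a minimal sketch using only the JDK. The class name `EnvDirCheck` and the helper `missingDirs` are hypothetical names introduced for illustration, and the paths in `main` are simply copied from the settings above.

```java
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class EnvDirCheck {

    /** Returns the names of all entries whose path is not an existing directory. */
    static List<String> missingDirs(Map<String, String> dirs) {
        List<String> missing = new ArrayList<>();
        for (Map.Entry<String, String> entry : dirs.entrySet()) {
            if (!Files.isDirectory(Paths.get(entry.getValue()))) {
                missing.add(entry.getKey());
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Paths copied from hbase-env.cmd; adjust them to your own machine.
        Map<String, String> dirs = new LinkedHashMap<>();
        dirs.put("JAVA_HOME", "D:\\Development\\Java\\jdk1.8");
        dirs.put("HADOOP_HOME", "D:\\Development\\Hadoop");
        dirs.put("HBASE_LOG_DIR", "D:\\Development\\HBase\\logs");
        System.out.println("Missing directories: " + missingDirs(dirs));
    }
}
```

The check takes the paths as explicit input rather than reading `System.getenv`, because hbase-env.cmd sets these variables for the HBase scripts and not for the shell that runs the check.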

Edit conf\hbase-site.xml

<configuration>

<!-- Root directory for HBase data on HDFS -->

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:9000/hbase</value>

</property>

<!-- Whether to deploy in distributed mode; true means distributed -->

<property>

<name>hbase.cluster.distributed</name>

<value>false</value>

</property>

<!-- ZooKeeper quorum addresses; separate multiple hosts with commas -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>localhost:2181</value>

</property>

<!-- Root znode under which HBase stores its data in ZooKeeper -->

<property>

<name>zookeeper.znode.parent</name>

<value>/hbase</value>

</property>

<!-- ZooKeeper data directory -->

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>D:\Development\HBase\data\tmp\zoo</value>

</property>

<!-- Temporary directory on the local file system -->

<property>

<name>hbase.tmp.dir</name>

<value>D:\Development\HBase\data\tmp</value>

</property>

<!-- Set to false when using the local file system, true when using HDFS -->

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

<!-- Port of the HBase master web UI -->

<property>

<name>hbase.master.info.port</name>

<value>16010</value>

</property>

</configuration>
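When hbase-site.xml is assembled by copy and paste, the same property can end up defined more than once without being noticed. The following is a minimal sketch, using only the JDK's XML parser, that lists property names appearing more than once. The class name `SiteXmlCheck` and the helper `duplicateNames` are hypothetical names introduced for illustration; the file path is passed on the command line.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;

class SiteXmlCheck {

    /** Returns property names that appear more than once in a Hadoop-style site XML. */
    static List<String> duplicateNames(InputStream xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(xml);
        NodeList names = doc.getElementsByTagName("name");
        Set<String> seen = new HashSet<>();
        List<String> duplicates = new ArrayList<>();
        for (int i = 0; i < names.getLength(); i++) {
            String name = names.item(i).getTextContent().trim();
            // Set.add returns false when the name was already seen.
            if (!seen.add(name) && !duplicates.contains(name)) {
                duplicates.add(name);
            }
        }
        return duplicates;
    }

    public static void main(String[] args) throws Exception {
        if (args.length != 1) {
            System.err.println("Usage: java SiteXmlCheck <path to hbase-site.xml>");
            return;
        }
        try (InputStream in = new FileInputStream(args[0])) {
            System.out.println("Duplicate properties: " + duplicateNames(in));
        }
    }
}
```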

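Start HBase

Note: start Hadoop first, then start HBase.

See also: Installing Hadoop 3.x on Windows and developing locally in a Windows environment

Start HBase from the HBase bin directory: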

D:\Development\HBase\bin>start-hbase.cmd

Working with HBase from the shell

D:\Development\HBase\bin>hbase shell

hbase(main):001:0> list

TABLE

0 row(s)

Took 1.7100 seconds

=> []

hbase(main):002:0>

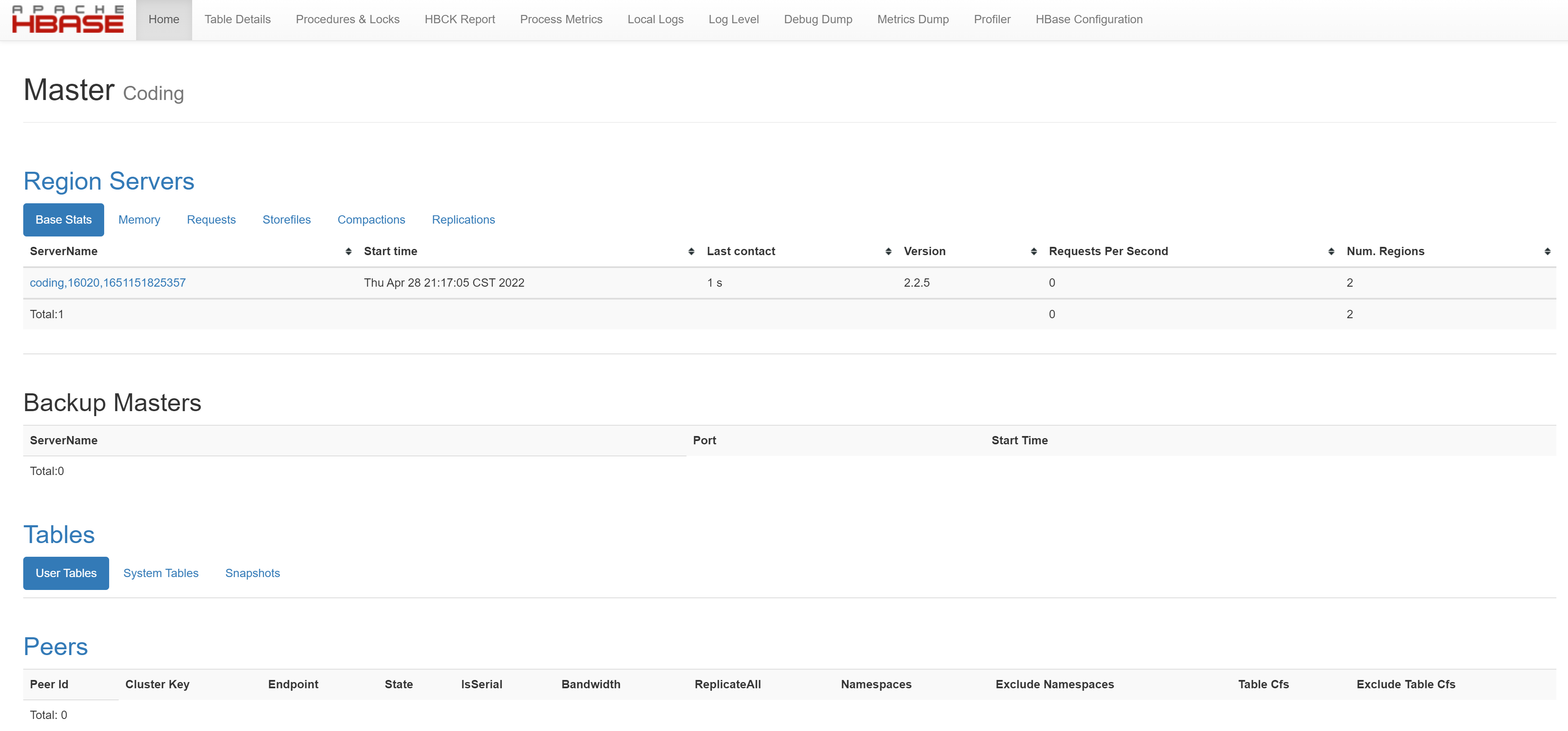

Access the web UI

Open http://localhost:16010 to check the status of HBase.
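The same check can be scripted. The following is a minimal sketch that sends a GET request to the web UI and prints the HTTP status code, using only the JDK. The class name `WebUiCheck` and the helper `statusOf` are hypothetical names introduced for illustration; the URL assumes the `hbase.master.info.port` value of 16010 configured earlier.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;

class WebUiCheck {

    /** Returns the HTTP status code of a GET request, or -1 if the connection fails. */
    static int statusOf(String url) {
        try {
            HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setConnectTimeout(3000);
            conn.setReadTimeout(3000);
            conn.setRequestMethod("GET");
            try {
                return conn.getResponseCode();
            } finally {
                conn.disconnect();
            }
        } catch (IOException e) {
            // Connection refused, timeout, and similar failures end up here.
            return -1;
        }
    }

    public static void main(String[] args) {
        System.out.println("HTTP status: " + statusOf("http://localhost:16010/"));
    }
}
```

A result of -1 suggests that the master is not running or that the port differs from the configured one.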

Using the HBase Java API

Add dependencies

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>3.1.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.1.3</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>2.2.5</version>

</dependency>

<!-- hadoop-auth is added to avoid: java.lang.NoSuchMethodError: 'void org.apache.hadoop.security.HadoopKerberosName.setRuleMechanism(java.lang.String)' -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-auth</artifactId>

<version>3.1.3</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>2.2.5</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.13</version>

</dependency>

</dependencies>

Add the Hadoop and HBase configuration files to the project's resources directory

core-site.xml

hbase-site.xml

hdfs-site.xml

mapred-site.xml

yarn-site.xml
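`HBaseConfiguration.create()` loads hbase-site.xml from the classpath, so a file that is missing from the resources directory is silently replaced by defaults. The following is a minimal sketch, using only the JDK, that reports which of the files listed above are not visible on the classpath. The class name `ClasspathConfigCheck` and the helper `missingResources` are hypothetical names introduced for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class ClasspathConfigCheck {

    /** Returns the resource names that cannot be found on the current classpath. */
    static List<String> missingResources(List<String> names) {
        ClassLoader loader = ClasspathConfigCheck.class.getClassLoader();
        List<String> missing = new ArrayList<>();
        for (String name : names) {
            if (loader.getResource(name) == null) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        List<String> expected = Arrays.asList(
                "core-site.xml", "hbase-site.xml", "hdfs-site.xml",
                "mapred-site.xml", "yarn-site.xml");
        System.out.println("Not on classpath: " + missingResources(expected));
    }
}
```

Run it from within the project so that it sees the same classpath as the application or test.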

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.NamespaceDescriptor;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import org.junit.After;

import org.junit.Before;

import org.junit.Test;

public class HBaseTest {

/**

* HBase Admin instance

*/

private Admin admin;

/**

* HBase connection

*/

private Connection connection;

/**

* Initialization

*/

@Before

public void init() throws IOException {

Configuration configuration = HBaseConfiguration.create();

this.connection = ConnectionFactory.createConnection(configuration);

this.admin = connection.getAdmin();

}

/**

* Release resources

*/

@After

public void destroy() throws IOException {

if (admin != null) {

admin.close();

}

if (connection != null) {

connection.close();

}

}

/**

* List the names of all tables

*/

@Test

public void listTables() throws IOException {

TableName[] tableNames = admin.listTableNames();

for (TableName tableName : tableNames) {

System.out.println("tableName:" + tableName);

}

}

}

Key exceptions

Exception 1:

util.FSUtils: Waiting for dfs to exit safe mode...

Leave Hadoop safe mode:

hdfs dfsadmin -safemode leave

Exception 2:

Caused by: org.apache.hbase.thirdparty.io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection refused: no further information: Coding/192.168.138.245:16000

Caused by: java.net.ConnectException: Connection refused: no further information

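This was the most frustrating problem and took far too long to track down: the Hadoop and HBase versions were incompatible, and switching versions fixed it. This setup uses Hadoop 3.1.3 with HBase 2.2.5.

Note: the version compatibility information on the official site is not fully reliable; bugs can still occur.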
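When a Connection refused error like the one in Exception 2 appears, a useful first step is to confirm whether the relevant ports are listening at all, since a master that exited during startup leaves port 16000 closed. The following is a minimal sketch using only the JDK. The class name `PortCheck` and the helper `isListening` are hypothetical names introduced for illustration; the ports are the ZooKeeper, master RPC, and web UI ports that appear in this setup.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused connections and timeouts both count as "not listening".
            return false;
        }
    }

    public static void main(String[] args) {
        // 2181: ZooKeeper, 16000: master RPC, 16010: master web UI (values from this setup).
        int[] ports = {2181, 16000, 16010};
        for (int port : ports) {
            System.out.println("localhost:" + port + " listening: "
                    + isListening("localhost", port, 2000));
        }
    }
}
```

If port 16000 is closed while Hadoop is running, the master log under HBASE_LOG_DIR is the place to look for the underlying cause.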