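1 Environment Overview

Building the ES cluster relies on the following: a Kubernetes (k8s) cluster, the StatefulSet controller, a Service of type NodePort, PV, PVC, and volumeClaimTemplates (templates for volume claims).

Pods created by the StatefulSet controller are well suited to distributed storage systems. The defining characteristic is that each Pod holds different data, so the Pods cannot share a single volume. Note that a StatefulSet numbers its Pods starting from 0, following the pattern ${statefulset name}-${ordinal}. If a StatefulSet Pod is deleted by mistake, the StatefulSet automatically recreates a Pod with the same network identity as the original. In addition, Pods are started and terminated in order.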
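
The naming rule can be illustrated with a small shell sketch. The function name `statefulset_pod_names` is made up for this illustration; the StatefulSet name `es-cluster` and the replica count of 3 are the values used in es-cluster.yaml in section 5.

```shell
# Print the Pod names a StatefulSet creates: <name>-<ordinal>, ordinals starting at 0.
statefulset_pod_names() {
  name=$1
  replicas=$2
  i=0
  while [ "$i" -lt "$replicas" ]; do
    printf '%s-%s\n' "$name" "$i"
    i=$((i + 1))
  done
}

# Prints es-cluster-0, es-cluster-1 and es-cluster-2, one per line.
statefulset_pod_names es-cluster 3
```

These are the same names that appear in cluster.initial_master_nodes in section 5.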

2 Create the Namespace

# es-namespace.yaml
# Note: all controllers, Services, etc. must be created in the es-ns namespace.
# When querying them, pass the namespace (flag: -n es-ns).
apiVersion: v1
kind: Namespace
metadata:
  name: es-ns

3 Create NFS and the StorageClass
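
The commands in 3.1 restart `nfs-kernel-server`, which is the NFS server package on Debian and Ubuntu. Assuming such hosts (the article does not name the distribution, so this is an assumption), a sketch of installing the prerequisites might look like the following. The function names are hypothetical. `nfs-common` is the client package that Kubernetes nodes typically need in order to mount NFS volumes.

```shell
# Assumption: Debian/Ubuntu hosts with apt-get available.

# Run on the NFS server (192.168.108.100 in this article).
install_nfs_server() {
  sudo apt-get update &&
    sudo apt-get install -y nfs-kernel-server
}

# Run on every Kubernetes node that will mount the share.
install_nfs_client() {
  sudo apt-get update &&
    sudo apt-get install -y nfs-common
}
```

Call `install_nfs_server` on the NFS host and `install_nfs_client` on each node before continuing with 3.1.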

3.1 Create the NFS Share

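# Create the directory
sudo mkdir -p /data/es
# Open up the permissions
sudo chmod 777 /data/es
# Edit the exports file
sudo vim /etc/exports
# Add the following line
/data/es 192.168.108.*(rw,sync,no_subtree_check)
# Restart the service
sudo service nfs-kernel-server restart
# List the exported directories
sudo showmount -e 192.168.108.100
# Output like the following means the export was created successfully
Export list for 192.168.108.100:
/data/es 192.168.108.*

3.2 Set Permissions for the NFS Provisioner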

# es-nfs-client-provisioner-authority.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: es-ns
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: es-ns
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: es-ns
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: es-ns
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: es-ns
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

3.3 Create the NFS Provisioner
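
Before deploying the provisioner, the permissions from 3.2 can be applied and spot-checked. This is only a sketch: it assumes `kubectl` is configured for the target cluster, that the es-ns namespace from section 2 already exists, and that the current user is allowed to impersonate ServiceAccounts. The function name is hypothetical.

```shell
# Apply the RBAC manifest from 3.2 and check one of the granted permissions.
apply_provisioner_rbac() {
  kubectl apply -f es-nfs-client-provisioner-authority.yaml || return 1
  # Prints "yes" if the ServiceAccount may create PersistentVolumes.
  kubectl auth can-i create persistentvolumes \
    --as=system:serviceaccount:es-ns:nfs-client-provisioner
}
```

Run `apply_provisioner_rbac` from the directory that holds the manifest.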

# es-nfs-client-provisioner.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  namespace: es-ns
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            # Name of the provisioner
            - name: PROVISIONER_NAME
              value: es-nfs-provisioner
            # NFS server address; set this to your own IP
            - name: NFS_SERVER
              value: 192.168.108.100
            # Shared directory on the NFS server
            - name: NFS_PATH
              value: /data/es
      volumes:
        - name: nfs-client-root
          nfs:
            # Set this to your own IP
            server: 192.168.108.100
            # The shared directory on the NFS server
            path: /data/es

3.4 Create the StorageClass
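
Before creating the StorageClass, the provisioner from 3.3 can be deployed and checked. The sketch below assumes `kubectl` is configured for the cluster; the function name and the 120-second timeout are arbitrary choices for illustration.

```shell
# Deploy the NFS provisioner from 3.3 and wait until its Deployment is rolled out.
deploy_nfs_provisioner() {
  kubectl apply -f es-nfs-client-provisioner.yaml || return 1
  kubectl -n es-ns rollout status deployment/nfs-client-provisioner --timeout=120s
}
```

If the rollout does not finish, `kubectl -n es-ns describe pod -l app=nfs-client-provisioner` usually shows whether the NFS mount failed.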

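# es-nfs-storage-class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: es-nfs-storage
  # StorageClass is cluster-scoped, so this namespace field has no effect
  namespace: es-ns
# Name of the provisioner
# Must match env.PROVISIONER_NAME.value in es-nfs-client-provisioner.yaml
provisioner: es-nfs-provisioner
# Allow PVCs to be expanded after creation
allowVolumeExpansion: true
parameters:
  # Deletion policy: with "true", the provisioner archives the volume's directory on the NFS share when the PVC is deleted, instead of removing the data
  archiveOnDelete: "true"

4 Create the ES Service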
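
Before creating the Service, the StorageClass from 3.4 can be exercised with a throwaway PVC. This is a sketch under assumptions: the claim name `es-nfs-test-claim`, its 1Mi size, and both function names are invented for the test and are not part of the original setup.

```shell
# Create the StorageClass and a small test claim that uses it.
create_test_claim() {
  kubectl apply -f es-nfs-storage-class.yaml || return 1
  kubectl apply -f - <<'EOF' || return 1
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: es-nfs-test-claim
  namespace: es-ns
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: es-nfs-storage
  resources:
    requests:
      storage: 1Mi
EOF
  # STATUS should change to Bound once the provisioner has created a PV.
  kubectl -n es-ns get pvc es-nfs-test-claim
}

# Remove the test claim again.
delete_test_claim() {
  kubectl -n es-ns delete pvc es-nfs-test-claim
}
```

A claim that stays in Pending points at the provisioner; its logs are available through `kubectl -n es-ns logs deployment/nfs-client-provisioner`.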

# es-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: es-cluster-svc
  namespace: es-ns
spec:
  selector:
    # Must match spec.selector.matchLabels in es-cluster.yaml
    app: es-net-data
  # Service type
  type: NodePort
  ports:
    - name: rest
      # Service port
      port: 9200
      # Application port (Pod port)
      targetPort: 9200
      # Port exposed on each node; the allowed range is 30000-32767
      nodePort: 32000

5 Create the ES Controller

# es-cluster.yaml

apiVersion: apps/v1

# 设置控制器

kind: StatefulSet

metadata:

name: es-cluster

namespace: es-ns

spec:

# 必须设置

serviceName: es-cluster-svc

# 设置副本数

replicas: 3

# 设置选择器

selector:

# 设置标签

matchLabels:

app: es-net-data

template:

metadata:

# 此处必须要与上面的matchLabels相同

labels:

app: es-net-data

spec:

# 初始化容器

# 初始化容器的作用是在应用容器启动之前做准备工作,每个init容器都必须在下一个启动之前成功完成

initContainers:

- name: increase-vm-max-map

image: busybox:1.32

command: ["sysctl", "-w", "vm.max_map_count=262144"]

securityContext:

privileged: true

- name: increase-fd-ulimit

image: busybox:1.32

command: ["sh", "-c", "ulimit -n 65536"]

securityContext:

privileged: true

# 初始化容器结束后,才能继续创建下面的容器

containers:

- name: es-container

image: elasticsearch:7.6.2

ports:

# 容器内端口

- name: rest

containerPort: 9200

protocol: TCP

# 限制CPU数量

resources:

limits:

cpu: 1000m

requests:

cpu: 100m

# 设置挂载目录

volumeMounts:

- name: es-data

mountPath: /usr/share/elasticsearch/data

# 设置环境变量

env:

# 自定义集群名

- name: cluster.name

value: k8s-es

# 定义节点名,使用metadata.name名称

- name: node.name

valueFrom:

fieldRef:

fieldPath: metadata.name

# 初始化集群时,ES从中选出master节点

- name: cluster.initial_master_nodes

# 对应metadata.name名称加编号,编号从0开始

value: "es-cluster-0,es-cluster-1,es-cluster-2"

- name: discovery.zen.minimum_master_nodes

value: "2"

# 发现节点的地址,discovery.seed_hosts的值应包括所有master候选节点

# 如果discovery.seed_hosts的值是一个域名,且该域名解析到多个IP地址,那么es将处理其所有解析的IP地址。

- name: discovery.seed_hosts

value: "es-cluster-svc"

# 配置内存

- name: ES_JAVA_OPTS

value: "-Xms1g -Xmx1g"

- name: network.host

value: "0.0.0.0"

volumeClaimTemplates:

- metadata:

# 对应容器中volumeMounts.name

name: es-data

labels:

app: es-volume

spec:

# 存储卷可以被单个节点读写

accessModes: [ "ReadWriteOnce" ]

# 对应es-nfs-storage-class.yaml中的metadata.name

storageClassName: es-nfs-storage

# 申请资源的大小

resources:

requests:

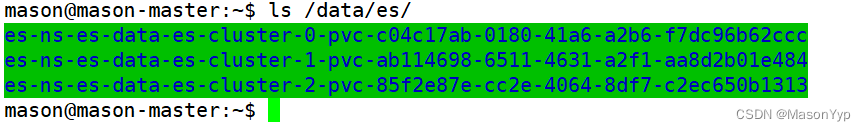

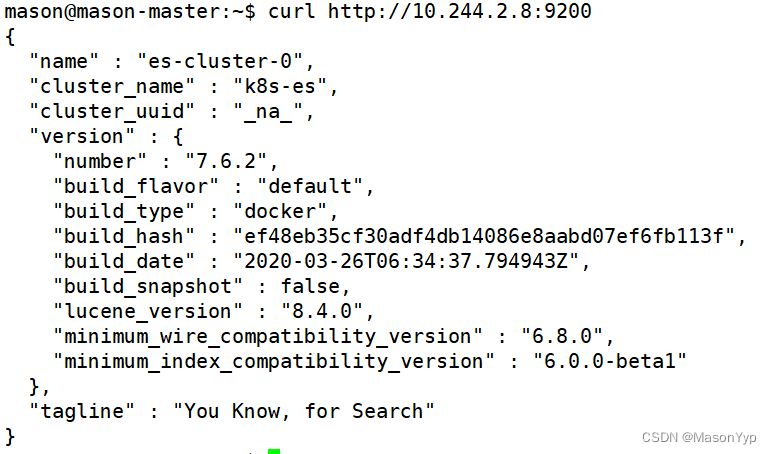

storage: 10Gi6 截图

Cluster nodes

Access through the Service port

Access through a node

NFS directory
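
The same four checks can also be run from the command line. The sketch below assumes the manifests from sections 4 and 5 have been applied, that `kubectl` and `curl` are available, and that the first argument is the IP of any Kubernetes node (not given in the article). The function names are hypothetical.

```shell
# Check the Pods, their claims, and the ES REST API behind NodePort 32000.
verify_es_cluster() {
  node_ip=$1
  # The Pods es-cluster-0, es-cluster-1 and es-cluster-2 should be Running.
  kubectl -n es-ns get pods -o wide
  # One PVC per Pod, created from volumeClaimTemplates.
  kubectl -n es-ns get pvc
  # List the ES nodes that joined the cluster.
  curl -s "http://${node_ip}:32000/_cat/nodes?v"
  # Overall cluster health.
  curl -s "http://${node_ip}:32000/_cluster/health?pretty"
}

# Run on the NFS server: list the directories created under the share.
list_nfs_share() {
  ls -l "${1:-/data/es}"
}
```

For example, `verify_es_cluster 192.168.108.100` would apply if the NFS host is also a cluster node, which the article does not state.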