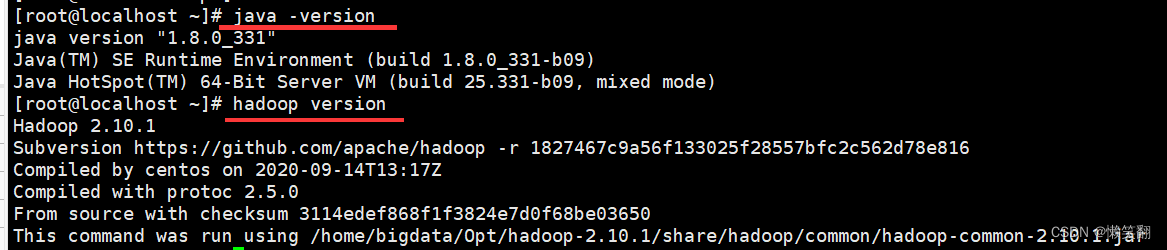

Prerequisites:

1. Check the Java installation

java -version

2. Check the Hadoop installation

hadoop version

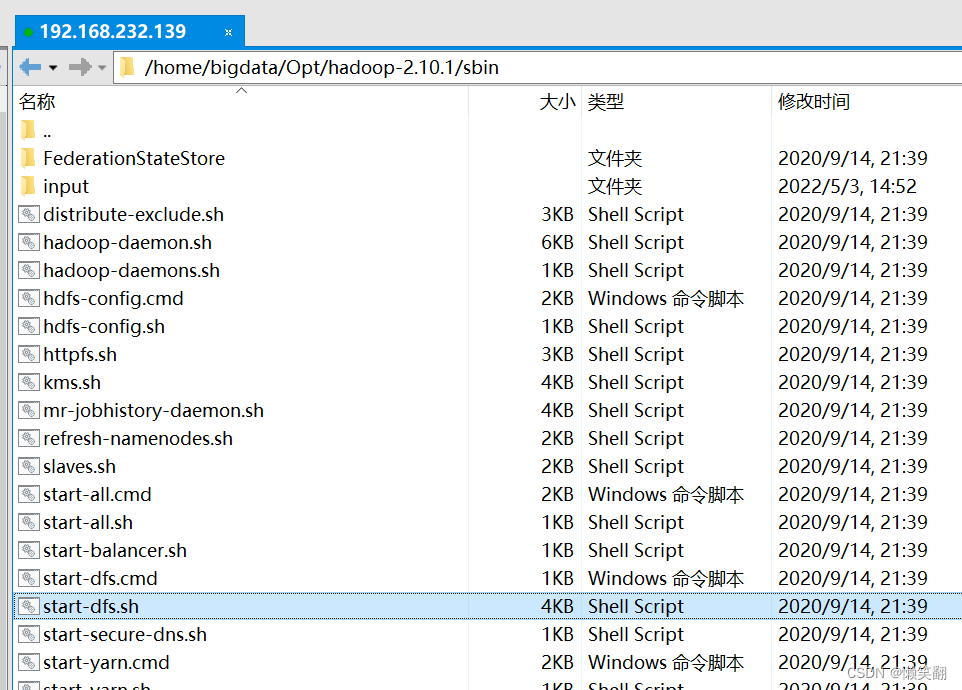
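The two checks above can also be wrapped in a small helper that reports whether each command is on the PATH before you run it. This is only a sketch: `check_tools` is a name made up for this example, not something shipped with Java or Hadoop.

```shell
# Report whether each required command is on PATH.
# Prints one "ok"/"missing" line per tool; returns non-zero if any is missing.
check_tools() {
    rc=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "ok: $tool"
        else
            echo "missing: $tool"
            rc=1
        fi
    done
    return "$rc"
}

# Usage for this walkthrough:
#   check_tools java hadoop && java -version && hadoop version
```

If either tool is reported as missing, the environment variables in /etc/profile are the first thing to look at.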

3. Change to Hadoop's /home/bigdata/Opt/hadoop-2.10.1/sbin directory:

cd /home/bigdata/Opt/hadoop-2.10.1/sbin

4. Start the HDFS services with start-dfs.sh

start-dfs.sh

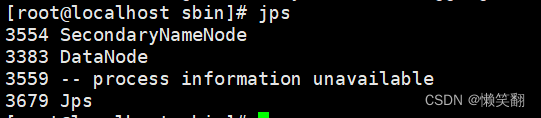

5. List the running services

jps

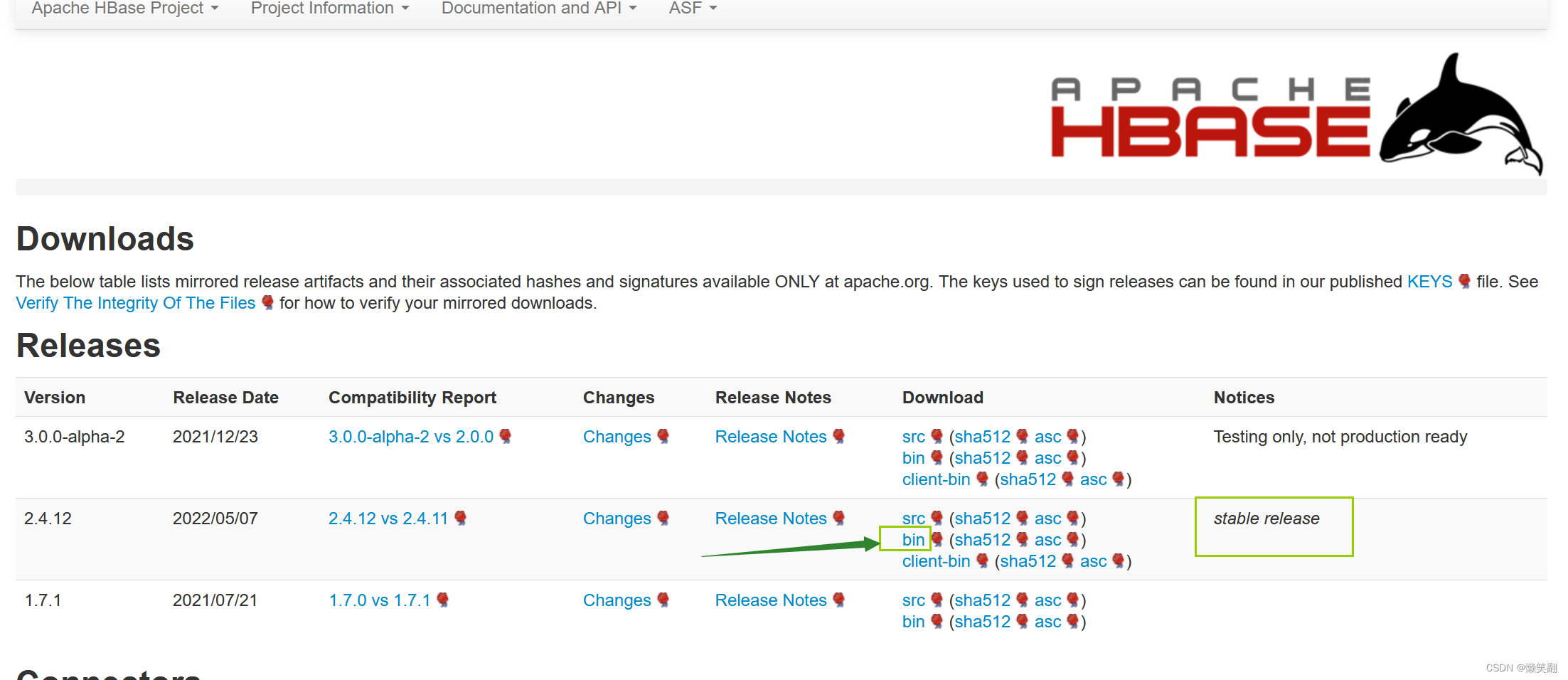
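Rather than reading the jps listing by eye, you can compare it against the daemons you expect. On a single-node Hadoop 2.x setup, start-dfs.sh normally starts NameNode, DataNode and SecondaryNameNode. The helper below is a minimal sketch; `missing_daemons` is a hypothetical name, and it only assumes that jps prints one "<pid> <ClassName>" pair per line.

```shell
# Print every expected daemon name that does not appear in jps-style
# output read from stdin ("<pid> <ClassName>" per line).
missing_daemons() {
    listing=$(cat)
    for name in "$@"; do
        # Compare against the whole second field so that "NameNode"
        # does not match inside "SecondaryNameNode".
        if ! printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            echo "$name"
        fi
    done
}

# Usage for this walkthrough:
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
```

Empty output means every expected daemon was found; any name printed is one that did not start.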

1. Download HBase from the official site

Apache HBase – Apache HBase Downloads

Direct download link: https://dlcdn.apache.org/hbase/2.4.12/hbase-2.4.12-bin.tar.gz

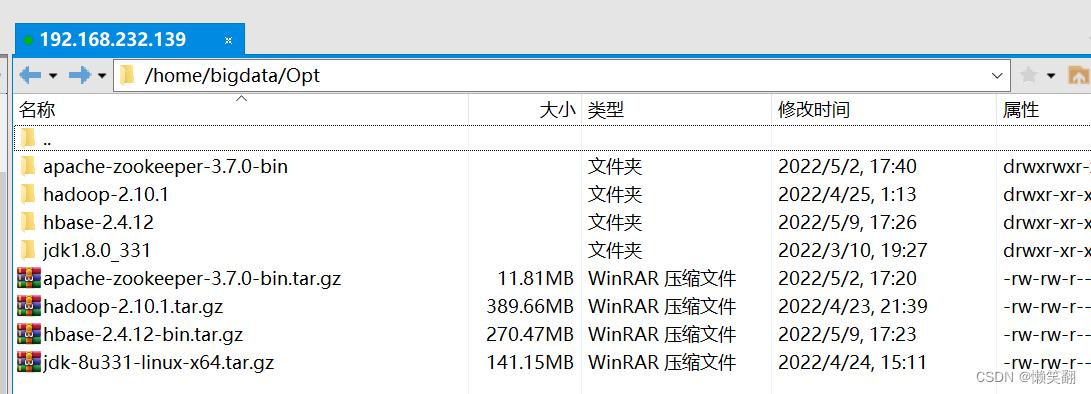
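If you download the archive on the server itself instead of uploading it, it is worth comparing its SHA-512 digest with the one published on the download page. The sketch below assumes GNU coreutils (`sha512sum`) is installed; `verify_sha512` is a hypothetical helper name, and the expected digest has to be copied from the download page yourself.

```shell
# Compare a file's SHA-512 digest with an expected hex string.
# Whitespace in the expected value is ignored and case is folded,
# because published digests are often upper-case and split into groups.
verify_sha512() {
    file=$1
    expected=$(printf '%s' "$2" | tr -d ' \n\t' | tr 'A-F' 'a-f')
    actual=$(sha512sum "$file" | awk '{ print $1 }')
    [ "$actual" = "$expected" ]
}

# Usage for this walkthrough (the digest is a placeholder, not a real value):
#   wget https://dlcdn.apache.org/hbase/2.4.12/hbase-2.4.12-bin.tar.gz
#   verify_sha512 hbase-2.4.12-bin.tar.gz "<digest from the download page>" \
#       && echo "checksum ok"
```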

2. Open XFTP and upload the HBase archive to the /home/bigdata/Opt directory

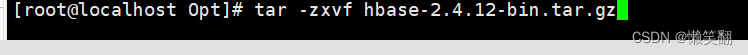

3. Extract hbase-2.4.12-bin.tar.gz into the current directory

cd /home/bigdata/Opt

tar -zxvf hbase-2.4.12-bin.tar.gz

[root@localhost Opt]# tar -zxvf hbase-2.4.12-bin.tar.gz

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/ScanResultConsumerBase.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/ServerType.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/ServiceCaller.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/SnapshotDescription.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/SnapshotType.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/Table.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/TableBuilder.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/TableDescriptor.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/TableDescriptorBuilder.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/TableDescriptorUtils.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/WrongRowIOException.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/backoff/ClientBackoffPolicy.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/backoff/ExponentialClientBackoffPolicy.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/locking/EntityLock.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/replication/ReplicationAdmin.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/replication/TableCFs.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/client/security/SecurityCapability.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/coprocessor/CoprocessorException.html

hbase-2.4.12/docs/apidocs/src-html/org/apache/hadoop/hbase/errorhandling/ForeignException.html

······

······

······

hbase-2.4.12/lib/jdk11/ha-api-3.1.12.jar

hbase-2.4.12/lib/jdk11/saaj-impl-1.5.1.jar

hbase-2.4.12/lib/jdk11/jakarta.activation-api-1.2.1.jar

hbase-2.4.12/lib/jdk11/jaxws-tools-2.3.2.jar

hbase-2.4.12/lib/jdk11/jaxb-xjc-2.3.2.jar

hbase-2.4.12/lib/jdk11/jaxb-jxc-2.3.2.jar

hbase-2.4.12/lib/jdk11/jaxws-eclipselink-plugin-2.3.2.jar

hbase-2.4.12/lib/jdk11/jakarta.mail-api-1.6.3.jar

hbase-2.4.12/lib/jdk11/jakarta.persistence-api-2.2.2.jar

hbase-2.4.12/lib/jdk11/org.eclipse.persistence.moxy-2.7.4.jar

hbase-2.4.12/lib/jdk11/org.eclipse.persistence.core-2.7.4.jar

hbase-2.4.12/lib/jdk11/org.eclipse.persistence.asm-2.7.4.jar

hbase-2.4.12/lib/jdk11/sdo-eclipselink-plugin-2.3.2.jar

hbase-2.4.12/lib/jdk11/org.eclipse.persistence.sdo-2.7.4.jar

hbase-2.4.12/lib/jdk11/commonj.sdo-2.1.1.jar

hbase-2.4.12/lib/jdk11/release-documentation-2.3.2-docbook.zip

hbase-2.4.12/lib/jdk11/samples-2.3.2.zip

hbase-2.4.12/lib/jdk11/jakarta.xml.ws-api-2.3.2.jar

hbase-2.4.12/lib/jdk11/jakarta.xml.bind-api-2.3.2.jar

hbase-2.4.12/lib/jdk11/jakarta.xml.soap-api-1.4.1.jar

hbase-2.4.12/lib/jdk11/jakarta.jws-api-1.1.1.jar

hbase-2.4.12/lib/test/hamcrest-core-1.3.jar

hbase-2.4.12/lib/test/junit-4.13.2.jar

hbase-2.4.12/lib/test/mockito-core-2.28.2.jar
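The `-v` flag is what produces the long file listing above. If you do not need it, a quieter variant is to drop `-v` and simply confirm that the top-level directory exists afterwards. This is a sketch only; `extract_quiet` is a hypothetical helper name.

```shell
# Extract a .tar.gz archive without the per-file listing, then confirm
# that the expected top-level directory exists under the destination.
extract_quiet() {
    archive=$1
    dest=$2
    expected_dir=$3
    tar -zxf "$archive" -C "$dest" || return 1
    [ -d "$dest/$expected_dir" ]
}

# Usage for this walkthrough:
#   extract_quiet /home/bigdata/Opt/hbase-2.4.12-bin.tar.gz \
#       /home/bigdata/Opt hbase-2.4.12 && echo "extracted"
```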

在 XFTP 中刷新可查看解压结果

?

HBase的路径为(稍后配置会用到):

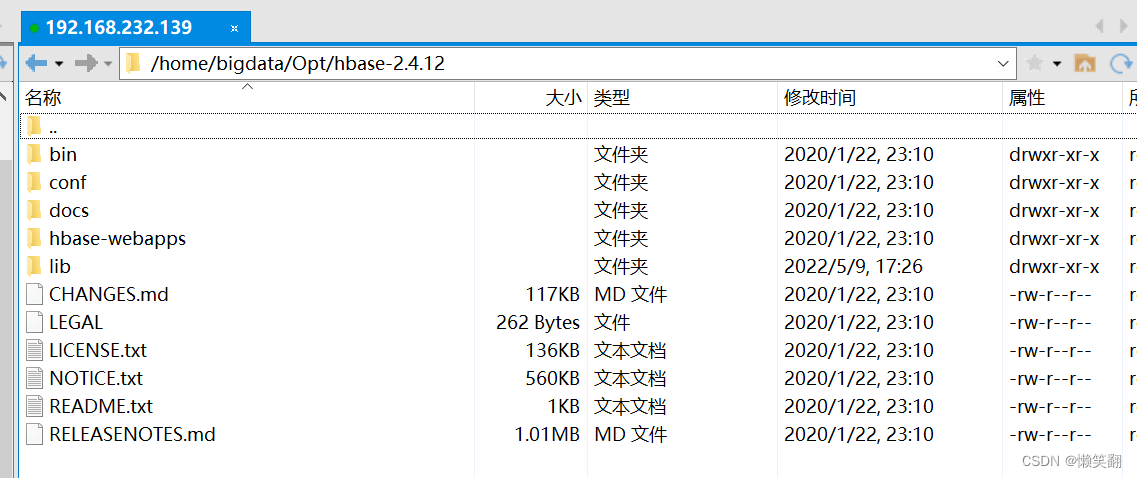

/home/bigdata/Opt/hbase-2.4.124、增加环境变量

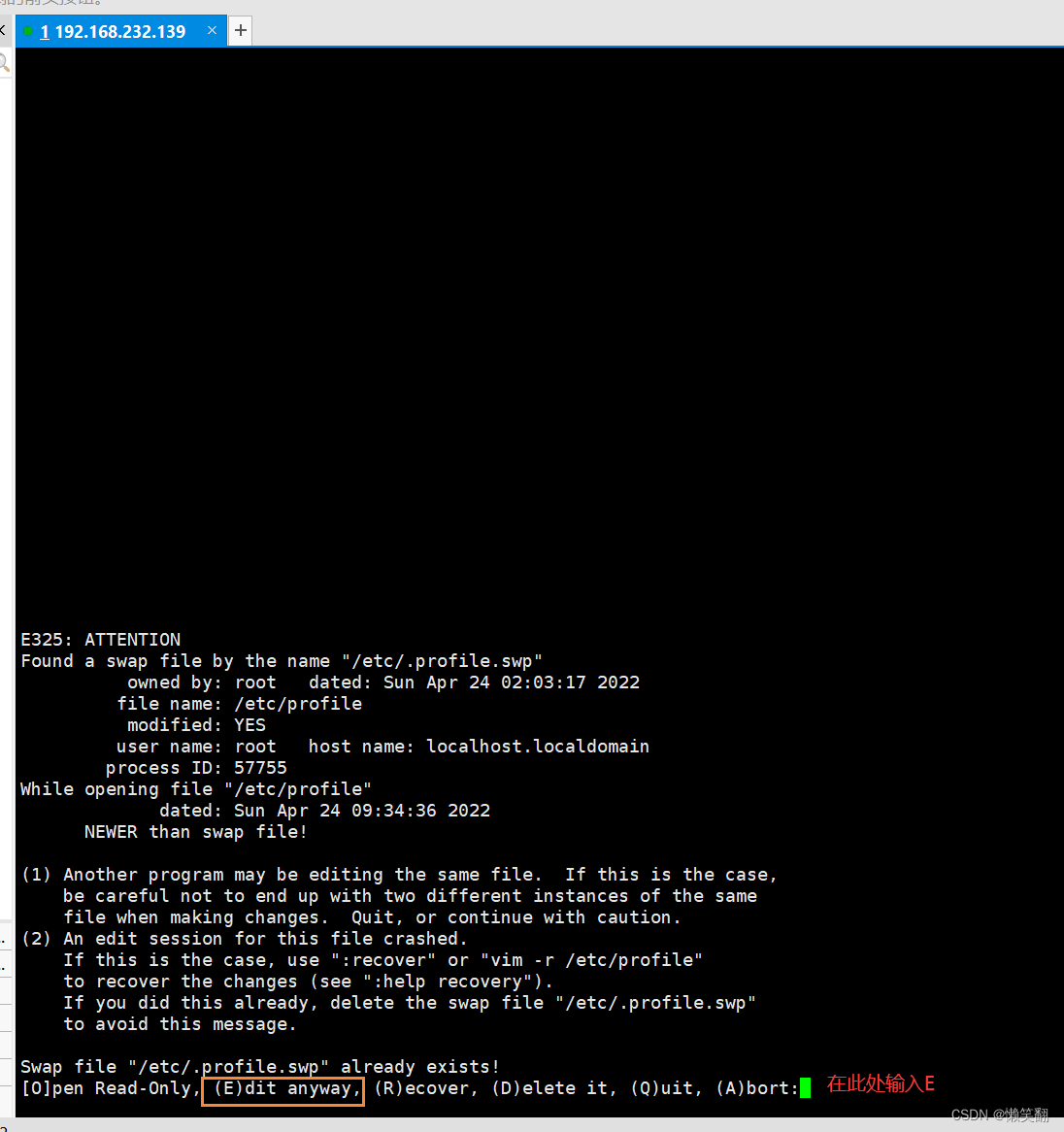

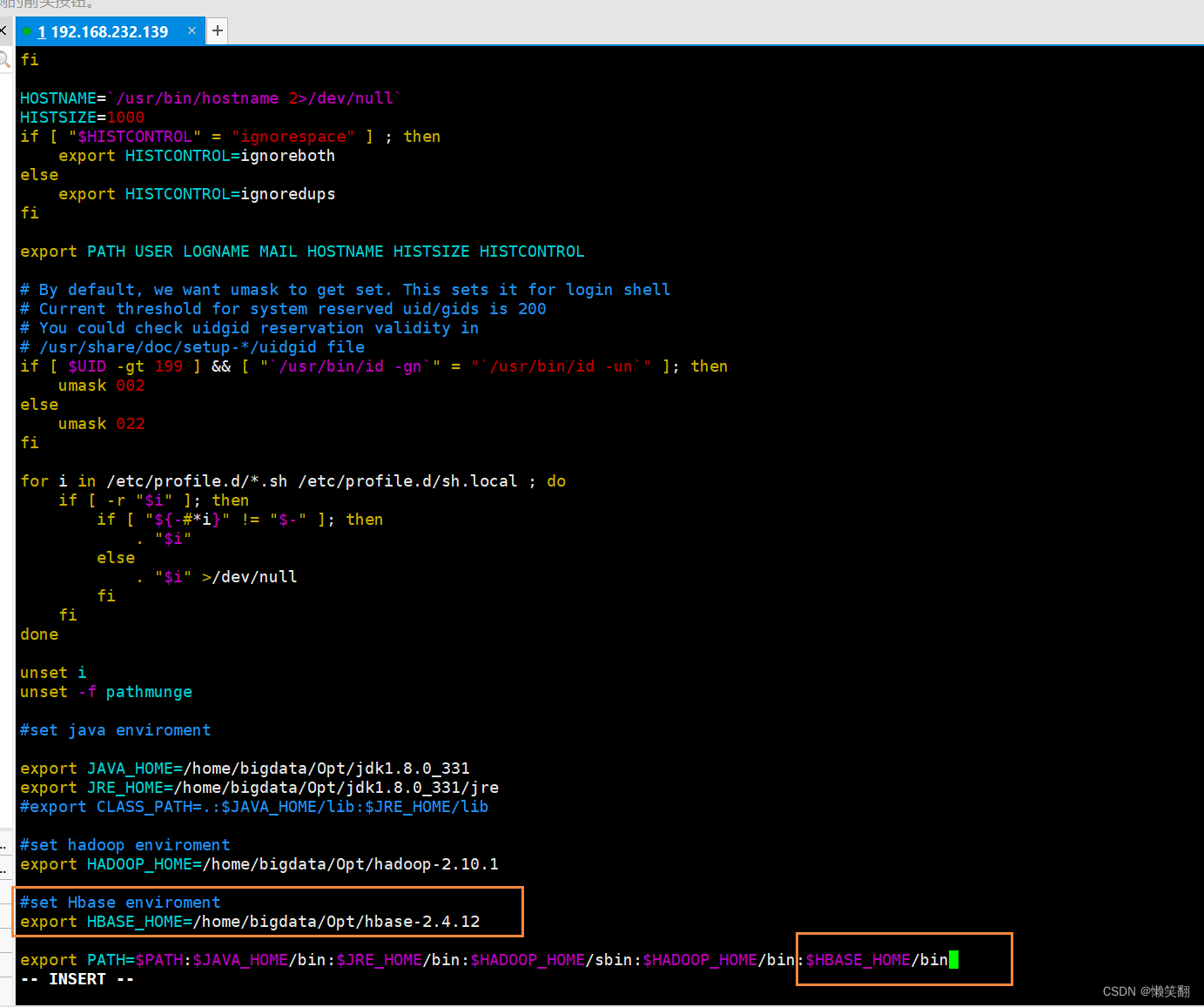

vim /etc/profile

Press the ↓ key to move to the bottom of the file, where the JDK settings were added earlier, and add the HBase settings there:

Press i to enter insert mode

# set HBase environment

export HBASE_HOME=/home/bigdata/Opt/hbase-2.4.12

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$HBASE_HOME/bin

Press ESC to leave insert mode, then type :wq to save and quit

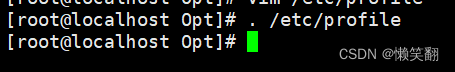

5. Reload the environment variables

. /etc/profile
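Editing /etc/profile by hand works, but if you repeat the setup it is easy to end up with duplicate lines. A minimal sketch of a scripted alternative is below; `append_once` is a hypothetical helper, and appending only `$HBASE_HOME/bin` to PATH is my simplification of the longer PATH line used in step 4.

```shell
# Append a line to a file only if that exact line is not already present,
# so re-running the setup does not duplicate entries.
append_once() {
    line=$1
    file=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage for this walkthrough (run as root, then reload with ". /etc/profile"):
#   append_once 'export HBASE_HOME=/home/bigdata/Opt/hbase-2.4.12' /etc/profile
#   append_once 'export PATH=$PATH:$HBASE_HOME/bin' /etc/profile
```

After reloading, `echo $HBASE_HOME` should print the HBase path from step 3.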

6. Change to the /home/bigdata/Opt/hbase-2.4.12/conf directory in preparation for steps 7 and 8:

cd /home/bigdata/Opt/hbase-2.4.12/conf

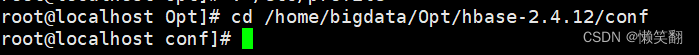

7. Edit the hbase-env.sh configuration file

vim hbase-env.sh

Press i to enter insert mode

# set JAVA_HOME

export JAVA_HOME=/home/bigdata/Opt/jdk1.8.0_331

# Tell HBase whether it should manage it's own instance of ZooKeeper or not.

# export HBASE_MANAGES_ZK=true

export HBASE_MANAGES_ZK=false

Press ESC to leave insert mode, then type :wq to save and quit

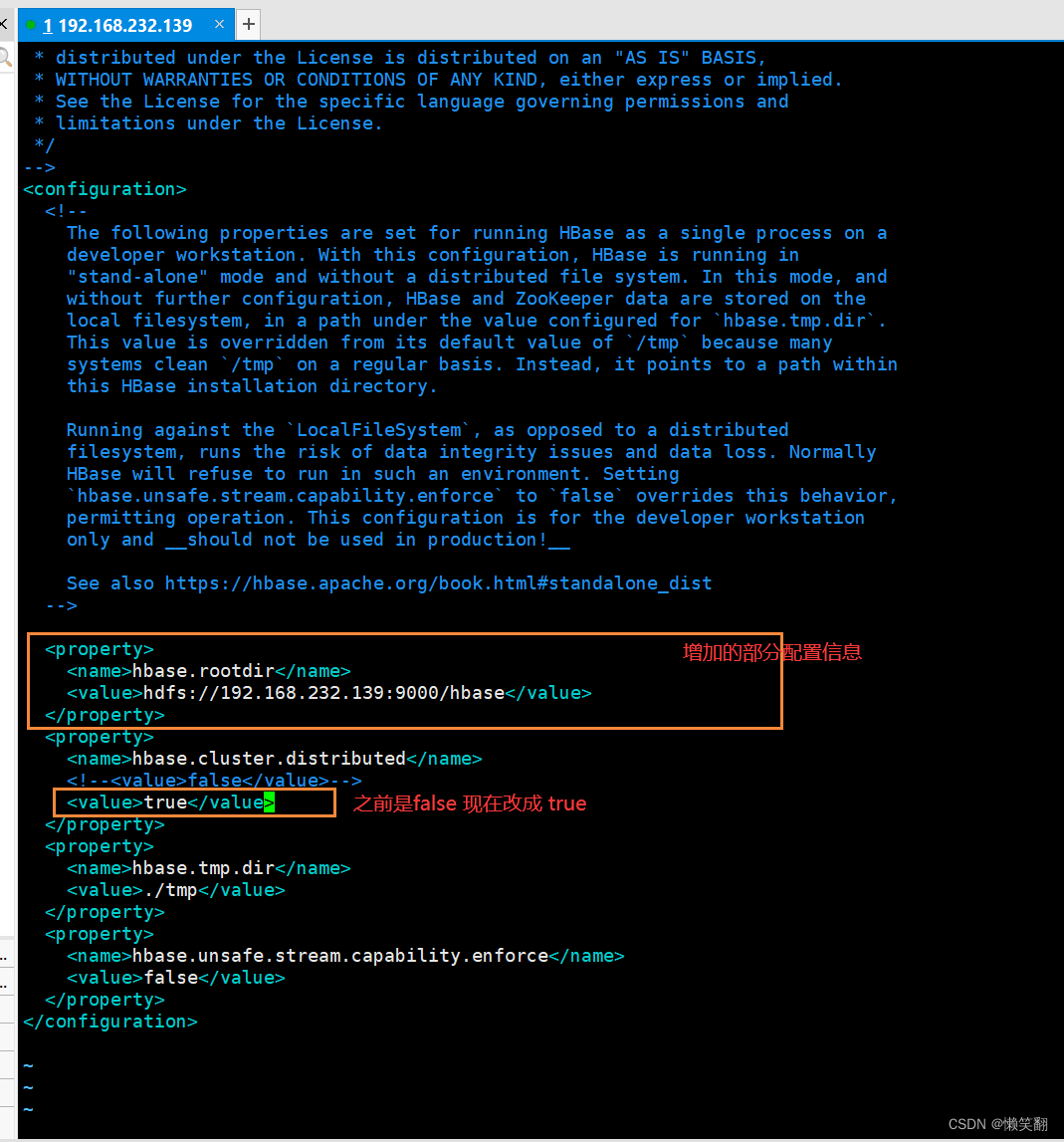
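Because hbase-env.sh now contains both a commented-out and an active HBASE_MANAGES_ZK line, it can be useful to double-check which value is actually in effect. Note that with HBASE_MANAGES_ZK=false HBase does not start its own ZooKeeper, so it expects one to be running separately. The helper below is a rough sketch; `effective_export` is a hypothetical name, and it only understands plain `export VAR=value` lines.

```shell
# Print the value of the last uncommented "export VAR=..." line in a file.
# Lines starting with "#" are ignored because the pattern is anchored
# at the start of the line.
effective_export() {
    file=$1
    var=$2
    sed -n "s/^[[:space:]]*export[[:space:]]\{1,\}$var=//p" "$file" | tail -n 1
}

# Usage for this walkthrough:
#   effective_export /home/bigdata/Opt/hbase-2.4.12/conf/hbase-env.sh HBASE_MANAGES_ZK
#   effective_export /home/bigdata/Opt/hbase-2.4.12/conf/hbase-env.sh JAVA_HOME
```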

8. Edit the hbase-site.xml configuration file

vim hbase-site.xml

Press i to enter insert mode

<property>

<name>hbase.rootdir</name>

<value>hdfs://192.168.232.139:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<!--<value>false</value>-->

<value>true</value>

</property>

Press ESC to leave insert mode, then type :wq to save and quit

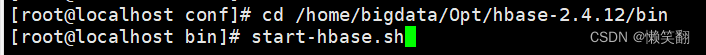
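The two property blocks only take effect when they sit inside the file's `<configuration>` element. To make the overall shape clear, here is a sketch that writes a minimal file containing just these two properties to a path of your choice. `write_hbase_site` is a hypothetical helper; the hbase-site.xml that ships with HBase may contain other properties, so write to a scratch path and compare rather than overwriting the real file.

```shell
# Write a minimal hbase-site.xml with the two properties from step 8.
# $1 is the output path, $2 is the hbase.rootdir value.
write_hbase_site() {
    target=$1
    rootdir=$2
    cat > "$target" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>$rootdir</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
</configuration>
EOF
}

# Usage for this walkthrough (scratch path, not the live config):
#   write_hbase_site /tmp/hbase-site.xml hdfs://192.168.232.139:9000/hbase
#   diff /tmp/hbase-site.xml /home/bigdata/Opt/hbase-2.4.12/conf/hbase-site.xml
```

The host and port in hbase.rootdir should match the NameNode address that Hadoop itself is configured with.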

9. Start HBase

cd /home/bigdata/Opt/hbase-2.4.12/bin

start-hbase.sh

[root@localhost conf]# cd /home/bigdata/Opt/hbase-2.4.12/bin

[root@localhost bin]# start-hbase.sh

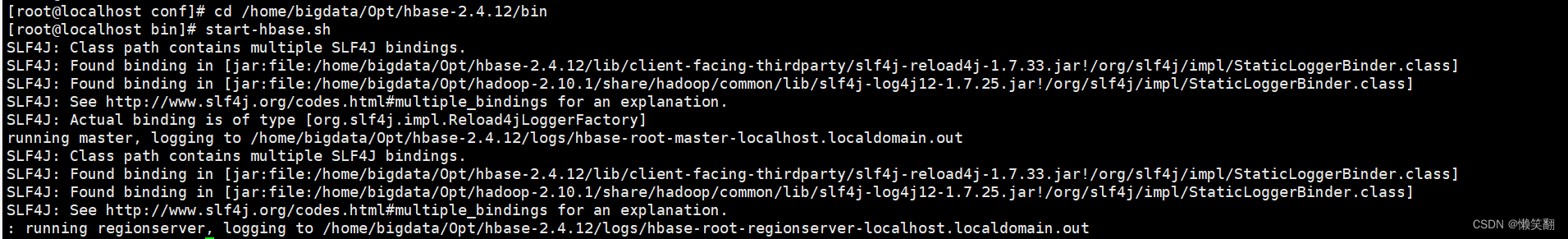

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/bigdata/Opt/hbase-2.4.12/lib/client-facing-thirdparty/slf4j-reload4j-1.7.33.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]

running master, logging to /home/bigdata/Opt/hbase-2.4.12/logs/hbase-root-master-localhost.localdomain.out

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/bigdata/Opt/hbase-2.4.12/lib/client-facing-thirdparty/slf4j-reload4j-1.7.33.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/bigdata/Opt/hadoop-2.10.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

: running regionserver, logging to /home/bigdata/Opt/hbase-2.4.12/logs/hbase-root-regionserver-localhost.localdomain.out

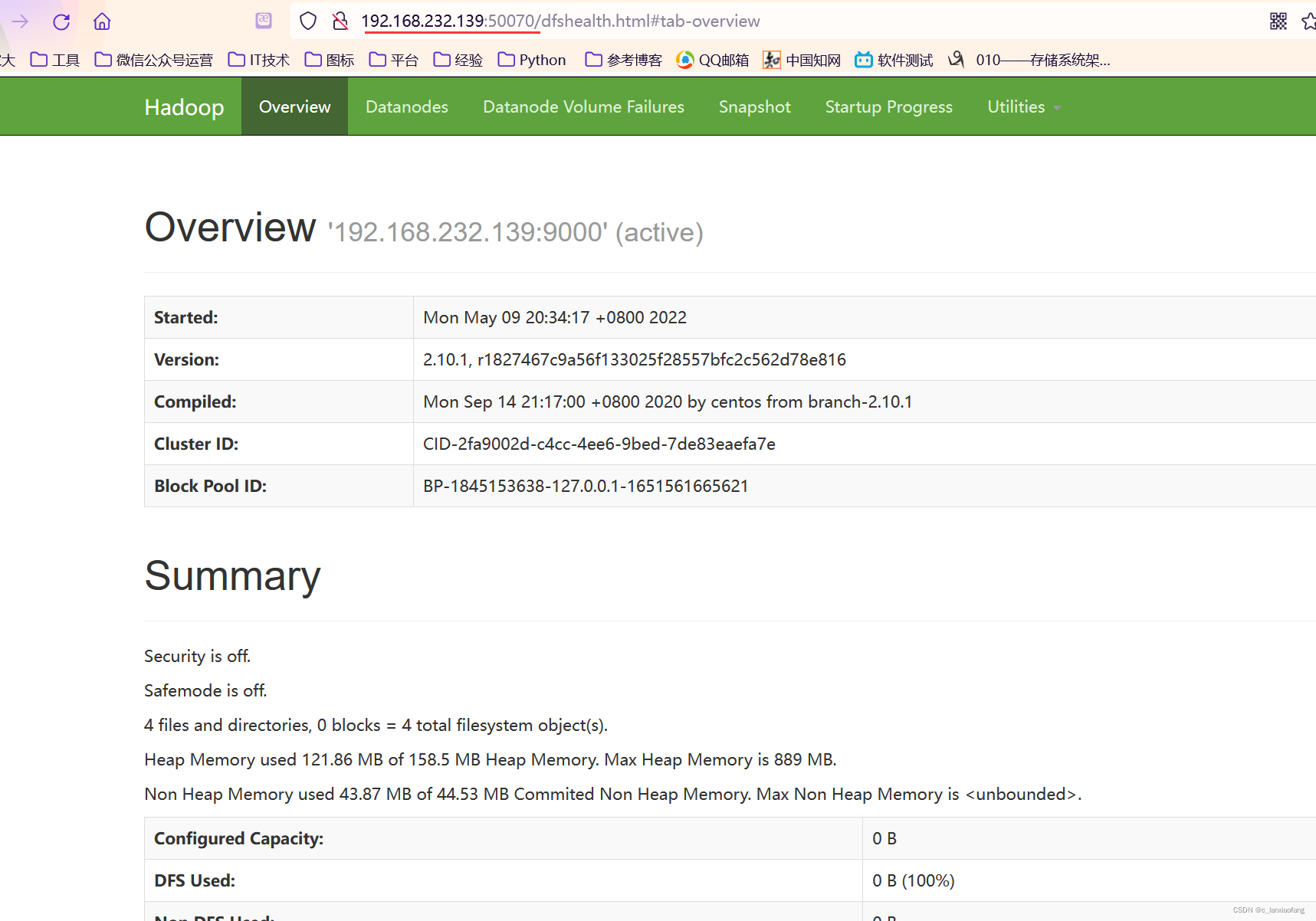

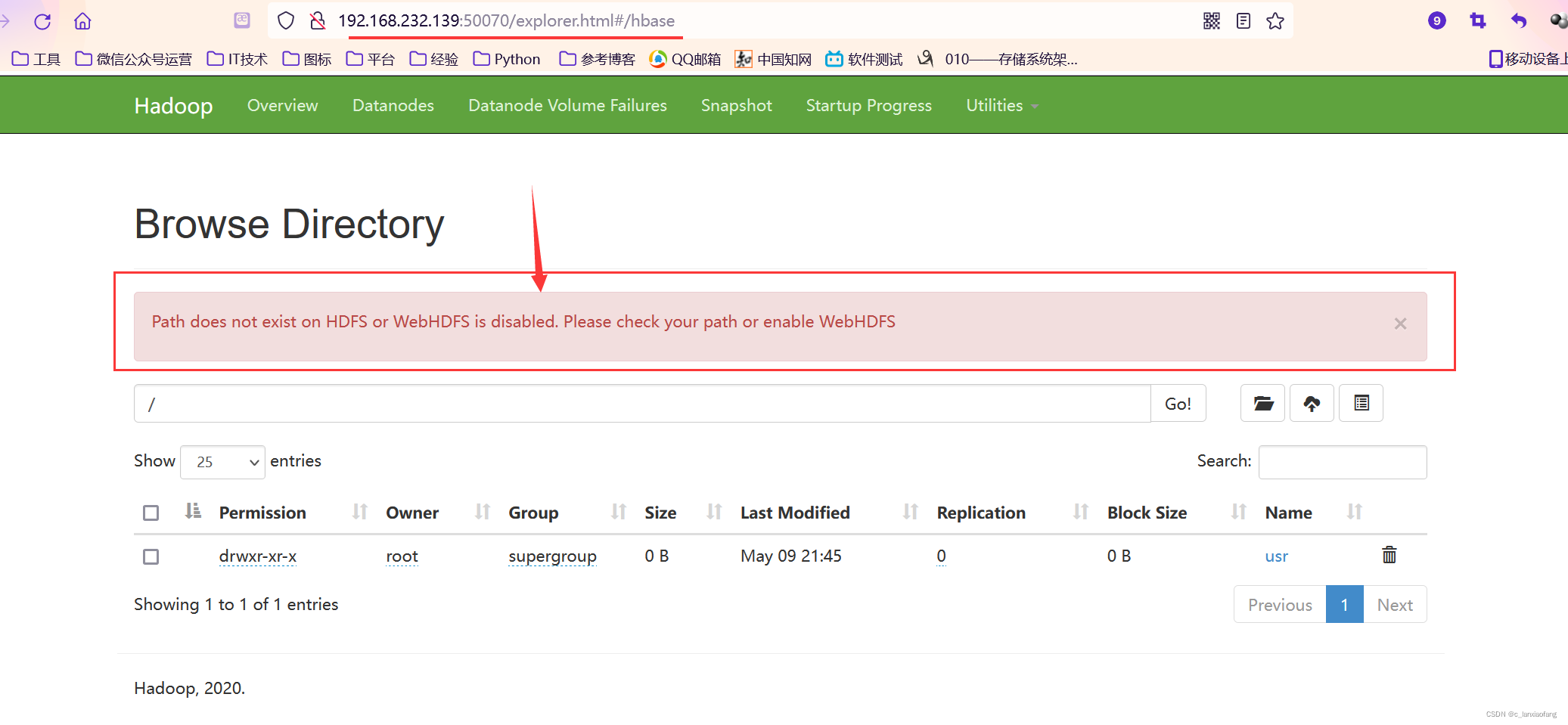

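10. Open http://192.168.232.139:50070 in a browser to view HBase's data directory (/hbase) in the HDFS web UI

To be honest, I could not open this URL at this point. After finishing the configuration, everything up to step 9 matched the book, including the output printed by the commands. But if you run jps now, you will find that no HBase processes are running. This puzzled me until section 6.5 (hands-on task: using common HBase shell commands), where I hit ERROR: KeeperErrorCode = ConnectionLoss for /hbase/master. Only then did I realize that HBase was not actually running, and work out a fix. See 6.5 for the details of my solution.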
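When start-hbase.sh prints its usual output but jps shows no HMaster or HRegionServer, the log files are the quickest place to look. The sketch below pulls the most recent error-looking lines out of a log. `recent_errors` is a hypothetical helper, and the `.log` file name in the usage line is an assumption based on the `.out` names printed in step 9.

```shell
# Print the last N lines (default 20) of a log file that mention
# ERROR, FATAL or Exception.
recent_errors() {
    logfile=$1
    count=${2:-20}
    grep -E 'ERROR|FATAL|Exception' "$logfile" | tail -n "$count"
}

# Usage for this walkthrough (file name is an assumption, check the logs directory):
#   ls /home/bigdata/Opt/hbase-2.4.12/logs
#   recent_errors /home/bigdata/Opt/hbase-2.4.12/logs/hbase-root-master-localhost.localdomain.log
```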

http://192.168.232.139:50070

http://192.168.232.139:50070/explorer.html#/

http://192.168.232.139:50070/explorer.html#/hbase

The following message is shown; I will add a link once I have solved it:

Path does not exist on HDFS or WebHDFS is disabled. Please check your path or enable WebHDFS

11. Stop HBase

stop-hbase.sh