1、Presto介绍

Presto不存储数据,是基于内存的分布式可跨数据源连表查询的计算引擎,查询速度是Hive的5-10倍。Presto是一个OLAP(online Analytic Processing)工具,擅长对海量(GB,TB,PB)数据进行复杂的分析;但是对于OLTP(On-Line Transaction Processing)场景,并不是Presto所擅长,所以不要把Presto当做数据库来使用。

presto:介绍

(二)presto实现多数据源混合查询

2、Presto安装使用

正常解压安装包

[atguigu@hadoop102 software]$ tar -zxvf presto-server-0.196.tar.gz -C /opt/module/

# 创建存储数据文件夹

[atguigu@hadoop102 presto-server-0.196]$ mkdir data

# 编写配置内存管理的文件夹

[atguigu@hadoop102 presto-server-0.196]$ mkdir etc

# 编写配置数据源的配置文件夹

[atguigu@hadoop102 etc]$ mkdir catalog

[atguigu@hadoop102 etc]$ cat jvm.config

-server

-Xmx16G

-XX:+UseG1GC

-XX:G1HeapRegionSize=32M

-XX:+UseGCOverheadLimit

-XX:+ExplicitGCInvokesConcurrent

-XX:+HeapDumpOnOutOfMemoryError

-XX:+ExitOnOutOfMemoryError

[atguigu@hadoop102 catalog]$ cat hive.properties

connector.name=hive-hadoop2

hive.metastore.uri=thrift://hadoop102:9083

分发配置好的解压包

[atguigu@hadoop102 module]$ xsync presto-server-0.196/

配置node属性

[atguigu@hadoop102 etc]$ cat node.properties

node.environment=production

node.id=ffffffff-ffff-ffff-ffff-ffffffffffff

node.data-dir=/opt/module/presto-server-0.196/data

[atguigu@hadoop103 etc]$ cat node.properties

node.environment=production

node.id=ffffffff-ffff-ffff-ffff-fffffffffffe

node.data-dir=/opt/module/presto-server-0.196/data

[atguigu@hadoop104 etc]$ cat node.properties

node.environment=production

node.id=ffffffff-ffff-ffff-ffff-fffffffffffd

node.data-dir=/opt/module/presto-server-0.196/data

hadoop102配置为coordinator,hadoop103、hadoop104配置为worker

[atguigu@hadoop102 etc]$ cat config.properties

coordinator=true

node-scheduler.include-coordinator=false

http-server.http.port=8881

query.max-memory=50GB

discovery-server.enabled=true

discovery.uri=http://hadoop102:8881

[atguigu@hadoop103 etc]$ cat config.properties

coordinator=false

http-server.http.port=8881

query.max-memory=50GB

discovery.uri=http://hadoop102:8881

[atguigu@hadoop104 etc]$ cat config.properties

coordinator=false

http-server.http.port=8881

query.max-memory=50GB

discovery.uri=http://hadoop102:8881

启动Hive Metastore

[atguigu@hadoop102 apache-hive-3.1.2-bin]$ pwd

/opt/module/apache-hive-3.1.2-bin

[atguigu@hadoop102 apache-hive-3.1.2-bin]$ nohup bin/hive --service metastore >/dev/null 2>&1 &

[1] 2309

[atguigu@hadoop102 ~]$ netstat -nltp | grep 9083

启动Presto Server

# 前台启动Presto,控制台显示日志

[atguigu@hadoop102 presto-server-0.196]$ bin/launcher run

[atguigu@hadoop103 presto-server-0.196]$ bin/launcher run

[atguigu@hadoop104 presto-server-0.196]$ bin/launcher run

# 后台启动Presto

[atguigu@hadoop102 presto-server-0.196]$ bin/launcher start

[atguigu@hadoop103 presto-server-0.196]$ bin/launcher start

[atguigu@hadoop104 presto-server-0.196]$ bin/launcher start

Presto命令行Client安装

[atguigu@hadoop102 presto-server-0.196]$ mv presto-cli-0.196-executable.jar prestocli

[atguigu@hadoop102 presto-server-0.196]$ chmod +x prestocli

# 启动命令行Client

[atguigu@hadoop102 presto-server-0.196]$ ./prestocli --server hadoop102:8881 --catalog hive --schema default

presto:default>

Presto可视化Client安装

[atguigu@hadoop102 module]$ unzip yanagishima-18.0.zip

[atguigu@hadoop102 conf]$ cat yanagishima.properties

# yanagishima web port

jetty.port=8080

# 30 minutes. If presto query exceeds this time, yanagishima cancel the query.

presto.query.max-run-time-seconds=1800

# 1GB. If presto query result file size exceeds this value, yanagishima cancel the query.

presto.max-result-file-byte-size=1073741824

# you can specify freely. But you need to specify same name to presto.coordinator.server.[...] and presto.redirect.server.[...] and catalog.[...] and schema.[...]

presto.datasources=your-presto

auth.your-presto=false

# presto coordinator url

presto.coordinator.server.your-presto=http://presto.coordinator:8080

# almost same as presto coordinator url. If you use reverse proxy, specify it

presto.redirect.server.your-presto=http://presto.coordinator:8080

# presto catalog name

catalog.your-presto=hive

# presto schema name

schema.your-presto=default

# if query result exceeds this limit, to show rest of result is skipped

select.limit=500

# http header name for audit log

audit.http.header.name=some.auth.header

use.audit.http.header.name=false

# limit to convert from tsv to values query

to.values.query.limit=500

# authorization feature

check.datasource=false

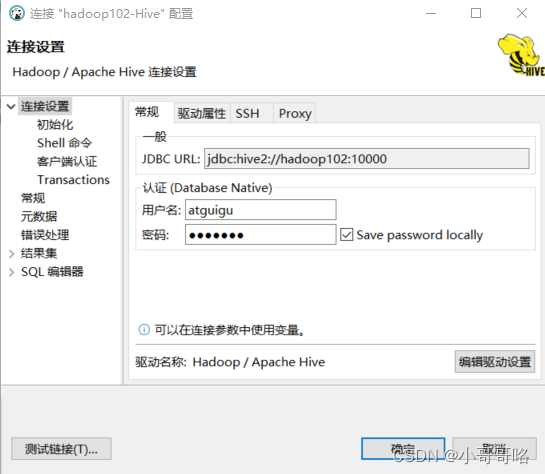

hive.jdbc.url.your-hive=jdbc:hive2://localhost:10000/default;auth=noSasl

hive.jdbc.user.your-hive=yanagishima

hive.jdbc.password.your-hive=yanagishima

hive.query.max-run-time-seconds=3600

hive.query.max-run-time-seconds.your-hive=3600

resource.manager.url.your-hive=http://localhost:8088

sql.query.engines=presto,hive

hive.datasources=your-hive

hive.disallowed.keywords.your-hive=insert,drop

# 1GB. If hive query result file size exceeds this value, yanagishima cancel the query.

hive.max-result-file-byte-size=1073741824

hive.setup.query.path.your-hive=/usr/local/yanagishima/conf/hive_setup_query_your-hive

cors.enabled=false

jetty.port=7080

presto.datasources=atguigu-presto

presto.coordinator.server.atguigu-presto=http://hadoop102:8881

catalog.atguigu-presto=hive

schema.atguigu-presto=default

上面没有直接配置hive的连接信息,是间接配置了hive

#单独配置hive信息

hive.datasources=prestocluster

hive.jdbc.url.prestocluster=jdbc:hive2://hive-server:10000/default

hive.jdbc.user.prestocluster=hdfs

hive.jdbc.password.prestocluster=hdfs

hive.query.max-run-time-seconds.prestocluster=3600

hive.max-result-file-byte-size=1073741824

hive.query.max-run-time-seconds=3600

启动web页面:http://hadoop102:7080

[atguigu@hadoop102 yanagishima-18.0]$ pwd

/opt/module/yanagishima-18.0

[atguigu@hadoop102 yanagishima-18.0]$ nohup bin/yanagishima-start.sh >y.log 2>&1 &

PS:报错:presto Unable to create input format org.apache.hadoop.mapred.TextInputFormat

报错解决方案:在hadoop102、hadoop103、hadoop104执行如下操作

[atguigu@hadoop102 hadoop]$ cd ./common/hadoop-lzo-0.4.20.jar /opt/module/presto-server-0.196/data/plugin/hive-hadoop2

[atguigu@hadoop102 hadoop]$ cd /opt/module/presto-server-0.196/data/plugin/hive-hadoop2/

[atguigu@hadoop102 hive-hadoop2]$ ll|grep 'hadoop'

-rw-r--r--. 1 atguigu atguigu 25873138 3月 2 2018 hadoop-apache2-2.7.4-1.jar

-rw-r--r--. 1 atguigu atguigu 193831 5月 19 23:39 hadoop-lzo-0.4.20.jar

-rw-r--r--. 1 atguigu atguigu 3488 3月 2 2018 presto-hive-hadoop2-0.196.jar

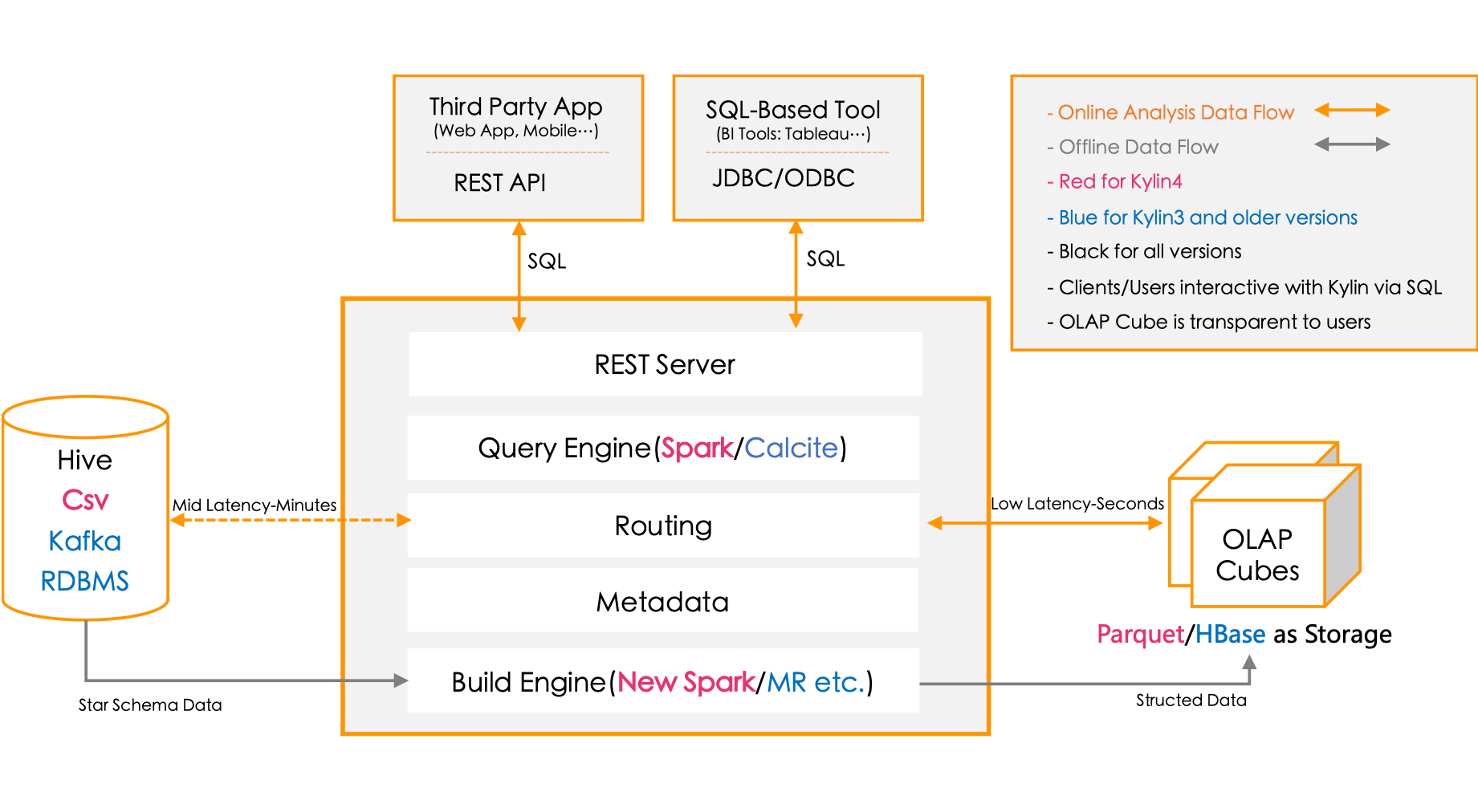

3、Apache Kylin

Apache Kylin是一个开源的分布式分析引擎,提供Hadoop/Spark之上的SQL查询接口及多维分析(OLAP)能力以支持超大规模数据,最初由eBay Inc开发并贡献至开源社区。它能在亚秒内查询巨大的Hive表。Kylin可以与现有的BI工具集成,提供ODBC、JDBC、RestAPI等交互接口。

什么是MPP数据库?

presto、druid、sparkSQL、kylin的对比分析,如性能、架构等,有什么异同?

4、Kylin安装使用

安装Kylin前需先部署好Hadoop、Hive、Zookeeper、HBase,并且需要在/etc/profile中配置以下环境变量HADOOP_HOME,HIVE_HOME,HBASE_HOME。

[atguigu@hadoop102 software]$ tar -zxvf apache-kylin-3.0.2-bin.tar.gz -C /opt/module/

[atguigu@hadoop102 bin]$ cat find-spark-dependency.sh

#!/bin/bash

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

source $(cd -P -- "$(dirname -- "$0")" && pwd -P)/header.sh

echo Retrieving Spark dependency...

spark_home=

if [ -n "$SPARK_HOME" ]

then

verbose "SPARK_HOME is set to: $SPARK_HOME, use it to locate Spark dependencies."

spark_home=$SPARK_HOME

fi

if [ -z "$SPARK_HOME" ]

then

verbose "SPARK_HOME wasn't set, use $KYLIN_HOME/spark"

spark_home=$KYLIN_HOME/spark

fi

if [ ! -d "$spark_home/jars" ]

then

quit "spark not found, set SPARK_HOME, or run bin/download-spark.sh"

fi

# 需要增加的内容:! -name '*jackson*' ! -name '*metastore*',解决 Kylin兼容性问题

spark_dependency=`find -L $spark_home/jars -name '*.jar' ! -name '*slf4j*' ! -name '*jackson*' ! -name '*metastore*' ! -name '*calcite*' ! -name '*doc*' ! -name '*test*' ! -name '*sources*' ''-printf '%p:' | sed 's/:$//'`

if [ -z "$spark_dependency" ]

then

quit "spark jars not found"

else

verbose "spark dependency: $spark_dependency"

export spark_dependency

fi

echo "export spark_dependency=$spark_dependency" > ${dir}/cached-spark-dependency.sh

启动Kylin依赖的框架

[atguigu@hadoop102 bin]$ myclusters start

[atguigu@hadoop102 bin]$ myzookeeper start

# hbase群起

[atguigu@hadoop102 hbase-2.0.5]$ bin/start-hbase.sh

[atguigu@hadoop102 hbase-2.0.5]$ jpsall

=============== hadoop102 ===============

3057 QuorumPeerMain

3561 HRegionServer

3371 HMaster

2699 NodeManager

2189 NameNode

2846 JobHistoryServer

2334 DataNode

=============== hadoop103 ===============

2259 NodeManager

2135 ResourceManager

1976 DataNode

2938 HRegionServer

2764 QuorumPeerMain

=============== hadoop104 ===============

1974 DataNode

2150 NodeManager

2057 SecondaryNameNode

2586 HRegionServer

2414 QuorumPeerMain

启动Kylin

[atguigu@hadoop102 apache-kylin-3.0.2-bin]$ bin/kylin.sh start

访问Web页面:http://hadoop102:7070/kylin

用户名为:ADMIN,密码为:KYLIN

Kylin不能处理Hive表中的复杂数据类型(Array,Map,Struct),即便复杂类型的字段并未参与到计算之中。故在加载Hive数据源时,不能直接加载带有复杂数据类型字段的表。而在dim_sku_info表中存在两个复杂数据类型的字段(平台属性和销售属性),故dim_sku_info不能直接加载,需对其进行以下处理。在kylin中重新导入dim_sku_info_view视图。

hive (gmall)> create view dim_sku_info_view

> as

> select

> id,

> price,

> sku_name,

> sku_desc,

> weight,

> is_sale,

> spu_id,

> spu_name,

> category3_id,

> category3_name,

> category2_id,

> category2_name,

> category1_id,

> category1_name,

> tm_id,

> tm_name,

> create_time

> from dim_sku_info;

事实表与维度表(维度表是拉链)关联后发散,需要建立视图取出有效数据

hive (gmall)> create view dim_user_info_view as select * from dim_user_info where dt='9999-99-99';

OK

id login_name nick_name name phone_num email user_level birthday gender create_time operate_time start_date end_date dt

Time taken: 2.132 seconds

hive (gmall)> alter view dim_sku_info_view

> as

> select

> id,

> price,

> sku_name,

> sku_desc,

> weight,

> is_sale,

> spu_id,

> spu_name,

> category3_id,

> category3_name,

> category2_id,

> category2_name,

> category1_id,

> category1_name,

> tm_id,

> tm_name,

> create_time

> from dim_sku_info

> where dt='2020-06-15';

OK

id price sku_name sku_desc weight is_sale spu_id spu_name category3_id category3_name category2_id category2_name category1_id category1_name tm_id tm_name create_time

Time taken: 0.193 seconds

HIVE on TEZ模式时,若DBeaver通过Spark Thrift Server(或HiveServer2)连接HIVE时,Kylin将不能正常调用HIVE,原因如下:014 大数据之HIVE实战

① HIVE on TEZ时,任务运行完成并不会释放TEZ获取的资源,若不手动释放(yarn application -kill 任务名),将持续占用;

② 同一个队列有多个TEZ SESSION时(yarn application -list),后来者挂起,直至前者释放资源,后者才可以获取资源;

③ 多个TEZ SESSION各自占用不同的队列时,多个TEZ SESSION可以并行执行, [set tez.queue.name=default];