I. Preparing the HDFS Client Environment

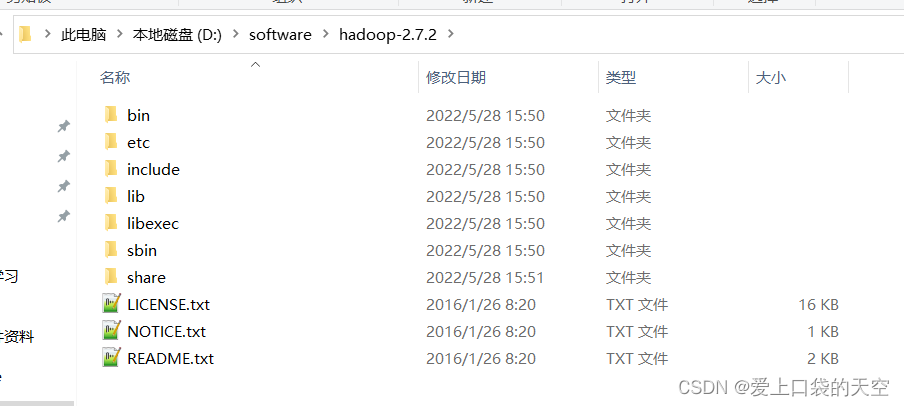

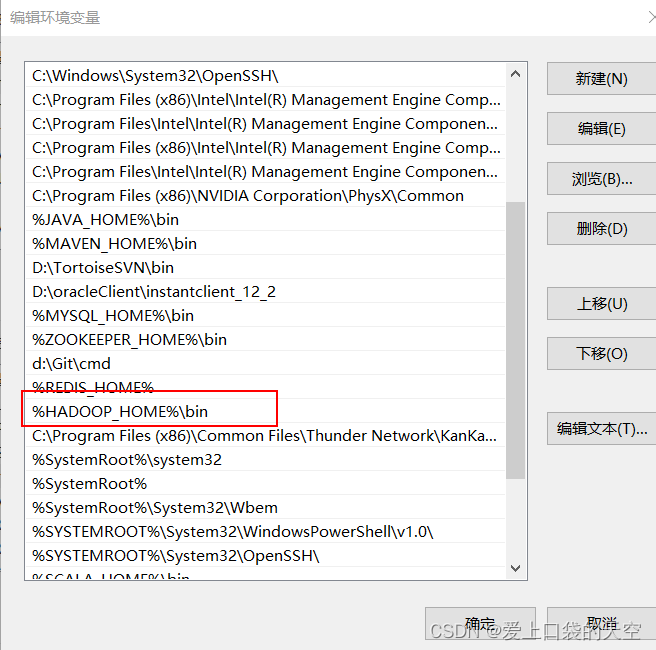

1. Configure the Hadoop environment variables


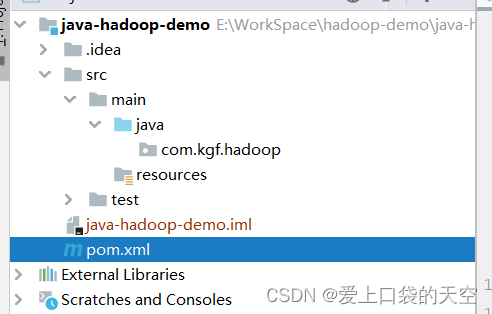

2. Create a Maven project

3. Add the required dependency coordinates and the logging setup

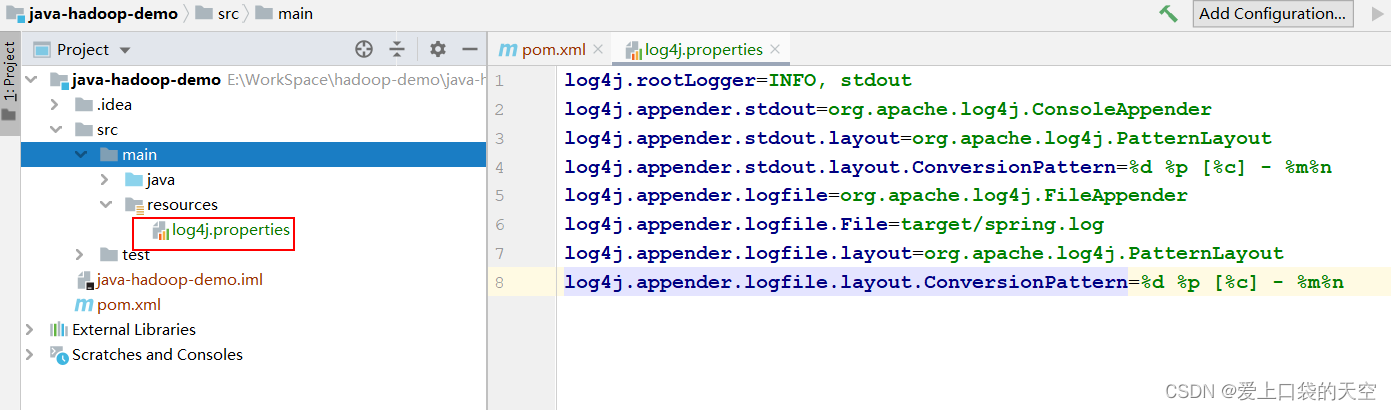

```xml
<dependencies>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <!-- pin a concrete version; the RELEASE meta-version is deprecated in Maven 3 -->
        <version>4.13.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-core</artifactId>
        <version>2.8.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.7.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>2.7.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.7.2</version>
    </dependency>
    <dependency>
        <groupId>jdk.tools</groupId>
        <artifactId>jdk.tools</artifactId>
        <version>1.8</version>
        <scope>system</scope>
        <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
    </dependency>
</dependencies>
```

4. Create the log4j.properties file (in src/main/resources, so that it is on the classpath)

```properties
log4j.rootLogger=INFO, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n
log4j.appender.logfile=org.apache.log4j.FileAppender
log4j.appender.logfile.File=target/spring.log
log4j.appender.logfile.layout=org.apache.log4j.PatternLayout
log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n
```

5. Create the HdfsClient class

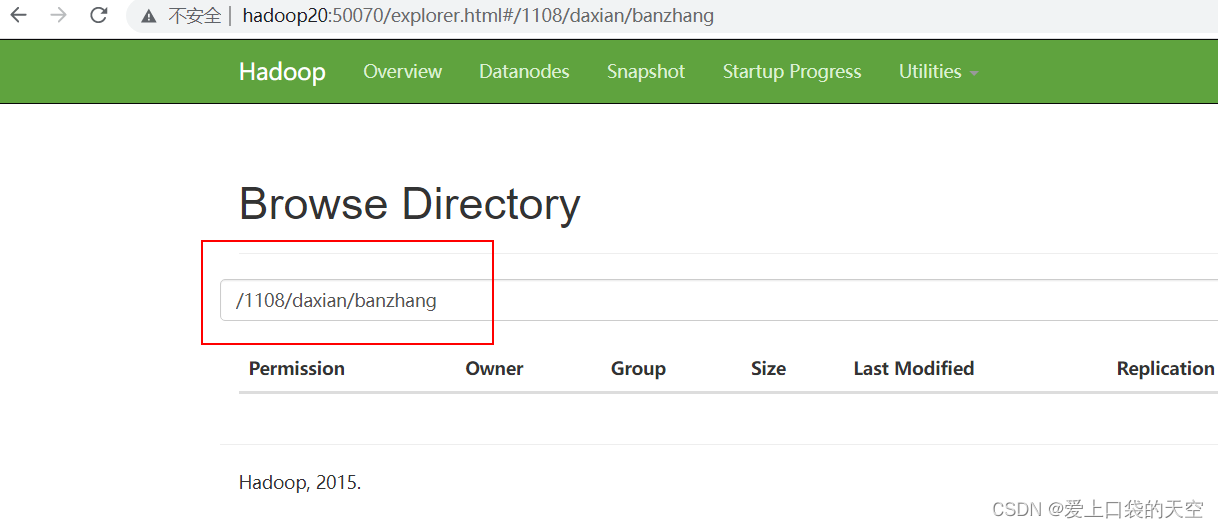

```java
package com.kgf.hadoop;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

public class HdfsClient {

    public static void main(String[] args) throws URISyntaxException, IOException, InterruptedException {
        // 1. Get the file system: NameNode URI, configuration, and the user to act as
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

        // 2. Create the directory (missing parent directories are created too)
        fs.mkdirs(new Path("/1108/daxian/banzhang"));

        // 3. Close resources
        fs.close();
    }
}
```

Result after running:

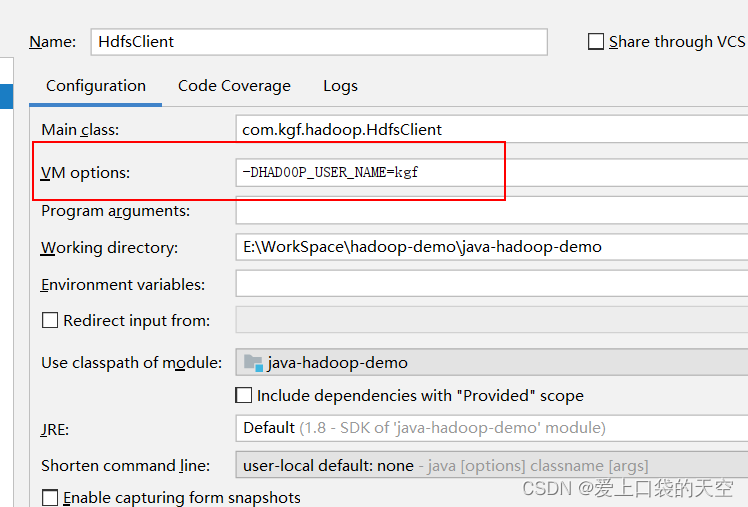

6. Alternatively, specify the user name at run time

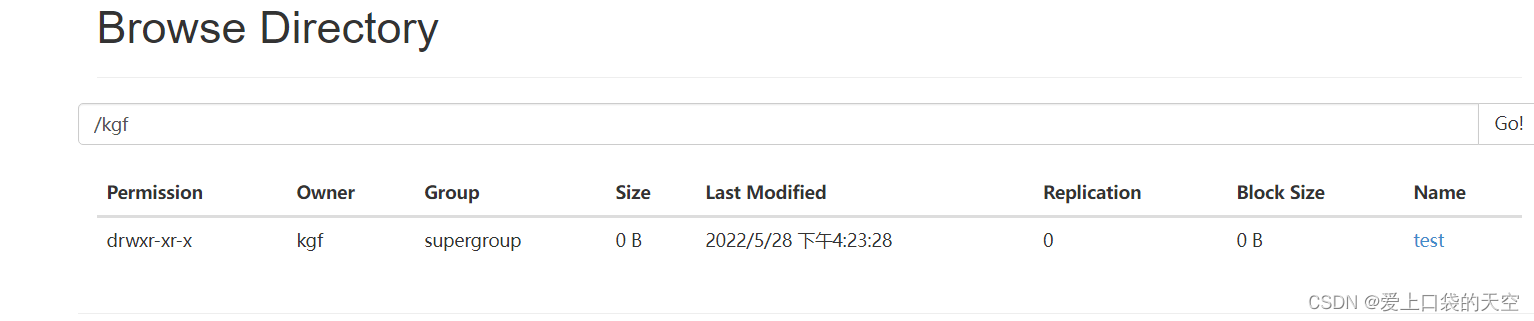

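```java
package com.kgf.hadoop;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

public class HdfsClient {

    public static void main(String[] args) throws URISyntaxException, IOException, InterruptedException {
        // 1. Get the file system
        Configuration configuration = new Configuration();
        // FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");
        // Point the client at the cluster; no user is passed, so it comes from HADOOP_USER_NAME
        configuration.set("fs.defaultFS", "hdfs://hadoop20:9000");
        FileSystem fs = FileSystem.get(configuration);

        // 2. Create the directory
        fs.mkdirs(new Path("/kgf/test"));

        // 3. Close resources
        fs.close();
        System.out.println("over!");
    }
}
```

A client always operates on HDFS under some user identity. In this variant no user is passed to `FileSystem.get`, so (with security disabled) the client reads the `HADOOP_USER_NAME` environment variable, or, if that is not set, the JVM system property passed as `-DHADOOP_USER_NAME=kgf` (for example in the VM options of the IDE run configuration), where kgf is the user name. If neither is set, it falls back to the local operating-system user.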
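The lookup order can be modeled in a few lines of plain Java. This is a simplified sketch assuming Hadoop 2.x with security disabled; in Hadoop itself the logic lives in the login module of `UserGroupInformation`, while `UserResolver` and `resolve` here are hypothetical names, not Hadoop API.

```java
import java.util.Map;
import java.util.Properties;

/** Hypothetical model (not Hadoop code) of how a non-secure client picks its user name. */
class UserResolver {
    static final String KEY = "HADOOP_USER_NAME";

    static String resolve(Map<String, String> env, Properties sysProps, String osUser) {
        String user = env.get(KEY);            // 1. environment variable wins
        if (user == null) {
            user = sysProps.getProperty(KEY);  // 2. then the -DHADOOP_USER_NAME=... JVM option
        }
        return user != null ? user : osUser;   // 3. otherwise the local OS login user
    }

    public static void main(String[] args) {
        System.out.println(resolve(System.getenv(), System.getProperties(),
                System.getProperty("user.name")));
    }
}
```

Running the class with `-DHADOOP_USER_NAME=kgf` and no such environment variable prints `kgf`.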

Run result:

II. HDFS API Operations

1. Uploading a file to HDFS (testing parameter precedence)

```java
/**
 * Upload a file to HDFS
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
private void testCopyFromLocalFile() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    configuration.set("dfs.replication", "2"); // set the replication factor in client code
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. Upload the file
    fs.copyFromLocalFile(new Path("e:/banzhang.txt"), new Path("/banzhang.txt"));

    // 3. Close resources
    fs.close();
    System.out.println("over");
}
```

Copy hdfs-site.xml into the project's resources directory (src/main/resources in a Maven project) so that it ends up on the classpath:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>
```

The result:

The replication factor is 2, so the value set in code overrides the value 1 from hdfs-site.xml.

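Parameter precedence, from highest to lowest:

(1) values set in client code >

(2) user-defined configuration files on the classpath (such as hdfs-site.xml) >

(3) finally, the default configuration (hdfs-default.xml, in which dfs.replication defaults to 3)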
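Hadoop's `Configuration` gets this effect by loading resources in order (defaults first, then the *-site.xml files) and letting later values and explicit `set()` calls overwrite earlier ones. The equivalent "first layer that defines the key wins" lookup is sketched below in plain Java; `LayeredConfig` is a hypothetical class for illustration, not part of Hadoop.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Optional;

/** Hypothetical model of parameter precedence: layers are checked from highest to lowest priority. */
class LayeredConfig {
    private final List<Map<String, String>> layers; // index 0 = highest priority

    LayeredConfig(List<Map<String, String>> layers) {
        this.layers = layers;
    }

    /** Returns the value from the first (highest-priority) layer that defines the key. */
    Optional<String> get(String key) {
        for (Map<String, String> layer : layers) {
            String value = layer.get(key);
            if (value != null) {
                return Optional.of(value);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Map<String, String> clientCode = Collections.singletonMap("dfs.replication", "2");    // configuration.set(...)
        Map<String, String> classpathSite = Collections.singletonMap("dfs.replication", "1"); // hdfs-site.xml
        Map<String, String> defaults = Collections.singletonMap("dfs.replication", "3");      // hdfs-default.xml
        LayeredConfig conf = new LayeredConfig(Arrays.asList(clientCode, classpathSite, defaults));
        System.out.println(conf.get("dfs.replication").orElse("unset")); // prints 2
    }
}
```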

2. Downloading a file from HDFS

```java
/**
 * Download a file from HDFS
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
public void testCopyToLocalFile() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. Download
    // boolean delSrc: whether to delete the source file on HDFS
    // Path src: the HDFS file to download
    // Path dst: the local destination path
    // boolean useRawLocalFileSystem: true writes through RawLocalFileSystem,
    //   so no local .crc checksum file is created next to the download
    fs.copyToLocalFile(false, new Path("/banzhang.txt"), new Path("e:/banhua.txt"), true);

    // 3. Close resources
    fs.close();
}
```

3. Deleting an HDFS directory

```java
/**
 * Delete an HDFS directory
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
public void testDelete() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. Delete; true = recursive, which is required for a non-empty directory
    fs.delete(new Path("/1108/"), true);

    // 3. Close resources
    fs.close();
}
```

4. Renaming an HDFS file

```java
/**
 * Rename an HDFS file
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
public void testRename() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. Rename the file
    fs.rename(new Path("/banzhang.txt"), new Path("/banhua.txt"));

    // 3. Close resources
    fs.close();
    System.out.println("over!");
}
```

5. Viewing HDFS file details

```java
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.RemoteIterator;

/**
 * Show file name, permission, length, and block information
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
public void testListFiles() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. Get file details; true = recurse into subdirectories (only files are returned)
    RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);
    while (listFiles.hasNext()) {
        LocatedFileStatus status = listFiles.next();
        // File name
        System.out.println(status.getPath().getName());
        // Length in bytes
        System.out.println(status.getLen());
        // Permission
        System.out.println(status.getPermission());
        // Group
        System.out.println(status.getGroup());
        // Block information
        BlockLocation[] blockLocations = status.getBlockLocations();
        for (BlockLocation blockLocation : blockLocations) {
            // Hosts (DataNodes) that store this block
            String[] hosts = blockLocation.getHosts();
            for (String host : hosts) {
                System.out.println(host);
            }
        }
        System.out.println("-----------班长的分割线----------");
    }

    // 3. Close resources
    fs.close();
}
```

Output (all files under the directory are listed recursively):

```
README.txt
1366
rw-r--r--
supergroup
hadoop20
hadoop22
hadoop21
-----------班长的分割线----------
banhua.txt
15
rw-r--r--
supergroup
hadoop22
hadoop21
-----------班长的分割线----------
kongming.txt
14
rw-rw-rw-
kgf
hadoop20
hadoop22
hadoop21
-----------班长的分割线----------
zhuge.txt
14
rw-r--r--
supergroup
hadoop20
hadoop22
hadoop21
-----------班长的分割线----------
wc.input
46
rw-r--r--
supergroup
hadoop20
hadoop22
hadoop21
-----------班长的分割线----------
zaiyiqi.txt
28
rw-r--r--
supergroup
hadoop20
hadoop22
hadoop21
-----------班长的分割线----------

Process finished with exit code 0
```

6. Distinguishing files from directories in HDFS

```java
import org.apache.hadoop.fs.FileStatus;

/**
 * Distinguish files from directories in HDFS
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
public void testListStatus() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. List the direct children of "/" (not recursive) and check each entry's type
    FileStatus[] listStatus = fs.listStatus(new Path("/"));
    for (FileStatus fileStatus : listStatus) {
        if (fileStatus.isFile()) {
            // A file
            System.out.println("f:" + fileStatus.getPath().getName());
        } else {
            // A directory
            System.out.println("d:" + fileStatus.getPath().getName());
        }
    }

    // 3. Close resources
    fs.close();
    System.out.println("over");
}
```

Output:

```
f:README.txt
f:banhua.txt
d:kgf
d:sanguo
d:user
over
```
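The difference between the two listing calls, a recursive walk that yields only files versus a single-level listing of files and directories, can be reproduced on the local file system. The sketch below is an analogy using `java.nio.file`, not the HDFS API; `ListingDemo` and its methods are hypothetical names.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Local-file-system analogy (java.nio.file, not the HDFS API) of the two listing styles. */
class ListingDemo {

    /** Like fs.listFiles(path, true): regular files anywhere under root, directories skipped. */
    static List<String> listFilesRecursive(Path root) throws IOException {
        try (Stream<Path> paths = Files.walk(root)) {
            return paths.filter(Files::isRegularFile)
                    .map(p -> p.getFileName().toString())
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    /** Like fs.listStatus(path): direct children only, prefixed "f:" for files, "d:" for directories. */
    static List<String> listStatus(Path dir) throws IOException {
        try (Stream<Path> paths = Files.list(dir)) {
            return paths.map(p -> (Files.isDirectory(p) ? "d:" : "f:") + p.getFileName())
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        // Build a tiny throwaway tree: README.txt and sanguo/zhuge.txt
        Path root = Files.createTempDirectory("listing-demo");
        Files.createDirectories(root.resolve("sanguo"));
        Files.write(root.resolve("README.txt"), new byte[] {1});
        Files.write(root.resolve("sanguo").resolve("zhuge.txt"), new byte[] {2});
        System.out.println(listFilesRecursive(root)); // [README.txt, zhuge.txt]
        System.out.println(listStatus(root));         // [d:sanguo, f:README.txt]
    }
}
```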

III. HDFS I/O Stream Operations

1. Upload banhua.txt from the local E: drive to the HDFS root directory (as /banhua2.txt)

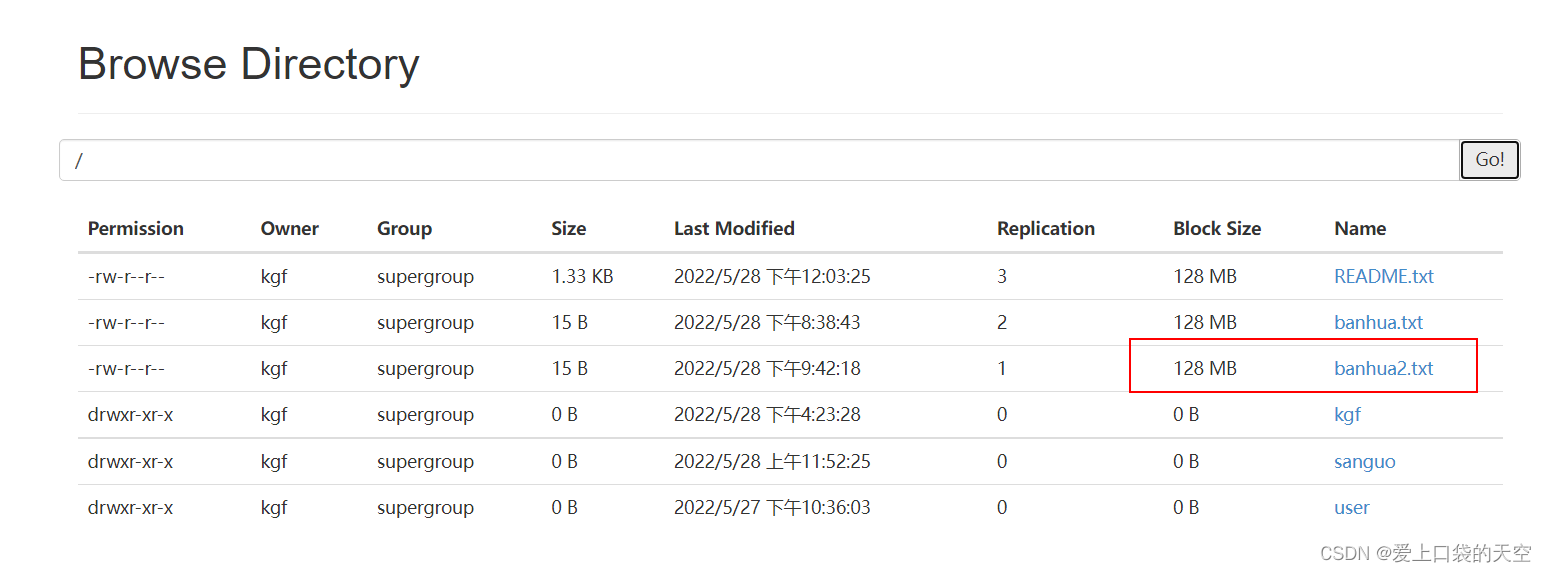

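```java
import java.io.File;
import java.io.FileInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.io.IOUtils;

/**
 * Upload banhua.txt from the local E: drive to the HDFS root directory
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
public void putFileToHDFS() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. Create the input stream (local file)
    FileInputStream fis = new FileInputStream(new File("e:/banhua.txt"));

    // 3. Get the output stream (new HDFS file)
    FSDataOutputStream fos = fs.create(new Path("/banhua2.txt"));

    // 4. Copy between the streams; this overload uses io.file.buffer.size
    //    (default 4096) as the buffer size and closes both streams when done
    IOUtils.copyBytes(fis, fos, configuration);

    // 5. Close resources (closeStream ignores errors, so a second close is harmless)
    IOUtils.closeStream(fos);
    IOUtils.closeStream(fis);
    fs.close();
    System.out.println("over!");
}
```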

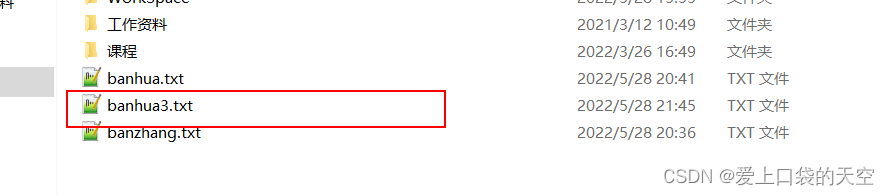
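The stream copy itself is a plain buffered loop. The JDK-only sketch below shows what such a copy does; `StreamCopy.copyAndClose` is a hypothetical helper, not Hadoop's `IOUtils`, and the 4096-byte buffer in the example call simply mirrors the `io.file.buffer.size` default.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

/** JDK-only sketch of a buffered stream copy (hypothetical helper, not Hadoop's IOUtils). */
class StreamCopy {
    /** Copies every byte from in to out, returns the byte count, and closes both streams. */
    static long copyAndClose(InputStream in, OutputStream out, int bufferSize) throws IOException {
        try (InputStream src = in; OutputStream dst = out) {
            byte[] buf = new byte[bufferSize];
            long total = 0;
            int n;
            while ((n = src.read(buf)) != -1) { // -1 signals end of stream
                dst.write(buf, 0, n);           // write only the bytes actually read
                total += n;
            }
            return total;
        }
    }
}
```

For example, `StreamCopy.copyAndClose(new FileInputStream("e:/banhua.txt"), someOutputStream, 4096)` copies the whole file and closes both ends, which is why a later close call has nothing left to do.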

2. Download banhua.txt from HDFS to the local E: drive (saved as banhua3.txt)

```java
import java.io.File;
import java.io.FileOutputStream;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.io.IOUtils;

/**
 * Download banhua.txt from HDFS to the local E: drive
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
public void getFileFromHDFS() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. Get the input stream (HDFS file)
    FSDataInputStream fis = fs.open(new Path("/banhua.txt"));

    // 3. Get the output stream (local file)
    FileOutputStream fos = new FileOutputStream(new File("e:/banhua3.txt"));

    // 4. Copy between the streams (copyBytes also closes both streams)
    IOUtils.copyBytes(fis, fos, configuration);

    // 5. Close resources
    IOUtils.closeStream(fos);
    IOUtils.closeStream(fis);
    fs.close();
    System.out.println("over");
}
```

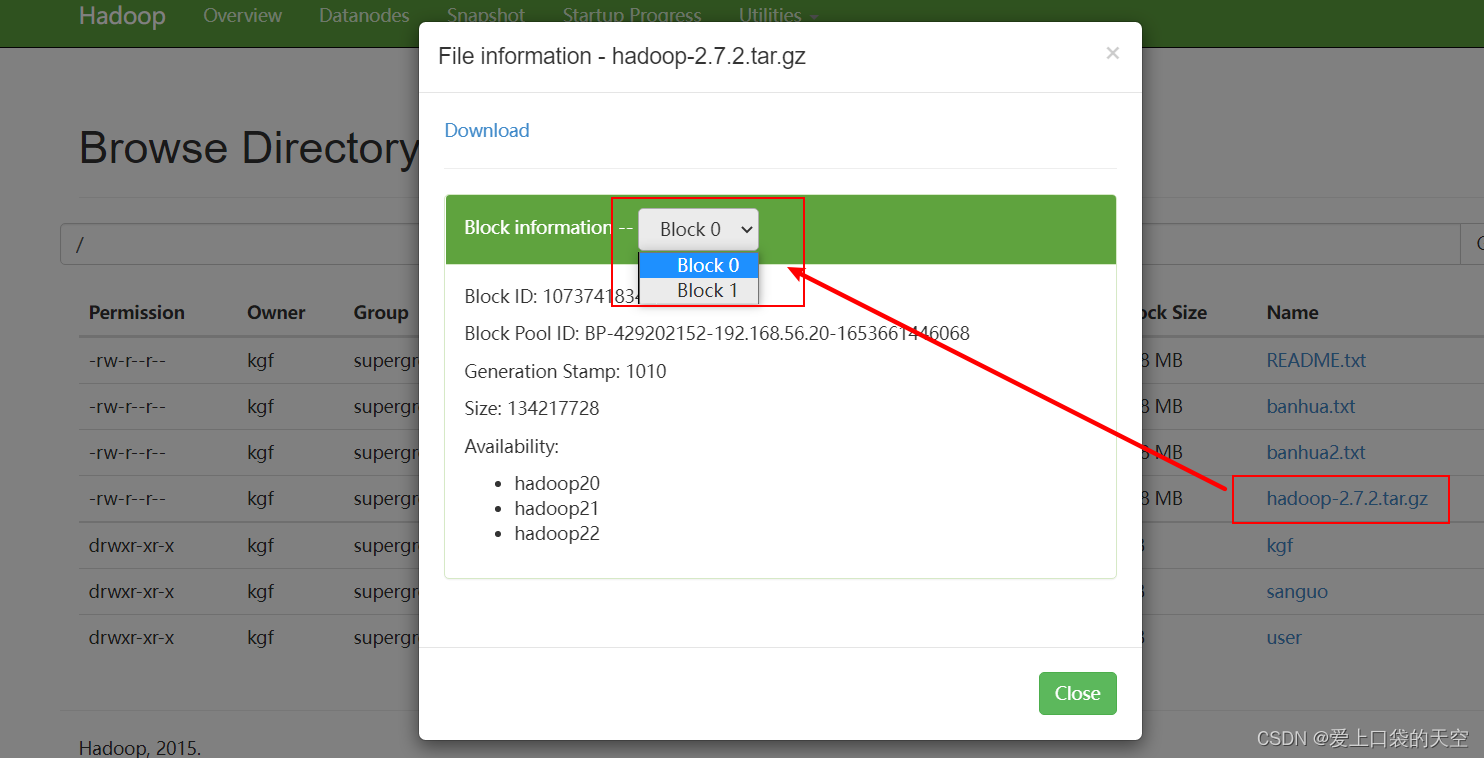

3. Read a large HDFS file block by block, e.g. /hadoop-2.7.2.tar.gz in the root directory

Download the first block, block0 (1024 × 1024 × 128 = 134217728 bytes = 128 MB):

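```java
/**
 * Download the first block
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
public void readFileSeek1() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. Get the input stream
    FSDataInputStream fis = fs.open(new Path("/hadoop-2.7.2.tar.gz"));

    // 3. Create the output stream
    FileOutputStream fos = new FileOutputStream(new File("e:/hadoop-2.7.2.tar.gz.part1"));

    // 4. Copy exactly the first block: 1024 * 1024 * 128 = 134217728 bytes.
    //    read() may return fewer bytes than the buffer holds, so write only what was read.
    byte[] buf = new byte[1024];
    long remaining = 1024L * 1024 * 128;
    while (remaining > 0) {
        int len = fis.read(buf, 0, (int) Math.min(buf.length, remaining));
        if (len == -1) {
            break; // the file is shorter than one block
        }
        fos.write(buf, 0, len);
        remaining -= len;
    }

    // 5. Close resources
    IOUtils.closeStream(fis);
    IOUtils.closeStream(fos);
    fs.close();
}
```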
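`InputStream.read(byte[], int, int)` only guarantees at least one byte per call (or -1 at end of stream), so a loop that copies a fixed number of bytes, such as the first-block download, must write only the bytes each call actually returned. The JDK-only sketch below demonstrates this with a stream that deliberately returns short reads; `BoundedCopy`, `copyAtMost`, and the `trickling` test helper are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

class BoundedCopy {
    /** Copies at most `limit` bytes; returns fewer only if the stream ends first. */
    static long copyAtMost(InputStream in, OutputStream out, long limit) throws IOException {
        byte[] buf = new byte[1024];
        long copied = 0;
        while (copied < limit) {
            int want = (int) Math.min(buf.length, limit - copied);
            int n = in.read(buf, 0, want); // may return fewer than `want` bytes
            if (n == -1) {
                break;                     // end of stream before the limit
            }
            out.write(buf, 0, n);          // never write stale buffer contents
            copied += n;
        }
        return copied;
    }

    /** Test helper: a stream that returns at most `chunk` bytes per read, as network streams may. */
    static InputStream trickling(byte[] data, int chunk) {
        return new ByteArrayInputStream(data) {
            @Override
            public synchronized int read(byte[] b, int off, int len) {
                return super.read(b, off, Math.min(len, chunk));
            }
        };
    }
}
```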

Download the rest of the file, block1:

```java
/**
 * Download the second block
 * @throws IOException
 * @throws InterruptedException
 * @throws URISyntaxException
 */
public void readFileSeek2() throws IOException, InterruptedException, URISyntaxException {
    // 1. Get the file system
    Configuration configuration = new Configuration();
    FileSystem fs = FileSystem.get(new URI("hdfs://hadoop20:9000"), configuration, "kgf");

    // 2. Open the input stream
    FSDataInputStream fis = fs.open(new Path("/hadoop-2.7.2.tar.gz"));

    // 3. Seek to the start of the second block (offset 134217728)
    fis.seek(1024L * 1024 * 128);

    // 4. Create the output stream
    FileOutputStream fos = new FileOutputStream(new File("e:/hadoop-2.7.2.tar.gz.part2"));

    // 5. Copy everything from the offset to the end of the file
    IOUtils.copyBytes(fis, fos, configuration);

    // 6. Close resources, including the file system
    IOUtils.closeStream(fis);
    IOUtils.closeStream(fos);
    fs.close();
}
```
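The two offsets generalize to any file: block i starts at i × 128 MB, and the last block holds whatever is left. A small sketch of that arithmetic, assuming the Hadoop 2.x default block size of 134217728 bytes; `BlockRanges` is a hypothetical helper, not a Hadoop class.

```java
/** Hypothetical helper: byte ranges of each block for a file of a given length. */
class BlockRanges {
    static final long BLOCK_SIZE = 128L * 1024 * 1024; // 134217728 bytes (Hadoop 2.x default)

    /** Number of blocks, using ceiling division. */
    static int blockCount(long fileLength) {
        return (int) ((fileLength + BLOCK_SIZE - 1) / BLOCK_SIZE);
    }

    /** Offset to seek() to before reading block i. */
    static long blockOffset(int i) {
        return i * BLOCK_SIZE;
    }

    /** Number of bytes in block i; the last block is usually shorter. */
    static long blockLength(long fileLength, int i) {
        return Math.min(BLOCK_SIZE, fileLength - blockOffset(i));
    }

    public static void main(String[] args) {
        long fileLength = 200L * 1024 * 1024; // an example 200 MB file
        for (int i = 0; i < blockCount(fileLength); i++) {
            System.out.println("block" + i + ": offset=" + blockOffset(i)
                    + " length=" + blockLength(fileLength, i));
        }
    }
}
```

For the example 200 MB file this gives block0 at offset 0 with 134217728 bytes and block1 at offset 134217728 with 75497472 bytes.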

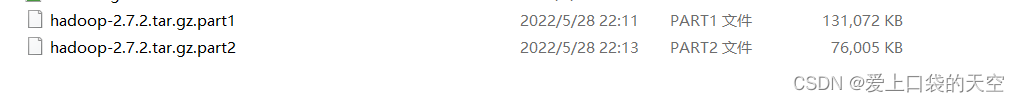

Merging the files:

Open a Windows command prompt, change to E:\, and run the following command to merge the data:

```bat
type hadoop-2.7.2.tar.gz.part2 >> hadoop-2.7.2.tar.gz.part1
```

After merging, rename hadoop-2.7.2.tar.gz.part1 to hadoop-2.7.2.tar.gz. The archive extracts cleanly, which confirms that the two parts reassemble the complete file.
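On systems without the Windows `type` command, the same concatenation can be done in Java. A minimal sketch; `MergeParts` is a hypothetical class, and it writes a new target file instead of appending to part1, so no rename step is needed.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Concatenates part files, in the given order, into one target file. */
class MergeParts {
    static void merge(Path target, Path... parts) throws IOException {
        try (OutputStream out = Files.newOutputStream(target)) { // creates or truncates target
            for (Path part : parts) {
                Files.copy(part, out); // appends this part's bytes to the open stream
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // Paths follow the example above; adjust them to your own download directory
        merge(Paths.get("e:/hadoop-2.7.2.tar.gz"),
              Paths.get("e:/hadoop-2.7.2.tar.gz.part1"),
              Paths.get("e:/hadoop-2.7.2.tar.gz.part2"));
    }
}
```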