文章目录

- 实验环境

- 实验内容

- 安装Hive

- 安装并配置MySQL

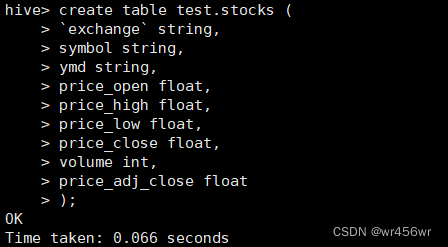

- 创建一个内部表stocks,字段分隔符为英文逗号,表结构如下图:

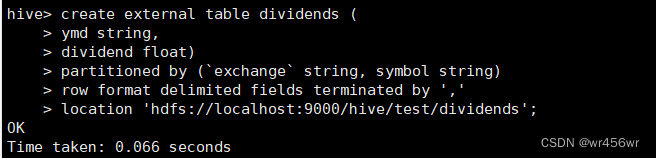

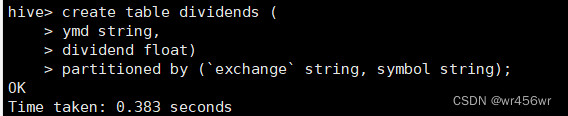

- 创建一个外部分区表dividends(分区字段为exchange和symbol),字段分隔符为英文逗号,表结构如下

- 从stocks.csv文件向stocks表中导入数据

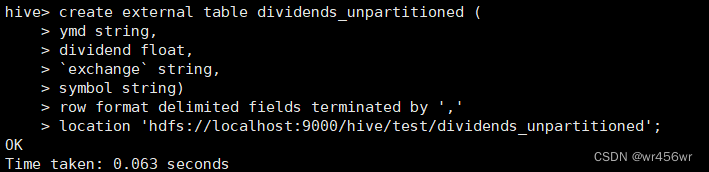

- 创建一个未分区的外部表dividends_unpartitioned,并从dividends.csv向其中导入数据,表结构如下:

- 以针对dividends_unpartitioned的查询为基础,利用Hive的自动分区特性向分区表dividends各个分区中插入对应数据

- 查询IBM公司(symbol = IBM)从 2000 年起所有支付股息的交易日(dividends 表中有对应记录)的收盘价(price_close)。

- 查询苹果公司(symbol = AAPL)2008 年 10 月每个交易日的涨跌情况,涨显示 rise,跌显示 fall,不变显示 unchange。

- 查询 stocks 表中收盘价(price_close)比开盘价(price_open)高得最多的那条记录的交易所(exchange)、股票代码(symbol)、日期(ymd)、收盘价、开盘价及二者差价。

- 从 stocks 表中查询苹果公司(symbol=AAPL)年平均调整后收盘价(price_adj_close)大于 50 美元的年份及年平均调整后收盘价。

- 查询每年年平均调整后收盘价(price_adj_close)前三名的公司的股票代码及年平均调整后收盘价。

- 实验总结

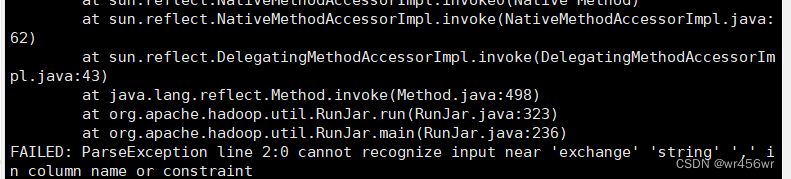

- 出现问题1:HiveException java.lang.RuntimeException

- 出现问题2:FAILED: ParseException line 2:0 cannot recognize input near 'exchange' 'string' ',' in column name or constraint

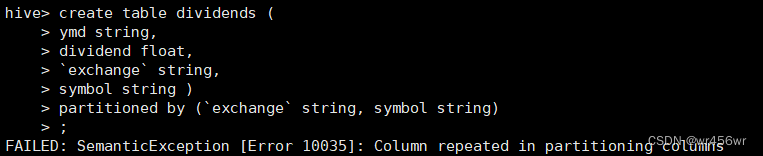

- 出现问题3:FAILED: SemanticException [Error 10035]: Column repeated in partitioning columns

- 出现问题4:FAILED: SemanticException [Error 10096]: Dynamic partition strict mode requires at least one static partition column. To turn this off set hive.exec.dynamic.partition.mode=nonstric

- 出现问题:hive FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

- 参考

实验环境

ubuntu18.04

实验内容

安装Hive

Hive下载官网:https://dlcdn.apache.org/hive/

下载Hive

wget https://dlcdn.apache.org/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz

解压Hive

tar -zxf apache-hive-3.1.2-bin.tar.gz

更改名称

mv apache-hive-3.1.2-bin hive

配置环境变量

vim /etc/profile

加入

export HiVE_HOME=/root/hive

export HADOOP_HOME=/root/hadoop

export PATH=$PATH:$HIVE_HOME/bin

使配置生效

source /etc/profile

配置Hive配置文件

#进入hive的安装目录

cd hive

#修改配置文件名称

mv conf/hive-default.xml.template conf/hive-default.xml

#创建一个hive-site.xml配置文件

vim conf/hive-site.xml

在hive-site.xml内写入一下内容:

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

<description>password to use against metastore database</description>

</property>

</configuration>

安装并配置MySQL

安装mysql

apt install mysql-server

关闭和启动mysql

systemctl start mysql

systemctl stop mysql

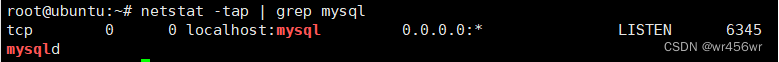

验证是否启动成功

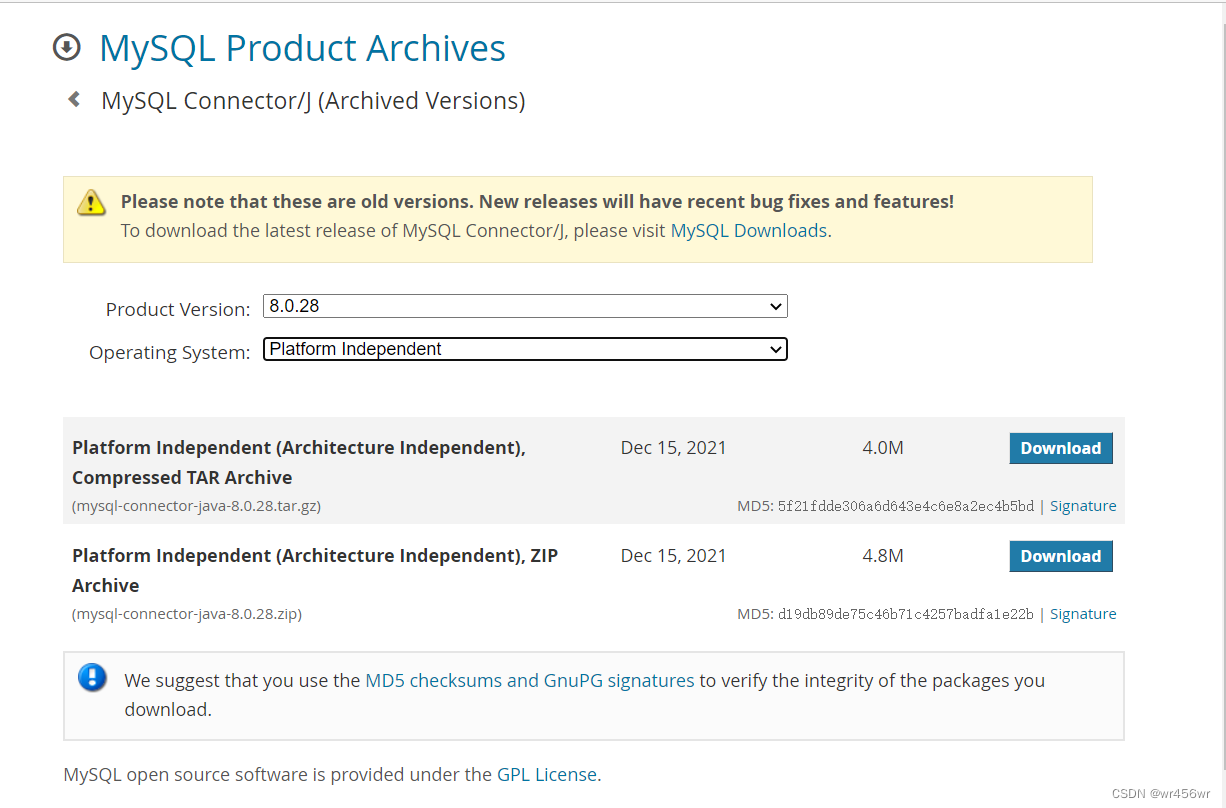

下载驱动:

https://downloads.mysql.com/archives/c-j/

wget https://downloads.mysql.com/archives/get/p/3/file/mysql-connector-java-8.0.25.tar.gz

解压:

tar -zxf mysql-connector-java-8.0.25.tar.gz

复制解压后的文件到hive/lib目录下

cp mysql-connector-java-8.0.25/mysql-connector-java-8.0.25.jar hive/lib/

登录mysql

mysql -u root -p

创建hive数据库

create database hive;

将所有数据库的所有表的所有权限赋给hive用户,后面的hive是配置hive-site.xml中配置的连接密码

grant all on . to hive@localhost identified by ‘hive’;

刷新mysql系统权限关系表

flush privileges;

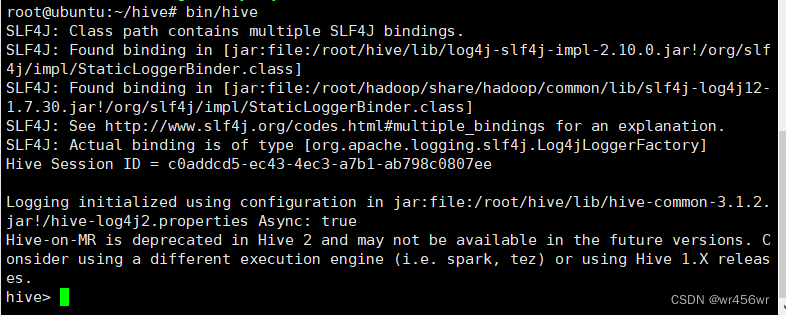

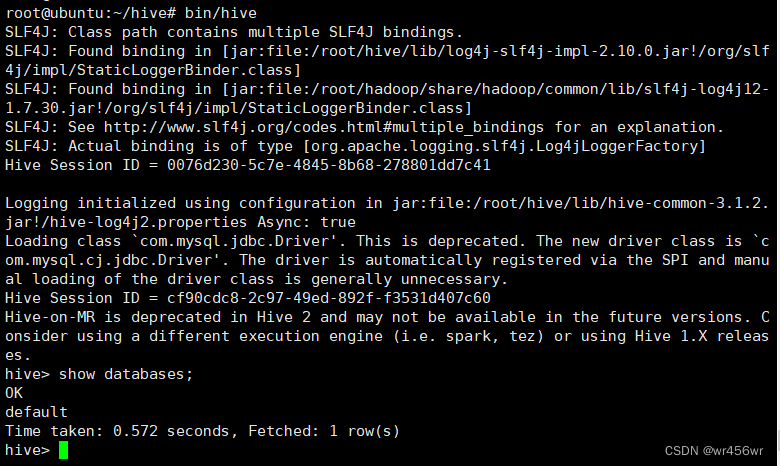

启动hive

bin/hive

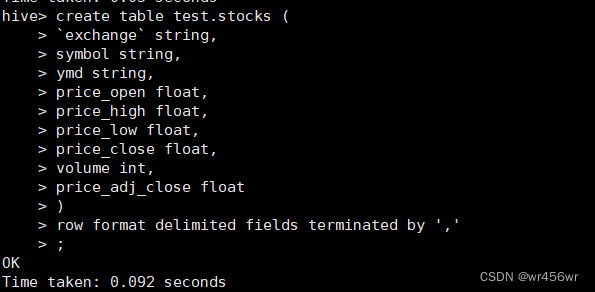

创建一个内部表stocks,字段分隔符为英文逗号,表结构如下图:

| col_name | data_type |

|---|---|

| i_exchange | string |

| symbol | string |

| ymd | string |

| price_open | float |

| price_high | float |

| price_low | float |

| price_close | float |

| volume | int |

| price_adj_close | float |

create table test.stocks (

`exchange` string,

symbol string,

ymd string,

price_open float,

price_high float,

price_low float,

price_close float,

volume int,

price_adj_close float

)

row format delimited fields terminated by ','

创建一个外部分区表dividends(分区字段为exchange和symbol),字段分隔符为英文逗号,表结构如下

| col_name | data_type |

|---|---|

| ymd | string |

| dividend | float |

| exchange | string |

| symbol | string |

create external table dividends (

ymd string,

dividend float)

partitioned by (`exchange` string, symbol string)

row format delimited fields terminated by ','

location 'hdfs://localhost:9000/hive/test/dividends'

从stocks.csv文件向stocks表中导入数据

stocks.csv文件放在我的/root/my_tmp目录下

导入数据:

load data local inpath’/root/my_tmp/stocks.csv’ into table stocks;

创建一个未分区的外部表dividends_unpartitioned,并从dividends.csv向其中导入数据,表结构如下:

| col_name | data_type |

|---|---|

| ymd | string |

| dividend | float |

| exchange | string |

| symbol | string |

创建表dividends_unpartitioned

create external table dividends_unpartitioned (

ymd string,

dividend float,

`exchange` string,

symbol string)

row format delimited fields terminated by ','

location 'hdfs://localhost:9000/hive/test/dividends_unpartitioned'

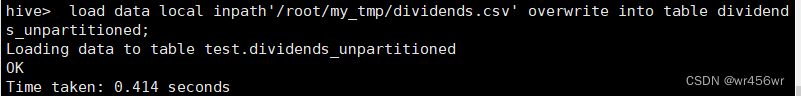

dividends.csv文件存放在/root/my_tmp目录下。

导入数据:

load data local inpath’/root/my_tmp/dividends.csv’ overwrite into table dividends_unpartitioned;

以针对dividends_unpartitioned的查询为基础,利用Hive的自动分区特性向分区表dividends各个分区中插入对应数据

insert overwrite table dividends partition (`exchange`, `symbol`) select `ymd`, `dividend`, `exchange`, `symbol` from dividends_unpartitioned;

set hive.exec.dynamic.partition=true;

set hive.exec.dynamic.partition.mode=nonstrict;

set hive.exec.max.dynamic.partitions.pernode=1000;

insert overwrite table dividends partition(`exchange`,`symbol`) select `ymd`,`dividend`,`exchange`,`symbol` from dividends_unpartitioned;

查询IBM公司(symbol = IBM)从 2000 年起所有支付股息的交易日(dividends 表中有对应记录)的收盘价(price_close)。

select s.ymd,s.symbol,s.price_close

from stocks s

LEFT SEMI JOIN

dividends d

ON s.ymd=d.ymd and s.symbol=d.symbol

where s.symbol='IBM' and year(ymd)>=2000;

查询苹果公司(symbol = AAPL)2008 年 10 月每个交易日的涨跌情况,涨显示 rise,跌显示 fall,不变显示 unchange。

select ymd,

case

when price_close-price_open>0 then 'rise'

when price_close-price_open<0 then 'fall'

else 'unchanged'

end as situation

from stocks

where symbol='AAPL' and substring(ymd,0,7)='2008-10';

查询 stocks 表中收盘价(price_close)比开盘价(price_open)高得最多的那条记录的交易所(exchange)、股票代码(symbol)、日期(ymd)、收盘价、开盘价及二者差价。

select `exchange`,`symbol`,`ymd`,price_close,price_open,price_close-price_open as `diff`

from

(

select *

from stocks

order by price_close-price_open desc

limit 1

)t;

从 stocks 表中查询苹果公司(symbol=AAPL)年平均调整后收盘价(price_adj_close)大于 50 美元的年份及年平均调整后收盘价。

select

year(ymd) as `year`,

avg(price_adj_close) as avg_price from stocks

where `exchange`='NASDAQ' and symbol='AAPL'

group by year(ymd)

having avg_price > 50;

查询每年年平均调整后收盘价(price_adj_close)前三名的公司的股票代码及年平均调整后收盘价。

select t2.`year`,symbol,t2.avg_price

from

(

select

*,row_number() over(partition by t1.`year` order by t1.avg_price desc) as `rank`

from

(

select

year(ymd) as `year`,

symbol,

avg(price_adj_close) as avg_price

from stocks

group by year(ymd),symbol

)t1

)t2

where t2.`rank`<=3;

实验总结

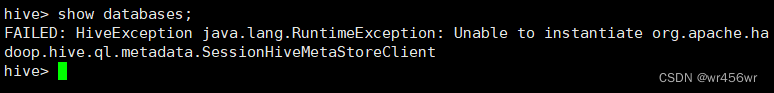

出现问题1:HiveException java.lang.RuntimeException

问题具体描述:

在hive的控制界面输入show databases;显示HiveException java.lang.RuntimeException错误

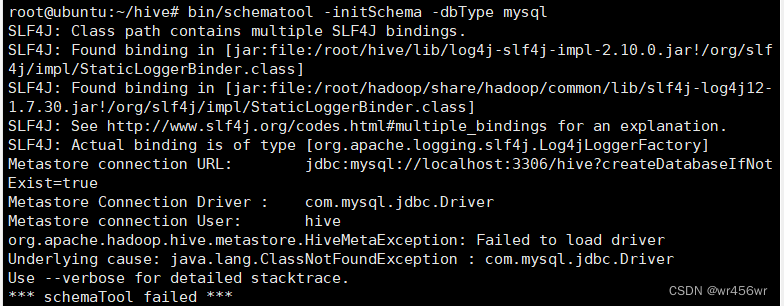

解决:

进行格式化

bin/schematool -initSchema -dbType mysql

出现错误:

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to load driver

;

解决:

将mysql驱动的jar包放到hive的lib目录下

cp mysql-connector-java-8.0.25/mysql-connector-java-8.0.25.jar hive/lib/

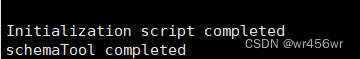

之后重新执行初始化

bin/schematool -initSchema -dbType mysql

之后问题成功解决

出现问题2:FAILED: ParseException line 2:0 cannot recognize input near ‘exchange’ ‘string’ ‘,’ in column name or constraint

在插入一下语句出现问题:

create table test.stocks (

exchange string,

symbol string,

ymd string,

price_open float,

price_high float,

price_low float,

price_close float,

volume int,

price_adj_close float

);

解决:将exchange字段改为i_exhange或者在exchange字段用 ` 符号包裹

因为 exchange 是一个关键字,用于将分区中的数据从一个表移动到另一个具有相同架构但还没有该分区的表以获取详细信息

出现问题3:FAILED: SemanticException [Error 10035]: Column repeated in partitioning columns

问题描述:创建分区表时出现错误

create table dividends (

ymd string,

dividend float,

`exchange` string,

symbol string )

partitioned by (`exchange` string, symbol string)

分区字段不能和表中的字段重复。

出现问题4:FAILED: SemanticException [Error 10096]: Dynamic partition strict mode requires at least one static partition column. To turn this off set hive.exec.dynamic.partition.mode=nonstric

分区字段是根据select出来的具体值进行动态分区,因此就需要开启:set hive.exec.dynamic.partition.mode=nonstrict

解决:

set hive.exec.dynamic.partition.mode=nonstrict

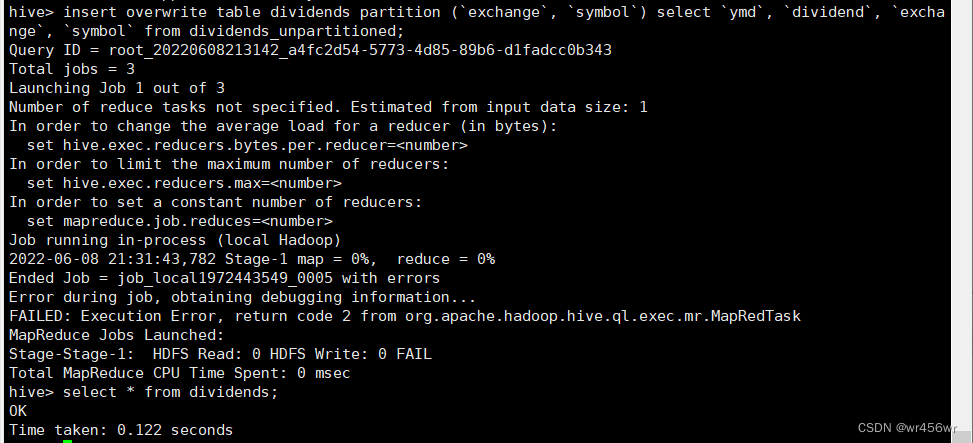

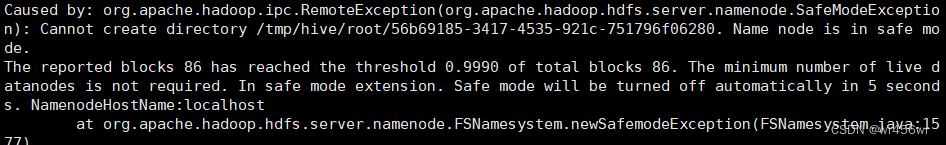

出现问题:hive FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

在执行分区插入时出错:

insert overwrite table dividends partition (`exchange`, `symbol`) select `ymd`, `dividend`, `exchange`, `symbol` from dividends_unpartitioned;

尝试解决:

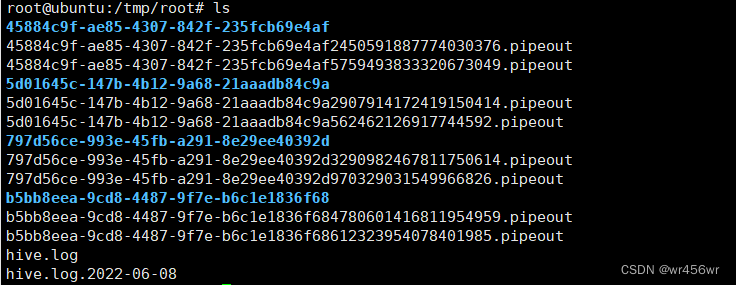

查看hive的日志,日志一般存放在/tmp/{当前用户}路径下

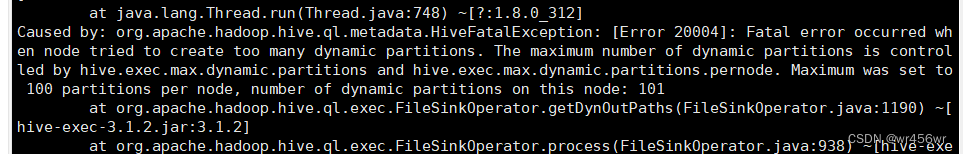

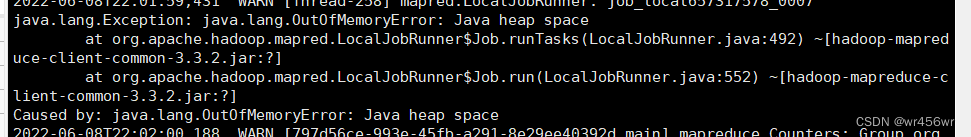

通过日志发现有两处错误,一处是Java 的堆内存不足,OutOfMemory,一个是分区数不足

设置扩大堆内存,设置map和reduce需要的最大堆内存为2048MB

set mapreduce.map.java.opts = -Xmx2048m;

set mapreduce.reduce.java.opts = -Xmx2048m;

设置允许每个节点创建最大动态分区数:

set hive.exec.max.dynamic.partitions.pernode=500;

重新进行分区导入任然报错。

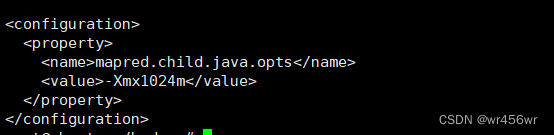

进入hadoop配置文件etc/hadoop/mapred-site.xml进行修改。

添加以下配置

<property>

<name>mapred.child.java.opts</name>

<value>-Xmx1024m</value>

</property>

停止hadoop重新启动hadoop并进入hive

如果进入失败可能是hadoop处于安全模式,等一会就好

重新进行操作,还是不行。。。。。。

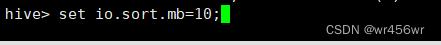

设置另一个参数(设置内存缓冲的大小,在suffle之前这个选项定义了map输出结果在内存里占用buffer的大小,当buffer达到某个阈值(后面那条配置),会启动一个后台线程来对buffer的内容进行排序,然后写入本地磁盘(一个spill文件)):

set io.sort.mb=10;

可以解决,但是进行数据操作时可能耗时太长

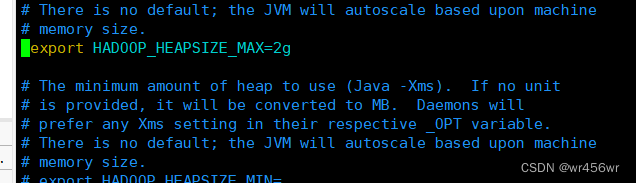

将hadoop的配置文件下的hadoop-env.sh加上最大堆值

之后重启hadoop

参考

https://www.jianshu.com/p/bcfa7bb40546

http://dblab.xmu.edu.cn/blog/2440-2/

https://blog.csdn.net/Maishuchongji/article/details/120923403

https://blog.csdn.net/zhengzaifeidelushang/article/details/117001957

https://www.coder.work/article/730910

https://blog.csdn.net/pingsha_luoyan/article/details/97272987

https://blog.csdn.net/Dax1n/article/details/81568442

JVM - 参数简介 https://www.jianshu.com/p/1c6b5c2e95f9

MapReduce 基本优化相关参数 https://www.cnblogs.com/yjt1993/p/9483032.html

hive报错Java heap space https://blog.csdn.net/qq_33792843/article/details/91875920

Hive一些参数设置 https://www.cnblogs.com/cfox/p/3849407.html