Installing Hive with Docker and on Windows

Installing Hive with Docker

Since Hadoop was installed with Docker, Hive is installed inside the same Docker container.

Download

Go to the official archive at https://archive.apache.org/dist/hive/ and download a version compatible with your Hadoop installation:

wget https://archive.apache.org/dist/hive/hive-2.3.8/apache-hive-2.3.8-bin.tar.gz
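The archive URL in the command above follows a fixed pattern, hive-<version>/apache-hive-<version>-bin.tar.gz. A minimal sketch, assuming bash and assuming other releases use the same layout, of a hypothetical helper `hive_tarball_url` that builds the URL from a version string so it is easy to switch to the release that matches your Hadoop:

```shell
#!/usr/bin/env bash
# hive_tarball_url is a hypothetical helper: it builds the Apache archive URL
# for a Hive release, following the layout of the wget command above.
hive_tarball_url() {
  local version="$1"
  printf 'https://archive.apache.org/dist/hive/hive-%s/apache-hive-%s-bin.tar.gz\n' \
    "$version" "$version"
}

HIVE_VERSION="2.3.8"   # pick the release that matches your Hadoop version
hive_tarball_url "$HIVE_VERSION"
# To download it: wget "$(hive_tarball_url "$HIVE_VERSION")"
```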

Copy into the container

Copy the Hive tarball into /usr/local of the Docker container named hadoop:

docker cp apache-hive-2.3.8-bin.tar.gz hadoop:/usr/local

Enter the container

Open a shell in the Hadoop container to install Hive:

docker exec -it hadoop /bin/bash

Extract and rename

cd /usr/local

tar -zxvf apache-hive-2.3.8-bin.tar.gz

mv apache-hive-2.3.8-bin hive
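`docker exec` does not necessarily start in /usr/local, so extracting with an explicit target directory avoids depending on the current directory. A minimal sketch, assuming bash and GNU or BSD tar; the helper name `install_hive_tarball` is hypothetical, and the demo uses a fake tarball in a temporary directory so it can run anywhere:

```shell
#!/usr/bin/env bash
# install_hive_tarball is a hypothetical helper: extract a Hive tarball into PREFIX
# and rename the versioned directory to PREFIX/hive, independent of the current directory.
install_hive_tarball() {
  local tarball="$1" prefix="$2"
  local dirname
  dirname="$(basename "$tarball" .tar.gz)"   # e.g. apache-hive-2.3.8-bin
  tar -xzf "$tarball" -C "$prefix"
  mv "$prefix/$dirname" "$prefix/hive"
}

# Demo on a throwaway directory with a fake tarball (no real Hive needed).
DEMO_DIR="$(mktemp -d)"
mkdir -p "$DEMO_DIR/src/apache-hive-2.3.8-bin/conf"
tar -czf "$DEMO_DIR/apache-hive-2.3.8-bin.tar.gz" -C "$DEMO_DIR/src" apache-hive-2.3.8-bin
install_hive_tarball "$DEMO_DIR/apache-hive-2.3.8-bin.tar.gz" "$DEMO_DIR"
ls "$DEMO_DIR/hive"    # prints: conf

# Inside the container you would call:
#   install_hive_tarball /usr/local/apache-hive-2.3.8-bin.tar.gz /usr/local
# and optionally: export HIVE_HOME=/usr/local/hive PATH=/usr/local/hive/bin:$PATH
```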

Edit hive-env.sh

cd hive/conf

cp hive-env.sh.template hive-env.sh

vi hive-env.sh

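Set the Hadoop installation directory and the Hive configuration directory. The template contains these commented-out defaults: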

# Set HADOOP_HOME to point to a specific hadoop install directory

# HADOOP_HOME=${bin}/../../hadoop

# Hive Configuration Directory can be controlled by:

# export HIVE_CONF_DIR=

Change them to:

# Set HADOOP_HOME to point to a specific hadoop install directory

HADOOP_HOME=/usr/local/hadoop

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/usr/local/hive/conf
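Instead of editing the file in vi, the same two settings can be appended from a script; later assignments in hive-env.sh take effect over the commented-out template lines. A minimal sketch, assuming bash; `configure_hive_env` is a hypothetical helper, and the demo uses a stand-in template in a temporary directory:

```shell
#!/usr/bin/env bash
# configure_hive_env is a hypothetical helper: create hive-env.sh from the template
# and append the HADOOP_HOME and HIVE_CONF_DIR settings shown above.
configure_hive_env() {
  local conf_dir="$1" hadoop_home="$2"
  cp "$conf_dir/hive-env.sh.template" "$conf_dir/hive-env.sh"
  cat >> "$conf_dir/hive-env.sh" <<EOF

# Set HADOOP_HOME to point to a specific hadoop install directory
HADOOP_HOME=$hadoop_home

# Hive Configuration Directory can be controlled by:
export HIVE_CONF_DIR=$conf_dir
EOF
}

# Demo on a temporary directory with a one-line stand-in template.
DEMO_CONF="$(mktemp -d)"
printf '# HADOOP_HOME=${bin}/../../hadoop\n' > "$DEMO_CONF/hive-env.sh.template"
configure_hive_env "$DEMO_CONF" /usr/local/hadoop
grep -E '^(HADOOP_HOME|export HIVE_CONF_DIR)=' "$DEMO_CONF/hive-env.sh"
# In the container: configure_hive_env /usr/local/hive/conf /usr/local/hadoop
```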

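Create hive-site.xml

Copy the template, then replace its entire contents with the configuration below; leaving the rest of the template in place can make Hive fail at startup with an XML parsing error.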

cp hive-default.xml.template hive-site.xml

vi hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- Database (metastore) settings -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://112.74.96.150:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

</property>

<!-- Location of Hive tables; the default is /user/hive/warehouse -->

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/hive/warehouse</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>112.74.96.150</value>

</property>

</configuration>
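Inside an XML element, the `&` that separates JDBC URL parameters must be written as `&amp;`; a bare `&` makes the whole file unparseable. A minimal sketch, assuming bash and POSIX sed, of two hypothetical helpers (`xml_escape`, `mysql_metastore_url`) that print the ConnectionURL value already escaped for hive-site.xml:

```shell
#!/usr/bin/env bash
# xml_escape: escape the characters that are not allowed literally in XML element text.
# In a sed replacement, "\&" is a literal ampersand (a bare "&" would mean "the match").
# The & rule runs first so the entities produced later are not escaped twice.
xml_escape() {
  printf '%s\n' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# mysql_metastore_url is hypothetical: the raw (unescaped) JDBC URL used above.
mysql_metastore_url() {
  local host="$1" port="${2:-3306}" db="${3:-hive}"
  printf 'jdbc:mysql://%s:%s/%s?createDatabaseIfNotExist=true&useSSL=false\n' "$host" "$port" "$db"
}

# Print a ready-to-paste <value> element for javax.jdo.option.ConnectionURL.
printf '<value>%s</value>\n' "$(xml_escape "$(mysql_metastore_url 112.74.96.150)")"
```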

Add the driver jar

Download the MySQL JDBC driver jar and copy it into Hive's lib directory:

wget https://repo1.maven.org/maven2/mysql/mysql-connector-java/5.1.49/mysql-connector-java-5.1.49.jar

docker cp mysql-connector-java-5.1.49.jar hadoop:/usr/local/hive/lib

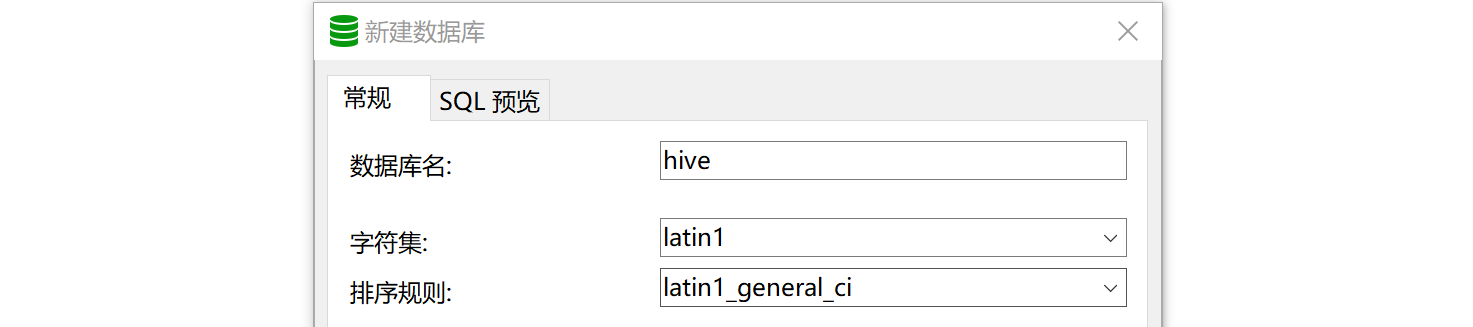

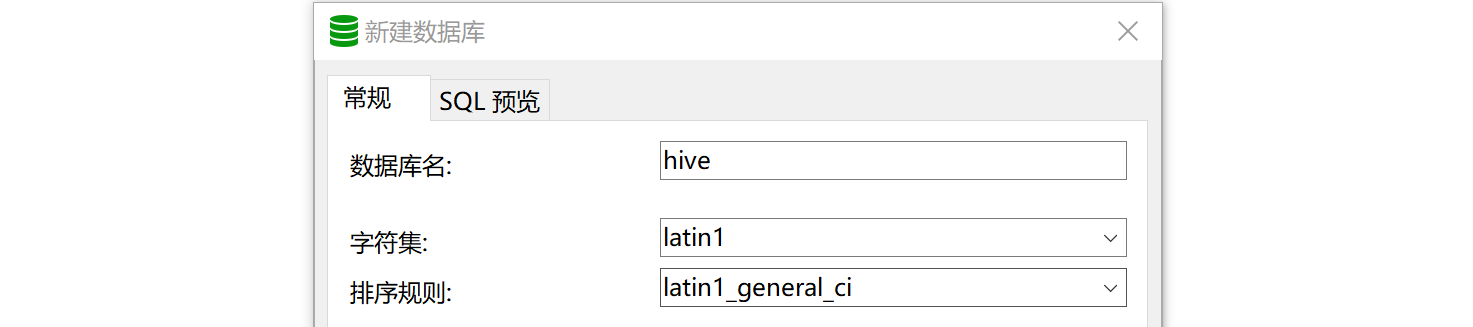
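The class in javax.jdo.option.ConnectionDriverName has to match the connector jar: `com.mysql.jdbc.Driver` is the Connector/J 5.1.x class used here, while Connector/J 8.x uses `com.mysql.cj.jdbc.Driver` and keeps the old name only as a deprecated alias. A minimal sketch, assuming bash and jars named in the standard `mysql-connector-java-<version>.jar` or `mysql-connector-j-<version>.jar` form; `mysql_driver_class` is a hypothetical helper that only looks at the major version:

```shell
#!/usr/bin/env bash
# mysql_driver_class is a hypothetical helper: find a MySQL Connector/J jar in LIB_DIR
# and print the JDBC driver class that matches its major version (5.1.x vs 8.x and later).
mysql_driver_class() {
  local lib_dir="$1" jar name version
  for jar in "$lib_dir"/mysql-connector-j*.jar; do
    # Without a match, the glob stays literal and the file does not exist.
    [ -e "$jar" ] || { echo "no Connector/J jar in $lib_dir" >&2; return 1; }
    name="$(basename "$jar" .jar)"   # e.g. mysql-connector-java-5.1.49
    version="${name##*-}"            # e.g. 5.1.49
    case "${version%%.*}" in         # major version
      5) echo "com.mysql.jdbc.Driver" ;;
      *) echo "com.mysql.cj.jdbc.Driver" ;;
    esac
    return 0                         # only the first matching jar is inspected
  done
}

# Demo with an empty stand-in jar in a temporary directory.
DEMO_LIB="$(mktemp -d)"
touch "$DEMO_LIB/mysql-connector-java-5.1.49.jar"
mysql_driver_class "$DEMO_LIB"   # prints: com.mysql.jdbc.Driver
# In the container: mysql_driver_class /usr/local/hive/lib
```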

Create the database

Create the database hive, which Hive needs for initialization; note that its character set must be latin1.
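A minimal sketch, assuming bash and the mysql command-line client, of a hypothetical helper `metastore_db_sql` that prints the statement creating the hive database with the latin1 character set. A commonly reported reason for latin1 is that creating the metastore schema in a utf8 database on older MySQL versions fails with an index key length error; treat that as background, not a guarantee for every version.

```shell
#!/usr/bin/env bash
# metastore_db_sql is a hypothetical helper: print the DDL for the metastore database.
metastore_db_sql() {
  local db="${1:-hive}"
  printf 'CREATE DATABASE IF NOT EXISTS `%s` DEFAULT CHARACTER SET latin1;\n' "$db"
}

metastore_db_sql hive
# To run it against the server from hive-site.xml (prompts for the password):
#   mysql -h 112.74.96.150 -u root -p -e "$(metastore_db_sql hive)"
```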

Start Hive

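An exception is thrown reporting an illegal special character at line 3233 of the configuration file. Deleting the extra configuration copied from the template, so that hive-site.xml contains only the properties above, fixes it.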

Caused by: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3233,102,"file:/usr/local/program/hive/conf/hive-site.xml"]
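The message above points at a character reference to code 0x8, which XML 1.0 does not allow even in escaped form; an unescaped `&` (for example `&useSSL` in a JDBC URL) breaks parsing the same way. A minimal sketch, assuming bash with standard grep and sed, of a hypothetical pre-flight check `check_hive_xml` that prints offending line numbers before Hive is started. It only checks decimal references and may report false positives for `&` inside comments or CDATA sections.

```shell
#!/usr/bin/env bash
# check_hive_xml is a hypothetical pre-flight check for hive-site.xml. It reports,
# with line numbers:
#   1. decimal character references to control characters XML 1.0 forbids
#      (&#0; to &#8;, &#11;, &#12;, &#14; to &#31;); hex forms are not checked;
#   2. bare "&" characters that do not start an entity or character reference.
# Returns 0 if nothing was found, 1 otherwise.
check_hive_xml() {
  local file="$1" status=0
  if grep -nE '&#0*([0-8]|1[124-9]|2[0-9]|3[01]);' "$file"; then
    echo "^ forbidden control-character reference(s) in $file" >&2
    status=1
  fi
  # Delete every well-formed entity; any "&" left over is a bare ampersand.
  if sed -E 's/&(amp|lt|gt|quot|apos|#[0-9]+|#x[0-9a-fA-F]+);//g' "$file" | grep -n '&'; then
    echo "^ unescaped '&' in $file (write it as &amp;)" >&2
    status=1
  fi
  return "$status"
}

# Demo: a config fragment with an unescaped & in the JDBC URL.
DEMO_XML="$(mktemp)"
cat > "$DEMO_XML" <<'EOF'
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&useSSL=false</value>
  </property>
</configuration>
EOF
check_hive_xml "$DEMO_XML" || echo "fix the lines above before starting Hive"
# In the container: check_hive_xml /usr/local/hive/conf/hive-site.xml
```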

bash-4.1# cd /usr/local/hive/bin

bash-4.1# ./hive

which: no hbase in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/java/default/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.7.0/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/local/hive/lib/hive-common-2.3.8.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. tez, spark) or using Hive 1.X releases.

hive> show databases;

OK

default

Time taken: 14.246 seconds, Fetched: 1 row(s)

hive>
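The interactive check above can also be scripted with the CLI's `-e` option, which runs a single statement and exits. A minimal sketch, assuming bash and that query results go to stdout; `has_default_db` is a hypothetical helper that succeeds when the built-in default database is listed, and the real check only runs where a `hive` command is on the PATH:

```shell
#!/usr/bin/env bash
# has_default_db is a hypothetical helper: read the output of "show databases;"
# on stdin and succeed if a line is exactly "default".
has_default_db() {
  grep -qx 'default'
}

# Only run the real check where the hive CLI is available.
if command -v hive >/dev/null 2>&1; then
  if hive -e 'show databases;' 2>/dev/null | has_default_db; then
    echo "Hive is up: default database found"
  else
    echo "Hive check failed" >&2
  fi
fi
```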

Installing Hive on Windows

Installing Hive on Windows is similar to the configuration above.

Download Hive

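Download a version compatible with your Hadoop installation from the official archive at http://archive.apache.org/dist/hive/ and extract it to a directory of your choice.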

Configure hive-env.sh

Copy hive-env.sh.template to hive-env.sh and add the following settings:

HADOOP_HOME=D:\Development\Hadoop

export HIVE_CONF_DIR=D:\Development\Hive\conf

export HIVE_AUX_JARS_PATH=D:\Development\Hive\lib

Configure hive-default.xml

Copy hive-default.xml.template to hive-default.xml and add the following settings:

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- Database (metastore) settings -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<!-- Prettier CLI output: print column headers and the current database -->

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

<!-- hive server -->

<property>

<name>hive.server2.webui.host</name>

<value>localhost</value>

</property>

<property>

<name>hive.server2.webui.port</name>

<value>10002</value>

</property>

<!-- Data storage location -->

<property>

<name>hive.metastore.warehouse.dir</name>

<value>D:\Development\Hive\warehouse</value>

</property>

</configuration>

Add the driver jar

Download the MySQL JDBC driver jar and copy it into Hive's lib directory:

wget https://repo1.maven.org/maven2/mysql/mysql-connector-java/5.1.49/mysql-connector-java-5.1.49.jar

Create the database

Create the database hive, which Hive needs for initialization; note that its character set must be latin1.

Start Hive

Start Hadoop: start-all.cmd

Initialize the Hive metadata by starting the metastore service: hive --service metastore

Start Hive: hive.cmd