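What is balance? As the name suggests, it's a balancer.

Its main job is to even out disk usage across the DataNodes.

Every write-up online sounds more impressive than the last, yet some of them even misspell the commands... And do you actually know what it is balancing?

So let's go straight to the official docs.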

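-- Check the balancer bandwidth, i.e. the maximum number of bytes per second each DataNode may use to move data while balancing

hdfs dfsadmin -getBalancerBandwidth node17:9867

Balancer bandwidth is 10485760 bytes per second.  -- 10 MB/s feels slow, so set it to 20 MB/s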
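The bandwidth is a plain integer in bytes per second, so MB figures have to be converted first. Below is a small Python sketch that does the conversion and builds the argument list from an integer, which also keeps invisible characters from sneaking into the number. The helper names are my own, not part of any Hadoop tooling, and the sketch only builds the command; it does not run it.

```python
from typing import List

MIB = 1024 * 1024  # 10485760 bytes = 10 * MIB, as printed by -getBalancerBandwidth


def bytes_to_mib_per_sec(num_bytes: int) -> float:
    """Convert a bytes-per-second value into MiB/s."""
    return num_bytes / MIB


def set_bandwidth_args(mib_per_sec: int) -> List[str]:
    """Build (but do not run) the argv for `hdfs dfsadmin -setBalancerBandwidth`."""
    value = mib_per_sec * MIB
    # str() of an int contains only digits, so no stray characters end up in the number.
    return ["hdfs", "dfsadmin", "-setBalancerBandwidth", str(value)]


if __name__ == "__main__":
    print(bytes_to_mib_per_sec(10485760))  # -> 10.0
    print(" ".join(set_bandwidth_args(20)))  # -> hdfs dfsadmin -setBalancerBandwidth 20971520
```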

There's a permissions catch here... the set command needs superuser privileges, so authenticate as hdfs first.

hdfs dfsadmin -setBalancerBandwidth 20971520

[root@worker01 /home/devuser]# hdfs dfsadmin -setBalancerBandwidth ?20971520

NumberFormatException: For input string: "?20971520"

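Usage: hdfs dfsadmin [-setBalancerBandwidth <bandwidth in bytes per second>]

-- The first attempt failed only because a stray invisible character (shown as ? in the error) ended up in front of the number; retyped, the command gets past parsing and runs into the permission check instead.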

[root@worker01 /home/devuser]# hdfs dfsadmin -setBalancerBandwidth 20971520

setBalancerBandwidth: Access denied for user hive. Superuser privilege is required

[root@worker01 /home/devuser]# kinit hdfs

Password for hdfs@CDH.COM:

[root@worker01 /home/devuser]# hdfs dfsadmin -setBalancerBandwidth 20971520

Balancer bandwidth is set to 20971520 for master.data.com/9.134.64.234:8020

Balancer bandwidth is set to 20971520 for node01.data.com/9.134.66.48:8020

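-- At this point I had a question: I know these IPs, but what is this port? I guessed it was the ipc_port. (It is actually the NameNode RPC port, 8020. In an HA cluster the command is sent to both NameNodes, which pass the new limit on to the DataNodes, hence one line per NameNode. The DataNode IPC port, 9867 above, is only needed for -getBalancerBandwidth.)

Now let's get the balancer ready.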

[root@worker01 /home/devuser]# hdfs balancer --help

Usage: hdfs balancer

[-policy <policy>] the balancing policy: datanode or blockpool

[-threshold <threshold>] Percentage of disk capacity

[-exclude [-f <hosts-file> | <comma-separated list of hosts>]] Excludes the specified datanodes.

[-include [-f <hosts-file> | <comma-separated list of hosts>]] Includes only the specified datanodes.

[-source [-f <hosts-file> | <comma-separated list of hosts>]] Pick only the specified datanodes as source nodes.

[-blockpools <comma-separated list of blockpool ids>] The balancer will only run on blockpools included in this list.

[-idleiterations <idleiterations>] Number of consecutive idle iterations (-1 for Infinite) before exit.

[-runDuringUpgrade] Whether to run the balancer during an ongoing HDFS upgrade.This is usually not desired since it will not affect used space on over-utilized machines.

Generic options supported are:

-conf <configuration file> specify an application configuration file

-D <property=value> define a value for a given property

-fs <file:///|hdfs://namenode:port> specify default filesystem URL to use, overrides 'fs.defaultFS' property from configurations.

-jt <local|resourcemanager:port> specify a ResourceManager

-files <file1,...> specify a comma-separated list of files to be copied to the map reduce cluster

-libjars <jar1,...> specify a comma-separated list of jar files to be included in the classpath

-archives <archive1,...> specify a comma-separated list of archives to be unarchived on the compute machines

The general command line syntax is:

command [genericOptions] [commandOptions]

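Here's a question worth thinking about first: what counts as balanced?

Say there are 10 DataNodes with 100 GB each, 1 TB in total, and 200 GB used overall, where dn1 uses 1 KB and dn2 uses 99 GB.

How should that be balanced? Should dn1 and dn2 each end up at 20 GB, or at 50 GB? I don't know either.

Let's experiment.

threshold

hdfs balancer -threshold 5  -- threshold = 5, i.e. each DataNode's usage (DFS used / capacity) may differ from the cluster-wide average by at most 5 percentage points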
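To answer the 10-DataNode question above: as the Apache HDFS Balancer documentation describes it, a cluster counts as balanced when every DataNode's utilization (used space / capacity) differs from the whole cluster's utilization (total used / total capacity) by no more than the threshold. The Python sketch below applies that rule to the hypothetical cluster from the question, assuming dn1's 1 KB rounds to 0 and the remaining 101 GB is spread evenly over the other 8 nodes. It suggests the answer is neither 50 GB nor exactly 20 GB: each 100 GB node only has to end up somewhere between 15 and 25 GB.

```python
from typing import List, Tuple


def cluster_utilization(used: List[float], capacity: List[float]) -> float:
    """Cluster-wide utilization in percent: total used / total capacity."""
    return 100.0 * sum(used) / sum(capacity)


def is_balanced(node_used: float, node_capacity: float, avg_pct: float, threshold_pct: float) -> bool:
    """A node is balanced if its utilization is within threshold_pct points of the average."""
    util = 100.0 * node_used / node_capacity
    return abs(util - avg_pct) <= threshold_pct


def target_range(node_capacity: float, avg_pct: float, threshold_pct: float) -> Tuple[float, float]:
    """Range of used space (same unit as node_capacity) that counts as balanced."""
    low = node_capacity * (avg_pct - threshold_pct) / 100.0
    high = node_capacity * (avg_pct + threshold_pct) / 100.0
    return max(low, 0.0), min(high, node_capacity)


# Hypothetical cluster from the text: 10 DataNodes x 100 GB, 200 GB used in total.
capacity = [100.0] * 10
used = [0.0, 99.0] + [101.0 / 8] * 8  # dn1 ~ 0 GB, dn2 = 99 GB, the other 8 share 101 GB

avg = cluster_utilization(used, capacity)
low, high = target_range(100.0, avg, 5.0)
print(f"cluster average: {avg:.1f}%")  # -> cluster average: 20.0%
print(f"each 100 GB node should end up with {low:.0f}-{high:.0f} GB")  # -> 15-25 GB
print("dn2 balanced now?", is_balanced(99.0, 100.0, avg, 5.0))  # -> False
```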

[root@worker01 /home/devuser]# hdfs balancer -threshold 5

22/06/27 11:29:18 INFO balancer.Balancer: Using a threshold of 5.0

22/06/27 11:29:18 INFO balancer.Balancer: namenodes = [hdfs://s2cluster]

22/06/27 11:29:18 INFO balancer.Balancer: parameters = Balancer.BalancerParameters [BalancingPolicy.Node, threshold = 5.0, max idle iteration = 5, #excluded nodes = 0, #included nodes = 0, #source nodes = 0, #blockpools = 0, run during upgrade = false]

22/06/27 11:29:18 INFO balancer.Balancer: included nodes = []

22/06/27 11:29:18 INFO balancer.Balancer: excluded nodes = []

22/06/27 11:29:18 INFO balancer.Balancer: source nodes = []

Time Stamp Iteration# Bytes Already Moved Bytes Left To Move Bytes Being Moved

22/06/27 11:29:19 INFO balancer.KeyManager: Block token params received from NN: update interval=10hrs, 0sec, token lifetime=10hrs, 0sec

22/06/27 11:29:19 INFO block.BlockTokenSecretManager: Setting block keys

22/06/27 11:29:19 INFO balancer.KeyManager: Update block keys every 2hrs, 30mins, 0sec

22/06/27 11:29:19 INFO balancer.Balancer: dfs.balancer.movedWinWidth = 5400000 (default=5400000)

22/06/27 11:29:19 INFO balancer.Balancer: dfs.balancer.moverThreads = 1000 (default=1000)

22/06/27 11:29:19 INFO balancer.Balancer: dfs.balancer.dispatcherThreads = 200 (default=200)

22/06/27 11:29:19 INFO balancer.Balancer: dfs.datanode.balance.max.concurrent.moves = 50 (default=50)

22/06/27 11:29:19 INFO balancer.Balancer: dfs.balancer.getBlocks.size = 2147483648 (default=2147483648)

22/06/27 11:29:19 INFO balancer.Balancer: dfs.balancer.getBlocks.min-block-size = 10485760 (default=10485760)

22/06/27 11:29:19 INFO block.BlockTokenSecretManager: Setting block keys

22/06/27 11:29:19 INFO balancer.Balancer: dfs.balancer.max-size-to-move = 10737418240 (default=10737418240)

22/06/27 11:29:19 INFO balancer.Balancer: dfs.blocksize = 134217728 (default=134217728)

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.117.90:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.68.200:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.80.60:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.81.221:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.124.14:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.122.87:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.83.33:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.163.60:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.115.141:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.124.36:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.71.192:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.123.37:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.117.73:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.163.238:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.116.73:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.77.69:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.123.190:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.81.127:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.163.174:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.80.64:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.122.224:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.123.215:1004

22/06/27 11:29:19 INFO net.NetworkTopology: Adding a new node: /default/9.134.122.6:1004

22/06/27 11:29:19 INFO balancer.Balancer: 3 over-utilized: [9.134.117.90:1004:DISK, 9.134.124.14:1004:DISK, 9.134.116.73:1004:DISK]

22/06/27 11:29:19 INFO balancer.Balancer: 5 underutilized: [9.134.80.60:1004:DISK, 9.134.81.221:1004:DISK, 9.134.124.36:1004:DISK, 9.134.81.127:1004:DISK, 9.134.80.64:1004:DISK]

22/06/27 11:29:19 INFO balancer.Balancer: Need to move 51.86 GB to make the cluster balanced.

22/06/27 11:29:19 INFO balancer.Balancer: chooseStorageGroups for SAME_RACK: overUtilized => underUtilized

22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.117.90:1004:DISK to 9.134.80.60:1004:DISK

22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.124.14:1004:DISK to 9.134.81.221:1004:DISK

22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.116.73:1004:DISK to 9.134.124.36:1004:DISK

22/06/27 11:29:19 INFO balancer.Balancer: chooseStorageGroups for SAME_RACK: overUtilized => belowAvgUtilized

22/06/27 11:29:19 INFO balancer.Balancer: chooseStorageGroups for SAME_RACK: underUtilized => aboveAvgUtilized

22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.68.200:1004:DISK to 9.134.81.127:1004:DISK

22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK

22/06/27 11:29:19 INFO balancer.Balancer: chooseStorageGroups for ANY_OTHER: overUtilized => underUtilized

22/06/27 11:29:19 INFO balancer.Balancer: chooseStorageGroups for ANY_OTHER: overUtilized => belowAvgUtilized

22/06/27 11:29:19 INFO balancer.Balancer: chooseStorageGroups for ANY_OTHER: underUtilized => aboveAvgUtilized

22/06/27 11:29:19 INFO balancer.Balancer: Will move 50 GB in this iteration

22/06/27 11:29:19 INFO balancer.Dispatcher: Limiting threads per target to the specified max.

22/06/27 11:29:19 INFO balancer.Dispatcher: Allocating 50 threads per target.

22/06/27 11:29:19 INFO retry.RetryInvocationHandler: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category READ is not supported in state standby. Visit https://s.apache.org/sbnn-error

at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:88)

at org.apache.hadoop.hdfs.server.namenode.NameNode$NameNodeHAContext.checkOperation(NameNode.java:1962)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkOperation(FSNamesystem.java:1421)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlocks(FSNamesystem.java:1716)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getBlocks(NameNodeRpcServer.java:631)

at org.apache.hadoop.hdfs.protocolPB.NamenodeProtocolServerSideTranslatorPB.getBlocks(NamenodeProtocolServerSideTranslatorPB.java:89)

at org.apache.hadoop.hdfs.protocol.proto.NamenodeProtocolProtos$NamenodeProtocolService$2.callBlockingMethod(NamenodeProtocolProtos.java:12966)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:523)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:991)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:869)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:815)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2675)

, while invoking NamenodeProtocolTranslatorPB.getBlocks over master.data.com/9.134.64.234:8020. Trying to failover immediately.

22/06/27 11:29:19 WARN retry.RetryInvocationHandler: A failover has occurred since the start of call #13 NamenodeProtocolTranslatorPB.getBlocks over node01.data.com/9.134.66.48:8020

22/06/27 11:29:19 WARN retry.RetryInvocationHandler: A failover has occurred since the start of call #16 NamenodeProtocolTranslatorPB.getBlocks over node01.data.com/9.134.66.48:8020

22/06/27 11:29:19 WARN retry.RetryInvocationHandler: A failover has occurred since the start of call #14 NamenodeProtocolTranslatorPB.getBlocks over node01.data.com/9.134.66.48:8020

22/06/27 11:29:19 WARN retry.RetryInvocationHandler: A failover has occurred since the start of call #12 NamenodeProtocolTranslatorPB.getBlocks over node01.data.com/9.134.66.48:8020

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1090172187_16434653 with size=112350808 from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK through 9.134.122.87:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1090197454_16459920 with size=134217728 from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK through 9.134.122.87:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1090172224_16434690 with size=92790751 from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK through 9.134.122.87:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1090200946_16463412 with size=134217728 from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK through 9.134.122.87:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1090243729_16506195 with size=10589105 from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK through 9.134.122.87:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1090383941_16646407 with size=73175462 from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK through 9.134.122.87:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1090243736_16506202 with size=10589105 from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK through 9.134.68.200:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1090384199_16646665 with size=265221529 from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK through 9.134.122.87:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1090374653_16637119 with size=15559033 from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK through 9.134.122.87:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1087149463_13410403 with size=70067422 from 9.134.117.90:1004:DISK to 9.134.80.60:1004:DISK through 9.134.163.174:1004

22/06/27 11:29:19 INFO balancer.Dispatcher: Start moving blk_1085874680_12135195 with size=18098983 from 9.134.68.200:1004:DISK to 9.134.81.127:1004:DISK through 9.134.83.33:1004
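The "Decided to move" lines can be added up to see how the plan relates to the configuration printed earlier in the log. A Python sketch, assuming the line format shown above (sizes printed like "10 GB"): each of the five planned moves is 10 GB, which matches dfs.balancer.max-size-to-move = 10737418240 bytes, so this iteration plans 50 GB even though the log says 51.86 GB is needed; the remainder is left for a later iteration.

```python
import re
from typing import List, Tuple

# Size units as printed in the balancer log, converted to GB (powers of 1024).
UNITS = {"KB": 1 / 1024 ** 2, "MB": 1 / 1024, "GB": 1.0, "TB": 1024.0}
DECIDED_RE = re.compile(r"Decided to move ([\d.]+) (KB|MB|GB|TB) bytes from (\S+) to (\S+)")
MAX_SIZE_TO_MOVE_GB = 10737418240 / 1024 ** 3  # dfs.balancer.max-size-to-move from the log


def planned_moves(log_text: str) -> List[Tuple[str, str, float]]:
    """Return (source, target, GB) for every 'Decided to move' line."""
    return [
        (m.group(3), m.group(4), float(m.group(1)) * UNITS[m.group(2)])
        for m in DECIDED_RE.finditer(log_text)
    ]


LOG = """\
22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.117.90:1004:DISK to 9.134.80.60:1004:DISK
22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.124.14:1004:DISK to 9.134.81.221:1004:DISK
22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.116.73:1004:DISK to 9.134.124.36:1004:DISK
22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.68.200:1004:DISK to 9.134.81.127:1004:DISK
22/06/27 11:29:19 INFO balancer.Balancer: Decided to move 10 GB bytes from 9.134.122.87:1004:DISK to 9.134.80.64:1004:DISK
"""

moves = planned_moves(LOG)
total = sum(gb for _, _, gb in moves)
print(f"{len(moves)} moves, {total:.0f} GB planned this iteration")  # -> 5 moves, 50 GB
print("every move within max-size-to-move:", all(gb <= MAX_SIZE_TO_MOVE_GB for _, _, gb in moves))
```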

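Interestingly, errors showed up while the log was being printed. Let's go through the log:

1. Using a threshold of 5.0: the threshold was picked up.

2. namenodes = [hdfs://s2cluster]: it found the cluster, and it is an HA nameservice.

3. included nodes = [], excluded nodes = []: we did not restrict the run to, or exclude, any nodes.

4. dfs.balancer.xx: a lot of default parameters are printed.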

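5. 3 over-utilized: [9.134.117.90:1004:DISK, 9.134.124.14:1004:DISK, 9.134.116.73:1004:DISK]

5 underutilized: [9.134.80.60:1004:DISK, 9.134.81.221:1004:DISK, 9.134.124.36:1004:DISK, 9.134.81.127:1004:DISK, 9.134.80.64:1004:DISK]

This means 3 nodes are above the allowed band and 5 are below it. Let's first look at the 3 over-utilized nodes; something seems off about them.

It turns out the three have nothing in common. Let's set that aside for now.

6. It starts pairing sources with targets: over-utilized -> under-utilized, then over-utilized -> below-average, then above-average -> under-utilized, first within the same rack (SAME_RACK) and then across racks (ANY_OTHER).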

7. When it started moving blocks it threw an error: Operation category READ is not supported in state standby. Obviously it was talking to the standby NameNode.

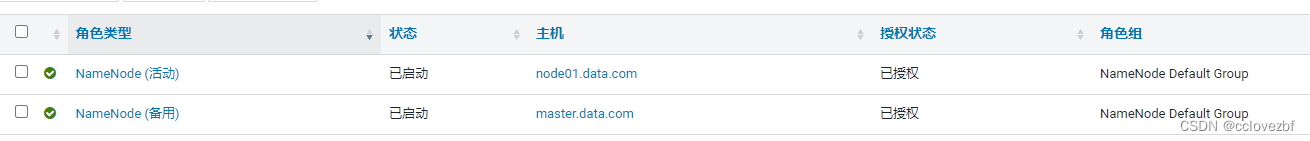

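8. The key line: , while invoking NamenodeProtocolTranslatorPB.getBlocks over master.data.com/9.134.64.234:8020. Trying to failover immediately. Why on earth did it use master? The screenshot shows master is the standby; with HA it should have used node01. Argh! (This is most likely harmless: an HA client starts with one of the configured NameNodes and, when that one answers with StandbyException, fails over to the other, which is exactly what item 9 shows.)

9. Surprisingly, though, the later log lines show it switched to node01, the active NameNode, and block-move lines started appearing.

10. Failed to move blk_1074919453_1178890 with size=134217728 from 9.134.68.200:1004:DISK to 9.134.81.127:1004:DISK through 9.134.80.60:1004

java.io.IOException: Got error, status=ERROR, status message Not able to receive block 1074919453 from /9.134.70.1:44227 because threads quota is exceeded., reportedBlock move is failed  -- Looks like a thread quota was exceeded? (This message comes from the receiving DataNode when it is already running as many concurrent balancer moves as dfs.datanode.balance.max.concurrent.moves allows, 50 in the log above; the balancer can try the block again in a later iteration.)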
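Items 5 and 6 can be mimicked in a few lines. The Python sketch below is my simplified reading of the four groups the balancer logs (over-utilized, above-average, below-average, under-utilized) and of the pairing order visible in the chooseStorageGroups lines. It ignores racks, storage types and the per-node move size limits, and the node names and utilizations are made up for illustration.

```python
from typing import Dict, List, Tuple

# (source group, target group) in the order the log shows; the balancer runs it for SAME_RACK, then ANY_OTHER.
PAIRING_ORDER = [("over", "under"), ("over", "belowAvg"), ("aboveAvg", "under")]


def classify(utilization: Dict[str, float], avg: float, threshold: float) -> Dict[str, List[str]]:
    """Sort nodes into the four groups by how far their utilization (%) is from the cluster average."""
    groups: Dict[str, List[str]] = {"over": [], "aboveAvg": [], "belowAvg": [], "under": []}
    for node, util in sorted(utilization.items()):
        diff = util - avg
        within = abs(diff) <= threshold
        if diff > 0:
            groups["aboveAvg" if within else "over"].append(node)
        else:
            groups["belowAvg" if within else "under"].append(node)
    return groups


def candidate_pairs(groups: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """List (source, target) candidates in pairing order; the real balancer also caps bytes per node."""
    pairs = []
    for src_group, dst_group in PAIRING_ORDER:
        for src in groups[src_group]:
            for dst in groups[dst_group]:
                pairs.append((src, dst))
    return pairs


# Made-up utilizations (percent) with a cluster average of 30% and threshold 5.
nodes = {"dnA": 41.0, "dnB": 33.0, "dnC": 28.0, "dnD": 19.5}
groups = classify(nodes, avg=30.0, threshold=5.0)
print(groups)
print(candidate_pairs(groups))
```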

-----------------------

What does the run above tell us?

As soon as you run it with a threshold, it takes effect right away and starts moving data!!!

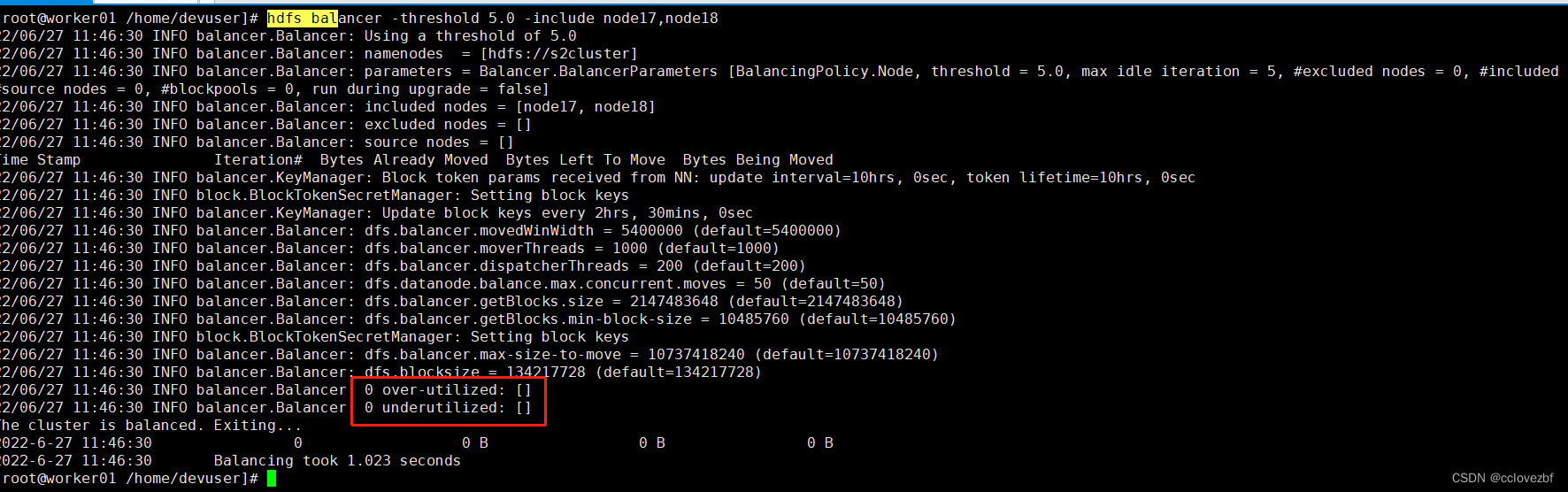

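So I changed tactics and only balanced two of the nodes.

It turned out there were no nodes above or below the threshold at all... something felt off.

So I ran hdfs balancer -threshold 5 again.

I had cancelled it earlier because it kept throwing errors... Later I found these errors can be ignored: after ten-odd seconds it runs normally. The early ones are just connection errors and the like.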

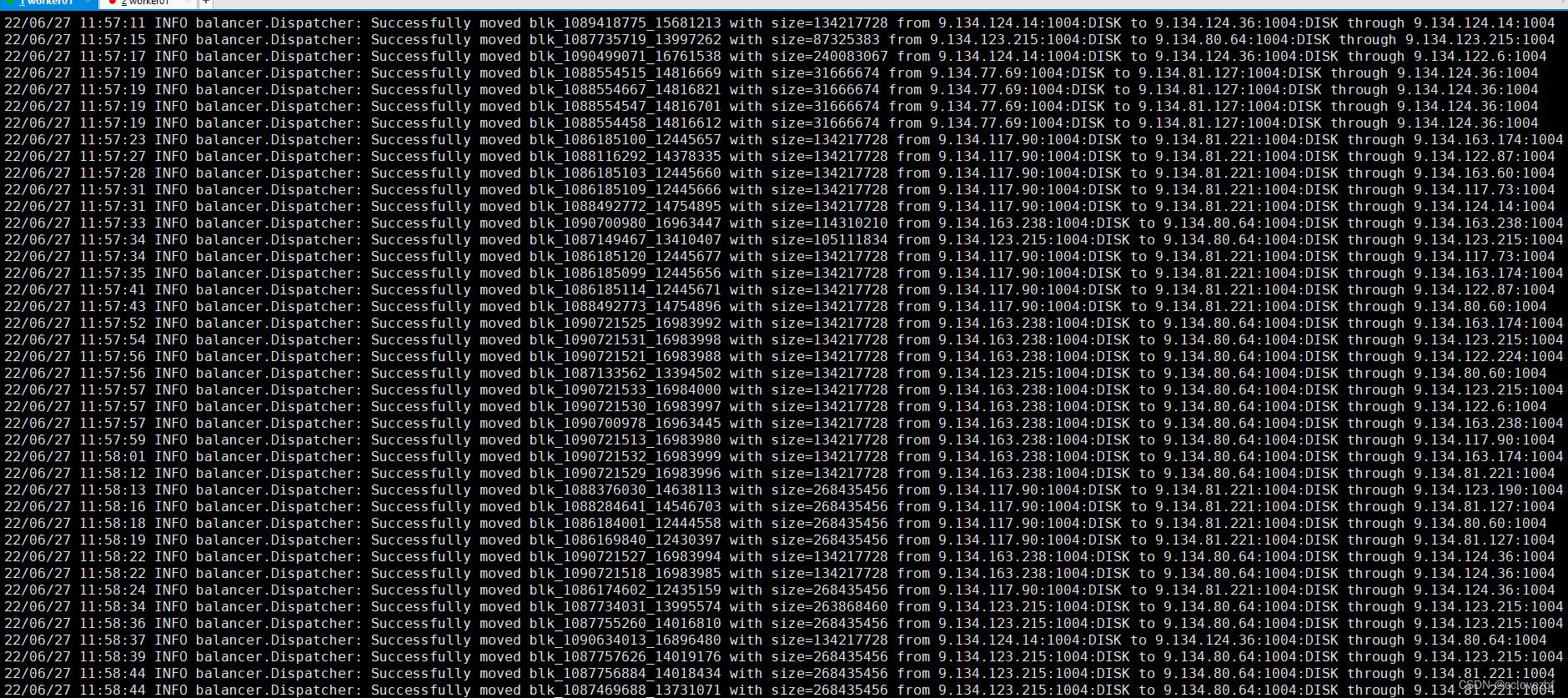

Once it runs normally, it shows that it is moving blocks from A to B.

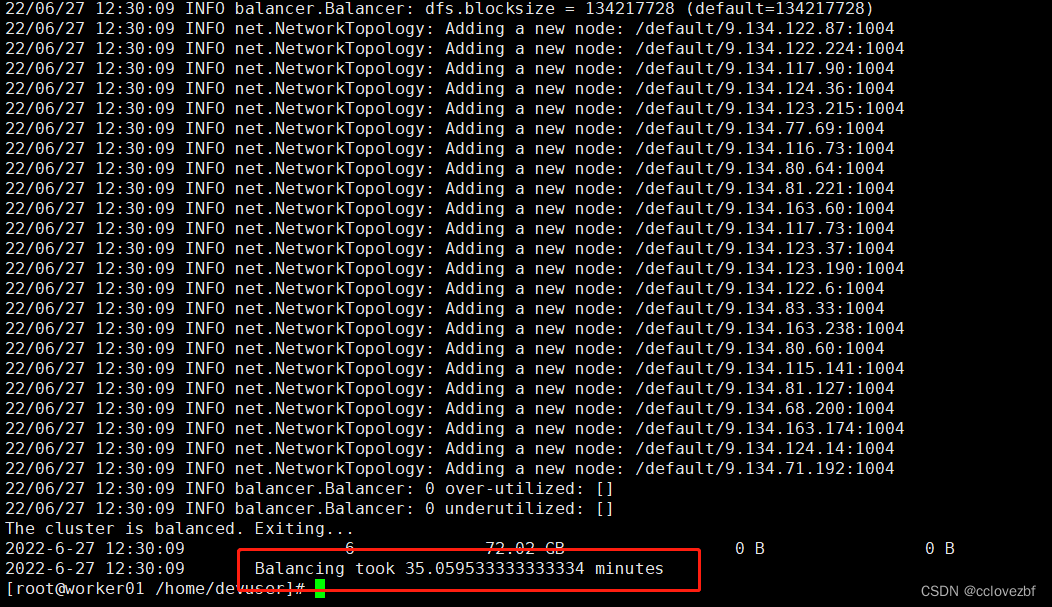

Here it reports that the run finished, but the CDH console looked exactly the same as before.

Actually, I had made a mistake here!!

Disk usage is not the same thing as HDFS capacity usage!!

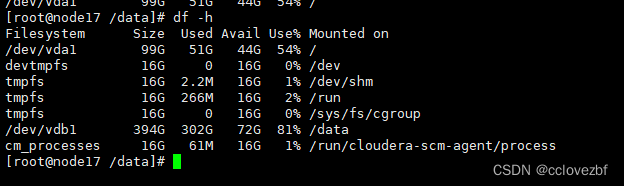

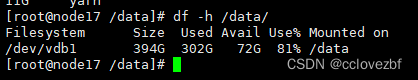

磁盘的使用情况df -h以node17为例!cdh显示 376G/491G

?

?

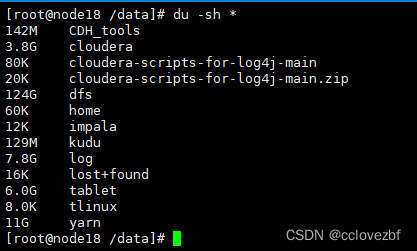

?node17所有容量相加 差不多是500多G,但是/data目录是394G,使用的差不多是302+51=353G

Then run hdfs dfsadmin -report and search for node17.

It shows a Configured Capacity of 383 GB, with 116 GB actually used by DFS and 175 GB of non-DFS usage.
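Instead of searching the report by eye, the per-node blocks of hdfs dfsadmin -report can be parsed. This Python sketch assumes the field layout of the report printed at the end of this post (Name:, Configured Capacity:, DFS Used:, Non DFS Used:, DFS Remaining:, DFS Used%:) and stops at the Dead datanodes section; other Hadoop versions may print slightly different fields. The sample is an excerpt of node17's block from that report.

```python
import re
from typing import Dict

BYTE_FIELDS = ("Configured Capacity", "DFS Used", "Non DFS Used", "DFS Remaining")
NAME_RE = re.compile(r"^Name: \S+ \((\S+)\)")


def parse_datanodes(report: str) -> Dict[str, Dict[str, float]]:
    """Map each live DataNode's hostname to its byte counters and DFS Used%."""
    nodes: Dict[str, Dict[str, float]] = {}
    current = None
    for raw in report.splitlines():
        line = raw.strip()
        if line.startswith("Dead datanodes"):
            break  # only live nodes are of interest
        match = NAME_RE.match(line)
        if match:
            current = nodes.setdefault(match.group(1), {})
            continue
        if current is None or ":" not in line:
            continue  # cluster summary lines before the first "Name:" are skipped
        key, _, value = line.partition(":")
        key = key.strip()
        if key in BYTE_FIELDS:
            current[key] = int(value.split()[0])  # "124636291072 (116.08 GB)" -> bytes
        elif key == "DFS Used%":
            current[key] = float(value.strip().rstrip("%"))
    return nodes


SAMPLE = """\
Configured Capacity: 10424513773568 (9.48 TB)
DFS Used%: 40.56%
Live datanodes (23):
Name: 9.134.117.73:1004 (node17.data.com)
Hostname: node17.data.com
Configured Capacity: 411883184128 (383.60 GB)
DFS Used: 124636291072 (116.08 GB)
Non DFS Used: 188638429184 (175.68 GB)
DFS Remaining: 77116903424 (71.82 GB)
DFS Used%: 30.26%
Last contact: Mon Jun 27 13:50:41 CST 2022
Dead datanodes (2):
Name: 9.134.74.52:1004 (node06.data.com)
"""

node17 = parse_datanodes(SAMPLE)["node17.data.com"]
print(node17["DFS Used%"])  # -> 30.26
# Recomputing from the raw bytes: DFS Used / Configured Capacity
print(round(100 * node17["DFS Used"] / node17["Configured Capacity"], 2))  # -> 30.26
```

On a real cluster the same function can be fed the full output of hdfs dfsadmin -report.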

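Normally the HDFS data directory sits under /data.

You can see that dfs takes up 117 GB, and the non-HDFS rest is roughly 5.7 + 7.1 + 8.7 + 137 + 11 + the remainder = 175 GB.

From this we can conclude:

/data itself has 394 GB of capacity, close to the 383 GB in the report. (I don't know where the difference comes from.)

/data also holds non-HDFS files such as Kudu tablet logs, which take 175 GB, exactly matching the report!!

/data/dfs = 117 GB, matching the 116.08 GB in the report!

Only then did I understand why node17 and node18 looked so different earlier, and why it seemed they couldn't be balanced:

compare node18's /data usage: there, HDFS itself accounts for a good share of the used space,

while node17's Kudu tablet directory alone takes over 100 GB, which made me think the two were far apart.

All of these screenshots were taken after balancing. Whether the two were balanced beforehand, I can't go back and check...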

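Summary

When we balance HDFS, what exactly are we balancing?

The /data directory? The size of /data/dfs? Or /data/dfs as a percentage of /data?

My take: /data/dfs as a percentage of /data, i.e. each DataNode's DFS Used% in hdfs dfsadmin -report.

Since I had already balanced successfully,

you can see that every DataNode's DFS Used% now falls roughly between 24% and 34%, a band consistent with our ±5% threshold.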

Configured Capacity: 10424513773568 (9.48 TB)

Present Capacity: 7435669957834 (6.76 TB)

DFS Remaining: 4419545119946 (4.02 TB)

DFS Used: 3016124837888 (2.74 TB)

DFS Used%: 40.56%

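9.48 TB is the combined capacity of /data on all machines.

What is Present Capacity? Let's leave that open for now.

2.74 TB is the combined size of /data/dfs on all machines.

40.56% is 2.74/6.76, so the average should be about 40%, and with threshold=5 the other DataNodes should sit between 35% and 45%...

Now look at the DataNodes below, taking node19 as an example: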

Name: 9.134.115.141:1004 (node19.data.com)

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 119300038656 (111.11 GB)

Non DFS Used: 51429728256 (47.90 GB)

DFS Remaining: 219661856768 (204.58 GB)

DFS Used%: 28.96%

DFS Remaining%: 53.33%

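383.60 GB is the capacity of /data

111.11 GB is the size of /data/dfs

28.96% = 111.11 GB / 383.60 GB

Why is this percentage so low? Logically, if every machine is at roughly 25-35%, the total should be around 30% too, so how can it be 40%?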

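Now something interesting shows up:

each DataNode's DFS Used averages about 122 GB (most nodes fall between 110 and 140 GB), so the 23 nodes add up to the 2.74 TB above.

14 DataNodes have 383.60 GB of capacity and 9 have 482.03 GB, so the total is 383.60 × 14 + 482.03 × 9 ≈ 9,709 GB, i.e. the 9.48 TB above (the report counts 1 TB as 1024 GB).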

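So there's a problem here!!!!

For the cluster as a whole: DFS Used% = DFS Used / Present Capacity

For each individual DataNode: DFS Used% = DFS Used / Configured Capacity

So the two percentages are not directly comparable.

So the key is Present Capacity. My guess was Configured Capacity minus the total Non DFS Used of all nodes.

That would give Present Capacity = 9.48 TB - 2.25 TB = 7.23 TB, which doesn't match the 6.76 TB in the report... and that's where I got stuck.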
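The numbers in the report can be checked directly. In the Python sketch below (all figures copied from the hdfs dfsadmin -report output in this post), two things fall out. First, Present Capacity is exactly DFS Used + DFS Remaining, and the cluster-wide 40.56% is DFS Used / Present Capacity. That is why subtracting only Non DFS Used overshoots: every node also has roughly 20-25 GB that the report counts as neither DFS Used, Non DFS Used nor DFS Remaining (for node19: 383.60 - 111.11 - 47.90 - 204.58 ≈ 20 GB). Second, assuming the balancer compares each node's DFS Used / Configured Capacity against total DFS Used / total Configured Capacity (the rule given in the Apache balancer documentation), the average it aims for is about 28.93%, not 40.56%, and every live node falls inside 28.93 ± 5.

```python
# Cluster summary from the report (bytes).
CONFIGURED = 10424513773568
PRESENT = 7435669957834
DFS_USED = 3016124837888
DFS_REMAINING = 4419545119946

SMALL, LARGE = 411883184128, 517572132864  # Configured Capacity of the 383.60 GB / 482.03 GB nodes
THRESHOLD = 5.0

# hostname -> (DFS Used, Configured Capacity) for the 23 live DataNodes
NODES = {
    "node19": (119300038656, SMALL), "node22": (139341885440, SMALL),
    "node17": (124636291072, SMALL), "node16": (127383461888, SMALL),
    "node14": (139456868352, SMALL), "node12": (142010712064, LARGE),
    "node23": (126853881856, SMALL), "node13": (120316370944, SMALL),
    "node21": (123294625792, SMALL), "node18": (132322639872, SMALL),
    "node15": (134688702464, SMALL), "node20": (100490969088, SMALL),
    "node26": (149608824832, LARGE), "node24": (149926281216, LARGE),
    "node25": (148323336192, LARGE), "node11": (132859736064, SMALL),
    "node05": (123511422976, SMALL), "node07": (131336716288, SMALL),
    "node27": (125220708352, LARGE), "node29": (134744162304, LARGE),
    "node31": (131076886528, LARGE), "node30": (126044688384, LARGE),
    "node28": (133375627264, LARGE),
}

total_used = sum(used for used, _ in NODES.values())
total_configured = sum(cap for _, cap in NODES.values())

present_pct = 100 * DFS_USED / PRESENT  # what the report prints as the cluster DFS Used%
balancer_avg = 100 * total_used / total_configured  # average the balancer compares against
node_pct = {name: 100 * used / cap for name, (used, cap) in NODES.items()}
outside = [n for n, pct in node_pct.items() if abs(pct - balancer_avg) > THRESHOLD]

print("Present Capacity == DFS Used + DFS Remaining:", PRESENT == DFS_USED + DFS_REMAINING)
print(f"cluster DFS Used% (vs Present Capacity): {present_pct:.2f}%")
print(f"balancer average (vs Configured Capacity): {balancer_avg:.2f}%")
print(f"node range: {min(node_pct.values()):.2f}% .. {max(node_pct.values()):.2f}%")
print(f"nodes outside average +/- {THRESHOLD}: {outside}")
```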

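I didn't solve that one, but I did take away the following:

1. Set the transfer speed (balancer bandwidth) and the balancing threshold.

2. Before balancing, remember to run hdfs dfsadmin -report and check each DataNode's DFS Used%.

3. What gets balanced here is /data/dfs as a percentage of /data.

4. As a rule, if dfs.datanode.data.dir points under /data, don't put other working directories on /data as well.

For example, if every DataNode has 500 GB but one of them has a 400 GB non-HDFS file in /data, that node runs out of space once its DFS Used reaches 20%. You can track this down with df -h, but finding it doesn't really help, because that 400 GB file is still sitting there!!!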
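The 500 GB example can be turned into a quick check. The Python sketch below (the helper names are my own) computes the highest DFS Used% a node can reach while its non-HDFS data stays where it is. For the hypothetical 500 GB node with a 400 GB file it gives 20%. For real nodes the report already holds the answer, since DFS Used% + DFS Remaining% is the ceiling: node17 (30.26% + 18.72%) can never go much beyond 49%, while node22, with little non-HDFS data, could reach about 94%.

```python
def ceiling_from_sizes(capacity_gb: float, non_dfs_gb: float) -> float:
    """Highest DFS Used% reachable when non_dfs_gb of the disk holds other data (ignores reserved space)."""
    return 100.0 * (capacity_gb - non_dfs_gb) / capacity_gb


def ceiling_from_report(dfs_used_pct: float, dfs_remaining_pct: float) -> float:
    """Same ceiling from one node's `hdfs dfsadmin -report` percentages: used so far plus still usable."""
    return dfs_used_pct + dfs_remaining_pct


if __name__ == "__main__":
    print(ceiling_from_sizes(500, 400))  # hypothetical node from the text -> 20.0
    print(round(ceiling_from_report(30.26, 18.72), 2))  # node17 -> 48.98
    print(round(ceiling_from_report(33.83, 59.76), 2))  # node22 -> 93.59
```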

[root@worker01 /home/devuser]# hdfs dfsadmin -report

Configured Capacity: 10424513773568 (9.48 TB)

Present Capacity: 7435669957834 (6.76 TB)

DFS Remaining: 4419545119946 (4.02 TB)

DFS Used: 3016124837888 (2.74 TB)

DFS Used%: 40.56%

Replicated Blocks:

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

Erasure Coded Block Groups:

Low redundancy block groups: 0

Block groups with corrupt internal blocks: 0

Missing block groups: 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (23):

Name: 9.134.115.141:1004 (node19.data.com)

Hostname: node19.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 119300038656 (111.11 GB)

Non DFS Used: 51429728256 (47.90 GB)

DFS Remaining: 219661856768 (204.58 GB)

DFS Used%: 28.96%

DFS Remaining%: 53.33%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:43 CST 2022

Last Block Report: Mon Jun 27 09:02:06 CST 2022

Name: 9.134.116.73:1004 (node22.data.com)

Hostname: node22.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 139341885440 (129.77 GB)

Non DFS Used: 4911681536 (4.57 GB)

DFS Remaining: 246138056704 (229.23 GB)

DFS Used%: 33.83%

DFS Remaining%: 59.76%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:42 CST 2022

Last Block Report: Mon Jun 27 11:49:39 CST 2022

Name: 9.134.117.73:1004 (node17.data.com)

Hostname: node17.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 124636291072 (116.08 GB)

Non DFS Used: 188638429184 (175.68 GB)

DFS Remaining: 77116903424 (71.82 GB)

DFS Used%: 30.26%

DFS Remaining%: 18.72%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 10:26:56 CST 2022

Name: 9.134.117.90:1004 (node16.data.com)

Hostname: node16.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 127383461888 (118.64 GB)

Non DFS Used: 107218415616 (99.85 GB)

DFS Remaining: 155668209253 (144.98 GB)

DFS Used%: 30.93%

DFS Remaining%: 37.79%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 4

Last contact: Mon Jun 27 13:50:42 CST 2022

Last Block Report: Mon Jun 27 12:35:15 CST 2022

Name: 9.134.122.224:1004 (node14.data.com)

Hostname: node14.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 139456868352 (129.88 GB)

Non DFS Used: 139715756032 (130.12 GB)

DFS Remaining: 111218999296 (103.58 GB)

DFS Used%: 33.86%

DFS Remaining%: 27.00%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:43 CST 2022

Last Block Report: Mon Jun 27 12:37:22 CST 2022

Name: 9.134.122.6:1004 (node12.data.com)

Hostname: node12.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 517572132864 (482.03 GB)

DFS Used: 142010712064 (132.26 GB)

Non DFS Used: 138266742784 (128.77 GB)

DFS Remaining: 210434408448 (195.98 GB)

DFS Used%: 27.44%

DFS Remaining%: 40.66%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:42 CST 2022

Last Block Report: Mon Jun 27 12:51:06 CST 2022

Name: 9.134.122.87:1004 (node23.data.com)

Hostname: node23.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 126853881856 (118.14 GB)

Non DFS Used: 5369483264 (5.00 GB)

DFS Remaining: 258168258560 (240.44 GB)

DFS Used%: 30.80%

DFS Remaining%: 62.68%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:43 CST 2022

Last Block Report: Mon Jun 27 13:01:49 CST 2022

Name: 9.134.123.190:1004 (node13.data.com)

Hostname: node13.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 120316370944 (112.05 GB)

Non DFS Used: 135664951296 (126.35 GB)

DFS Remaining: 134410301440 (125.18 GB)

DFS Used%: 29.21%

DFS Remaining%: 32.63%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 10:26:38 CST 2022

Name: 9.134.123.215:1004 (node21.data.com)

Hostname: node21.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 123294625792 (114.83 GB)

Non DFS Used: 49384546304 (45.99 GB)

DFS Remaining: 217712451584 (202.76 GB)

DFS Used%: 29.93%

DFS Remaining%: 52.86%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:43 CST 2022

Last Block Report: Mon Jun 27 11:11:12 CST 2022

Name: 9.134.123.37:1004 (node18.data.com)

Hostname: node18.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 132322639872 (123.24 GB)

Non DFS Used: 19139104768 (17.82 GB)

DFS Remaining: 238929879040 (222.52 GB)

DFS Used%: 32.13%

DFS Remaining%: 58.01%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 10:27:47 CST 2022

Name: 9.134.124.14:1004 (node15.data.com)

Hostname: node15.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 134688702464 (125.44 GB)

Non DFS Used: 146982957056 (136.89 GB)

DFS Remaining: 108719964160 (101.25 GB)

DFS Used%: 32.70%

DFS Remaining%: 26.40%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:42 CST 2022

Last Block Report: Mon Jun 27 09:44:21 CST 2022

Name: 9.134.124.36:1004 (node20.data.com)

Hostname: node20.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 100490969088 (93.59 GB)

Non DFS Used: 74869800960 (69.73 GB)

DFS Remaining: 215030853632 (200.26 GB)

DFS Used%: 24.40%

DFS Remaining%: 52.21%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 08:49:47 CST 2022

Name: 9.134.163.174:1004 (node26.data.com)

Hostname: node26.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 517572132864 (482.03 GB)

DFS Used: 149608824832 (139.33 GB)

Non DFS Used: 113226809344 (105.45 GB)

DFS Remaining: 227876229120 (212.23 GB)

DFS Used%: 28.91%

DFS Remaining%: 44.03%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:40 CST 2022

Last Block Report: Mon Jun 27 13:34:49 CST 2022

Name: 9.134.163.238:1004 (node24.data.com)

Hostname: node24.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 517572132864 (482.03 GB)

DFS Used: 149926281216 (139.63 GB)

Non DFS Used: 177427165184 (165.24 GB)

DFS Remaining: 163358416896 (152.14 GB)

DFS Used%: 28.97%

DFS Remaining%: 31.56%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 11:00:49 CST 2022

Name: 9.134.163.60:1004 (node25.data.com)

Hostname: node25.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 517572132864 (482.03 GB)

DFS Used: 148323336192 (138.14 GB)

Non DFS Used: 139817668608 (130.22 GB)

DFS Remaining: 202570858496 (188.66 GB)

DFS Used%: 28.66%

DFS Remaining%: 39.14%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:42 CST 2022

Last Block Report: Mon Jun 27 12:50:30 CST 2022

Name: 9.134.68.200:1004 (node11.data.com)

Hostname: node11.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 132859736064 (123.74 GB)

Non DFS Used: 53714735104 (50.03 GB)

DFS Remaining: 203817152512 (189.82 GB)

DFS Used%: 32.26%

DFS Remaining%: 49.48%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 09:35:01 CST 2022

Name: 9.134.71.192:1004 (node05.data.com)

Hostname: node05.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 123511422976 (115.03 GB)

Non DFS Used: 50063003648 (46.62 GB)

DFS Remaining: 216817197056 (201.93 GB)

DFS Used%: 29.99%

DFS Remaining%: 52.64%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:42 CST 2022

Last Block Report: Mon Jun 27 09:38:05 CST 2022

Name: 9.134.77.69:1004 (node07.data.com)

Hostname: node07.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 411883184128 (383.60 GB)

DFS Used: 131336716288 (122.32 GB)

Non DFS Used: 94864969728 (88.35 GB)

DFS Remaining: 164189937664 (152.91 GB)

DFS Used%: 31.89%

DFS Remaining%: 39.86%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:40 CST 2022

Last Block Report: Mon Jun 27 11:48:10 CST 2022

Name: 9.134.80.60:1004 (node27.data.com)

Hostname: node27.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 517572132864 (482.03 GB)

DFS Used: 125220708352 (116.62 GB)

Non DFS Used: 154762784768 (144.13 GB)

DFS Remaining: 210728370176 (196.26 GB)

DFS Used%: 24.19%

DFS Remaining%: 40.71%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 08:58:05 CST 2022

Name: 9.134.80.64:1004 (node29.data.com)

Hostname: node29.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 517572132864 (482.03 GB)

DFS Used: 134744162304 (125.49 GB)

Non DFS Used: 154976854016 (144.33 GB)

DFS Remaining: 200990846976 (187.19 GB)

DFS Used%: 26.03%

DFS Remaining%: 38.83%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 11:06:53 CST 2022

Name: 9.134.81.127:1004 (node31.data.com)

Hostname: node31.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 517572132864 (482.03 GB)

DFS Used: 131076886528 (122.07 GB)

Non DFS Used: 151701401600 (141.28 GB)

DFS Remaining: 207812038245 (193.54 GB)

DFS Used%: 25.33%

DFS Remaining%: 40.15%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 4

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 08:35:59 CST 2022

Name: 9.134.81.221:1004 (node30.data.com)

Hostname: node30.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 517572132864 (482.03 GB)

DFS Used: 126044688384 (117.39 GB)

Non DFS Used: 141402562560 (131.69 GB)

DFS Remaining: 223264612352 (207.93 GB)

DFS Used%: 24.35%

DFS Remaining%: 43.14%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:41 CST 2022

Last Block Report: Mon Jun 27 10:09:56 CST 2022

Name: 9.134.83.33:1004 (node28.data.com)

Hostname: node28.data.com

Rack: /default

Decommission Status : Normal

Configured Capacity: 517572132864 (482.03 GB)

DFS Used: 133375627264 (124.22 GB)

Non DFS Used: 152426917888 (141.96 GB)

DFS Remaining: 204909318144 (190.84 GB)

DFS Used%: 25.77%

DFS Remaining%: 39.59%

Configured Cache Capacity: 4294967296 (4 GB)

Cache Used: 0 (0 B)

Cache Remaining: 4294967296 (4 GB)

Cache Used%: 0.00%

Cache Remaining%: 100.00%

Xceivers: 2

Last contact: Mon Jun 27 13:50:43 CST 2022

Last Block Report: Mon Jun 27 08:24:57 CST 2022

Dead datanodes (2):

Name: 9.134.74.52:1004 (node06.data.com)

Hostname: node06.data.com

Decommission Status : Decommissioned

Configured Capacity: 0 (0 B)

DFS Used: 0 (0 B)

Non DFS Used: 0 (0 B)

DFS Remaining: 0 (0 B)

DFS Used%: 100.00%

DFS Remaining%: 0.00%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 0

Last contact: Thu Jan 01 08:00:00 CST 1970

Last Block Report: Never

Name: 9.134.70.49:1004 (node04.data.com)

Hostname: node04.data.com

Decommission Status : Decommissioned

Configured Capacity: 0 (0 B)

DFS Used: 0 (0 B)

Non DFS Used: 0 (0 B)

DFS Remaining: 0 (0 B)

DFS Used%: 100.00%

DFS Remaining%: 0.00%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 0

Last contact: Thu Jan 01 08:00:00 CST 1970

Last Block Report: Never

[root@worker01 /home/devuser]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 99G 56G 39G 59% /

devtmpfs 7.7G 0 7.7G 0% /dev

tmpfs 7.8G 0 7.8G 0% /dev/shm

tmpfs 7.8G 266M 7.5G 4% /run

tmpfs 7.8G 0 7.8G 0% /sys/fs/cgroup

/dev/vdb1 394G 161G 214G 43% /data

cm_processes 7.8G 66M 7.7G 1% /run/cloudera-scm-agent/process

tmpfs 1.6G 0 1.6G 0% /run/user/1000

9.135.223.197:/ 15T 12T 2.5T 83% /data/share/files/cfs

tmpfs 1.6G 0 1.6G 0% /run/user/0

[root@worker01 /home/devuser]# df -h /data

Filesystem Size Used Avail Use% Mounted on

/dev/vdb1 394G 161G 214G 43% /data

[root@worker01 /home/devuser]#