Deployment problems encountered when configuring Flink checkpoints

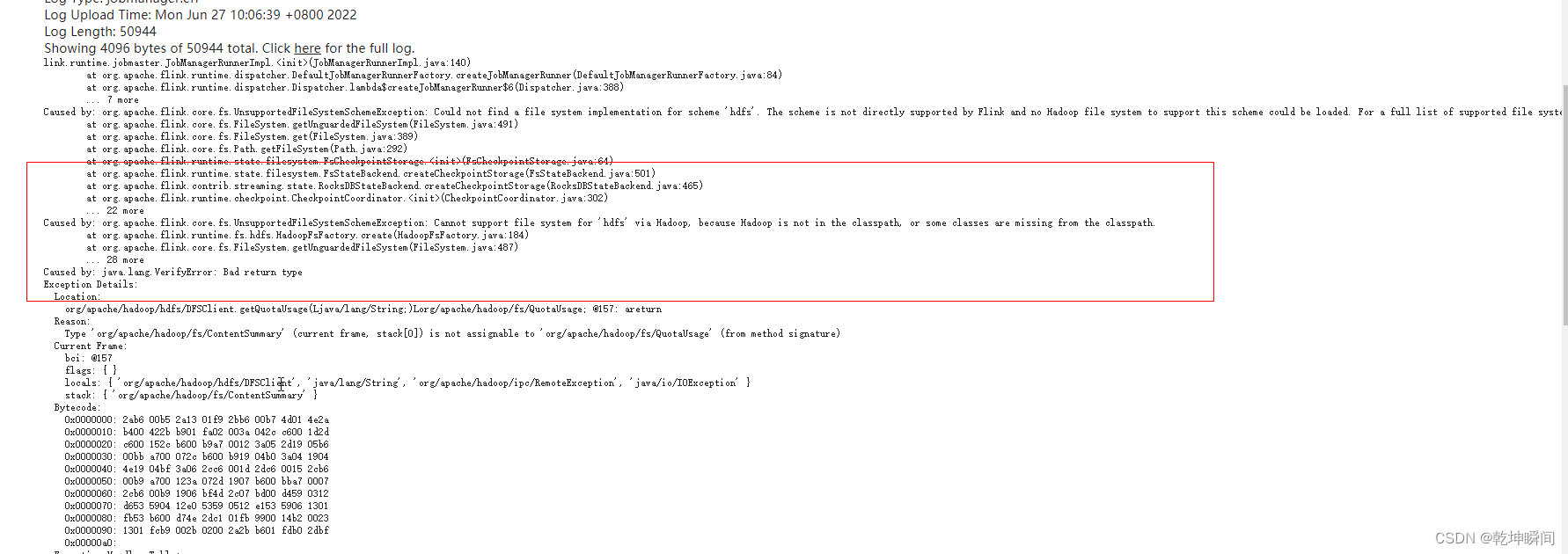

link.runtime.jobmaster.JobManagerRunnerImpl.<init>(JobManagerRunnerImpl.java:140)

at org.apache.flink.runtime.dispatcher.DefaultJobManagerRunnerFactory.createJobManagerRunner(DefaultJobManagerRunnerFactory.java:84)

at org.apache.flink.runtime.dispatcher.Dispatcher.lambda$createJobManagerRunner$6(Dispatcher.java:388)

... 7 more

Caused by: org.apache.flink.core.fs.UnsupportedFileSystemSchemeException: Could not find a file system implementation for scheme 'hdfs'. The scheme is not directly supported by Flink and no Hadoop file system to support this scheme could be loaded. For a full list of supported file systems, please see https://ci.apache.org/projects/flink/flink-docs-stable/ops/filesystems/.

at org.apache.flink.core.fs.FileSystem.getUnguardedFileSystem(FileSystem.java:491)

at org.apache.flink.core.fs.FileSystem.get(FileSystem.java:389)

at org.apache.flink.core.fs.Path.getFileSystem(Path.java:292)

at org.apache.flink.runtime.state.filesystem.FsCheckpointStorage.<init>(FsCheckpointStorage.java:64)

at org.apache.flink.runtime.state.filesystem.FsStateBackend.createCheckpointStorage(FsStateBackend.java:501)

at org.apache.flink.contrib.streaming.state.RocksDBStateBackend.createCheckpointStorage(RocksDBStateBackend.java:465)

at org.apache.flink.runtime.checkpoint.CheckpointCoordinator.<init>(CheckpointCoordinator.java:302)

... 22 more

Caused by: org.apache.flink.core.fs.UnsupportedFileSystemSchemeException: Cannot support file system for 'hdfs' via Hadoop, because Hadoop is not in the classpath, or some classes are missing from the classpath.

at org.apache.flink.runtime.fs.hdfs.HadoopFsFactory.create(HadoopFsFactory.java:184)

at org.apache.flink.core.fs.FileSystem.getUnguardedFileSystem(FileSystem.java:487)
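The innermost cause in the trace above says that Hadoop is not on the classpath. A minimal sketch for confirming this from inside the same JVM is shown below. The class name `HadoopClasspathProbe` and the method `isClassPresent` are hypothetical helpers written for this note, not part of Flink or Hadoop. The only outside name used is `org.apache.hadoop.fs.FileSystem`, Hadoop's file system base class.

```java
// Sketch: check whether Hadoop's classes are visible to the current classpath.
final class HadoopClasspathProbe {

    // Hadoop's file system base class. If it cannot be loaded,
    // Flink has no way to serve paths that use the 'hdfs' scheme.
    static final String HADOOP_FS_CLASS = "org.apache.hadoop.fs.FileSystem";

    // Returns true if the named class can be located without initializing it.
    static boolean isClassPresent(String className) {
        try {
            Class.forName(className, false, HadoopClasspathProbe.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("Hadoop on classpath: " + isClassPresent(HADOOP_FS_CLASS));
    }
}
```

If this prints `false` when run with the same classpath as the job, the dependency and environment steps that follow are the likely fix.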

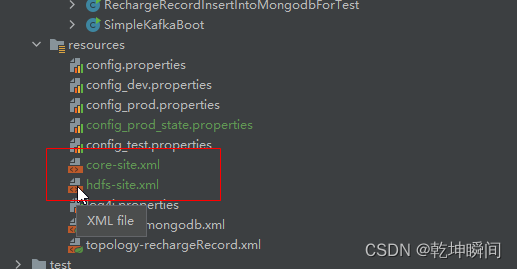

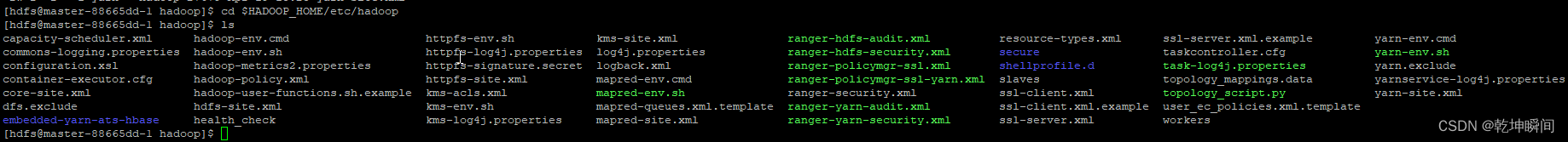

1. Add the core-site.xml and hdfs-site.xml files

Both files need to be copied from the Hadoop cluster.

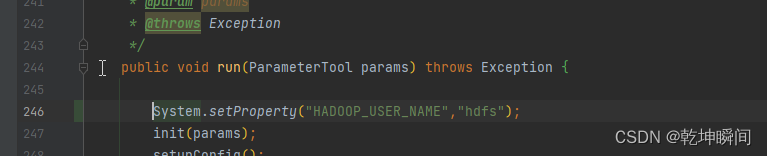
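A quick way to confirm that both files are actually visible to the job is to look them up as classpath resources. The sketch below assumes the files were placed somewhere that ends up on the classpath (for example the project's resources directory, which is an assumption and not stated in these notes). `HadoopConfigProbe` and `missingResources` are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: report which Hadoop configuration files are missing from the classpath.
final class HadoopConfigProbe {

    // Returns the subset of the given resource names that the loader cannot find.
    static List<String> missingResources(ClassLoader loader, String... names) {
        List<String> missing = new ArrayList<>();
        for (String name : names) {
            if (loader.getResource(name) == null) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        List<String> missing = missingResources(
                HadoopConfigProbe.class.getClassLoader(),
                "core-site.xml", "hdfs-site.xml");
        System.out.println(missing.isEmpty()
                ? "Both Hadoop config files are on the classpath"
                : "Missing from classpath: " + missing);
    }
}
```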

2. Add the following to the main method:

System.setProperty("HADOOP_USER_NAME","hdfs");

3. Add the following dependency to the project:

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

</dependency>

The Hadoop version can be checked with:

$ hadoop version

Hadoop 3.1.1

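4. Set the checkpoint path

Prerequisite: the flink user needs permission to access the HDFS cluster, including write access to the checkpoint directory.

If you use RocksDBStateBackend, add the following dependency: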

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-statebackend-rocksdb_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

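StateBackend stateBackend = new RocksDBStateBackend("hdfs://servername:8020/flink/ck/trial_car/recharge_record", true); // true enables incremental checkpoints

env.setStateBackend(stateBackend);

env.enableCheckpointing(300_000); // trigger a checkpoint every 5 minutes (value in ms)

env.getCheckpointConfig().setCheckpointTimeout(30000); // expire a checkpoint that takes longer than 30 s

env.getCheckpointConfig().setMinPauseBetweenCheckpoints(5000); // wait at least 5 s between checkpoints

env.getCheckpointConfig().setMaxConcurrentCheckpoints(1); // only one checkpoint in flight at a time

env.getCheckpointConfig().setFailOnCheckpointingErrors(false); // a failed checkpoint does not fail the job (deprecated in newer Flink versions)

env.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION); // keep the checkpoint when the job is cancelled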

The above is the code needed to enable incremental checkpoints.
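The numeric arguments passed to `enableCheckpointing`, `setCheckpointTimeout` and `setMinPauseBetweenCheckpoints` are all milliseconds, which is easy to misread. The following sketch uses only `java.time.Duration` to spell out the units behind the values in this note. The class name `CheckpointTimings` and its constants are hypothetical and exist only for illustration.

```java
import java.time.Duration;

// Sketch: express the checkpoint timings from this note with explicit units.
final class CheckpointTimings {

    // Interval between checkpoint triggers: 5 minutes.
    static final long INTERVAL_MS = Duration.ofMinutes(5).toMillis();

    // Maximum time a single checkpoint may take: 30 seconds.
    static final long TIMEOUT_MS = Duration.ofSeconds(30).toMillis();

    // Minimum idle time between the end of one checkpoint and the next: 5 seconds.
    static final long MIN_PAUSE_MS = Duration.ofSeconds(5).toMillis();

    public static void main(String[] args) {
        System.out.println("interval=" + INTERVAL_MS
                + " ms, timeout=" + TIMEOUT_MS
                + " ms, minPause=" + MIN_PAUSE_MS + " ms");
    }
}
```

With these values the timeout (30 s) is much shorter than the interval (5 min), so a checkpoint that needs more than 30 s to complete is expired. For large state it may be worth raising the timeout.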

When RocksDB is configured to store checkpoints, running the job from IDEA fails with an error.

5. Make sure the following is set:

export HADOOP_CLASSPATH=`hadoop classpath`

Could not find a file system implementation for scheme 'hdfs'. The scheme is not directly supported
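To check whether `HADOOP_CLASSPATH` from step 5 is visible to the process that launches the job, a small probe like the one below can help. It is only a sketch: `HadoopEnvProbe` and `hasHadoopClasspath` are hypothetical names, and the probe only reports whether the variable is non-empty, not whether its contents are correct.

```java
import java.util.Map;

// Sketch: check whether HADOOP_CLASSPATH is set to a non-blank value.
final class HadoopEnvProbe {

    // Takes the environment as a map so the check can be exercised without
    // changing the real process environment.
    static boolean hasHadoopClasspath(Map<String, String> env) {
        String value = env.get("HADOOP_CLASSPATH");
        return value != null && !value.trim().isEmpty();
    }

    public static void main(String[] args) {
        System.out.println("HADOOP_CLASSPATH set: " + hasHadoopClasspath(System.getenv()));
    }
}
```

An `export` in one shell does not reach a job started from an IDE that was launched separately, so in that case the `hadoop-client` dependency from step 3 is probably what puts Hadoop on the classpath.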