Hadoop fails to start

Error message

Starting namenodes on [master]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [master]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

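In short, Hadoop 3 refuses to start these daemons as root unless you explicitly define which user each one runs as (HDFS_NAMENODE_USER and so on).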

Solution

Open the start-dfs.sh and stop-dfs.sh files in the sbin directory of your Hadoop installation.

Mine is /root/software/hadoop-3.3.0/sbin.

Add the following variables to both files:

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

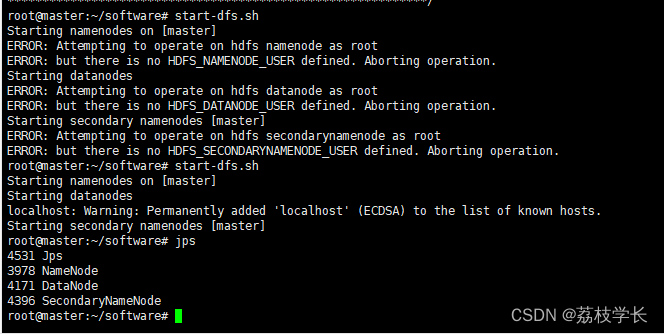
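If you would rather not edit the two scripts by hand, the bash sketch below inserts the variable lines directly after the first line of a script, which is the `#!/usr/bin/env bash` shebang. The function name add_user_vars is hypothetical. It treats the first variable you pass as a marker for "already patched", so running it twice does not duplicate the lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: insert VAR=value lines right after line 1 (the shebang)
# of a script, unless the first variable passed is already assigned there.
add_user_vars() {
  local file="$1"; shift
  local first_var="${1%%=*}"          # e.g. HDFS_DATANODE_USER
  if grep -q "^${first_var}=" "$file"; then
    return 0                          # already patched, leave the file alone
  fi
  local tmp
  tmp="$(mktemp)"
  {
    head -n 1 "$file"                 # keep the shebang as the first line
    printf '%s\n' "$@"                # the new variable assignments
    tail -n +2 "$file"                # the rest of the original script
  } > "$tmp"
  cat "$tmp" > "$file"                # overwrite in place, keeping the file's permissions
  rm -f "$tmp"
}
```

With the path above, the call would be `add_user_vars /root/software/hadoop-3.3.0/sbin/start-dfs.sh HDFS_DATANODE_USER=root HDFS_DATANODE_SECURE_USER=hdfs HDFS_NAMENODE_USER=root HDFS_SECONDARYNAMENODE_USER=root`, followed by the same call for stop-dfs.sh. The script is written back with `cat` rather than `mv` so that it keeps its execute bit.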

Then restart Hadoop.

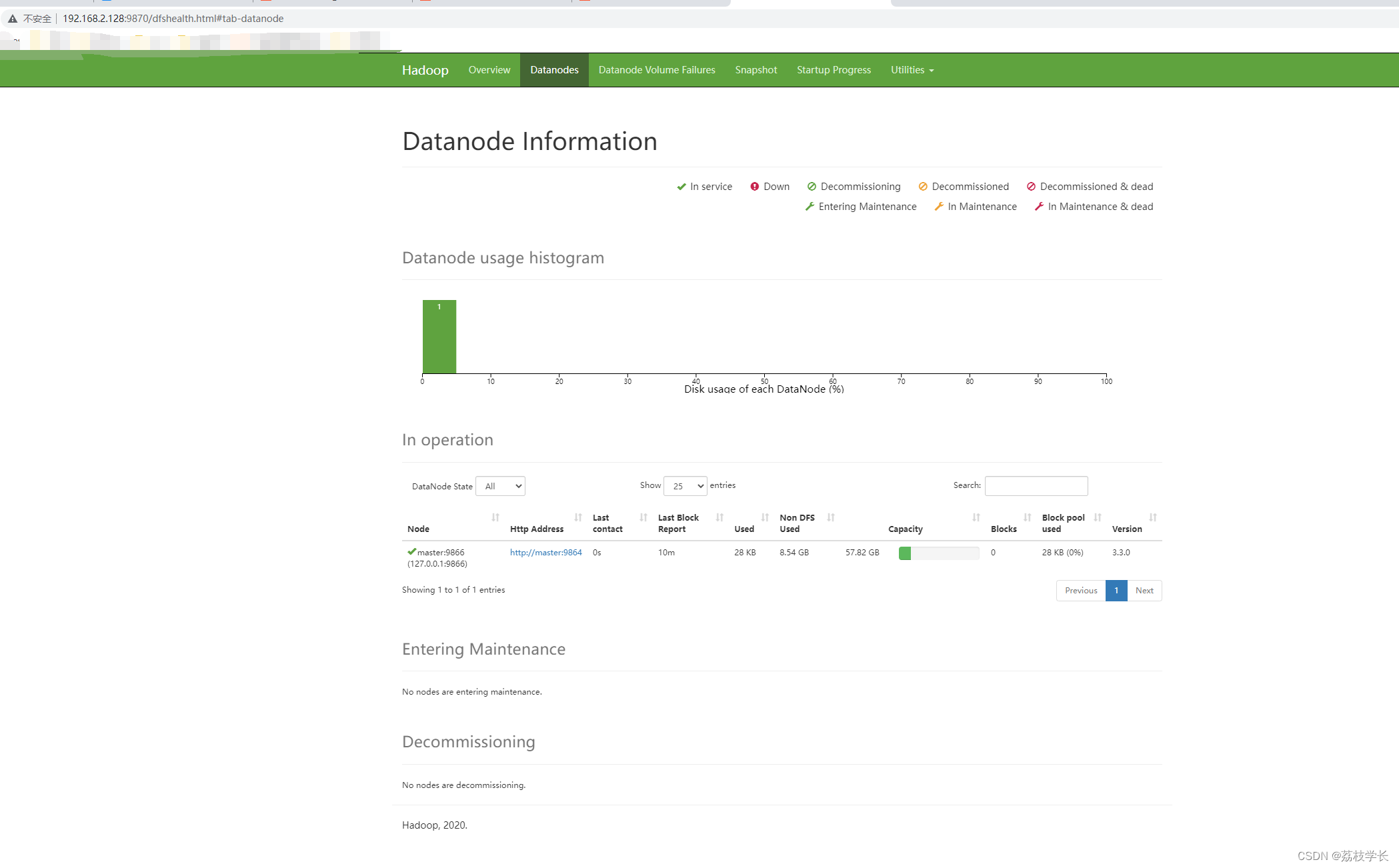

Open the Hadoop web UI:

http://192.168.2.128:9870/dfshealth.html#tab-overview

That is, your machine's IP address plus port 9870, the default NameNode web UI port in Hadoop 3.x.

YARN fails to start

Error message

Starting namenodes on [master]

Starting datanodes

Starting secondary namenodes [master]

Starting resourcemanager

ERROR: Attempting to operate on yarn resourcemanager as root

ERROR: but there is no YARN_RESOURCEMANAGER_USER defined. Aborting operation.

Starting nodemanagers

ERROR: Attempting to operate on yarn nodemanager as root

ERROR: but there is no YARN_NODEMANAGER_USER defined. Aborting operation.

Solution

Go to the sbin folder in your Hadoop installation directory.

Mine is /root/software/hadoop-3.3.0/sbin.

Add the following variables to start-dfs.sh and stop-dfs.sh. You can also add them to start-all.sh and stop-all.sh:

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

YARN_RESOURCEMANAGER_USER=root

YARN_NODEMANAGER_USER=root

Add these to start-yarn.sh and stop-yarn.sh:

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

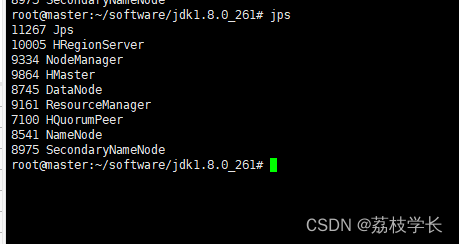
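With this many scripts to edit, it is easy to miss one. The bash sketch below prints each variable name that a script does not assign, either as `VAR=` or as `export VAR=`, at the start of a line. The function name missing_user_vars is hypothetical. This is a plain text check and does not run the script.

```shell
#!/usr/bin/env bash
# Hypothetical checker: print every variable name from the argument list that
# is not assigned at the start of a line in FILE (VAR=... or export VAR=...).
# Returns 1 if anything is missing, 0 otherwise.
missing_user_vars() {
  local file="$1"; shift
  local var status=0
  for var in "$@"; do
    if ! grep -Eq "^[[:space:]]*(export[[:space:]]+)?${var}=" "$file"; then
      echo "$var"
      status=1
    fi
  done
  return "$status"
}
```

For example, `missing_user_vars /root/software/hadoop-3.3.0/sbin/start-yarn.sh YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER` prints nothing once the file has been edited.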

Then start Hadoop:

start-dfs.sh

I also have HBase installed, so a few extra service processes show up.
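To check which daemons are actually running, you can pipe the output of the JDK's `jps` tool into a small bash check. This is a minimal sketch that assumes a single-node setup, where all five Hadoop daemons run on the same machine. The function name check_daemons is hypothetical. It reads from stdin, so extra processes such as the HBase ones are simply ignored.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `jps` output on stdin and print each expected
# Hadoop daemon that is not in it. Assumes all daemons run on this host.
check_daemons() {
  local jps_out name missing=0
  jps_out="$(cat)"
  for name in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClassName>"; compare the class name field exactly
    if ! awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' <<< "$jps_out"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `jps | check_daemons`. It prints nothing and returns 0 when all five daemons are up.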

HBase fails to start

Error message

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/root/software/hadoop-3.3.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/root/software/hbase-2.2.4/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/root/software/hadoop-3.3.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/root/software/hbase-2.2.4/lib/client-facing-thirdparty/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

localhost: running zookeeper, logging to /root/software/hbase-2.2.4/bin/…/logs/hbase-root-zookeeper-master.out

localhost: stopping zookeeper.

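The cause is an SLF4J jar conflict between Hadoop and HBase. Each one bundles its own slf4j-log4j12 binding (both are version 1.7.25 here), so the classpath ends up containing two bindings.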
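To see where the duplicate bindings live, you can search both installations for the binding jar named in the log. This is a minimal bash sketch. The function name find_slf4j_bindings is hypothetical, and it looks only for the `slf4j-log4j12-*.jar` pattern that appears in the error above, not for other SLF4J bindings.

```shell
#!/usr/bin/env bash
# Hypothetical diagnostic: list every slf4j-log4j12 binding jar under the given
# directories. More than one hit means SLF4J will warn about multiple bindings
# whenever those directories end up on the same classpath.
find_slf4j_bindings() {
  find "$@" -type f -name 'slf4j-log4j12-*.jar' 2>/dev/null | sort
}
```

On this setup the call would be `find_slf4j_bindings /root/software/hadoop-3.3.0/share/hadoop /root/software/hbase-2.2.4/lib`.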

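I found plenty of suggestions online. One is to move the conflicting jar somewhere else and copy another one in, so that Hadoop and HBase use the same jar version. Another is to delete the jar and copy one over from Hadoop or HBase.

I tried these myself and still got the error, so I won't go through them here.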

Solution

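Open hbase-env.sh under your HBase installation directory. This is the same file where you set JAVA_HOME earlier.

Mine is in /root/software/hbase-2.2.4/conf.

Near the end of the file, find the commented-out line # export HBASE_DISABLE_HADOOP_CLASSPATH_LOOKUP="true" and remove the leading # so that it reads: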

export HBASE_DISABLE_HADOOP_CLASSPATH_LOOKUP="true"

export JAVA_HOME=/root/software/jdk1.8.0_261
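You can also uncomment that line from the command line. The bash sketch below assumes GNU sed, which is standard on Linux. The function name enable_no_hadoop_classpath is hypothetical. It removes only the leading `#` and keeps whatever value follows `=`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn the commented-out line
#   # export HBASE_DISABLE_HADOOP_CLASSPATH_LOOKUP="true"
# in hbase-env.sh into an active export. Relies on GNU sed's in-place -i flag.
enable_no_hadoop_classpath() {
  local env_file="$1"
  sed -i -E 's/^#[[:space:]]*(export HBASE_DISABLE_HADOOP_CLASSPATH_LOOKUP=)/\1/' "$env_file"
}
```

For example: `enable_no_hadoop_classpath /root/software/hbase-2.2.4/conf/hbase-env.sh`.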

Then start HBase again.

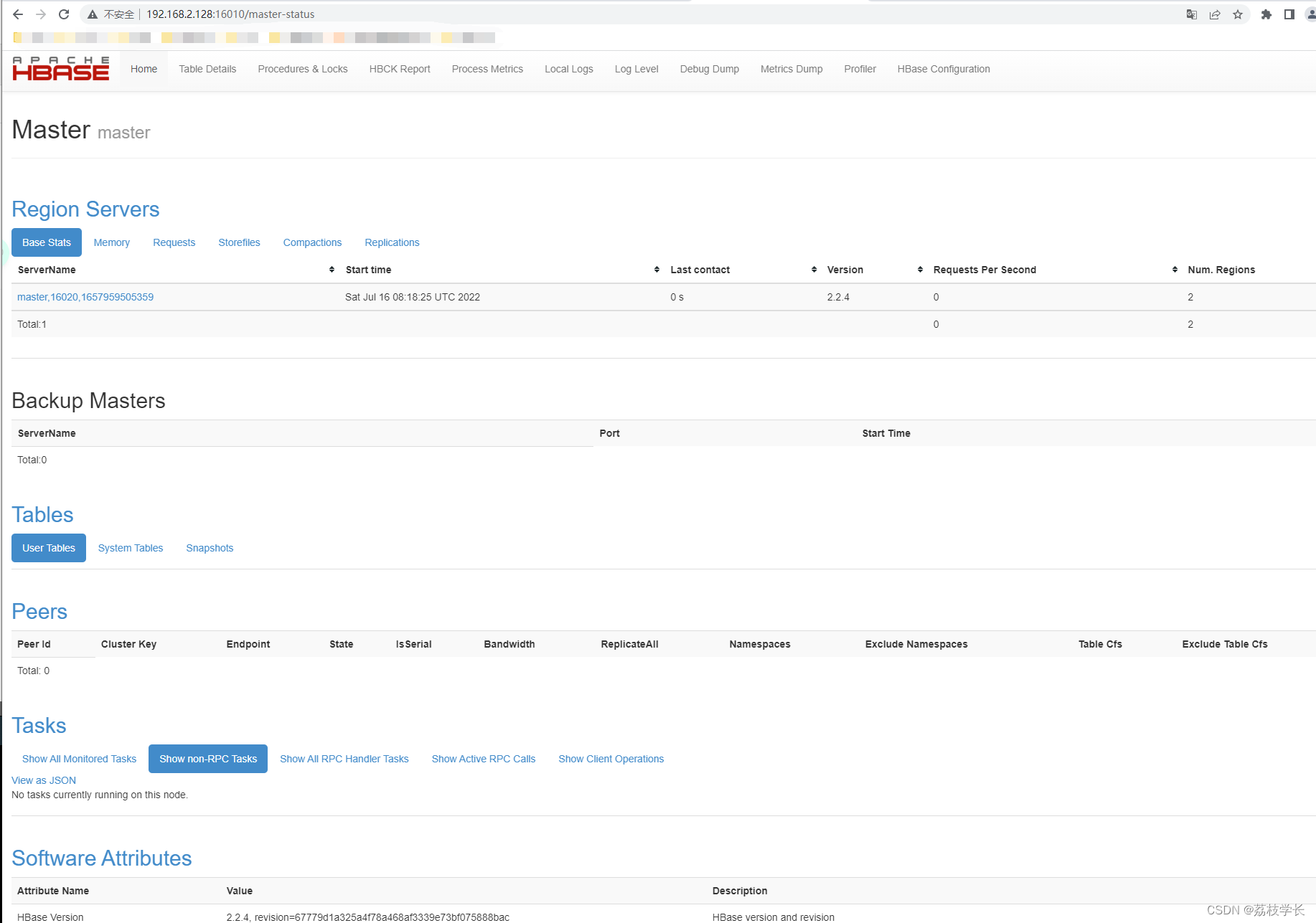

Web UI address:

http://192.168.2.128:16010/

Remember to replace the IP address with your own.