
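Part 0. Pitfall guide

Before writing this record I had already set up Hadoop several times. To fill in the parts my earlier notes left thin, I rebuilt everything from scratch. As I recall, less than two months had passed since the last build, and yet, to no one's surprise, I fell into the pits again. A version mismatch or an easily overlooked configuration change can each block the road to a working environment. That is probably why new Hadoop setup guides keep appearing online, and perhaps also part of what drives the demand for things like DevOps and containers.

First, the pitfalls hit during this build:

1. The OpenJDK version

When first setting up the Single Node, we installed Java online the same way as for the earlier distributed environments, using the java 1.8.0 packages. That had always worked before, so we did not give it much thought. The Java actually active on the node this time, however, was version 18: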

[root@pig1 ~]# java -version

java version "18.0.1.1" 2022-04-22

Java(TM) SE Runtime Environment (build 18.0.1.1+2-6)

Java HotSpot(TM) 64-Bit Server VM (build 18.0.1.1+2-6, mixed mode, sharing)

[root@pig1 ~]#

In the end, however, starting HDFS failed with an unexplained Server Error.

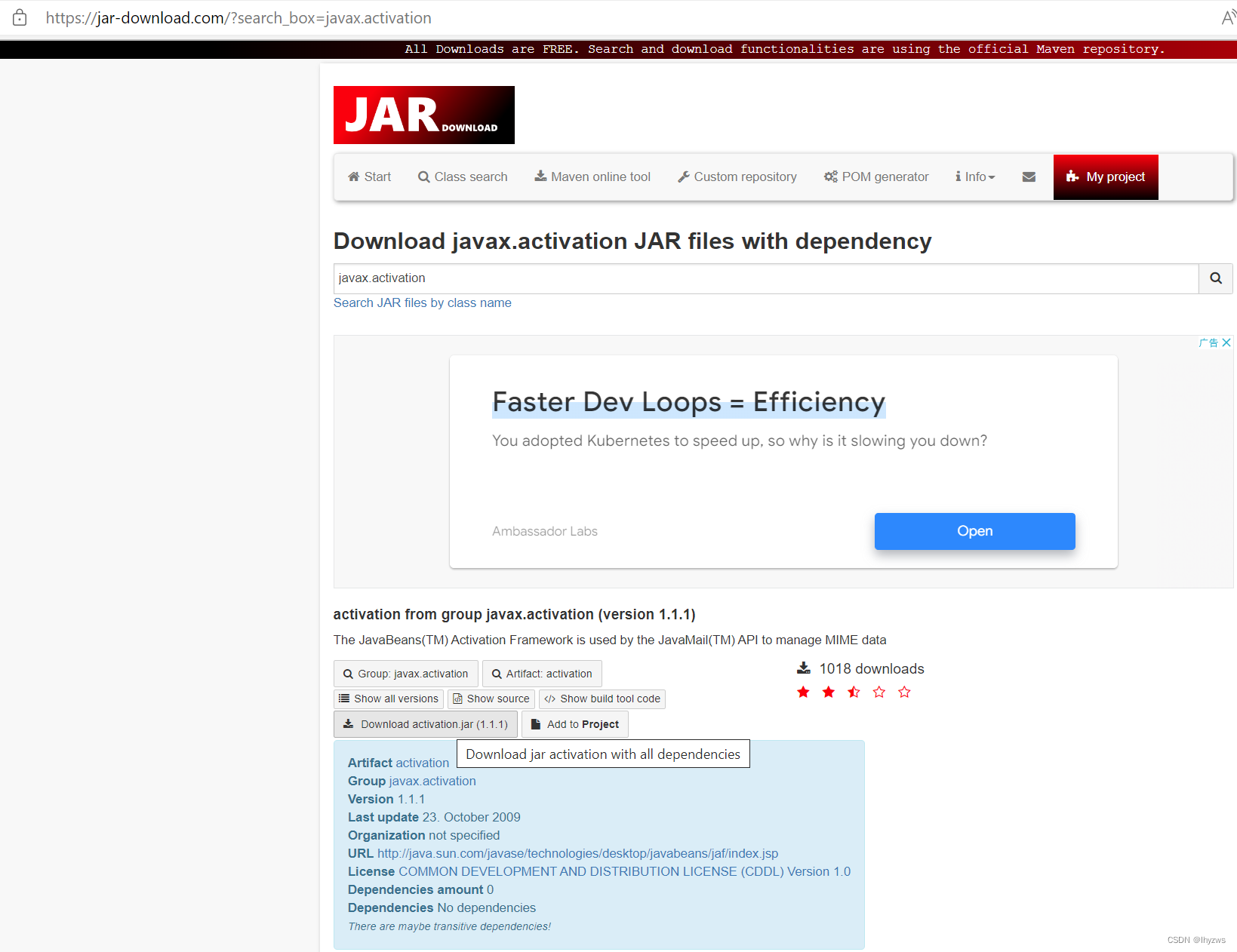

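As some online tutorials say, this problem is basically a Java version issue: with certain Java versions, the javax.activation jar is missing from $HADOOP_HOME/share/hadoop/common. But doing what those tutorials suggest, downloading various versions of the library and copying them into that directory, did not solve the problem.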

[root@pig1 share]# cp activation-1.1.1.jar /root/hadoop/share/hadoop/common/.

[root@pig1 share]# scp activation-1.1.1.jar root@pig2:/root/hadoop/share/hadoop/common/.

activation-1.1.1.jar 100% 68KB 15.9MB/s 00:00

[root@pig1 share]# scp activation-1.1.1.jar root@pig3:/root/hadoop/share/hadoop/common/.

activation-1.1.1.jar 100% 68KB 16.7MB/s 00:00

[root@pig1 share]# scp activation-1.1.1.jar root@pig4:/root/hadoop/share/hadoop/common/.

activation-1.1.1.jar 100% 68KB 12.9MB/s 00:00

[root@pig1 share]# scp activation-1.1.1.jar root@pig5:/root/hadoop/share/hadoop/common/.

activation-1.1.1.jar 100% 68KB 15.3MB/s 00:00

[root@pig1 share]#

So, one step short of success, the only option was to tear everything down and start over with JDK 11.
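Since the failure came down to which Java was actually on the PATH, a quick check before starting any daemon could save a rebuild. The following is a minimal sketch and not part of the original procedure: the function names are made up for illustration, and the accepted versions (8 and 11) simply reflect what worked in these notes, so treat them as an assumption for other Hadoop releases.

```shell
#!/bin/sh
# Extract the major Java version from a version string, e.g.
# "1.8.0_322" -> 8, "11.0.15" -> 11, "18.0.1.1" -> 18.
java_major_version() {
    v="$1"
    case "$v" in
        1.*) v="${v#1.}" ;;      # legacy scheme: 1.8.x means Java 8
    esac
    echo "${v%%[._+-]*}"         # keep everything before the first separator
}

# Succeed only for the versions that worked in this write-up
# (assumption: 8 and 11; adjust for other Hadoop releases).
hadoop_java_ok() {
    case "$1" in
        8|11) return 0 ;;
        *)    return 1 ;;
    esac
}

# Read the version string of the java found on PATH.
current_java_version() {
    java -version 2>&1 | sed -n 's/.* version "\([^"]*\)".*/\1/p' | head -n 1
}
```

On a node this could be used as `hadoop_java_ok "$(java_major_version "$(current_java_version)")" || echo "unexpected Java version"`.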

If the machine is online, check the yum repositories first; java-1.8.0 is indeed not the only version available:

[root@localhost share]# yum list java*

上次元数据过期检查:0:00:12 前,执行于 2022年09月20日 星期二 09时23分52秒。

可安装的软件包

java-1.8.0-openjdk.x86_64 1:1.8.0.322.b06-11.el8 appstream

java-1.8.0-openjdk-accessibility.x86_64 1:1.8.0.322.b06-11.el8 appstream

java-1.8.0-openjdk-demo.x86_64 1:1.8.0.322.b06-11.el8 appstream

java-1.8.0-openjdk-devel.x86_64 1:1.8.0.322.b06-11.el8 appstream

java-1.8.0-openjdk-headless.x86_64 1:1.8.0.322.b06-11.el8 appstream

java-1.8.0-openjdk-headless-slowdebug.x86_64 1:1.8.0.322.b06-11.el8 appstream

java-1.8.0-openjdk-javadoc.noarch 1:1.8.0.322.b06-11.el8 appstream

java-1.8.0-openjdk-javadoc-zip.noarch 1:1.8.0.322.b06-11.el8 appstream

java-1.8.0-openjdk-slowdebug.x86_64 1:1.8.0.322.b06-11.el8 appstream

java-1.8.0-openjdk-src.x86_64 1:1.8.0.322.b06-11.el8 appstream

java-11-openjdk.x86_64 1:11.0.15.0.10-3.el8 appstream

java-11-openjdk-demo.x86_64 1:11.0.15.0.10-3.el8 appstream

java-11-openjdk-devel.x86_64 1:11.0.15.0.10-3.el8 appstream

java-11-openjdk-headless.x86_64 1:11.0.15.0.10-3.el8 appstream

java-11-openjdk-javadoc.x86_64 1:11.0.15.0.10-3.el8 appstream

java-11-openjdk-javadoc-zip.x86_64 1:11.0.15.0.10-3.el8 appstream

java-11-openjdk-jmods.x86_64 1:11.0.15.0.10-3.el8 appstream

java-11-openjdk-src.x86_64 1:11.0.15.0.10-3.el8 appstream

java-11-openjdk-static-libs.x86_64 1:11.0.15.0.10-3.el8 appstream

java-17-openjdk.x86_64 1:17.0.3.0.7-2.el8 appstream

java-17-openjdk-demo.x86_64 1:17.0.3.0.7-2.el8 appstream

java-17-openjdk-devel.x86_64 1:17.0.3.0.7-2.el8 appstream

java-17-openjdk-headless.x86_64 1:17.0.3.0.7-2.el8 appstream

java-17-openjdk-javadoc.x86_64 1:17.0.3.0.7-2.el8 appstream

java-17-openjdk-javadoc-zip.x86_64 1:17.0.3.0.7-2.el8 appstream

java-17-openjdk-jmods.x86_64 1:17.0.3.0.7-2.el8 appstream

java-17-openjdk-src.x86_64 1:17.0.3.0.7-2.el8 appstream

java-17-openjdk-static-libs.x86_64 1:17.0.3.0.7-2.el8 appstream

java-atk-wrapper.x86_64 0.33.2-6.el8 appstream

java-dirq.noarch 1.8-9.el8 epel

java-dirq-javadoc.noarch 1.8-9.el8 epel

java-hdf.x86_64 4.2.14-5.el8 epel

java-hdf5.x86_64 1.10.5-4.el8 epel

java-kolabformat.x86_64 1.2.0-8.el8 epel

java-latest-openjdk.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-demo.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-demo-fastdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-demo-slowdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-devel.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-devel-fastdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-devel-slowdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-fastdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-headless.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-headless-fastdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-headless-slowdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-javadoc.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-javadoc-zip.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-jmods.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-jmods-fastdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-jmods-slowdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-slowdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-src.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-src-fastdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-src-slowdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-static-libs.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-static-libs-fastdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-latest-openjdk-static-libs-slowdebug.x86_64 1:18.0.1.0.10-1.rolling.el8 epel

java-runtime-decompiler.noarch 6.1-2.el8 epel

java-runtime-decompiler-javadoc.noarch 6.1-2.el8 epel

javapackages-filesystem.noarch 5.3.0-1.module_el8.0.0+11+5b8c10bd appstream

javapackages-tools.noarch 5.3.0-1.module_el8.0.0+11+5b8c10bd appstream

As usual, download and keep the packages first so they can be installed offline some day:

[root@localhost jdk11]# yumdownloader --resolve java-11*

上次元数据过期检查:0:02:37 前,执行于 2022年09月20日 星期二 09时23分52秒。

(1/15): ttmkfdir-3.0.9-54.el8.x86_64.rpm 153 kB/s | 62 kB 00:00

(2/15): java-11-openjdk-demo-11.0.15.0.10-3.el8.x86_64.rpm 2.2 MB/s | 4.5 MB 00:02

(3/15): java-11-openjdk-headless-11.0.15.0.10-3.el8.x86_64.rpm 3.0 MB/s | 40 MB 00:13

(4/15): copy-jdk-configs-4.0-2.el8.noarch.rpm 252 kB/s | 31 kB 00:00

(5/15): javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch.rpm 271 kB/s | 30 kB 00:00

(6/15): tzdata-java-2022c-1.el8.noarch.rpm 833 kB/s | 186 kB 00:00

(7/15): java-11-openjdk-devel-11.0.15.0.10-3.el8.x86_64.rpm 2.3 MB/s | 3.4 MB 00:01

(8/15): java-11-openjdk-javadoc-zip-11.0.15.0.10-3.el8.x86_64.rpm 2.7 MB/s | 42 MB 00:15

(9/15): java-11-openjdk-11.0.15.0.10-3.el8.x86_64.rpm 424 kB/s | 271 kB 00:00

(10/15): java-11-openjdk-javadoc-11.0.15.0.10-3.el8.x86_64.rpm 2.6 MB/s | 16 MB 00:06

(11/15): java-11-openjdk-src-11.0.15.0.10-3.el8.x86_64.rpm 2.4 MB/s | 50 MB 00:20

(12/15): xorg-x11-fonts-Type1-7.5-19.el8.noarch.rpm 1.7 MB/s | 522 kB 00:00

(13/15): lksctp-tools-1.0.18-3.el8.x86_64.rpm 900 kB/s | 100 kB 00:00

(14/15): java-11-openjdk-static-libs-11.0.15.0.10-3.el8.x86_64.rpm 2.3 MB/s | 19 MB 00:08

(15/15): java-11-openjdk-jmods-11.0.15.0.10-3.el8.x86_64.rpm 4.1 MB/s | 318 MB 01:18

[root@localhost jdk11]#

Install OpenJDK 11:

[root@localhost jdk11]# yum install -y java-11*

上次元数据过期检查:0:12:27 前,执行于 2022年09月20日 星期二 09时23分52秒。

依赖关系解决。

==============================================================================================================================

软件包 架构 版本 仓库 大小

==============================================================================================================================

安装:

java-11-openjdk x86_64 1:11.0.15.0.10-3.el8 @commandline 271 k

java-11-openjdk-demo x86_64 1:11.0.15.0.10-3.el8 @commandline 4.5 M

java-11-openjdk-devel x86_64 1:11.0.15.0.10-3.el8 @commandline 3.4 M

java-11-openjdk-headless x86_64 1:11.0.15.0.10-3.el8 @commandline 40 M

java-11-openjdk-javadoc x86_64 1:11.0.15.0.10-3.el8 @commandline 16 M

java-11-openjdk-javadoc-zip x86_64 1:11.0.15.0.10-3.el8 @commandline 42 M

java-11-openjdk-jmods x86_64 1:11.0.15.0.10-3.el8 @commandline 318 M

java-11-openjdk-src x86_64 1:11.0.15.0.10-3.el8 @commandline 50 M

java-11-openjdk-static-libs x86_64 1:11.0.15.0.10-3.el8 @commandline 19 M

安装依赖关系:

copy-jdk-configs noarch 4.0-2.el8 appstream 31 k

javapackages-filesystem noarch 5.3.0-1.module_el8.0.0+11+5b8c10bd appstream 30 k

lksctp-tools x86_64 1.0.18-3.el8 baseos 100 k

ttmkfdir x86_64 3.0.9-54.el8 appstream 62 k

tzdata-java noarch 2022c-1.el8 appstream 186 k

xorg-x11-fonts-Type1 noarch 7.5-19.el8 appstream 522 k

启用模块流:

javapackages-runtime 201801

事务概要

==============================================================================================================================

安装 15 软件包

总计:494 M

总下载:930 k

安装大小:1.0 G

下载软件包:

(1/6): javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch.rpm 113 kB/s | 30 kB 00:00

(2/6): copy-jdk-configs-4.0-2.el8.noarch.rpm 102 kB/s | 31 kB 00:00

(3/6): ttmkfdir-3.0.9-54.el8.x86_64.rpm 167 kB/s | 62 kB 00:00

(4/6): tzdata-java-2022c-1.el8.noarch.rpm 689 kB/s | 186 kB 00:00

(5/6): lksctp-tools-1.0.18-3.el8.x86_64.rpm 584 kB/s | 100 kB 00:00

(6/6): xorg-x11-fonts-Type1-7.5-19.el8.noarch.rpm 1.1 MB/s | 522 kB 00:00

------------------------------------------------------------------------------------------------------------------------------

总计 543 kB/s | 930 kB 00:01

运行事务检查

事务检查成功。

运行事务测试

事务测试成功。

运行事务

运行脚本: copy-jdk-configs-4.0-2.el8.noarch 1/1

运行脚本: java-11-openjdk-headless-1:11.0.15.0.10-3.el8.x86_64 1/1

准备中 : 1/1

安装 : javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch 1/15

安装 : lksctp-tools-1.0.18-3.el8.x86_64 2/15

运行脚本: lksctp-tools-1.0.18-3.el8.x86_64 2/15

安装 : tzdata-java-2022c-1.el8.noarch 3/15

安装 : ttmkfdir-3.0.9-54.el8.x86_64 4/15

安装 : xorg-x11-fonts-Type1-7.5-19.el8.noarch 5/15

运行脚本: xorg-x11-fonts-Type1-7.5-19.el8.noarch 5/15

安装 : copy-jdk-configs-4.0-2.el8.noarch 6/15

安装 : java-11-openjdk-headless-1:11.0.15.0.10-3.el8.x86_64 7/15

运行脚本: java-11-openjdk-headless-1:11.0.15.0.10-3.el8.x86_64 7/15

安装 : java-11-openjdk-1:11.0.15.0.10-3.el8.x86_64 8/15

运行脚本: java-11-openjdk-1:11.0.15.0.10-3.el8.x86_64 8/15

安装 : java-11-openjdk-devel-1:11.0.15.0.10-3.el8.x86_64 9/15

运行脚本: java-11-openjdk-devel-1:11.0.15.0.10-3.el8.x86_64 9/15

安装 : java-11-openjdk-jmods-1:11.0.15.0.10-3.el8.x86_64 10/15

安装 : java-11-openjdk-static-libs-1:11.0.15.0.10-3.el8.x86_64 11/15

安装 : java-11-openjdk-demo-1:11.0.15.0.10-3.el8.x86_64 12/15

安装 : java-11-openjdk-javadoc-1:11.0.15.0.10-3.el8.x86_64 13/15

安装 : java-11-openjdk-javadoc-zip-1:11.0.15.0.10-3.el8.x86_64 14/15

安装 : java-11-openjdk-src-1:11.0.15.0.10-3.el8.x86_64 15/15

运行脚本: copy-jdk-configs-4.0-2.el8.noarch 15/15

运行脚本: java-11-openjdk-headless-1:11.0.15.0.10-3.el8.x86_64 15/15

运行脚本: java-11-openjdk-1:11.0.15.0.10-3.el8.x86_64 15/15

运行脚本: java-11-openjdk-devel-1:11.0.15.0.10-3.el8.x86_64 15/15

运行脚本: java-11-openjdk-javadoc-1:11.0.15.0.10-3.el8.x86_64 15/15

运行脚本: java-11-openjdk-javadoc-zip-1:11.0.15.0.10-3.el8.x86_64 15/15

运行脚本: java-11-openjdk-src-1:11.0.15.0.10-3.el8.x86_64 15/15

验证 : copy-jdk-configs-4.0-2.el8.noarch 1/15

验证 : javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch 2/15

验证 : ttmkfdir-3.0.9-54.el8.x86_64 3/15

验证 : tzdata-java-2022c-1.el8.noarch 4/15

验证 : xorg-x11-fonts-Type1-7.5-19.el8.noarch 5/15

验证 : lksctp-tools-1.0.18-3.el8.x86_64 6/15

验证 : java-11-openjdk-1:11.0.15.0.10-3.el8.x86_64 7/15

验证 : java-11-openjdk-demo-1:11.0.15.0.10-3.el8.x86_64 8/15

验证 : java-11-openjdk-devel-1:11.0.15.0.10-3.el8.x86_64 9/15

验证 : java-11-openjdk-headless-1:11.0.15.0.10-3.el8.x86_64 10/15

验证 : java-11-openjdk-javadoc-1:11.0.15.0.10-3.el8.x86_64 11/15

验证 : java-11-openjdk-javadoc-zip-1:11.0.15.0.10-3.el8.x86_64 12/15

验证 : java-11-openjdk-jmods-1:11.0.15.0.10-3.el8.x86_64 13/15

验证 : java-11-openjdk-src-1:11.0.15.0.10-3.el8.x86_64 14/15

验证 : java-11-openjdk-static-libs-1:11.0.15.0.10-3.el8.x86_64 15/15

已安装:

copy-jdk-configs-4.0-2.el8.noarch java-11-openjdk-1:11.0.15.0.10-3.el8.x86_64

java-11-openjdk-demo-1:11.0.15.0.10-3.el8.x86_64 java-11-openjdk-devel-1:11.0.15.0.10-3.el8.x86_64

java-11-openjdk-headless-1:11.0.15.0.10-3.el8.x86_64 java-11-openjdk-javadoc-1:11.0.15.0.10-3.el8.x86_64

java-11-openjdk-javadoc-zip-1:11.0.15.0.10-3.el8.x86_64 java-11-openjdk-jmods-1:11.0.15.0.10-3.el8.x86_64

java-11-openjdk-src-1:11.0.15.0.10-3.el8.x86_64 java-11-openjdk-static-libs-1:11.0.15.0.10-3.el8.x86_64

javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch lksctp-tools-1.0.18-3.el8.x86_64

ttmkfdir-3.0.9-54.el8.x86_64 tzdata-java-2022c-1.el8.noarch

xorg-x11-fonts-Type1-7.5-19.el8.noarch

完毕!

[root@localhost jdk11]# java -version

openjdk version "11.0.15" 2022-04-19 LTS

OpenJDK Runtime Environment 18.9 (build 11.0.15+10-LTS)

OpenJDK 64-Bit Server VM 18.9 (build 11.0.15+10-LTS, mixed mode, sharing)

[root@localhost jdk11]#

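Alternatively, instead of reinstalling the system from scratch, remove the existing Java environment directly, taking care to remove it thoroughly: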

[root@pig1 hadoop]# yum remove java*

依赖关系解决。

========================================================================================================================

软件包 架构 版本 仓库 大小

========================================================================================================================

移除:

java-1.8.0-openjdk x86_64 1:1.8.0.322.b06-11.el8 @sharerepo 841 k

java-1.8.0-openjdk-accessibility x86_64 1:1.8.0.322.b06-11.el8 @sharerepo 101

java-1.8.0-openjdk-demo x86_64 1:1.8.0.322.b06-11.el8 @sharerepo 4.3 M

java-1.8.0-openjdk-devel x86_64 1:1.8.0.322.b06-11.el8 @sharerepo 41 M

java-1.8.0-openjdk-headless x86_64 1:1.8.0.322.b06-11.el8 @sharerepo 117 M

java-1.8.0-openjdk-headless-slowdebug x86_64 1:1.8.0.322.b06-11.el8 @sharerepo 138 M

java-1.8.0-openjdk-javadoc noarch 1:1.8.0.322.b06-11.el8 @sharerepo 269 M

java-1.8.0-openjdk-javadoc-zip noarch 1:1.8.0.322.b06-11.el8 @sharerepo 46 M

java-1.8.0-openjdk-slowdebug x86_64 1:1.8.0.322.b06-11.el8 @sharerepo 1.0 M

java-1.8.0-openjdk-src x86_64 1:1.8.0.322.b06-11.el8 @sharerepo 50 M

java-atk-wrapper x86_64 0.33.2-6.el8 @sharerepo 182 k

javapackages-filesystem noarch 5.3.0-1.module_el8.0.0+11+5b8c10bd @System 1.9 k

事务概要

========================================================================================================================

移除 12 软件包

将会释放空间:667 M

确定吗?[y/N]: y

运行事务检查

事务检查成功。

运行事务测试

事务测试成功。

运行事务

准备中 : 1/1

删除 : java-1.8.0-openjdk-accessibility-1:1.8.0.322.b06-11.el8.x86_64 1/12

删除 : java-1.8.0-openjdk-slowdebug-1:1.8.0.322.b06-11.el8.x86_64 2/12

运行脚本: java-1.8.0-openjdk-slowdebug-1:1.8.0.322.b06-11.el8.x86_64 2/12

删除 : java-1.8.0-openjdk-devel-1:1.8.0.322.b06-11.el8.x86_64 3/12

运行脚本: java-1.8.0-openjdk-devel-1:1.8.0.322.b06-11.el8.x86_64 3/12

删除 : java-1.8.0-openjdk-demo-1:1.8.0.322.b06-11.el8.x86_64 4/12

删除 : java-1.8.0-openjdk-src-1:1.8.0.322.b06-11.el8.x86_64 5/12

删除 : java-1.8.0-openjdk-javadoc-zip-1:1.8.0.322.b06-11.el8.noarch 6/12

运行脚本: java-1.8.0-openjdk-javadoc-zip-1:1.8.0.322.b06-11.el8.noarch 6/12

删除 : java-1.8.0-openjdk-javadoc-1:1.8.0.322.b06-11.el8.noarch 7/12

运行脚本: java-1.8.0-openjdk-javadoc-1:1.8.0.322.b06-11.el8.noarch 7/12

删除 : java-1.8.0-openjdk-headless-slowdebug-1:1.8.0.322.b06-11.el8.x86_64 8/12

运行脚本: java-1.8.0-openjdk-headless-slowdebug-1:1.8.0.322.b06-11.el8.x86_64 8/12

删除 : java-atk-wrapper-0.33.2-6.el8.x86_64 9/12

删除 : java-1.8.0-openjdk-1:1.8.0.322.b06-11.el8.x86_64 10/12

运行脚本: java-1.8.0-openjdk-1:1.8.0.322.b06-11.el8.x86_64 10/12

删除 : java-1.8.0-openjdk-headless-1:1.8.0.322.b06-11.el8.x86_64 11/12

运行脚本: java-1.8.0-openjdk-headless-1:1.8.0.322.b06-11.el8.x86_64 11/12

删除 : javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch 12/12

运行脚本: javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch 12/12

验证 : java-1.8.0-openjdk-1:1.8.0.322.b06-11.el8.x86_64 1/12

验证 : java-1.8.0-openjdk-accessibility-1:1.8.0.322.b06-11.el8.x86_64 2/12

验证 : java-1.8.0-openjdk-demo-1:1.8.0.322.b06-11.el8.x86_64 3/12

验证 : java-1.8.0-openjdk-devel-1:1.8.0.322.b06-11.el8.x86_64 4/12

验证 : java-1.8.0-openjdk-headless-1:1.8.0.322.b06-11.el8.x86_64 5/12

验证 : java-1.8.0-openjdk-headless-slowdebug-1:1.8.0.322.b06-11.el8.x86_64 6/12

验证 : java-1.8.0-openjdk-javadoc-1:1.8.0.322.b06-11.el8.noarch 7/12

验证 : java-1.8.0-openjdk-javadoc-zip-1:1.8.0.322.b06-11.el8.noarch 8/12

验证 : java-1.8.0-openjdk-slowdebug-1:1.8.0.322.b06-11.el8.x86_64 9/12

验证 : java-1.8.0-openjdk-src-1:1.8.0.322.b06-11.el8.x86_64 10/12

验证 : java-atk-wrapper-0.33.2-6.el8.x86_64 11/12

验证 : javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch 12/12

已移除:

enjdk-devel-1:1.8.0.322.b06-11.el8.x86_64

java-1.8.0-openjdk-headless-1:1.8.0.322.b06-11.el8.x86_64

java-1.8.0-openjdk-headless-slowdebug-1:1.8.0.322.b06-11.el8.x86_64

java-1.8.0-openjdk-javadoc-1:1.8.0.322.b06-11.el8.noarch

java-1.8.0-openjdk-javadoc-zip-1:1.8.0.322.b06-11.el8.noarch

java-1.8.0-openjdk-slowdebug-1:1.8.0.322.b06-11.el8.x86_64

java-1.8.0-openjdk-src-1:1.8.0.322.b06-11.el8.x86_64

java-atk-wrapper-0.33.2-6.el8.x86_64

javapackages-filesystem-5.3.0-1.module_el8.0.0+11+5b8c10bd.noarch

Remove the JDK:

[root@pig1 hadoop]# yum remove jdk-18.x86_64

依赖关系解决。

===================================================================================================

软件包 架构 版本 仓库 大小

===================================================================================================

移除:

jdk-18 x86_64 2000:18.0.1.1-ga @System 304 M

事务概要

===================================================================================================

移除 1 软件包

将会释放空间:304 M

确定吗?[y/N]: y

运行事务检查

事务检查成功。

运行事务测试

事务测试成功。

运行事务

准备中 : 1/1

运行脚本: jdk-18-2000:18.0.1.1-ga.x86_64 1/1

删除 : jdk-18-2000:18.0.1.1-ga.x86_64 1/1

运行脚本: jdk-18-2000:18.0.1.1-ga.x86_64 1/1

验证 : jdk-18-2000:18.0.1.1-ga.x86_64 1/1

已移除:

jdk-18-2000:18.0.1.1-ga.x86_64

完毕!

Check what is left and keep cleaning:

[root@pig1 hadoop]# rpm -qa|grep jdk

copy-jdk-configs-4.0-2.el8.noarch

[root@pig1 hadoop]# rpm -qa|grep java

tzdata-java-2022a-2.el8.noarch

[root@pig1 hadoop]#

[root@pig1 hadoop]# yum remove copy-jdk-configs.noarch tzdata-java.noarch

依赖关系解决。

===================================================================================================

软件包 架构 版本 仓库 大小

===================================================================================================

移除:

copy-jdk-configs noarch 4.0-2.el8 @System 19 k

tzdata-java noarch 2022a-2.el8 @System 362 k

事务概要

===================================================================================================

移除 2 软件包

将会释放空间:381 k

确定吗?[y/N]: y

运行事务检查

事务检查成功。

运行事务测试

事务测试成功。

运行事务

准备中 : 1/1

删除 : tzdata-java-2022a-2.el8.noarch 1/2

删除 : copy-jdk-configs-4.0-2.el8.noarch 2/2

验证 : copy-jdk-configs-4.0-2.el8.noarch 1/2

验证 : tzdata-java-2022a-2.el8.noarch 2/2

已移除:

copy-jdk-configs-4.0-2.el8.noarch tzdata-java-2022a-2.el8.noarch

完毕!

[root@pig1 hadoop]#
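The two `rpm -qa | grep` checks above can be folded into one filter. This is only a convenience sketch; `filter_java_packages` is a hypothetical helper name, and the pattern is the same pair of keywords (jdk, java) used above.

```shell
# Print only the lines of a package list that look Java-related.
# Reads package names on stdin, for example from `rpm -qa`.
filter_java_packages() {
    # grep exits with status 1 when nothing matches; that is not an error here
    grep -Ei 'jdk|java' || true
}

# Typical use on a node (not run here):
#   rpm -qa | filter_java_packages
```

An empty result means nothing Java-related is left to remove.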

2. Web user

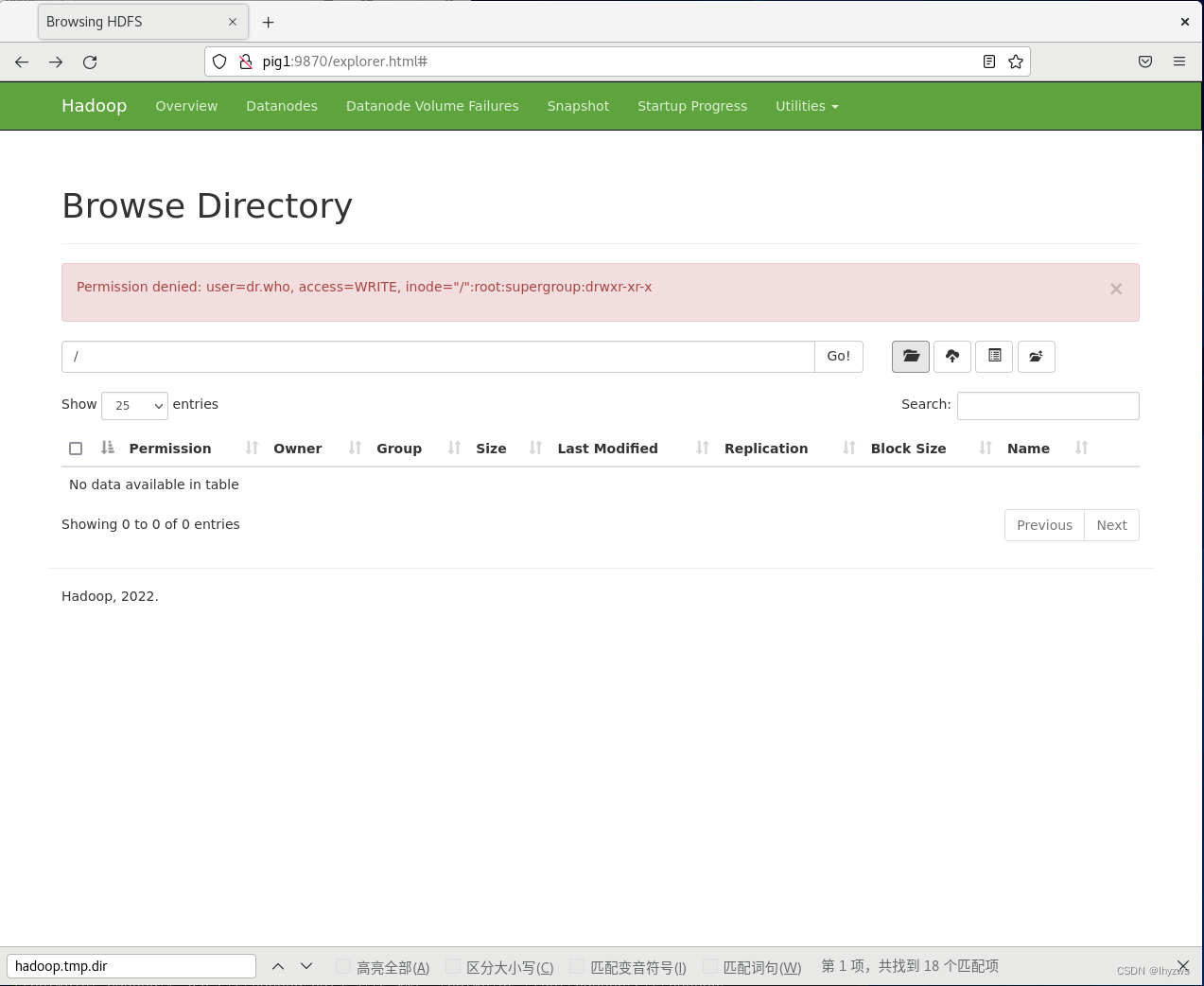

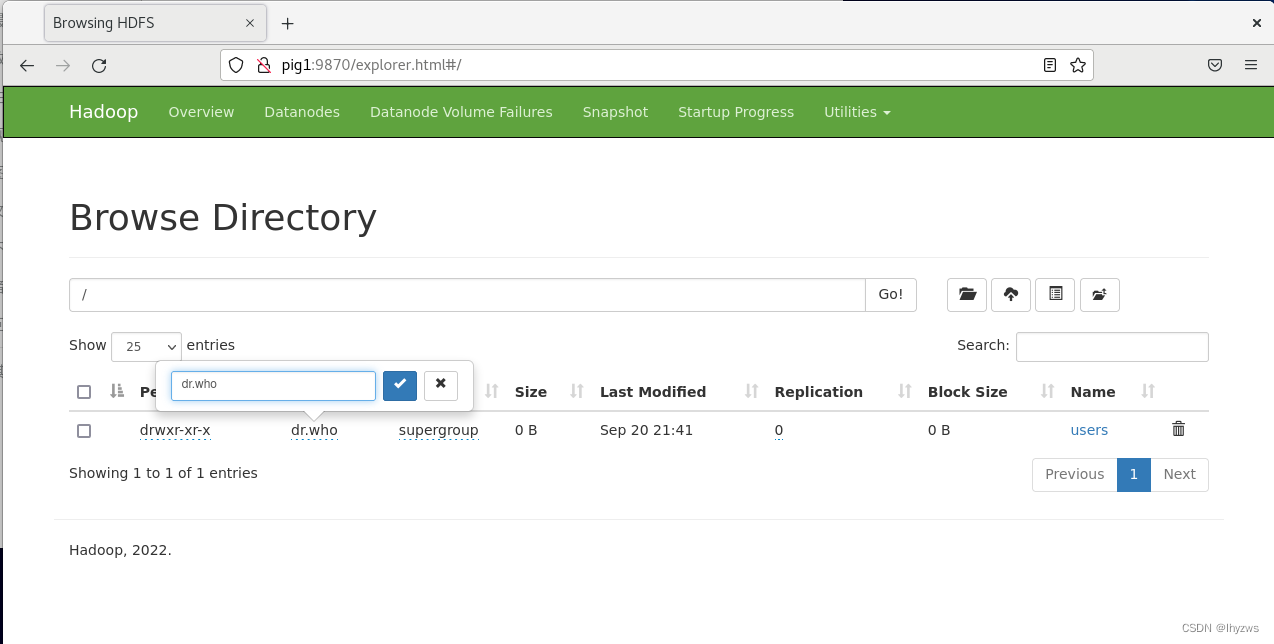

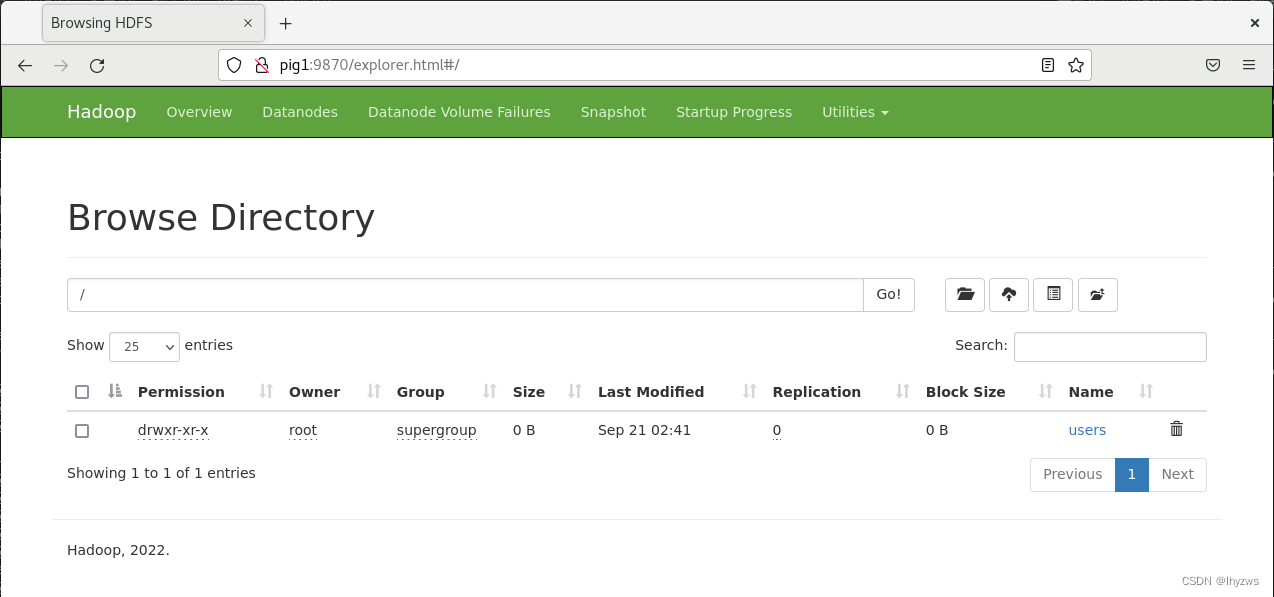

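Another pitfall, and I don't know why I never ran into it in earlier builds. When HDFS is operated through the web page (on port 9870), directory operations hit a permission problem, namely a Permission denied error. This happens because Hadoop by default uses dr.who as the HTTP static user, and in a Hadoop started by root the dr.who user lacks sufficient permissions.

Outside the web UI, however, directories can be operated on normally with $HADOOP_HOME/bin/hdfs dfs -mkdir or -ls.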

[root@pig1 hadoop]# bin/hdfs dfs -ls /

Found 3 items

drwxrwx--- - root supergroup 0 2022-09-21 03:16 /tmp

drwxr-xr-x - root supergroup 0 2022-09-21 04:01 /user

drwxr-xr-x - root supergroup 0 2022-09-21 04:13 /users

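So fix number one is to use the -chmod command here to change the directory permissions so that the dr.who user can access the directory as well.

Fix number two is to add this property to $HADOOP_HOME/etc/hadoop/hdfs-site.xml: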

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

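which disables permission checking. Being somewhat obsessive, though, I would still rather find a solution that is not a workaround.

Hence fix number three: add a property to $HADOOP_HOME/etc/hadoop/core-site.xml that sets the HTTP static user to root.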

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

As you can see, the directory's user has now become root.

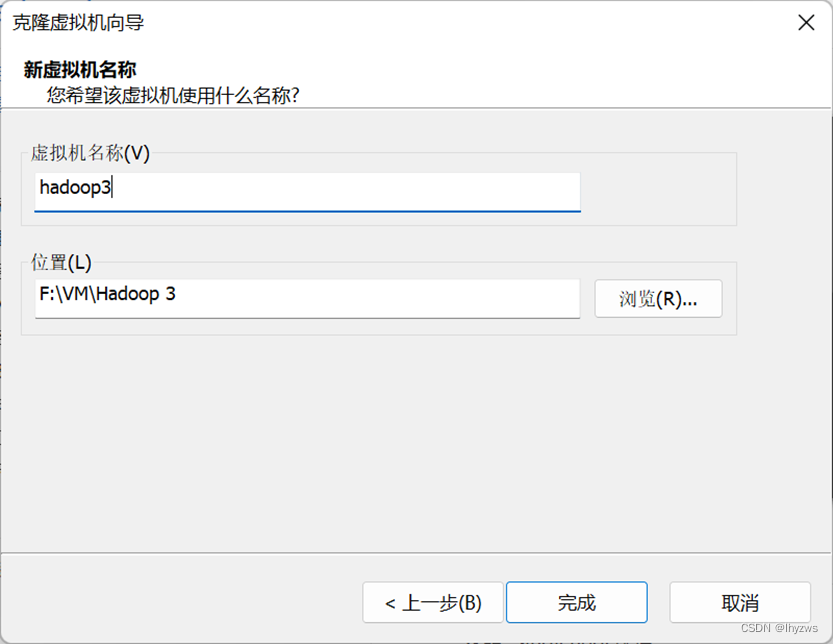

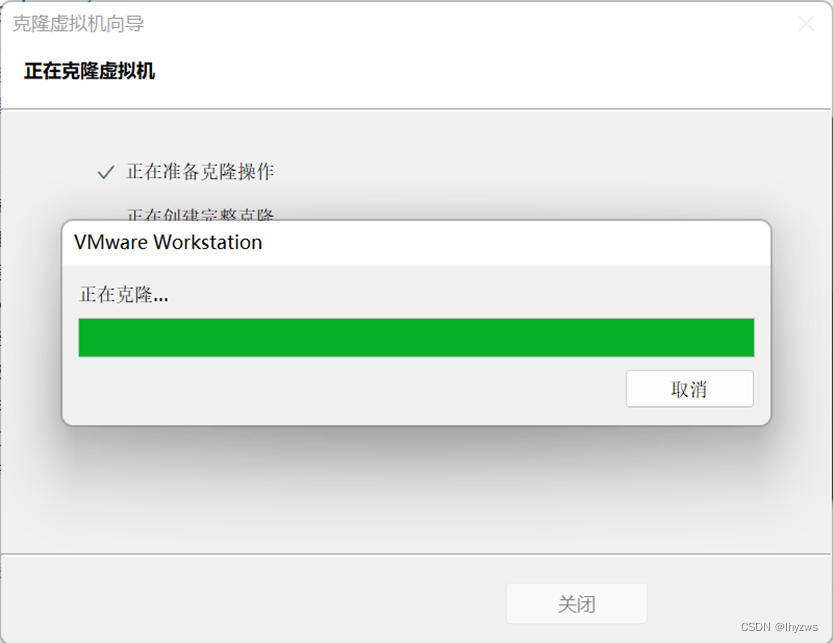
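The first fix (changing permissions with -chmod) and a check for the third fix (the static user) can be sketched from the command line as follows. This is an illustrative sketch only: the function names and the `HDFS_BIN` override are hypothetical, the mode to use is left to the caller, and the commands assume the `hdfs dfs -chmod` and `hdfs getconf -confKey` subcommands of the stock hdfs launcher.

```shell
# Path to the hdfs launcher. HDFS_BIN is a hypothetical override so the
# functions can be dry-run with HDFS_BIN=echo.
HDFS_BIN="${HDFS_BIN:-${HADOOP_HOME:-}/bin/hdfs}"

# Fix one: change the mode of an HDFS directory recursively.
# Usage: hdfs_open_dir MODE PATH    e.g. hdfs_open_dir 777 /tmp
hdfs_open_dir() {
    "$HDFS_BIN" dfs -chmod -R "$1" "$2"
}

# Check for fix three: print the HTTP static user the configuration resolves to.
hdfs_static_user() {
    "$HDFS_BIN" getconf -confKey hadoop.http.staticuser.user
}
```

With the core-site.xml property from fix three in place, `hdfs_static_user` would be expected to print root; without it, the default dr.who.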

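Part 1. Clone the virtual machines

The plan calls for 5 nodes, and installing the system and configuring Hadoop on each one individually would be far too tedious. This is where VMware's clone function helps: first set up a Hadoop environment on one node in Single Node fashion, then clone it to 5 machines and configure from there.

Clone step by step as shown in the figures.

For example, my first node is named Hadoop1, and the third copy is named Hadoop3: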

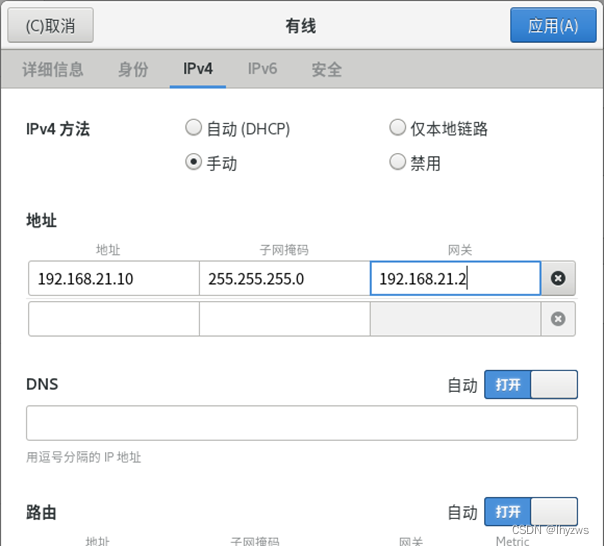

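Part 2. Configure hostnames and networking

Once cloning is done, the first thing is to change the hostnames and fix the IP addresses to make networking easier.

1. Configure the network

Fix the IP address so it does not change unexpectedly.

On a CentOS with a graphical desktop, for example, this can be configured from the Settings menu.

Or edit the configuration file directly: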

[root@pig1 sysconfig]# cd network-scripts/

[root@pig1 network-scripts]# ls

ifcfg-ens160

[root@pig1 network-scripts]# vim ifcfg-ens160

Edit the configuration file as follows:

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

# Do not use DHCP, i.e. do not assign the IP address automatically

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

NAME=ens160

UUID=5961d222-44eb-4c47-b452-aae4511c9b04

DEVICE=ens160

# Connect automatically so the network does not have to be started by hand on every boot

ONBOOT=yes

# Set the static IP address and gateway; set DNS as well, to avoid unpredictable problems with web services

IPADDR=192.168.21.11

PREFIX=24

GATEWAY=192.168.21.2

DNS1=192.168.21.2

Restart the network:

[root@pig1]# nmcli networking off

[root@pig1]# nmcli networking on

2. Set the hostname

Set the hostname so the node can be reached by name later:

[root@localhost ~]# hostnamectl

Static hostname: localhost.localdomain

Icon name: computer-vm

Chassis: vm

Machine ID:

Boot ID:

Virtualization: vmware

Operating System: CentOS Stream 8

CPE OS Name: cpe:/o:centos:centos:8

Kernel: Linux 4.18.0-373.el8.x86_64

Architecture: x86-64

[root@localhost ~]#

[root@localhost ~]# hostnamectl set-hostname pig1 --static

[root@localhost ~]# hostnamectl

Static hostname: pig1

Transient hostname: localhost.localdomain

Icon name: computer-vm

Chassis: vm

Machine ID:

Boot ID:

Virtualization: vmware

Operating System: CentOS Stream 8

CPE OS Name: cpe:/o:centos:centos:8

Kernel: Linux 4.18.0-373.el8.x86_64

Architecture: x86-64

[root@localhost ~]#

3. Write the hostname mappings into the hosts file

Append the following to the end of /etc/hosts, for example for our 5 piggy nodes:

192.168.21.11 pig1

192.168.21.12 pig2

192.168.21.13 pig3

192.168.21.14 pig4

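192.168.21.15 pig5

Part 3. Configure passwordless SSH access

1. Test on the local machine

Test whether logging in to the local machine over SSH asks for a password. If it does, passwordless SSH is not configured on this machine.

To make the explanation below easier, first take a look at the user's home directory: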

[root@pig1 ~]# ls -a

. 图片 anaconda-ks.cfg .cache hadoop share

.. 文档 .bash_history .config .ICEauthority .tcshrc

公共 下载 .bash_logout .cshrc initial-setup-ks.cfg .viminfo

模板 音乐 .bash_profile .dbus .local

视频 桌面 .bashrc .esd_auth .pki

[root@pig1 ~]#

Then ssh into the local machine:

[root@pig1 ~]# ssh pig1

The authenticity of host 'pig1 (192.168.21.11)' can't be established.

ECDSA key fingerprint is SHA256:

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'pig1,192.168.21.11' (ECDSA) to the list of known hosts.

root@pig1's password:

Activate the web console with: systemctl enable --now cockpit.socket

Last login: Wed Sep 14 07:49:55 2022

[root@pig1 ~]#

Look at the home directory again:

[root@pig1 ~]# ls -a

. 图片 anaconda-ks.cfg .cache hadoop share

.. 文档 .bash_history .config .ICEauthority .ssh

公共 下载 .bash_logout .cshrc initial-setup-ks.cfg .tcshrc

模板 音乐 .bash_profile .dbus .local .viminfo

视频 桌面 .bashrc .esd_auth .pki

[root@pig1 ~]# ls .ssh

known_hosts

[root@pig1 ~]# cat .ssh/known_hosts

pig1,192.168.21.11 ecdsa-sha2-nistp256 AAAA………………=

[root@pig1 ~]#

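So the login created a directory named .ssh containing a file named known_hosts. For each host that has been logged in to, this file records the hostname, the corresponding IP address, the key algorithm and the host's public key.

Look familiar? It is the same thing that happens when configuring an SSH connection in VS Code.

2. Configure passwordless SSH on the local machine

(1) Generate the key files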

[root@pig1 ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

Generating public/private rsa key pair.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:E98……………………4xz/pc root@pig1

The key's randomart image is:

+---[RSA 3072]----+

| ..O. * Bo|

…………

| .|

+----[SHA256]-----+

[root@pig1 ~]# ls -a

. 图片 anaconda-ks.cfg .cache hadoop share

.. 文档 .bash_history .config .ICEauthority .ssh

公共 下载 .bash_logout .cshrc initial-setup-ks.cfg .tcshrc

模板 音乐 .bash_profile .dbus .local .viminfo

视频 桌面 .bashrc .esd_auth .pki

[root@pig1 ~]# ls .ssh

id_rsa id_rsa.pub known_hosts

[root@pig1 ~]#

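This command generates the key files. -t sets the algorithm to rsa. -P sets the passphrase that protects the private key, here empty (''); if it were not empty, then on first login, besides asking to confirm the target host's key, ssh would report that the private key is locked and ask for the passphrase given here. -f writes the key to the file id_rsa under ~/.ssh/ in the user's home directory. The name can be anything, but with several keys you may need the -i option of ssh-copy-id to pick one. Anyone who has used ssh on Windows, or VS Code's remote SSH login, will know that this directory is where ssh-related settings are usually kept.

After the command finishes, look at the .ssh directory: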

[root@pig1 ~]# ls .ssh

id_rsa id_rsa.pub known_hosts

Here the id_rsa file holds the generated private key and id_rsa.pub holds the generated public key.

(2) Add the key to the authorized keys file

[root@pig1 ~]# ssh-copy-id pig1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@pig1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'pig1'"

and check to make sure that only the key(s) you wanted were added.

This differs from the official guide, which cats the public key file into authorized_keys, and it is a bit simpler. Look at the .ssh directory:

[root@pig1 ~]# ls .ssh

authorized_keys id_rsa id_rsa.pub known_hosts

[root@pig1 ~]# cat .ssh/authorized_keys

ssh-rsa AAAA/YE4W1Ou………………

diucFHlG1NLK8DlPSyk= root@pig1

[root@pig1 ~]#

The actual effect is the same: an authorized_keys file is created under .ssh and the public key of the user who needs to log in is copied into it.

Then test: login now works without a password.

[root@pig1 ~]# ssh pig1

Activate the web console with: systemctl enable --now cockpit.socket

Last login: Wed Sep 14 09:35:41 2022 from 192.168.21.11

[root@pig1 ~]#

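(3) Install the key on a remote host

Take pig2 as an example. Before configuring, it is worth running ls -a on the home directory on pig2; at this point there is no .ssh directory.

Now go back to the pig1 console and use ssh-copy-id to copy the login public key to pig2: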

[root@pig1 ~]# ssh-copy-id pig2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'pig2 (192.168.21.12)' can't be established.

ECDSA key fingerprint is SHA256:zOZyGj3+LzDVOYsGVXR513zJSOBgi+RmX+GesdjGoJs.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@pig2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'pig2'"

and check to make sure that only the key(s) you wanted were added.

[root@pig1 ~]#

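The command evidently first performs a remote ssh login to pig2, which asks the user for the login password. After a successful login a .ssh directory is created on pig2, and ssh-copy-id then creates a file named authorized_keys in it and writes pig1's public key there.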

[root@pig2 ~]# ls

公共 视频 文档 音乐 anaconda-ks.cfg initial-setup-ks.cfg

模板 图片 下载 桌面 hadoop share

[root@pig2 ~]# ls -a

. 图片 anaconda-ks.cfg .cache hadoop share

.. 文档 .bash_history .config .ICEauthority .tcshrc

公共 下载 .bash_logout .cshrc initial-setup-ks.cfg .viminfo

模板 音乐 .bash_profile .dbus .local

视频 桌面 .bashrc .esd_auth .pki

[root@pig2 ~]# ls -a

. 图片 anaconda-ks.cfg .cache hadoop share

.. 文档 .bash_history .config .ICEauthority .ssh

公共 下载 .bash_logout .cshrc initial-setup-ks.cfg .tcshrc

模板 音乐 .bash_profile .dbus .local .viminfo

视频 桌面 .bashrc .esd_auth .pki

[root@pig2 ~]# ls .ssh

authorized_keys

[root@pig2 ~]#

pig1 should now be able to log in to pig2 without a password.
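Whether the key-based login really works can be checked without risking an interactive prompt. A minimal sketch, assuming OpenSSH's `BatchMode` and `ConnectTimeout` options; the function names and the `SSH_BIN` override are hypothetical and exist only so the check can be dry-run.

```shell
# SSH_BIN is a hypothetical override (e.g. SSH_BIN=true for a dry run).
SSH_BIN="${SSH_BIN:-ssh}"

# Succeed only if login to HOST works without any prompt.
# BatchMode=yes makes ssh fail instead of asking for a password.
can_login_without_password() {
    "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# Report the status for each host given as an argument.
report_passwordless() {
    for host in "$@"; do
        if can_login_without_password "$host"; then
            echo "$host: ok"
        else
            echo "$host: password still required or host unreachable"
        fi
    done
}
```

For example, `report_passwordless pig1 pig2` run on pig1 would report both hosts once the steps above have been completed.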

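3. Passwordless SSH across the cluster

In this way, passwordless ssh can be set up between all the machines. Of course, doing this by hand for a few hundred machines would drive anyone mad. So another option, following some approaches found online, is to configure one machine first, extend its authorized_keys file under .ssh (copy the line many times in vim and change the number after the hostname), and then copy the whole .ssh folder to every host, minding the permissions on the folder and its files. With this approach all hosts effectively share the same key file id_rsa, that is, the same public/private key pair.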
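For a handful of nodes, a plain loop around ssh-copy-id already removes most of the typing. This is a sketch under assumptions, not the method used above: `copy_key_to_hosts` and the `COPY_ID_BIN` override are hypothetical names, and the root account is assumed because that is the user in these notes.

```shell
# COPY_ID_BIN is a hypothetical override (e.g. COPY_ID_BIN=echo for a dry run).
COPY_ID_BIN="${COPY_ID_BIN:-ssh-copy-id}"

# Install the local public key on every host given as an argument.
# Each host asks for its password once; stops at the first failure.
copy_key_to_hosts() {
    for host in "$@"; do
        "$COPY_ID_BIN" "root@$host" || return 1
    done
}
```

Running `copy_key_to_hosts pig1 pig2 pig3 pig4 pig5` on one node covers logins from that node only; for full mutual access it would have to be repeated on each node after generating a key there.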

The official documentation recommends pdsh for this:

If your cluster doesn’t have the requisite software you will need to install it.

For example on Ubuntu Linux:

$ sudo apt-get install ssh

$ sudo apt-get install pdsh

It can of course also be installed with yum. Note that besides pdsh, pdsh-rcmd-ssh needs to be installed as well.

[root@bogon share]# yumdownloader pdsh

上次元数据过期检查:0:00:19 前,执行于 2022年07月13日 星期三 09时56分06秒。

pdsh-2.34-5.el8.x86_64.rpm 373 kB/s | 121 kB 00:00

[root@bogon share]# yum install pdsh -y

上次元数据过期检查:0:00:33 前,执行于 2022年07月13日 星期三 09时56分06秒。

依赖关系解决。

=================================================================================================

软件包 架构 版本 仓库 大小

=================================================================================================

安装:

pdsh x86_64 2.34-5.el8 epel 121 k

安装依赖关系:

pdsh-rcmd-rsh x86_64 2.34-5.el8 epel 19 k

事务概要

=================================================================================================

安装 2 软件包

总下载:140 k

安装大小:577 k

下载软件包:

(1/2): pdsh-rcmd-rsh-2.34-5.el8.x86_64.rpm 303 kB/s | 19 kB 00:00

(2/2): pdsh-2.34-5.el8.x86_64.rpm 1.5 MB/s | 121 kB 00:00

-------------------------------------------------------------------------------------------------

总计 280 kB/s | 140 kB 00:00

Extra Packages for Enterprise Linux 8 - x86_64 1.6 MB/s | 1.6 kB 00:00

导入 GPG 公钥 0x2F86D6A1:

Userid: "Fedora EPEL (8) <epel@fedoraproject.org>"

指纹: 94E2 79EB 8D8F 25B2 1810 ADF1 21EA 45AB 2F86 D6A1

来自: /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-8

导入公钥成功

运行事务检查

事务检查成功。

运行事务测试

事务测试成功。

运行事务

准备中 : 1/1

安装 : pdsh-rcmd-rsh-2.34-5.el8.x86_64 1/2

安装 : pdsh-2.34-5.el8.x86_64 2/2

运行脚本: pdsh-2.34-5.el8.x86_64 2/2

验证 : pdsh-2.34-5.el8.x86_64 1/2

验证 : pdsh-rcmd-rsh-2.34-5.el8.x86_64 2/2

已安装:

pdsh-2.34-5.el8.x86_64 pdsh-rcmd-rsh-2.34-5.el8.x86_64

完毕!

[root@pig1 .ssh]# yum install pdsh-rcmd-ssh

上次元数据过期检查:0:13:08 前,执行于 2022年07月16日 星期六 08时46分13秒。

依赖关系解决。

========================================================================================================================

软件包 架构 版本 仓库 大小

========================================================================================================================

安装:

pdsh-rcmd-ssh x86_64 2.34-5.el8 epel 19 k

事务概要

========================================================================================================================

安装 1 软件包

总下载:19 k

安装大小:16 k

确定吗?[y/N]: y

下载软件包:

pdsh-rcmd-ssh-2.34-5.el8.x86_64.rpm 414 kB/s | 19 kB 00:00

------------------------------------------------------------------------------------------------------------------------

总计 18 kB/s | 19 kB 00:01

运行事务检查

事务检查成功。

运行事务测试

事务测试成功。

运行事务

准备中 : 1/1

安装 : pdsh-rcmd-ssh-2.34-5.el8.x86_64 1/1

运行脚本: pdsh-rcmd-ssh-2.34-5.el8.x86_64 1/1

验证 : pdsh-rcmd-ssh-2.34-5.el8.x86_64 1/1

已安装:

pdsh-rcmd-ssh-2.34-5.el8.x86_64

完毕!

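Once everything is installed, commands can be run in parallel on a chosen set of nodes. If the ssh module (pdsh-rcmd-ssh) is missing, the attempt fails like this: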

[root@pig1 .ssh]# pdsh -w ssh:pig[1-3] "ls"

No such rcmd module "ssh"

pdsh@pig1: Failed to register rcmd "ssh" for "pig[1-3]"

With the ssh module available, it works:

[root@pig1 .ssh]# pdsh -w ssh:pig[1-3] "pwd"

pig2: /root

pig1: /root

pig3: /root

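Unfortunately, once pdsh is installed it conflicts with Hadoop's batch start scripts. I had no interest in tracking down a fix, so if you want to use the batch start scripts (you don't have to), don't install pdsh; typing ssh a few more times or writing a small script of your own works too. You can't have both the fish and the bear's paw, as the saying goes.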
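Such a home-made script can be very small. The sketch below runs the command on one host after another (sequentially, unlike pdsh) and prefixes each output line with the host name; `run_on_hosts` and the `SSH_BIN` override are hypothetical names chosen for illustration.

```shell
# SSH_BIN is a hypothetical override used for dry runs and testing.
SSH_BIN="${SSH_BIN:-ssh}"

# Run one command on several hosts in turn, prefixing every output line
# with the host name, similar to what pdsh prints.
# Usage: run_on_hosts "COMMAND" HOST...
run_on_hosts() {
    cmd="$1"; shift
    for host in "$@"; do
        # -n keeps ssh from reading stdin, so the loop is not disturbed
        "$SSH_BIN" -n "$host" "$cmd" 2>&1 | sed "s/^/$host: /"
    done
}
```

For example, `run_on_hosts pwd pig1 pig2 pig3` gives output in the same host-prefixed shape as the pdsh example above, only in a fixed order.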

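The other way, as one kind of online guide suggests, is this: after the first machine has successfully run ssh-copy-id against itself, duplicate pig1's key line in the authorized_keys file to five lines and change the hostname at the end of each; copy the .ssh directory to the other nodes; then change the hostname in id_rsa.pub on each node to that node's name. In effect all 5 nodes share the same public/private key pair, and the key used for login verification is the same everywhere.

Part 4. Configure Hadoop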

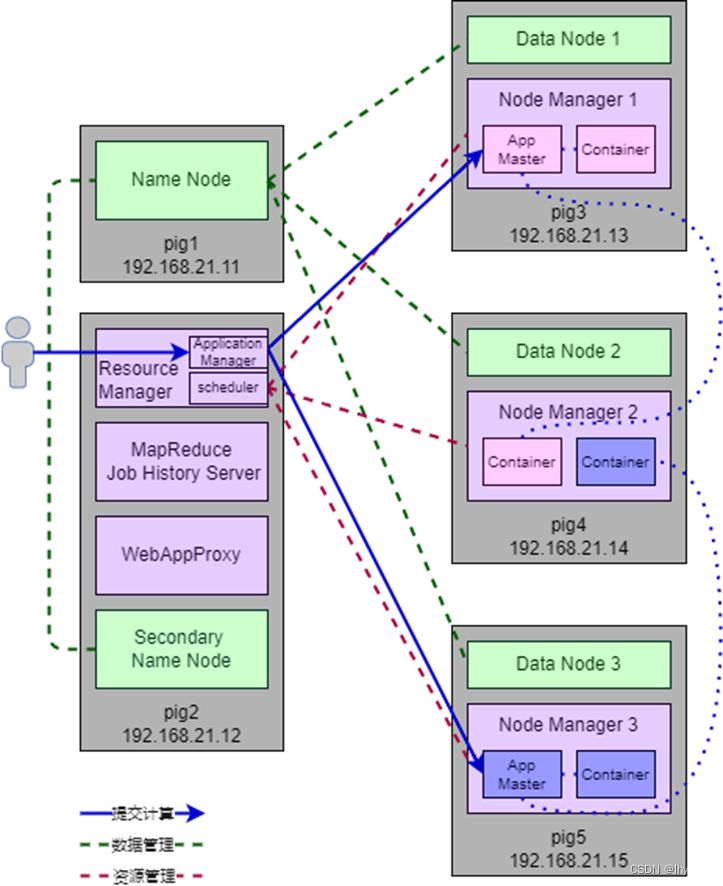

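1. Structure of a Hadoop cluster

(1) Overview of the main components

A Hadoop cluster capable of distributed storage and computation contains, within its physical cluster (that is, a set of servers or virtual machines), two logical clusters corresponding to "storage" and "compute": storage is the distributed file system HDFS, and compute is MapReduce:

① The HDFS "storage" cluster

It usually consists of a NameNode and several DataNodes. The NameNode manages the metadata of stored files and can be thought of as the file system's partition table. One NameNode is enough for a small cluster; larger clusters typically run more than one to avoid a single point of failure and to spread load. The Secondary NameNode configured here is a helper that periodically checkpoints the NameNode's metadata; it is not a hot standby. The DataNodes can be thought of as a disk array made up of a bunch of machines, holding the files stored in HDFS in units of data blocks.

② The MapReduce "compute" cluster

It is built mainly on YARN. According to the official documentation, the basic idea of YARN is to split resource management and job scheduling/monitoring into separate functions. At the global level, the ResourceManager (RM) contains the Scheduler and the ApplicationsManager: the Scheduler allocates resources to "applications" (an application is a single job or a workflow made of several jobs), while the ApplicationsManager accepts the jobs users submit and arranges the container in which each job's application controller (ApplicationMaster) runs. At the resource level, resource management for the whole cluster consists of the global RM plus one NodeManager (NM) on every physical node; the NM acts as the monitoring agent on its node and reports CPU, memory and disk usage to the RM/Scheduler. At the work level, the per-application ApplicationMaster negotiates resources from the RM and works with the NodeManagers to execute and monitor the tasks that make up a job.

The MapReduce Job History Server provides a REST API that lets users query the status of the "applications" carrying jobs and workflows.

The Web Application Proxy is part of YARN and by default runs as a component of the ResourceManager, though it can be configured to run standalone on another node. In YARN every ApplicationMaster must provide its web UI to the ResourceManager; the Web Application Proxy seems to be responsible for proxying those interfaces to address some security concerns.

Enough rambling. The point is that understanding all this helps in understanding how a Hadoop cluster is configured. Otherwise, configuring purely by following recipes leaves you lost in the fog the moment something goes wrong, which is probably the main reason Hadoop's entry barrier is not low.

(2) Assigning roles to cluster nodes

A typical way to build a Hadoop cluster picks one node as the NameNode and another as the ResourceManager; these two kinds of node act as masters (not to be confused with the AppMaster). Other services, such as the Web App Proxy Server and the MapReduce Job History Server, can share a node or get dedicated nodes depending on the workload. The remaining nodes in the cluster can act as both DataNode and NodeManager; these are called workers.

Following the Hadoop architecture described above, we use 5 virtual machine nodes to configure a small Hadoop cluster. As the earlier figure shows, the planned role of each node is listed in the table below: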

| IP address | Host name | HDFS role | MapReduce role | Role type |
| --- | --- | --- | --- | --- |
| 192.168.21.11 | pig1 | NameNode | | Master |
| 192.168.21.12 | pig2 | Secondary NameNode | Resource Manager, MapReduce Job History Server, Web Application Proxy | Master |
| 192.168.21.13 | pig3 | DataNode1 | Node Manager1 | Worker |
| 192.168.21.14 | pig4 | DataNode2 | Node Manager2 | Worker |
| 192.168.21.15 | pig5 | DataNode3 | Node Manager3 | Worker |

2. Configuring the cluster

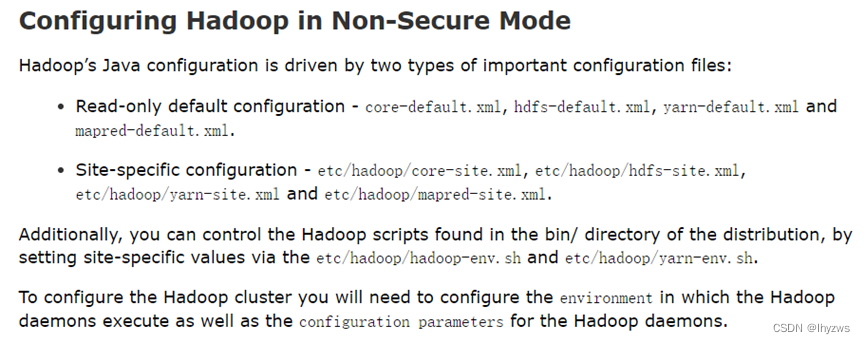

(1) Overview of the configuration files

Summarizing the part of the official guide that covers which files to configure when building a cluster: generally, 3 types of files need to be configured.

① Environment settings in shell files

We already did this during the Single Node setup, when we set export JAVA_HOME in hadoop-env.sh. Besides hadoop-env.sh, JAVA_HOME can optionally also be set in yarn-env.sh and mapred-env.sh as needed.

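② Settings in Hadoop configuration files

These come in two kinds. The first is the read-only default configuration, mainly the 4 files core-default.xml, hdfs-default.xml, yarn-default.xml and mapred-default.xml, which normally do not need to be changed. The second is the site-specific configuration, the 4 files core-site.xml, hdfs-site.xml, yarn-site.xml and mapred-site.xml, which live in the etc/hadoop subdirectory of the Hadoop working directory.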

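③ Workers configuration

Finally, there is the file that names the nodes of the cluster that are not controllers (Masters), that is, the workers. The file is etc/hadoop/workers. If it is not there, create it (a stock 3.x tarball normally ships one that lists only localhost, which has to be replaced), and write the workers' host names or IP addresses into it, one node per line.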

pig3

pig4

pig5
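A minimal sketch of generating that file from a host list, so the same list can be reused elsewhere in a setup script. The helper name `write_workers` is made up for this illustration, and the path in the example comment is the one assumed throughout this walkthrough.

```shell
#!/bin/sh
# write_workers FILE HOST...
# Overwrites FILE with one worker host name (or IP address) per line.
write_workers() {
  file=$1
  shift
  printf '%s\n' "$@" > "$file"
}

# Example (path assumed from this setup):
#   write_workers /root/hadoop/etc/hadoop/workers pig3 pig4 pig5
```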

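(2) Configuring the environment files

① Configuring the global environment file

As the official guide points out, environment variables for the Hadoop working directory generally need to be added to /etc/profile as a global setting. After making the change it is best to reboot, to avoid unpredictable errors: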

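export HADOOP_HOME=/root/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

In fact, most of the environment variables used by start scripts such as start-all.sh are derived from HADOOP_HOME, so HADOOP_HOME is indispensable. After rebooting, open a shell and run echo $HADOOP_HOME to confirm that it is non-empty and has the correct value.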
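The check described above can be made slightly stricter than a bare echo. The following is only a sketch; the function name `check_hadoop_home` is made up here, and "usable" just means the variable is set and an executable bin/hadoop exists under it.

```shell
#!/bin/sh
# check_hadoop_home: fail if HADOOP_HOME is empty or does not look like a
# Hadoop installation; warn (without failing) if its bin/ is not on PATH.
check_hadoop_home() {
  if [ -z "${HADOOP_HOME:-}" ]; then
    echo "HADOOP_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$HADOOP_HOME/bin/hadoop" ]; then
    echo "no executable bin/hadoop under $HADOOP_HOME" >&2
    return 1
  fi
  case ":$PATH:" in
    *":$HADOOP_HOME/bin:"*) ;;
    *) echo "warning: $HADOOP_HOME/bin is not on PATH" >&2 ;;
  esac
  echo "HADOOP_HOME=$HADOOP_HOME looks usable"
}
```

Because the executable test is case-sensitive on Linux, a path that differs from the real directory only in letter case fails the second check.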

Then use the sync command rsync (which does not feel much different from scp here) to copy the profile file to the other nodes, and reboot those nodes as well:

[root@pig1 ~]# rsync -rvl /etc/profile root@pig2:/etc/profile

sending incremental file list

profile

sent 230 bytes received 59 bytes 578.00 bytes/sec

total size is 2,228 speedup is 7.71

[root@pig1 ~]# rsync -rvl /etc/profile root@pig3:/etc/profile

sending incremental file list

profile

sent 230 bytes received 59 bytes 192.67 bytes/sec

total size is 2,228 speedup is 7.71

[root@pig1 ~]# rsync -rvl /etc/profile root@pig4:/etc/profile

sending incremental file list

profile

sent 230 bytes received 59 bytes 578.00 bytes/sec

total size is 2,228 speedup is 7.71

[root@pig1 ~]# rsync -rvl /etc/profile root@pig5:/etc/profile

sending incremental file list

profile

sent 230 bytes received 59 bytes 192.67 bytes/sec

total size is 2,228 speedup is 7.71

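② Configuring hadoop-env.sh

As described in the comments of hadoop-env.sh, besides JAVA_HOME the script also needs to define the users for the hdfs commands. For example, when the NameNode is started with bin/hdfs --daemon start namenode (the batch start commands start-all.sh and start-dfs.sh call this command internally as well), the command is hdfs and the subcommand is namenode, and Hadoop checks whether the user running that command is the one defined in hadoop-env.sh: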

#

# To prevent accidents, shell commands be (superficially) locked

# to only allow certain users to execute certain subcommands.

# It uses the format of (command)_(subcommand)_USER.

#

# For example, to limit who can execute the namenode command,

# Configure all the users here; otherwise start-dfs.sh and start-yarn.sh will report that the user is not defined

# export HDFS_NAMENODE_USER=hdfs

Otherwise, under Hadoop's safety mechanism, starting the NameNode as root fails with a user-not-defined error.

[root@pig1 hadoop]# sbin/start-dfs.sh

Starting namenodes on [pig1]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [pig2]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

So add the following 3 lines to hadoop-env.sh:

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

Likewise, add the following 2 lines to yarn-env.sh, so that the commands yarn --daemon start resourcemanager and yarn --daemon start nodemanager can be run:

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root
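When the setup is rebuilt several times, appending these lines by hand tends to leave duplicates. One possible way to add them only once is sketched below; `ensure_export` is a made-up helper name, and it deliberately ignores commented-out lines such as the `# export HDFS_NAMENODE_USER=hdfs` example that ships in hadoop-env.sh.

```shell
#!/bin/sh
# ensure_export FILE NAME VALUE
# Appends "export NAME=VALUE" to FILE unless an uncommented export of NAME
# is already present (an existing value is left untouched).
ensure_export() {
  file=$1
  name=$2
  value=$3
  if grep -q "^export ${name}=" "$file" 2>/dev/null; then
    return 0
  fi
  printf 'export %s=%s\n' "$name" "$value" >> "$file"
}

# Example (paths assumed from this setup):
#   conf=/root/hadoop/etc/hadoop
#   for n in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER; do
#     ensure_export "$conf/hadoop-env.sh" "$n" root
#   done
#   for n in YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
#     ensure_export "$conf/yarn-env.sh" "$n" root
#   done
```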

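(3) Configuring Hadoop's xml files

As mentioned earlier, Hadoop has 2 logical clusters on top of the physical machines. One is the storage cluster: from the HDFS side, its configuration involves the 2 files core-site.xml and hdfs-site.xml. The other is the compute cluster: from the MapReduce side, its configuration involves core-site.xml and mapred-site.xml. MapReduce is in turn usually built on top of YARN's resource management, which involves yarn-site.xml.

To dig into how Hadoop actually uses the values in these files, look at the source of the HdfsConfiguration class; an article on Zhihu also covers this.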

① core-site.xml

Mainly used to configure the URI of the NameNode, which Hadoop needs in order to locate the NameNode. Other optional settings include the directory for temporary files, and so on.

<configuration>

<property><!-- URI of the NameNode; Hadoop uses this setting to locate the NameNode -->

<name>fs.defaultFS</name>

<value>hdfs://pig1:9000</value>

</property>

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

</configuration>

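We currently set just two items; the third one described below, hadoop.tmp.dir, is left at its default:

fs.defaultFS

The URI of the NameNode, which Hadoop uses to locate it. Port 9000 is the one used in the official examples; if the URI carries no port, the NameNode RPC default of 8020 applies. It is still best to set it explicitly, and here it is set to 9000.

hadoop.http.staticuser.user

This was already covered in the pitfalls section above: to make sure operations on HDFS from the web UI have permission, the web UI user is set to root (or to your own user).

hadoop.tmp.dir

The directory where Hadoop stores temporary files while it runs. By default this is a subfolder of the system /tmp folder named hadoop-<user name>, which holds folders such as dfs, name, data and mapreduce. If you do not care about the files in this folder, the default is fine; if you do, change it, because the system may delete them on reboot.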

[root@pig1 tmp]# cd hadoop-root/

[root@pig1 hadoop-root]# ls

dfs

[root@pig1 hadoop-root]# cd dfs

[root@pig1 dfs]# ls

name

[root@pig1 dfs]# cd name

[root@pig1 name]# ls

current in_use.lock

[root@pig1 name]#
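Since the default location sits under /tmp, it may be worth checking what value is actually in effect. The sketch below uses `hdfs getconf -confKey`, which prints the effective value of a configuration key; the helper name `warn_if_tmp` is made up for this illustration.

```shell
#!/bin/sh
# warn_if_tmp DIR
# Returns 1 (and prints a warning) when DIR is /tmp or lives under it.
warn_if_tmp() {
  case "$1" in
    /tmp|/tmp/*)
      echo "warning: $1 is under /tmp and may be wiped on reboot" >&2
      return 1
      ;;
    *)
      return 0
      ;;
  esac
}

# On a node where the hdfs command is available, check the effective value.
if command -v hdfs >/dev/null 2>&1; then
  warn_if_tmp "$(hdfs getconf -confKey hadoop.tmp.dir)" || true
fi
```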

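② hdfs-site.xml

Mainly used to configure the HDFS replication factor and the HTTP addresses of the NameNode and the Secondary NameNode (if there is one). Other options include where the NameNode stores its metadata and where the DataNodes store block data; these two parameters are named dfs.namenode.name.dir and dfs.datanode.data.dir. We use the defaults, so they are not configured here; if the default directory has space problems, they do need to be set. In addition, to use the REST API, dfs.webhdfs.enabled has to be set to true.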

<configuration>

<property><!-- replication factor of the file system -->

<name>dfs.replication</name>

<value>3</value>

</property>

<property><!-- web address of the NameNode -->

<name>dfs.namenode.http-address</name>

<value>pig1:9870</value>

</property>

<property><!-- web address of the Secondary NameNode -->

<name>dfs.namenode.secondary.http-address</name>

<value>pig2:9890</value>

</property>

</configuration>

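dfs.replication

The number of replicas the file system keeps of each stored block, in plain terms how many nodes hold a copy of the same data. This has 2 benefits: it provides redundancy, and during distributed computation more nodes can serve the data, which speeds things up.

dfs.namenode.http-address

The web interface of the NameNode. The default port used to be 50070 (2.x) and is 9870 in current 3.x versions. When this parameter is set, the port has to be given explicitly; if it is not, the NameNode and the Secondary NameNode will fail to start. Alternatively, leave both parameters unset, in which case Hadoop uses the default ports and tries to start both the NameNode and the Secondary NameNode on the node where the batch start command or hdfs --daemon start namenode is run. Once deployed, the NameNode's management page can be reached by entering pig1:9870 in a browser.

dfs.namenode.secondary.http-address

Like the NameNode setting, this parameter sets the web interface of the Secondary NameNode.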

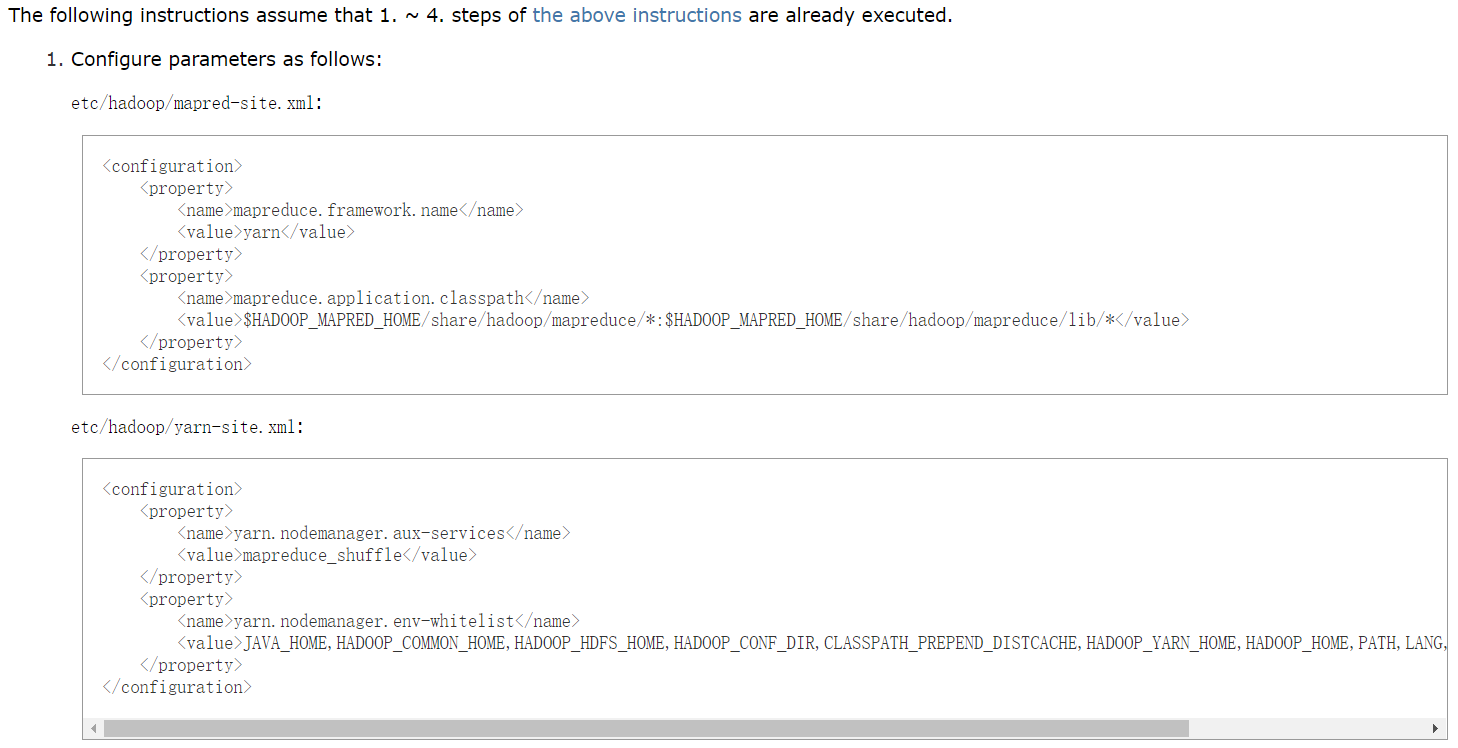

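③ mapred-site.xml

First, it specifies the framework that runs MapReduce: when using YARN, simply specify yarn; otherwise environment variables have to be specified for map and reduce separately. Second, it specifies the address and port of the history server, and the web address for viewing the records of MapReduce jobs that have finished running.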

<configuration>

<property><!-- cluster framework used by MapReduce (YARN) -->

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property><!-- address of the Job History Server -->

<name>mapreduce.jobhistory.address</name>

<value>pig2:10020</value>

</property>

<property><!-- web interface of the Job History Server -->

<name>mapreduce.jobhistory.webapp.address</name>

<value>pig2:19888</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>

</property>

</configuration>

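mapreduce.framework.name

Unless you plan to use some other mechanism for resource management, this must be set, to yarn.

mapreduce.jobhistory.address

The host and port of the Job History Server's IPC protocol; API access uses this to locate the Job History Server. This service is needed when launching MapReduce jobs.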

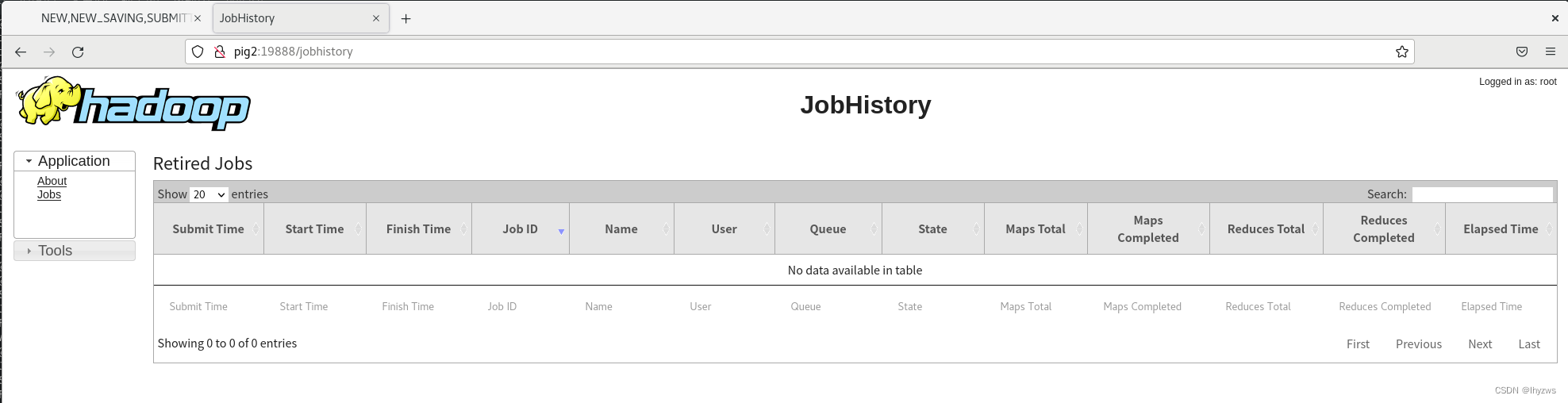

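mapreduce.jobhistory.webapp.address

The web interface of the Job History Server. Entering pig2:19888 in a browser shows information about the execution of MapReduce jobs.

mapreduce.application.classpath

This one is a bit of a trap (as is env-whitelist further down). If it is not set, a series of environment variable errors may follow; in short, follow the official guide and set it.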

④ yarn-site.xml

Mainly used to specify the host of the ResourceManager, and how Reducers on the NodeManagers fetch their data.

<configuration>

<!-- Site specific YARN configuration properties -->

<property><!-- host of the YARN ResourceManager -->

<name>yarn.resourcemanager.hostname</name>

<value>pig2</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

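</configuration>

yarn.resourcemanager.hostname

The host name of the ResourceManager; yarn.resourcemanager.address can be used instead to give a host:port address.

yarn.nodemanager.aux-services

Set to mapreduce_shuffle so that the NodeManagers run the shuffle service that MapReduce jobs rely on; in short, just set it.

yarn.nodemanager.env-whitelist

As mentioned above, this one is also a trap. Again, follow the pseudo-distributed deployment settings on the official site and set it; otherwise unpredictable environment variable problems show up.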

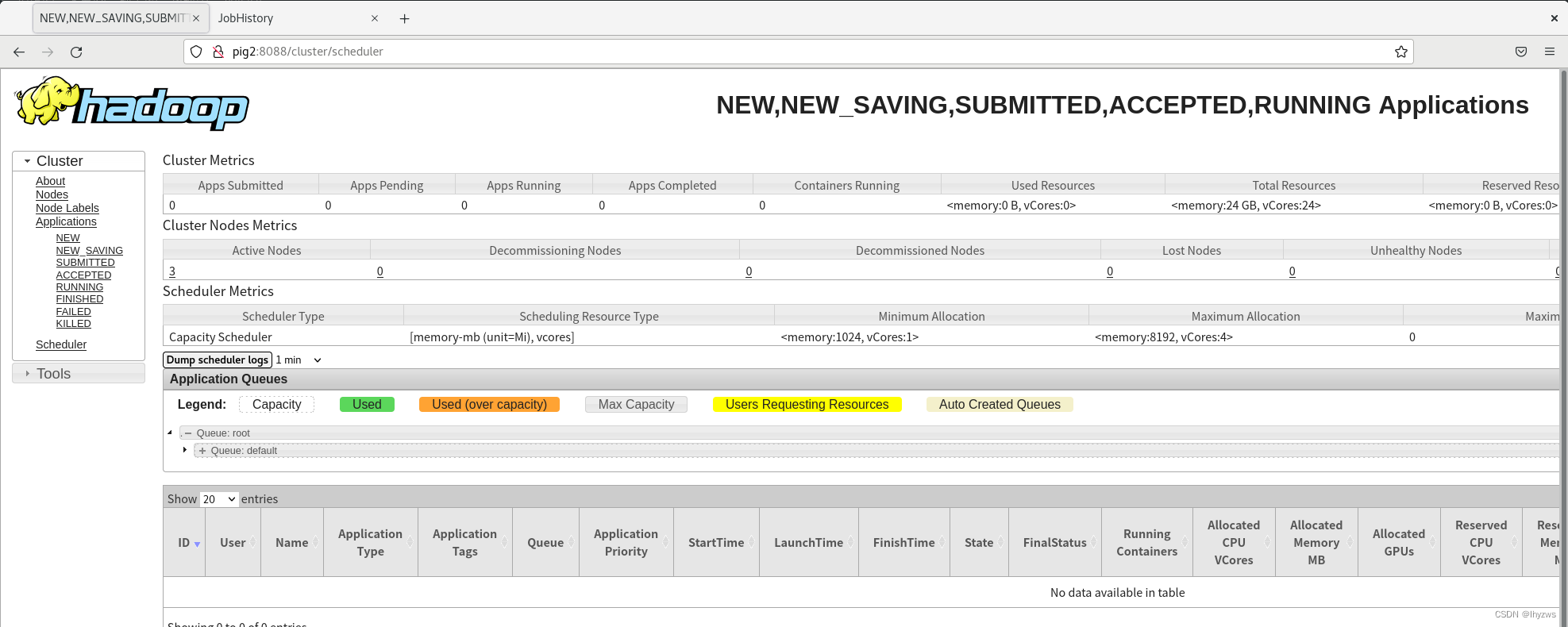

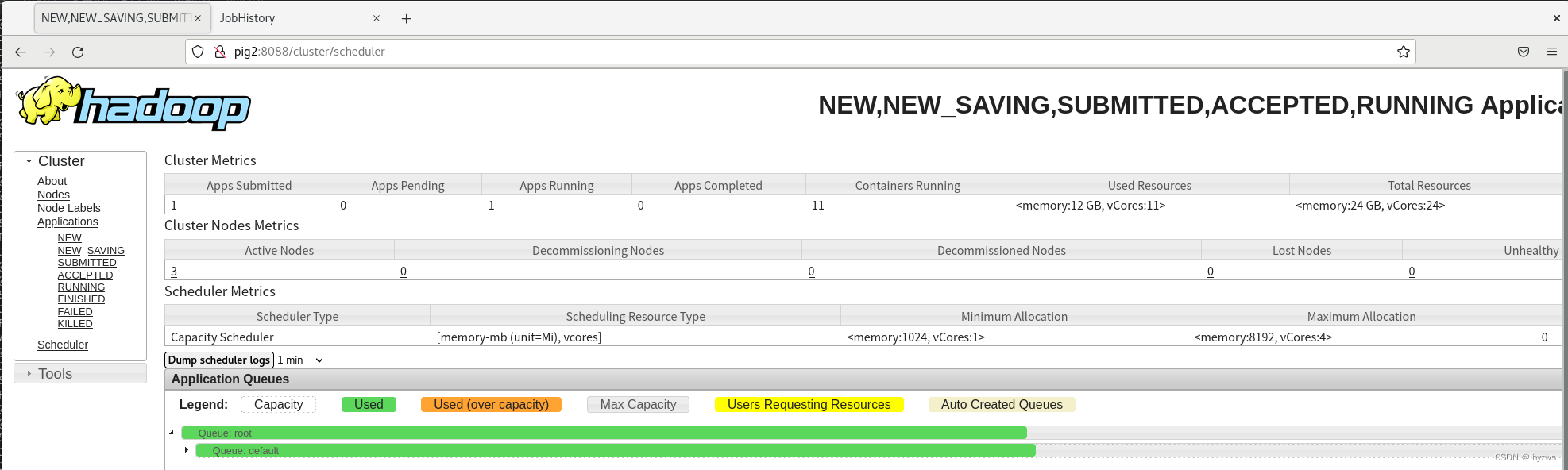

? ? ? ? yarn.resourcemanager.webapp.address

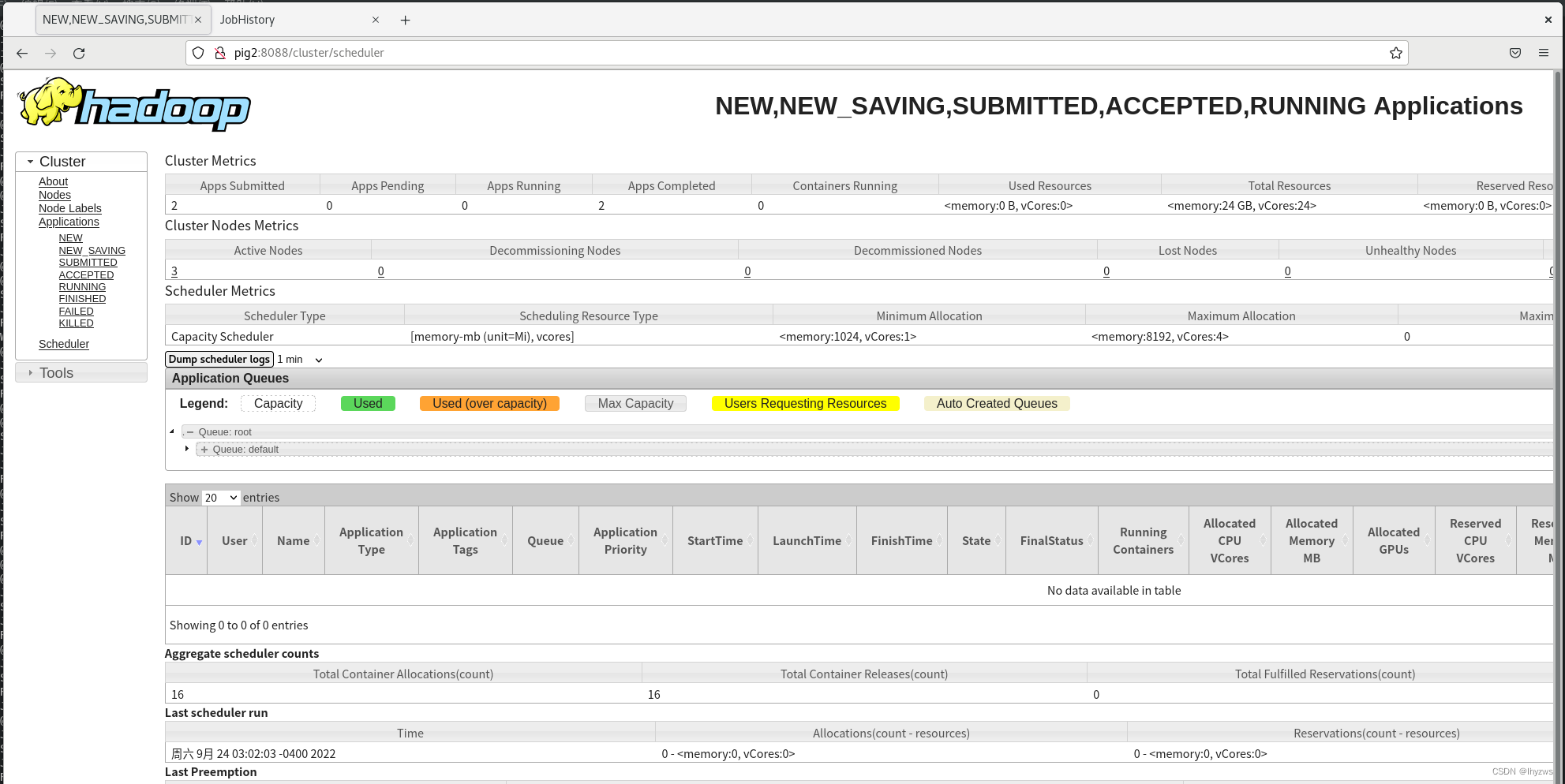

? ? ? ? 设置yarn的WEB访问地址与端口,默认就在resourcemanager所在的节点,端口为8088。这个因为设置过hostname,所以可以不设,直接使用默认值就行。回头可以使用浏览器输入pig2:8088就可以访问了。? ? ? ?
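Once the daemons are running, the web interfaces configured in this section can be probed from the command line as well as from a browser. The sketch below lists the four addresses used in this walkthrough and asks curl for the HTTP status of each; the function names `ui_endpoints` and `probe_uis` are made up here, and curl is assumed to be installed.

```shell
#!/bin/sh
# ui_endpoints: the web UIs configured in this section, one "name url" per line.
ui_endpoints() {
  cat <<'EOF'
namenode http://pig1:9870
secondarynamenode http://pig2:9890
jobhistory http://pig2:19888
resourcemanager http://pig2:8088
EOF
}

# probe_uis: print the HTTP status code of each UI.
# curl reports 000 when no connection could be made within 3 seconds.
probe_uis() {
  ui_endpoints | while read -r name url; do
    code=$(curl -s -o /dev/null -m 3 -w '%{http_code}' "$url" || true)
    echo "$name $url -> ${code:-000}"
  done
}

# Example: probe_uis
```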

(4) Distributing the configuration files

Distribute the modified xml, sh and workers files to all nodes, using either rsync or scp:

[root@pig1 hadoop]# pwd

/root/hadoop/etc/hadoop

[root@pig1 hadoop]# scp *-site.xml root@pig2:/root/hadoop/etc/hadoop/.

core-site.xml 100% 932 571.1KB/s 00:00

hdfs-rbf-site.xml 100% 683 469.0KB/s 00:00

hdfs-site.xml 100% 1022 680.3KB/s 00:00

httpfs-site.xml 100% 620 677.9KB/s 00:00

kms-site.xml 100% 682 656.1KB/s 00:00

mapred-site.xml 100% 1183 1.4MB/s 00:00

yarn-site.xml 100% 918 771.2KB/s 00:00

[root@pig1 hadoop]# scp *-site.xml root@pig3:/root/hadoop/etc/hadoop/.

core-site.xml 100% 932 516.2KB/s 00:00

hdfs-rbf-site.xml 100% 683 466.5KB/s 00:00

hdfs-site.xml 100% 1022 765.6KB/s 00:00

httpfs-site.xml 100% 620 610.4KB/s 00:00

kms-site.xml 100% 682 741.1KB/s 00:00

mapred-site.xml 100% 1183 1.0MB/s 00:00

yarn-site.xml 100% 918 999.5KB/s 00:00

[root@pig1 hadoop]# scp *-site.xml root@pig4:/root/hadoop/etc/hadoop/.

core-site.xml 100% 932 427.6KB/s 00:00

hdfs-rbf-site.xml 100% 683 441.3KB/s 00:00

hdfs-site.xml 100% 1022 690.5KB/s 00:00

httpfs-site.xml 100% 620 666.1KB/s 00:00

kms-site.xml 100% 682 698.4KB/s 00:00

mapred-site.xml 100% 1183 1.2MB/s 00:00

yarn-site.xml 100% 918 122.1KB/s 00:00

[root@pig1 hadoop]# scp *-site.xml root@pig5:/root/hadoop/etc/hadoop/.

core-site.xml 100% 932 492.0KB/s 00:00

hdfs-rbf-site.xml 100% 683 421.4KB/s 00:00

hdfs-site.xml 100% 1022 701.5KB/s 00:00

httpfs-site.xml 100% 620 657.0KB/s 00:00

kms-site.xml 100% 682 722.5KB/s 00:00

mapred-site.xml 100% 1183 1.0MB/s 00:00

yarn-site.xml 100% 918 846.6KB/s 00:00

[root@pig1 hadoop]#

[root@pig1 hadoop]# scp workers root@pig2:/root/hadoop/etc/hadoop/.

workers 100% 15 6.4KB/s 00:00

[root@pig1 hadoop]# scp workers root@pig3:/root/hadoop/etc/hadoop/.

workers 100% 15 5.6KB/s 00:00

[root@pig1 hadoop]# scp workers root@pig4:/root/hadoop/etc/hadoop/.

workers 100% 15 7.3KB/s 00:00

[root@pig1 hadoop]# scp workers root@pig5:/root/hadoop/etc/hadoop/.

workers 100% 15 6.1KB/s 00:00

[root@pig1 hadoop]#
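The repeated scp commands above can be folded into one loop. This is only a sketch: `push_conf` and the `COPY_CMD` override (for dry runs with `echo`) are names invented for this illustration, and it is meant to be run from inside the etc/hadoop directory.

```shell
#!/bin/sh
# push_conf DEST_DIR HOST...
# Copies *-site.xml, *-env.sh and workers from the current directory to
# DEST_DIR on each host. Stops at the first host that fails.
# COPY_CMD is a hypothetical override (e.g. COPY_CMD=echo) for dry runs.
push_conf() (
  dest=$1
  shift
  for host in "$@"; do
    ${COPY_CMD:-scp} ./*-site.xml ./*-env.sh ./workers "root@${host}:${dest}/" || exit 1
  done
)

# Example, run from /root/hadoop/etc/hadoop on pig1:
#   push_conf /root/hadoop/etc/hadoop pig2 pig3 pig4 pig5
```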

The sh files are copied the same way; the output is not pasted here.

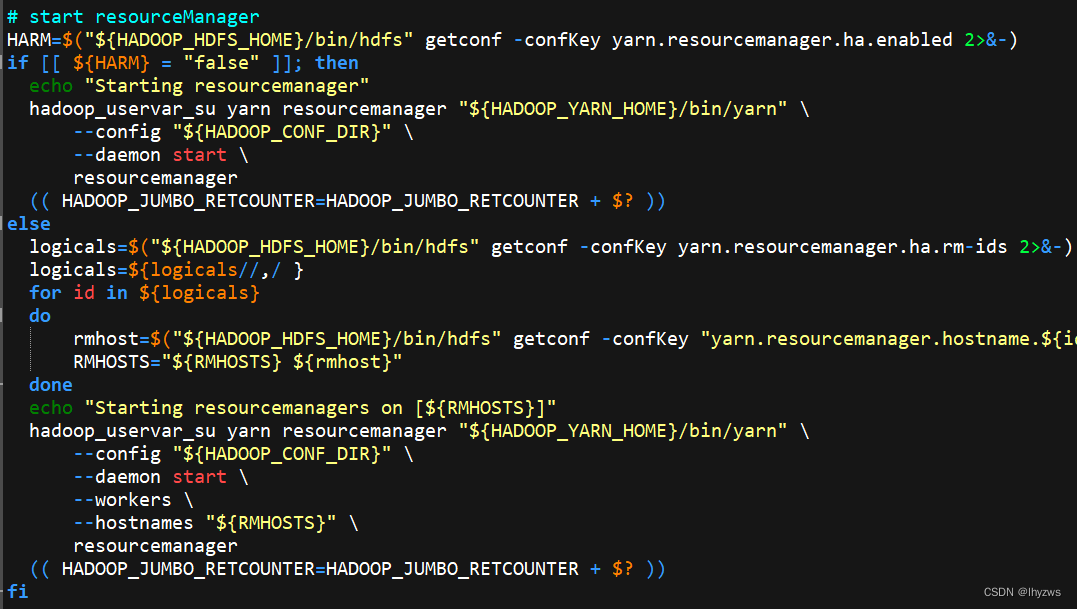

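3. Starting HDFS

Hadoop does provide batch start commands such as start-all.sh, start-dfs.sh and start-yarn.sh. But once something goes wrong with a batch start, it is fairly troublesome to sort out, so it is worth digging one level down here.

According to the official guide, starting HDFS involves 3 daemons: NameNode, SecondaryNameNode and DataNode. Starting YARN involves 3 daemons: ResourceManager, NodeManager and WebAppProxy. In addition, to manage MapReduce jobs, the MapReduce Job History Server has to be started as well: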

HDFS daemons are NameNode, SecondaryNameNode, and DataNode. YARN daemons are ResourceManager, NodeManager, and WebAppProxy. If MapReduce is to be used, then the MapReduce Job History Server will also be running. For large installations, these are generally running on separate hosts.

As for how to start HDFS step by step, the best way is to read the scripts of the batch start commands mentioned above.

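(1) Checking that the configuration files are correct

HDFS

In the start-dfs code, the host names or addresses of the NameNode and the Secondary NameNode are actually obtained with the getconf subcommand of hdfs.

So before starting HDFS, we can run the same 2 getconf queries ourselves to see whether the configuration is right.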

[root@pig1 hadoop]# bin/hdfs getconf -namenodes

Incorrect configuration: namenode address dfs.namenode.servicerpc-address.[] or dfs.namenode.rpc-address.[] is not configured.

[root@pig1 hadoop]#

[root@pig1 hadoop]# bin/hdfs getconf -namenodes

pig1

[root@pig1 hadoop]# bin/hdfs getconf -secondaryNameNodes

pig2

[root@pig1 hadoop]#

The DataNodes need no check, as long as the workers file is right.
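The two getconf queries can be turned into a small pre-flight check that compares their output with the planned role assignment. This is a sketch under the assumptions of this walkthrough (pig1 as NameNode, pig2 as Secondary NameNode); the helper name `expect_hosts` is made up.

```shell
#!/bin/sh
# expect_hosts LABEL EXPECTED ACTUAL
# Prints "ok" when ACTUAL equals EXPECTED, otherwise reports the mismatch
# on stderr and returns 1.
expect_hosts() {
  label=$1
  expected=$2
  actual=$3
  if [ "$actual" = "$expected" ]; then
    echo "ok: $label -> $actual"
  else
    echo "MISMATCH: $label expected '$expected' but got '$actual'" >&2
    return 1
  fi
}

# Only meaningful on a node where the hdfs command is on PATH.
if command -v hdfs >/dev/null 2>&1; then
  expect_hosts namenodes pig1 "$(hdfs getconf -namenodes 2>/dev/null)"
  expect_hosts secondary pig2 "$(hdfs getconf -secondaryNameNodes 2>/dev/null)"
fi
```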

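YARN

In the start-yarn code there is no direct check of whether nodes such as the resourcemanager are set correctly; instead it determines whether high availability (HA) mode is configured. We have no plans to touch that for now, so it always returns false. Fortunately, as long as the xml is right, the YARN daemons are not too hard to start; start-yarn just has to be run on the correct node.

(2) Starting Hadoop

There is a start-all, but I do not recommend it, because I am not sure whether the script knows the roles I assigned to the nodes. It is best to run start-dfs on pig1 and start-yarn on pig2 separately.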

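① Formatting DFS

For a first start, a file system format has to be run once on the NameNode node before starting. It may only be run this one time. To start over, stop all daemons, clean out the files under the 3 directories hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir, and then run the format again.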
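Because formatting twice is the thing to avoid, a small guard can refuse to format when the name directory already holds a formatted namespace. This is a sketch: `safe_to_format` is a made-up helper, it relies on the formatter leaving a current/VERSION file in the name directory, and the directory in the example is the default location seen earlier in this setup.

```shell
#!/bin/sh
# safe_to_format NAME_DIR
# Succeeds only when NAME_DIR contains no current/VERSION file, i.e. no
# formatted NameNode metadata is present yet.
safe_to_format() {
  if [ -e "$1/current/VERSION" ]; then
    echo "refusing: $1 already holds a formatted namespace" >&2
    return 1
  fi
  return 0
}

# Example, on the NameNode host (default name directory assumed):
#   safe_to_format /tmp/hadoop-root/dfs/name && bin/hdfs namenode -format
```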

[root@pig1 hadoop]# bin/hdfs namenode -format

2022-09-19 02:48:57,722 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = pig1/192.168.21.11

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.3.4

STARTUP_MSG: classpath = /root/hadoop/etc/hadoop:/root/hadoop/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar:/root/hadoop/share/hadoop/common/lib/snappy-java-1.1.8.2.jar:/root/hadoop/share/hadoop/common/lib/jsr305-3.0.2.jar:/root/hadoop/share/hadoop/common/lib/nimbus-jose-jwt-9.8.1.jar:/root/hadoop/share/hadoop/common/lib/kerb-common-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/commons-net-3.6.jar:/root/hadoop/share/hadoop/common/lib/accessors-smart-2.4.7.jar:/root/hadoop/share/hadoop/common/lib/j2objc-annotations-1.1.jar:/root/hadoop/share/hadoop/common/lib/curator-framework-4.2.0.jar:/root/hadoop/share/hadoop/common/lib/netty-3.10.6.Final.jar:/root/hadoop/share/hadoop/common/lib/jersey-servlet-1.19.jar:/root/hadoop/share/hadoop/common/lib/audience-annotations-0.5.0.jar:/root/hadoop/share/hadoop/common/lib/jetty-servlet-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/common/lib/jsr311-api-1.1.1.jar:/root/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/root/hadoop/share/hadoop/common/lib/dnsjava-2.1.7.jar:/root/hadoop/share/hadoop/common/lib/kerb-util-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/jetty-xml-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/common/lib/hadoop-shaded-protobuf_3_7-1.1.1.jar:/root/hadoop/share/hadoop/common/lib/reload4j-1.2.22.jar:/root/hadoop/share/hadoop/common/lib/commons-compress-1.21.jar:/root/hadoop/share/hadoop/common/lib/guava-27.0-jre.jar:/root/hadoop/share/hadoop/common/lib/commons-math3-3.1.1.jar:/root/hadoop/share/hadoop/common/lib/kerb-admin-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/jersey-server-1.19.jar:/root/hadoop/share/hadoop/common/lib/jetty-webapp-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/common/lib/jetty-security-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/root/hadoop/share/hadoop/common/lib/commons-beanutils-1.9.4.jar:/root/hadoop/share/hadoop/common/lib/jetty-server-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/common/lib/listenablefuture-9999.0-empty-to-avoid-conf
lict-with-guava.jar:/root/hadoop/share/hadoop/common/lib/stax2-api-4.2.1.jar:/root/hadoop/share/hadoop/common/lib/jaxb-api-2.2.11.jar:/root/hadoop/share/hadoop/common/lib/metrics-core-3.2.4.jar:/root/hadoop/share/hadoop/common/lib/jackson-databind-2.12.7.jar:/root/hadoop/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/root/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/root/hadoop/share/hadoop/common/lib/gson-2.8.9.jar:/root/hadoop/share/hadoop/common/lib/kerb-core-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/kerby-asn1-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/root/hadoop/share/hadoop/common/lib/jersey-json-1.19.jar:/root/hadoop/share/hadoop/common/lib/avro-1.7.7.jar:/root/hadoop/share/hadoop/common/lib/kerb-client-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/kerby-xdr-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/token-provider-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/hadoop-shaded-guava-1.1.1.jar:/root/hadoop/share/hadoop/common/lib/jetty-http-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/common/lib/checker-qual-2.5.2.jar:/root/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/root/hadoop/share/hadoop/common/lib/jackson-core-2.12.7.jar:/root/hadoop/share/hadoop/common/lib/kerb-identity-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/httpcore-4.4.13.jar:/root/hadoop/share/hadoop/common/lib/asm-5.0.4.jar:/root/hadoop/share/hadoop/common/lib/slf4j-api-1.7.36.jar:/root/hadoop/share/hadoop/common/lib/json-smart-2.4.7.jar:/root/hadoop/share/hadoop/common/lib/kerb-crypto-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/curator-recipes-4.2.0.jar:/root/hadoop/share/hadoop/common/lib/kerby-config-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/jetty-io-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/common/lib/hadoop-auth-3.3.4.jar:/root/hadoop/share/hadoop/common/lib/kerby-pkix-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/root/hadoop/share/hadoop/common/lib/commons-lang3-3.12.0.jar:/root/hadoop/sh
are/hadoop/common/lib/jakarta.activation-api-1.2.1.jar:/root/hadoop/share/hadoop/common/lib/commons-text-1.4.jar:/root/hadoop/share/hadoop/common/lib/woodstox-core-5.3.0.jar:/root/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/root/hadoop/share/hadoop/common/lib/animal-sniffer-annotations-1.17.jar:/root/hadoop/share/hadoop/common/lib/jsch-0.1.55.jar:/root/hadoop/share/hadoop/common/lib/zookeeper-jute-3.5.6.jar:/root/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/root/hadoop/share/hadoop/common/lib/jul-to-slf4j-1.7.36.jar:/root/hadoop/share/hadoop/common/lib/kerb-simplekdc-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/hadoop-annotations-3.3.4.jar:/root/hadoop/share/hadoop/common/lib/commons-io-2.8.0.jar:/root/hadoop/share/hadoop/common/lib/curator-client-4.2.0.jar:/root/hadoop/share/hadoop/common/lib/jetty-util-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/common/lib/commons-collections-3.2.2.jar:/root/hadoop/share/hadoop/common/lib/kerby-util-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/kerb-server-1.0.1.jar:/root/hadoop/share/hadoop/common/lib/zookeeper-3.5.6.jar:/root/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/root/hadoop/share/hadoop/common/lib/failureaccess-1.0.jar:/root/hadoop/share/hadoop/common/lib/jetty-util-ajax-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/common/lib/re2j-1.1.jar:/root/hadoop/share/hadoop/common/lib/commons-codec-1.15.jar:/root/hadoop/share/hadoop/common/lib/commons-configuration2-2.1.1.jar:/root/hadoop/share/hadoop/common/lib/jackson-annotations-2.12.7.jar:/root/hadoop/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/root/hadoop/share/hadoop/common/lib/commons-daemon-1.0.13.jar:/root/hadoop/share/hadoop/common/lib/jersey-core-1.19.jar:/root/hadoop/share/hadoop/common/lib/httpclient-4.5.13.jar:/root/hadoop/share/hadoop/common/lib/javax.servlet-api-3.1.0.jar:/root/hadoop/share/hadoop/common/lib/commons-logging-1.1.3.jar:/root/hadoop/share/hadoop/common/hadoop-kms-3.3.4.jar:/root/hadoop/share/hadoop/common/ha
doop-nfs-3.3.4.jar:/root/hadoop/share/hadoop/common/hadoop-common-3.3.4.jar:/root/hadoop/share/hadoop/common/hadoop-common-3.3.4-tests.jar:/root/hadoop/share/hadoop/common/hadoop-registry-3.3.4.jar:/root/hadoop/share/hadoop/hdfs:/root/hadoop/share/hadoop/hdfs/lib/snappy-java-1.1.8.2.jar:/root/hadoop/share/hadoop/hdfs/lib/jsr305-3.0.2.jar:/root/hadoop/share/hadoop/hdfs/lib/okhttp-4.9.3.jar:/root/hadoop/share/hadoop/hdfs/lib/nimbus-jose-jwt-9.8.1.jar:/root/hadoop/share/hadoop/hdfs/lib/kerb-common-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-net-3.6.jar:/root/hadoop/share/hadoop/hdfs/lib/accessors-smart-2.4.7.jar:/root/hadoop/share/hadoop/hdfs/lib/j2objc-annotations-1.1.jar:/root/hadoop/share/hadoop/hdfs/lib/curator-framework-4.2.0.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-3.10.6.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-rxtx-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-stomp-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/jersey-servlet-1.19.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-mqtt-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/audience-annotations-0.5.0.jar:/root/hadoop/share/hadoop/hdfs/lib/jetty-servlet-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-http-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/jsr311-api-1.1.1.jar:/root/hadoop/share/hadoop/hdfs/lib/jaxb-impl-2.2.3-1.jar:/root/hadoop/share/hadoop/hdfs/lib/dnsjava-2.1.7.jar:/root/hadoop/share/hadoop/hdfs/lib/kerb-util-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-resolver-dns-classes-macos-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/jetty-xml-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/hdfs/lib/hadoop-shaded-protobuf_3_7-1.1.1.jar:/root/hadoop/share/hadoop/hdfs/lib/reload4j-1.2.22.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-compress-1.21.jar:/root/hadoop/share/hadoop/hdfs/lib/guava-27.0-jre.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-math3-3.1.1.jar:/root/hadoop/share/hadoop/hdfs/lib/kerb-ad
min-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/jersey-server-1.19.jar:/root/hadoop/share/hadoop/hdfs/lib/jetty-webapp-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/hdfs/lib/jetty-security-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/hdfs/lib/paranamer-2.3.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-beanutils-1.9.4.jar:/root/hadoop/share/hadoop/hdfs/lib/jetty-server-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/hdfs/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-dns-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/stax2-api-4.2.1.jar:/root/hadoop/share/hadoop/hdfs/lib/jaxb-api-2.2.11.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-xml-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/kotlin-stdlib-1.4.10.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-buffer-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/jackson-databind-2.12.7.jar:/root/hadoop/share/hadoop/hdfs/lib/jackson-xc-1.9.13.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-classes-epoll-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-native-unix-common-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/gson-2.8.9.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-native-epoll-4.1.77.Final-linux-aarch_64.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-memcache-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-all-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/kerb-core-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/kerby-asn1-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/json-simple-1.1.1.jar:/root/hadoop/share/hadoop/hdfs/lib/jersey-json-1.19.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-native-epoll-4.1.77.Final-linux-x86_64.jar:/root/hadoop/share/hadoop/hdfs/lib/avro-1.7.7.jar:/root/hadoop/share/hadoop/hdfs/lib/kerb-client-1.0.1.jar:/root/
hadoop/share/hadoop/hdfs/lib/kerby-xdr-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/token-provider-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/hadoop-shaded-guava-1.1.1.jar:/root/hadoop/share/hadoop/hdfs/lib/jetty-http-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/hdfs/lib/checker-qual-2.5.2.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-native-kqueue-4.1.77.Final-osx-x86_64.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-handler-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-resolver-dns-native-macos-4.1.77.Final-osx-aarch_64.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-udt-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/jackson-core-2.12.7.jar:/root/hadoop/share/hadoop/hdfs/lib/kerb-identity-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/httpcore-4.4.13.jar:/root/hadoop/share/hadoop/hdfs/lib/asm-5.0.4.jar:/root/hadoop/share/hadoop/hdfs/lib/json-smart-2.4.7.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-haproxy-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/kerb-crypto-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/curator-recipes-4.2.0.jar:/root/hadoop/share/hadoop/hdfs/lib/kotlin-stdlib-common-1.4.10.jar:/root/hadoop/share/hadoop/hdfs/lib/kerby-config-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/jetty-io-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/hdfs/lib/hadoop-auth-3.3.4.jar:/root/hadoop/share/hadoop/hdfs/lib/kerby-pkix-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-lang3-3.12.0.jar:/root/hadoop/share/hadoop/hdfs/lib/okio-2.8.0.jar:/root/hadoop/share/hadoop/hdfs/lib/jakarta.activation-api-1.2.1.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-classes-kqueue-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-resolver-dns-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-text-1.4.jar:/root/hadoop/share/hadoop/hdfs/l
ib/woodstox-core-5.3.0.jar:/root/hadoop/share/hadoop/hdfs/lib/jettison-1.1.jar:/root/hadoop/share/hadoop/hdfs/lib/jsch-0.1.55.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-native-kqueue-4.1.77.Final-osx-aarch_64.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-transport-sctp-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/animal-sniffer-annotations-1.17.jar:/root/hadoop/share/hadoop/hdfs/lib/zookeeper-jute-3.5.6.jar:/root/hadoop/share/hadoop/hdfs/lib/jackson-jaxrs-1.9.13.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-smtp-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/root/hadoop/share/hadoop/hdfs/lib/kerb-simplekdc-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/hadoop-annotations-3.3.4.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-io-2.8.0.jar:/root/hadoop/share/hadoop/hdfs/lib/curator-client-4.2.0.jar:/root/hadoop/share/hadoop/hdfs/lib/jetty-util-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-collections-3.2.2.jar:/root/hadoop/share/hadoop/hdfs/lib/kerby-util-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-handler-proxy-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/kerb-server-1.0.1.jar:/root/hadoop/share/hadoop/hdfs/lib/zookeeper-3.5.6.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-redis-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-socks-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/failureaccess-1.0.jar:/root/hadoop/share/hadoop/hdfs/lib/jetty-util-ajax-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/hdfs/lib/re2j-1.1.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-codec-1.15.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-configuration2-2.1.1.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-resolver-dns-native-macos-4.1.77.Final-osx-x86_64.jar:/root/hadoop/share/hadoop/hdfs/lib/jackson-annotations-2.12.7.jar:/root/hadoop/share/hadoop/hdfs/lib/jcip-annotations-1.0-1.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-resolv
er-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-common-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/root/hadoop/share/hadoop/hdfs/lib/jersey-core-1.19.jar:/root/hadoop/share/hadoop/hdfs/lib/netty-codec-http2-4.1.77.Final.jar:/root/hadoop/share/hadoop/hdfs/lib/httpclient-4.5.13.jar:/root/hadoop/share/hadoop/hdfs/lib/javax.servlet-api-3.1.0.jar:/root/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-native-client-3.3.4.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-rbf-3.3.4.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-3.3.4.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-rbf-3.3.4-tests.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-httpfs-3.3.4.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-3.3.4.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-client-3.3.4.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-3.3.4-tests.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-client-3.3.4-tests.jar:/root/hadoop/share/hadoop/hdfs/hadoop-hdfs-native-client-3.3.4-tests.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-uploader-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-nativetask-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-3.3.4.jar:/root/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.3.4-tests.jar:/root/hadoop/share/hadoop/yarn:/root/hadoop/share/hadoop/yarn/lib/jetty-plus
-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/jetty-jndi-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/java-util-1.9.0.jar:/root/hadoop/share/hadoop/yarn/lib/snakeyaml-1.26.jar:/root/hadoop/share/hadoop/yarn/lib/jetty-annotations-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/javax.websocket-client-api-1.0.jar:/root/hadoop/share/hadoop/yarn/lib/fst-2.50.jar:/root/hadoop/share/hadoop/yarn/lib/websocket-servlet-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/bcpkix-jdk15on-1.60.jar:/root/hadoop/share/hadoop/yarn/lib/objenesis-2.6.jar:/root/hadoop/share/hadoop/yarn/lib/asm-tree-9.1.jar:/root/hadoop/share/hadoop/yarn/lib/metrics-core-3.2.4.jar:/root/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/root/hadoop/share/hadoop/yarn/lib/asm-analysis-9.1.jar:/root/hadoop/share/hadoop/yarn/lib/javax.websocket-api-1.0.jar:/root/hadoop/share/hadoop/yarn/lib/javax-websocket-client-impl-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/jersey-guice-1.19.jar:/root/hadoop/share/hadoop/yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/root/hadoop/share/hadoop/yarn/lib/websocket-common-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/jackson-module-jaxb-annotations-2.12.7.jar:/root/hadoop/share/hadoop/yarn/lib/websocket-api-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/jetty-client-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/jersey-client-1.19.jar:/root/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/root/hadoop/share/hadoop/yarn/lib/websocket-server-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/bcprov-jdk15on-1.60.jar:/root/hadoop/share/hadoop/yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/root/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-json-provider-2.12.7.jar:/root/hadoop/share/hadoop/yarn/lib/ehcache-3.3.1.jar:/root/hadoop/share/hadoop/yarn/lib/jackson-jaxrs-base-2.12.7.jar:/root/hadoop/share/hadoop/yarn/lib/asm-commons-9.1.jar:/root/hadoop/share/hadoop/yarn/lib/HikariCP-java7-2.4.12.jar:/root/hadoop/share/h
adoop/yarn/lib/jna-5.2.0.jar:/root/hadoop/share/hadoop/yarn/lib/swagger-annotations-1.5.4.jar:/root/hadoop/share/hadoop/yarn/lib/websocket-client-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/guice-4.0.jar:/root/hadoop/share/hadoop/yarn/lib/javax-websocket-server-impl-9.4.43.v20210629.jar:/root/hadoop/share/hadoop/yarn/lib/json-io-2.5.1.jar:/root/hadoop/share/hadoop/yarn/lib/jakarta.xml.bind-api-2.3.2.jar:/root/hadoop/share/hadoop/yarn/lib/jline-3.9.0.jar:/root/hadoop/share/hadoop/yarn/lib/guice-servlet-4.0.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-registry-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-server-router-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-client-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-server-timeline-pluginstorage-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-services-core-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-services-api-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-api-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-applications-mawo-core-3.3.4.jar:/root/hadoop/share/hadoop/yarn/hadoop-yarn-common-3.3.4.jar

STARTUP_MSG: build = https://github.com/apache/hadoop.git -r a585a73c3e02ac62350c136643a5e7f6095a3dbb; compiled by 'stevel' on 2022-07-29T12:32Z

STARTUP_MSG: java = 18.0.1.1

************************************************************/

2022-09-19 02:48:57,741 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2022-09-19 02:48:57,857 INFO namenode.NameNode: createNameNode [-format]

2022-09-19 02:48:58,449 INFO namenode.NameNode: Formatting using clusterid: CID-f134afe2-0d90-4d61-accd-f40b770a2e01

2022-09-19 02:48:58,485 INFO namenode.FSEditLog: Edit logging is async:true

2022-09-19 02:48:58,538 INFO namenode.FSNamesystem: KeyProvider: null

2022-09-19 02:48:58,541 INFO namenode.FSNamesystem: fsLock is fair: true

2022-09-19 02:48:58,542 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2022-09-19 02:48:58,574 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

2022-09-19 02:48:58,574 INFO namenode.FSNamesystem: supergroup = supergroup

2022-09-19 02:48:58,574 INFO namenode.FSNamesystem: isPermissionEnabled = true

2022-09-19 02:48:58,574 INFO namenode.FSNamesystem: isStoragePolicyEnabled = true

2022-09-19 02:48:58,574 INFO namenode.FSNamesystem: HA Enabled: false

2022-09-19 02:48:58,634 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2022-09-19 02:48:58,649 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

2022-09-19 02:48:58,649 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2022-09-19 02:48:58,657 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2022-09-19 02:48:58,658 INFO blockmanagement.BlockManager: The block deletion will start around 2022 9月 19 02:48:58

2022-09-19 02:48:58,659 INFO util.GSet: Computing capacity for map BlocksMap

2022-09-19 02:48:58,659 INFO util.GSet: VM type = 64-bit

2022-09-19 02:48:58,662 INFO util.GSet: 2.0% max memory 928 MB = 18.6 MB

2022-09-19 02:48:58,662 INFO util.GSet: capacity = 2^21 = 2097152 entries

2022-09-19 02:48:58,676 INFO blockmanagement.BlockManager: Storage policy satisfier is disabled

2022-09-19 02:48:58,677 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

2022-09-19 02:48:58,682 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.999

2022-09-19 02:48:58,682 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

2022-09-19 02:48:58,682 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2022-09-19 02:48:58,682 INFO blockmanagement.BlockManager: defaultReplication = 3

2022-09-19 02:48:58,682 INFO blockmanagement.BlockManager: maxReplication = 512

2022-09-19 02:48:58,682 INFO blockmanagement.BlockManager: minReplication = 1

2022-09-19 02:48:58,683 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

2022-09-19 02:48:58,683 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2022-09-19 02:48:58,683 INFO blockmanagement.BlockManager: encryptDataTransfer = false

2022-09-19 02:48:58,683 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2022-09-19 02:48:58,712 INFO namenode.FSDirectory: GLOBAL serial map: bits=29 maxEntries=536870911

2022-09-19 02:48:58,712 INFO namenode.FSDirectory: USER serial map: bits=24 maxEntries=16777215

2022-09-19 02:48:58,712 INFO namenode.FSDirectory: GROUP serial map: bits=24 maxEntries=16777215

2022-09-19 02:48:58,712 INFO namenode.FSDirectory: XATTR serial map: bits=24 maxEntries=16777215

2022-09-19 02:48:58,722 INFO util.GSet: Computing capacity for map INodeMap

2022-09-19 02:48:58,722 INFO util.GSet: VM type = 64-bit

2022-09-19 02:48:58,722 INFO util.GSet: 1.0% max memory 928 MB = 9.3 MB

2022-09-19 02:48:58,722 INFO util.GSet: capacity = 2^20 = 1048576 entries

2022-09-19 02:48:58,733 INFO namenode.FSDirectory: ACLs enabled? true

2022-09-19 02:48:58,733 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

2022-09-19 02:48:58,733 INFO namenode.FSDirectory: XAttrs enabled? true

2022-09-19 02:48:58,733 INFO namenode.NameNode: Caching file names occurring more than 10 times

2022-09-19 02:48:58,737 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2022-09-19 02:48:58,739 INFO snapshot.SnapshotManager: SkipList is disabled

2022-09-19 02:48:58,742 INFO util.GSet: Computing capacity for map cachedBlocks

2022-09-19 02:48:58,742 INFO util.GSet: VM type = 64-bit

2022-09-19 02:48:58,742 INFO util.GSet: 0.25% max memory 928 MB = 2.3 MB

2022-09-19 02:48:58,742 INFO util.GSet: capacity = 2^18 = 262144 entries

2022-09-19 02:48:58,764 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2022-09-19 02:48:58,764 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2022-09-19 02:48:58,764 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2022-09-19 02:48:58,768 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2022-09-19 02:48:58,768 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2022-09-19 02:48:58,769 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2022-09-19 02:48:58,773 INFO util.GSet: VM type = 64-bit

2022-09-19 02:48:58,773 INFO util.GSet: 0.029999999329447746% max memory 928 MB = 285.1 KB

2022-09-19 02:48:58,773 INFO util.GSet: capacity = 2^15 = 32768 entries

2022-09-19 02:48:58,799 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1800723560-192.168.21.11-1663570138789

2022-09-19 02:48:58,821 INFO common.Storage: Storage directory /tmp/hadoop-root/dfs/name has been successfully formatted.

2022-09-19 02:48:58,868 INFO namenode.FSImageFormatProtobuf: Saving image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

2022-09-19 02:48:58,987 INFO namenode.FSImageFormatProtobuf: Image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 399 bytes saved in 0 seconds .

2022-09-19 02:48:59,007 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2022-09-19 02:48:59,025 INFO namenode.FSNamesystem: Stopping services started for active state

2022-09-19 02:48:59,025 INFO namenode.FSNamesystem: Stopping services started for standby state

2022-09-19 02:48:59,037 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2022-09-19 02:48:59,038 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at pig1/192.168.21.11

************************************************************/

If the format succeeds, the dfs/name subdirectory will have been created under hadoop.tmp.dir.
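The check described above can be scripted. This is only a minimal sketch: `is_formatted` is a hypothetical helper name, and it assumes you pass it the `<hadoop.tmp.dir>/dfs/name` directory (`/tmp/hadoop-root/dfs/name` in the log above). It relies on the fact that a formatted NameNode directory contains a `current/VERSION` file.

```shell
#!/bin/sh
# Sketch: report whether a NameNode storage directory looks formatted.
# is_formatted is a hypothetical helper, not part of Hadoop.
# Assumption: $1 is <hadoop.tmp.dir>/dfs/name.
is_formatted() {
    name_dir="$1"
    # A formatted NameNode directory holds current/VERSION next to the fsimage.
    [ -f "$name_dir/current/VERSION" ]
}

# Example call (path taken from the format log above):
#   is_formatted /tmp/hadoop-root/dfs/name && echo "NameNode directory is formatted"
```

The function only inspects the file system, so it can be run before any daemon has been started.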

② Start the NameNode, Secondary NameNode, and DataNode

Before starting anything, check which Java processes are running on each node:

# First, the services on pig1

[root@pig1 hadoop]# jps

12009 Jps

# On pig2

[root@pig2 hadoop]# jps

9976 Jps

# On pig3

[root@pig3 ~]# jps

9322 Jps

On pig1, start HDFS:

[root@pig1 hadoop]# sbin/start-dfs.sh

Starting namenodes on [pig1]

Starting datanodes

pig5: WARNING: /root/hadoop/logs does not exist. Creating.

pig3: WARNING: /root/hadoop/logs does not exist. Creating.

pig4: WARNING: /root/hadoop/logs does not exist. Creating.

Starting secondary namenodes [pig2]

pig2: WARNING: /root/hadoop/logs does not exist. Creating.

Now check the Java processes again:

[root@pig1 hadoop]# jps

12870 Jps

12476 NameNode

[root@pig2 hadoop]# jps

10096 SecondaryNameNode

10162 Jps

[root@pig3 ~]# jps

9441 DataNode

9518 Jps

As shown, all three HDFS-related daemons have started. If you would rather not use start-dfs.sh, you can run the hdfs command directly on the corresponding nodes:

pig1: $HADOOP_HOME/bin/hdfs --daemon start namenode

pig2: $HADOOP_HOME/bin/hdfs --daemon start secondarynamenode

pig3-5: $HADOOP_HOME/bin/hdfs --daemon start datanode

Honestly, I find this approach easier to follow :)
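To repeat the jps check above without reading each node's output by eye, something like the following sketch could help. `has_daemon` is a hypothetical helper, not a Hadoop tool. It reads jps output on stdin and matches the class name exactly, so NameNode is not confused with SecondaryNameNode.

```shell
#!/bin/sh
# Sketch: succeed if the given daemon name appears in jps output on stdin.
# has_daemon is a hypothetical helper, not part of Hadoop.
has_daemon() {
    # jps prints "<pid> <MainClass>"; compare the second column exactly.
    awk -v want="$1" '$2 == want { found = 1 } END { exit found ? 0 : 1 }'
}

# Example on a live node:
#   jps | has_daemon NameNode && echo "NameNode is up"
# Example for a remote node, assuming passwordless ssh as root is set up:
#   ssh root@pig2 jps | has_daemon SecondaryNameNode && echo "pig2 OK"
```

Reading from stdin keeps the helper independent of where jps actually runs, which is why the same function serves both the local and the ssh case.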

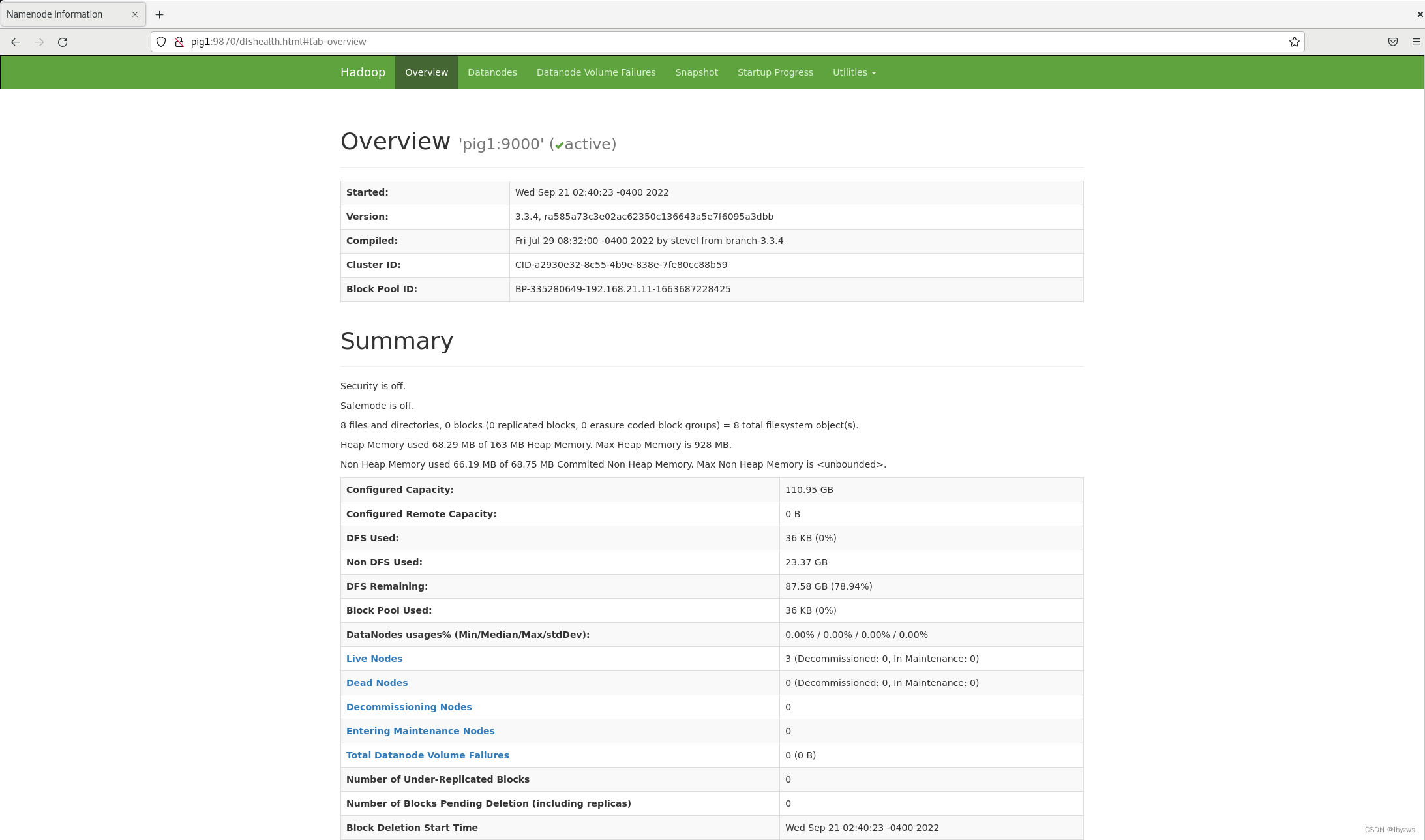

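Once startup completes, enter pig1:9870 in a browser to reach the NameNode web UI. Of course, if name resolution for pig1 is not set up on the client machine, this may only work from pig1 itself. Reportedly, if the following property is not set in hdfs-site.xml, DNS problems can also prevent the NameNode from finding its DataNodes. I have not run into this yet, so I am just making a note of it here.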

<property>

<name>dfs.namenode.datanode.registration.ip-hostname-check</name>

<value>false</value>

</property>
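To see whether a given hdfs-site.xml already carries this property, a rough sketch like the one below may be enough. `get_prop` is a hypothetical helper and makes a strong assumption: the file uses the usual Hadoop layout, where each `<name>` element is followed by its `<value>` element. It is not a real XML parser.

```shell
#!/bin/sh
# Sketch: print the value of one property from a Hadoop *-site.xml file.
# get_prop is a hypothetical helper; it assumes <name>KEY</name> is followed
# by <value>...</value>, on the same line or a later one.
get_prop() {
    # $1 = config file, $2 = property name
    awk -v key="$2" '
        index($0, "<name>" key "</name>") { hit = 1 }
        hit && match($0, /<value>[^<]*<\/value>/) {
            # Strip the 7-character <value> and the 8-character </value>.
            print substr($0, RSTART + 7, RLENGTH - 15)
            exit
        }
    ' "$1"
}

# Example call (path assumes the /root/hadoop layout used in this post):
#   get_prop /root/hadoop/etc/hadoop/hdfs-site.xml \
#       dfs.namenode.datanode.registration.ip-hostname-check
```

An empty result means the property is not set in that file. On a node with Hadoop installed, `bin/hdfs getconf -confKey <name>` should report the effective value, defaults included.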

③ Start YARN

Remember to run the following on the node intended to be the ResourceManager (pig2):

./sbin/start-yarn.sh

You can also start the daemons directly with the yarn command:

pig2: $HADOOP_HOME/bin/yarn --daemon start resourcemanager

pig3-5: $HADOOP_HOME/bin/yarn --daemon start nodemanager

④ Start the history server

The history server and the web proxy do not seem to be started by the batch startup scripts, or perhaps I missed some configuration. No matter: following the official guide, they can be started directly from the command line.

Again on pig2, run:

[root@pig2 hadoop]# bin/mapred --daemon start historyserver

[root@pig2 hadoop]# jps

6867 Jps

5477 SecondaryNameNode

6172 ResourceManager

6830 JobHistoryServer

⑤ Start the Web Proxy Server

Start the web proxy on pig2:

[root@pig2 hadoop]# bin/yarn --daemon start proxyserver

[root@pig2 hadoop]# jps

5477 SecondaryNameNode

7019 Jps

6172 ResourceManager

6988 WebAppProxyServer

[root@pig2 hadoop]#

⑥ Fully manual startup

As mentioned above, being something of a perfectionist, I actually prefer starting everything by hand. I only pass the --daemon option, and so far that has not caused any problems. The batch startup scripts also use options such as --workers, --config, and --hostname, which probably relate to remote execution, execution mode, and configuration files. Since nothing has gone wrong yet, I have not dug into them.

HDFS-related

bin/hdfs --daemon start namenode

bin/hdfs --daemon start secondarynamenode

bin/hdfs --daemon start datanode

YARN-related

bin/yarn --daemon start resourcemanager

bin/yarn --daemon start nodemanager

bin/yarn --daemon start proxyserver

MapReduce-related

bin/mapred --daemon start historyserver
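The manual commands above can be tied to the node roles used in this post with a small sketch like the following. `start_cmds` is a hypothetical helper. The role layout (pig1 as NameNode, pig2 as SecondaryNameNode, ResourceManager, web proxy and history server, pig3 to pig5 as DataNode and NodeManager) is taken from the jps output and startup logs shown earlier. The function only prints commands and runs nothing itself.

```shell
#!/bin/sh
# Sketch: print the manual start commands for one node of this cluster.
# start_cmds is a hypothetical helper; the host-to-role mapping mirrors the
# layout used in this post and must be adapted for any other cluster.
start_cmds() {
    case "$1" in
        pig1)
            echo "bin/hdfs --daemon start namenode" ;;
        pig2)
            echo "bin/hdfs --daemon start secondarynamenode"
            echo "bin/yarn --daemon start resourcemanager"
            echo "bin/yarn --daemon start proxyserver"
            echo "bin/mapred --daemon start historyserver" ;;
        pig3|pig4|pig5)
            echo "bin/hdfs --daemon start datanode"
            echo "bin/yarn --daemon start nodemanager" ;;
        *)
            echo "unknown node: $1" >&2
            return 1 ;;
    esac
}

# Example: review what would be run on pig2.
#   start_cmds pig2
```

To actually execute the commands, one option is to run `start_cmds "$(hostname -s)" | sh` from the Hadoop directory on each node, assuming the short hostname matches the names above.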

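4. Running the Grep example

Well, after a great deal of effort, we can finally run the Grep example in cluster mode.

(1) Prepare the input files

As before, we copy the xml files, but this time the hdfs command is needed to copy the local files into HDFS.

First create the input directory in HDFS, then put the local files into it. The operation feels much like FTP.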

[root@pig1 hadoop]# bin/hdfs dfs -mkdir /users/input

[root@pig1 hadoop]# bin/hdfs dfs -put etc/hadoop/*.xml /users/input

[root@pig1 hadoop]# bin/hdfs dfs -ls /users/input

Found 10 items