
1. Introduction

In "Android binder study notes 2 - Obtaining ServiceManager", defaultServiceManager->ProcessState::getContextObject calls IPCThreadState::self()->transact to probe whether binder is ready. This note analyzes that exchange with servicemanager.

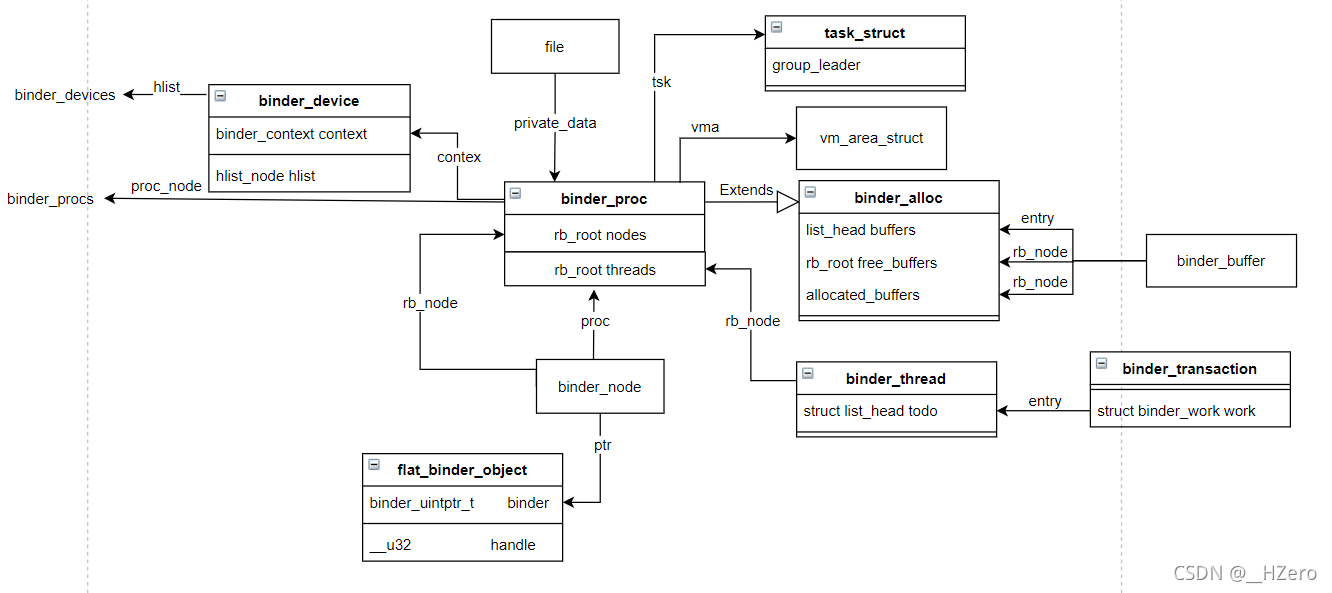

2. Binder domain model

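binder_device: represents one binder device and is linked into the global binder_devices list.

binder_proc: created in binder_open and linked into the global binder_procs list. Each binder_proc is associated with one process, normally the process that opened the device; when servicemanager opens the device, for example, the binder_proc created in binder_open is the one associated with the servicemanager process.

binder_thread: linked into its binder_proc's red-black tree through rb_node.

binder_alloc: embedded in binder_proc. It manages one mmap'ed memory region; the allocated_buffers red-black tree tracks the buffers that are in use, and the free_buffers red-black tree tracks the free buffers.

binder_proc->tsk: describes the thread-group leader, i.e. the main thread of the process.

binder_node: linked into its binder_proc's red-black tree through rb_node.

binder_transaction: a binder transaction. It is linked into binder_thread->todo through binder_work->entry.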
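The containment relationships listed above can be pictured with a small user-space model. This is only a sketch under stated assumptions: every `Model*` type and `enqueue_on_thread` are hypothetical names, `std::map` and `std::list` stand in for the kernel's red-black trees and list_head links, and almost all real fields are omitted.

```cpp
#include <cassert>
#include <cstdint>
#include <list>
#include <map>

// Hypothetical, heavily reduced stand-ins for the kernel structures.
// The real driver uses rb_node/list_head links and many more fields.
struct ModelWork { int type = 0; };

struct ModelTransaction {
    ModelWork work;        // embedded work item; this is what gets queued
    uint32_t code = 0;
};

struct ModelThread {
    int pid = 0;
    std::list<ModelWork*> todo;            // models binder_thread->todo
};

struct ModelNode { uint64_t ptr = 0; };

struct ModelProc {
    int pid = 0;
    std::map<int, ModelThread> threads;    // models the threads red-black tree
    std::map<uint64_t, ModelNode> nodes;   // models the nodes red-black tree
    std::list<ModelWork*> todo;            // process-wide todo list
};

// Queue a transaction on a thread by linking its embedded work item.
inline void enqueue_on_thread(ModelThread& thread, ModelTransaction& t) {
    thread.todo.push_back(&t.work);
}
```

The point of the model is that a transaction is never queued directly: only its embedded work item is linked into a todo list, and the owner is recovered from that item later.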

3. IPCThreadState::self()->transact

As noted in section 1, defaultServiceManager->ProcessState::getContextObject calls IPCThreadState::self()->transact to probe whether binder is ready. The call tree below follows that request to servicemanager.

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

|--flags |= TF_ACCEPT_FDS;

| //here handle is 0, code is PING_TRANSACTION, and reply is nullptr

|--err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, nullptr);

|--if (err != NO_ERROR)

| if (reply) reply->setError(err);

| return (mLastError = err);

\--if ((flags & TF_ONE_WAY) == 0)

if (reply) {

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

|- -writeTransactionData

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

|--binder_transaction_data tr;

| //a BpBinder (proxy) is addressed by handle; a BBinder (local object) is addressed by ptr

| tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

| tr.target.handle = handle;

| tr.code = code;

| tr.flags = binderFlags;

| //cookie would carry the BBinder pointer; it is 0 here

| tr.cookie = 0;

| tr.sender_pid = 0;

| tr.sender_euid = 0;

|--const status_t err = data.errorCheck();

|--if (err == NO_ERROR)

| // data refers to the whole data area

| //size in bytes of the parcel data carried by this transfer

| tr.data_size = data.ipcDataSize();

| //data.ptr.buffer is the start address of the data area handed to the binder driver

| tr.data.ptr.buffer = data.ipcData();

| //size of the offsets array for the IPC objects being passed; dividing it by sizeof(binder_size_t) gives the number of binder objects

| tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);

| // data.ptr.offsets points to the array holding the offset of each IPC object within the data area

| tr.data.ptr.offsets = data.ipcObjects();

| else if (statusBuffer)

| tr.flags |= TF_STATUS_CODE;

| *statusBuffer = err;

| tr.data_size = sizeof(status_t);

| tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);

| tr.offsets_size = 0;

| tr.data.ptr.offsets = 0;

| else

| return (mLastError = err);

| //write cmd and tr into mOut

\--mOut.writeInt32(cmd);

mOut.write(&tr, sizeof(tr));

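Here cmd is BC_TRANSACTION, handle is 0, and code is PING_TRANSACTION.

The binder_transaction_data structure assembles the transaction data sent to the binder driver. Its main content is the target, given either as a handle or as a binder_uintptr_t pointer. Finally the command code BC_TRANSACTION and the binder_transaction_data are written to mOut, which is a member of IPCThreadState.

For the definition of binder_transaction_data, see: http://gityuan.com/2015/11/01/binder-driver/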
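The layout that ends up in mOut (a 32-bit command word followed by the raw structure) can be illustrated with a byte vector. This is a minimal sketch, not the libbinder code: `SketchTransactionData`, `kSketchBcTransaction`, `append`, `write_transaction` and `read_cmd` are hypothetical names, the structure is cut down to a few fields, and the command value is a placeholder rather than the real BC_TRANSACTION encoding.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, reduced version of binder_transaction_data.
struct SketchTransactionData {
    uint32_t handle;
    uint32_t code;
    uint32_t flags;
    uint64_t data_size;
    uint64_t buffer;   // user-space address of the payload
};

// Placeholder value; the real BC_TRANSACTION is an ioctl-style encoded
// constant from the binder UAPI header.
constexpr uint32_t kSketchBcTransaction = 1;

inline void append(std::vector<uint8_t>& out, const void* p, size_t n) {
    const uint8_t* bytes = static_cast<const uint8_t*>(p);
    out.insert(out.end(), bytes, bytes + n);
}

// Mirrors the tail of writeTransactionData: command word, then the struct.
inline void write_transaction(std::vector<uint8_t>& out, uint32_t cmd,
                              const SketchTransactionData& tr) {
    append(out, &cmd, sizeof(cmd));
    append(out, &tr, sizeof(tr));
}

// Reads back the leading command word, as the driver does first.
inline uint32_t read_cmd(const std::vector<uint8_t>& out) {
    assert(out.size() >= sizeof(uint32_t));
    uint32_t cmd = 0;
    std::memcpy(&cmd, out.data(), sizeof(cmd));
    return cmd;
}
```

Note that only the address of the payload travels inside the structure; the payload bytes themselves stay in the Parcel until the driver copies them.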

|- -IPCThreadState::waitForResponse

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

|--uint32_t cmd;

| int32_t err;

|--while (1) {

| if ((err=talkWithDriver()) < NO_ERROR) break;

| err = mIn.errorCheck();

| if (err < NO_ERROR) break;

| if (mIn.dataAvail() == 0) continue;

|

| cmd = (uint32_t)mIn.readInt32();

| switch (cmd) {

| case BR_TRANSACTION_COMPLETE:

| if (!reply && !acquireResult) goto finish;

| break;

| case BR_REPLY:

| {

| binder_transaction_data tr;

| err = mIn.read(&tr, sizeof(tr));

| if (reply) {

| if ((tr.flags & TF_STATUS_CODE) == 0) {

| reply->ipcSetDataReference(

| reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

| tr.data_size,

| reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

| tr.offsets_size/sizeof(binder_size_t),

| freeBuffer, this);

| } else {

| err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);

| freeBuffer(nullptr,

| reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

| tr.data_size,

| reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

| tr.offsets_size/sizeof(binder_size_t), this);

| }

| } else {

| freeBuffer(nullptr,

| reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

| tr.data_size,

| reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

| tr.offsets_size/sizeof(binder_size_t), this);

| continue;

| }

| }

| goto finish;

|

| default:

| err = executeCommand(cmd);

| if (err != NO_ERROR) goto finish;

| break;

| }

| }

finish:

return err;

}
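The exit conditions of this loop are easy to misread, so here is a small model of them. It is a sketch under assumptions: `Br` and `stop_index` are hypothetical names, the incoming commands are given as a plain list instead of being read from mIn, and acquireResult is assumed to be nullptr.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical tags for the return commands that matter to the loop.
enum class Br { Noop, TransactionComplete, Reply, Other };

// Returns the index of the command at which waitForResponse would leave
// its loop, or -1 if the input runs out first. wantsReply models
// `reply != nullptr`; acquireResult is assumed to be nullptr.
inline int stop_index(const std::vector<Br>& incoming, bool wantsReply) {
    for (size_t i = 0; i < incoming.size(); ++i) {
        switch (incoming[i]) {
        case Br::TransactionComplete:
            // A caller that expects no reply is done as soon as the
            // driver acknowledges the transaction.
            if (!wantsReply) return static_cast<int>(i);
            break;
        case Br::Reply:
            // Without a reply Parcel the buffer is freed and the loop
            // continues; otherwise the reply ends the wait.
            if (wantsReply) return static_cast<int>(i);
            break;
        default:
            break;  // the real code dispatches these to executeCommand
        }
    }
    return -1;
}
```

For the ping in this note the caller is synchronous, so transact passes a fakeReply and the loop runs until BR_REPLY arrives.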

|- - -talkWithDriver

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

binder_write_read bwr;

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

//mOut holds the cmd plus the binder_transaction_data (target handle, code, and so on)

bwr.write_buffer = (uintptr_t)mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

//describe the receive buffer; data returned by the driver is written into mIn

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

if (mProcess->mDriverFD < 0) {

err = -EBADF;

}

} while (err == -EINTR);

return err;

}

binder_write_read is the structure used to exchange data with the binder device. The ioctl on mDriverFD is where user space actually reads from and writes to the binder driver.
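How talkWithDriver decides what to put into the write and read halves of binder_write_read can be reduced to a pure function. The following is a sketch of that decision only; `SketchSizes` and `plan_io` are hypothetical names, and the Parcel accessors are replaced by plain integers.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical result type: the two size fields of binder_write_read.
struct SketchSizes {
    size_t write_size;
    size_t read_size;
};

// inPos/inSize model mIn.dataPosition()/mIn.dataSize(), outSize models
// mOut.dataSize(), inCapacity models mIn.dataCapacity().
inline SketchSizes plan_io(bool doReceive, size_t inPos, size_t inSize,
                           size_t outSize, size_t inCapacity) {
    // The read buffer is "empty" once everything in it has been consumed.
    const bool needRead = inPos >= inSize;
    SketchSizes s{};
    // Do not write while unread input remains and the caller wants to read.
    s.write_size = (!doReceive || needRead) ? outSize : 0;
    // Only ask the driver for data when the input buffer is drained.
    s.read_size = (doReceive && needRead) ? inCapacity : 0;
    return s;
}
```

When both sizes come out as zero, talkWithDriver returns without calling ioctl at all, which is the "nothing to do" early return in the listing.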

ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr)

|--binder_ioctl(filp, cmd, arg)

| //proc is the binder_proc of the vold process, created in binder_open when ProcessState::self() opens the binder device

|--struct binder_proc *proc = filp->private_data

| struct binder_thread *thread;

| unsigned int size = _IOC_SIZE(cmd);

| //pointer to the user-space binder_write_read, whose write_buffer refers to mOut.data()

| void __user *ubuf = (void __user *)arg;

|--wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2)

| //look up the binder_thread for the current thread's pid in the binder_proc red-black tree; if none is found, create one and insert it

|--thread = binder_get_thread(proc);

| |--thread = binder_get_thread_ilocked(proc, NULL);

| \--if (!thread)

| new_thread = kzalloc(sizeof(*thread), GFP_KERNEL);

| //insert into the binder_proc red-black tree

| binder_get_thread_ilocked(proc, new_thread)

\--switch (cmd) {

case BINDER_WRITE_READ:

//arg carries the user-space data

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

break;

...

}
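The lookup in binder_get_thread is a get-or-create keyed by the caller's pid. A minimal sketch of that pattern follows, assuming a `std::map` in place of the kernel red-black tree; `LookupThread`, `LookupProc` and `get_thread` are hypothetical names, and the locking and allocation-outside-the-lock details of the real function are left out.

```cpp
#include <cassert>
#include <map>

// Hypothetical stand-ins; the kernel keeps these in a red-black tree
// ordered by pid and protects the tree with the proc's inner lock.
struct LookupThread {
    int pid;
};

struct LookupProc {
    std::map<int, LookupThread> threads;
};

// Returns the entry for `pid`, creating it on first use.
inline LookupThread& get_thread(LookupProc& proc, int pid) {
    auto it = proc.threads.find(pid);
    if (it == proc.threads.end()) {
        // First ioctl from this thread: create and insert its record.
        it = proc.threads.emplace(pid, LookupThread{pid}).first;
    }
    return it->second;
}
```

Every later ioctl from the same thread finds the same record, which is how per-thread state such as the todo list and the transaction stack persists across calls.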

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

| //binder_proc of the vold process

|--struct binder_proc *proc = filp->private_data;

| unsigned int size = _IOC_SIZE(cmd);

| void __user *ubuf = (void __user *)arg;

| struct binder_write_read bwr;

|--copy_from_user(&bwr, ubuf, sizeof(bwr))

|--if (bwr.write_size > 0)

| ret = binder_thread_write(proc, thread,

| bwr.write_buffer,

| bwr.write_size,

| &bwr.write_consumed);

|--if (bwr.read_size > 0)

| ret = binder_thread_read(proc, thread, bwr.read_buffer,

| bwr.read_size,

| &bwr.read_consumed,

| filp->f_flags & O_NONBLOCK);

| if (!binder_worklist_empty_ilocked(&proc->todo))

| binder_wakeup_proc_ilocked(proc);

|--copy_to_user(ubuf, &bwr, sizeof(bwr))

|- - - -binder_thread_write

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

struct binder_context *context = proc->context;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error.cmd == BR_OK) {

int ret;

//fetch the command cmd

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

trace_binder_command(cmd);

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

atomic_inc(&binder_stats.bc[_IOC_NR(cmd)]);

atomic_inc(&proc->stats.bc[_IOC_NR(cmd)]);

atomic_inc(&thread->stats.bc[_IOC_NR(cmd)]);

}

switch (cmd) {

...

case BC_TRANSACTION:

case BC_REPLY: {

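//this structure has the same layout in user space and kernel space; it carries the target handle, the code, and the user buffer pointers

struct binder_transaction_data tr;

//copy the binder_transaction_data header from user space; the payload it points to is copied later, in binder_transaction()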

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr,

cmd == BC_REPLY, 0);

break;

}

...

*consumed = ptr - buffer;

}

return 0;

}

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply,

binder_size_t extra_buffers_size)

|--int ret;

| struct binder_transaction *t;

| struct binder_work *w;

| struct binder_work *tcomplete;

| binder_size_t buffer_offset = 0;

| binder_size_t off_start_offset, off_end_offset;

| binder_size_t off_min;

| binder_size_t sg_buf_offset, sg_buf_end_offset;

| struct binder_proc *target_proc = NULL;

| struct binder_thread *target_thread = NULL;

| struct binder_node *target_node = NULL;

| struct binder_transaction *in_reply_to = NULL;

| struct binder_transaction_log_entry *e;

| uint32_t return_error = 0;

| uint32_t return_error_param = 0;

| uint32_t return_error_line = 0;

| binder_size_t last_fixup_obj_off = 0;

| binder_size_t last_fixup_min_off = 0;

| struct binder_context *context = proc->context;

| int t_debug_id = atomic_inc_return(&binder_last_id);

| char *secctx = NULL;

| u32 secctx_sz = 0;

|--e = binder_transaction_log_add(&binder_transaction_log);

| e->debug_id = t_debug_id;

| e->call_type = reply ? 2 : !!(tr->flags & TF_ONE_WAY);

| //pid of the current process, i.e. the vold process

| e->from_proc = proc->pid;

| //pid of the current thread

| e->from_thread = thread->pid;

| //handle of the target

| e->target_handle = tr->target.handle;

| e->data_size = tr->data_size;

| e->offsets_size = tr->offsets_size;

| e->context_name = proc->context->name;

|--if (reply)

| ...

| else

| if (tr->target.handle)

| struct binder_ref *ref;

| // look up the binder_ref for the handle, then the binder_node for that ref

| ref = binder_get_ref_olocked(proc, tr->target.handle,true);

| //get target_proc and the binder_node from ref

| target_node = binder_get_node_refs_for_txn(ref->node, &target_proc,&return_error);

| else

| //handle 0 denotes the servicemanager process

| target_node = context->binder_context_mgr_node;

| target_node = binder_get_node_refs_for_txn(target_node, &target_proc,&return_error);

| w = list_first_entry_or_null(&thread->todo,struct binder_work, entry);

| if (!(tr->flags & TF_ONE_WAY) && thread->transaction_stack)

| struct binder_transaction *tmp = thread->transaction_stack;

| while (tmp)

| struct binder_thread *from = tmp->from;

| if (from && from->proc == target_proc)

| atomic_inc(&from->tmp_ref);

| target_thread = from;

| break;

| tmp = tmp->from_parent;

|--if (target_thread) e->to_thread = target_thread->pid;

|--e->to_proc = target_proc->pid;

| /* TODO: reuse incoming transaction for reply */

|--t = kzalloc(sizeof(*t), GFP_KERNEL);

|--tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

|--if (!reply && !(tr->flags & TF_ONE_WAY))

| t->from = thread;

| else

| t->from = NULL;

| t->sender_euid = task_euid(proc->tsk);

| t->to_proc = target_proc;

| t->to_thread = target_thread;

| t->code = tr->code;

| t->flags = tr->flags;

| if (!(t->flags & TF_ONE_WAY) &&

| binder_supported_policy(current->policy))

| /* Inherit supported policies for synchronous transactions */

| t->priority.sched_policy = current->policy;

| t->priority.prio = current->normal_prio;

| else

| /* Otherwise, fall back to the default priority */

| t->priority = target_proc->default_priority;

| //allocate the buffer from the target proc's alloc

| t->buffer = binder_alloc_new_buf(&target_proc->alloc, tr->data_size,

| tr->offsets_size, extra_buffers_size,

| !reply && (t->flags & TF_ONE_WAY), current->tgid);

| //copy tr->data_size bytes from user-space tr->data.ptr.buffer into t->buffer

|--binder_alloc_copy_user_to_buffer(&target_proc->alloc,

| t->buffer, 0,

| (const void __user *)(uintptr_t)tr->data.ptr.buffer,

| tr->data_size))

|--off_start_offset = ALIGN(tr->data_size, sizeof(void *));

| buffer_offset = off_start_offset;

| off_end_offset = off_start_offset + tr->offsets_size;

| sg_buf_offset = ALIGN(off_end_offset, sizeof(void *));

| sg_buf_end_offset = sg_buf_offset + extra_buffers_size -

| ALIGN(secctx_sz, sizeof(u64));

|--for (buffer_offset = off_start_offset; buffer_offset < off_end_offset;

| buffer_offset += sizeof(binder_size_t))

| ...

|--if (reply) {

| ...

| } else if (!(t->flags & TF_ONE_WAY)) {

| BUG_ON(t->buffer->async_transaction != 0);

| binder_inner_proc_lock(proc);

| /*

| * Defer the TRANSACTION_COMPLETE, so we don't return to

| * userspace immediately; this allows the target process to

| * immediately start processing this transaction, reducing

| * latency. We will then return the TRANSACTION_COMPLETE when

| * the target replies (or there is an error).

| */

| binder_enqueue_deferred_thread_work_ilocked(thread, tcomplete);

| t->need_reply = 1;

| t->from_parent = thread->transaction_stack;

| thread->transaction_stack = t;

| binder_inner_proc_unlock(proc);

| if (!binder_proc_transaction(t, target_proc, target_thread)) {

| binder_inner_proc_lock(proc);

| binder_pop_transaction_ilocked(thread, t);

| binder_inner_proc_unlock(proc);

| goto err_dead_proc_or_thread;

| }

| } else {

| BUG_ON(target_node == NULL);

| BUG_ON(t->buffer->async_transaction != 1);

| binder_enqueue_thread_work(thread, tcomplete);

| if (!binder_proc_transaction(t, target_proc, NULL))

| goto err_dead_proc_or_thread;

| }

|--if (target_thread)

| binder_thread_dec_tmpref(target_thread);

|--binder_proc_dec_tmpref(target_proc);

|--if (target_node)

| binder_dec_node_tmpref(target_node);

| /*

| * write barrier to synchronize with initialization

| * of log entry

| */

|--smp_wmb();

|--WRITE_ONCE(e->debug_id_done, t_debug_id);

\--return;
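The first decision in binder_transaction, how a handle is turned into a target node, can be sketched as follows. The names `TargetNode`, `TargetContext`, `TargetProc` and `resolve_target` are hypothetical, a `std::map` stands in for the per-process binder_ref table, and reference counting and error reporting are omitted.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

// Hypothetical stand-ins for binder_node, binder_context and binder_proc.
struct TargetNode {
    int owner_pid;   // process that hosts the binder object
};

struct TargetContext {
    TargetNode* context_mgr_node;   // node registered by servicemanager
};

struct TargetProc {
    std::map<uint32_t, TargetNode*> refs;   // handle -> node, per process
    TargetContext* context;
};

// Handle 0 always means the context manager; any other handle is looked
// up in the sender's own reference table. Returns nullptr if unknown.
inline TargetNode* resolve_target(const TargetProc& sender, uint32_t handle) {
    if (handle == 0) {
        return sender.context->context_mgr_node;
    }
    auto it = sender.refs.find(handle);
    return it == sender.refs.end() ? nullptr : it->second;
}
```

This is why the ping in this note needs no prior setup: handle 0 is resolved through the shared context rather than through a reference the client would first have to obtain.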

static bool binder_proc_transaction(struct binder_transaction *t,

struct binder_proc *proc,

struct binder_thread *thread)

{

struct binder_node *node = t->buffer->target_node;

struct binder_priority node_prio;

bool oneway = !!(t->flags & TF_ONE_WAY);

bool pending_async = false;

BUG_ON(!node);

binder_node_lock(node);

node_prio.prio = node->min_priority;

node_prio.sched_policy = node->sched_policy;

if (oneway) {

BUG_ON(thread);

if (node->has_async_transaction) {

pending_async = true;

} else {

node->has_async_transaction = true;

}

}

binder_inner_proc_lock(proc);

if (proc->is_dead || (thread && thread->is_dead)) {

binder_inner_proc_unlock(proc);

binder_node_unlock(node);

return false;

}

//pick a waiting binder_thread from the target binder_proc

if (!thread && !pending_async)

thread = binder_select_thread_ilocked(proc);

if (thread) {

binder_transaction_priority(thread->task, t, node_prio,

node->inherit_rt);

//enqueue the binder_work embedded in the binder_transaction onto thread->todo

binder_enqueue_thread_work_ilocked(thread, &t->work);

} else if (!pending_async) {

binder_enqueue_work_ilocked(&t->work, &proc->todo);

} else {

binder_enqueue_work_ilocked(&t->work, &node->async_todo);

}

if (!pending_async)

binder_wakeup_thread_ilocked(proc, thread, !oneway /* sync */);

binder_inner_proc_unlock(proc);

binder_node_unlock(node);

return true;

}
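The queue selection in binder_proc_transaction depends on only three facts, which the sketch below isolates. `RouteNode`, `Queue`, `note_async` and `choose_queue` are hypothetical names; locking, priority handling and the dead-process check are left out.

```cpp
#include <cassert>

// Hypothetical stand-in for the per-node async bookkeeping.
struct RouteNode {
    bool has_async_transaction = false;
};

enum class Queue { ThreadTodo, ProcTodo, NodeAsyncTodo };

// Models the `if (oneway)` block: returns pending_async and marks the
// node busy when this is the first outstanding one-way call.
inline bool note_async(RouteNode& node, bool oneway) {
    if (!oneway) return false;
    if (node.has_async_transaction) return true;
    node.has_async_transaction = true;
    return false;
}

// threadAvailable: a target thread was supplied or could be selected.
inline Queue choose_queue(bool threadAvailable, bool pendingAsync) {
    // A one-way call behind another one-way call on the same node is
    // parked on the node and is not handed to any thread yet.
    if (pendingAsync) return Queue::NodeAsyncTodo;
    if (threadAvailable) return Queue::ThreadTodo;
    return Queue::ProcTodo;
}
```

For the synchronous ping traced here, pending_async is always false, so the work lands on a waiting servicemanager thread if there is one and on proc->todo otherwise.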

static void binder_wakeup_thread_ilocked(struct binder_proc *proc,

struct binder_thread *thread,

bool sync)

{

assert_spin_locked(&proc->inner_lock);

if (thread) {

#ifdef CONFIG_SCHED_WALT

if (thread->task && current->signal &&

(current->signal->oom_score_adj == 0) &&

(current->prio < DEFAULT_PRIO))

thread->task->low_latency = true;

#endif

if (sync)

wake_up_interruptible_sync(&thread->wait);

else

wake_up_interruptible(&thread->wait);

return;

}

/* Didn't find a thread waiting for proc work; this can happen

* in two scenarios:

* 1. All threads are busy handling transactions

* In that case, one of those threads should call back into

* the kernel driver soon and pick up this work.

* 2. Threads are using the (e)poll interface, in which case

* they may be blocked on the waitqueue without having been

* added to waiting_threads. For this case, we just iterate

* over all threads not handling transaction work, and

* wake them all up. We wake all because we don't know whether

* a thread that called into (e)poll is handling non-binder

* work currently.

*/

binder_wakeup_poll_threads_ilocked(proc, sync);

}

What gets woken here is whatever is waiting on the thread->wait wait queue. So when were those waiters put on the queue?

The answer can be found below:

const struct file_operations binder_fops = {

.owner = THIS_MODULE,

.poll = binder_poll,

.unlocked_ioctl = binder_ioctl,

.compat_ioctl = binder_ioctl,

.mmap = binder_mmap,

.open = binder_open,

.flush = binder_flush,

.release = binder_release,

};

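binder_poll calls poll_wait(filp, &thread->wait, wait), which registers the polling thread on the thread->wait wait queue. A thread that blocks inside binder_thread_read also sleeps on thread->wait, through binder_wait_for_work.

So who issues the poll? Recall from "Android binder study notes 1 - Starting ServiceManager" that pollInner performs this poll.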

|- - - -binder_thread_read

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

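// Determine whether the current thread is available for process-wide work (both conditions below must hold)

// (1) the thread's transaction stack and todo queue are both empty

// (2) the thread's looper flags include BINDER_LOOPER_STATE_ENTERED or BINDER_LOOPER_STATE_REGISTERED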

wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);

// set the looper WAITING flag on the thread

thread->looper |= BINDER_LOOPER_STATE_WAITING;

if (wait_for_proc_work) {

// restore the current thread's priority

binder_restore_priority(current, proc->default_priority);

}

if (non_block) {

...

} else {

// sleep until work arrives; when wait_for_proc_work is true, process-wide work also counts

ret = binder_wait_for_work(thread, wait_for_proc_work);

}

// clear the looper WAITING flag on the thread

thread->looper &= ~BINDER_LOOPER_STATE_WAITING;

if (ret)

return ret;

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w = NULL;

struct list_head *list = NULL;

struct binder_transaction *t = NULL;

// the current thread's todo queue is not empty

if (!binder_worklist_empty_ilocked(&thread->todo))

list = &thread->todo;

// the process todo queue is not empty and wait_for_proc_work is true, so take proc->todo

else if (!binder_worklist_empty_ilocked(&proc->todo) && wait_for_proc_work)

list = &proc->todo;

else {

// nothing has been written yet (only BR_NOOP) and looper_need_return is false: retry

if (ptr - buffer == 4 && !thread->looper_need_return)

goto retry;

break;

}

// not enough room left in the read buffer: stop the loop

if (end - ptr < sizeof(tr) + 4) {

binder_inner_proc_unlock(proc);

break;

}

// take the first work item off the list

w = binder_dequeue_work_head_ilocked(list);

if (binder_worklist_empty_ilocked(&thread->todo))

thread->process_todo = false;

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

// recover t (a binder_transaction) from the pointer w (a binder_work) embedded in it

t = container_of(w, struct binder_transaction, work);

} break;

case BINDER_WORK_RETURN_ERROR: ...

case BINDER_WORK_TRANSACTION_COMPLETE: {

// write the BR_TRANSACTION_COMPLETE return command into the user-space buffer

cmd = BR_TRANSACTION_COMPLETE;

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

// free the completed work item

kfree(w);

} break;

case BINDER_WORK_NODE: ...

case BINDER_WORK_DEAD_BINDER:

case BINDER_WORK_DEAD_BINDER_AND_CLEAR:

case BINDER_WORK_CLEAR_DEATH_NOTIFICATION: ...

}

// no transaction to deliver: keep looping

if (!t)

continue;

if (t->buffer->target_node) { // the target binder node is non-NULL

struct binder_node *target_node = t->buffer->target_node;

struct binder_priority node_prio;

// fill in the local binder object of the target process

tr.target.ptr = target_node->ptr;

tr.cookie = target_node->cookie;

node_prio.sched_policy = target_node->sched_policy;

node_prio.prio = target_node->min_priority;

binder_transaction_priority(current, t, node_prio, target_node->inherit_rt);

// deliver the BR_TRANSACTION return command

cmd = BR_TRANSACTION;

} else { // the target binder node is NULL

tr.target.ptr = 0;

tr.cookie = 0;

// deliver the BR_REPLY return command

cmd = BR_REPLY;

}

tr.code = t->code;

tr.flags = t->flags;

tr.sender_euid = from_kuid(current_user_ns(), t->sender_euid);

t_from = binder_get_txn_from(t);

if (t_from) {

struct task_struct *sender = t_from->proc->tsk;

tr.sender_pid = task_tgid_nr_ns(sender, task_active_pid_ns(current));

} else {

tr.sender_pid = 0;

}

tr.data_size = t->buffer->data_size;

tr.offsets_size = t->buffer->offsets_size;

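// Point the data buffer and offsets array addresses in tr (binder_transaction_data)

// at the data buffer and offsets array that belong to t (binder_transaction).

// On the write path analyzed earlier (binder_thread_write), the driver allocated

// t's buffer from memory that is mapped into both kernel space and the receiving process's user space,

// which is what saves one data copy.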

tr.data.ptr.buffer = (binder_uintptr_t)((uintptr_t)t->buffer->data +

binder_alloc_get_user_buffer_offset(&proc->alloc));

tr.data.ptr.offsets = tr.data.ptr.buffer +

ALIGN(t->buffer->data_size, sizeof(void *));

// copy the return command (BR_TRANSACTION or BR_REPLY) into the user-space buffer supplied by the reading thread

if (put_user(cmd, (uint32_t __user *)ptr)) {

if (t_from)

binder_thread_dec_tmpref(t_from);

binder_cleanup_transaction(t, "put_user failed", BR_FAILED_REPLY);

return -EFAULT;

}

ptr += sizeof(uint32_t);

// copy the binder_transaction_data tr into the user-space buffer supplied by the reading thread

if (copy_to_user(ptr, &tr, sizeof(tr))) {

if (t_from)

binder_thread_dec_tmpref(t_from);

binder_cleanup_transaction(t, "copy_to_user failed", BR_FAILED_REPLY);

return -EFAULT;

}

ptr += sizeof(tr);

if (t_from)

binder_thread_dec_tmpref(t_from);

// allow the receiving thread to release this driver-allocated buffer from user space with the BC_FREE_BUFFER command

// the receiving thread here is the main thread of the servicemanager process

t->buffer->allow_user_free = 1;

if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) { // synchronous call

// push transaction t onto the receiving thread's transaction stack

binder_inner_proc_lock(thread->proc);

t->to_parent = thread->transaction_stack;

t->to_thread = thread;

thread->transaction_stack = t;

binder_inner_proc_unlock(thread->proc);

// for a synchronous call, t is kept until it has been handled

} else {

// for one-way calls and replies, t is no longer needed, so free it

binder_free_transaction(t);

}

break;

}

done:

// exit path; also reached when w->type is BINDER_WORK_DEAD_BINDER, BINDER_WORK_DEAD_BINDER_AND_CLEAR or BINDER_WORK_CLEAR_DEATH_NOTIFICATION

*consumed = ptr - buffer;

binder_inner_proc_lock(proc);

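//ask for a new thread when no spawn request is outstanding, no thread is waiting, the number of threads started on request is below the maximum (15 by default), and the looper state is REGISTERED or ENTERED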

if (proc->requested_threads == 0 &&

list_empty(&thread->proc->waiting_threads) &&

proc->requested_threads_started < proc->max_threads &&

(thread->looper & (BINDER_LOOPER_STATE_REGISTERED | BINDER_LOOPER_STATE_ENTERED)))

{

proc->requested_threads++;

binder_inner_proc_unlock(proc);

// send the BR_SPAWN_LOOPER return command to user space so that it creates a new thread

if (put_user(BR_SPAWN_LOOPER, (uint32_t __user *)buffer))

return -EFAULT;

} else

binder_inner_proc_unlock(proc);

return 0;

}
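The step `t = container_of(w, struct binder_transaction, work)` in the listing recovers the enclosing transaction from the work item that was queued. The sketch below shows the same pointer arithmetic in portable C++; `EmbeddedWork`, `OwningTransaction` and `transaction_of` are hypothetical names, and the struct is reduced to two members.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-ins for binder_work and binder_transaction.
struct EmbeddedWork {
    int type;
};

struct OwningTransaction {
    uint32_t code;
    EmbeddedWork work;   // the member that is linked into a todo list
};

// Equivalent of the kernel's container_of for this one member: step back
// from the member's address by its offset inside the enclosing struct.
// The caller must pass a pointer to the `work` member of a live
// OwningTransaction.
inline OwningTransaction* transaction_of(EmbeddedWork* w) {
    assert(w != nullptr);
    auto* raw = reinterpret_cast<unsigned char*>(w);
    return reinterpret_cast<OwningTransaction*>(
        raw - offsetof(OwningTransaction, work));
}
```

Embedding the work item this way lets one todo list carry several kinds of work without a separate allocation per entry; the `type` field tells the reader which enclosing structure to recover.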

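The BC_TRANSACTION path analyzed above adds the tcomplete object to the source thread's todo queue.

binder_thread_read then takes tcomplete off the source thread's todo queue; its type is BINDER_WORK_TRANSACTION_COMPLETE.

BR_TRANSACTION_COMPLETE is written into the user-space buffer mIn.

Once binder_thread_write and binder_thread_read have finished, control returns to talkWithDriver and then to waitForResponse.

The above is quoted from: http://mouxuejie.com/blog/2020-01-18/add-service/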

4. Summary

Having traced how a transaction is used to check whether serviceManager is ready, we can summarize the main flow as follows:

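- After it starts, serviceManager uses the poll system call to put itself on the wait queue (wait) of its own binder_thread under its binder_proc, and waits there for client requests;

- The client (vold) sends its request through transact. The command is BC_TRANSACTION, the code is PING_TRANSACTION, and the target handle is 0, which denotes the serviceManager process; the binder driver uses the handle to find the target binder_proc;

- From this information the client builds a binder_transaction_data structure and writes it to mOut, which belongs to the IPCThreadState of the vold process;

- talkWithDriver builds a binder_write_read that points at mOut and passes it to the binder driver through the ioctl system call with command code BINDER_WRITE_READ;

- When the vold process called defaultServiceManager, binder_open created its binder_proc and stored it in filp->private_data. binder_ioctl reads the same binder_proc back from filp->private_data and then finds (or creates) the binder_thread for the calling thread. The binder_write_read carries the user-space buffer that holds the request data;

- binder_thread_write then copies the binder_transaction_data header from user space with copy_from_user;

- binder_transaction assembles a binder_transaction. Using the handle passed from user space (0), it obtains the target process's binder_proc, i.e. ServiceManager's, allocates a buffer from that binder_proc's binder_alloc, and copies the payload from the sender's user-space buffer into it. Because that buffer is also mapped into the target's user space, this is the single payload copy that binder needs;

- binder_proc_transaction then selects a waiting binder_thread in the target binder_proc and wakes the thread sleeping on it, i.e. the serviceManager process, to handle the request;

- The driver returns BR_TRANSACTION_COMPLETE to the client process; for a synchronous call this is deferred until the target replies (or an error occurs);

- Subsequent communication follows the model shown in "Android binder study notes 0 - Overview".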

References

http://mouxuejie.com/blog/2020-01-18/add-service/

彻底理解Android Binder通信架构 (A thorough look at the Android Binder communication architecture)