What Service Manager is for

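Before going into detail, it is worth explaining why Service Manager has to be started and obtained at all. Recall the overall framework diagram from the earlier article "binder-框架认知" (Binder framework overview):

Before a client can talk to a server, it first has to establish a connection to that server. How does the client find the server and complete the connection? This is where Service Manager comes in: connections between clients and servers are set up through it. Service Manager can play this role for two reasons: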

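- The Binder handle of Service Manager is fixed at 0, so both the client side and the server side can always locate Service Manager.

- Service Manager keeps a record of the servers available for communication, so a client can ask Service Manager for the server it wants to talk to.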
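The two points above can be pictured with a small user-space model. This is only a sketch under stated assumptions: `toy_registry`, `toy_add_service()` and `toy_get_service()` are hypothetical names invented here, not Service Manager's real data structures, and handles come from a simple counter, whereas in real Binder the driver assigns handles per process.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define TOY_SM_HANDLE 0u   /* the fixed, well-known handle of Service Manager */
#define TOY_MAX_SERVICES 8

/* One registry entry: a service name and the handle used to reach it. */
struct toy_service {
    const char *name;
    unsigned int handle;
};

/* Hypothetical stand-in for the list of servers Service Manager records. */
struct toy_registry {
    struct toy_service entries[TOY_MAX_SERVICES];
    size_t count;
    unsigned int next_handle;   /* starts at 1 because 0 is reserved */
};

void toy_registry_init(struct toy_registry *r)
{
    assert(r != NULL);
    r->count = 0;
    r->next_handle = TOY_SM_HANDLE + 1;
}

/* Register a service; returns its handle, or -1 if the table is full. */
int toy_add_service(struct toy_registry *r, const char *name)
{
    assert(r != NULL && name != NULL);
    if (r->count == TOY_MAX_SERVICES)
        return -1;
    r->entries[r->count].name = name;
    r->entries[r->count].handle = r->next_handle;
    r->count++;
    return (int)r->next_handle++;
}

/* Look a service up by name; returns its handle, or -1 if it is unknown. */
int toy_get_service(const struct toy_registry *r, const char *name)
{
    assert(r != NULL && name != NULL);
    for (size_t i = 0; i < r->count; i++) {
        if (strcmp(r->entries[i].name, name) == 0)
            return (int)r->entries[i].handle;
    }
    return -1;
}
```

A caller would initialise the registry once, register servers with `toy_add_service()`, and resolve them by name with `toy_get_service()`. The only thing both sides need to know in advance is the reserved handle 0.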

Starting Service Manager

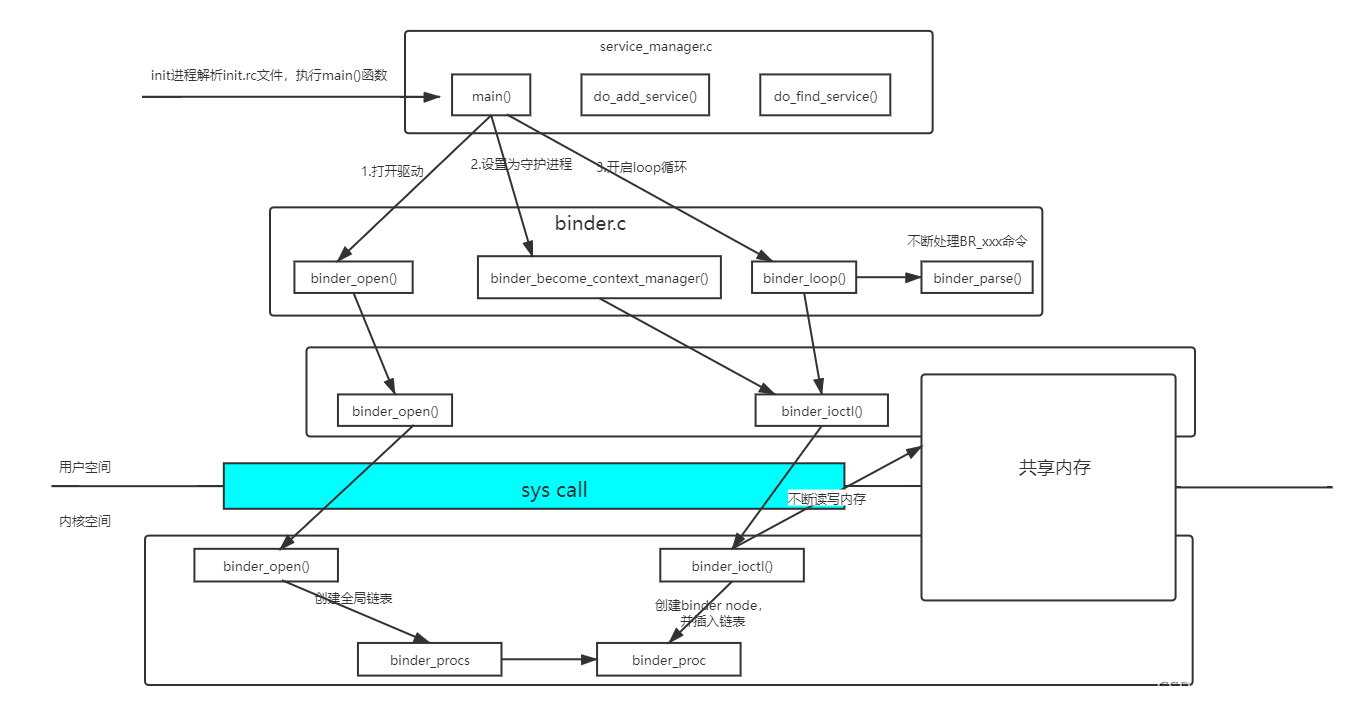

Service Manager startup diagrams

- Service Manager startup call graph

- Service Manager startup sequence diagram

Service Manager startup code walkthrough

- Note: everything below is based on Android P.

1. Starting the Service Manager process

Service Manager is created by the init process while it parses init.rc. Its executable is /system/bin/servicemanager, its main source file is service_manager.c, and its process name is servicemanager.

File path: android/system/core/rootdir/init.rc

```
# Start essential services.
# Starts the service defined in servicemanager.rc
start servicemanager
start hwservicemanager
start vndservicemanager
```

File path: android/frameworks/native/cmds/servicemanager/servicemanager.rc

```
# Start the Service Manager process
service servicemanager /system/bin/servicemanager
    class core animation
    user system
    group system readproc
    critical
    onrestart restart healthd
    onrestart restart zygote
    onrestart restart audioserver
    onrestart restart media
    onrestart restart surfaceflinger
    onrestart restart inputflinger
    onrestart restart drm
    onrestart restart cameraserver
    onrestart restart keystore
    onrestart restart gatekeeperd
    writepid /dev/cpuset/system-background/tasks
    shutdown critical
```

2. Entering main() in service_manager.c

The entry point of servicemanager is `main()` in service_manager.c:

```c
int main(int argc, char** argv)
{
    struct binder_state *bs;
    union selinux_callback cb;
    char *driver;

    if (argc > 1) {
        driver = argv[1];
    } else {
        driver = "/dev/binder";
    }

    // Open the binder driver and set up the memory mapping.
    // The device file is opened by the SM process itself, so the 128 KB
    // mapped here is the size of SM's own binder buffer.
    bs = binder_open(driver, 128*1024);
    if (!bs) {
#ifdef VENDORSERVICEMANAGER
        ALOGW("failed to open binder driver %s\n", driver);
        while (true) {
            sleep(UINT_MAX);
        }
#else
        ALOGE("failed to open binder driver %s\n", driver);
#endif
        return -1;
    }

    // Register this process as the Binder context manager (ServiceManager)
    if (binder_become_context_manager(bs)) {
        ALOGE("cannot become context manager (%s)\n", strerror(errno));
        return -1;
    }

    cb.func_audit = audit_callback;
    selinux_set_callback(SELINUX_CB_AUDIT, cb);
    cb.func_log = selinux_log_callback;
    selinux_set_callback(SELINUX_CB_LOG, cb);

#ifdef VENDORSERVICEMANAGER
    sehandle = selinux_android_vendor_service_context_handle();
#else
    sehandle = selinux_android_service_context_handle();
#endif
    selinux_status_open(true);

    if (sehandle == NULL) {
        ALOGE("SELinux: Failed to acquire sehandle. Aborting.\n");
        abort();
    }

    if (getcon(&service_manager_context) != 0) {
        ALOGE("SELinux: Failed to acquire service_manager context. Aborting.\n");
        abort();
    }

    // Enter the binder loop
    binder_loop(bs, svcmgr_handler);

    return 0;
}
```

In `main()`, starting SM comes down to three calls:

- `binder_open()`: opens the Binder device file.

- `binder_become_context_manager()`: tells the Binder driver that the current process is the Binder context manager.

- `binder_loop()`: enters the message loop and waits for client requests. With no request pending, the thread sleeps in an interruptible wait; when a request arrives it is woken up, then reads and handles the request.

The following sections go through these three functions in turn.

1. binder_open() walkthrough

Code path: android/frameworks/native/cmds/servicemanager/binder.c

```c
// Here driver = "/dev/binder" and mapsize = 128*1024
struct binder_state *binder_open(const char* driver, size_t mapsize)
{
    struct binder_state *bs;
    struct binder_version vers;

    bs = malloc(sizeof(*bs));
    if (!bs) {
        errno = ENOMEM;
        return NULL;
    }

    // Open the /dev/binder driver and keep the returned file descriptor in bs->fd
    bs->fd = open(driver, O_RDWR | O_CLOEXEC);
    if (bs->fd < 0) {
        fprintf(stderr,"binder: cannot open %s (%s)\n",
                driver, strerror(errno));
        goto fail_open;
    }

    // Query the binder driver's protocol version and compare it with user space
    if ((ioctl(bs->fd, BINDER_VERSION, &vers) == -1) ||
        (vers.protocol_version != BINDER_CURRENT_PROTOCOL_VERSION)) {
        fprintf(stderr,
                "binder: kernel driver version (%d) differs from user space version (%d)\n",
                vers.protocol_version, BINDER_CURRENT_PROTOCOL_VERSION);
        goto fail_open;
    }

    bs->mapsize = mapsize;
    // Map the binder buffer for the SM process. mapsize is 128 KB, so the
    // mapping, and therefore SM's binder buffer, is 128 KB
    bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);
    if (bs->mapped == MAP_FAILED) {
        fprintf(stderr,"binder: cannot map device (%s)\n",
                strerror(errno));
        goto fail_map;
    }

    // Return SM's binder state: the driver file descriptor, the mapping size
    // and the start address of the mapping
    return bs;

fail_map:
    close(bs->fd);
fail_open:
    free(bs);
    return NULL;
}
```

`binder_open()` opens the Binder device node /dev/binder and then calls `mmap()` to set up the memory mapping.

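The `open()` and `mmap()` calls here end up in the Binder driver's `binder_open()` and `binder_mmap()`. Those are covered in "binder框架解析(1)" (Binder framework analysis, part 1), so they are not repeated here.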
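The open-then-map pattern and its `goto`-based cleanup can be tried outside Android. The sketch below is an assumption-laden stand-in rather than Service Manager code: it maps an ordinary file read-only with `MAP_PRIVATE` (the same protection and flags used above) because /dev/binder may not exist on a development machine, it skips the `BINDER_VERSION` check, and `toy_state`, `toy_open()` and `toy_close()` are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L

#include <assert.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical counterpart of struct binder_state. */
struct toy_state {
    int fd;          /* descriptor of the opened file */
    void *mapped;    /* start address of the mapping */
    size_t mapsize;  /* length of the mapping in bytes */
};

/* Open path and map its first mapsize bytes read-only; NULL on any failure. */
struct toy_state *toy_open(const char *path, size_t mapsize)
{
    struct toy_state *ts;

    assert(path != NULL && mapsize > 0);

    ts = malloc(sizeof(*ts));
    if (!ts)
        return NULL;

    ts->fd = open(path, O_RDONLY | O_CLOEXEC);
    if (ts->fd < 0)
        goto fail_open;

    ts->mapsize = mapsize;
    ts->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, ts->fd, 0);
    if (ts->mapped == MAP_FAILED)
        goto fail_map;

    return ts;

    /* Undo the steps in reverse order, exactly as far as we got. */
fail_map:
    close(ts->fd);
fail_open:
    free(ts);
    return NULL;
}

/* Release everything toy_open() acquired. */
void toy_close(struct toy_state *ts)
{
    if (!ts)
        return;
    munmap(ts->mapped, ts->mapsize);
    close(ts->fd);
    free(ts);
}
```

Each failure label releases only what was acquired before the failing step, which is why the labels appear in reverse order of acquisition.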

2. binder_become_context_manager() walkthrough

Code path: android/frameworks/native/cmds/servicemanager/binder.c

```c
int binder_become_context_manager(struct binder_state *bs)
{
    // Ends up in binder_ioctl() in the binder driver, with BINDER_SET_CONTEXT_MGR as the command
    return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);
}
```

`ioctl()` ends up in `binder_ioctl()` in the Binder driver.

Code path: android/kernel/drivers/staging/android/binder.c

```c
static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    ...
    switch (cmd) {
    ......
    // The command is BINDER_SET_CONTEXT_MGR, so this case is hit
    case BINDER_SET_CONTEXT_MGR:
        ret = binder_ioctl_set_ctx_mgr(filp, NULL);
        if (ret)
            goto err;
        break;
    .....
    }
    ...
}
```

The command passed in is `BINDER_SET_CONTEXT_MGR`, so the matching case calls `binder_ioctl_set_ctx_mgr()` with a NULL `fbo`:

```c
static int binder_ioctl_set_ctx_mgr(struct file *filp,
                                    struct flat_binder_object *fbo)
{
    int ret = 0;
    // filp->private_data holds the binder_proc of the calling process
    struct binder_proc *proc = filp->private_data;
    // The binder context this process belongs to
    struct binder_context *context = proc->context;
    struct binder_node *new_node;
    // Effective UID of the caller
    kuid_t curr_euid = current_euid();

    mutex_lock(&context->context_mgr_node_lock);
    // If binder_context_mgr_node is already set, refuse a second registration,
    // so that only one SM can exist
    if (context->binder_context_mgr_node) {
        pr_err("BINDER_SET_CONTEXT_MGR already set\n");
        ret = -EBUSY;
        goto out;
    }
    // Security hook: is this task allowed to become the context manager?
    ret = security_binder_set_context_mgr(proc->tsk);
    if (ret < 0)
        goto out;
    // If a context manager UID was recorded earlier, the caller's euid must match it
    if (uid_valid(context->binder_context_mgr_uid)) {
        if (!uid_eq(context->binder_context_mgr_uid, curr_euid)) {
            pr_err("BINDER_SET_CONTEXT_MGR bad uid %d != %d\n",
                   from_kuid(&init_user_ns, curr_euid),
                   from_kuid(&init_user_ns,
                             context->binder_context_mgr_uid));
            ret = -EPERM;
            goto out;
        }
    } else {
        // No UID recorded yet: record the caller's euid
        context->binder_context_mgr_uid = curr_euid;
    }
    // Create a binder_node for proc; fbo is NULL here
    new_node = binder_new_node(proc, fbo);
    if (!new_node) {
        ret = -ENOMEM;
        goto out;
    }
    // Lock the node while its reference counts are updated
    binder_node_lock(new_node);
    // Take the initial strong and weak references
    new_node->local_weak_refs++;
    new_node->local_strong_refs++;
    new_node->has_strong_ref = 1;
    new_node->has_weak_ref = 1;
    // Store the new binder_node in binder_context_mgr_node
    context->binder_context_mgr_node = new_node;
    binder_node_unlock(new_node);
    binder_put_node(new_node);
out:
    mutex_unlock(&context->context_mgr_node_lock);
    return ret;
}
```

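Taken as a whole, `binder_ioctl_set_ctx_mgr()` does one thing: after confirming that `binder_context_mgr_node` is still empty, it calls `binder_new_node()` to create the Binder node (the driver-side Binder entity) for ServiceManager and stores it in `binder_context_mgr_node`.

Every server that takes part in Binder IPC gets a Binder node created through `binder_new_node()`. The node for SM differs from the others in two ways:

- It is created with special arguments: in `binder_new_node(proc, fbo)`, `fbo` is NULL.

- It is stored in `binder_context_mgr_node`, so the driver can always find SM's Binder node through `context->binder_context_mgr_node`. This is how a request from any other process that targets handle 0 reaches SM.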
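The "register once" rule can be condensed into a few lines of plain C. This is a minimal sketch, assuming a single-threaded caller (so the mutex is left out) and a plain `int` in place of `kuid_t`; `toy_context`, `toy_node` and `toy_set_ctx_mgr()` are hypothetical names, and the security hook is not modelled.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical, heavily reduced version of a binder node. */
struct toy_node {
    unsigned long ptr;
    unsigned long cookie;
};

/* Hypothetical, heavily reduced version of the binder context. */
struct toy_context {
    struct toy_node *mgr_node;  /* NULL until a manager registers */
    int mgr_uid;                /* -1 means "no UID recorded yet" */
};

/*
 * Try to install node as the context manager on behalf of a caller whose
 * effective UID is euid. Returns 0 on success, -EBUSY if a manager is
 * already installed, -EPERM if a different UID was recorded earlier.
 */
int toy_set_ctx_mgr(struct toy_context *ctx, struct toy_node *node, int euid)
{
    assert(ctx != NULL && node != NULL);

    if (ctx->mgr_node)
        return -EBUSY;
    if (ctx->mgr_uid != -1 && ctx->mgr_uid != euid)
        return -EPERM;

    ctx->mgr_uid = euid;
    node->ptr = 0;      /* the manager's node carries ptr == 0 ... */
    node->cookie = 0;   /* ... and cookie == 0 */
    ctx->mgr_node = node;
    return 0;
}
```

The first caller wins. A second attempt fails with `-EBUSY` while the node is installed, and even after the node is gone a caller with a different UID is rejected with `-EPERM`.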

Next, the details of `binder_new_node()`:

```c
static struct binder_node *binder_new_node(struct binder_proc *proc,
                                           struct flat_binder_object *fp)
{
    struct binder_node *node;
    // Allocate a new binder_node
    struct binder_node *new_node = kzalloc(sizeof(*node), GFP_KERNEL);

    if (!new_node)
        return NULL;
    binder_inner_proc_lock(proc);
    // Pass in proc (the current process), the freshly allocated new_node,
    // and fp (NULL when the node is created for SM)
    node = binder_init_node_ilocked(proc, new_node, fp);
    binder_inner_proc_unlock(proc);
    if (node != new_node)
        /*
         * The node was already added by another thread
         */
        kfree(new_node);

    return node;
}

static struct binder_node *binder_init_node_ilocked(
                        struct binder_proc *proc,
                        struct binder_node *new_node,
                        struct flat_binder_object *fp)
{
    struct rb_node **p = &proc->nodes.rb_node;
    struct rb_node *parent = NULL;
    struct binder_node *node;
    // When fp is NULL, ptr, cookie and flags are all 0
    binder_uintptr_t ptr = fp ? fp->binder : 0;
    binder_uintptr_t cookie = fp ? fp->cookie : 0;
    __u32 flags = fp ? fp->flags : 0;
    s8 priority;

    assert_spin_locked(&proc->inner_lock);

    // Search the proc->nodes red-black tree for an existing node with the same ptr
    while (*p) {

        parent = *p;
        node = rb_entry(parent, struct binder_node, rb_node);

        if (ptr < node->ptr)
            p = &(*p)->rb_left;
        else if (ptr > node->ptr)
            p = &(*p)->rb_right;
        else {
            /*
             * A matching node is already in
             * the rb tree. Abandon the init
             * and return it.
             */
            binder_inc_node_tmpref_ilocked(node);
            return node;
        }
    }
    // No node with this ptr exists yet, so use the newly allocated one
    node = new_node;
    binder_stats_created(BINDER_STAT_NODE);
    node->tmp_refs++;
    // Link the new node into the proc->nodes red-black tree
    rb_link_node(&node->rb_node, parent, p);
    rb_insert_color(&node->rb_node, &proc->nodes);
    node->debug_id = atomic_inc_return(&binder_last_id);
    // Remember the owning process
    node->proc = proc;
    // Store ptr and cookie. For SM both are 0, which is what makes its node special
    node->ptr = ptr;
    node->cookie = cookie;
    node->work.type = BINDER_WORK_NODE;
    priority = flags & FLAT_BINDER_FLAG_PRIORITY_MASK;
    node->sched_policy = (flags & FLAT_BINDER_FLAG_SCHED_POLICY_MASK) >>
        FLAT_BINDER_FLAG_SCHED_POLICY_SHIFT;
    node->min_priority = to_kernel_prio(node->sched_policy, priority);
    node->accept_fds = !!(flags & FLAT_BINDER_FLAG_ACCEPTS_FDS);
    node->inherit_rt = !!(flags & FLAT_BINDER_FLAG_INHERIT_RT);
    node->txn_security_ctx = !!(flags & FLAT_BINDER_FLAG_TXN_SECURITY_CTX);
    spin_lock_init(&node->lock);
    INIT_LIST_HEAD(&node->work.entry);
    INIT_LIST_HEAD(&node->async_todo);
    binder_debug(BINDER_DEBUG_INTERNAL_REFS,
                 "%d:%d node %d u%016llx c%016llx created\n",
                 proc->pid, current->pid, node->debug_id,
                 (u64)node->ptr, (u64)node->cookie);

    return node;
}
```
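The lookup in `binder_init_node_ilocked()` is a "find by `ptr`, insert if absent" walk over a search tree. The sketch below reproduces that walk on a plain, unbalanced binary search tree; that is a deliberate simplification, since the kernel uses a red-black tree and rebalances after linking. `toy_node` and `toy_get_or_insert()` are hypothetical names, and unlike the kernel code this version allocates only after the search has failed.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical node keyed by ptr, linked into an unbalanced search tree. */
struct toy_node {
    uintptr_t ptr;
    struct toy_node *left;
    struct toy_node *right;
};

/*
 * Return the node whose key is ptr. If no such node exists, allocate one,
 * link it at the position where the search ended, and return it.
 * Returns NULL only if the allocation fails.
 */
struct toy_node *toy_get_or_insert(struct toy_node **root, uintptr_t ptr)
{
    struct toy_node **p = root;   /* address of the link being examined */
    struct toy_node *node;

    assert(root != NULL);

    while (*p) {
        if (ptr < (*p)->ptr)
            p = &(*p)->left;
        else if (ptr > (*p)->ptr)
            p = &(*p)->right;
        else
            return *p;            /* a matching node already exists */
    }

    node = calloc(1, sizeof(*node));
    if (!node)
        return NULL;
    node->ptr = ptr;
    *p = node;                    /* p still points at the empty link */
    return node;
}
```

Keeping a pointer to the link (`struct toy_node **p`) instead of a pointer to the node is the same trick the kernel code uses with `struct rb_node **p`: when the loop ends, `p` already addresses the slot where the new node belongs.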

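That completes `binder_become_context_manager()`. Making SM the "housekeeper" of Binder boils down to two points:

- SM's driver-side Binder node is created in a special way: `binder_new_node(proc, fbo)` is called with `fbo` set to NULL, so the node's `ptr` and `cookie` are both 0.

- That node is stored in `binder_context_mgr_node`, so the driver can always reach SM's Binder node through `context->binder_context_mgr_node`, whichever server or client is asking.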


3. binder_loop() walkthrough

Code path: android/frameworks/native/cmds/servicemanager/binder.c

```c
void binder_loop(struct binder_state *bs, binder_handler func)
{
    int res;
    struct binder_write_read bwr;
    uint32_t readbuf[32];

    // The loop below never writes anything, so clear the write fields
    bwr.write_size = 0;
    bwr.write_consumed = 0;
    bwr.write_buffer = 0;

    // Borrow readbuf as scratch space to hold the BC_ENTER_LOOPER command
    readbuf[0] = BC_ENTER_LOOPER;
    // Send the command through ioctl. This tells the kernel binder driver
    // that this thread of the ServiceManager process is entering its message loop
    binder_write(bs, readbuf, sizeof(uint32_t));

    // Infinite loop
    for (;;) {
        // From here on readbuf is only a receive buffer: the driver fills it
        // with BR_* commands, and whatever it held before is irrelevant
        bwr.read_size = sizeof(readbuf);
        bwr.read_consumed = 0;
        bwr.read_buffer = (uintptr_t) readbuf;

        // Calls binder_ioctl() in the kernel. write_size is 0, so only the read half runs.
        // In blocking mode the call sleeps here while there is no work
        res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

        if (res < 0) {
            ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));
            break;
        }

        // Parse what the driver returned
        res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);
        if (res == 0) {
            ALOGE("binder_loop: unexpected reply?!\n");
            break;
        }
        if (res < 0) {
            ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));
            break;
        }
    }
}
```

As the code shows, `binder_loop()` contains an infinite loop: once started, SM keeps fetching messages inside `binder_loop()`, and the `ioctl()` call blocks whenever there is nothing to process.

Next, the functions it calls, starting with `binder_write()`:

```c
int binder_write(struct binder_state *bs, void *data, size_t len)
{
    struct binder_write_read bwr;
    int res;

    // Put the caller's data into write_buffer of binder_write_read;
    // here the data is BC_ENTER_LOOPER
    bwr.write_size = len;
    bwr.write_consumed = 0;
    bwr.write_buffer = (uintptr_t) data;
    bwr.read_size = 0;
    bwr.read_consumed = 0;
    bwr.read_buffer = 0;
    // Hand the data to binder_ioctl() in the kernel
    res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
    if (res < 0) {
        fprintf(stderr,"binder_write: ioctl failed (%s)\n",
                strerror(errno));
    }
    return res;
}
```

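`binder_write()` also ends up calling `ioctl()` and entering the kernel. So what does `binder_ioctl()` do for the two calls made during startup: the first, where `write_buffer` carries `BC_ENTER_LOOPER` and nothing is read, and the second, where nothing is written and `read_buffer` is supplied to receive data?

Code path: android/drivers/android/binder.c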

```c
static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
{
    ....
    switch (cmd) {
    .....
    // cmd is BINDER_WRITE_READ, so this case is hit
    case BINDER_WRITE_READ:
        ret = binder_ioctl_write_read(filp, cmd, arg, thread);
        if (ret)
            goto err;
        break;
    ......
    }
    .....
}
```

Both calls pass `BINDER_WRITE_READ` as the command, so both hit this case and call `binder_ioctl_write_read(filp, cmd, arg, thread)`:

```c
static int binder_ioctl_write_read(struct file *filp,
                                   unsigned int cmd, unsigned long arg,
                                   struct binder_thread *thread)
{
    int ret = 0;
    // The binder_proc created when this process opened the driver (binder_open()
    // in the kernel) was saved in filp->private_data; fetch it back here
    struct binder_proc *proc = filp->private_data;
    unsigned int size = _IOC_SIZE(cmd);
    void __user *ubuf = (void __user *)arg;
    struct binder_write_read bwr;

    if (size != sizeof(struct binder_write_read)) {
        ret = -EINVAL;
        goto out;
    }
    // Copy the bwr descriptor from user space into kernel space
    if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
        ret = -EFAULT;
        goto out;
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
                 "%d:%d write %lld at %016llx, read %lld at %016llx\n",
                 proc->pid, thread->pid,
                 (u64)bwr.write_size, (u64)bwr.write_buffer,
                 (u64)bwr.read_size, (u64)bwr.read_buffer);

    // First call: write_size > 0 and the buffer holds BC_ENTER_LOOPER, so this branch runs.
    // Second call: write_size == 0, so it is skipped
    if (bwr.write_size > 0) {
        ret = binder_thread_write(proc, thread,
                                  bwr.write_buffer,
                                  bwr.write_size,
                                  &bwr.write_consumed);
        trace_binder_write_done(ret);
        if (ret < 0) {
            bwr.read_consumed = 0;
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    // First call: read_size == 0, so this branch is skipped.
    // Second call: read_size > 0, so the driver fills read_buffer with its reply
    if (bwr.read_size > 0) {
        ret = binder_thread_read(proc, thread, bwr.read_buffer,
                                 bwr.read_size,
                                 &bwr.read_consumed,
                                 filp->f_flags & O_NONBLOCK);
        trace_binder_read_done(ret);
        // If the process todo list is not empty, wake up a thread sleeping on proc->wait
        if (!list_empty(&proc->todo))
            wake_up_interruptible(&proc->wait);
        if (ret < 0) {
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
                 "%d:%d wrote %lld of %lld, read return %lld of %lld\n",
                 proc->pid, thread->pid,
                 (u64)bwr.write_consumed, (u64)bwr.write_size,
                 (u64)bwr.read_consumed, (u64)bwr.read_size);
    // Once reading and writing are done, copy the bwr descriptor back to user space
    if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
        ret = -EFAULT;
        goto out;
    }
out:
    return ret;
}
```

Now `binder_thread_write()` and `binder_thread_read()` in turn. First, `binder_thread_write()`:

```c
static int binder_thread_write(struct binder_proc *proc,
                               struct binder_thread *thread,
                               binder_uintptr_t binder_buffer, size_t size,
                               binder_size_t *consumed)
{
    uint32_t cmd;
    struct binder_context *context = proc->context;
    void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
    void __user *ptr = buffer + *consumed;
    void __user *end = buffer + size;

    // Read the commands in the buffer one by one
    while (ptr < end && thread->return_error == BR_OK) {
        if (get_user(cmd, (uint32_t __user *)ptr))
            return -EFAULT;
        ptr += sizeof(uint32_t);
        trace_binder_command(cmd);
        if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {
            binder_stats.bc[_IOC_NR(cmd)]++;
            proc->stats.bc[_IOC_NR(cmd)]++;
            thread->stats.bc[_IOC_NR(cmd)]++;
        }
        switch (cmd) {
        // The buffer holds BC_ENTER_LOOPER, so this case is hit
        case BC_ENTER_LOOPER:
            binder_debug(BINDER_DEBUG_THREADS,
                         "%d:%d BC_ENTER_LOOPER\n",
                         proc->pid, thread->pid);
            if (thread->looper & BINDER_LOOPER_STATE_REGISTERED) {
                thread->looper |= BINDER_LOOPER_STATE_INVALID;
                binder_user_error("%d:%d ERROR: BC_ENTER_LOOPER called after BC_REGISTER_LOOPER\n",
                                  proc->pid, thread->pid);
            }
            // Update looper to record that the thread has entered its loop
            thread->looper |= BINDER_LOOPER_STATE_ENTERED;
            break;
        ......
    }
    return 0;
}
```

That completes the handling of the first call, which wrote `BC_ENTER_LOOPER`: all it does is set a flag marking the thread as having entered its loop.

Next, what `binder_thread_read()` does on the second call, and where the flag set by `BC_ENTER_LOOPER` is checked:

```c
static int binder_thread_read(struct binder_proc *proc,
                              struct binder_thread *thread,
                              binder_uintptr_t binder_buffer, size_t size,
                              binder_size_t *consumed, int non_block)
{
    void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
    void __user *ptr = buffer + *consumed;
    void __user *end = buffer + size;

    int ret = 0;
    int wait_for_proc_work;

    // On entry *consumed == 0, so BR_NOOP is written to the user-space buffer first
    if (*consumed == 0) {
        if (put_user(BR_NOOP, (uint32_t __user *)ptr))
            return -EFAULT;
        ptr += sizeof(uint32_t);
    }

    ........
    // Mark the thread as waiting: BINDER_LOOPER_STATE_WAITING
    thread->looper |= BINDER_LOOPER_STATE_WAITING;
    if (wait_for_proc_work)
        proc->ready_threads++;

    binder_unlock(__func__);

    trace_binder_wait_for_work(wait_for_proc_work,
                               !!thread->transaction_stack,
                               !list_empty(&thread->todo));
    if (wait_for_proc_work) {
        // binder_thread_write() set BINDER_LOOPER_STATE_ENTERED earlier,
        // so this condition is false and the error path is skipped
        if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |
                                BINDER_LOOPER_STATE_ENTERED))) {
            binder_user_error("%d:%d ERROR: Thread waiting for process work before calling BC_REGISTER_LOOPER or BC_ENTER_LOOPER (state %x)\n",
                              proc->pid, thread->pid, thread->looper);
            wait_event_interruptible(binder_user_error_wait,
                                     binder_stop_on_user_error < 2);
        }
        // The thread is about to handle work for proc, so give it proc's default priority
        binder_set_nice(proc->default_priority);
        // Non-blocking mode (the file was opened with O_NONBLOCK): check for work with
        // binder_has_proc_work() and return -EAGAIN at once if there is none
        if (non_block) {
            if (!binder_has_proc_work(proc, thread))
                ret = -EAGAIN;
        } else
            // Blocking mode: sleep here until binder_has_proc_work() reports work
            ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread));
    } else {
        if (non_block) {
            if (!binder_has_thread_work(thread))
                ret = -EAGAIN;
        } else
            ret = wait_event_freezable(thread->wait, binder_has_thread_work(thread));
    }
    ......
}
```

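That completes `binder_thread_read()`. When there is nothing in the to-do queue, the thread blocks (this is what happens right after SM starts) until a transaction is queued for it. That wakes SM's `binder_loop()`, which then goes on to fetch and handle the transaction.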
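The difference between the `non_block` branch and the blocking branch has a direct analogue in ordinary POSIX I/O. The sketch below uses a pipe as a stand-in for the Binder to-do queue, which is an assumption made purely for illustration: with `O_NONBLOCK` set, reading an empty pipe fails at once with `EAGAIN`, just as `binder_thread_read()` returns `-EAGAIN` when there is no work. `toy_make_nonblocking()` and `toy_read_work()` are hypothetical helper names.

```c
#define _POSIX_C_SOURCE 200809L

#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Switch fd to non-blocking mode; returns 0 or a negative errno value. */
int toy_make_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL);

    if (flags < 0)
        return -errno;
    if (fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0)
        return -errno;
    return 0;
}

/*
 * Try to fetch one byte of "work" from fd into *out.
 * Returns the number of bytes read (0 or 1), or a negative errno value;
 * on a non-blocking descriptor with nothing pending that value is -EAGAIN.
 */
int toy_read_work(int fd, char *out)
{
    ssize_t n;

    assert(out != NULL);

    n = read(fd, out, 1);
    if (n < 0)
        return -errno;
    return (int)n;
}
```

Without `toy_make_nonblocking()`, the same `read()` on an empty pipe would put the caller to sleep until another thread or process writes to the other end, which corresponds to SM sleeping in `binder_loop()` until a client queues a transaction.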

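Service Manager startup summary

- The walkthrough of `main()` in service_manager.c shows the following.

  - For the ServiceManager process:

    - `binder_open()` opens the Binder device file and maps memory into ServiceManager's address space.

    - `binder_become_context_manager()` makes the current process the Binder context manager.

    - `binder_loop()` enters the message loop and waits for client requests.

  - For the Binder driver:

    - It initializes the process context for ServiceManager, that is, the `binder_proc` structure.

    - It maps kernel virtual memory and user virtual memory onto the same physical memory, 128 KB in size.

    - It creates the `binder_thread` object for the current thread and adds it to the `binder_proc->threads` red-black tree.

    - It creates the Binder node for ServiceManager and stores it in `context->binder_context_mgr_node`.

    - When there is no transaction to process, the thread enters an interruptible wait until another process wakes it up.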
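To tie the driver-side steps together, the two `BINDER_WRITE_READ` calls made during startup can be modelled in user space. This is a sketch under loud assumptions: the command and flag values are made up for the example, `toy_write_read` is a cut-down imitation of `struct binder_write_read`, the read half returns immediately instead of sleeping, and an unregistered thread gets `-EINVAL`, whereas the kernel logs a user error instead.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical command and flag values, chosen for this sketch only. */
enum { TOY_BC_ENTER_LOOPER = 1, TOY_BR_NOOP = 2 };
enum { TOY_LOOPER_REGISTERED = 0x01, TOY_LOOPER_ENTERED = 0x02 };

/* Cut-down imitation of struct binder_write_read. */
struct toy_write_read {
    size_t write_size, write_consumed;
    const uint32_t *write_buffer;
    size_t read_size, read_consumed;
    uint32_t *read_buffer;
};

/* Cut-down imitation of the per-thread looper state. */
struct toy_thread {
    unsigned int looper;
};

/* Write half: consume 32-bit commands from write_buffer one at a time. */
static void toy_thread_write(struct toy_thread *t, struct toy_write_read *bwr)
{
    while (bwr->write_size - bwr->write_consumed >= sizeof(uint32_t)) {
        uint32_t cmd = bwr->write_buffer[bwr->write_consumed / sizeof(uint32_t)];

        bwr->write_consumed += sizeof(uint32_t);
        if (cmd == TOY_BC_ENTER_LOOPER)
            t->looper |= TOY_LOOPER_ENTERED;
    }
}

/* Read half: refuse threads that never entered the loop, else reply BR_NOOP. */
static int toy_thread_read(const struct toy_thread *t, struct toy_write_read *bwr)
{
    if (!(t->looper & (TOY_LOOPER_REGISTERED | TOY_LOOPER_ENTERED)))
        return -EINVAL;   /* toy choice, not the kernel's behaviour */
    if (bwr->read_consumed == 0 && bwr->read_size >= sizeof(uint32_t)) {
        bwr->read_buffer[0] = TOY_BR_NOOP;   /* overwrites old buffer contents */
        bwr->read_consumed = sizeof(uint32_t);
    }
    return 0;
}

/* One "ioctl": run the write half first, then the read half. */
int toy_write_read(struct toy_thread *t, struct toy_write_read *bwr)
{
    assert(t != NULL && bwr != NULL);

    if (bwr->write_size > 0)
        toy_thread_write(t, bwr);
    if (bwr->read_size > 0)
        return toy_thread_read(t, bwr);
    return 0;
}
```

Calling `toy_write_read()` once with only a write buffer holding `TOY_BC_ENTER_LOOPER`, and then again with only a read buffer, mirrors the `binder_write()` call followed by the first iteration of the loop. After the second call the buffer no longer holds the command that was written earlier; it holds the reply.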