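ijkplayer is a cross-platform player for Android and iOS. By default it decodes audio in software with FFmpeg. On Android, audio can be played through OpenSL ES or AudioTrack; on iOS, audio is played through AudioQueue by default.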

I. Decoding and playback on iOS

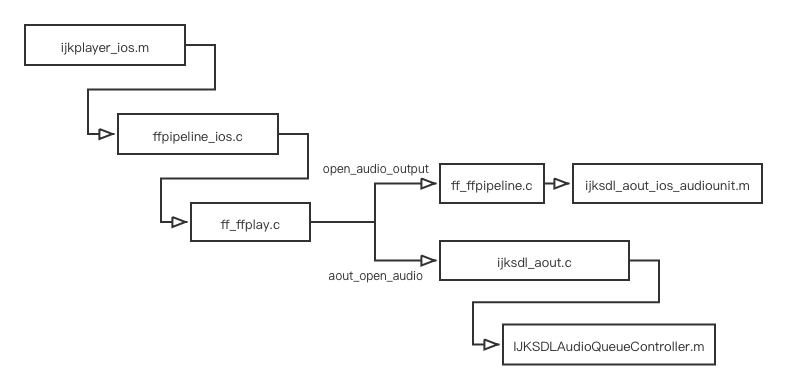

采用pipeline形式创建音频播放组件,整体流水线如下:

1. Create the IjkMediaPlayer

First, ijkmp_ios_create() in ijkplayer_ios.m creates the IjkMediaPlayer:

    IjkMediaPlayer *ijkmp_ios_create(int (*msg_loop)(void*))
    {
        IjkMediaPlayer *mp = ijkmp_create(msg_loop);
        if (!mp)
            goto fail;

        // Video output backed by OpenGL ES 2
        mp->ffplayer->vout = SDL_VoutIos_CreateForGLES2();
        if (!mp->ffplayer->vout)
            goto fail;

        // iOS-specific pipeline (video decoder and audio output hooks)
        mp->ffplayer->pipeline = ffpipeline_create_from_ios(mp->ffplayer);
        if (!mp->ffplayer->pipeline)
            goto fail;

        return mp;

    fail:
        // Release the reference held on mp
        ijkmp_dec_ref_p(&mp);
        return NULL;
    }

2. Create the pipeline

Step 1 calls ffpipeline_create_from_ios() in ffpipeline_ios.c to create the pipeline. That function assigns the func_open_video_decoder and func_open_audio_output function pointers:

    IJKFF_Pipeline *ffpipeline_create_from_ios(FFPlayer *ffp)
    {
        IJKFF_Pipeline *pipeline = ffpipeline_alloc(&g_pipeline_class, sizeof(IJKFF_Pipeline_Opaque));
        ......
        pipeline->func_open_video_decoder = func_open_video_decoder;
        pipeline->func_open_audio_output = func_open_audio_output;
        return pipeline;
    }
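The assignments above amount to a small table of function pointers: the player core calls through the pointer and never names a platform. The following is a minimal sketch of that pattern, not ijkplayer's actual code. `Aout`, `Pipeline`, `ios_open_audio_output`, `pipeline_create_for_ios`, and `pipeline_open_audio_output` are hypothetical stand-ins invented for illustration.

```c
#include <stdlib.h>

/* Hypothetical stand-in for SDL_Aout. */
typedef struct Aout {
    const char *backend;   /* name of the backend that created this output */
} Aout;

/* Hypothetical stand-in for IJKFF_Pipeline. */
typedef struct Pipeline Pipeline;
struct Pipeline {
    /* Platform hook, assigned once when the pipeline is created. */
    Aout *(*func_open_audio_output)(Pipeline *pipeline);
};

/* Platform-specific implementation (plays the role of the iOS hook). */
static Aout *ios_open_audio_output(Pipeline *pipeline)
{
    (void)pipeline;
    Aout *aout = calloc(1, sizeof(*aout));
    if (aout)
        aout->backend = "AudioQueue";
    return aout;
}

/* Platform factory: allocates the pipeline and fills in the hook. */
static Pipeline *pipeline_create_for_ios(void)
{
    Pipeline *pipeline = calloc(1, sizeof(*pipeline));
    if (pipeline)
        pipeline->func_open_audio_output = ios_open_audio_output;
    return pipeline;
}

/* Generic entry point: dispatches through the pointer, or fails cleanly. */
static Aout *pipeline_open_audio_output(Pipeline *pipeline)
{
    if (!pipeline || !pipeline->func_open_audio_output)
        return NULL;
    return pipeline->func_open_audio_output(pipeline);
}
```

In this sketch, calling `pipeline_open_audio_output(pipeline_create_for_ios())` returns an `Aout` whose `backend` is "AudioQueue"; supporting another platform would only require a different factory. The hook that ijkplayer itself assigns on iOS follows.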

    static SDL_Aout *func_open_audio_output(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
    {
        return SDL_AoutIos_CreateForAudioUnit();
    }

3. Create the SDL_Aout

ffp_prepare_async_l() in ff_ffplay.c calls ffpipeline_open_audio_output() in ff_ffpipeline.c:

    int ffp_prepare_async_l(FFPlayer *ffp, const char *file_name)
    {
        ......
        // Open the audio output once, through the pipeline hook
        if (!ffp->aout) {
            ffp->aout = ffpipeline_open_audio_output(ffp->pipeline, ffp);
            if (!ffp->aout)
                return -1;
        }
    }

This in turn calls func_open_audio_output() in ffpipeline_ios.c and ultimately ijksdl_aout_ios_audiounit.m, which creates the SDL_Aout and assigns the open_audio, pause_audio, flush_audio, and close_audio function pointers:

    SDL_Aout *SDL_AoutIos_CreateForAudioUnit()
    {
        SDL_Aout *aout = SDL_Aout_CreateInternal(sizeof(SDL_Aout_Opaque));
        ......
        aout->open_audio = aout_open_audio;
        aout->pause_audio = aout_pause_audio;
        aout->flush_audio = aout_flush_audio;
        aout->close_audio = aout_close_audio;
        ......
        return aout;
    }

4. Open the audio decoder

stream_component_open() in ff_ffplay.c finds and opens the audio decoder:

    static int stream_component_open(FFPlayer *ffp, int stream_index)
    {
        // Find the decoder
        codec = avcodec_find_decoder(avctx->codec_id);
        // Open the decoder
        if ((ret = avcodec_open2(avctx, codec, &opts)) < 0) {
            goto fail;
        }
        ......
        switch (avctx->codec_type) {
        case AVMEDIA_TYPE_AUDIO:
            // Open the audio output device
            if ((ret = audio_open(ffp, channel_layout, nb_channels, sample_rate, &is->audio_tgt)) < 0)
                goto fail;
            // Start the audio decoding thread
            if ((ret = decoder_start(&is->auddec, audio_thread, ffp, "ff_audio_dec")) < 0)
                goto out;
            ......
        default:
            break;
        }
        goto out;

    fail:
        avcodec_free_context(&avctx);
    out:
        av_dict_free(&opts);
        return ret;
    }

5. Create the AudioQueue

audio_open() calls SDL_AoutOpenAudio() in ijksdl_aout.c to open the audio output device and registers the PCM data callback:

    static int audio_open(FFPlayer *opaque, int64_t wanted_channel_layout, int wanted_nb_channels, int wanted_sample_rate, struct AudioParams *audio_hw_params)
    {
        ......
        // Register sdl_audio_callback as the callback that supplies PCM data
        wanted_spec.callback = sdl_audio_callback;
        // Open the audio output device
        while (SDL_AoutOpenAudio(ffp->aout, &wanted_spec, &spec) < 0) {
            if (is->abort_request)
                return -1;
        }
        ......
    }

ijksdl_aout.c then calls aout_open_audio() in ijksdl_aout_ios_audiounit.m, which ultimately uses IJKSDLAudioQueueController.m to create the AudioQueue that plays the audio:

- (id)initWithAudioSpec:(const SDL_AudioSpec *)aSpec

{

// 创建AudioQueue,设置回调函数

OSStatus status = AudioQueueNewOutput(&streamDescription,

IJKSDLAudioQueueOuptutCallback,

(__bridge void *) self,

NULL,

kCFRunLoopCommonModes,

0,

&audioQueueRef);

// 启动AudioQueue

status = AudioQueueStart(audioQueueRef, NULL);

return self;

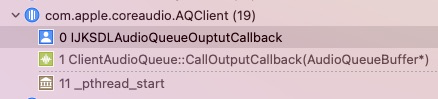

}AudioQueue内部会创建一个工作线程进行播放,如下图所示:
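The streamDescription passed to AudioQueueNewOutput() tells the queue how the PCM bytes are laid out. The sketch below shows the standard arithmetic for packed, interleaved linear PCM. It is not ijkplayer's conversion code: `PcmSpec`, `PcmDescription`, `describe_pcm`, and `buffer_bytes` are hypothetical names whose fields only loosely mirror SDL_AudioSpec and AudioStreamBasicDescription.

```c
#include <stdint.h>

/* Hypothetical, simplified view of the requested audio format. */
typedef struct PcmSpec {
    int freq;             /* sample rate in Hz */
    int channels;         /* interleaved channel count */
    int bits_per_sample;  /* e.g. 16 for signed 16-bit PCM */
    int samples;          /* frames per output buffer */
} PcmSpec;

/* Hypothetical, simplified stream description. */
typedef struct PcmDescription {
    double   sample_rate;
    uint32_t channels_per_frame;
    uint32_t bits_per_channel;
    uint32_t frames_per_packet;
    uint32_t bytes_per_frame;
    uint32_t bytes_per_packet;
} PcmDescription;

/* Derive the byte layout of packed, interleaved linear PCM. */
static PcmDescription describe_pcm(const PcmSpec *spec)
{
    PcmDescription desc;
    desc.sample_rate        = (double)spec->freq;
    desc.channels_per_frame = (uint32_t)spec->channels;
    desc.bits_per_channel   = (uint32_t)spec->bits_per_sample;
    /* Uncompressed PCM carries exactly one frame per packet. */
    desc.frames_per_packet  = 1;
    /* One frame holds one sample for every channel. */
    desc.bytes_per_frame    = desc.bits_per_channel / 8 * desc.channels_per_frame;
    desc.bytes_per_packet   = desc.bytes_per_frame * desc.frames_per_packet;
    return desc;
}

/* Size in bytes of one output buffer holding spec->samples frames. */
static uint32_t buffer_bytes(const PcmSpec *spec)
{
    return (uint32_t)spec->samples * describe_pcm(spec).bytes_per_frame;
}
```

For 44.1 kHz, 16-bit stereo with 1024 frames per buffer, each frame occupies 4 bytes and each buffer 4096 bytes.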

6. Decode the audio

The audio decoding thread:

    static int audio_thread(void *arg)
    {
        ......
        do {
            // Decode one audio frame
            if ((got_frame = decoder_decode_frame(ffp, &is->auddec, frame, NULL)) < 0)
                goto the_end;
            // Run the frame through the audio filter graph
    #if CONFIG_AVFILTER
            if ((ret = av_buffersrc_add_frame(is->in_audio_filter, frame)) < 0)
                goto the_end;
            while ((ret = av_buffersink_get_frame_flags(is->out_audio_filter, frame, 0)) >= 0) {
                tb = av_buffersink_get_time_base(is->out_audio_filter);
            }
    #endif
            // Publish the frame to the sample queue
            frame_queue_push(&is->sampq);
        } while (ret >= 0 || ret == AVERROR(EAGAIN) || ret == AVERROR_EOF);

    the_end:
        av_frame_free(&frame);
        return ret;
    }

The code of sdl_audio_callback is as follows:

    static void sdl_audio_callback(void *opaque, Uint8 *stream, int len)
    {
        ......
        while (len > 0) {
            if (is->audio_buf_index >= is->audio_buf_size) {
                // Decode and convert the next frame
                audio_size = audio_decode_frame(ffp);
            }
            // Copy no more than what remains in audio_buf
            len1 = is->audio_buf_size - is->audio_buf_index;
            if (len1 > len)
                len1 = len;
            if (!is->muted && is->audio_buf && is->audio_volume == SDL_MIX_MAXVOLUME)
                memcpy(stream, (uint8_t *)is->audio_buf + is->audio_buf_index, len1);
            else {
                memset(stream, 0, len1);
                if (!is->muted && is->audio_buf)
                    SDL_MixAudio(stream, (uint8_t *)is->audio_buf + is->audio_buf_index, len1, is->audio_volume);
            }
            len -= len1;
            stream += len1;
            is->audio_buf_index += len1;
        }
    }

It calls audio_decode_frame() internally to convert (resample) the audio:

    static int audio_decode_frame(FFPlayer *ffp)
    {
        ......
        do {
            // Advance to the next frame
            frame_queue_next(&is->sampq);
        } while (af->serial != is->audioq.serial);
        // Convert (resample) the audio
        swr_convert(is->swr_ctx, out, out_count, in, af->frame->nb_samples);
        return resampled_data_size;
    }

7. Play the audio

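AudioQueue invokes its output callback repeatedly, each time it has finished with a buffer. The callback calls sdl_audio_callback() in ff_ffplay.c to fetch PCM data and then enqueues the buffer for playback: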

    static void IJKSDLAudioQueueOuptutCallback(void * inUserData, AudioQueueRef inAQ, AudioQueueBufferRef inBuffer) {
        @autoreleasepool {
            // Fetch PCM data into the buffer
            (*aqController.spec.callback)(aqController.spec.userdata, inBuffer->mAudioData, inBuffer->mAudioDataByteSize);
            // Enqueue the filled buffer for playback
            AudioQueueEnqueueBuffer(inAQ, inBuffer, 0, NULL);
        }
    }
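Taken together, steps 6 and 7 form a pull model: the output device asks for exactly `len` bytes, and the callback decodes on demand and copies as much of each decoded chunk as fits. The sketch below simulates that loop in plain C without AudioToolbox or FFmpeg. `Player`, `decode_chunk`, `fill_callback`, and `device_request` are hypothetical names, and the "decoder" simply emits increasing byte values so the result is easy to check.

```c
#include <stdint.h>
#include <string.h>

#define CHUNK_SIZE 4   /* bytes produced by each simulated decode */

/* Hypothetical player state, loosely modelled on the audio_buf fields. */
typedef struct Player {
    uint8_t audio_buf[CHUNK_SIZE]; /* most recently decoded chunk */
    int     audio_buf_size;        /* valid bytes in audio_buf */
    int     audio_buf_index;       /* bytes of audio_buf already consumed */
    uint8_t next_value;            /* simulated decoder state */
} Player;

/* Simulated decoder: refills audio_buf with CHUNK_SIZE increasing bytes. */
static int decode_chunk(Player *p)
{
    for (int i = 0; i < CHUNK_SIZE; i++)
        p->audio_buf[i] = p->next_value++;
    return CHUNK_SIZE;
}

/* Pull callback: fills exactly len bytes of stream, decoding on demand. */
static void fill_callback(void *userdata, uint8_t *stream, int len)
{
    Player *p = userdata;
    while (len > 0) {
        if (p->audio_buf_index >= p->audio_buf_size) {
            /* Current chunk is exhausted: decode the next one. */
            p->audio_buf_size  = decode_chunk(p);
            p->audio_buf_index = 0;
        }
        /* Copy the smaller of "left in chunk" and "still requested". */
        int len1 = p->audio_buf_size - p->audio_buf_index;
        if (len1 > len)
            len1 = len;
        memcpy(stream, p->audio_buf + p->audio_buf_index, (size_t)len1);
        len    -= len1;
        stream += len1;
        p->audio_buf_index += len1;
    }
}

/* Simulated device: asks the callback to fill one buffer. A real output
 * queue would hand the filled buffer back for playback at this point. */
static void device_request(void (*callback)(void *, uint8_t *, int),
                           void *userdata, uint8_t *buffer, int size)
{
    callback(userdata, buffer, size);
}
```

With a zero-initialised `Player`, a first request for 10 bytes yields the values 0 through 9 and leaves two bytes of the third chunk unconsumed, so a following request for 3 bytes yields 10, 11, 12. Request sizes and decoded chunk sizes do not need to line up, which is why the callback tracks an index into the current chunk.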