I. Recap

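A quick recap of the previous installment. With DefaultVideoEncoderFactory as the entry point for encoding, createEncoder obtains encoders from HardwareVideoEncoderFactory and SoftwareVideoEncoderFactory, and the result is handed back to PeerConnectionClient. If both factories produce an encoder, the two are wrapped in a VideoEncoderFallback, with the hardware encoder as primary and the software encoder as fallback.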

    @Nullable
    public VideoEncoder createEncoder(VideoCodecInfo info) {
        VideoEncoder softwareEncoder = this.softwareVideoEncoderFactory.createEncoder(info);
        VideoEncoder hardwareEncoder = this.hardwareVideoEncoderFactory.createEncoder(info);
        if (hardwareEncoder != null && softwareEncoder != null) {
            // Both available: hardware is the primary encoder, software the fallback.
            return new VideoEncoderFallback(softwareEncoder, hardwareEncoder);
        } else {
            return hardwareEncoder != null ? hardwareEncoder : softwareEncoder;
        }
    }

HardwareVideoEncoderFactory's createEncoder builds the hardware encoder roughly as follows:

    @Nullable
    @Override
    public VideoEncoder createEncoder(VideoCodecInfo input) {
        VideoCodecMimeType type = VideoCodecMimeType.valueOf(input.name);
        MediaCodecInfo info = findCodecForType(type);
        if (info == null) {
            return null; // No hardware codec available for this type.
        }

        String codecName = info.getName();
        String mime = type.mimeType();
        Integer surfaceColorFormat = MediaCodecUtils.selectColorFormat(
                MediaCodecUtils.TEXTURE_COLOR_FORMATS, info.getCapabilitiesForType(mime));
        Integer yuvColorFormat = MediaCodecUtils.selectColorFormat(
                MediaCodecUtils.ENCODER_COLOR_FORMATS, info.getCapabilitiesForType(mime));

        if (type == VideoCodecMimeType.H264) {
            boolean isHighProfile = H264Utils.isSameH264Profile(
                    input.params, MediaCodecUtils.getCodecProperties(type, /* highProfile= */ true));
            boolean isBaselineProfile = H264Utils.isSameH264Profile(
                    input.params, MediaCodecUtils.getCodecProperties(type, /* highProfile= */ false));
            if (!isHighProfile && !isBaselineProfile) {
                return null; // The negotiated profile matches neither High nor Baseline.
            }
            if (isHighProfile && !isH264HighProfileSupported(info)) {
                return null; // High profile requested, but this codec does not support it.
            }
        }

        return new HardwareVideoEncoder(new MediaCodecWrapperFactoryImpl(), codecName, type,
                surfaceColorFormat, yuvColorFormat, input.params, getKeyFrameIntervalSec(type),
                getForcedKeyFrameIntervalMs(type, codecName), createBitrateAdjuster(type, codecName),
                sharedContext);
    }

The createEncoder flow was analyzed in some detail in Android-RTC-6 (the VideoEncoderFactory analysis). This time we go deeper into HardwareVideoEncoder itself.

II. BitrateAdjuster

Without further ado, let's start with initialization.

    @Override
    public VideoCodecStatus initEncode(Settings settings, Callback callback) {
        // ...
        useSurfaceMode = canUseSurface();
        if (settings.startBitrate != 0 && settings.maxFramerate != 0) {
            // startBitrate is in kbps; the adjuster works in bps.
            bitrateAdjuster.setTargets(settings.startBitrate * 1000, settings.maxFramerate);
        }
        adjustedBitrate = bitrateAdjuster.getAdjustedBitrateBps();
        return initEncodeInternal();
    }

    private VideoCodecStatus initEncodeInternal() {
        lastKeyFrameNs = -1;
        try {
            codec = mediaCodecWrapperFactory.createByCodecName(codecName);
        } catch (IOException | IllegalArgumentException e) {
            Logging.e(TAG, "Cannot create media encoder " + codecName);
            return VideoCodecStatus.FALLBACK_SOFTWARE;
        }

        final int colorFormat = useSurfaceMode ? surfaceColorFormat : yuvColorFormat;
        try {
            MediaFormat format = MediaFormat.createVideoFormat(codecType.mimeType(), width, height);
            format.setInteger(MediaFormat.KEY_BIT_RATE, adjustedBitrate);
            format.setInteger(KEY_BITRATE_MODE, VIDEO_ControlRateConstant); // constant bitrate (CBR)
            format.setInteger(MediaFormat.KEY_COLOR_FORMAT, colorFormat);
            // The configured frame rate comes from the adjuster and may differ from the real one.
            format.setInteger(MediaFormat.KEY_FRAME_RATE, bitrateAdjuster.getCodecConfigFramerate());
            format.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, keyFrameIntervalSec);
            if (codecType == VideoCodecMimeType.H264) {
                String profileLevelId = params.get(VideoCodecInfo.H264_FMTP_PROFILE_LEVEL_ID);
                if (profileLevelId == null) {
                    profileLevelId = VideoCodecInfo.H264_CONSTRAINED_BASELINE_3_1;
                }
                switch (profileLevelId) {
                    case VideoCodecInfo.H264_CONSTRAINED_HIGH_3_1:
                        format.setInteger("profile", VIDEO_AVC_PROFILE_HIGH);
                        format.setInteger("level", VIDEO_AVC_LEVEL_3);
                        break;
                    case VideoCodecInfo.H264_CONSTRAINED_BASELINE_3_1:
                        break; // No extra profile/level keys are set for Constrained Baseline.
                    default:
                        Logging.w(TAG, "Unknown profile level id: " + profileLevelId);
                }
            }

            codec.configure(
                    format, null /* surface */, null /* crypto */, MediaCodec.CONFIGURE_FLAG_ENCODE);

            if (useSurfaceMode) {
                // Surface mode: frames are rendered through EGL into the codec's input surface.
                textureEglBase = EglBase.createEgl14(sharedContext, EglBase.CONFIG_RECORDABLE);
                textureInputSurface = codec.createInputSurface();
                textureEglBase.createSurface(textureInputSurface);
                textureEglBase.makeCurrent();
            }

            codec.start();
            outputBuffers = codec.getOutputBuffers();
        } catch (IllegalStateException e) {
            Logging.e(TAG, "initEncodeInternal failed", e);
            release();
            return VideoCodecStatus.FALLBACK_SOFTWARE;
        }

        running = true;
        outputThreadChecker.detachThread();
        outputThread = createOutputThread();
        outputThread.start();
        return VideoCodecStatus.OK;
    }

1. Most of initEncode is routine MediaCodec setup, just well encapsulated. The notable piece is bitrateAdjuster, an adapter for bitrate adjustment. It is built by createBitrateAdjuster and passed in when HardwareVideoEncoderFactory.createEncoder constructs the HardwareVideoEncoder object.

2. initEncode also sets profile/level according to profileLevelId. profileLevelId itself was covered in Android-RTC-6; take a look there if you are interested.
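As a quick refresher on what the switch is matching: profile-level-id (RFC 6184) is six hex digits encoding three bytes: profile_idc, a byte of constraint flags, and level_idc. The sketch below decodes them. ProfileLevelId is a hypothetical helper, not a WebRTC class, and the sample strings assume that VideoCodecInfo defines H264_CONSTRAINED_BASELINE_3_1 as "42e01f" and H264_CONSTRAINED_HIGH_3_1 as "640c1f", as recent WebRTC sources do; verify against your version.

```java
/** Hypothetical helper: splits an H.264 profile-level-id (RFC 6184) into its three bytes. */
final class ProfileLevelId {
    final int profileIdc; // first byte: 66 = Baseline, 100 = High
    final int profileIop; // second byte: constraint_set flags (distinguish e.g. Constrained Baseline)
    final int levelIdc;   // third byte: level * 10, e.g. 31 = level 3.1

    ProfileLevelId(String hex) {
        if (hex == null || hex.length() != 6) {
            throw new IllegalArgumentException("profile-level-id must be 6 hex digits: " + hex);
        }
        int value = Integer.parseInt(hex, 16);
        profileIdc = (value >> 16) & 0xff;
        profileIop = (value >> 8) & 0xff;
        levelIdc = value & 0xff;
    }

    /** Human-readable summary, e.g. "Baseline level 3.1". */
    String describe() {
        String profile = profileIdc == 66 ? "Baseline"
                : profileIdc == 100 ? "High"
                : "profile_idc=" + profileIdc;
        return profile + " level " + (levelIdc / 10) + "." + (levelIdc % 10);
    }

    public static void main(String[] args) {
        System.out.println(new ProfileLevelId("42e01f").describe()); // Baseline level 3.1
        System.out.println(new ProfileLevelId("640c1f").describe()); // High level 3.1
    }
}
```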

Now let's take a closer look, step by step, at how BitrateAdjuster works.

    private BitrateAdjuster createBitrateAdjuster(VideoCodecMimeType type, String codecName) {
        if (codecName.startsWith(EXYNOS_PREFIX)) {
            if (type == VideoCodecMimeType.VP8) {
                // Exynos VP8 encoders need dynamic bitrate adjustment.
                return new DynamicBitrateAdjuster();
            } else {
                // Exynos VP9 and H264 encoders need framerate-based bitrate adjustment.
                return new FramerateBitrateAdjuster();
            }
        }
        // Other codecs don't need bitrate adjustment.
        return new BaseBitrateAdjuster();
    }

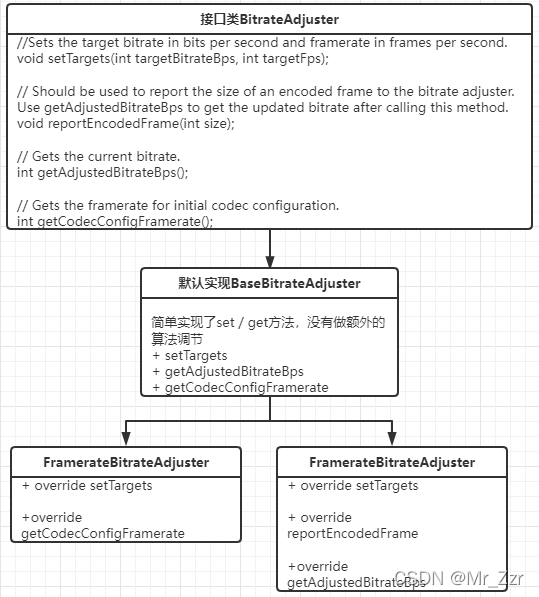

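The figure above shows the BitrateAdjuster inheritance hierarchy. The real work is done by FramerateBitrateAdjuster and DynamicBitrateAdjuster, which createBitrateAdjuster only selects for Exynos encoders; every other codec gets the pass-through BaseBitrateAdjuster.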
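Both subclasses rely on protected fields and a pass-through setTargets() inherited from BaseBitrateAdjuster, which is not listed in this article. The sketch below is an assumption of what the interface and base class look like, inferred from the calls HardwareVideoEncoder and the two subclasses make; compare it with the actual WebRTC source before relying on it.

```java
/** Methods HardwareVideoEncoder calls on its adjuster (sketch inferred from the calls shown above). */
interface BitrateAdjuster {
    void setTargets(int targetBitrateBps, int targetFps);
    void reportEncodedFrame(int size);
    int getAdjustedBitrateBps();
    int getCodecConfigFramerate();
}

/** Pass-through adjuster: hands the target bitrate and frame rate to the codec unchanged. */
class BaseBitrateAdjuster implements BitrateAdjuster {
    protected int targetBitrateBps;
    protected int targetFps;

    @Override
    public void setTargets(int targetBitrateBps, int targetFps) {
        this.targetBitrateBps = targetBitrateBps;
        this.targetFps = targetFps;
    }

    @Override
    public void reportEncodedFrame(int size) {
        // No feedback: the encoder is trusted to hit the target on its own.
    }

    @Override
    public int getAdjustedBitrateBps() {
        return targetBitrateBps;
    }

    @Override
    public int getCodecConfigFramerate() {
        return targetFps;
    }
}
```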

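    class FramerateBitrateAdjuster extends BaseBitrateAdjuster {
        private static final int INITIAL_FPS = 30;

        @Override
        public void setTargets(int targetBitrateBps, int targetFps) {
            if (this.targetFps == 0) {
                // Framerate-based bitrate adjustment always initializes to the same framerate.
                targetFps = INITIAL_FPS;
            }
            super.setTargets(targetBitrateBps, targetFps);
            // The codec is configured at INITIAL_FPS, so scale the bitrate by INITIAL_FPS / actual fps.
            this.targetBitrateBps = this.targetBitrateBps * INITIAL_FPS / this.targetFps;
        }

        @Override
        public int getCodecConfigFramerate() {
            return INITIAL_FPS;
        }
    }

FramerateBitrateAdjuster looks a little convoluted, but it simply pins the codec's configured frame rate at 30: getCodecConfigFramerate() always returns INITIAL_FPS, and on the very first setTargets() call the target frame rate is forced to 30 as well. Because such an encoder budgets bits per frame from the configured rate rather than from the real frame timing, the bitrate handed to it is rescaled by 30 / actual fps so that each real frame still gets its proper share. It is a simple proportional relationship, mostly suited to fixed-bandwidth scenarios.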
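A worked example of that rescaling, assuming a 1 Mbps target while frames actually arrive at 15 fps: the codec, configured at 30 fps, budgets bitrate / 30 per frame, so it has to be handed 1 Mbps × 30 / 15 = 2 Mbps for each real frame to get 1 Mbps / 15, about 8,333 bytes. FramerateScaling is a hypothetical standalone restatement of the setTargets() arithmetic, not WebRTC code; unlike the original it uses long arithmetic to avoid int overflow at very high bitrates.

```java
/** Standalone restatement of FramerateBitrateAdjuster's arithmetic (hypothetical helper, not WebRTC API). */
final class FramerateScaling {
    static final int INITIAL_FPS = 30; // frame rate the codec is always configured with

    /** Bitrate handed to the codec so per-frame bytes match the real frame rate. */
    static int codecBitrateBps(int targetBitrateBps, int actualFps) {
        return (int) ((long) targetBitrateBps * INITIAL_FPS / actualFps);
    }

    /** Bytes per frame the codec budgets when it believes it runs at INITIAL_FPS. */
    static double bytesPerFrame(int codecBitrateBps) {
        return codecBitrateBps / 8.0 / INITIAL_FPS;
    }

    public static void main(String[] args) {
        int targetBps = 1_000_000; // 1 Mbps target
        int actualFps = 15;        // frames really arrive at 15 fps
        int codecBps = codecBitrateBps(targetBps, actualFps);
        System.out.println(codecBps);                      // 2000000
        System.out.println(bytesPerFrame(codecBps));       // about 8333.3 bytes per frame
        System.out.println(targetBps / 8.0 / actualFps);   // the same per-frame budget
    }
}
```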

    class DynamicBitrateAdjuster extends BaseBitrateAdjuster {
        private static final double BITS_PER_BYTE = 8.0;
        // Change the bitrate at most once every three seconds.
        private static final double BITRATE_ADJUSTMENT_SEC = 3.0;
        // Maximum bitrate adjustment scale - no more than 4 times.
        private static final double BITRATE_ADJUSTMENT_MAX_SCALE = 4;
        // Amount of adjustment steps to reach maximum scale.
        private static final int BITRATE_ADJUSTMENT_STEPS = 20;

        // How far the codec has deviated above (or below) the target bitrate (tracked in bytes).
        private double deviationBytes;
        private double timeSinceLastAdjustmentMs;
        private int bitrateAdjustmentScaleExp;

        @Override
        public void setTargets(int targetBitrateBps, int targetFps) {
            if (this.targetBitrateBps > 0 && targetBitrateBps < this.targetBitrateBps) {
                // Rescale the accumulator level if the accumulator max decreases
                deviationBytes = deviationBytes * targetBitrateBps / this.targetBitrateBps;
            }
            super.setTargets(targetBitrateBps, targetFps);
        }

        @Override
        public void reportEncodedFrame(int size) {
            if (targetFps == 0) {
                return;
            }

            // Accumulate the difference between actual and expected frame sizes.
            double expectedBytesPerFrame = (targetBitrateBps / BITS_PER_BYTE) / targetFps;
            deviationBytes += (size - expectedBytesPerFrame);
            timeSinceLastAdjustmentMs += 1000.0 / targetFps;

            // Adjust the bitrate when the encoder accumulates one second's worth of data in excess or
            // shortfall of the target.
            double deviationThresholdBytes = targetBitrateBps / BITS_PER_BYTE;

            // Cap the deviation, i.e., don't let it grow beyond some level to avoid using too old data for
            // bitrate adjustment. This also prevents taking more than 3 "steps" in a given 3-second cycle.
            double deviationCap = BITRATE_ADJUSTMENT_SEC * deviationThresholdBytes;
            deviationBytes = Math.min(deviationBytes, deviationCap);
            deviationBytes = Math.max(deviationBytes, -deviationCap);

            // Do bitrate adjustment every 3 seconds if actual encoder bitrate deviates too much
            // from the target value.
            if (timeSinceLastAdjustmentMs <= 1000 * BITRATE_ADJUSTMENT_SEC) {
                return;
            }

            if (deviationBytes > deviationThresholdBytes) {
                // Encoder generates too high bitrate - need to reduce the scale.
                int bitrateAdjustmentInc = (int) (deviationBytes / deviationThresholdBytes + 0.5);
                bitrateAdjustmentScaleExp -= bitrateAdjustmentInc;
                // Don't let the adjustment scale drop below -BITRATE_ADJUSTMENT_STEPS.
                // This sets a minimum exponent of -1 (bitrateAdjustmentScaleExp / BITRATE_ADJUSTMENT_STEPS).
                bitrateAdjustmentScaleExp = Math.max(bitrateAdjustmentScaleExp, -BITRATE_ADJUSTMENT_STEPS);
                deviationBytes = deviationThresholdBytes;
            } else if (deviationBytes < -deviationThresholdBytes) {
                // Encoder generates too low bitrate - need to increase the scale.
                int bitrateAdjustmentInc = (int) (-deviationBytes / deviationThresholdBytes + 0.5);
                bitrateAdjustmentScaleExp += bitrateAdjustmentInc;
                // Don't let the adjustment scale exceed BITRATE_ADJUSTMENT_STEPS.
                // This sets a maximum exponent of 1 (bitrateAdjustmentScaleExp / BITRATE_ADJUSTMENT_STEPS).
                bitrateAdjustmentScaleExp = Math.min(bitrateAdjustmentScaleExp, BITRATE_ADJUSTMENT_STEPS);
                deviationBytes = -deviationThresholdBytes;
            }
            timeSinceLastAdjustmentMs = 0;
        }

        private double getBitrateAdjustmentScale() {
            // 4^(exp / 20): ranges from 0.25x (exp = -20) to 4x (exp = 20).
            return Math.pow(BITRATE_ADJUSTMENT_MAX_SCALE,
                    (double) bitrateAdjustmentScaleExp / BITRATE_ADJUSTMENT_STEPS);
        }

        @Override
        public int getAdjustedBitrateBps() {
            return (int) (targetBitrateBps * getBitrateAdjustmentScale());
        }
    }

Next is DynamicBitrateAdjuster. It overrides reportEncodedFrame(), whose argument is the actual size in bytes of each encoded output frame. Let's walk through the algorithm.

    // Expected bytes per frame: the target bitrate converted to bytes, divided by the frame rate.
    double expectedBytesPerFrame = (targetBitrateBps / BITS_PER_BYTE) / targetFps;
    // Accumulate the difference between actual and expected frame sizes.
    deviationBytes += (size - expectedBytesPerFrame);
    // Expected bytes per second (the target bitrate in bytes), also used as the adjustment threshold.
    double deviationThresholdBytes = targetBitrateBps / BITS_PER_BYTE;

    /**
     * Cap the deviation, i.e. don't let it grow beyond a certain level, so the bitrate is not
     * adjusted based on stale data. This also prevents taking more than 3 adjustment steps
     * within a single 3-second cycle.
     */
    // Expected bytes over 3 seconds.
    double deviationCap = BITRATE_ADJUSTMENT_SEC * deviationThresholdBytes;
    // Clamp the actual-vs-expected deviation to within +/- 3 seconds' worth of target bytes.
    deviationBytes = Math.min(deviationBytes, deviationCap);
    deviationBytes = Math.max(deviationBytes, -deviationCap);

    // Track elapsed time; the bitrate is adjusted at most once every 3 s.
    timeSinceLastAdjustmentMs += 1000.0 / targetFps;
    if (timeSinceLastAdjustmentMs <= 1000 * BITRATE_ADJUSTMENT_SEC) {
        return;
    }

    if (deviationBytes > deviationThresholdBytes) {
        // The encoder's bitrate is too high: reduce the scale.
        int bitrateAdjustmentInc = (int) (deviationBytes / deviationThresholdBytes + 0.5);
        bitrateAdjustmentScaleExp -= bitrateAdjustmentInc;
        // Don't let the exponent drop below -BITRATE_ADJUSTMENT_STEPS.
        // This keeps (bitrateAdjustmentScaleExp / BITRATE_ADJUSTMENT_STEPS) at -1 or above.
        bitrateAdjustmentScaleExp = Math.max(bitrateAdjustmentScaleExp, -BITRATE_ADJUSTMENT_STEPS);
        deviationBytes = deviationThresholdBytes;
    } else if (deviationBytes < -deviationThresholdBytes) {
        // The encoder's bitrate is too low: increase the scale.
        int bitrateAdjustmentInc = (int) (-deviationBytes / deviationThresholdBytes + 0.5);
        bitrateAdjustmentScaleExp += bitrateAdjustmentInc;
        // Don't let the exponent exceed BITRATE_ADJUSTMENT_STEPS.
        // This keeps (bitrateAdjustmentScaleExp / BITRATE_ADJUSTMENT_STEPS) at 1 or below.
        bitrateAdjustmentScaleExp = Math.min(bitrateAdjustmentScaleExp, BITRATE_ADJUSTMENT_STEPS);
        deviationBytes = -deviationThresholdBytes;
    }
    timeSinceLastAdjustmentMs = 0;

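The key logic computes bitrateAdjustmentScaleExp from the accumulated deviation, and getBitrateAdjustmentScale() turns it into a scale of 4^(bitrateAdjustmentScaleExp / 20). Because the exponent is clamped to [-20, 20], the bitrate handed to the codec stays between 0.25x and 4x the target. Where does DynamicBitrateAdjuster come into play? In the ever-changing network conditions of real clients, weak networks in particular: the target bitrate follows the estimated uplink bandwidth, and DynamicBitrateAdjuster keeps the encoder's actual output close to that target so the stream remains transmittable. Per createBitrateAdjuster, only Exynos VP8 encoders use it.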
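To see the feedback loop in action, the sketch below copies the reportEncodedFrame() logic into a standalone class (DynamicScaleSim is a hypothetical name, not WebRTC API). It drives the class with an imaginary encoder that ignores the adjusted bitrate and always emits either twice the per-frame budget or nothing at all. Roughly every 3 seconds the exponent moves by 3 steps, until it is clamped at -20 (scale 0.25) or +20 (scale 4).

```java
/** Standalone re-implementation of the DynamicBitrateAdjuster logic above (hypothetical class name). */
final class DynamicScaleSim {
    private static final double BITS_PER_BYTE = 8.0;
    private static final double BITRATE_ADJUSTMENT_SEC = 3.0;
    private static final double BITRATE_ADJUSTMENT_MAX_SCALE = 4;
    private static final int BITRATE_ADJUSTMENT_STEPS = 20;

    private final int targetBitrateBps;
    private final int targetFps;
    private double deviationBytes;
    private double timeSinceLastAdjustmentMs;
    int bitrateAdjustmentScaleExp; // package-private so the demo can print it

    DynamicScaleSim(int targetBitrateBps, int targetFps) {
        if (targetFps <= 0) {
            throw new IllegalArgumentException("targetFps must be positive");
        }
        this.targetBitrateBps = targetBitrateBps;
        this.targetFps = targetFps;
    }

    void reportEncodedFrame(int size) {
        double expectedBytesPerFrame = (targetBitrateBps / BITS_PER_BYTE) / targetFps;
        deviationBytes += size - expectedBytesPerFrame;
        timeSinceLastAdjustmentMs += 1000.0 / targetFps;
        double thresholdBytes = targetBitrateBps / BITS_PER_BYTE; // one second of target data
        double cap = BITRATE_ADJUSTMENT_SEC * thresholdBytes;       // three seconds of target data
        deviationBytes = Math.max(Math.min(deviationBytes, cap), -cap);
        if (timeSinceLastAdjustmentMs <= 1000 * BITRATE_ADJUSTMENT_SEC) {
            return; // adjust at most once per 3 s
        }
        if (deviationBytes > thresholdBytes) {
            int inc = (int) (deviationBytes / thresholdBytes + 0.5);
            bitrateAdjustmentScaleExp = Math.max(bitrateAdjustmentScaleExp - inc, -BITRATE_ADJUSTMENT_STEPS);
            deviationBytes = thresholdBytes;
        } else if (deviationBytes < -thresholdBytes) {
            int inc = (int) (-deviationBytes / thresholdBytes + 0.5);
            bitrateAdjustmentScaleExp = Math.min(bitrateAdjustmentScaleExp + inc, BITRATE_ADJUSTMENT_STEPS);
            deviationBytes = -thresholdBytes;
        }
        timeSinceLastAdjustmentMs = 0;
    }

    double scale() {
        return Math.pow(BITRATE_ADJUSTMENT_MAX_SCALE,
                (double) bitrateAdjustmentScaleExp / BITRATE_ADJUSTMENT_STEPS);
    }

    int adjustedBitrateBps() {
        return (int) (targetBitrateBps * scale());
    }

    public static void main(String[] args) {
        DynamicScaleSim sim = new DynamicScaleSim(1_000_000, 30);
        int oversizedFrame = (int) (2 * 1_000_000 / 8.0 / 30); // twice the per-frame budget
        for (int frame = 1; frame <= 30 * 30; frame++) {
            sim.reportEncodedFrame(oversizedFrame);
            if (frame % 90 == 0) { // print roughly every 3 seconds
                System.out.println("t=" + frame / 30 + "s exp=" + sim.bitrateAdjustmentScaleExp
                        + " codec bitrate=" + sim.adjustedBitrateBps());
            }
        }
    }
}
```

A real encoder would react to the lowered bitrate, so the deviation would shrink and the exponent would settle somewhere in between; the fixed-size frames here just make the stepping and clamping visible.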

III. Encode

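In the AppWebRTC demo we can see HardwareVideoEncoder's createOutputThread, which calls deliverEncodedImage in a loop. After dequeueOutputBuffer returns encoded data, deliverEncodedImage reports the frame size to the bitrateAdjuster and, whenever bitrateAdjuster.getAdjustedBitrateBps() differs from the current value, calls updateBitrate() to adjust the encoder bitrate in real time.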

    private Thread createOutputThread() {
        return new Thread() {
            @Override
            public void run() {
                // Drain encoded output until running is set to false.
                while (running) {
                    deliverEncodedImage();
                }
                releaseCodecOnOutputThread();
            }
        };
    }

    // Visible for testing.
    protected void deliverEncodedImage() {
        outputThreadChecker.checkIsOnValidThread();
        try {
            MediaCodec.BufferInfo info = new MediaCodec.BufferInfo();
            int index = codec.dequeueOutputBuffer(info, DEQUEUE_OUTPUT_BUFFER_TIMEOUT_US);
            if (index < 0) {
                if (index == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED) {
                    // Wait until nobody holds the old buffers, then refresh the array.
                    outputBuffersBusyCount.waitForZero();
                    outputBuffers = codec.getOutputBuffers();
                }
                return;
            }

            ByteBuffer codecOutputBuffer = outputBuffers[index];
            codecOutputBuffer.position(info.offset);
            codecOutputBuffer.limit(info.offset + info.size);

            if ((info.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
                // Codec config data is kept aside in configBuffer instead of being delivered as a frame.
                Logging.d(TAG, "Config frame generated. Offset: " + info.offset + ". Size: " + info.size);
                configBuffer = ByteBuffer.allocateDirect(info.size);
                configBuffer.put(codecOutputBuffer);
            } else {
                // Feed the real frame size back; push a new bitrate to the codec if it changed.
                bitrateAdjuster.reportEncodedFrame(info.size);
                if (adjustedBitrate != bitrateAdjuster.getAdjustedBitrateBps()) {
                    updateBitrate();
                }

                final boolean isKeyFrame = (info.flags & MediaCodec.BUFFER_FLAG_SYNC_FRAME) != 0;
                if (isKeyFrame) {
                    Logging.d(TAG, "Sync frame generated");
                }
                // ...
            }
        } catch (IllegalStateException e) {
            Logging.e(TAG, "deliverOutput failed", e);
        }
    }

There is no output without input, so let's look at the input side, encode():

    @Override
    public VideoCodecStatus encode(VideoFrame videoFrame, EncodeInfo encodeInfo) {
        encodeThreadChecker.checkIsOnValidThread();
        if (codec == null) {
            return VideoCodecStatus.UNINITIALIZED;
        }

        final VideoFrame.Buffer videoFrameBuffer = videoFrame.getBuffer();
        final boolean isTextureBuffer = videoFrameBuffer instanceof VideoFrame.TextureBuffer;

        // If input resolution changed, restart the codec with the new resolution.
        final int frameWidth = videoFrame.getBuffer().getWidth();
        final int frameHeight = videoFrame.getBuffer().getHeight();
        final boolean shouldUseSurfaceMode = canUseSurface() && isTextureBuffer;
        if (frameWidth != width || frameHeight != height || shouldUseSurfaceMode != useSurfaceMode) {
            VideoCodecStatus status = resetCodec(frameWidth, frameHeight, shouldUseSurfaceMode);
            if (status != VideoCodecStatus.OK) {
                return status;
            }
        }

        if (outputBuilders.size() > MAX_ENCODER_Q_SIZE) {
            // Too many frames in the encoder. Drop this frame.
            Logging.e(TAG, "Dropped frame, encoder queue full");
            return VideoCodecStatus.NO_OUTPUT; // See webrtc bug 2887.
        }

        boolean requestedKeyFrame = false;
        for (EncodedImage.FrameType frameType : encodeInfo.frameTypes) {
            if (frameType == EncodedImage.FrameType.VideoFrameKey) {
                requestedKeyFrame = true;
            }
        }

        if (requestedKeyFrame || shouldForceKeyFrame(videoFrame.getTimestampNs())) {
            requestKeyFrame(videoFrame.getTimestampNs());
        }

        // Number of bytes in the video buffer. Y channel is sampled at one byte per pixel; U and V are
        // subsampled at one byte per four pixels.
        int bufferSize = videoFrameBuffer.getHeight() * videoFrameBuffer.getWidth() * 3 / 2;
        EncodedImage.Builder builder = EncodedImage.builder()
                .setCaptureTimeNs(videoFrame.getTimestampNs())
                .setEncodedWidth(videoFrame.getBuffer().getWidth())
                .setEncodedHeight(videoFrame.getBuffer().getHeight())
                .setRotation(videoFrame.getRotation());
        outputBuilders.offer(builder);

        final VideoCodecStatus returnValue;
        if (useSurfaceMode) {
            returnValue = encodeTextureBuffer(videoFrame);
        } else {
            returnValue = encodeByteBuffer(videoFrame, videoFrameBuffer, bufferSize);
        }

        // Check whether queueing the frame into the codec succeeded.
        if (returnValue != VideoCodecStatus.OK) {
            // Keep the output builders in sync with buffers in the codec.
            outputBuilders.pollLast();
        }

        return returnValue;
    }

Here outputBuilders, a BlockingDeque<EncodedImage.Builder>, is the queue of output builders. It carries per-frame metadata such as the capture timestamp and rotation angle, information the codec itself does not need. Note also the two input methods, encodeTextureBuffer and encodeByteBuffer.
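Why pollLast() matters: the metadata deque only works if it holds exactly one entry per frame actually inside the codec, so that the oldest output buffer can be paired with the oldest builder. The toy model below assumes the encoder emits frames in input order (no B-frame reordering) and that the output side takes builders from the head of the deque, which is the part elided with `// ...` in deliverEncodedImage. All names are hypothetical: a Long timestamp stands in for EncodedImage.Builder, an ArrayDeque stands in for the codec, and MAX_ENCODER_Q_SIZE is given an arbitrary small value.

```java
import java.util.ArrayDeque;
import java.util.concurrent.BlockingDeque;
import java.util.concurrent.LinkedBlockingDeque;

/** Toy model of keeping per-frame metadata in sync with an in-order encoder (all names hypothetical). */
final class BuilderSyncModel {
    static final int MAX_ENCODER_Q_SIZE = 2; // arbitrary small limit for the demo
    // Stands in for BlockingDeque<EncodedImage.Builder>; each entry is a capture timestamp.
    final BlockingDeque<Long> outputBuilders = new LinkedBlockingDeque<>();
    // Stands in for the frames currently queued inside the encoder.
    final ArrayDeque<Long> codecQueue = new ArrayDeque<>();

    /** Input side, mirroring encode(): drop when full, offer metadata, roll back if queueing fails. */
    boolean encode(long captureTimeNs, boolean codecAccepts) {
        if (outputBuilders.size() > MAX_ENCODER_Q_SIZE) {
            return false; // queue full: frame dropped before reaching the codec
        }
        outputBuilders.offer(captureTimeNs);
        if (!codecAccepts) {
            outputBuilders.pollLast(); // undo, so builders match the frames really in the codec
            return false;
        }
        codecQueue.add(captureTimeNs);
        return true;
    }

    /** Output side: the oldest encoded frame is paired with the oldest builder. */
    Long deliverOne() {
        Long encoded = codecQueue.poll();
        if (encoded == null) {
            return null; // nothing encoded yet
        }
        Long metadata = outputBuilders.poll();
        if (!encoded.equals(metadata)) {
            throw new IllegalStateException("metadata out of sync");
        }
        return metadata;
    }
}
```

Without the pollLast() rollback, a rejected frame would leave a stale builder at the tail, and every later output would be paired with the wrong timestamp.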

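encodeTextureBuffer follows the same pattern introduced in the previous installment, Android-RTC-7, so it is not expanded here. encodeByteBuffer is fairly simple as well; the part worth attention is fillInputBuffer, which, depending on the current yuvColorFormat, calls into libyuv to convert the frame to the codec's color format and then fills the MediaCodec input buffer.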
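To make the conversion step concrete, here is what it amounts to when, for example, yuvColorFormat is a semi-planar (NV12) format while the frame arrives as I420: the Y plane is copied unchanged and the separate U and V planes are interleaved. It also shows where the `width * height * 3 / 2` buffer size in encode() comes from. libyuv does this in optimized native code; this plain-Java version (I420ToNv12 is a hypothetical name) assumes even dimensions and tightly packed planes without stride padding, and is only an illustration.

```java
/** Illustrative I420 -> NV12 repacking (hypothetical helper; even dimensions, packed planes). */
final class I420ToNv12 {
    static byte[] convert(byte[] y, byte[] u, byte[] v, int width, int height) {
        int ySize = width * height;
        int chromaSize = (width / 2) * (height / 2); // U and V are each subsampled 2x2
        if (y.length != ySize || u.length != chromaSize || v.length != chromaSize) {
            throw new IllegalArgumentException("plane sizes do not match " + width + "x" + height);
        }
        byte[] nv12 = new byte[ySize + 2 * chromaSize]; // equals width * height * 3 / 2
        System.arraycopy(y, 0, nv12, 0, ySize);          // Y plane is copied unchanged
        for (int i = 0; i < chromaSize; i++) {
            nv12[ySize + 2 * i] = u[i];     // NV12 stores U first...
            nv12[ySize + 2 * i + 1] = v[i]; // ...then V, interleaved sample by sample
        }
        return nv12;
    }
}
```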

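The next installment analyzes a key part of WebRTC: PeerConnection. I put this post together during the New Year holiday, which at least keeps my promise to myself to keep learning and to publish original work every month. In the new Year of the Tiger I plan to keep the Android-RTC series going, and also to start a hands-on column on HDR on Android. I hope it helps.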