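Background

While implementing live streaming in our app with OWT (Open WebRTC Toolkit), we found that as soon as a user joins an existing room and subscribes to a stream in it, the app requests microphone permission. This is unfriendly to users who only want to watch and have no intention of speaking. What we want is for microphone permission to be requested only when the user goes on mic, and at no other time.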

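Cause

Reading the source shows that in the official WebRTC SDK, once an AudioTrack is added to an RTCPeerConnection, WebRTC tries to initialize both audio input and audio output.

Once the audio channel is established, WebRTC handles capture, transmission, and playback automatically.

RTCAudioSession provides a useManualAudio property. When it is set to true, audio input and output are switched on and off by the isAudioEnabled property.

However, isAudioEnabled only controls input and output together; the two cannot be controlled separately.

Our product needs to be able to turn the microphone off. When the app only subscribes to a stream, no microphone is needed. Microphone permission should be requested only when the app publishes a stream (co-hosting and similar features) and the microphone is actually required.

According to replies from the WebRTC team, WebRTC is designed specifically for full-duplex VoIP calls, so the microphone is always initialized, and no API is provided to change this.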

Solution

There is currently no official API for this, and the relevant low-level code is not implemented:

// sdk/objc/native/src/audio/audio_device_ios.mm

int32_t AudioDeviceIOS::SetMicrophoneMute(bool enable) {

RTC_NOTREACHED() << "Not implemented";

return -1;

}

Analyzing the source, the Audio Unit usage can be found in VoiceProcessingAudioUnit.

When the OnDeliverRecordedData callback receives audio data, it notifies AudioDeviceIOS through VoiceProcessingAudioUnitObserver.

// sdk/objc/native/src/audio/voice_processing_audio_unit.mm

OSStatus VoiceProcessingAudioUnit::OnDeliverRecordedData(

void* in_ref_con,

AudioUnitRenderActionFlags* flags,

const AudioTimeStamp* time_stamp,

UInt32 bus_number,

UInt32 num_frames,

AudioBufferList* io_data) {

VoiceProcessingAudioUnit* audio_unit =

static_cast<VoiceProcessingAudioUnit*>(in_ref_con);

return audio_unit->NotifyDeliverRecordedData(flags, time_stamp, bus_number,

num_frames, io_data);

}

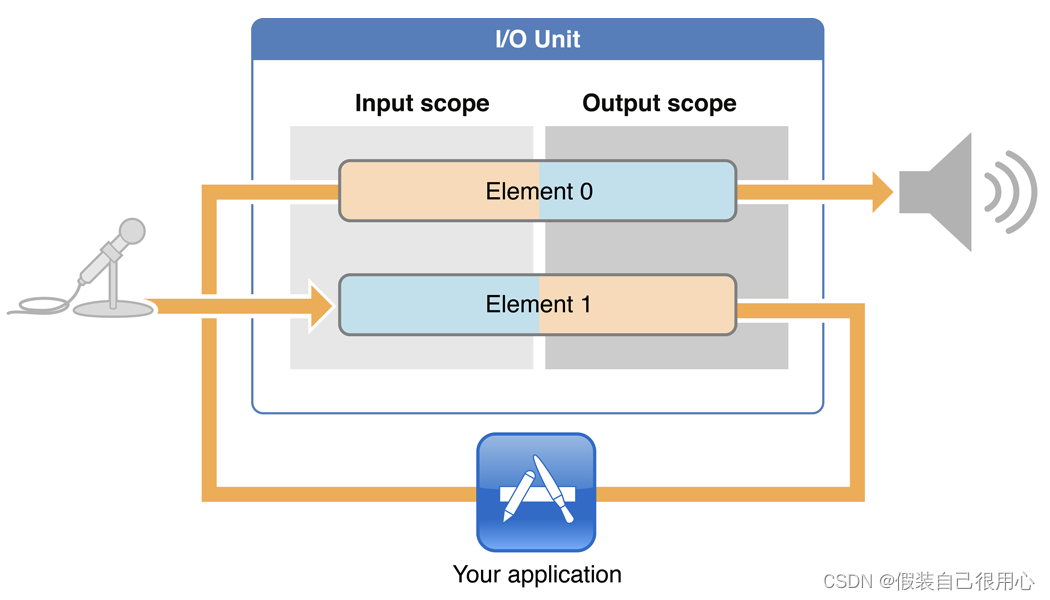

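Characteristics of the I/O unit

The I/O unit in the figure above has two elements, but they are independent. For example, you can use the enable I/O property (kAudioOutputUnitProperty_EnableIO) to enable or disable each element independently, as your app requires. Each element has an input scope and an output scope.

Element 1 of the I/O unit connects to the audio input hardware, represented by the microphone in the figure above. Developers can only access and control its output scope.

Element 0 of the I/O unit connects to the audio output hardware, represented by the speaker in the figure above. Developers can only access and control its input scope.

The input element is element 1 (the letter "I" in "Input" resembles a 1).

The output element is element 0 (the letter "O" in "Output" resembles a 0).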
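The independence of the two elements can be pictured with a small model. This is only a sketch: IoUnitModel and ConfigureUnit are hypothetical names invented for illustration, the class tracks enable flags and never calls into Core Audio, and starting with both elements disabled is an assumption of the model rather than a statement about the real unit.

```cpp
#include <array>
#include <cstdint>

// Bus numbering described above: element 1 is input, element 0 is output.
constexpr std::uint32_t kOutputBus = 0;
constexpr std::uint32_t kInputBus = 1;

// Hypothetical stand-in for an I/O unit. It only tracks the enable flag of
// each element and does not touch real audio hardware.
class IoUnitModel {
 public:
  // Mirrors the idea behind kAudioOutputUnitProperty_EnableIO: each element
  // is switched on or off without affecting the other.
  void SetEnabled(std::uint32_t bus, bool enabled) {
    enabled_.at(bus) = enabled;
  }
  bool IsEnabled(std::uint32_t bus) const { return enabled_.at(bus); }

 private:
  // Index 0 = output element (speaker), index 1 = input element (microphone).
  // Both start disabled; this default is an assumption of the model.
  std::array<bool, 2> enabled_{{false, false}};
};

// Configures the model for a listen-only or a talk-and-listen session.
inline IoUnitModel ConfigureUnit(bool microphone_needed) {
  IoUnitModel unit;
  unit.SetEnabled(kOutputBus, true);  // Playback is wanted in both cases.
  unit.SetEnabled(kInputBus, microphone_needed);
  return unit;
}
```

A viewer would correspond to ConfigureUnit(false), where only the output element is enabled, and a user on mic to ConfigureUnit(true).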

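Analysis of the Audio Unit shows that turning the microphone off is actually simple: just leave the input disabled when configuring the audio unit during initialization. The code below adds an isMicrophoneMute variable, which is set in RTCAudioSessionConfiguration.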

Code example:

// sdk/objc/native/src/audio/voice_processing_audio_unit.mm

bool VoiceProcessingAudioUnit::Init() {

RTC_DCHECK_EQ(state_, kInitRequired);

// Create an audio component description to identify the Voice Processing

// I/O audio unit.

AudioComponentDescription vpio_unit_description;

vpio_unit_description.componentType = kAudioUnitType_Output;

vpio_unit_description.componentSubType = kAudioUnitSubType_VoiceProcessingIO;

vpio_unit_description.componentManufacturer = kAudioUnitManufacturer_Apple;

vpio_unit_description.componentFlags = 0;

vpio_unit_description.componentFlagsMask = 0;

// Obtain an audio unit instance given the description.

AudioComponent found_vpio_unit_ref =

AudioComponentFindNext(nullptr, &vpio_unit_description);

// Create a Voice Processing IO audio unit.

OSStatus result = noErr;

result = AudioComponentInstanceNew(found_vpio_unit_ref, &vpio_unit_);

if (result != noErr) {

vpio_unit_ = nullptr;

RTCLogError(@"AudioComponentInstanceNew failed. Error=%ld.", (long)result);

return false;

}

// Enable input on the input scope of the input element.

RTCAudioSessionConfiguration* webRTCConfiguration = [RTCAudioSessionConfiguration webRTCConfiguration];

if (webRTCConfiguration.isMicrophoneMute)

{

RTCLog(@"Enable input on the input scope of the input element.");

UInt32 enable_input = 1;

result = AudioUnitSetProperty(vpio_unit_, kAudioOutputUnitProperty_EnableIO,

kAudioUnitScope_Input, kInputBus, &enable_input,

sizeof(enable_input));

if (result != noErr) {

DisposeAudioUnit();

RTCLogError(@"Failed to enable input on input scope of input element. "

"Error=%ld.",

(long)result);

return false;

}

}

else {

RTCLog(@"NOT enabling input on the input scope of the input element.");

}

// Enable output on the output scope of the output element.

UInt32 enable_output = 1;

result = AudioUnitSetProperty(vpio_unit_, kAudioOutputUnitProperty_EnableIO,

kAudioUnitScope_Output, kOutputBus,

&enable_output, sizeof(enable_output));

if (result != noErr) {

DisposeAudioUnit();

RTCLogError(@"Failed to enable output on output scope of output element. "

"Error=%ld.",

(long)result);

return false;

}

// Specify the callback function that provides audio samples to the audio

// unit.

AURenderCallbackStruct render_callback;

render_callback.inputProc = OnGetPlayoutData;

render_callback.inputProcRefCon = this;

result = AudioUnitSetProperty(

vpio_unit_, kAudioUnitProperty_SetRenderCallback, kAudioUnitScope_Input,

kOutputBus, &render_callback, sizeof(render_callback));

if (result != noErr) {

DisposeAudioUnit();

RTCLogError(@"Failed to specify the render callback on the output bus. "

"Error=%ld.",

(long)result);

return false;

}

// Disable AU buffer allocation for the recorder, we allocate our own.

// TODO(henrika): not sure that it actually saves resource to make this call.

if (webRTCConfiguration.isMicrophoneMute)

{

RTCLog(@"Disable AU buffer allocation for the recorder, we allocate our own.");

UInt32 flag = 0;

result = AudioUnitSetProperty(

vpio_unit_, kAudioUnitProperty_ShouldAllocateBuffer,

kAudioUnitScope_Output, kInputBus, &flag, sizeof(flag));

if (result != noErr) {

DisposeAudioUnit();

RTCLogError(@"Failed to disable buffer allocation on the input bus. "

"Error=%ld.",

(long)result);

return false;

}

}

else {

RTCLog(@"NOT disabling AU buffer allocation for the recorder.");

}

// Specify the callback to be called by the I/O thread to us when input audio

// is available. The recorded samples can then be obtained by calling the

// AudioUnitRender() method.

if (webRTCConfiguration.isMicrophoneMute)

{

RTCLog(@"Specify the callback to be called by the I/O thread when input audio is available.");

AURenderCallbackStruct input_callback;

input_callback.inputProc = OnDeliverRecordedData;

input_callback.inputProcRefCon = this;

result = AudioUnitSetProperty(vpio_unit_,

kAudioOutputUnitProperty_SetInputCallback,

kAudioUnitScope_Global, kInputBus,

&input_callback, sizeof(input_callback));

if (result != noErr) {

DisposeAudioUnit();

RTCLogError(@"Failed to specify the input callback on the input bus. "

"Error=%ld.",

(long)result);

return false;

}

}

else {

RTCLog(@"NOT specifying the input callback on the input bus.");

}

state_ = kUninitialized;

return true;

}

// sdk/objc/native/src/audio/voice_processing_audio_unit.mm

bool VoiceProcessingAudioUnit::Initialize(Float64 sample_rate) {

RTC_DCHECK_GE(state_, kUninitialized);

RTCLog(@"Initializing audio unit with sample rate: %f", sample_rate);

OSStatus result = noErr;

AudioStreamBasicDescription format = GetFormat(sample_rate);

UInt32 size = sizeof(format);

#if !defined(NDEBUG)

LogStreamDescription(format);

#endif

RTCAudioSessionConfiguration* webRTCConfiguration = [RTCAudioSessionConfiguration webRTCConfiguration];

if (webRTCConfiguration.isMicrophoneMute)

{

RTCLog(@"Setting the format on the output scope of the input element/bus because the microphone is enabled.");

// Set the format on the output scope of the input element/bus.

result =

AudioUnitSetProperty(vpio_unit_, kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Output, kInputBus, &format, size);

if (result != noErr) {

RTCLogError(@"Failed to set format on output scope of input bus. "

"Error=%ld.",

(long)result);

return false;

}

}

else {

RTCLog(@"NOT setting the format on the output scope of the input element/bus because the microphone is disabled.");

}

// Set the format on the input scope of the output element/bus.

result =

AudioUnitSetProperty(vpio_unit_, kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Input, kOutputBus, &format, size);

if (result != noErr) {

RTCLogError(@"Failed to set format on input scope of output bus. "

"Error=%ld.",

(long)result);

return false;

}

// Initialize the Voice Processing I/O unit instance.

// Calls to AudioUnitInitialize() can fail if called back-to-back on

// different ADM instances. The error message in this case is -66635 which is

// undocumented. Tests have shown that calling AudioUnitInitialize a second

// time, after a short sleep, avoids this issue.

// See webrtc:5166 for details.

int failed_initalize_attempts = 0;

result = AudioUnitInitialize(vpio_unit_);

while (result != noErr) {

RTCLogError(@"Failed to initialize the Voice Processing I/O unit. "

"Error=%ld.",

(long)result);

++failed_initalize_attempts;

if (failed_initalize_attempts == kMaxNumberOfAudioUnitInitializeAttempts) {

// Max number of initialization attempts exceeded, hence abort.

RTCLogError(@"Too many initialization attempts.");

return false;

}

RTCLog(@"Pause 100ms and try audio unit initialization again...");

[NSThread sleepForTimeInterval:0.1f];

result = AudioUnitInitialize(vpio_unit_);

}

if (result == noErr) {

RTCLog(@"Voice Processing I/O unit is now initialized.");

}

// AGC should be enabled by default for Voice Processing I/O units but it is

// checked below and enabled explicitly if needed. This scheme is used

// to be absolutely sure that the AGC is enabled since we have seen cases

// where only zeros are recorded and a disabled AGC could be one of the

// reasons why it happens.

int agc_was_enabled_by_default = 0;

UInt32 agc_is_enabled = 0;

result = GetAGCState(vpio_unit_, &agc_is_enabled);

if (result != noErr) {

RTCLogError(@"Failed to get AGC state (1st attempt). "

"Error=%ld.",

(long)result);

// Example of error code: kAudioUnitErr_NoConnection (-10876).

// All error codes related to audio units are negative and are therefore

// converted into a positive value to match the UMA APIs.

RTC_HISTOGRAM_COUNTS_SPARSE_100000(

"WebRTC.Audio.GetAGCStateErrorCode1", (-1) * result);

} else if (agc_is_enabled) {

// Remember that the AGC was enabled by default. Will be used in UMA.

agc_was_enabled_by_default = 1;

} else {

// AGC was initially disabled => try to enable it explicitly.

UInt32 enable_agc = 1;

result =

AudioUnitSetProperty(vpio_unit_,

kAUVoiceIOProperty_VoiceProcessingEnableAGC,

kAudioUnitScope_Global, kInputBus, &enable_agc,

sizeof(enable_agc));

if (result != noErr) {

RTCLogError(@"Failed to enable the built-in AGC. "

"Error=%ld.",

(long)result);

RTC_HISTOGRAM_COUNTS_SPARSE_100000(

"WebRTC.Audio.SetAGCStateErrorCode", (-1) * result);

}

result = GetAGCState(vpio_unit_, &agc_is_enabled);

if (result != noErr) {

RTCLogError(@"Failed to get AGC state (2nd attempt). "

"Error=%ld.",

(long)result);

RTC_HISTOGRAM_COUNTS_SPARSE_100000(

"WebRTC.Audio.GetAGCStateErrorCode2", (-1) * result);

}

}

// Track if the built-in AGC was enabled by default (as it should) or not.

RTC_HISTOGRAM_BOOLEAN("WebRTC.Audio.BuiltInAGCWasEnabledByDefault",

agc_was_enabled_by_default);

RTCLog(@"WebRTC.Audio.BuiltInAGCWasEnabledByDefault: %d",

agc_was_enabled_by_default);

// As a final step, add an UMA histogram for tracking the AGC state.

// At this stage, the AGC should be enabled, and if it is not, more work is

// needed to find out the root cause.

RTC_HISTOGRAM_BOOLEAN("WebRTC.Audio.BuiltInAGCIsEnabled", agc_is_enabled);

RTCLog(@"WebRTC.Audio.BuiltInAGCIsEnabled: %u",

static_cast<unsigned int>(agc_is_enabled));

state_ = kInitialized;

return true;

}

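The code above uses the isMicrophoneMute variable to decide whether to enable input. Note that, despite its name, the input path is set up when isMicrophoneMute is true and skipped when it is false.

With the code above, we can choose at initialization time whether the microphone is required. That is still far from enough for going on and off mic dynamically.

What we need is a way to reinitialize the Audio Unit at any time. The source suggests we can get this by adding another property, isMicrophoneMute, to RTCAudioSession.

Like the existing isAudioEnabled property, this one is exposed through RTCAudioSession. By following how isAudioEnabled is implemented, we can reach our goal easily.

Implement the isMicrophoneMute property in RTCAudioSession.

Code example: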

// sdk/objc/components/audio/RTCAudioSession.mm

- (void)setIsMicrophoneMute:(BOOL)isMicrophoneMute {

@synchronized(self) {

if (_isMicrophoneMute == isMicrophoneMute) {

return;

}

_isMicrophoneMute = isMicrophoneMute;

}

[self notifyDidChangeMicrophoneMute];

}

- (BOOL)isMicrophoneMute {

@synchronized(self) {

return _isMicrophoneMute;

}

}

- (void)notifyDidChangeMicrophoneMute {

for (auto delegate : self.delegates) {

SEL sel = @selector(audioSession:didChangeMicrophoneMute:);

if ([delegate respondsToSelector:sel]) {

[delegate audioSession:self didChangeMicrophoneMute:self.isMicrophoneMute];

}

}

}
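The setter above follows a common pattern: compare and store under a lock, then notify observers only when the value really changed. Below is a minimal, platform-independent sketch of that pattern. MuteStateModel, SetMicrophoneFlag, and AddObserver are hypothetical names used for illustration and are not part of WebRTC. Running the callbacks on a copy of the observer list, outside the lock, is a design choice of this sketch.

```cpp
#include <functional>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical session object; the names are illustrative only.
class MuteStateModel {
 public:
  using Observer = std::function<void(bool)>;

  void AddObserver(Observer observer) {
    std::lock_guard<std::mutex> lock(mutex_);
    observers_.push_back(std::move(observer));
  }

  // Stores the new value under the lock and notifies observers only when
  // the value actually changed.
  void SetMicrophoneFlag(bool value) {
    std::vector<Observer> observers;
    {
      std::lock_guard<std::mutex> lock(mutex_);
      if (flag_ == value) {
        return;  // No change, so no notification.
      }
      flag_ = value;
      observers = observers_;  // Copy so callbacks run outside the lock.
    }
    for (const Observer& observer : observers) {
      observer(value);
    }
  }

  bool microphone_flag() const {
    std::lock_guard<std::mutex> lock(mutex_);
    return flag_;
  }

 private:
  mutable std::mutex mutex_;
  bool flag_ = false;
  std::vector<Observer> observers_;
};
```

Setting the same value twice in a row triggers only one callback, which keeps the audio device from being reconfigured needlessly.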

setIsMicrophoneMute passes the message on to AudioDeviceIOS through RTCNativeAudioSessionDelegateAdapter.

Code example:

// sdk/objc/components/audio/RTCNativeAudioSessionDelegateAdapter.mm

- (void)audioSession:(RTCAudioSession *)session

didChangeMicrophoneMute:(BOOL)isMicrophoneMute {

_observer->OnMicrophoneMuteChange(isMicrophoneMute);

}

The actual logic is implemented in AudioDeviceIOS. AudioDeviceIOS::OnMicrophoneMuteChange posts the message to the thread for handling.

Code example:

// sdk/objc/native/src/audio/audio_device_ios.mm

void AudioDeviceIOS::OnMicrophoneMuteChange(bool is_microphone_mute) {

RTC_DCHECK(thread_);

thread_->Post(RTC_FROM_HERE,

this,

kMessageTypeMicrophoneMuteChange,

new rtc::TypedMessageData<bool>(is_microphone_mute));

}

void AudioDeviceIOS::OnMessage(rtc::Message* msg) {

switch (msg->message_id) {

// ...

case kMessageTypeMicrophoneMuteChange: {

rtc::TypedMessageData<bool>* data = static_cast<rtc::TypedMessageData<bool>*>(msg->pdata);

HandleMicrophoneMuteChange(data->data());

delete data;

break;

}

}

}

void AudioDeviceIOS::HandleMicrophoneMuteChange(bool is_microphone_mute) {

RTC_DCHECK_RUN_ON(&thread_checker_);

RTCLog(@"Handling MicrophoneMute change to %d", is_microphone_mute);

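// Despite the name, true brings the microphone up (recording starts) and

// false tears it down (playout only).

if (is_microphone_mute) {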

StopPlayout();

InitRecording();

StartRecording();

StartPlayout();

} else {

StopRecording();

StopPlayout();

InitPlayout();

StartPlayout();

}

}
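To make the two branches easier to follow, here is a small model that replays the same call order and records it. AudioDeviceModel and HandleMicrophoneFlagChange are hypothetical names: the class only flips two flags and logs method names, and it does not reproduce the internal state checks of the real AudioDeviceIOS. Playout is stopped and restarted in both branches, presumably so the audio unit can be rebuilt with or without its input element.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for the audio device. It records the call order and
// tracks two flags instead of touching real audio hardware.
class AudioDeviceModel {
 public:
  void InitPlayout() { Log("InitPlayout"); }
  void InitRecording() { Log("InitRecording"); }
  void StartPlayout() { playing_ = true; Log("StartPlayout"); }
  void StopPlayout() { playing_ = false; Log("StopPlayout"); }
  void StartRecording() { recording_ = true; Log("StartRecording"); }
  void StopRecording() { recording_ = false; Log("StopRecording"); }

  // Same branch order as HandleMicrophoneMuteChange above: true brings the
  // microphone up, false tears it down and restarts playout only.
  void HandleMicrophoneFlagChange(bool microphone_on) {
    if (microphone_on) {
      StopPlayout();
      InitRecording();
      StartRecording();
      StartPlayout();
    } else {
      StopRecording();
      StopPlayout();
      InitPlayout();
      StartPlayout();
    }
  }

  bool playing() const { return playing_; }
  bool recording() const { return recording_; }
  const std::vector<std::string>& calls() const { return calls_; }

 private:
  void Log(const std::string& name) { calls_.push_back(name); }

  bool playing_ = false;
  bool recording_ = false;
  std::vector<std::string> calls_;
};
```

After a change to true the model is both playing and recording; after a change back to false it is playing only.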

With that, microphone muting is complete.