I. Jsoup

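jsoup is a Java HTML parser, used mainly to parse HTML.

It only sees the HTML the server returns, so the page content must already be in that HTML. Content that JavaScript renders after the page loads cannot be retrieved with jsoup.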

- Basic page scraping

```java
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

import java.net.URL;
import java.util.ArrayList;
import java.util.List;

// Both methods belong to ParseService; Parse (not shown) is a simple POJO with an img property.

/**
 * Test the scraping result.
 */
public static void main(String[] args) throws Exception {
    new ParseService().parseTo945("https://www.945kmzh.com/%e6%9c%80%e6%96%b0")
            .forEach(System.out::println);
}

public List<Parse> parseTo945(String url) throws Exception {
    // Download and parse the page (Jsoup returns a Document, i.e. the page object); 30 s timeout
    Document document = Jsoup.parse(new URL(url), 30000);
    // Every thumbnail container on the listing page
    Elements elements = document.getElementsByClass("post-item-thumbnail");

    List<Parse> goodsList = new ArrayList<>();
    for (Element el : elements) {
        // src attribute of the first <img> inside the container
        String img = el.getElementsByTag("img").eq(0).attr("src");
        Parse content = new Parse();
        content.setImg(img);
        goodsList.add(content);
    }
    return goodsList;
}
```
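The listing URL above is percent-encoded UTF-8: `%e6%9c%80%e6%96%b0` is the path segment 最新 ("latest"). A minimal JDK-only sketch for converting between the readable and encoded forms; `PathSegments` is a hypothetical helper, and it assumes you are encoding a single path segment (not a whole URL).

```java
import java.net.URLDecoder;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: converts one URL path segment between readable and percent-encoded form.
class PathSegments {

    // Percent-encode a segment as UTF-8. URLEncoder does HTML form encoding and turns
    // spaces into '+', so those are swapped for "%20", which is what a path expects.
    // A literal '+' in the input is encoded as "%2B", so the replace only affects spaces.
    static String encode(String segment) {
        return URLEncoder.encode(segment, StandardCharsets.UTF_8).replace("+", "%20");
    }

    // Decode a percent-encoded segment; upper- and lower-case hex digits both work.
    // Note that URLDecoder also turns a raw '+' into a space.
    static String decode(String encoded) {
        return URLDecoder.decode(encoded, StandardCharsets.UTF_8);
    }
}
```

`PathSegments.encode("最新")` returns `%E6%9C%80%E6%96%B0`; upper- and lower-case hex digits are equivalent in percent-encoding, so it addresses the same page as the URL in the code.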

- Scraping a Vue page

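```java
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;

import java.net.URL;
import java.util.ArrayList;
import java.util.List;

public static void main(String[] args) throws Exception {
    new ParseService().parseJsoupJueJin("https://e.juejin.cn/#/");
}

public List<Parse> parseJsoupJueJin(String url) throws Exception {
    // Download and parse the page (Jsoup returns a Document, i.e. the page object).
    // The "#/" fragment is never sent to the server; Vue routes on it in the browser.
    Document document = Jsoup.parse(new URL(url), 30000);
    // For a client-side-rendered (Vue) page this prints only the HTML shell the server sends;
    // the content JavaScript would render afterwards is missing
    System.out.println(document);

    List<Parse> goodsList = new ArrayList<>();
    return goodsList;
}
```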

II. HtmlUnit

For pages that need JavaScript rendering, we can use HtmlUnit instead.

- Dependency

```xml
<!-- HtmlUnit: fetch JS-rendered pages -->
<dependency>
    <groupId>net.sourceforge.htmlunit</groupId>
    <artifactId>htmlunit</artifactId>
    <version>2.43.0</version>
</dependency>
```

```java
import com.gargoylesoftware.htmlunit.BrowserVersion;
import com.gargoylesoftware.htmlunit.NicelyResynchronizingAjaxController;
import com.gargoylesoftware.htmlunit.WebClient;
import com.gargoylesoftware.htmlunit.html.HtmlPage;

import java.util.ArrayList;
import java.util.List;

public List<Parse> parseToNewQQ(String url) throws Exception {
    // try-with-resources closes the client only after the page has been read
    try (WebClient webClient = new WebClient(BrowserVersion.CHROME)) {
        webClient.getOptions().setActiveXNative(false);                      // don't enable ActiveX
        webClient.getOptions().setCssEnabled(false);                         // nothing is displayed, so CSS isn't needed
        webClient.getOptions().setUseInsecureSSL(true);                      // accept any host, valid certificate or not
        webClient.getOptions().setJavaScriptEnabled(true);                   // important: run the page's JS
        webClient.getOptions().setDownloadImages(false);                     // don't download images
        webClient.getOptions().setThrowExceptionOnScriptError(false);        // don't throw when page JS fails
        webClient.getOptions().setThrowExceptionOnFailingStatusCode(false);  // don't throw on non-200 status
        webClient.getOptions().setTimeout(15 * 1000);                        // 15 s timeout
        webClient.getOptions().setConnectionTimeToLive(15 * 1000);
        webClient.setAjaxController(new NicelyResynchronizingAjaxController()); // important: AJAX support

        HtmlPage page = webClient.getPage(url);
        // Async JS takes time: block for up to 30 s while background JS finishes
        webClient.waitForBackgroundJavaScript(30 * 1000);

        String pageXml = page.getBody().asXml();
        System.out.println(pageXml);
    }

    List<Parse> goodsList = new ArrayList<>();
    return goodsList;
}
```
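The HtmlUnit example waits a fixed amount of time for background JavaScript. A common alternative is to poll for the content you need and stop as soon as it appears or a deadline passes. The JDK-only sketch below shows that pattern; `Poll.waitUntil` is a hypothetical helper, and with HtmlUnit the condition might check something like `page.asXml().contains(...)` for a marker you expect on the rendered page (an assumption you would adapt to the target site).

```java
import java.util.function.BooleanSupplier;

// Hypothetical helper: poll a condition until it holds or a timeout expires.
class Poll {

    /**
     * Checks the condition immediately, then every intervalMs milliseconds.
     * Returns true as soon as it holds, false once timeoutMs has elapsed
     * (or if the waiting thread is interrupted).
     */
    static boolean waitUntil(BooleanSupplier condition, long timeoutMs, long intervalMs) {
        long deadline = System.nanoTime() + timeoutMs * 1_000_000L;
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            long remainingMs = (deadline - System.nanoTime()) / 1_000_000L;
            if (remainingMs <= 0) {
                return false;
            }
            try {
                // Sleep one interval, but never past the deadline
                Thread.sleep(Math.min(intervalMs, remainingMs));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // keep the interrupt flag for the caller
                return false;
            }
        }
    }
}
```

The trade-off: a fixed wait always costs the full duration, while polling returns early on fast pages but needs a reliable "content is ready" signal.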

III. WebMagic

`WebMagic` can crawl in depth: it discovers follow-up URLs on each page and fetches data from them.

```java
import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.processor.PageProcessor;

import java.util.List;

public class Demo implements PageProcessor {

    // The one link we want to follow from the start page
    private static final String TARGET =
            "https://algo.itcharge.cn/01.%E6%95%B0%E7%BB%84/01.%E6%95%B0%E7%BB%84%E5%9F%BA%E7%A1%80%E7%9F%A5%E8%AF%86/01.%E6%95%B0%E7%BB%84%E5%9F%BA%E7%A1%80%E7%9F%A5%E8%AF%86/";

    // Retry a failed download 3 times; wait 1 s between requests
    private final Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

    @Override
    public Site getSite() {
        return site;
    }

    @Override
    public void process(Page page) {
        // All links found on the current page
        List<String> urls = page.getHtml().links().all();
        for (String url : urls) {
            if (url.equals(TARGET)) {
                System.out.println(url);
                // Queue the link; WebMagic downloads and processes it later
                page.addTargetRequest(url);
                System.out.println(page.getHtml());
            }
        }
    }

    public static void main(String[] args) {
        Spider.create(new Demo()).addUrl("https://algo.itcharge.cn/")
                // Print to the console
                // .addPipeline(new ConsolePipeline())
                // Save to a database
                // .addPipeline(new MysqlPipelineBiQuGe())
                // Crawl with 5 threads
                .thread(5)
                // Start the spider
                .run();
    }
}
```
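WebMagic manages the crawl queue for you: URLs queued with `addTargetRequests` go to a scheduler that drops duplicates before they are downloaded. The core idea is a breadth-first traversal with a "seen" set. The JDK-only sketch below runs it over an in-memory link graph, a hypothetical stand-in for downloading pages and extracting their links, and adds a depth limit of its own.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch: breadth-first crawl order with duplicate removal and a depth limit.
class CrawlOrder {

    // links maps each URL to the URLs found on that page (stands in for download + link extraction).
    // Returns the URLs in the order they would be "downloaded".
    static List<String> crawl(Map<String, List<String>> links, String start, int maxDepth) {
        List<String> order = new ArrayList<>();
        Set<String> seen = new HashSet<>();   // plays the role of a duplicate remover
        seen.add(start);
        List<String> frontier = List.of(start);

        // Process one depth level at a time, so the depth is simply the loop counter
        for (int depth = 0; depth <= maxDepth && !frontier.isEmpty(); depth++) {
            List<String> next = new ArrayList<>();
            for (String url : frontier) {
                order.add(url);                                   // "download" the page
                if (depth == maxDepth) {
                    continue;                                     // don't expand past the limit
                }
                for (String link : links.getOrDefault(url, List.of())) {
                    if (seen.add(link)) {                         // queue only unseen URLs
                        next.add(link);
                    }
                }
            }
            frontier = next;
        }
        return order;
    }
}
```

Processing the frontier level by level avoids storing a depth per URL. The crawlers in this post control how deep they go through which links they queue, i.e. through their URL checks.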

- Example: Biquge (笔趣阁)

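```java
import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.pipeline.ConsolePipeline;
import us.codecraft.webmagic.processor.PageProcessor;

import java.util.List;

public class BiQuGeReptile implements PageProcessor {

    // URL regexes for http://www.xbiquge.la/
    // Any page on the site, e.g. the /xiaoshuodaquan/ catalogue
    public static final String FIRST_URL = "http://www\\.xbiquge\\.la/\\w+";
    // Book index page: /<digits>/<digits>/
    public static final String HELP_URL = "/\\d+/\\d+/";
    // Chapter page: /<digits>/<digits>/<digits>.html (no trailing slash after .html)
    public static final String TARGET_URL = "/\\d+/\\d+/\\d+\\.html";

    private final Site site = Site.me().setRetryTimes(3).setSleepTime(1000);

    @Override
    public Site getSite() {
        return site;
    }

    @Override
    public void process(Page page) {
        // regex(...).match() finds the pattern anywhere in the URL, and a chapter URL also
        // contains a book-style /digits/digits/ segment, so test the most specific pattern first
        if (page.getUrl().regex(TARGET_URL).match()) {
            // Chapter page: chapter title and text
            page.putField("chapter", page.getHtml().xpath("//div[@class='bookname']/h1/text()").get());
            page.putField("content", page.getHtml().xpath("//div[@id='content']/text()").get());
        } else if (page.getUrl().regex(HELP_URL).match()) {
            // Book page: queue its chapters (the next depth)
            page.addTargetRequests(page.getHtml().links().regex(TARGET_URL).all());
            // Title; skip the page if there is none
            page.putField("title", page.getHtml().xpath("//div[@id='info']/h1/text()").get());
            if (page.getResultItems().get("title") == null) {
                page.setSkip(true);
            }
            // Author
            page.putField("author", page.getHtml().xpath("//div[@id='info']/p/text()").get());
            // Synopsis
            page.putField("info", page.getHtml().xpath("//div[@id='intro']/p[2]/text()").get());
            // Cover image URL
            page.putField("image", page.getHtml().xpath("//div[@id='fmimg']/img/@src").get());
        } else if (page.getUrl().regex(FIRST_URL).match()) {
            // Catalogue page: discover the book URLs and crawl them; nothing to output here
            List<String> urls = page.getHtml().links().regex(HELP_URL).all();
            page.addTargetRequests(urls);
            page.setSkip(true);
        }
    }

    public static void main(String[] args) {
        Spider.create(new BiQuGeReptile()).addUrl("http://www.xbiquge.la/xiaoshuodaquan/")
                // Print to the console
                .addPipeline(new ConsolePipeline())
                // Save to a database
                // .addPipeline(new MysqlPipelineBiQuGe())
                // Crawl with 5 threads
                .thread(5)
                // Start the spider
                .run();
    }
}
```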

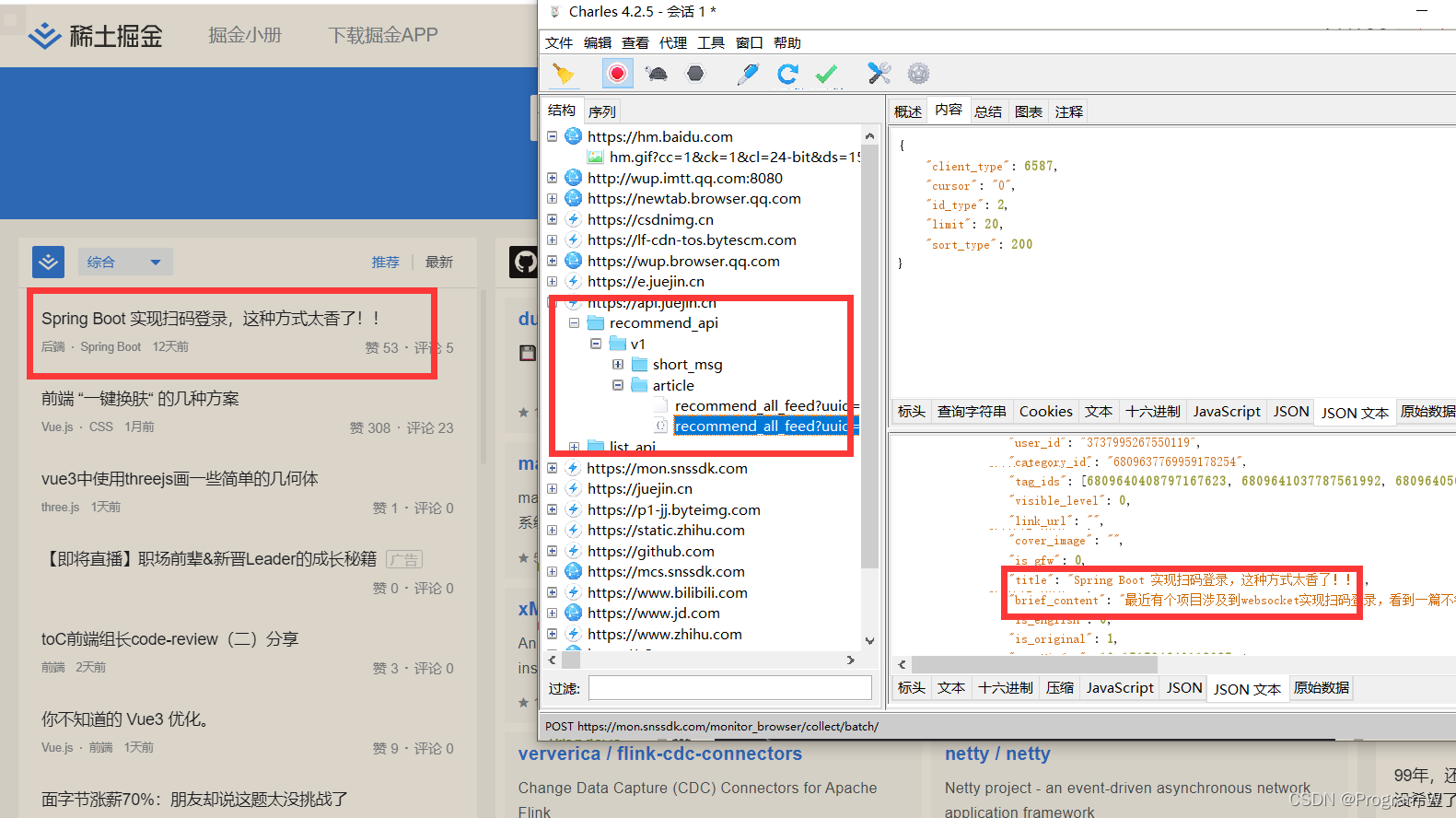
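The Biquge crawler decides what to do with a page from its URL alone. WebMagic's `regex(...).match()` looks for the pattern anywhere in the URL rather than requiring a full match, so the order of the checks matters: a chapter URL also contains a book-style `/digits/digits/` segment. A JDK-only sketch of that routing with `java.util.regex` and `find()`; `BiqugeUrls` is hypothetical, and it assumes chapter URLs have the shape `/<digits>/<digits>/<digits>.html` (no trailing slash after `.html`).

```java
import java.util.regex.Pattern;

// Hypothetical sketch of URL routing with find() semantics, most specific pattern first.
class BiqugeUrls {
    // Assumed chapter URL shape: /<digits>/<digits>/<digits>.html
    static final Pattern CHAPTER = Pattern.compile("/\\d+/\\d+/\\d+\\.html");
    // Book index page: /<digits>/<digits>/
    static final Pattern BOOK = Pattern.compile("/\\d+/\\d+/");

    static String classify(String url) {
        if (CHAPTER.matcher(url).find()) {
            return "chapter";   // must be checked before BOOK, which also matches chapter URLs
        }
        if (BOOK.matcher(url).find()) {
            return "book";
        }
        return "list";          // anything else, e.g. the /xiaoshuodaquan/ catalogue
    }
}
```

Swapping the two `if` blocks would classify every chapter URL as a book page.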

IV. HttpRequest

If you still can't get the content from the page itself, look at the API the page calls instead.

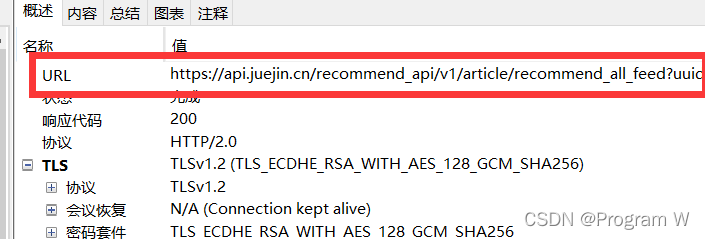

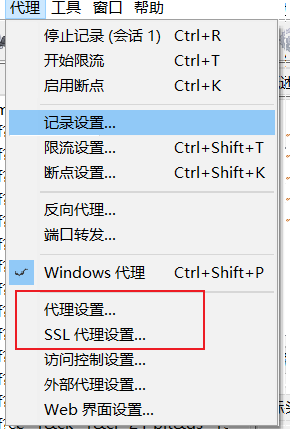

- Capture traffic with Charles

Find the request that returns the data we need.

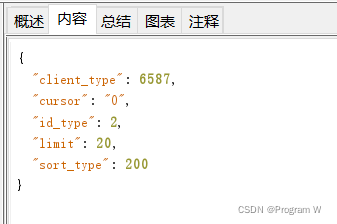

- Fetching part of the Juejin (掘金酱) article feed

```java
import cn.hutool.core.lang.Console;
import cn.hutool.http.Header;
import cn.hutool.http.HttpRequest;

public class JuejinDemo {

    public static void main(String[] args) {
        String url = "https://api.juejin.cn/recommend_api/v1/article/recommend_all_feed?uuid=6981379481190958630&aid=6587";

        // Build the request fluently
        String result = HttpRequest.post(url)
                // Header; for several headers, call header() once per header
                .header(Header.USER_AGENT, "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3775.400 QQBrowser/10.6.4208.400")
                // JSON body copied from the request captured in Charles
                .body("{\"client_type\":6587,\"cursor\":\"0\",\"id_type\":2,\"limit\":20,\"sort_type\":200}")
                // Timeout in milliseconds
                .timeout(20000)
                .execute()
                .body();

        Console.log(result);
    }
}
```

Because the Juejin feed changes over time, the data you get will not necessarily match what is shown here.

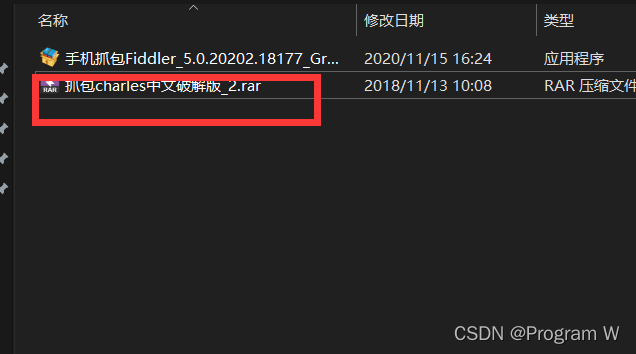

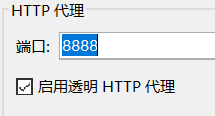

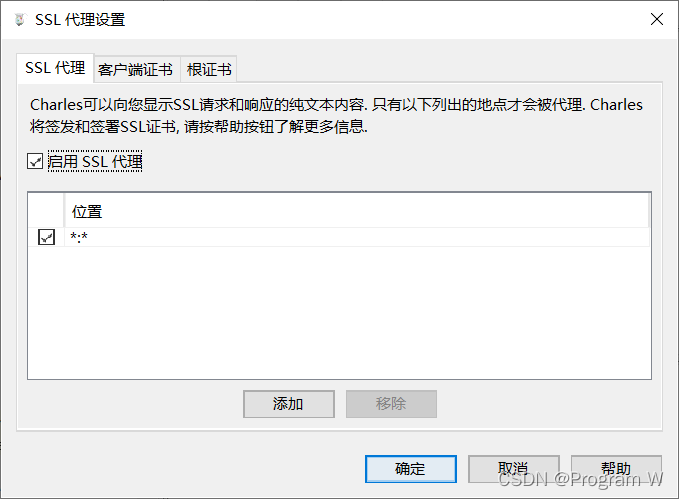
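If you would rather not depend on Hutool, the same POST can be built with the JDK's `java.net.http` client (Java 11+). This sketch only builds the request. The endpoint and JSON fields are copied from the Hutool example; the `JuejinFeedRequest` class, the shortened User-Agent, and the idea of paging by passing a different `cursor` value are assumptions, and the API may have changed since this was written.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

// Hypothetical JDK-only equivalent of the Hutool request (builds it, does not send it).
class JuejinFeedRequest {
    static final String FEED_URL =
            "https://api.juejin.cn/recommend_api/v1/article/recommend_all_feed?uuid=6981379481190958630&aid=6587";

    // Same JSON fields as the Hutool example, with cursor and limit as parameters
    static String feedBody(String cursor, int limit) {
        return "{\"client_type\":6587,\"cursor\":\"" + cursor + "\",\"id_type\":2,\"limit\":"
                + limit + ",\"sort_type\":200}";
    }

    static HttpRequest build(String cursor, int limit) {
        return HttpRequest.newBuilder(URI.create(FEED_URL))
                .header("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64)") // placeholder UA
                .header("Content-Type", "application/json")
                .timeout(Duration.ofSeconds(20))        // same 20 s as the Hutool timeout
                .POST(HttpRequest.BodyPublishers.ofString(feedBody(cursor, limit)))
                .build();
    }
}
```

To send it: `HttpClient.newHttpClient().send(JuejinFeedRequest.build("0", 20), HttpResponse.BodyHandlers.ofString()).body()`.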

V. Appendix: installing and configuring Charles

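After downloading Charles (don't launch it yet), apply the Chinese-language crack patch.

- Install the Charles certificate on the computer and on the phone (you can also save it and import it directly through the browser's certificate manager).
- Configure the proxy (the port is the one the phone's Wi-Fi proxy setting points to).
- Visit http://chls.pro/ssl to download the certificate for importing into a browser or a phone.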
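To capture a Java program's own requests in Charles (for example the crawlers above), point its HTTP client at the Charles proxy. A minimal JDK sketch, assuming Charles listens on its default port 8888 on the same machine (check Proxy > Proxy Settings). For HTTPS the JVM must also trust the Charles root certificate downloaded above, e.g. by importing it into the JVM truststore with `keytool`; this code does not do that.

```java
import java.net.InetSocketAddress;
import java.net.ProxySelector;
import java.net.http.HttpClient;
import java.time.Duration;

// Hypothetical sketch: route java.net.http traffic through a local Charles proxy.
class CharlesProxy {
    static final String HOST = "127.0.0.1";
    static final int PORT = 8888;   // assumed Charles default; change to match your settings

    // Sends every http/https URI to the given HTTP proxy
    static ProxySelector selector() {
        return ProxySelector.of(new InetSocketAddress(HOST, PORT));
    }

    static HttpClient client() {
        return HttpClient.newBuilder()
                .proxy(selector())
                .connectTimeout(Duration.ofSeconds(20))
                .build();
    }
}
```

Requests sent with `CharlesProxy.client()` then show up in the Charles session alongside the browser and phone traffic.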

After that you can capture the traffic. OK, that's all for this post.

\(^o^)/~