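I've recently been looking into implementing face liveness detection in Objective-C (OC). The iOS SDK already ships with classes that provide this functionality, so I investigated them and built an implementation.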

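During implementation I hit one particularly nasty pitfall. The GPUImage framework captures video in YUV format, and YUV frames do not work with the Objective-C system classes (Core Image's `CIDetector`) for real-time face detection.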

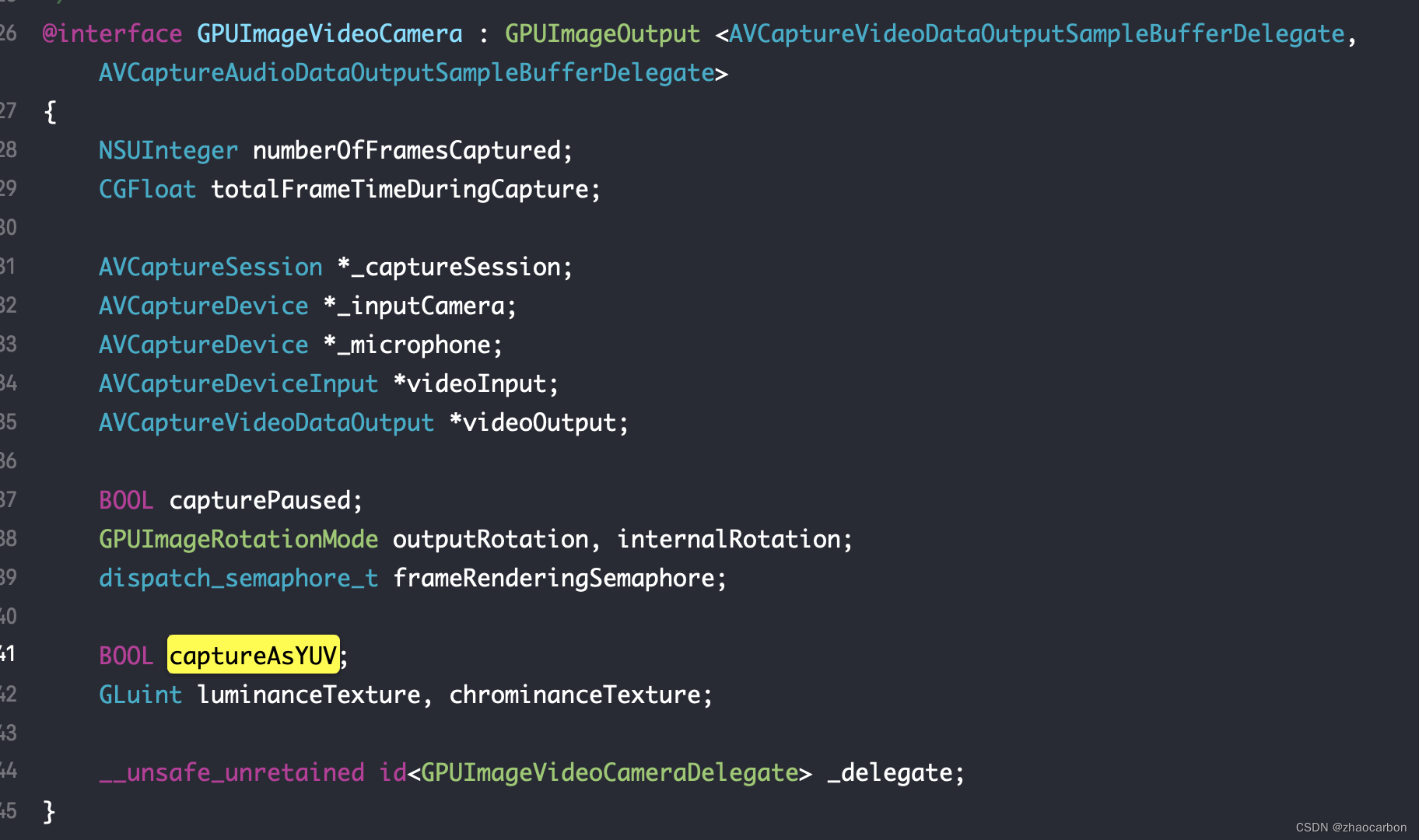

Let's take apart how `GPUImageVideoCamera` is implemented:

```objc
@interface GPUImageVideoCamera : GPUImageOutput <AVCaptureVideoDataOutputSampleBufferDelegate, AVCaptureAudioDataOutputSampleBufferDelegate>

- (id)initWithSessionPreset:(NSString *)sessionPreset cameraPosition:(AVCaptureDevicePosition)cameraPosition;
```

It provides this initializer, whose implementation is as follows:

```objc
- (id)initWithSessionPreset:(NSString *)sessionPreset cameraPosition:(AVCaptureDevicePosition)cameraPosition;
{
    if (!(self = [super init]))
    {
        return nil;
    }

    cameraProcessingQueue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_HIGH,0);
    audioProcessingQueue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_LOW,0);

    frameRenderingSemaphore = dispatch_semaphore_create(1);

    _frameRate = 0; // This will not set frame rate unless this value gets set to 1 or above
    _runBenchmark = NO;
    capturePaused = NO;
    outputRotation = kGPUImageNoRotation;
    internalRotation = kGPUImageNoRotation;
    captureAsYUV = YES;
    _preferredConversion = kColorConversion709;

    // Grab the back-facing or front-facing camera
    _inputCamera = nil;
    NSArray *devices = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];
    for (AVCaptureDevice *device in devices)
    {
        if ([device position] == cameraPosition)
        {
            _inputCamera = device;
        }
    }

    if (!_inputCamera) {
        return nil;
    }

    // Create the capture session
    _captureSession = [[AVCaptureSession alloc] init];

    [_captureSession beginConfiguration];

    // Add the video input
    NSError *error = nil;
    videoInput = [[AVCaptureDeviceInput alloc] initWithDevice:_inputCamera error:&error];
    if ([_captureSession canAddInput:videoInput])
    {
        [_captureSession addInput:videoInput];
    }

    // Add the video frame output
    videoOutput = [[AVCaptureVideoDataOutput alloc] init];
    [videoOutput setAlwaysDiscardsLateVideoFrames:NO];

//    if (captureAsYUV && [GPUImageContext deviceSupportsRedTextures])
    if (captureAsYUV && [GPUImageContext supportsFastTextureUpload])
    {
        BOOL supportsFullYUVRange = NO;
        NSArray *supportedPixelFormats = videoOutput.availableVideoCVPixelFormatTypes;
        for (NSNumber *currentPixelFormat in supportedPixelFormats)
        {
            if ([currentPixelFormat intValue] == kCVPixelFormatType_420YpCbCr8BiPlanarFullRange)
            {
                supportsFullYUVRange = YES;
            }
        }

        if (supportsFullYUVRange)
        {
            [videoOutput setVideoSettings:[NSDictionary dictionaryWithObject:[NSNumber numberWithInt:kCVPixelFormatType_420YpCbCr8BiPlanarFullRange] forKey:(id)kCVPixelBufferPixelFormatTypeKey]];
            isFullYUVRange = YES;
        }
        else
        {
            [videoOutput setVideoSettings:[NSDictionary dictionaryWithObject:[NSNumber numberWithInt:kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange] forKey:(id)kCVPixelBufferPixelFormatTypeKey]];
            isFullYUVRange = NO;
        }
    }
    else
    {
        [videoOutput setVideoSettings:[NSDictionary dictionaryWithObject:[NSNumber numberWithInt:kCVPixelFormatType_32BGRA] forKey:(id)kCVPixelBufferPixelFormatTypeKey]];
    }

    runSynchronouslyOnVideoProcessingQueue(^{

        if (captureAsYUV)
        {
            [GPUImageContext useImageProcessingContext];
            //            if ([GPUImageContext deviceSupportsRedTextures])
            //            {
            //                yuvConversionProgram = [[GPUImageContext sharedImageProcessingContext] programForVertexShaderString:kGPUImageVertexShaderString fragmentShaderString:kGPUImageYUVVideoRangeConversionForRGFragmentShaderString];
            //            }
            //            else
            //            {
            if (isFullYUVRange)
            {
                yuvConversionProgram = [[GPUImageContext sharedImageProcessingContext] programForVertexShaderString:kGPUImageVertexShaderString fragmentShaderString:kGPUImageYUVFullRangeConversionForLAFragmentShaderString];
            }
            else
            {
                yuvConversionProgram = [[GPUImageContext sharedImageProcessingContext] programForVertexShaderString:kGPUImageVertexShaderString fragmentShaderString:kGPUImageYUVVideoRangeConversionForLAFragmentShaderString];
            }

            //            }

            if (!yuvConversionProgram.initialized)
            {
                [yuvConversionProgram addAttribute:@"position"];
                [yuvConversionProgram addAttribute:@"inputTextureCoordinate"];

                if (![yuvConversionProgram link])
                {
                    NSString *progLog = [yuvConversionProgram programLog];
                    NSLog(@"Program link log: %@", progLog);
                    NSString *fragLog = [yuvConversionProgram fragmentShaderLog];
                    NSLog(@"Fragment shader compile log: %@", fragLog);
                    NSString *vertLog = [yuvConversionProgram vertexShaderLog];
                    NSLog(@"Vertex shader compile log: %@", vertLog);
                    yuvConversionProgram = nil;
                    NSAssert(NO, @"Filter shader link failed");
                }
            }

            yuvConversionPositionAttribute = [yuvConversionProgram attributeIndex:@"position"];
            yuvConversionTextureCoordinateAttribute = [yuvConversionProgram attributeIndex:@"inputTextureCoordinate"];
            yuvConversionLuminanceTextureUniform = [yuvConversionProgram uniformIndex:@"luminanceTexture"];
            yuvConversionChrominanceTextureUniform = [yuvConversionProgram uniformIndex:@"chrominanceTexture"];
            yuvConversionMatrixUniform = [yuvConversionProgram uniformIndex:@"colorConversionMatrix"];

            [GPUImageContext setActiveShaderProgram:yuvConversionProgram];

            glEnableVertexAttribArray(yuvConversionPositionAttribute);
            glEnableVertexAttribArray(yuvConversionTextureCoordinateAttribute);
        }
    });

    [videoOutput setSampleBufferDelegate:self queue:cameraProcessingQueue];
    if ([_captureSession canAddOutput:videoOutput])
    {
        [_captureSession addOutput:videoOutput];
    }
    else
    {
        NSLog(@"Couldn't add video output");
        return nil;
    }

    _captureSessionPreset = sessionPreset;
    [_captureSession setSessionPreset:_captureSessionPreset];

// This will let you get 60 FPS video from the 720p preset on an iPhone 4S, but only that device and that preset
//    AVCaptureConnection *conn = [videoOutput connectionWithMediaType:AVMediaTypeVideo];
//
//    if (conn.supportsVideoMinFrameDuration)
//        conn.videoMinFrameDuration = CMTimeMake(1,60);
//    if (conn.supportsVideoMaxFrameDuration)
//        conn.videoMaxFrameDuration = CMTimeMake(1,60);

    [_captureSession commitConfiguration];

    return self;
}
```

Look closely at this initializer, because this is where it gets nasty. It unconditionally sets this instance variable to `YES`, so everything downstream captures video as YUV:

```objc
captureAsYUV = YES;
```
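The format decision made in the initializer above can be condensed into a small pure function. Seen this way, BGRA is only requested when `captureAsYUV` is `NO`, or when fast texture upload is unavailable.

This is a plain C sketch that mirrors the branch that sets `kCVPixelBufferPixelFormatTypeKey`. The function and macro names are made up for illustration. The numeric values are the FourCC codes behind the `kCVPixelFormatType_*` constants.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* FourCC values of the CoreVideo formats involved (same numbers as the
 * kCVPixelFormatType_* constants used in GPUImageVideoCamera). */
#define FMT_32BGRA           0x42475241u /* 'BGRA' */
#define FMT_420_FULL_RANGE   0x34323066u /* '420f' */
#define FMT_420_VIDEO_RANGE  0x34323076u /* '420v' */

/* Condensed version of the pixel-format branch in
 * -initWithSessionPreset:cameraPosition:. YUV is chosen only when the caller
 * wants YUV AND fast texture upload is supported; everything else gets BGRA. */
static uint32_t camera_output_format(bool captureAsYUV,
                                     bool supportsFastTextureUpload,
                                     bool supportsFullYUVRange)
{
    if (captureAsYUV && supportsFastTextureUpload) {
        return supportsFullYUVRange ? FMT_420_FULL_RANGE : FMT_420_VIDEO_RANGE;
    }
    return FMT_32BGRA;
}
```

The original initializer always passes `captureAsYUV = YES`. So on a device where fast texture upload is available, the BGRA branch can never be reached without changing that line.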

With no other option, I modified the class and added a new initializer alongside the original:

```objc
// Original initializer
- (id)initWithSessionPreset:(NSString *)sessionPreset cameraPosition:(AVCaptureDevicePosition)cameraPosition;

// New initializer
- (id)initWithSessionPreset:(NSString *)sessionPreset cameraPosition:(AVCaptureDevicePosition)cameraPosition yuvColorSpace:(BOOL)yuvColorSpace;
```

```objc
- (id)initWithSessionPreset:(NSString *)sessionPreset cameraPosition:(AVCaptureDevicePosition)cameraPosition yuvColorSpace:(BOOL)yuvColorSpace
{
    if (!(self = [super init]))
    {
        return nil;
    }

    cameraProcessingQueue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_HIGH,0);
    audioProcessingQueue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_LOW,0);

    frameRenderingSemaphore = dispatch_semaphore_create(1);

    _frameRate = 0; // This will not set frame rate unless this value gets set to 1 or above
    _runBenchmark = NO;
    capturePaused = NO;
    outputRotation = kGPUImageNoRotation;
    internalRotation = kGPUImageNoRotation;
    captureAsYUV = yuvColorSpace; // changed: the original hard-codes YES here
    _preferredConversion = kColorConversion709;

    // Grab the back-facing or front-facing camera
    _inputCamera = nil;
    NSArray *devices = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];
    for (AVCaptureDevice *device in devices)
    {
        if ([device position] == cameraPosition)
        {
            _inputCamera = device;
        }
    }

    if (!_inputCamera) {
        return nil;
    }

    // Create the capture session
    _captureSession = [[AVCaptureSession alloc] init];

    [_captureSession beginConfiguration];

    // Add the video input
    NSError *error = nil;
    videoInput = [[AVCaptureDeviceInput alloc] initWithDevice:_inputCamera error:&error];
    if ([_captureSession canAddInput:videoInput])
    {
        [_captureSession addInput:videoInput];
    }

    // Add the video frame output
    videoOutput = [[AVCaptureVideoDataOutput alloc] init];
    [videoOutput setAlwaysDiscardsLateVideoFrames:NO];

//    if (captureAsYUV && [GPUImageContext deviceSupportsRedTextures])
    if (captureAsYUV && [GPUImageContext supportsFastTextureUpload])
    {
        BOOL supportsFullYUVRange = NO;
        NSArray *supportedPixelFormats = videoOutput.availableVideoCVPixelFormatTypes;
        for (NSNumber *currentPixelFormat in supportedPixelFormats)
        {
            if ([currentPixelFormat intValue] == kCVPixelFormatType_420YpCbCr8BiPlanarFullRange)
            {
                supportsFullYUVRange = YES;
            }
        }

        if (supportsFullYUVRange)
        {
            [videoOutput setVideoSettings:[NSDictionary dictionaryWithObject:[NSNumber numberWithInt:kCVPixelFormatType_420YpCbCr8BiPlanarFullRange] forKey:(id)kCVPixelBufferPixelFormatTypeKey]];
            isFullYUVRange = YES;
        }
        else
        {
            [videoOutput setVideoSettings:[NSDictionary dictionaryWithObject:[NSNumber numberWithInt:kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange] forKey:(id)kCVPixelBufferPixelFormatTypeKey]];
            isFullYUVRange = NO;
        }
    }
    else
    {
        [videoOutput setVideoSettings:[NSDictionary dictionaryWithObject:[NSNumber numberWithInt:kCVPixelFormatType_32BGRA] forKey:(id)kCVPixelBufferPixelFormatTypeKey]];
    }

    runSynchronouslyOnVideoProcessingQueue(^{

        if (captureAsYUV)
        {
            [GPUImageContext useImageProcessingContext];
            //            if ([GPUImageContext deviceSupportsRedTextures])
            //            {
            //                yuvConversionProgram = [[GPUImageContext sharedImageProcessingContext] programForVertexShaderString:kGPUImageVertexShaderString fragmentShaderString:kGPUImageYUVVideoRangeConversionForRGFragmentShaderString];
            //            }
            //            else
            //            {
            if (isFullYUVRange)
            {
                yuvConversionProgram = [[GPUImageContext sharedImageProcessingContext] programForVertexShaderString:kGPUImageVertexShaderString fragmentShaderString:kGPUImageYUVFullRangeConversionForLAFragmentShaderString];
            }
            else
            {
                yuvConversionProgram = [[GPUImageContext sharedImageProcessingContext] programForVertexShaderString:kGPUImageVertexShaderString fragmentShaderString:kGPUImageYUVVideoRangeConversionForLAFragmentShaderString];
            }

            //            }

            if (!yuvConversionProgram.initialized)
            {
                [yuvConversionProgram addAttribute:@"position"];
                [yuvConversionProgram addAttribute:@"inputTextureCoordinate"];

                if (![yuvConversionProgram link])
                {
                    NSString *progLog = [yuvConversionProgram programLog];
                    NSLog(@"Program link log: %@", progLog);
                    NSString *fragLog = [yuvConversionProgram fragmentShaderLog];
                    NSLog(@"Fragment shader compile log: %@", fragLog);
                    NSString *vertLog = [yuvConversionProgram vertexShaderLog];
                    NSLog(@"Vertex shader compile log: %@", vertLog);
                    yuvConversionProgram = nil;
                    NSAssert(NO, @"Filter shader link failed");
                }
            }

            yuvConversionPositionAttribute = [yuvConversionProgram attributeIndex:@"position"];
            yuvConversionTextureCoordinateAttribute = [yuvConversionProgram attributeIndex:@"inputTextureCoordinate"];
            yuvConversionLuminanceTextureUniform = [yuvConversionProgram uniformIndex:@"luminanceTexture"];
            yuvConversionChrominanceTextureUniform = [yuvConversionProgram uniformIndex:@"chrominanceTexture"];
            yuvConversionMatrixUniform = [yuvConversionProgram uniformIndex:@"colorConversionMatrix"];

            [GPUImageContext setActiveShaderProgram:yuvConversionProgram];

            glEnableVertexAttribArray(yuvConversionPositionAttribute);
            glEnableVertexAttribArray(yuvConversionTextureCoordinateAttribute);
        }
    });

    [videoOutput setSampleBufferDelegate:self queue:cameraProcessingQueue];
    if ([_captureSession canAddOutput:videoOutput])
    {
        [_captureSession addOutput:videoOutput];
    }
    else
    {
        NSLog(@"Couldn't add video output");
        return nil;
    }

    _captureSessionPreset = sessionPreset;
    [_captureSession setSessionPreset:_captureSessionPreset];

// This will let you get 60 FPS video from the 720p preset on an iPhone 4S, but only that device and that preset
//    AVCaptureConnection *conn = [videoOutput connectionWithMediaType:AVMediaTypeVideo];
//
//    if (conn.supportsVideoMinFrameDuration)
//        conn.videoMinFrameDuration = CMTimeMake(1,60);
//    if (conn.supportsVideoMaxFrameDuration)
//        conn.videoMaxFrameDuration = CMTimeMake(1,60);

    [_captureSession commitConfiguration];

    return self;
}
```

The point of the code above is to let the caller choose, at initialization time, whether frames are sampled as RGB or YUV. The only functional change from the original initializer is this line:

```objc
captureAsYUV = yuvColorSpace;
```
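Here is what the YUV path involves. When `captureAsYUV` is `YES`, GPUImage receives bi-planar luma/chroma buffers. It converts them to RGB in the `yuvConversionProgram` shader set up above, using a colour matrix (`_preferredConversion`, `kColorConversion709` by default). With `NO`, AVFoundation delivers `kCVPixelFormatType_32BGRA` directly.

Per pixel, a BT.709 video-range conversion looks roughly like the plain C sketch below. The coefficients are the standard BT.709 video-range values. I believe GPUImage's `kColorConversion709` uses the same numbers, but treat this as an illustration, not a copy of its shader. The names `RGB8` and `yuv709_video_range_to_rgb` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

typedef struct { uint8_t r, g, b; } RGB8;

/* Clamp to [0, 255] and round to the nearest integer. */
static uint8_t clamp_round(double v)
{
    if (v <= 0.0) return 0;
    if (v >= 255.0) return 255;
    return (uint8_t)(v + 0.5);
}

/* BT.709, video ("limited") range: Y spans 16..235, Cb/Cr are centred on 128.
 * Full-range input would skip the 16 offset and the 1.164 scale on Y. */
static RGB8 yuv709_video_range_to_rgb(uint8_t y, uint8_t cb, uint8_t cr)
{
    double yy = 1.164 * ((double)y - 16.0);
    double u  = (double)cb - 128.0;
    double v  = (double)cr - 128.0;
    RGB8 out;
    out.r = clamp_round(yy + 1.793 * v);
    out.g = clamp_round(yy - 0.213 * u - 0.533 * v);
    out.b = clamp_round(yy + 2.112 * u);
    return out;
}
```

With neutral chroma (Cb = Cr = 128), Y = 16 maps to black and Y = 235 maps to white. This is the per-frame work that the BGRA path hands off to AVFoundation instead.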

The helper class used in the project, `NSFaceFeature.h`:

```objc
#import <Foundation/Foundation.h>
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>
#import <CoreImage/CoreImage.h>
#import <GPUImage.h>

@interface CIFaceFeatureMeta : NSObject
@property (nonatomic,strong) CIFeature *features;
@property (nonatomic,strong) UIImage *featureImage;
@end

@interface NSFaceFeature : NSObject
+ (CGRect)faceRect:(CIFeature*)feature;
- (NSArray<CIFaceFeatureMeta *> *)processFaceFeaturesWithPicBuffer:(CMSampleBufferRef)sampleBuffer cameraPosition:(AVCaptureDevicePosition)currentCameraPosition;
@end
```

`NSFaceFeature.m`:

```objc
//
//  NSFaceFeature.m
//  VJFaceDetection
//
//  Created by Vincent·Ge on 2018/6/19.
//  Copyright © 2018 Filelife. All rights reserved.
//

#import "NSFaceFeature.h"
#import "GPUImageBeautifyFilter.h"

// Options that can be used with -[CIDetector featuresInImage:options:]
/* The value for this key is an integer NSNumber from 1..8 such as that
   found in kCGImagePropertyOrientation. If present, the detection will be done
   based on that orientation but the coordinates in the returned features will
   still be based on those of the image. */
typedef NS_ENUM(NSInteger, PHOTOS_EXIF_ENUM) {
    PHOTOS_EXIF_0ROW_TOP_0COL_LEFT     = 1, // 1 = 0th row is at the top, and 0th column is on the left (THE DEFAULT).
    PHOTOS_EXIF_0ROW_TOP_0COL_RIGHT    = 2, // 2 = 0th row is at the top, and 0th column is on the right.
    PHOTOS_EXIF_0ROW_BOTTOM_0COL_RIGHT = 3, // 3 = 0th row is at the bottom, and 0th column is on the right.
    PHOTOS_EXIF_0ROW_BOTTOM_0COL_LEFT  = 4, // 4 = 0th row is at the bottom, and 0th column is on the left.
    PHOTOS_EXIF_0ROW_LEFT_0COL_TOP     = 5, // 5 = 0th row is on the left, and 0th column is the top.
    PHOTOS_EXIF_0ROW_RIGHT_0COL_TOP    = 6, // 6 = 0th row is on the right, and 0th column is the top.
    PHOTOS_EXIF_0ROW_RIGHT_0COL_BOTTOM = 7, // 7 = 0th row is on the right, and 0th column is the bottom.
    PHOTOS_EXIF_0ROW_LEFT_0COL_BOTTOM  = 8  // 8 = 0th row is on the left, and 0th column is the bottom.
};

@implementation CIFaceFeatureMeta
@end

@interface NSFaceFeature ()
@property (nonatomic, strong) CIDetector *faceDetector;
@end

@implementation NSFaceFeature

- (instancetype)init
{
    self = [super init];
    if (self) {
        [self loadFaceDetector];
    }
    return self;
}

- (void)loadFaceDetector
{
    NSDictionary *detectorOptions = @{CIDetectorAccuracy: CIDetectorAccuracyLow,
                                      CIDetectorTracking: @(YES)};
    self.faceDetector = [CIDetector detectorOfType:CIDetectorTypeFace context:nil options:detectorOptions];
}

- (NSArray<CIFaceFeatureMeta *> *)processFaceFeaturesWithPicBuffer:(CMSampleBufferRef)sampleBuffer cameraPosition:(AVCaptureDevicePosition)currentCameraPosition
{
    return [NSFaceFeature processFaceFeaturesWithPicBuffer:sampleBuffer faceDetector:self.faceDetector cameraPosition:currentCameraPosition];
}

#pragma mark - Category Function

// Swap origin.x and origin.y of the detected bounds, because the frame is
// rotated 90° relative to the preview. Width and height are left unchanged.
+ (CGRect)faceRect:(CIFeature*)feature
{
    CGRect faceRect = feature.bounds;
    CGFloat temp = faceRect.origin.x;
    faceRect.origin.x = faceRect.origin.y;
    faceRect.origin.y = temp;
    return faceRect;
}

+ (NSArray<CIFaceFeatureMeta *> *)processFaceFeaturesWithPicBuffer:(CMSampleBufferRef)sampleBuffer faceDetector:(CIDetector *)faceDetector cameraPosition:(AVCaptureDevicePosition)currentCameraPosition
{
    CVPixelBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    CFDictionaryRef attachments = CMCopyDictionaryOfAttachments(kCFAllocatorDefault, sampleBuffer, kCMAttachmentMode_ShouldPropagate);
    // The image taken from the frame is rotated 90° to the left relative to what
    // the camera sees, so keep that in mind when converting coordinates later.
    CIImage *convertedImage = [[CIImage alloc] initWithCVPixelBuffer:pixelBuffer options:(__bridge NSDictionary *)attachments];
    if (attachments) {
        CFRelease(attachments);
    }

    NSDictionary *imageOptions = nil;
    UIDeviceOrientation curDeviceOrientation = [[UIDevice currentDevice] orientation];
    int exifOrientation;
    BOOL isUsingFrontFacingCamera = currentCameraPosition != AVCaptureDevicePositionBack;
    switch (curDeviceOrientation) {
        case UIDeviceOrientationPortraitUpsideDown:
            exifOrientation = PHOTOS_EXIF_0ROW_LEFT_0COL_BOTTOM;
            break;
        case UIDeviceOrientationLandscapeLeft:
            if (isUsingFrontFacingCamera) {
                exifOrientation = PHOTOS_EXIF_0ROW_BOTTOM_0COL_RIGHT;
            } else {
                exifOrientation = PHOTOS_EXIF_0ROW_TOP_0COL_LEFT;
            }
            break;
        case UIDeviceOrientationLandscapeRight:
            if (isUsingFrontFacingCamera) {
                exifOrientation = PHOTOS_EXIF_0ROW_TOP_0COL_LEFT;
            } else {
                exifOrientation = PHOTOS_EXIF_0ROW_BOTTOM_0COL_RIGHT;
            }
            break;
        default:
            // Value 6: the origin is at the top right, with y running horizontally and
            // x vertically, i.e. the image must be rotated 90° clockwise to display upright.
            exifOrientation = PHOTOS_EXIF_0ROW_RIGHT_0COL_TOP;
            break;
    }

    // exifOrientation tells the detector how the image is oriented
    imageOptions = [NSDictionary dictionaryWithObject:[NSNumber numberWithInt:exifOrientation] forKey:CIDetectorImageOrientation];
    NSArray<CIFeature *> *lt = [faceDetector featuresInImage:convertedImage options:imageOptions];
    NSMutableArray *at = [NSMutableArray arrayWithCapacity:lt.count];
    // Every face shares the same portrait image, so build it once outside the loop
    UIImage *portraitImage = [[UIImage alloc] initWithCIImage:convertedImage scale:1.0 orientation:UIImageOrientationRight];
    [lt enumerateObjectsUsingBlock:^(CIFeature * _Nonnull obj, NSUInteger idx, BOOL * _Nonnull stop) {
        CIFaceFeatureMeta *b = [[CIFaceFeatureMeta alloc] init];
        [b setFeatures:obj];
        [b setFeatureImage:portraitImage];
        [at addObject:b];
    }];
    return at;
}

@end
```
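Two parts of `NSFaceFeature` are pure functions of their inputs and can be unit-tested without a camera:

- the orientation `switch` in `+processFaceFeaturesWithPicBuffer:faceDetector:cameraPosition:`;
- the coordinate swap in `+faceRect:`.

Below is a plain C sketch of both. `DeviceOrientation` is a local stand-in enum, not UIKit's `UIDeviceOrientation`. `Rect` stands in for `CGRect`. The returned numbers are the `PHOTOS_EXIF_ENUM` values.

```c
#include <assert.h>
#include <stdbool.h>

/* Local stand-in for the UIDeviceOrientation cases the switch distinguishes.
 * These are NOT UIKit's constants. */
typedef enum {
    DEVICE_PORTRAIT,
    DEVICE_PORTRAIT_UPSIDE_DOWN,
    DEVICE_LANDSCAPE_LEFT,
    DEVICE_LANDSCAPE_RIGHT,
    DEVICE_OTHER /* unknown, face up, face down */
} DeviceOrientation;

typedef struct { double x, y, width, height; } Rect;

/* Same mapping as the switch in NSFaceFeature.m: returns the EXIF orientation
 * (1..8, see PHOTOS_EXIF_ENUM) passed as CIDetectorImageOrientation. */
static int exif_orientation_for(DeviceOrientation o, bool frontCamera)
{
    switch (o) {
    case DEVICE_PORTRAIT_UPSIDE_DOWN:
        return 8;                   /* PHOTOS_EXIF_0ROW_LEFT_0COL_BOTTOM */
    case DEVICE_LANDSCAPE_LEFT:
        return frontCamera ? 3 : 1; /* BOTTOM_0COL_RIGHT : TOP_0COL_LEFT */
    case DEVICE_LANDSCAPE_RIGHT:
        return frontCamera ? 1 : 3; /* TOP_0COL_LEFT : BOTTOM_0COL_RIGHT */
    default:
        return 6;                   /* PHOTOS_EXIF_0ROW_RIGHT_0COL_TOP (portrait etc.) */
    }
}

/* Same as +faceRect:: swap origin.x and origin.y, keep width and height. */
static Rect swap_origin(Rect r)
{
    double tmp = r.x;
    r.x = r.y;
    r.y = tmp;
    return r;
}
```

Portrait, the case a check-in screen normally runs in, falls through to `default` and yields 6.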

The view controller, `AttendanceBioViewController.h`:

```objc
#import <UIKit/UIKit.h>

@interface AttendanceBioViewController : UIViewController

- (void)showWithResultsDelegateBlock:(void (^)(NSMutableDictionary *ej))delegateBlock useOtherBlock:(void (^)(void))useOtherBlock;

@end
```

```objc
//
//  AttendanceBioViewController.m
//  SGBProject
//
//  Created by carbonzhao on 2022/4/14.
//  Copyright © 2022 All rights reserved.
//

#import "AttendanceBioViewController.h"
#import "GPUImageBeautifyFilter.h"
#import "NSFaceFeature.h"
// WeakSelf, NSAutoTimer, UISpinnerAnimationView, RGBA, CG_NAV_BAR_HEIGHT, the
// view.size / view.bottom accessors and -addTargetActionBlock: are helpers from
// the author's own project; they are not shown in this post.

@interface AttendanceBioViewController () <GPUImageVideoCameraDelegate>

@property (nonatomic, strong) GPUImageStillCamera *videoCamera;
@property (nonatomic, strong) GPUImageView *filterView;
@property (nonatomic, strong) GPUImageBeautifyFilter *beautifyFilter;
@property (strong, nonatomic) NSFaceFeature *faceFeature;
@property (nonatomic, strong) UIImageView *maskView;
@property (strong, nonatomic) UIImage *capturedImage;
@property (strong, nonatomic) UISpinnerAnimationView *animationView;
@property (nonatomic, strong) UILabel *tipsLabel;
@property (nonatomic, strong) UILabel *resultLabel;
@property (nonatomic, assign) BOOL hasSmile;
@property (nonatomic, assign) BOOL leftEyeClosed;
@property (nonatomic, assign) BOOL rightEyeClosed;
// Used below but missing from the original listing (timer type assumed from the call site)
@property (nonatomic, strong) NSAutoTimer *atimer;
@property (nonatomic, copy) void (^delegateBlock)(NSMutableDictionary *ej);
@property (nonatomic, copy) void (^useOtherBlock)(void);

@end

@implementation AttendanceBioViewController

- (instancetype)init
{
    if (self = [super init])
    {
    }
    return self;
}

- (void)viewDidLoad {
    [super viewDidLoad];
    // Do any additional setup after loading the view.
    // Relies on the change to GPUImageVideoCamera.m: real-time face detection does
    // not work with YUV capture, so the camera is created with captureAsYUV = NO
    // (yuvColorSpace:NO).
    [self setupCameraUI];
}
```

```objc
#pragma mark - otherMethod

- (void)setupCameraUI
{
    self.faceFeature = [NSFaceFeature new];

    // Real-time face detection does not work with YUV frames, so pass NO here.
    // As far as I can tell from GPUImage's source, GPUImageStillCamera sets up its
    // still-photo output in its own -initWithSessionPreset:cameraPosition:, which
    // this new initializer bypasses. That is harmless here because no still photo
    // is taken through the camera object.
    self.videoCamera = [[GPUImageStillCamera alloc] initWithSessionPreset:AVCaptureSessionPresetMedium cameraPosition:AVCaptureDevicePositionFront yuvColorSpace:NO];
    self.videoCamera.outputImageOrientation = UIInterfaceOrientationPortrait;
    self.videoCamera.horizontallyMirrorFrontFacingCamera = YES;

    WeakSelf(self);
    // Start receiving frames (-willOutputSampleBuffer:) only after 2 s.
    // Assign weakSelf rather than self so the block does not retain the controller.
    [NSAutoTimer scheduledTimerWithTimeInterval:2 target:self scheduledBlock:^(NSInteger timerIndex) {
        weakSelf.videoCamera.delegate = weakSelf;
    }];

    UILabel *lb = [[UILabel alloc] initWithFrame:CGRectMake(0, CG_NAV_BAR_HEIGHT, self.view.size.width, CG_NAV_BAR_HEIGHT)];
    [lb setBackgroundColor:[UIColor clearColor]];
    [lb setText:@"Hold up your phone and blink"];
    [lb setFont:[UIFont boldSystemFontOfSize:20]];
    [lb setTextAlignment:NSTextAlignmentCenter];
    [lb setTextColor:[UIColor blackColor]];
    [self setTipsLabel:lb];
    [self.view addSubview:lb];

    CGFloat sz = MIN(self.view.bounds.size.width, self.view.bounds.size.height)/2;
    CGRect ft = CGRectMake((self.view.bounds.size.width-sz)/2, lb.bottom+CG_NAV_BAR_HEIGHT, sz, sz);

    // Circular container with a grey ring around the camera preview
    UISpinnerAnimationView *c1View = [[UISpinnerAnimationView alloc] initWithFrame:ft];
    [c1View.layer setMasksToBounds:YES];
    [c1View.layer setCornerRadius:sz/2];
    [c1View setAnimationType:UISpinnerAnimationViewAnimationSpinner];

    CGPoint arcCenter = CGPointMake(c1View.frame.size.width / 2, c1View.frame.size.height / 2);
    UIBezierPath *path = [UIBezierPath bezierPath];
    [path addArcWithCenter:arcCenter radius:sz/2 startAngle:0.55 * M_PI endAngle:0.45 * M_PI clockwise:YES];
    CAShapeLayer *shapeLayer = [CAShapeLayer layer];
    shapeLayer.path = path.CGPath;
    shapeLayer.fillColor = [UIColor clearColor].CGColor;       // fill colour
    shapeLayer.strokeColor = [UIColor lightGrayColor].CGColor; // stroke colour
    shapeLayer.lineCap = kCALineCapRound;
    shapeLayer.lineWidth = 4;
    [c1View.layer addSublayer:shapeLayer];
    [self setAnimationView:c1View];
    [self.view addSubview:c1View];

    // Round camera preview inside the ring
    CGRect fm = CGRectMake(4, 4, c1View.bounds.size.width-8, c1View.bounds.size.height-8);
    self.filterView = [[GPUImageView alloc] initWithFrame:fm];
    self.filterView.fillMode = kGPUImageFillModePreserveAspectRatioAndFill;
//    self.filterView.center = c1View.center;
    [self.filterView.layer setCornerRadius:fm.size.height/2];
    [self.filterView setClipsToBounds:YES]; // also sets layer.masksToBounds = YES
    self.filterView.layer.shouldRasterize = YES;
    self.filterView.layer.rasterizationScale = [UIScreen mainScreen].scale;
    [c1View addSubview:self.filterView];

    // Pipeline: camera -> beautify filter -> preview view
    self.beautifyFilter = [[GPUImageBeautifyFilter alloc] init];
    [self.videoCamera addTarget:self.beautifyFilter];
    [self.beautifyFilter addTarget:self.filterView];

    // Round overlay holding the scan-line image and the result label
    UIView *mview = [[UIView alloc] initWithFrame:ft];
    [mview.layer setMasksToBounds:YES];
    [mview.layer setCornerRadius:ft.size.height/2];
    [mview setClipsToBounds:YES];
    mview.layer.shouldRasterize = YES;
    mview.layer.rasterizationScale = [UIScreen mainScreen].scale;
    [self.view addSubview:mview];

    CGRect myRect = CGRectMake(0,0,ft.size.width, ft.size.height);
    // Background
    path = [UIBezierPath bezierPathWithRoundedRect:mview.bounds cornerRadius:0];
    // Circular cut-out
    UIBezierPath *circlePath = [UIBezierPath bezierPathWithOvalInRect:myRect];
    [path appendPath:circlePath];
    [path setUsesEvenOddFillRule:YES];
    CAShapeLayer *fillLayer = [CAShapeLayer layer];
    fillLayer.path = path.CGPath;
    fillLayer.fillRule = kCAFillRuleEvenOdd;
    fillLayer.fillColor = [UIColor clearColor].CGColor;
    fillLayer.opacity = 0;
    [mview.layer addSublayer:fillLayer];

    UIImageView *icView = [[UIImageView alloc] initWithFrame:CGRectMake(0, -mview.bounds.size.height, mview.bounds.size.width, mview.bounds.size.height)];
    [icView setImage:[UIImage imageNamed:@"scannet"]];
    [icView setAlpha:0.7];
    [mview addSubview:icView];
    [self setMaskView:icView];

    lb = [[UILabel alloc] initWithFrame:CGRectMake(0, 0, ft.size.width, 40)];
    [lb setBackgroundColor:RGBA(40, 40, 40,0.7)];
    [lb setText:@"No face detected"];
    [lb setFont:[UIFont systemFontOfSize:12]];
    [lb setTextAlignment:NSTextAlignmentCenter];
    [lb setTextColor:[UIColor whiteColor]];
    lb.hidden = YES;
    self.resultLabel = lb;
    [mview addSubview:lb];

    UIButton *cbtn = [UIButton buttonWithType:UIButtonTypeCustom];
    [cbtn setFrame:CGRectMake(20, CG_NAV_BAR_HEIGHT, 20, 20)];
    [cbtn setBackgroundImage:[UIImage imageNamed:@"meeting_reserve_refuse_blue"] forState:UIControlStateNormal];
    [cbtn addTargetActionBlock:^(UIButton * _Nonnull aButton) {
        [weakSelf closeView:nil];
    }];
    [self.view addSubview:cbtn];

    [self.videoCamera startCameraCapture];

    // Replay the scan-line animation every 2 s
    [self loopDrawLine];
    self.atimer = [NSAutoTimer scheduledTimerWithTimeInterval:2 target:self scheduledBlock:^(NSInteger timerIndex) {
        [weakSelf loopDrawLine];
    } userInfo:nil repeats:YES];
}
```
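The grey ring in `setupCameraUI` is drawn with `startAngle:0.55 * M_PI endAngle:0.45 * M_PI clockwise:YES`. In UIKit's coordinate system the y axis points down. So angle 0 is at 3 o'clock, $\pi/2$ is at 6 o'clock, and increasing angles run clockwise on screen.

Working out the arc from those numbers, with $r = sz/2$ as in the code:

$$
\Delta\theta_{\text{arc}} = (0.45\pi + 2\pi) - 0.55\pi = 1.9\pi \approx 342^\circ,
\qquad
\Delta\theta_{\text{gap}} = 2\pi - 1.9\pi = 0.1\pi = 18^\circ,
\qquad
L_{\text{arc}} = r\,\Delta\theta_{\text{arc}} = \frac{sz}{2}\cdot 1.9\pi
$$

The result is an open ring with an 18° gap centred on $\pi/2$, which is the bottom of the preview circle.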

```objc
- (void)loopDrawLine
{
    UIImageView *readLineView = self.maskView;
    // Park the scan image just above the visible circle...
    [readLineView setFrame:CGRectMake(0, -readLineView.bounds.size.height, readLineView.bounds.size.width, readLineView.bounds.size.height)];
    [UIView animateWithDuration:2 animations:^{
        // ...then move it down by two heights, so it sweeps all the way through
        // the circle and ends just below it
        CGRect ft = readLineView.frame;
        ft.origin.y += 2 * ft.size.height;
        readLineView.frame = ft;
    } completion:nil];
}

- (void)closeView:(void (^)(void))hideFinishedBlock
{
    [self.atimer invalidate];
    [self.videoCamera stopCameraCapture];
    [self dismissViewControllerAnimated:YES completion:^{
        if (hideFinishedBlock)
        {
            dispatch_async(dispatch_get_main_queue(), ^{
                hideFinishedBlock();
            });
        }
    }];
}

- (void)showWithResultsDelegateBlock:(void (^)(NSMutableDictionary *ej))block useOtherBlock:(void (^)(void))useOtherBlock
{
    [self setDelegateBlock:block];
    [self setUseOtherBlock:useOtherBlock];
}

// Upload the captured photo to the server
- (void)uploadFaceImgWithCompleteBlock:(void(^)(void))completeBlock
{
    WeakSelf(self);
    UIImage *image = self.capturedImage;
    // Call your own server API with `image` here, then call completeBlock on success
}
```

```objc
#pragma mark - Face Detection

- (UIImage *)sampleBufferToImage:(CMSampleBufferRef)sampleBuffer
{
    // Creating a CIContext is expensive; create it once and reuse it for every frame
    static CIContext *temporaryContext = nil;
    static dispatch_once_t contextOnceToken;
    dispatch_once(&contextOnceToken, ^{
        temporaryContext = [CIContext contextWithOptions:nil];
    });

    CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    CIImage *ciImage = [CIImage imageWithCVPixelBuffer:imageBuffer];
    CGImageRef videoImage = [temporaryContext createCGImage:ciImage fromRect:CGRectMake(0, 0, CVPixelBufferGetWidth(imageBuffer), CVPixelBufferGetHeight(imageBuffer))];
    UIImage *result = [[UIImage alloc] initWithCGImage:videoImage scale:1.0 orientation:UIImageOrientationUp];
    CGImageRelease(videoImage);
    return result;
}

- (NSArray<CIFaceFeature *> *)faceFeatureResults:(CIImage *)ciImage
{
    NSDictionary *options = @{CIDetectorAccuracy: CIDetectorAccuracyHigh,
                              CIDetectorMinFeatureSize: @(0.45),
                              CIDetectorSmile: @YES,
                              CIDetectorEyeBlink: @YES,
                              CIDetectorTracking: @YES};

    // Building a high-accuracy CIDetector on every frame is expensive, and tracking
    // only helps when the same detector sees consecutive frames, so create it once
    static CIDetector *detector = nil;
    static dispatch_once_t detectorOnceToken;
    dispatch_once(&detectorOnceToken, ^{
        detector = [CIDetector detectorOfType:CIDetectorTypeFace context:nil options:options];
    });

    // featuresInImage:options: returns an immutable NSArray, so don't cast it to NSMutableArray
    return (NSArray<CIFaceFeature *> *)[detector featuresInImage:ciImage options:options];
}
```
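`CIDetectorMinFeatureSize` is documented as a fraction (0.0 to 1.0) of the image's smaller dimension. So the `0.45` above means a face must span at least 45% of the frame's shorter side before it is reported.

Here is a worked example in plain C. It assumes a 480×360 frame, which is what `AVCaptureSessionPresetMedium` typically produces on iPhone; check the actual buffer size on your device. `min_face_pixels` is a hypothetical helper.

```c
#include <assert.h>

/* Smallest face, in pixels, that a detector configured with the given
 * CIDetectorMinFeatureSize fraction will report, for a width x height image.
 * The fraction applies to the image's minor (shorter) dimension. */
static int min_face_pixels(int width, int height, double fraction)
{
    assert(width > 0 && height > 0);
    assert(fraction >= 0.0 && fraction <= 1.0);
    int minor = width < height ? width : height;
    return (int)(minor * fraction + 0.5); /* round to the nearest pixel */
}
```

For a 480×360 frame, `min_face_pixels(480, 360, 0.45)` gives 162, so the face must be at least about 162 px across. The user therefore has to hold the phone fairly close, which suits a check-in screen. A smaller fraction would also report smaller, more distant faces.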

```objc
// GPUImageVideoCameraDelegate: called for every frame on GPUImage's camera processing queue
- (void)willOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer
{
    UIImage *resultImage = [self sampleBufferToImage:sampleBuffer];
    CIImage *ciImage = [[CIImage alloc] initWithImage:resultImage];
    WeakSelf(self);

    if (/*self.hasSmile && */(self.leftEyeClosed || self.rightEyeClosed))
    {
        // An earlier frame showed a closed eye: capture this frame if it still contains a face
        NSArray *faceFeatures = [self faceFeatureResults:ciImage];
        if (faceFeatures.count > 0)
        {
            [self.videoCamera stopCameraCapture];
            self.capturedImage = resultImage;
            self.videoCamera.delegate = nil;
            dispatch_async(dispatch_get_main_queue(), ^{
                // Invalidate on the main thread, where the timer was scheduled
                // (assuming NSAutoTimer wraps an NSTimer on the main run loop)
                [weakSelf.atimer invalidate];
                [weakSelf.resultLabel setHidden:YES];
                [weakSelf.animationView startAnimation];
                [weakSelf uploadFaceImgWithCompleteBlock:^{
                    [weakSelf.animationView stopAnimation];
                }];
            });
        }
        else
        {
            // Face lost: start over
            self.hasSmile = NO;
            self.leftEyeClosed = NO;
            self.rightEyeClosed = NO;
        }
    }
    else
    {
        NSArray<CIFaceFeature *> *faceFeatures = [self faceFeatureResults:ciImage];
        if (faceFeatures.count > 0)
        {
            dispatch_async(dispatch_get_main_queue(), ^{
                [weakSelf.resultLabel setHidden:YES];
            });

            // OR the flags together over every detected face
            __block BOOL hasSmile = NO;
            __block BOOL leftEyeClosed = NO;
            __block BOOL rightEyeClosed = NO;
            [faceFeatures enumerateObjectsUsingBlock:^(CIFaceFeature *ft, NSUInteger idx, BOOL * _Nonnull stop) {
                hasSmile |= ft.hasSmile;
                leftEyeClosed |= ft.leftEyeClosed;
                rightEyeClosed |= ft.rightEyeClosed;
            }];
            self.hasSmile = hasSmile;
            self.leftEyeClosed = leftEyeClosed;
            self.rightEyeClosed = rightEyeClosed;

            if (/*hasSmile &&*/ (leftEyeClosed || rightEyeClosed))
            {
                // Get ready to capture the next frame as the face photo
            }
            else
            {
                dispatch_async(dispatch_get_main_queue(), ^{
                    [weakSelf.tipsLabel setText:@"Please give us a big smile or blink"];
                });
            }
        }
        else
        {
            dispatch_async(dispatch_get_main_queue(), ^{
                [weakSelf.resultLabel setHidden:NO];
                [weakSelf.resultLabel setText:@"No face detected"];
            });
        }
    }
}

@end
```
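The decision logic in `-willOutputSampleBuffer:` is a small two-state machine:

1. Wait until some frame shows a face with a closed eye.
2. Capture the next frame that still contains a face. A frame without a face resets the flags and returns to step 1.

Below is a plain C sketch of that logic so it can be unit-tested without a camera. The type and function names are hypothetical. `FrameResult` stands in for what `CIDetector` reports: the face count, plus the eye flags OR-ed over all faces, as the enumerate block does.

```c
#include <assert.h>
#include <stdbool.h>

/* What the detector reported for one frame. */
typedef struct {
    int  faceCount;
    bool leftEyeClosed;   /* OR over all detected faces */
    bool rightEyeClosed;  /* OR over all detected faces */
} FrameResult;

typedef enum { WAIT_FOR_BLINK, BLINK_SEEN, CAPTURED } LivenessState;

/* One step of the per-frame logic in -willOutputSampleBuffer:. */
static LivenessState liveness_step(LivenessState s, FrameResult f)
{
    switch (s) {
    case WAIT_FOR_BLINK:
        /* A face with a closed eye arms the capture for the next frame;
           no face or open eyes keeps waiting (the UI shows a hint). */
        if (f.faceCount > 0 && (f.leftEyeClosed || f.rightEyeClosed))
            return BLINK_SEEN;
        return WAIT_FOR_BLINK;
    case BLINK_SEEN:
        /* Any face captures the frame; losing the face starts over. */
        return f.faceCount > 0 ? CAPTURED : WAIT_FOR_BLINK;
    case CAPTURED:
    default:
        return CAPTURED; /* capture stops after the photo is taken */
    }
}
```

As written, the photo is taken on the frame right after the closed-eye frame, whether or not the eyes have reopened. A stricter variant would require open eyes in the `BLINK_SEEN` step, so that an actual closed-then-open blink is needed. That variant is only a suggestion; it is not what the code above does.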

For the animation class used, refer to the following link: