
Android version: P

Preface

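These are study notes on the Binder material in the book 《深入理解Android内核设计思想》 (In-Depth Understanding of Android Kernel Design). The book explains Binder mainly from the designer's point of view, walking step by step through how the Binder mechanism is implemented.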

Binder can be compared to a network, with the following correspondences:

- Binder driver: router
- SM (ServiceManager): DNS
- Binder handle: IP address

1. Binder driver

The driver's initialization function binder_init is registered with:

```c
device_initcall(binder_init);
```

1.1 binder_open

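```c
static int binder_open(struct inode *nodp, struct file *filp)
{
    /* Allocate the binder_proc structure that manages this process's
     * binder state; every process has its own independent record. */
    proc = kzalloc(sizeof(*proc), GFP_KERNEL);

    /* Initialize the proc structure. */
    proc->tsk = current->group_leader;
    INIT_LIST_HEAD(&proc->todo);
    init_waitqueue_head(&proc->wait);

    /* Add binder_proc to the head of the global binder_procs list. */
    hlist_add_head(&proc->proc_node, &binder_procs);
    ...
}
```

binder_proc is the data structure the driver allocates for each application process that opens the device; it records the information the driver keeps about that process.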

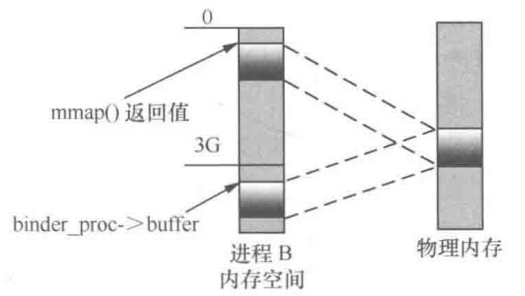

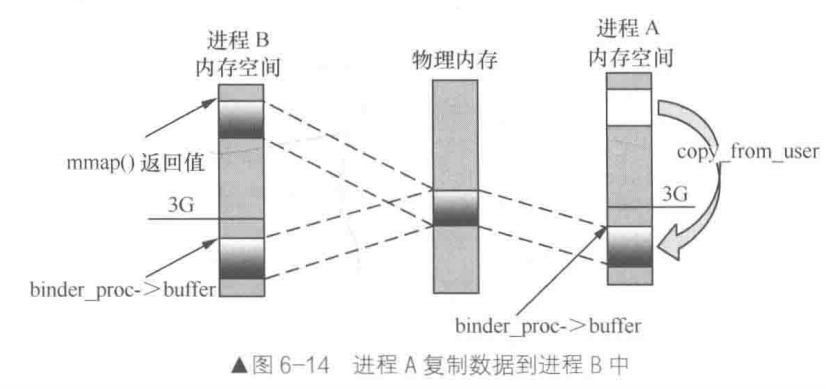
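To make the bookkeeping in binder_open concrete, here is a user-space model. It is only an illustration under stated assumptions: `fake_proc`, `fake_procs` and `fake_binder_open` are hypothetical names, and a plain singly linked list stands in for the kernel hlist. Each open allocates a fresh zeroed record (as kzalloc does) and pushes it onto the head of a global list (as hlist_add_head does).

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for struct binder_proc: one record per opener. */
struct fake_proc {
    int pid;                 /* owner process id */
    int todo_count;          /* stands in for the proc->todo work list */
    struct fake_proc *next;  /* link in the global list */
};

/* Hypothetical stand-in for the global binder_procs list head. */
static struct fake_proc *fake_procs = NULL;

/* Models binder_open: zero-allocate a record (like kzalloc), fill it in,
 * and insert it at the head of the global list (like hlist_add_head). */
struct fake_proc *fake_binder_open(int pid)
{
    assert(pid >= 0);
    struct fake_proc *proc = calloc(1, sizeof(*proc));
    if (proc == NULL)
        return NULL;
    proc->pid = pid;
    proc->next = fake_procs;   /* the newest record goes in front */
    fake_procs = proc;
    return proc;
}

/* Counts the records currently on the global list. */
int fake_proc_count(void)
{
    int n = 0;
    for (struct fake_proc *p = fake_procs; p != NULL; p = p->next)
        n++;
    return n;
}
```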

1.2 binder_mmap

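mmap maps a range of the process's user address space and a range of kernel address space onto the same physical memory.

Because kernel space is shared by all processes, if process B uses mmap so that its user space and the kernel space map to the same physical pages, then once process A copies its data into that kernel buffer with copy_from_user, process B can see the data directly. This is why Binder needs only one copy per transaction.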
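The idea of two virtual address ranges sharing the same physical pages can be tried from user space. This sketch is an analogy, not binder code: it maps one temporary file twice with MAP_SHARED, writes through the first mapping (standing in for the kernel buffer that copy_from_user fills) and reads the same bytes through the second, read-only mapping (standing in for the receiver's mapping). The helper `map_twice` is hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Maps the same file-backed pages twice: once read/write, once read-only.
 * Returns 0 on success, -1 on failure. */
int map_twice(size_t size, char **writer, const char **reader)
{
    assert(size > 0);
    FILE *f = tmpfile();                 /* anonymous backing file */
    if (f == NULL)
        return -1;
    int fd = fileno(f);
    if (ftruncate(fd, (off_t)size) != 0) {
        fclose(f);
        return -1;
    }
    void *w = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    void *r = mmap(NULL, size, PROT_READ, MAP_SHARED, fd, 0);
    fclose(f);                           /* mappings stay valid after close */
    if (w == MAP_FAILED || r == MAP_FAILED) {
        if (w != MAP_FAILED) munmap(w, size);
        if (r != MAP_FAILED) munmap(r, size);
        return -1;
    }
    *writer = w;
    *reader = r;
    return 0;
}
```

Writing a string through `writer` and reading it back through `reader` shows one physical copy visible at two virtual addresses, which is the property binder_mmap relies on.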

```c
static int binder_mmap(struct file *filp, struct vm_area_struct *vma)
{
    int ret;
    struct vm_struct *area;
    struct binder_proc *proc = filp->private_data;
    const char *failure_string;
    struct binder_buffer *buffer;

    if (proc->tsk != current)
        return -EINVAL;

    /* The mapping is capped at 4 MB */
    if ((vma->vm_end - vma->vm_start) > SZ_4M)
        vma->vm_end = vma->vm_start + SZ_4M;

    /* Reject mappings that request forbidden flags (VM_WRITE) */
    if (vma->vm_flags & FORBIDDEN_MMAP_FLAGS) {
        ret = -EPERM;
        failure_string = "bad vm_flags";
        goto err_bad_arg;
    }
    vma->vm_flags = (vma->vm_flags | VM_DONTCOPY) & ~VM_MAYWRITE;

    mutex_lock(&binder_mmap_lock);
    if (proc->buffer) {
        ret = -EBUSY;
        failure_string = "already mapped";
        goto err_already_mapped;
    }

    /* Reserve a kernel virtual area of the same size */
    area = get_vm_area(vma->vm_end - vma->vm_start, VM_IOREMAP);
    proc->buffer = area->addr;
    /* Fixed distance between the user mapping and the kernel mapping */
    proc->user_buffer_offset = vma->vm_start - (uintptr_t)proc->buffer;
    mutex_unlock(&binder_mmap_lock);

    proc->pages = kzalloc(sizeof(proc->pages[0]) * ((vma->vm_end - vma->vm_start) / PAGE_SIZE), GFP_KERNEL);
    if (proc->pages == NULL) {
        ret = -ENOMEM;
        failure_string = "alloc page array";
        goto err_alloc_pages_failed;
    }
    proc->buffer_size = vma->vm_end - vma->vm_start;

    vma->vm_ops = &binder_vm_ops;
    vma->vm_private_data = proc;

    /* Allocate and map only the first physical page for now */
    if (binder_update_page_range(proc, 1, proc->buffer, proc->buffer + PAGE_SIZE, vma)) {
        ret = -ENOMEM;
        failure_string = "alloc small buf";
        goto err_alloc_small_buf_failed;
    }
    buffer = proc->buffer;
    INIT_LIST_HEAD(&proc->buffers);
    /* Register the buffer with the structures proc uses to manage its memory:
     * the buffers list plus the free_buffers and allocated_buffers red-black trees */
    list_add(&buffer->entry, &proc->buffers);
    buffer->free = 1;
    binder_insert_free_buffer(proc, buffer);
    /* Asynchronous (one-way) transactions may use at most half of the space */
    proc->free_async_space = proc->buffer_size / 2;
    barrier();
    proc->files = get_files_struct(current);
    proc->vma = vma;
    proc->vma_vm_mm = vma->vm_mm;

    /*pr_info("binder_mmap: %d %lx-%lx maps %p\n",
        proc->pid, vma->vm_start, vma->vm_end, proc->buffer);*/
    return 0;

    /* err_* labels omitted in this excerpt */
}
```

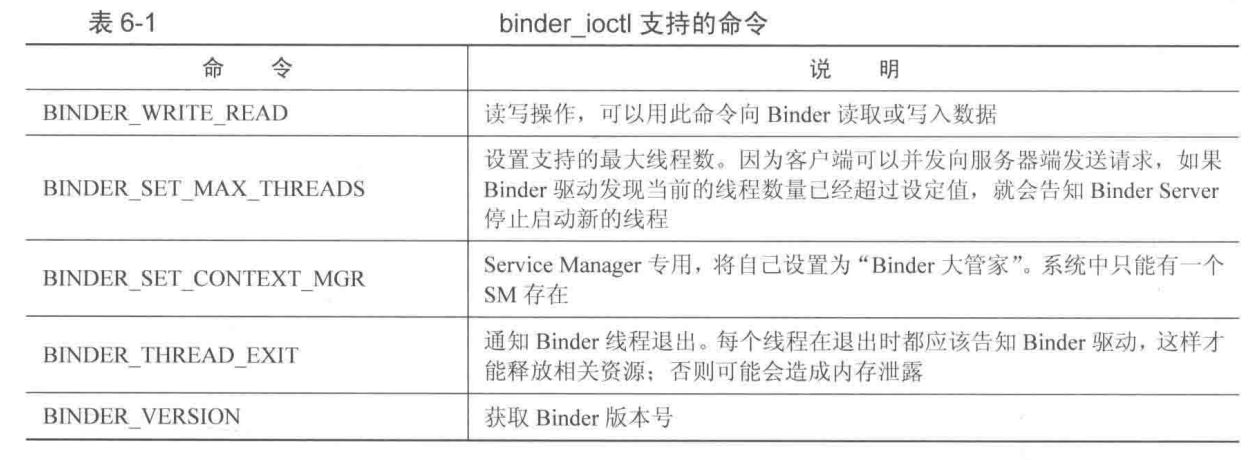

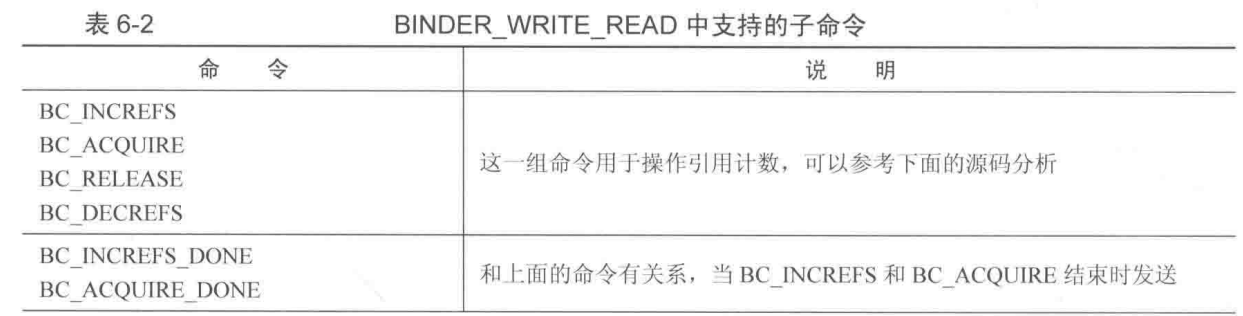
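The offset stored by binder_mmap lets the driver turn a kernel address inside the buffer into the matching user-space address by simple addition; in this version of the driver, binder_thread_read reports buffer addresses to user space as the kernel address plus proc->user_buffer_offset. The sketch below reproduces only that arithmetic and the 4 MB clamp, treating addresses as plain 64-bit integers; the function names and sample addresses are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define SZ_4M (4u * 1024u * 1024u)

/* Clamp the requested mapping length the way binder_mmap does. */
uint64_t clamp_map_size(uint64_t vm_start, uint64_t vm_end)
{
    assert(vm_end >= vm_start);
    uint64_t size = vm_end - vm_start;
    return size > SZ_4M ? SZ_4M : size;
}

/* user_buffer_offset = vm_start - kernel_buffer (unsigned, may wrap). */
uint64_t user_buffer_offset(uint64_t vm_start, uint64_t kernel_buffer)
{
    return vm_start - kernel_buffer;
}

/* Translate a kernel address inside the buffer into the user-space address.
 * Unsigned wrap-around keeps this correct even when kernel_buffer > vm_start. */
uint64_t kernel_to_user(uint64_t kernel_addr, uint64_t offset)
{
    return kernel_addr + offset;
}
```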

1.3 binder_ioctl

2. ServiceManager

The code lives mainly in the frameworks/native/cmds/servicemanager directory.

2.1 ServiceManager startup

```
service servicemanager /system/bin/servicemanager
    class core animation
    user system
    group system readproc
    critical
    onrestart restart healthd
    onrestart restart zygote
    onrestart restart audioserver
    onrestart restart media
    onrestart restart surfaceflinger
    onrestart restart inputflinger
    onrestart restart drm
    onrestart restart cameraserver
    onrestart restart keystore
    onrestart restart gatekeeperd
    onrestart restart thermalservice
    writepid /dev/cpuset/system-background/tasks
    shutdown critical
```

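ServiceManager is started by the init process, which parses the service definition above from the init .rc scripts. The corresponding executable is /system/bin/servicemanager, its source file is frameworks/native/cmds/servicemanager/service_manager.c, and its process name is /system/bin/servicemanager.

Whenever SM restarts, the other system services must restart as well (the onrestart lines above). Although SM plays the role of the DNS server, it is itself a Binder server too.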

```
/* frameworks/native/cmds/servicemanager/service_manager.c */
int main(int argc, char** argv)
|-- driver = "/dev/binder";
|-- binder_open(driver, 128*1024);
|-- binder_become_context_manager(bs)
|-- binder_loop(bs, svcmgr_handler)
```

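ServiceManager is the daemon process of Binder IPC and is itself a Binder service. Its main work consists of:

- binder_open: opens the binder driver, which creates a binder_proc for the current process in the driver, and maps 128 KB (the mapsize argument) of memory for Binder communication with mmap;
- binder_become_context_manager: registers SM as the context manager, the "steward" of all Binder services;
- binder_loop: enters an infinite loop and waits for requests arriving through the binder device.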

2.2 ServiceManager's loop

```c
void binder_loop(struct binder_state *bs, binder_handler func)
{
    int res;
    struct binder_write_read bwr;
    uint32_t readbuf[32];

    // write_size is 0, so the ioctl in the loop below only reads
    bwr.write_size = 0;
    bwr.write_consumed = 0;
    bwr.write_buffer = 0;

    readbuf[0] = BC_ENTER_LOOPER;
    binder_write(bs, readbuf, sizeof(uint32_t));

    for (;;) {
        bwr.read_size = sizeof(readbuf);
        bwr.read_consumed = 0;
        bwr.read_buffer = (uintptr_t) readbuf;

        res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

        if (res < 0) {
            ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));
            break;
        }

        res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);
        if (res == 0) {
            ALOGE("binder_loop: unexpected reply?!\n");
            break;
        }
        if (res < 0) {
            ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));
            break;
        }
    }
}
```

SM的loop循环为binder_loop, bwr为strucdt binder_write_read类型,binder驱动中将通过判断bwr中write和read的size来得出是读取还是写入,此处为读取消息

主要通过ioctl(bs->fd, BINDER_WRITE_READ, &bwr)从binder驱动中获取消息

获取到消息后将通过binder_parse对消息进行解析,解析的过程会调用到svcmgr_handler

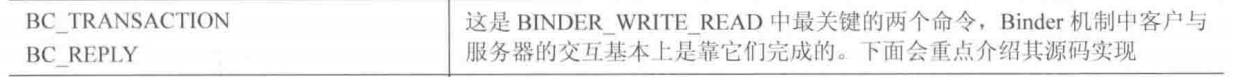

其中两个主要的命令是BR_TRANSACTION和BR_REPLY,后面主要讲述这两个命令的处理过程

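2.3 binder_parse

Before analyzing the commands binder_parse handles, consider SM's main responsibilities:

- registering a service: binding a service name to a handle;
- looking up a specific service by name and returning its handle;
- other query functions (for example listing services).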

```c
int binder_parse(struct binder_state *bs, struct binder_io *bio,
                 uintptr_t ptr, size_t size, binder_handler func)
{
    int r = 1;
    uintptr_t end = ptr + (uintptr_t) size;

    while (ptr < end) {
        uint32_t cmd = *(uint32_t *) ptr;
        ptr += sizeof(uint32_t);
        switch(cmd) {
        case BR_NOOP:
            break;
        case BR_TRANSACTION_COMPLETE:
            break;
        case BR_INCREFS:
        case BR_ACQUIRE:
        case BR_RELEASE:
        case BR_DECREFS:
            ptr += sizeof(struct binder_ptr_cookie);
            break;
        // Handling of BR_TRANSACTION
        case BR_TRANSACTION: {
            struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;
            if ((end - ptr) < sizeof(*txn)) {
                ALOGE("parse: txn too small!\n");
                return -1;
            }
            binder_dump_txn(txn);
            if (func) {
                unsigned rdata[256/4];
                struct binder_io msg;
                struct binder_io reply;
                int res;

                bio_init(&reply, rdata, sizeof(rdata), 4);
                bio_init_from_txn(&msg, txn);
                // func is svcmgr_handler; txn carries the transaction data
                res = func(bs, txn, &msg, &reply);
                if (txn->flags & TF_ONE_WAY) {
                    binder_free_buffer(bs, txn->data.ptr.buffer);
                } else {
                    binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);
                }
            }
            ptr += sizeof(*txn);
            break;
        }
        case BR_REPLY: {
            struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;
            if ((end - ptr) < sizeof(*txn)) {
                ALOGE("parse: reply too small!\n");
                return -1;
            }
            binder_dump_txn(txn);
            if (bio) {
                bio_init_from_txn(bio, txn);
                bio = 0;
            } else {
                /* todo FREE BUFFER */
            }
            ptr += sizeof(*txn);
            r = 0;
            break;
        }
        case BR_DEAD_BINDER: {
            struct binder_death *death = (struct binder_death *)(uintptr_t) *(binder_uintptr_t *)ptr;
            ptr += sizeof(binder_uintptr_t);
            death->func(bs, death->ptr);
            break;
        }
        case BR_FAILED_REPLY:
            r = -1;
            break;
        case BR_DEAD_REPLY:
            r = -1;
            break;
        default:
            ALOGE("parse: OOPS %d\n", cmd);
            return -1;
        }
    }

    return r;
}
```
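The pointer walk in binder_parse (read a 32-bit command, then skip a command-specific payload) can be modelled on a plain word array. In this sketch the command codes are hypothetical (the real BR_* values are ioctl-style encodings from the kernel UAPI header and are not reproduced here), a fixed four-word payload stands in for binder_transaction_data, and the return values mirror binder_parse: 1 to keep looping, 0 after a reply, -1 on error.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical command codes; NOT the real BR_* values. */
enum { CMD_NOOP = 1, CMD_TRANSACTION = 2, CMD_REPLY = 3 };
#define TXN_WORDS 4   /* hypothetical payload size, in 32-bit words */

/* Walks words[0..count) the way binder_parse walks its buffer.
 * *transactions receives the number of transactions handled. */
int parse_commands(const uint32_t *words, size_t count, int *transactions)
{
    assert(words != NULL || count == 0);
    const uint32_t *ptr = words;
    const uint32_t *end = words + count;
    int r = 1;
    *transactions = 0;
    while (ptr < end) {
        uint32_t cmd = *ptr++;             /* read the command word */
        switch (cmd) {
        case CMD_NOOP:
            break;
        case CMD_TRANSACTION:
        case CMD_REPLY:
            if ((size_t)(end - ptr) < TXN_WORDS)
                return -1;                 /* payload too small */
            if (cmd == CMD_TRANSACTION)
                (*transactions)++;         /* real code calls func() here */
            else
                r = 0;                     /* like BR_REPLY */
            ptr += TXN_WORDS;              /* skip the payload */
            break;
        default:
            return -1;                     /* like the "OOPS" branch */
        }
    }
    return r;
}
```

As in binder_loop, a caller would treat 0 as an unexpected reply and a negative value as an error.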

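2.3.1 BR_TRANSACTION

For a BR_TRANSACTION command, binder_parse calls svcmgr_handler. In line with the guesses about SM's responsibilities above, svcmgr_handler mainly handles the messages below. When it returns, binder_parse calls binder_send_reply (unless the call is one-way, in which case the buffer is simply freed) to send the result back to the driver, which forwards it to the client; binder_parse then moves on to the next command.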

```c
int svcmgr_handler(struct binder_state *bs,
                   struct binder_transaction_data *txn,
                   struct binder_io *msg,
                   struct binder_io *reply)
{
    struct svcinfo *si;
    uint16_t *s;
    size_t len;
    uint32_t handle;
    uint32_t strict_policy;
    int allow_isolated;
    uint32_t dumpsys_priority;

    //ALOGI("target=%p code=%d pid=%d uid=%d\n",
    //      (void*) txn->target.ptr, txn->code, txn->sender_pid, txn->sender_euid);

    if (txn->target.ptr != BINDER_SERVICE_MANAGER)
        return -1;

    if (txn->code == PING_TRANSACTION)
        return 0;

    // Equivalent to Parcel::enforceInterface(), reading the RPC
    // header with the strict mode policy mask and the interface name.
    // Note that we ignore the strict_policy and don't propagate it
    // further (since we do no outbound RPCs anyway).
    strict_policy = bio_get_uint32(msg);
    s = bio_get_string16(msg, &len);
    if (s == NULL) {
        return -1;
    }

    if ((len != (sizeof(svcmgr_id) / 2)) ||
        memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {
        fprintf(stderr,"invalid id %s\n", str8(s, len));
        return -1;
    }

    if (sehandle && selinux_status_updated() > 0) {
#ifdef VENDORSERVICEMANAGER
        struct selabel_handle *tmp_sehandle = selinux_android_vendor_service_context_handle();
#else
        struct selabel_handle *tmp_sehandle = selinux_android_service_context_handle();
#endif
        if (tmp_sehandle) {
            selabel_close(sehandle);
            sehandle = tmp_sehandle;
        }
    }

    switch(txn->code) {
    case SVC_MGR_GET_SERVICE:
    case SVC_MGR_CHECK_SERVICE:
        s = bio_get_string16(msg, &len);
        if (s == NULL) {
            return -1;
        }
        // Look up the service by name
        handle = do_find_service(s, len, txn->sender_euid, txn->sender_pid);
        if (!handle)
            break;
        bio_put_ref(reply, handle);
        return 0;

    case SVC_MGR_ADD_SERVICE:
        s = bio_get_string16(msg, &len);
        if (s == NULL) {
            return -1;
        }
        handle = bio_get_ref(msg);
        allow_isolated = bio_get_uint32(msg) ? 1 : 0;
        dumpsys_priority = bio_get_uint32(msg);
        if (do_add_service(bs, s, len, handle, txn->sender_euid, allow_isolated, dumpsys_priority,
                           txn->sender_pid))
            return -1;
        break;

    case SVC_MGR_LIST_SERVICES: {
        uint32_t n = bio_get_uint32(msg);
        uint32_t req_dumpsys_priority = bio_get_uint32(msg);

        if (!svc_can_list(txn->sender_pid, txn->sender_euid)) {
            ALOGE("list_service() uid=%d - PERMISSION DENIED\n",
                    txn->sender_euid);
            return -1;
        }
        si = svclist;
        // walk through the list of services n times skipping services that
        // do not support the requested priority
        while (si) {
            if (si->dumpsys_priority & req_dumpsys_priority) {
                if (n == 0) break;
                n--;
            }
            si = si->next;
        }
        if (si) {
            bio_put_string16(reply, si->name);
            return 0;
        }
        return -1;
    }
    default:
        ALOGE("unknown code %d\n", txn->code);
        return -1;
    }

    bio_put_uint32(reply, 0);
    return 0;
}
```
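Stripped of permission checks, svcmgr_handler maintains a linked list (svclist) that maps a service name to a handle. The sketch models just that bookkeeping: adding or re-registering a name, finding it (0 means "not found", since handle 0 is SM itself), and the SVC_MGR_LIST_SERVICES walk that returns the n-th entry whose priority bits match. It leaves out SELinux and uid checks, uses C strings instead of UTF-16, and `svc_add`, `svc_find` and `svc_list_nth` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define NAME_LEN 64

struct svc {                  /* hypothetical stand-in for struct svcinfo */
    char name[NAME_LEN];
    uint32_t handle;
    uint32_t priority;        /* like dumpsys_priority bits */
    struct svc *next;
};

static struct svc *svclist_model = NULL;

/* Returns the entry registered under name, or NULL. */
static struct svc *svc_lookup(const char *name)
{
    for (struct svc *s = svclist_model; s != NULL; s = s->next)
        if (strcmp(s->name, name) == 0)
            return s;
    return NULL;
}

/* Registers (or re-registers) name -> handle. Returns 0 on success. */
int svc_add(const char *name, uint32_t handle, uint32_t priority)
{
    assert(name != NULL);
    if (handle == 0 || strlen(name) >= NAME_LEN)
        return -1;            /* handle 0 is reserved for the manager itself */
    struct svc *s = svc_lookup(name);
    if (s == NULL) {
        s = calloc(1, sizeof(*s));
        if (s == NULL)
            return -1;
        strcpy(s->name, name);
        s->next = svclist_model;   /* new services go to the list head */
        svclist_model = s;
    }
    s->handle = handle;
    s->priority = priority;
    return 0;
}

/* Like do_find_service: 0 means "not found". */
uint32_t svc_find(const char *name)
{
    struct svc *s = svc_lookup(name);
    return s ? s->handle : 0;
}

/* Like the SVC_MGR_LIST_SERVICES walk: the n-th entry matching the mask. */
const char *svc_list_nth(uint32_t n, uint32_t mask)
{
    for (struct svc *s = svclist_model; s != NULL; s = s->next) {
        if (s->priority & mask) {
            if (n == 0)
                return s->name;
            n--;
        }
    }
    return NULL;
}
```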

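2.3.2 BR_REPLY

SM itself never sends requests to other services, so it never expects a BR_REPLY: binder_loop calls binder_parse with bio set to 0, and if a BR_REPLY does arrive binder_parse returns 0, which binder_loop logs as "unexpected reply?!" before leaving the loop. Other services, which do issue requests, handle BR_REPLY differently.

Taken together, SM's processing flow is: binder_loop reads commands from the driver, binder_parse dispatches them, svcmgr_handler serves each request, and binder_send_reply returns the result.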

3. 获取ServiceManager服务

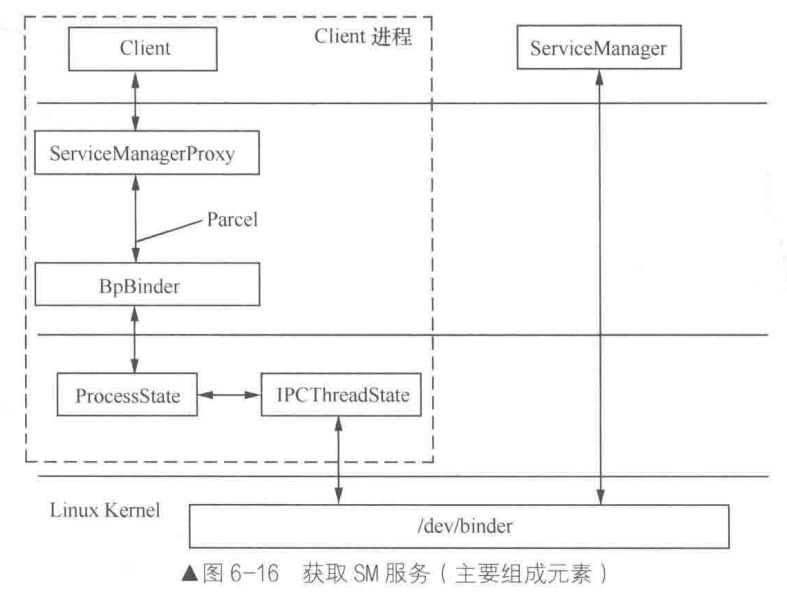

本节说明java层应用如何获取SM服务。

思路:从binder和SM设计的角度出发,考虑要提供SM的某个功能,应该怎么做。

一个进程只允许打开一次binder设备,执行一次mmap

ProcessState:专门管理应用程序中对binder的操作

IPCThreadState:管理线程与binder驱动通信

下面主要以getService为例进行说明

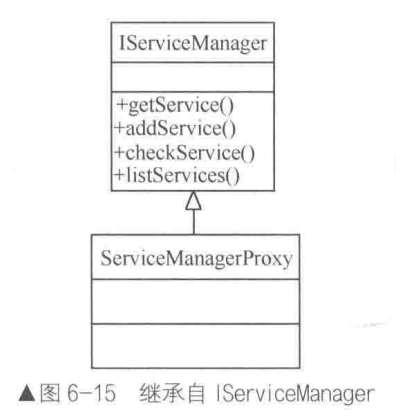

3.1 ServiceManager layering

- ServiceManagerProxy (Java layer)

Located in frameworks/base/core/java/android/os/ServiceManagerNative.java.

ServiceManagerProxy wraps the services provided by ServiceManager. It lives in the same process as its caller, and the interface it exposes is the same as ServiceManager's.

ServiceManagerProxy interacts with the Binder driver through BpBinder, which in turn relies on ProcessState / IPCThreadState.

- IServiceManager interface

Extracting the interface methods of ServiceManagerProxy gives IServiceManager; ServiceManagerProxy implements the IServiceManager interface.

- ServiceManager.java

ServiceManager.java is a further wrapper around ServiceManagerProxy: through ServiceManager.java one can create a ServiceManagerProxy and call its methods.

- IBinder

Located in frameworks/base/core/java/android/os/IBinder.java.

To make Binder as simple as possible to use, IBinder provides a uniform Binder interface, which includes:

```java
public boolean transact(int code, @NonNull Parcel data, @Nullable Parcel reply, int flags) throws RemoteException;
public @Nullable IInterface queryLocalInterface(@NonNull String descriptor);
```

A class that can create IBinder objects is also needed; it is in frameworks/base/core/java/com/android/internal/os/BinderInternal.java:

```java
public class BinderInternal {
    ...
    public static final native IBinder getContextObject();
    ...
}
```

This is a native method implemented through JNI; the corresponding JNI code is in frameworks/base/core/jni/android_util_Binder.cpp:

```cpp
static jobject android_os_BinderInternal_getContextObject(JNIEnv* env, jobject clazz)
{
    sp<IBinder> b = ProcessState::self()->getContextObject(NULL); // creates the BpBinder
    return javaObjectForIBinder(env, b); // wraps the native BpBinder in a Java BinderProxy
}
```

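Note: "context" can be understood as the process context, which is closely tied to IPC; since Binder is part of that context, the object returned here is called the context object.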

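- ProcessState

ProcessState needs the following properties:

- There is only one ProcessState instance per process, and the binder device is opened and memory-mapped only when that object is created.
- It can provide IPC services.
- It divides the work sensibly with IPCThreadState.

Code location: frameworks/native/libs/binder/ProcessState.cpp

ProcessState is created as a singleton: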

```cpp
sp<ProcessState> ProcessState::self()
{
    Mutex::Autolock _l(gProcessMutex);
    if (gProcess != NULL) {
        return gProcess;
    }
    gProcess = new ProcessState("/dev/binder");
    return gProcess;
}
```
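ProcessState::self() is a lazily created singleton guarded by a mutex. The same pattern in C with a pthread mutex looks like this; `process_state_self`, `struct process_state` and the construction counter are hypothetical, and no device is opened, so the object only records that it was built once.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

struct process_state {
    int driver_fd;   /* the real class keeps the /dev/binder fd here */
};

static pthread_mutex_t g_process_mutex = PTHREAD_MUTEX_INITIALIZER;
static struct process_state *g_process = NULL;
static int g_constructions = 0;   /* for demonstration only */

struct process_state *process_state_self(void)
{
    pthread_mutex_lock(&g_process_mutex);   /* like Mutex::Autolock */
    if (g_process == NULL) {
        g_process = calloc(1, sizeof(*g_process));
        assert(g_process != NULL);
        g_process->driver_fd = -1;          /* no real device in this sketch */
        g_constructions++;
    }
    struct process_state *p = g_process;
    pthread_mutex_unlock(&g_process_mutex);
    return p;
}
```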

The ProcessState constructor is:

```cpp
ProcessState::ProcessState(const char *driver)
    : mDriverName(String8(driver))
    , mDriverFD(open_driver(driver)) // opens the binder device; the mmap happens in the body below
    , mVMStart(MAP_FAILED)
    , mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)
    , mThreadCountDecrement(PTHREAD_COND_INITIALIZER)
    , mExecutingThreadsCount(0)
    , mMaxThreads(DEFAULT_MAX_BINDER_THREADS)
    , mStarvationStartTimeMs(0)
    , mManagesContexts(false)
    , mBinderContextCheckFunc(NULL)
    , mBinderContextUserData(NULL)
    , mThreadPoolStarted(false)
    , mThreadPoolSeq(1)
{
    if (mDriverFD >= 0) {
        // mmap the binder, providing a chunk of virtual address space to receive transactions.
        mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
        if (mVMStart == MAP_FAILED) {
            // *sigh*
            ALOGE("Using %s failed: unable to mmap transaction memory.\n", mDriverName.c_str());
            close(mDriverFD);
            mDriverFD = -1;
            mDriverName.clear();
        }
    }

    LOG_ALWAYS_FATAL_IF(mDriverFD < 0, "Binder driver could not be opened. Terminating.");
}
```

Now let's focus on android_os_BinderInternal_getContextObject, which calls ProcessState::getContextObject:

```cpp
sp<IBinder> ProcessState::getContextObject(const sp<IBinder>& /*caller*/)
{
    // Handle 0 stands for ServiceManager
    sp<IBinder> context = getStrongProxyForHandle(0);
    ...
}
```

```cpp
sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)
{
    sp<IBinder> result;
    AutoMutex _l(mLock);
    // ProcessState keeps a table of handle entries; looking up a handle
    // finds (or creates) the slot for that service
    handle_entry* e = lookupHandleLocked(handle);
    if (e != nullptr) {
        IBinder* b = e->binder;
        // A new BpBinder is created if either condition holds: the slot has no
        // binder yet, or a weak reference to the existing one can no longer be taken
        if (b == nullptr || !e->refs->attemptIncWeak(this)) {
            if (handle == 0) {
                Parcel data;
                status_t status = IPCThreadState::self()->transact(
                        0, IBinder::PING_TRANSACTION, data, nullptr, 0);
                if (status == DEAD_OBJECT)
                    return nullptr;
            }
            // Create the BpBinder
            b = BpBinder::create(handle);
            e->binder = b;
            if (b) e->refs = b->getWeakRefs();
            result = b;
        } else {
            // This little bit of nastyness is to allow us to add a primary
            // reference to the remote proxy when this team doesn't have one
            // but another team is sending the handle to us.
            result.force_set(b);
            e->refs->decWeak(this);
        }
    }
    return result;
}
```
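The table behind getStrongProxyForHandle can be modelled as a growable array indexed by handle: lookupHandleLocked grows it on demand, and a proxy is created only when the slot is empty, so repeated lookups of one handle share a single object. This sketch uses hypothetical names and ignores reference counting, the attemptIncWeak path and the PING_TRANSACTION check for handle 0.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

struct proxy { int32_t handle; };        /* hypothetical BpBinder stand-in */

static struct proxy **handle_table = NULL;
static size_t handle_table_len = 0;

/* Like lookupHandleLocked: grow the table so that index `handle` exists. */
static struct proxy **lookup_slot(int32_t handle)
{
    if (handle < 0)
        return NULL;
    size_t need = (size_t)handle + 1;
    if (need > handle_table_len) {
        struct proxy **t = realloc(handle_table, need * sizeof(*t));
        if (t == NULL)
            return NULL;
        for (size_t i = handle_table_len; i < need; i++)
            t[i] = NULL;                 /* new slots start empty */
        handle_table = t;
        handle_table_len = need;
    }
    return &handle_table[handle];
}

/* Returns the cached proxy for handle, creating it on first use. */
struct proxy *get_proxy_for_handle(int32_t handle)
{
    struct proxy **slot = lookup_slot(handle);
    if (slot == NULL)
        return NULL;
    if (*slot == NULL) {
        *slot = malloc(sizeof(**slot));
        if (*slot == NULL)
            return NULL;
        (*slot)->handle = handle;
    }
    assert((*slot)->handle == handle);
    return *slot;
}
```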

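What is created here is the native-layer binder; a Java-layer IBinder (a BinderProxy) wrapping it is created later and handed to the Java layer.

The BpBinder constructor is shown below. mHandle stores the handle passed in; BpBinder is the native IBinder implementation that refers to the remote object through this handle.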

```cpp
BpBinder::BpBinder(int32_t handle, int32_t trackedUid)
    : mHandle(handle)
    , mStability(0)
    , mAlive(1)
    , mObitsSent(0)
    , mObituaries(nullptr)
    , mTrackedUid(trackedUid)
{
    ALOGV("Creating BpBinder %p handle %d\n", this, mHandle);

    extendObjectLifetime(OBJECT_LIFETIME_WEAK);
    IPCThreadState::self()->incWeakHandle(handle, this);
}
```

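TODO: the weak-pointer handling above.

So far we have seen that ProcessState mainly opens the binder device, performs the mmap, and creates native BpBinder objects.

This confirms that the IBinder is created through ProcessState. In the native layer it is a BpBinder, which must still be wrapped into a Java-layer BinderProxy (defined in Binder.java).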

- IPCThreadState

Code location: frameworks/native/libs/binder/IPCThreadState.cpp

IPCThreadState is responsible for exchanging the actual commands with the binder driver.

```cpp
static std::atomic<bool> gHaveTLS(false);

IPCThreadState* IPCThreadState::self()
{
    if (gHaveTLS.load(std::memory_order_acquire)) { // gHaveTLS starts out false
restart:
        const pthread_key_t k = gTLS;
        IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);
        if (st) return st;
        return new IPCThreadState;
    }

    // Racey, heuristic test for simultaneous shutdown.
    if (gShutdown.load(std::memory_order_relaxed)) {
        ALOGW("Calling IPCThreadState::self() during shutdown is dangerous, expect a crash.\n");
        return nullptr;
    }

    pthread_mutex_lock(&gTLSMutex);
    if (!gHaveTLS.load(std::memory_order_relaxed)) {
        int key_create_value = pthread_key_create(&gTLS, threadDestructor);
        if (key_create_value != 0) {
            pthread_mutex_unlock(&gTLSMutex);
            ALOGW("IPCThreadState::self() unable to create TLS key, expect a crash: %s\n",
                    strerror(key_create_value));
            return nullptr;
        }
        gHaveTLS.store(true, std::memory_order_release);
    }
    pthread_mutex_unlock(&gTLSMutex);
    goto restart;
}
```

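Initially gHaveTLS is false, so the first call creates the TLS key gTLS under gTLSMutex and then jumps back to restart. There, pthread_getspecific returns the calling thread's IPCThreadState if it already has one; otherwise a new one is created, and its constructor stores it with pthread_setspecific. IPCThreadState is therefore a per-thread singleton: each thread has exactly one instance, whereas ProcessState has one per process.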
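The TLS logic in IPCThreadState::self() boils down to one pthread key per process plus one object per thread, stored under that key and freed by a destructor when the thread exits. Here is a C sketch of that pattern; `thread_state_self`, `struct thread_state` and `new_thread_gets_distinct_state` are hypothetical, and pthread_once replaces the gHaveTLS/mutex double check.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

struct thread_state { int out_capacity; };   /* hypothetical per-thread data */

static pthread_key_t g_tls;
static pthread_once_t g_tls_once = PTHREAD_ONCE_INIT;

static void thread_destructor(void *p) { free(p); }  /* runs at thread exit */
static void make_key(void) { pthread_key_create(&g_tls, thread_destructor); }

/* Returns the calling thread's state, creating it on first use. */
struct thread_state *thread_state_self(void)
{
    pthread_once(&g_tls_once, make_key);     /* the key is created exactly once */
    struct thread_state *st = pthread_getspecific(g_tls);
    if (st == NULL) {
        st = calloc(1, sizeof(*st));
        assert(st != NULL);
        st->out_capacity = 256;
        pthread_setspecific(g_tls, st);      /* like the constructor does */
    }
    return st;
}

struct probe { const struct thread_state *other; int distinct; };

static void *worker(void *arg)
{
    struct probe *pr = arg;
    struct thread_state *mine = thread_state_self();
    /* differs from the other thread's object, and is stable on this thread */
    pr->distinct = (mine != pr->other) && (thread_state_self() == mine);
    return NULL;
}

/* Returns 1 if a new thread gets its own state, -1 if no thread could be made. */
int new_thread_gets_distinct_state(const struct thread_state *other)
{
    struct probe pr = { other, 0 };
    pthread_t t;
    if (pthread_create(&t, NULL, worker, &pr) != 0)
        return -1;
    pthread_join(t, NULL);
    return pr.distinct;
}
```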

The IPCThreadState constructor:

```cpp
IPCThreadState::IPCThreadState()
    : mProcess(ProcessState::self()),
      mServingStackPointer(nullptr),
      mWorkSource(kUnsetWorkSource),
      mPropagateWorkSource(false),
      mStrictModePolicy(0),
      mLastTransactionBinderFlags(0),
      mCallRestriction(mProcess->mCallRestriction)
{
    pthread_setspecific(gTLS, this);
    clearCaller();
    mIn.setDataCapacity(256);
    mOut.setDataCapacity(256);
}
```

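mIn and mOut are both Parcels. mIn receives data sent by the binder driver and mOut holds data to be sent to the driver; both start with a capacity of 256 bytes. This is an initial capacity rather than a hard limit, since a Parcel grows as needed.

The last step of the BpBinder constructor calls IPCThreadState::self()->incWeakHandle(handle, this):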

```cpp
void IPCThreadState::incWeakHandle(int32_t handle, BpBinder *proxy)
{
    LOG_REMOTEREFS("IPCThreadState::incWeakHandle(%d)\n", handle);
    mOut.writeInt32(BC_INCREFS);
    mOut.writeInt32(handle);
    // Create a temp reference until the driver has handled this command.
    proxy->getWeakRefs()->incWeak(mProcess.get());
    mPostWriteWeakDerefs.push(proxy->getWeakRefs());
}
```
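setDataCapacity(256) only sets a starting capacity, and incWeakHandle merely appends two 32-bit values (BC_INCREFS and the handle) to mOut; nothing reaches the driver until the next talkWithDriver. The sketch below is a minimal growable buffer with a read cursor in the spirit of mIn/mOut. It is not Parcel's implementation (which also tracks object offsets, alignment and error state), and all names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

struct buf {
    uint8_t *data;
    size_t size;      /* bytes written (like dataSize()) */
    size_t capacity;  /* bytes allocated (like dataCapacity()) */
    size_t pos;       /* read cursor (like dataPosition()) */
};

int buf_init(struct buf *b, size_t capacity)
{
    b->data = malloc(capacity);
    b->size = b->pos = 0;
    b->capacity = b->data ? capacity : 0;
    return b->data ? 0 : -1;
}

/* Appends an int32, doubling the capacity when it runs out. */
int buf_write_int32(struct buf *b, int32_t v)
{
    if (b->size + sizeof(v) > b->capacity) {
        size_t cap = b->capacity ? b->capacity * 2 : 64;
        while (cap < b->size + sizeof(v))
            cap *= 2;
        uint8_t *d = realloc(b->data, cap);
        if (d == NULL)
            return -1;
        b->data = d;
        b->capacity = cap;
    }
    memcpy(b->data + b->size, &v, sizeof(v));
    b->size += sizeof(v);
    assert(b->size <= b->capacity);
    return 0;
}

/* Reads the next int32; returns -1 when no data is left. */
int buf_read_int32(struct buf *b, int32_t *out)
{
    if (b->pos + sizeof(*out) > b->size)
        return -1;
    memcpy(out, b->data + b->pos, sizeof(*out));
    b->pos += sizeof(*out);
    return 0;
}
```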

3.2 getService

Located in frameworks/base/core/java/android/os/ServiceManager.java:

```java
public static IBinder getService(String name) {
    try {
        IBinder service = sCache.get(name);
        if (service != null) {
            return service;
        } else {
            return Binder.allowBlocking(rawGetService(name));
        }
    } catch (RemoteException e) {
        Log.e(TAG, "error in getService", e);
    }
    return null;
}

private static IBinder rawGetService(String name) throws RemoteException {
    final long start = sStatLogger.getTime();

    final IBinder binder = getIServiceManager().getService(name);
    ......
}
```

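getIServiceManager() is defined as follows. It avoids creating sServiceManager more than once: the first call creates it and later calls return the cached object. As shown below, what it returns is a ServiceManagerProxy.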

```java
private static IServiceManager getIServiceManager() {
    if (sServiceManager != null) {
        return sServiceManager;
    }

    // Find the service manager
    // ServiceManagerNative.asInterface returns a ServiceManagerProxy object.
    // BinderInternal.getContextObject() creates the IBinder in native code:
    // a BpBinder in the native layer, a BinderProxy in the Java layer
    // (see BinderInternal in 3.1).
    sServiceManager = ServiceManagerNative
            .asInterface(Binder.allowBlocking(BinderInternal.getContextObject()));
    return sServiceManager;
}
```

Here another new class appears, ServiceManagerNative, in frameworks/base/core/java/android/os/ServiceManagerNative.java:

```java
static public IServiceManager asInterface(IBinder obj)
{
    if (obj == null) {
        return null;
    }
    IServiceManager in =
        (IServiceManager)obj.queryLocalInterface(descriptor);
    if (in != null) {
        return in;
    }

    return new ServiceManagerProxy(obj);
}
```

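asInterface converts the IBinder object into an IServiceManager. For a remote binder, queryLocalInterface returns null (a BinderProxy has no local interface), so a ServiceManagerProxy is created. The IBinder here comes from BinderInternal.getContextObject() and can be thought of as a tool for talking to the underlying binder driver; getContextObject is analyzed in the IBinder part of 3.1.

The ServiceManagerProxy constructor takes the IBinder object, which signals that ServiceManagerProxy needs to deal with the underlying binder. getIServiceManager therefore ends up with a ServiceManagerProxy object: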

```java
public ServiceManagerProxy(IBinder remote) {
    mRemote = remote;
}
```

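The ServiceManagerProxy constructor saves the IBinder object in mRemote. The book's author compares IBinder to a restaurant's telephone. Now that getIServiceManager() has given us a ServiceManagerProxy, let's continue with ServiceManagerProxy.getService: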

```java
public IBinder getService(String name) throws RemoteException {
    // 1. Pack the request into a Parcel
    Parcel data = Parcel.obtain();
    Parcel reply = Parcel.obtain();
    data.writeInterfaceToken(IServiceManager.descriptor);
    data.writeString(name);
    // 2. Call IBinder.transact; internally it talks to the Binder driver through
    //    ProcessState and IPCThreadState (the calling thread blocks here)
    mRemote.transact(GET_SERVICE_TRANSACTION, data, reply, 0);
    // 3. Read the result
    IBinder binder = reply.readStrongBinder();
    reply.recycle();
    data.recycle();
    return binder;
}
```

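mRemote holds the IBinder object used to execute transact; that IBinder in turn talks to the Binder driver through ProcessState and IPCThreadState.

Summary: ServiceManager.java is a further wrapper around ServiceManagerProxy. Taking getService as an example, it obtains a ServiceManagerProxy object, which uses its IBinder object to reach the Binder driver through ProcessState and IPCThreadState. That IBinder is obtained the first time getIServiceManager() runs, via BinderInternal.getContextObject().

Below, transact is used as the example to follow the path from BinderProxy.transact to BpBinder::transact.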

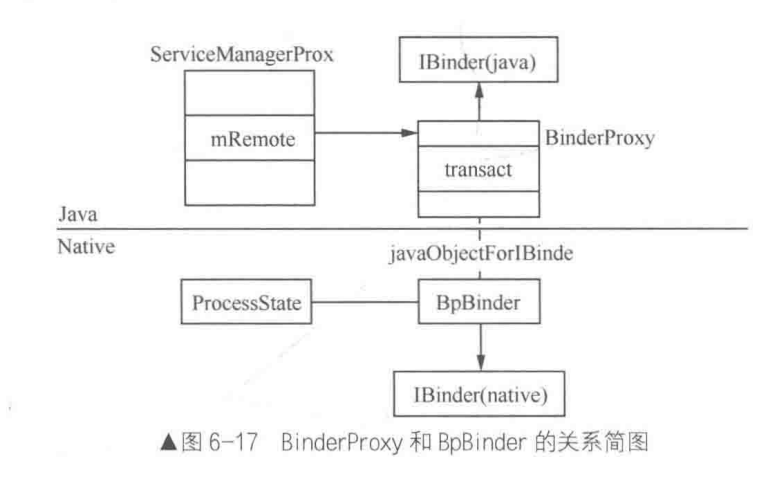

BpBinder and BinderProxy implement the native IBinder and the Java IBinder respectively; they are created by ProcessState::self()->getContextObject(NULL) and by javaObjectForIBinder.

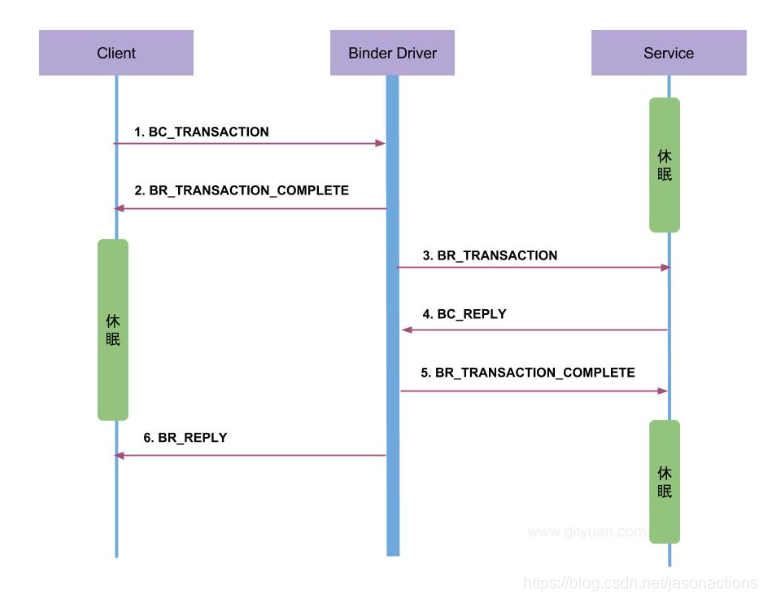

The transact flow is shown above. BinderProxy is defined in frameworks/base/core/java/android/os/Binder.java, and its transact method is:

```java
final class BinderProxy implements IBinder {
    ......
    public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {
        Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");

        if (mWarnOnBlocking && ((flags & FLAG_ONEWAY) == 0)) {
            // For now, avoid spamming the log by disabling after we've logged
            // about this interface at least once
            mWarnOnBlocking = false;
            Log.w(Binder.TAG, "Outgoing transactions from this process must be FLAG_ONEWAY",
                    new Throwable());
        }

        final boolean tracingEnabled = Binder.isTracingEnabled();
        if (tracingEnabled) {
            final Throwable tr = new Throwable();
            Binder.getTransactionTracker().addTrace(tr);
            StackTraceElement stackTraceElement = tr.getStackTrace()[1];
            Trace.traceBegin(Trace.TRACE_TAG_ALWAYS,
                    stackTraceElement.getClassName() + "." + stackTraceElement.getMethodName());
        }
        try {
            return transactNative(code, data, reply, flags);
        } finally {
            if (tracingEnabled) {
                Trace.traceEnd(Trace.TRACE_TAG_ALWAYS);
            }
        }
    }
    ......
}
```

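It finally calls transactNative(code, data, reply, flags), a native method that holds the real implementation. As one would expect, it ends up in the BpBinder created earlier by ProcessState::self()->getContextObject. The JNI implementation is in frameworks/base/core/jni/android_util_Binder.cpp: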

```cpp
static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
        jint code, jobject dataObj, jobject replyObj, jint flags) // throws RemoteException
{
    ...
    // dataObj and replyObj are the 2nd and 3rd arguments of the Java transact;
    // they are first converted into native Parcels
    Parcel* data = parcelForJavaObject(env, dataObj);
    ...
    Parcel* reply = parcelForJavaObject(env, replyObj);
    ...
    IBinder* target = getBPNativeData(env, obj)->mObject.get();
    ...
    bool time_binder_calls;
    int64_t start_millis;
    if (kEnableBinderSample) {
        // Only log the binder call duration for things on the Java-level main thread.
        // But if we don't
        time_binder_calls = should_time_binder_calls();

        if (time_binder_calls) {
            start_millis = uptimeMillis();
        }
    }

    //printf("Transact from Java code to %p sending: ", target); data->print();
    status_t err = target->transact(code, *data, reply, flags);
    //if (reply) printf("Transact from Java code to %p received: ", target); reply->print();

    if (kEnableBinderSample) {
        if (time_binder_calls) {
            conditionally_log_binder_call(start_millis, target, code);
        }
    }

    if (err == NO_ERROR) {
        return JNI_TRUE;
    } else if (err == UNKNOWN_TRANSACTION) {
        return JNI_FALSE;
    }

    signalExceptionForError(env, obj, err, true /*canThrowRemoteException*/, data->dataSize());
    return JNI_FALSE;
}
```

getBPNativeData is implemented as follows:

```cpp
BinderProxyNativeData* getBPNativeData(JNIEnv* env, jobject obj) {
    // Read the BinderProxy's mNativeData field (its field ID is cached in the
    // global gBinderProxyOffsets). The value is a pointer to a
    // BinderProxyNativeData, which holds the BpBinder (definition below).
    return (BinderProxyNativeData *) env->GetLongField(obj, gBinderProxyOffsets.mNativeData);
}
```

BinderProxyNativeData is defined as follows; it contains the BpBinder:

```cpp
struct BinderProxyNativeData {
    // Both fields are constant and not null once javaObjectForIBinder returns this as
    // part of a BinderProxy.

    // The native IBinder proxied by this BinderProxy.
    sp<IBinder> mObject;

    // Death recipients for mObject. Reference counted only because DeathRecipients
    // hold a weak reference that can be temporarily promoted.
    sp<DeathRecipientList> mOrgue; // Death recipients for mObject.
};
```

In android_os_BinderProxy_transact above,

`IBinder* target = getBPNativeData(env, obj)->mObject.get();`

obtains the BpBinder pointer, and then

`status_t err = target->transact(code, *data, reply, flags);`

is called. So target->transact here is really BpBinder::transact, in frameworks/native/libs/binder/BpBinder.cpp:

```cpp
status_t BpBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    // Once a binder has died, it will never come back to life.
    if (mAlive) {
        status_t status = IPCThreadState::self()->transact(
            mHandle, code, data, reply, flags);
        if (status == DEAD_OBJECT) mAlive = 0;
        return status;
    }

    return DEAD_OBJECT;
}
```

So BpBinder ultimately works through IPCThreadState, and IPCThreadState is what talks to the binder driver.

To summarize, the call hierarchy is:

```
client
  -> ServiceManager.java
  -> ServiceManagerNative
  -> ServiceManagerProxy
  -> BinderProxy
  -> BpBinder
  -> IPCThreadState
```

For getService, code here is GET_SERVICE_TRANSACTION:

```cpp
status_t IPCThreadState::transact(int32_t handle,
                                  uint32_t code, const Parcel& data,
                                  Parcel* reply, uint32_t flags)
{
    status_t err;
    flags |= TF_ACCEPT_FDS;
    // Pack the data into the format defined by the binder driver protocol; the result goes into mOut
    err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, nullptr);
    if (err != NO_ERROR) {
        if (reply) reply->setError(err);
        return (mLastError = err);
    }
    if ((flags & TF_ONE_WAY) == 0) {
        if (UNLIKELY(mCallRestriction != ProcessState::CallRestriction::NONE)) {
            if (mCallRestriction == ProcessState::CallRestriction::ERROR_IF_NOT_ONEWAY) {
                ALOGE("Process making non-oneway call (code: %u) but is restricted.", code);
                CallStack::logStack("non-oneway call", CallStack::getCurrent(10).get(),
                        ANDROID_LOG_ERROR);
            } else /* FATAL_IF_NOT_ONEWAY */ {
                LOG_ALWAYS_FATAL("Process may not make oneway calls (code: %u).", code);
            }
        }
        // Synchronous call: wait until the reply arrives
        if (reply) {
            err = waitForResponse(reply);
        } else {
            Parcel fakeReply;
            err = waitForResponse(&fakeReply);
        }
    } else {
        // One-way call: only wait for BR_TRANSACTION_COMPLETE, not for a reply
        err = waitForResponse(nullptr, nullptr);
    }
    return err;
}
```

writeTransactionData packs the data into the format defined by the binder driver protocol and appends the result to mOut:

```cpp
status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
    int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
{
    binder_transaction_data tr;

    tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */
    tr.target.handle = handle;
    tr.code = code;
    tr.flags = binderFlags;
    tr.cookie = 0;
    tr.sender_pid = 0;
    tr.sender_euid = 0;

    const status_t err = data.errorCheck();
    if (err == NO_ERROR) {
        tr.data_size = data.ipcDataSize();
        tr.data.ptr.buffer = data.ipcData();
        tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);
        tr.data.ptr.offsets = data.ipcObjects();
    } else if (statusBuffer) {
        tr.flags |= TF_STATUS_CODE;
        *statusBuffer = err;
        tr.data_size = sizeof(status_t);
        tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);
        tr.offsets_size = 0;
        tr.data.ptr.offsets = 0;
    } else {
        return (mLastError = err);
    }

    mOut.writeInt32(cmd);
    mOut.write(&tr, sizeof(tr));

    return NO_ERROR;
}
```

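At this point transact has filled mOut according to the binder protocol: only the BC_TRANSACTION command and its binder_transaction_data have been written, and nothing has been sent yet.

TODO: how does tr.data.ptr.buffer get associated with the binder mmap region?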
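writeTransactionData sends nothing itself: it appends a 32-bit command followed by the raw bytes of a binder_transaction_data to mOut. The sketch shows that framing with a simplified, hypothetical `txn` struct (the real binder_transaction_data has more fields and a union for the target), a fixed byte array instead of a Parcel, and a made-up command value.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct txn {                 /* simplified stand-in for binder_transaction_data */
    uint32_t handle;         /* target handle (0 = ServiceManager) */
    uint32_t code;           /* e.g. the GET_SERVICE transaction code */
    uint32_t flags;
    uint64_t data_size;
    uint64_t buffer;         /* address of the payload in the sender */
};

#define CMD_TRANSACTION_MODEL 1u   /* made-up value, not BC_TRANSACTION */

/* Appends [cmd][txn] to out at offset *len. Returns 0, or -1 if it won't fit. */
int write_transaction(uint8_t *out, size_t cap, size_t *len,
                      uint32_t cmd, const struct txn *tr)
{
    assert(out != NULL && len != NULL && tr != NULL);
    if (*len + sizeof(cmd) + sizeof(*tr) > cap)
        return -1;
    memcpy(out + *len, &cmd, sizeof(cmd));   /* the command word first */
    *len += sizeof(cmd);
    memcpy(out + *len, tr, sizeof(*tr));     /* then the struct, byte for byte */
    *len += sizeof(*tr);
    return 0;
}
```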

```cpp
status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
{
    uint32_t cmd;
    int32_t err;

    while (1) {
        // Wrap the pending data in BINDER_WRITE_READ and exchange it with the driver
        if ((err=talkWithDriver()) < NO_ERROR) break;
        // mIn now holds whatever the driver returned, e.g. BR_TRANSACTION_COMPLETE,
        // and later BR_REPLY once the server (SM, for getService) has replied
        err = mIn.errorCheck();
        if (err < NO_ERROR) break;
        if (mIn.dataAvail() == 0) continue;

        cmd = (uint32_t)mIn.readInt32();

        IF_LOG_COMMANDS() {
            alog << "Processing waitForResponse Command: "
                << getReturnString(cmd) << endl;
        }

        switch (cmd) {
        case BR_TRANSACTION_COMPLETE:
            if (!reply && !acquireResult) goto finish;
            break;

        case BR_DEAD_REPLY:
            err = DEAD_OBJECT;
            goto finish;

        case BR_FAILED_REPLY:
            err = FAILED_TRANSACTION;
            goto finish;

        case BR_FROZEN_REPLY:
            err = FAILED_TRANSACTION;
            goto finish;

        case BR_ACQUIRE_RESULT:
            {
                ALOG_ASSERT(acquireResult != NULL, "Unexpected brACQUIRE_RESULT");
                const int32_t result = mIn.readInt32();
                if (!acquireResult) continue;
                *acquireResult = result ? NO_ERROR : INVALID_OPERATION;
            }
            goto finish;

        case BR_REPLY:
            {
                binder_transaction_data tr;
                err = mIn.read(&tr, sizeof(tr));
                ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");
                if (err != NO_ERROR) goto finish;

                if (reply) {
                    if ((tr.flags & TF_STATUS_CODE) == 0) {
                        reply->ipcSetDataReference(
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t),
                            freeBuffer, this);
                    } else {
                        err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);
                        freeBuffer(nullptr,
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t), this);
                    }
                } else {
                    freeBuffer(nullptr,
                        reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                        tr.data_size,
                        reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                        tr.offsets_size/sizeof(binder_size_t), this);
                    continue;
                }
            }
            goto finish;

        default:
            err = executeCommand(cmd);
            if (err != NO_ERROR) goto finish;
            break;
        }
    }

finish:
    if (err != NO_ERROR) {
        if (acquireResult) *acquireResult = err;
        if (reply) reply->setError(err);
        mLastError = err;
    }

    return err;
}
```

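BC_TRANSACTION is a sub-command carried inside BINDER_WRITE_READ, so another BINDER_WRITE_READ layer has to be wrapped around it. That happens in talkWithDriver, which waitForResponse calls; until then the data is still sitting in mOut and has not been sent. Binder IPC is also mostly blocking, and the answer to how that blocking works lies in waitForResponse: for a synchronous call it keeps calling talkWithDriver, whose ioctl can block in the driver, until a BR_REPLY (or an error reply) arrives.

TODO: how exactly is the caller thread guaranteed to wait synchronously until it receives the reply data sent by the server thread?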
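The exit conditions of waitForResponse can be isolated by replaying a scripted sequence of commands instead of talking to the driver. In the sketch below the command codes are hypothetical: a one-way call (no reply Parcel) returns at the transaction-complete command, a synchronous call keeps going until a reply or dead-reply arrives, and running out of input marks the point where the real code would block again in talkWithDriver.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical command codes; NOT the real BR_* values. */
enum br { BR_NOOP_M, BR_TRANSACTION_COMPLETE_M, BR_REPLY_M, BR_DEAD_REPLY_M };

enum result { RES_OK, RES_DEAD_OBJECT, RES_NO_MORE_INPUT };

/* Consumes script[0..n) the way waitForResponse consumes mIn.
 * want_reply != 0 models a synchronous call (reply != NULL).
 * *consumed receives how many commands were read before returning. */
enum result wait_for_response(const enum br *script, size_t n,
                              int want_reply, size_t *consumed)
{
    assert(consumed != NULL);
    size_t i = 0;
    while (i < n) {
        enum br cmd = script[i++];   /* real code: talkWithDriver + readInt32 */
        switch (cmd) {
        case BR_TRANSACTION_COMPLETE_M:
            if (!want_reply) {       /* one-way call: done */
                *consumed = i;
                return RES_OK;
            }
            break;                   /* synchronous call: keep waiting */
        case BR_DEAD_REPLY_M:
            *consumed = i;
            return RES_DEAD_OBJECT;
        case BR_REPLY_M:
            *consumed = i;
            return RES_OK;           /* reply data would go to the Parcel */
        default:
            break;                   /* real code: executeCommand(cmd) */
        }
    }
    *consumed = i;
    return RES_NO_MORE_INPUT;        /* real code would block in the driver */
}
```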

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD < 0) {

return -EBADF;

}

binder_write_read bwr;

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

//封装BINDER_WRITE_READ

bwr.write_size = outAvail;

//存放的binder_transaction_data 结构体

bwr.write_buffer = (uintptr_t)mOut.data();

// This is what we'll read.

//设置需要读取的数据大小和buffer

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

// Return immediately if there is nothing to do.

// 请求中不需要读写数据

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

IF_LOG_COMMANDS() {

alog << "About to read/write, write size = " << mOut.dataSize() << endl;

}

//执行真正的数据读写操作

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

if (mProcess->mDriverFD < 0) {

err = -EBADF;

}

IF_LOG_COMMANDS() {

alog << "Finished read/write, write size = " << mOut.dataSize() << endl;

}

} while (err == -EINTR);

//对binder结果进行处理,并做善后处理

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) { // the driver consumed write data

if (bwr.write_consumed < mOut.dataSize()) // the driver consumed only part of it

LOG_ALWAYS_FATAL("Driver did not consume write buffer. "

"err: %s consumed: %zu of %zu",

statusToString(err).c_str(),

(size_t)bwr.write_consumed,

mOut.dataSize());

else { // the driver consumed all of the write data

mOut.setDataSize(0);

processPostWriteDerefs();

}

}

if (bwr.read_consumed > 0) { // the driver returned data

mIn.setDataSize(bwr.read_consumed); // set the data size

mIn.setDataPosition(0); // rewind the read position

}

return NO_ERROR;

}

return err;

}

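talkWithDriver is where the process actually talks to the binder driver: it first wraps the pending data in a binder_write_read structure (BINDER_WRITE_READ), then calls ioctl to perform the actual write and read.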
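To make the size decisions at the top of talkWithDriver concrete, here is a minimal user-space sketch that reproduces only that arithmetic. The names parcel_state, plan_write_read and needs_ioctl are hypothetical and not part of libbinder; the three Parcel accessors are reduced to plain fields.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for the mIn Parcel state talkWithDriver inspects. */
struct parcel_state {
    size_t data_pos;   /* like Parcel::dataPosition() */
    size_t data_size;  /* like Parcel::dataSize() */
    size_t capacity;   /* like Parcel::dataCapacity() */
};

struct bwr_sizes {
    size_t write_size;
    size_t read_size;
};

/* Mirrors the needRead / outAvail / read_size logic of talkWithDriver. */
static struct bwr_sizes plan_write_read(const struct parcel_state *in,
                                        size_t out_size, bool do_receive)
{
    struct bwr_sizes s;
    /* mIn counts as empty once everything in it has been consumed */
    bool need_read = in->data_pos >= in->data_size;
    /* don't write while unread input remains and the caller wants to read */
    s.write_size = (!do_receive || need_read) ? out_size : 0;
    s.read_size = (do_receive && need_read) ? in->capacity : 0;
    return s;
}

/* talkWithDriver returns early, without ioctl, when both sizes are zero. */
static bool needs_ioctl(struct bwr_sizes s)
{
    return s.write_size != 0 || s.read_size != 0;
}
```

Under these rules the first call writes mOut and reads into mIn in a single ioctl; while a BR_NOOP is still unread the call becomes a no-op; and once mIn is drained with mOut already empty, only a read is requested.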

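ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) is where the binder read/write is actually carried out. Below, the getService scenario is used again to follow how the ioctl executes in the driver: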

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*pr_info("binder_ioctl: %d:%d %x %lx\n", proc->pid, current->pid, cmd, arg);*/

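/* binder_stop_on_user_error is a debugging module parameter that is normally below 2, so this wait returns at once; the blocking of synchronous binder calls happens later, in binder_thread_read */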

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

return ret;

mutex_lock(&binder_lock);

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_WRITE_READ: {

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto err;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

if (bwr.write_size > 0) {

ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

break;

.....

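Here the caller is sending the getService request to ServiceManager. The first step is the write to binder, where proc is the calling process and thread is the calling thread: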

int binder_thread_write(struct binder_proc *proc, struct binder_thread *thread,

void __user *buffer, int size, signed long *consumed)

{

uint32_t cmd;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

// fetch one command

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

// skip past the command word; ptr now points at its payload

ptr += sizeof(uint32_t);

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

binder_stats.bc[_IOC_NR(cmd)]++;

proc->stats.bc[_IOC_NR(cmd)]++;

thread->stats.bc[_IOC_NR(cmd)]++;

}

switch (cmd) {

......

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr, cmd == BC_REPLY);

break;

}

....
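The ptr/end walk in binder_thread_write treats the write buffer as a stream of 32-bit commands, each followed by its own payload. The sketch below mimics that loop in user space, with memcpy standing in for get_user and copy_from_user; the command codes, struct fake_txn and parse_write_buffer are made up for illustration and do not match the real BC_* encodings.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command codes; real BC_* values are ioctl-style encodings. */
enum { CMD_TRANSACTION = 1, CMD_ENTER_LOOPER = 2 };
struct fake_txn { uint32_t handle; uint32_t code; };  /* stand-in payload */

/* Walks a write buffer like binder_thread_write: read a 32-bit command,
   advance past it, then consume that command's payload. Returns the bytes
   consumed (like *consumed) or -1 for a malformed buffer; *n_txn counts
   CMD_TRANSACTION commands. */
static long parse_write_buffer(const uint8_t *buf, size_t size, int *n_txn)
{
    size_t pos = 0;
    *n_txn = 0;
    while (pos < size) {
        uint32_t cmd;
        if (size - pos < sizeof(cmd))
            return -1;
        memcpy(&cmd, buf + pos, sizeof(cmd));   /* like get_user(cmd, ptr) */
        pos += sizeof(cmd);                      /* ptr now points at the payload */
        switch (cmd) {
        case CMD_TRANSACTION: {
            struct fake_txn tr;
            if (size - pos < sizeof(tr))
                return -1;
            memcpy(&tr, buf + pos, sizeof(tr));  /* like copy_from_user(&tr, ptr, ...) */
            pos += sizeof(tr);
            (*n_txn)++;
            break;
        }
        case CMD_ENTER_LOOPER:
            break;                               /* this command has no payload */
        default:
            return -1;                           /* unknown command */
        }
    }
    return (long)pos;
}
```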

The rest focuses on binder_transaction:

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply)

{

struct binder_transaction *t;

// tcomplete: when the client asks SM for getService, the client must wait for SM to finish before continuing

struct binder_work *tcomplete;

size_t *offp, *off_end;

// the process providing the requested service; for getService this is the SM process

struct binder_proc *target_proc;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

struct binder_transaction *in_reply_to = NULL;

uint32_t return_error;

if (reply) {

// ignored for now; only the transaction path is covered

....

} else {

if (tr->target.handle) { // target handle is non-zero: look it up in the caller's references

struct binder_ref *ref;

ref = binder_get_ref(proc, tr->target.handle);

target_node = ref->node;

} else { // handle 0 refers to SM (the context manager)

// 1. find the target node

target_node = binder_context_mgr_node;

}

// 2.1 find the target proc

target_proc = target_node->proc;

if (!(tr->flags & TF_ONE_WAY) && thread->transaction_stack) {

struct binder_transaction *tmp;

tmp = thread->transaction_stack;

while (tmp) {

if (tmp->from && tmp->from->proc == target_proc)

// 2.2 find the target thread

target_thread = tmp->from;

tmp = tmp->from_parent;

}

}

}

// 3. pick the target todo list (pending transactions are queued on it) and the wait queue to wake

if (target_thread) {

e->to_thread = target_thread->pid; // e: transaction log entry, set up in code omitted from this excerpt

target_list = &target_thread->todo;

target_wait = &target_thread->wait;

} else {

target_list = &target_proc->todo;

target_wait = &target_proc->wait;

}

// 4. allocate a binder_transaction; it is queued on the target's todo list and runs once the target wakes up

/* TODO: reuse incoming transaction for reply */

t = kzalloc(sizeof(*t), GFP_KERNEL);

if (t == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_t_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION);

// 4.2 allocate a binder_work for this thread's own todo list, marking a transaction that is still outstanding

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

if (tcomplete == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_tcomplete_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION_COMPLETE);

// 5. fill in the binder_transaction

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = proc->tsk->cred->euid;

t->to_proc = target_proc;

t->to_thread = target_thread;

t->code = tr->code; // request code for the binder server; for getService it is GET_SERVICE_TRANSACTION

t->flags = tr->flags;

t->priority = task_nice(current);

// allocate a buffer for this transaction from the target process's mmap area

t->buffer = binder_alloc_buf(target_proc, tr->data_size,

tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));

t->buffer->allow_user_free = 0;

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t;

t->buffer->target_node = target_node;

if (target_node)

binder_inc_node(target_node, 1, 0, NULL);

offp = (size_t *)(t->buffer->data + ALIGN(tr->data_size, sizeof(void *)));

// copy the user-space data that tr points to into the allocated buffer

if (copy_from_user(t->buffer->data, tr->data.ptr.buffer, tr->data_size)) {

binder_user_error("binder: %d:%d got transaction with invalid "

"data ptr\n", proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_copy_data_failed;

}

if (copy_from_user(offp, tr->data.ptr.offsets, tr->offsets_size)) {

binder_user_error("binder: %d:%d got transaction with invalid "

"offsets ptr\n", proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_copy_data_failed;

}

off_end = (void *)offp + tr->offsets_size;

// translate binder objects embedded in the data; skipped here for getService

for (; offp < off_end; offp++) {

struct flat_binder_object *fp;

....

}

if (reply) {

BUG_ON(t->buffer->async_transaction != 0);

binder_pop_transaction(target_thread, in_reply_to);

} else if (!(t->flags & TF_ONE_WAY)) { // getService takes this branch

BUG_ON(t->buffer->async_transaction != 0);

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;

} else {

BUG_ON(target_node == NULL);

BUG_ON(t->buffer->async_transaction != 1);

if (target_node->has_async_transaction) {

target_list = &target_node->async_todo;

target_wait = NULL;

} else

target_node->has_async_transaction = 1;

}

t->work.type = BINDER_WORK_TRANSACTION;

// add the transaction to the target's todo list

list_add_tail(&t->work.entry, target_list);

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

// add tcomplete to the caller's own todo list: this thread has an outstanding operation

list_add_tail(&tcomplete->entry, &thread->todo);

// wake up the target

if (target_wait)

wake_up_interruptible(target_wait);

return;

}
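The routing rules in binder_transaction can be condensed into a small model. This sketch is a heavily simplified assumption: sim_proc, sim_thread, sim_txn, find_target_thread and send_sync are hypothetical, todo lists are reduced to counters, and locking, buffers and one-way calls are left out.

```c
#include <assert.h>
#include <stddef.h>

struct sim_txn;
struct sim_proc { int todo_len; };          /* proc->todo, as a counter */
struct sim_thread {
    struct sim_proc *proc;
    struct sim_txn *transaction_stack;
    int todo_len;                             /* thread->todo, as a counter */
};
struct sim_txn {
    struct sim_thread *from;                  /* caller waiting for the reply */
    struct sim_txn *from_parent;              /* next entry on the caller's stack */
};

/* Like the transaction_stack walk: for nested calls, target the thread in
   target_proc that is already waiting on this caller. */
static struct sim_thread *find_target_thread(struct sim_thread *caller,
                                             struct sim_proc *target_proc)
{
    struct sim_thread *target = NULL;
    for (struct sim_txn *tmp = caller->transaction_stack; tmp; tmp = tmp->from_parent)
        if (tmp->from && tmp->from->proc == target_proc)
            target = tmp->from;
    return target;
}

/* Synchronous send: queue t for the target, push it on the caller's stack,
   and queue tcomplete on the caller's own todo list. */
static void send_sync(struct sim_thread *caller, struct sim_proc *target_proc,
                      struct sim_txn *t)
{
    struct sim_thread *target = find_target_thread(caller, target_proc);
    if (target)
        target->todo_len++;          /* target_list = &target_thread->todo */
    else
        target_proc->todo_len++;     /* target_list = &target_proc->todo */
    t->from = caller;
    t->from_parent = caller->transaction_stack;
    caller->transaction_stack = t;
    caller->todo_len++;              /* tcomplete */
}
```

For getService the caller's stack is empty, so the work lands on the SM process's todo list; the stack walk only matters for nested calls, where a thread already serving a request from process A calls back into A and the driver hands the work to the thread waiting in A.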

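In the end, a new binder_transaction is queued on the target's todo list (the target thread's list if one was found, otherwise the target process's; for getService the caller's transaction_stack is empty, so it goes on the SM process's list), and the target is woken up to process it. The calling thread then returns to binder_thread_write and on to binder_ioctl, which moves on to the binder read handling: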

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

// after returning from binder_thread_write, binder_ioctl moves on to the read side

...

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

break;

.....

// buffer is mIn's data area, supplied by the caller

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

void __user *buffer, int size,

signed long *consumed, int non_block)

{

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

// write BR_NOOP to user space first

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

.....

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

// binder_thread_write already put tcomplete on this thread's todo list, so it is not empty

if (!list_empty(&thread->todo))

w = list_first_entry(&thread->todo, struct binder_work, entry);

else if (!list_empty(&proc->todo) && wait_for_proc_work)

w = list_first_entry(&proc->todo, struct binder_work, entry);

else {

if (ptr - buffer == 4 && !(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN)) /* no data added */

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4)

break;

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

t = container_of(w, struct binder_transaction, work);

} break;

// for the caller's getService request, the work item is BINDER_WORK_TRANSACTION_COMPLETE

case BINDER_WORK_TRANSACTION_COMPLETE: {

// write the BR_TRANSACTION_COMPLETE command to user space

cmd = BR_TRANSACTION_COMPLETE;

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

binder_stat_br(proc, thread, cmd);

binder_debug(BINDER_DEBUG_TRANSACTION_COMPLETE,

"binder: %d:%d BR_TRANSACTION_COMPLETE\n",

proc->pid, thread->pid);

list_del(&w->entry);

kfree(w);

binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);

} break;

}

.....

}

return 0;

}
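On the caller's first read after BC_TRANSACTION, the excerpt above leaves two return commands in the read buffer. A sketch of that layout follows; the command values and fill_read_buffer are hypothetical (the real BR_* codes are ioctl-style encodings), and the only work type modeled is the transaction-complete item.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical values for the sketch only. */
enum { SIM_BR_NOOP = 0, SIM_BR_TRANSACTION_COMPLETE = 1 };
enum { WORK_TRANSACTION_COMPLETE = 1 };

/* Fills a read buffer like binder_thread_read on a fresh read: a leading
   BR_NOOP, then one return command per queued work item that fits.
   Returns the bytes written (what ends up in bwr.read_consumed). */
static size_t fill_read_buffer(uint8_t *buf, size_t size,
                               const int *todo, size_t n_todo)
{
    uint32_t cmd = SIM_BR_NOOP;
    size_t pos = 0;
    if (size < sizeof(cmd))
        return 0;
    memcpy(buf, &cmd, sizeof(cmd));              /* like put_user(BR_NOOP, ptr) */
    pos += sizeof(cmd);
    for (size_t i = 0; i < n_todo && size - pos >= sizeof(cmd); i++) {
        if (todo[i] == WORK_TRANSACTION_COMPLETE) {
            cmd = SIM_BR_TRANSACTION_COMPLETE;
            memcpy(buf + pos, &cmd, sizeof(cmd));
            pos += sizeof(cmd);
        }
    }
    return pos;
}
```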

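On this pass binder_thread_read writes two commands to user space: BR_NOOP and BR_TRANSACTION_COMPLETE (generated from the BINDER_WORK_TRANSACTION_COMPLETE work item). Control then returns through binder_ioctl to talkWithDriver, which sets mIn's data size to bwr.read_consumed. Since mIn now contains data, waitForResponse goes on to process it: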

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

// wrap any pending data in BINDER_WRITE_READ and hand it to the binder driver

if ((err=talkWithDriver()) < NO_ERROR) break;

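// mIn now holds return commands from the driver: first BR_NOOP/BR_TRANSACTION_COMPLETE, later BR_REPLY once the server (SM for getService) has finished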

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t)mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing waitForResponse Command: "

<< getReturnString(cmd) << endl;

}

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

case BR_REPLY:

{

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");

if (err != NO_ERROR) goto finish;

if (reply) {

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t),

freeBuffer, this);

} else {

err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);

freeBuffer(nullptr,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

}

} else {

freeBuffer(nullptr,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

continue;

}

}

goto finish;

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

finish:

if (err != NO_ERROR) {

if (acquireResult) *acquireResult = err;

if (reply) reply->setError(err);

mLastError = err;

}

return err;

}
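How waitForResponse consumes these return commands can be modeled as a small loop. In this sketch the command values and wait_for_response_sim are hypothetical, want_reply stands for "reply or acquireResult is non-null", and a result of -1 means the loop would call talkWithDriver again and block in binder_thread_read.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical command values for the sketch. */
enum { SIM_R_NOOP, SIM_R_TRANSACTION_COMPLETE, SIM_R_REPLY };

/* Returns how many commands were consumed when waitForResponse would
   finish, or -1 if mIn is drained and it must go back to the driver. */
static long wait_for_response_sim(const int *cmds, size_t n, bool want_reply)
{
    for (size_t i = 0; i < n; i++) {
        switch (cmds[i]) {
        case SIM_R_NOOP:
            break;                                  /* executeCommand: nothing to do */
        case SIM_R_TRANSACTION_COMPLETE:
            if (!want_reply)
                return (long)(i + 1);               /* one-way call: done */
            break;                                  /* synchronous: keep waiting */
        case SIM_R_REPLY:
            return (long)(i + 1);                   /* reply parsed: done */
        default:
            break;                                  /* other BR_* commands */
        }
    }
    return -1;
}
```

For a synchronous getService the first read (BR_NOOP, BR_TRANSACTION_COMPLETE) yields -1, so the caller loops back into the driver; the second read (BR_NOOP, BR_REPLY) ends the loop.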

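waitForResponse first reads BR_NOOP, which executeCommand treats as a no-op, and then calls talkWithDriver again. Because mIn.dataPosition() < mIn.dataSize(), mIn still holds unread data, so needRead is false: bwr.write_size and bwr.read_size both come out as 0 and talkWithDriver returns without calling ioctl. waitForResponse then handles BR_TRANSACTION_COMPLETE. If no reply is needed (reply and acquireResult are both null, as for a one-way call), it finishes here; otherwise it keeps looping, which is the case here. On the next iteration mIn has been fully consumed and mOut was already emptied, so bwr.write_size = 0 and bwr.read_size > 0; binder_ioctl therefore skips binder_thread_write and only calls binder_thread_read: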

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

void __user *buffer, int size,

signed long *consumed, int non_block)

{

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

// put BR_NOOP first

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

// thread->transaction_stack is not empty here (binder_transaction pushed t onto it for this synchronous call), so wait_for_proc_work is false

wait_for_proc_work = thread->transaction_stack == NULL &&

list_empty(&thread->todo);

if (thread->return_error != BR_OK && ptr < end) {

if (thread->return_error2 != BR_OK) {

if (put_user(thread->return_error2, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (ptr == end)

goto done;

thread->return_error2 = BR_OK;

}

if (put_user(thread->return_error, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

thread->return_error = BR_OK;

goto done;

}

thread->looper |= BINDER_LOOPER_STATE_WAITING;

if (wait_for_proc_work)

proc->ready_threads++;

mutex_unlock(&binder_lock);

if (wait_for_proc_work) {

if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED))) {

binder_user_error("binder: %d:%d ERROR: Thread waiting "

"for process work before calling BC_REGISTER_"

"LOOPER or BC_ENTER_LOOPER (state %x)\n",

proc->pid, thread->pid, thread->looper);

wait_event_interruptible(binder_user_error_wait,

binder_stop_on_user_error < 2);

}

binder_set_nice(proc->default_priority);

if (non_block) {

if (!binder_has_proc_work(proc, thread))

ret = -EAGAIN;

} else

ret = wait_event_interruptible_exclusive(proc->wait, binder_has_proc_work(proc, thread));

} else {

if (non_block) {

if (!binder_has_thread_work(thread))

ret = -EAGAIN;

} else

// sleep here until SM wakes this thread up with its reply

ret = wait_event_interruptible(thread->wait, binder_has_thread_work(thread));

}

mutex_lock(&binder_lock);

......

So at this point the calling thread is blocked, waiting until the SM process wakes it up.

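We know SM itself is woken up in the caller's binder_ioctl->binder_thread_write->binder_transaction path (the final wake_up_interruptible(target_wait)). So what state is the SM process in before that wake-up?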

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

uint32_t readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

// tell the driver this thread is entering the looper

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

// write_size is 0, so binder_ioctl only calls binder_thread_read

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

if (res == 0) {

ALOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

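As analyzed earlier, SM enters the binder_loop loop as soon as it starts. Since bwr.write_size is 0 and bwr.read_size > 0, each ioctl only goes through binder_thread_read: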

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

void __user *buffer, int size,

signed long *consumed, int non_block)

{

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

// put BR_NOOP first

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

// SM's thread->transaction_stack and thread->todo are both empty here, so wait_for_proc_work is true

wait_for_proc_work = thread->transaction_stack == NULL &&

list_empty(&thread->todo);

...

thread->looper |= BINDER_LOOPER_STATE_WAITING;

if (wait_for_proc_work)

proc->ready_threads++;

mutex_unlock(&binder_lock);

if (wait_for_proc_work) {

if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED))) {

binder_user_error("binder: %d:%d ERROR: Thread waiting "

"for process work before calling BC_REGISTER_"

"LOOPER or BC_ENTER_LOOPER (state %x)\n",

proc->pid, thread->pid, thread->looper);

wait_event_interruptible(binder_user_error_wait,

binder_stop_on_user_error < 2);

}

binder_set_nice(proc->default_priority);

if (non_block) {

if (!binder_has_proc_work(proc, thread))

ret = -EAGAIN;

} else

// SM blocks here, sleeping on proc->wait until a client queues work on proc->todo

ret = wait_event_interruptible_exclusive(proc->wait, binder_has_proc_work(proc, thread));

} else {

if (non_block) {

if (!binder_has_thread_work(thread))

ret = -EAGAIN;

} else

// (the branch the client thread sleeps in; not taken by SM here)

ret = wait_event_interruptible(thread->wait, binder_has_thread_work(thread));

}

mutex_lock(&binder_lock);

......

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

if (!list_empty(&thread->todo))

w = list_first_entry(&thread->todo, struct binder_work, entry);

else if (!list_empty(&proc->todo) && wait_for_proc_work)

w = list_first_entry(&proc->todo, struct binder_work, entry);

else {

if (ptr - buffer == 4 && !(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN)) /* no data added */

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4)

break;

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

t = container_of(w, struct binder_transaction, work);

} break;

...

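The code above shows that SM goes to sleep via ioctl->binder_ioctl->binder_thread_read (binder_transaction is not involved, since SM's write_size is 0). Once the caller wakes it up, SM enters the while loop and handles BINDER_WORK_TRANSACTION: it fills a binder_transaction_data whose data pointers refer to the buffer that binder_transaction already copied into SM's mmap area (converted to a user-space address via proc->user_buffer_offset), and writes it to SM's read buffer after a BR_TRANSACTION command, so the payload itself is not copied again.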
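Both sleep sites seen so far come down to the wait_for_proc_work test. Here is a minimal sketch of that decision; pick_queue and enum wait_target are hypothetical helpers, not driver code.

```c
#include <assert.h>
#include <stdbool.h>

/* Which queue binder_thread_read waits on (and takes work from). */
enum wait_target { THREAD_QUEUE, PROC_QUEUE };

static enum wait_target pick_queue(bool has_transaction_stack, bool thread_todo_empty)
{
    /* wait_for_proc_work = thread->transaction_stack == NULL &&
                            list_empty(&thread->todo); */
    bool wait_for_proc_work = !has_transaction_stack && thread_todo_empty;
    return wait_for_proc_work ? PROC_QUEUE : THREAD_QUEUE;
}
```

An idle SM looper waits on proc->wait, where any transaction queued for the process can wake it; a client blocked in a synchronous call waits on its own thread->wait, so only work routed to that thread, such as its reply, wakes it. If work is already queued, the wait condition is already true and no sleep happens.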

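SM then leaves binder_ioctl and calls binder_parse on the returned data. For getService, binder_parse handles the BR_TRANSACTION command: it first initializes reply, then calls svcmgr_handler to process the request, and finally hands reply to the binder driver through binder_send_reply, which delivers it to the client that made the request.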
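The sleep/wake hand-off of the whole getService round trip resembles a pair of condition-variable queues. The sketch below is a user-space analogy with POSIX threads, not driver code: a mutex plus pthread_cond_t stands in for a binder wait queue, an integer counter for a todo list, and sim_queue, sm_loop and get_service_roundtrip are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

struct sim_queue {
    pthread_mutex_t lock;
    pthread_cond_t wait;   /* plays the role of a wait queue */
    int todo;              /* plays the role of a todo list */
};

static void queue_init(struct sim_queue *q)
{
    pthread_mutex_init(&q->lock, NULL);
    pthread_cond_init(&q->wait, NULL);
    q->todo = 0;
}

/* Like list_add_tail() followed by wake_up_interruptible(). */
static void queue_work(struct sim_queue *q)
{
    pthread_mutex_lock(&q->lock);
    q->todo++;
    pthread_cond_signal(&q->wait);
    pthread_mutex_unlock(&q->lock);
}

/* Like wait_event_interruptible(wq, has_work): sleep until work exists,
   then take one item. */
static void wait_for_work(struct sim_queue *q)
{
    pthread_mutex_lock(&q->lock);
    while (q->todo == 0)
        pthread_cond_wait(&q->wait, &q->lock);
    q->todo--;
    pthread_mutex_unlock(&q->lock);
}

static struct sim_queue sm_proc_q;       /* SM's proc->todo / proc->wait */
static struct sim_queue client_thread_q; /* client's thread->todo / thread->wait */
static int requests_served;

static void *sm_loop(void *arg)
{
    (void)arg;
    wait_for_work(&sm_proc_q);           /* SM asleep in binder_thread_read */
    requests_served++;                   /* svcmgr_handler would run here */
    queue_work(&client_thread_q);        /* BC_REPLY: queue reply, wake client */
    return NULL;
}

/* Returns the number of requests SM served, or -1 if the thread failed. */
static int get_service_roundtrip(void)
{
    pthread_t sm;
    queue_init(&sm_proc_q);
    queue_init(&client_thread_q);
    requests_served = 0;
    if (pthread_create(&sm, NULL, sm_loop, NULL) != 0)
        return -1;
    queue_work(&sm_proc_q);              /* BC_TRANSACTION: queue t, wake SM */
    wait_for_work(&client_thread_q);     /* client sleeps until the reply */
    pthread_join(sm, NULL);
    return requests_served;
}
```

Because the counter is checked under the lock, no wake-up is lost even if the client queues its request before the SM thread reaches wait_for_work; the driver gets the same guarantee by re-checking binder_has_proc_work/binder_has_thread_work in its wait condition.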

Here reply is a binder_io; svcmgr_handler looks like this:

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

size_t len;

uint32_t handle;

uint32_t strict_policy;

int allow_isolated;

uint32_t dumpsys_priority;

//ALOGI("target=%p code=%d pid=%d uid=%d\n",

// (void*) txn->target.ptr, txn->code, txn->sender_pid, txn->sender_euid);

if (txn->target.ptr != BINDER_SERVICE_MANAGER)

return -1;

if (txn->code == PING_TRANSACTION)

return 0;

// Equivalent to Parcel::enforceInterface(), reading the RPC

// header with the strict mode policy mask and the interface name.

// Note that we ignore the strict_policy and don't propagate it

// further (since we do no outbound RPCs anyway).

strict_policy = bio_get_uint32(msg);

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

if ((len != (sizeof(svcmgr_id) / 2)) ||

memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {

fprintf(stderr,"invalid id %s\n", str8(s, len));

return -1;

}

...

switch(txn->code) {

case SVC_MGR_GET_SERVICE:

case SVC_MGR_CHECK_SERVICE:

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

// look up the service; 0 means not found

handle = do_find_service(s, len, txn->sender_euid, txn->sender_pid);

if (!handle)

break;

bio_put_ref(reply, handle);

return 0;

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

handle = bio_get_ref(msg);

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

dumpsys_priority = bio_get_uint32(msg);

if (do_add_service(bs, s, len, handle, txn->sender_euid, allow_isolated, dumpsys_priority,

txn->sender_pid))

return -1;

break;

...

default:

ALOGE("unknown code %d\n", txn->code);

return -1;

}

bio_put_uint32(reply, 0);

return 0;

}
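do_find_service and do_add_service boil down to a name-to-handle table. The sketch below shows that idea with plain C strings; the table, add_service and find_service are hypothetical simplifications, and the real servicemanager keeps UTF-16 names in a linked list and also performs SELinux and isolated-app checks that are omitted here. As in the excerpt, handle 0 means "not found".

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define MAX_SERVICES 16
#define MAX_NAME 64

/* Hypothetical registry entry: service name -> SM's handle for it. */
struct svc_entry { char name[MAX_NAME]; uint32_t handle; };
static struct svc_entry svc_table[MAX_SERVICES];
static int svc_count;

/* Like do_add_service: register or override name -> handle.
   Handle 0 is rejected because it is reserved for SM itself. */
static int add_service(const char *name, uint32_t handle)
{
    if (handle == 0 || strlen(name) >= MAX_NAME)
        return -1;
    for (int i = 0; i < svc_count; i++) {
        if (strcmp(svc_table[i].name, name) == 0) {
            svc_table[i].handle = handle;    /* already registered: override */
            return 0;
        }
    }
    if (svc_count == MAX_SERVICES)
        return -1;
    strcpy(svc_table[svc_count].name, name);
    svc_table[svc_count].handle = handle;
    svc_count++;
    return 0;
}

/* Like do_find_service: returns 0 when the name is not registered. */
static uint32_t find_service(const char *name)
{
    for (int i = 0; i < svc_count; i++)
        if (strcmp(svc_table[i].name, name) == 0)
            return svc_table[i].handle;
    return 0;
}
```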

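For SVC_MGR_GET_SERVICE, svcmgr_handler calls do_find_service, which returns the handle SM holds for the requested service (0 if it is not registered). bio_put_ref then writes that handle into the data area of reply as a flat_binder_object of type BINDER_TYPE_HANDLE. When the reply passes through the driver, binder_transaction translates this object for the receiver: if the client process is the one hosting the service, the object becomes a BINDER_TYPE_BINDER carrying the local object pointer (ultimately an IBinder pointer); otherwise the driver creates a reference in the client process and rewrites the handle to the client's own handle value. Once everything is ready, the reply is sent to the driver through binder_send_reply->binder_write->ioctl->binder_ioctl->binder_thread_write->binder_transaction with command BC_REPLY. The driver also has to find the calling process/thread, which it takes from the transaction_stack of the SM thread that received the request.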
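The handle translation performed on the reply can be sketched as follows. Everything here is a hypothetical simplification: sim_node, sim_obj, sim_refs, ref_for_node and translate_handle stand in for binder_node, flat_binder_object, the per-process reference tree, binder_get_ref_for_node and the BINDER_TYPE_HANDLE case of binder_transaction, and the node is assumed to have already been looked up from SM's handle.

```c
#include <assert.h>
#include <stdint.h>

enum obj_type { OBJ_BINDER, OBJ_HANDLE };   /* BINDER_TYPE_BINDER / _HANDLE */

struct sim_node { int owner_pid; uintptr_t local_ptr; };  /* a binder_node */
struct sim_obj {                                          /* a flat_binder_object */
    enum obj_type type;
    uint32_t handle;     /* meaningful for OBJ_HANDLE */
    uintptr_t binder;    /* meaningful for OBJ_BINDER */
};

/* Per-process reference table: index = handle; handle 0 is kept for SM,
   so `next` must start at 1. */
#define MAX_REFS 8
struct sim_refs { const struct sim_node *ref[MAX_REFS]; uint32_t next; };

/* Returns the receiver's handle for node, creating a reference if needed. */
static uint32_t ref_for_node(struct sim_refs *refs, const struct sim_node *node)
{
    for (uint32_t h = 1; h < refs->next; h++)
        if (refs->ref[h] == node)
            return h;
    assert(refs->next < MAX_REFS);
    refs->ref[refs->next] = node;
    return refs->next++;
}

/* Rewrites a handle object from the sender's view into the receiver's. */
static void translate_handle(struct sim_obj *obj, const struct sim_node *node,
                             int receiver_pid, struct sim_refs *receiver_refs)
{
    if (node->owner_pid == receiver_pid) {
        obj->type = OBJ_BINDER;             /* receiver hosts the service */
        obj->binder = node->local_ptr;
    } else {
        obj->handle = ref_for_node(receiver_refs, node);  /* receiver's own handle */
    }
}
```

The handle a client receives is its own, generally different from the value SM holds for the same service, which is why a handle is only meaningful inside the process that owns it.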

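As with BC_TRANSACTION, handling BC_REPLY creates t and tcomplete; t is queued on the todo list of the client thread waiting for the reply. Since the client was blocked in binder_thread_read, this wakes it up and it continues: binder_thread_read writes a BR_REPLY command and a binder_transaction_data into mIn, whose data pointers refer to the client's mmap area, where binder_transaction has already copied the reply. Control returns to waitForResponse, which handles BR_REPLY, and finally to getService() in ServiceManagerProxy, where readStrongBinder->unflatten_binder turns the handle into a BpBinder (for a service in another process).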
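How the driver finds the waiting client for BC_REPLY can be sketched with the two stack links a transaction carries. When SM reads BR_TRANSACTION for a synchronous call, the driver also pushes the same transaction onto SM's thread stack (via to_parent), and the reply pops it from both stacks. rthread, rtxn and route_reply are hypothetical, heavily reduced stand-ins for binder_thread, binder_transaction and the reply branch of binder_transaction.

```c
#include <assert.h>
#include <stddef.h>

struct rtxn;
struct rthread { struct rtxn *transaction_stack; int todo_len; };
struct rtxn {
    struct rthread *from;       /* the caller thread waiting for the reply */
    struct rtxn *from_parent;   /* next entry on the caller's stack */
    struct rtxn *to_parent;     /* next entry on the server's stack */
};

/* Reply path: in_reply_to is the top of the replier's stack; pop it there,
   pop it from the caller's stack (binder_pop_transaction), and queue the
   reply on the caller thread's todo list. */
static struct rthread *route_reply(struct rthread *replier)
{
    struct rtxn *in_reply_to = replier->transaction_stack;
    if (in_reply_to == NULL)
        return NULL;                                        /* nothing to reply to */
    replier->transaction_stack = in_reply_to->to_parent;    /* pop from SM's stack */
    struct rthread *target = in_reply_to->from;
    if (target != NULL) {
        target->transaction_stack = in_reply_to->from_parent;
        target->todo_len++;                                 /* target_thread->todo */
    }
    return target;
}
```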

4. References

1. 《深入理解Android内核设计思想》, Lin Xuesen

2. https://weishu.me/2016/01/12/binder-index-for-newer/

3. https://blog.csdn.net/universus/article/details/6211589