
<Android Audio> Playing PCM from the Android native layer by using AudioFlinger directly - 王二の黄金时代's blog - CSDN Blog

Contents

1. Overview: a C++ demo executable that plays PCM by using AudioFlinger directly

Environment: building the AOSP 11 source on Ubuntu 22.04

Source code: main()

1. Overview

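This is a C++ demo program built inside the Android source environment. The build produces an executable that you push to the device and run from a shell to play PCM data. If you develop Java apps and do not have the system source, this post is probably not for you.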

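The demo is meant for studying the AudioFlinger source. It is compiled within the source tree, obtains the AudioFlinger service directly, and plays audio through AudioFlinger's public methods. It is original work, written by analyzing the native-layer AudioTrack source and following how AudioTrack talks to AudioFlinger.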
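AudioTrack (and this demo) hands PCM to AudioFlinger through shared memory: a control block, audio_track_cblk_t, followed by a ring buffer of frames. The sketch below is a toy, single-threaded model of the client side of that ring, meant only to show the obtain, copy, release pattern and why a write loop must keep calling obtain until all frames are copied: each grant is cut short by the free space the consumer has released and by the physical end of the ring. ToyRing and write_frames are hypothetical names, not AOSP APIs; the real AudioTrackClientProxy uses atomic indices in audio_track_cblk_t and blocks inside obtainBuffer() rather than returning zero frames.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical toy model of a client-side ring buffer (NOT AudioTrackClientProxy).
class ToyRing {
public:
    ToyRing(size_t capacityFrames, size_t frameSize)
        : cap_(capacityFrames), frameSize_(frameSize), data_(capacityFrames * frameSize) {
        assert(capacityFrames > 0 && frameSize > 0);
    }

    struct Buffer {
        size_t frameCount = 0;   // in: frames wanted; out: frames granted
        uint8_t* raw = nullptr;  // start of the granted contiguous region
    };

    // Grant at most b->frameCount frames of free, contiguous space. The grant is
    // limited by how much the consumer has freed and by the end of the ring.
    void obtain(Buffer* b) {
        const size_t freeFrames = cap_ - (rear_ - front_);
        const size_t offset = rear_ % cap_;
        const size_t contiguous = cap_ - offset;
        b->frameCount = std::min({b->frameCount, freeFrames, contiguous});
        b->raw = data_.data() + offset * frameSize_;
    }

    // Publish the filled frames by advancing the write counter.
    void release(const Buffer& b) { rear_ += b.frameCount; }

    // Stand-in for the server side (AudioFlinger's mixer) reading frames out.
    void consume(size_t frames) { front_ += std::min(frames, rear_ - front_); }

    size_t filled() const { return rear_ - front_; }

private:
    size_t cap_;
    size_t frameSize_;
    std::vector<uint8_t> data_;
    size_t front_ = 0;  // frames consumed so far (monotonic)
    size_t rear_ = 0;   // frames written so far (monotonic)
};

// Same shape as the demo's inner loop: obtain, copy, release until done.
// Returns how many obtain() calls were made; stops early if the ring is full,
// where the real proxy would block instead.
int write_frames(ToyRing& ring, const uint8_t* src, size_t frames, size_t frameSize) {
    int calls = 0;
    while (frames > 0) {
        ToyRing::Buffer b;
        b.frameCount = frames;
        ring.obtain(&b);
        ++calls;
        if (b.frameCount == 0) break;       // ring full
        const size_t got = b.frameCount;    // may be fewer than requested
        std::memcpy(b.raw, src, got * frameSize);
        ring.release(b);
        src += got * frameSize;
        frames -= got;
    }
    return calls;
}
```

With an 8-frame ring, writing 6 frames takes one call; after the consumer drains those 6, writing 6 more takes two calls (2 frames up to the end of the ring, then 4 from the start), which is exactly the case the demo's while (count > 0) loop handles.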

2. Implementation

The demo (not yet uploaded to GitHub):

Environment

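- Build the AOSP 11 source on Ubuntu 22.04 and run the resulting emulator.

- Build this demo to get the executable out/target/product/generic_x86_64/system/bin/AFdemo, then push it to the emulator and run it there.

- The program needs a 48 kHz, 16-bit, 2-channel (stereo) raw PCM file as its source (ffmpeg can produce one). The code opens it by the relative name yk_48000_2_16.pcm, so run the program from the directory that contains the file.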
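With ffmpeg, a command such as ffmpeg -i input.mp3 -f s16le -ar 48000 -ac 2 yk_48000_2_16.pcm converts an existing audio file to this format (input.mp3 is a placeholder). Where ffmpeg is not at hand, the sketch below synthesizes a test tone in the same layout: raw interleaved 16-bit samples with no header. make_stereo_sine and write_raw_pcm are hypothetical helpers, and the code assumes a little-endian host, which matches ffmpeg's s16le output.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstdio>
#include <vector>

// Format the demo expects: 48 kHz, 16-bit signed PCM, 2 interleaved channels (L, R, L, R, ...).
constexpr int kSampleRate = 48000;
constexpr int kChannels = 2;

// Generate `seconds` of a sine tone as interleaved stereo 16-bit samples.
std::vector<int16_t> make_stereo_sine(double seconds, double freqHz, double amplitude = 0.3) {
    assert(amplitude >= 0.0 && amplitude <= 1.0);  // keep samples inside the int16 range
    const double kPi = 3.14159265358979323846;
    const size_t frames = static_cast<size_t>(seconds * kSampleRate);
    std::vector<int16_t> samples(frames * kChannels);
    for (size_t i = 0; i < frames; ++i) {
        const double v = amplitude * std::sin(2.0 * kPi * freqHz * i / kSampleRate);
        const int16_t s = static_cast<int16_t>(std::lround(v * 32767.0));
        samples[i * kChannels + 0] = s;  // left
        samples[i * kChannels + 1] = s;  // right
    }
    return samples;
}

// Write the samples as raw PCM (no header) in the host's byte order.
// Assumption: the host is little-endian, so the file matches ffmpeg's s16le.
bool write_raw_pcm(const char* path, const std::vector<int16_t>& samples) {
    std::FILE* fp = std::fopen(path, "wb");
    if (fp == nullptr) return false;
    const size_t written = std::fwrite(samples.data(), sizeof(int16_t), samples.size(), fp);
    const bool closed = std::fclose(fp) == 0;
    return closed && written == samples.size();
}
```

For example, write_raw_pcm("yk_48000_2_16.pcm", make_stereo_sine(5.0, 440.0)) writes 5 s of a 440 Hz tone, that is 5 × 48000 frames × 4 bytes = 960,000 bytes.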

Source code

main.cpp

/*
 * author: cnaok 20220630
 * aosp 11 http://aospxref.com/android-11.0.0_r21/
 * Play PCM data by calling the AudioFlinger interface directly.
 * Modeled on how AudioTrack.cpp in the source tree interacts with AudioFlinger.
 */

#include <stdlib.h>
#include <stdio.h>
#include <string.h> // memcpy
#include <iostream>
#include <media/AudioSystem.h>
#include <media/IAudioFlinger.h>
#include <binder/IPCThreadState.h>
#include <private/media/AudioTrackShared.h> // for audio_track_cblk_t, AudioTrackClientProxy

using namespace android;

// namespace android
// {

// Read the file in chunks holding 20 ms of audio each
#define WRITE_TIME_MS 20

int track_demo()
{
    // Set up the source data
    FILE *fp_in = fopen("yk_48000_2_16.pcm", "rb");
    if (fp_in == NULL)
    {
        printf("[canok]error opening file\n");
        return -1;
    }

    int channels = 2; // cf. audio_channel_count_from_out_mask(audio_channel_mask_t channel)
    int samplerate = 48000;
    int bits = 16;
    size_t mFrameSize = channels * bits / 8;            // channelCount * audio_bytes_per_sample(format)
    size_t mFrames = samplerate * WRITE_TIME_MS / 1000; // frames per 20 ms chunk
    int buf_size = mFrames * mFrameSize;
    void *buf = malloc(buf_size);
    if (buf == NULL)
    {
        printf("[canok]malloc error\n");
        fclose(fp_in);
        return -1;
    }

    // Get the AudioFlinger service
    const sp<IAudioFlinger> &audioFlinger = AudioSystem::get_audio_flinger();
    if (audioFlinger == 0)
    {
        std::cout << "failed to get audio_flinger" << std::endl;
        free(buf);
        fclose(fp_in);
        return -1;
    }

    IAudioFlinger::CreateTrackInput input;
    IAudioFlinger::CreateTrackOutput output;
    input.speed = 1.0;
    input.attr = AUDIO_ATTRIBUTES_INITIALIZER;
    input.frameCount = samplerate * WRITE_TIME_MS / 1000;
    input.config = AUDIO_CONFIG_INITIALIZER;
    input.config.sample_rate = samplerate;
    input.config.channel_mask = AUDIO_CHANNEL_OUT_STEREO; // 0x3u (FRONT_LEFT | FRONT_RIGHT), system/media/audio/include/system/audio-base.h
    input.config.format = AUDIO_FORMAT_PCM_16_BIT;        // AUDIO_FORMAT_PCM | AUDIO_FORMAT_PCM_SUB_16_BIT, system/media/audio/include/system/audio-base.h
    // input.config.offload_info = mOffloadInfoCopy;
    // Fields AudioTrack also fills in; left unset they would hold stack garbage.
    // These are the values AudioTrack uses for a plain (non-fast, non-offload) track.
    input.flags = AUDIO_OUTPUT_FLAG_NONE;
    input.notificationsPerBuffer = 0;
    input.notificationFrameCount = 0;
    input.selectedDeviceId = AUDIO_PORT_HANDLE_NONE;
    input.clientInfo.clientTid = -1;
    input.sessionId = AUDIO_SESSION_ALLOCATE;
    input.clientInfo.clientPid = IPCThreadState::self()->getCallingPid();
    input.clientInfo.clientUid = IPCThreadState::self()->getCallingUid(); // the caller's UID
    status_t status = NO_ERROR;

    // 1.1 Ask AudioFlinger to create the track. Internally, AudioFlinger asks AudioPolicy
    //     for an output that matches the requested config and opens it.
    sp<IAudioTrack> mAudioTrack = audioFlinger->createTrack(input, output, &status);
    std::cout << "status:" << status << std::endl;
    std::cout << "createTrack output:" << std::endl
              << " flags " << output.flags << std::endl
              << " frameCount " << output.frameCount << std::endl
              << " notificationFrameCount " << output.notificationFrameCount << std::endl
              << " selectedDeviceId " << output.selectedDeviceId << std::endl
              << " sessionId " << output.sessionId << std::endl
              << " afFrameCount " << output.afFrameCount << std::endl
              << " afSampleRate " << output.afSampleRate << std::endl
              << " afLatencyMs " << output.afLatencyMs << std::endl
              << " outputId " << output.outputId << std::endl
              << " portId " << output.portId << std::endl;
    if (mAudioTrack == nullptr || status != NO_ERROR)
    {
        printf("[canok]create audioTrack err\n");
        free(buf);
        fclose(fp_in);
        return -1;
    }

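    // 1.2 Start the track
    status = mAudioTrack->start();
    if (status != NO_ERROR)
    {
        printf("[canok]start audioTrack err: %d\n", status);
        free(buf);
        fclose(fp_in);
        return -1;
    }

    // 2.0 Get mCblkMemory from the track; this IMemory is the shared memory
    //     that the following steps operate on
    sp<IMemory> mCblkMemory = mAudioTrack->getCblk();
    if (mCblkMemory == 0)
    {
        printf("[canok]%s: Could not get control block\n", __func__);
        free(buf);
        fclose(fp_in);
        return -1;
    }

    // 2.1 mCblk: the control block sits at the start of the shared memory
    void *iMemPointer = mCblkMemory->unsecurePointer();
    if (iMemPointer == NULL)
    {
        printf("[canok]%s: Could not get control block pointer\n", __func__);
        free(buf);
        fclose(fp_in);
        return -1;
    }
    audio_track_cblk_t *mCblk = static_cast<audio_track_cblk_t *>(iMemPointer);

    // 2.2 mProxy
    // Create a helper object that operates on the audio_track_cblk_t; from here on, data is
    // handed to AudioFlinger through this object. The PCM frames follow the control block
    // in the same shared memory, hence mCblk + 1.
    sp<AudioTrackClientProxy> mProxy; // primary owner of the memory
    mProxy = new AudioTrackClientProxy(mCblk, mCblk + 1, output.frameCount, mFrameSize);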

    // 3.0 Obtain a buffer from mProxy, fill it, then release it
    size_t bytesRead;
    while ((bytesRead = fread(buf, 1, buf_size, fp_in)) > 0)
    {
        // Count only the whole frames actually read; the last chunk of the file may be short
        size_t count = bytesRead / mFrameSize;
        uint8_t *bufsrc = (uint8_t *)buf;
        while (count > 0)
        {
            printf("[canok]track: to obtainBuffer<<<<<<<<<[%s%d] count %zu \n", __FUNCTION__, __LINE__, count);
            // 3.1 Obtain a buffer
            Proxy::Buffer audioBuffer;
            audioBuffer.mFrameCount = count; // number of frames we would like to get
            const struct timespec *requested = &ClientProxy::kForever; // wait indefinitely (no timeout)
            // The third argument, elapsed, is an output that reports how long the call blocked;
            // pass NULL when it is not needed.
            status = mProxy->obtainBuffer(&audioBuffer, requested, NULL);
            // On return, audioBuffer.mFrameCount holds the number of frames actually obtained,
            // which can be less than requested.
            std::cout << "obtainBuffer:" << std::endl
                      << " status: " << status << std::endl
                      << " mFrameCount: " << audioBuffer.mFrameCount << std::endl;
            if (status != NO_ERROR)
            {
                printf("[canok][%s%d] error in obtainBuffer :%d \n", __FUNCTION__, __LINE__, status);
                break; // audioBuffer.mRaw is not usable, so do not copy
            }
            // 3.2 Fill the buffer
            size_t framesObtained = audioBuffer.mFrameCount; // keep a copy; releaseBuffer() takes audioBuffer by pointer
            size_t toWrite = framesObtained * mFrameSize;
            size_t srcPos = bufsrc - (uint8_t *)buf;
            printf("[canok]track:[%s%d] toWrite:%zu srcPosition: %zu , srcLeftSize: %zu \n", __FUNCTION__, __LINE__, toWrite, srcPos, bytesRead - srcPos);
            memcpy(audioBuffer.mRaw, bufsrc, toWrite);
            // 3.3 Release the buffer, which hands the filled frames to AudioFlinger
            mProxy->releaseBuffer(&audioBuffer);
            count -= framesObtained;
            bufsrc += toWrite;
        }
        if (status != NO_ERROR)
        {
            break;
        }
    }

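    // 4. Stop the track, as AudioTrack::stop() does through IAudioTrack::stop()
    mAudioTrack->stop();
    free(buf);
    fclose(fp_in);
    return status == NO_ERROR ? 0 : -1;
}

int main(int argc, const char *argv[])
{
    return track_demo() == 0 ? 0 : 1;
}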

// }

Android.bp

cc_binary {
    name: "AFdemo",
    srcs: [
        "main.cpp",
    ],
    shared_libs: [
        "libaudioclient",
        "libaudioutils",
        "libutils",
        "libbinder",
    ],
    header_libs: [
        "libmedia_headers",
    ],
    include_dirs: [
        // "frameworks/av/media/libavextensions",
        "frameworks/av/media/libnbaio/include_mono/",
    ],
    cflags: [
        "-Wall",
        "-Werror",
        "-Wno-error=deprecated-declarations",
        "-Wno-unused-parameter",
        "-Wno-unused-variable",
    ],

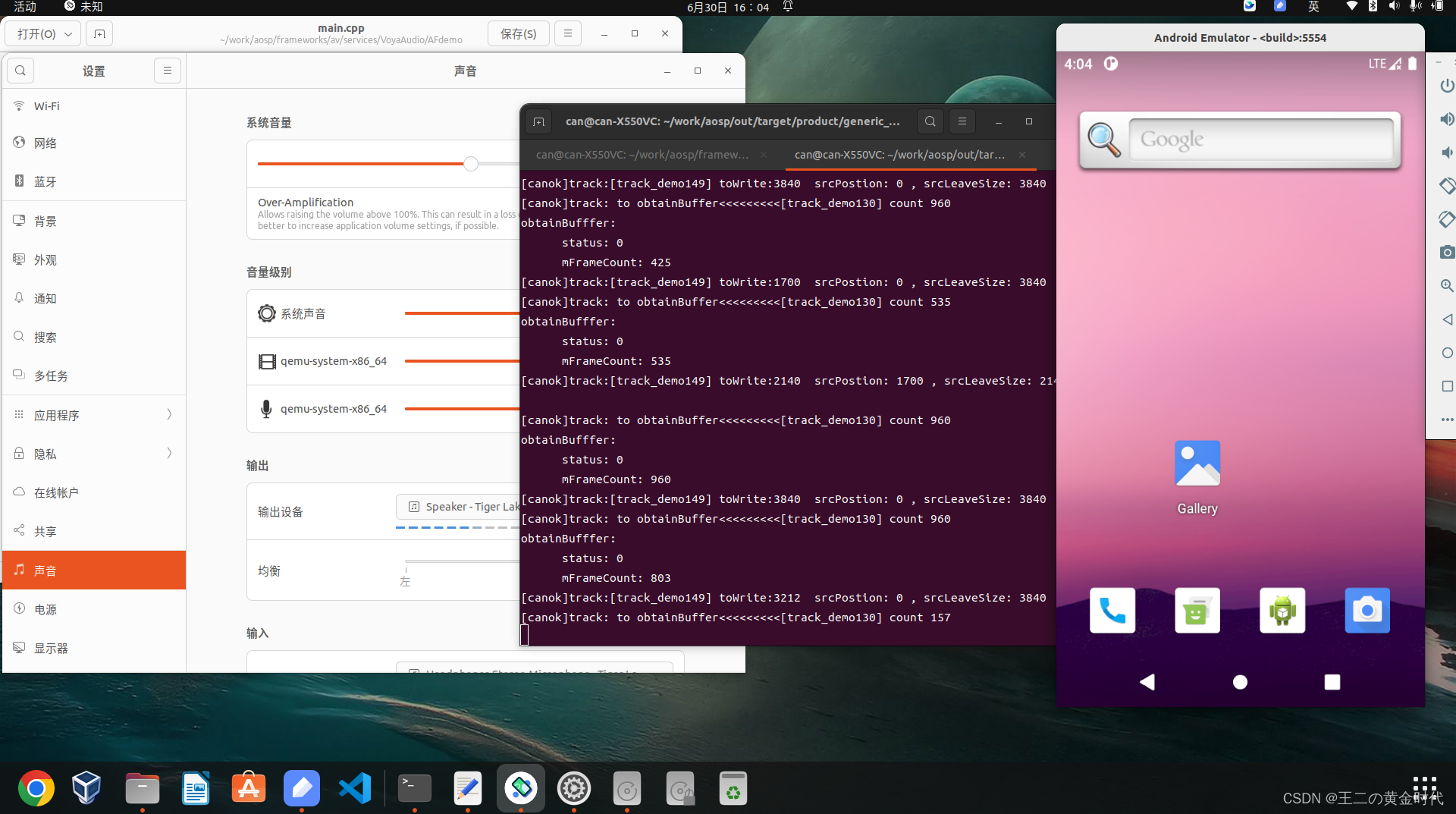

}

Result

The executable can be pushed to the device and run directly from a shell, and it plays the audio.
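To check that the whole file actually played, the expected numbers follow from simple arithmetic: with WRITE_TIME_MS = 20, each read is 48000 × 20 / 1000 = 960 frames of 4 bytes, i.e. 3840 bytes, so a file's playing time and the number of outer-loop reads can be estimated from its size. A small sketch; PcmFormat and the helper functions are hypothetical names, not part of AOSP.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helpers for checking the demo's numbers.
struct PcmFormat {
    unsigned sampleRate;     // frames per second, e.g. 48000
    unsigned channels;       // e.g. 2 (stereo)
    unsigned bitsPerSample;  // e.g. 16
};

// Bytes per frame: one sample for every channel (the demo's mFrameSize).
inline size_t frame_size(const PcmFormat& f) {
    return static_cast<size_t>(f.channels) * f.bitsPerSample / 8;
}

// Frames in one read period (the demo's samplerate * WRITE_TIME_MS / 1000).
inline size_t frames_per_period(const PcmFormat& f, unsigned periodMs) {
    return static_cast<size_t>(f.sampleRate) * periodMs / 1000;
}

// Expected playing time of a raw PCM file in milliseconds, counting whole frames only.
inline size_t duration_ms(const PcmFormat& f, size_t fileBytes) {
    return fileBytes / frame_size(f) * 1000 / f.sampleRate;
}

// Number of non-empty fread() calls the outer loop makes; the last one may be partial.
inline size_t read_calls(const PcmFormat& f, unsigned periodMs, size_t fileBytes) {
    assert(periodMs > 0);
    const size_t chunk = frames_per_period(f, periodMs) * frame_size(f);
    return (fileBytes + chunk - 1) / chunk;
}
```

For a 10-second file (1,920,000 bytes) this gives 10000 ms and 500 reads. One extra byte would add a 501st, partial read, so the last chunk should be handled by the byte count that fread actually returns rather than by a fixed frame count.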

Written with reference to the AudioTrack source.