Preface

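There are several ways to render video in Flutter, such as Texture, PlatformView and FFI. With Texture, Flutter itself provides a texture object that is bound to a widget in the Dart UI and rendered there. Material on this approach for both Android and iOS is easy to find, but most of it is short on detail, and the JNI rendering part in particular is almost always skipped. If you use Flutter but are not familiar with Android, it is hard to work out how to render into the Surface once you have obtained it from the texture. This article therefore explains the whole rendering pipeline on Android.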

I. How to Implement It

1. Define the Texture widget

Place a Texture widget in the UI:

```dart
Container(
  width: 640,
  height: 360,
  child: Texture(
    textureId: textureId,
  ),
)
```

2. Create the Texture object

```java
// Register a SurfaceTexture-backed texture with the Flutter engine
TextureRegistry.SurfaceTextureEntry entry = flutterEngine.getRenderer().createSurfaceTexture();
```

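3. Associate the textureId

Dart asks the platform side for the id through a MethodChannel. The Java side creates the texture and returns its id.

```dart
int textureId = -1;

if (textureId < 0) {
  // Call the native method to obtain the textureId
  methodChannel.invokeMethod<int>('startPreview', <String, dynamic>{'path': 'test.mov'}).then((value) {
    // Assign inside setState so the Texture widget is rebuilt with the new id
    setState(() {
      textureId = value!;
      print('textureId ==== $textureId');
    });
  });
}
```

```java
// Implementation of the startPreview method-channel call (handler omitted here)
TextureRegistry.SurfaceTextureEntry entry = flutterEngine.getRenderer().createSurfaceTexture();
// Return the texture id to Dart
result.success(entry.id());
```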

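4. Write RGBA data

Wrap the SurfaceTexture in a Surface and pass it to native code. The native code then draws each frame into it through ANativeWindow.

```java
TextureRegistry.SurfaceTextureEntry entry = flutterEngine.getRenderer().createSurfaceTexture();
SurfaceTexture surfaceTexture = entry.surfaceTexture();
// Wrap the SurfaceTexture in a Surface that native code can draw into
Surface surface = new Surface(surfaceTexture);
String path = call.argument("path");
// Call into JNI and pass the surface
native_start_play(path, surface);
```

```cpp
// env and surface are the JNI arguments; width, height, data and linesize
// come from the decoded frame (see the full example below)
ANativeWindow *a_native_window = ANativeWindow_fromSurface(env, surface);
// Match the buffer size and pixel format to the decoded frame
ANativeWindow_setBuffersGeometry(a_native_window, width, height, WINDOW_FORMAT_RGBA_8888);
ANativeWindow_Buffer a_native_window_buffer;
// Lock the next buffer for writing; 0 means success
if (ANativeWindow_lock(a_native_window, &a_native_window_buffer, nullptr) == 0) {
  uint8_t *first_window = static_cast<uint8_t *>(a_native_window_buffer.bits);
  // RGBA data
  uint8_t *src_data = data[0];
  // Destination row pitch in bytes: stride is in pixels, 4 bytes per RGBA pixel
  int dst_stride = a_native_window_buffer.stride * 4;
  // Source row pitch in bytes (FFmpeg linesize)
  int src_line_size = linesize[0];
  // Copy only the visible part of each row, because the two pitches can differ
  int row_bytes = std::min(width * 4, std::min(dst_stride, src_line_size));
  for (int i = 0; i < a_native_window_buffer.height; i++) {
    memcpy(first_window + i * dst_stride, src_data + i * src_line_size, row_bytes);
  }
  // Unlock and post the buffer for display
  ANativeWindow_unlockAndPost(a_native_window);
}
```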
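The row copy is the step that is easiest to get wrong. `ANativeWindow_Buffer::stride` is measured in pixels and can be larger than the frame width. FFmpeg's `linesize[0]` can also include padding, so the two row pitches need not match. Copying `dst_stride` bytes out of each source row would read past that row whenever the window stride is wider than `linesize[0]`.

Below is a minimal, platform-independent sketch of a safe row copy. `CopyRgbaRows` is a hypothetical helper name. Plain pointers stand in for `ANativeWindow_Buffer::bits` and `data[0]` so the logic can be checked off-device, and the sketch assumes non-negative row pitches.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copies an RGBA frame row by row.
//   dst, dst_stride_pixels, dst_height: destination buffer, its row pitch in
//     pixels (like ANativeWindow_Buffer::stride) and the rows available.
//   src, src_linesize: source buffer and its row pitch in bytes (like linesize[0]).
//   width, height: visible frame size in pixels.
// Only the visible bytes of each row are copied, so row padding in either
// buffer is never read from or written to.
void CopyRgbaRows(std::uint8_t* dst, int dst_stride_pixels, int dst_height,
                  const std::uint8_t* src, int src_linesize,
                  int width, int height) {
  assert(dst != nullptr && src != nullptr);
  // This sketch assumes non-negative sizes and row pitches.
  assert(width >= 0 && height >= 0 && dst_stride_pixels >= 0 && src_linesize >= 0);
  const int dst_pitch = dst_stride_pixels * 4;  // 4 bytes per RGBA pixel
  // Never copy more bytes than either row can hold.
  const int row_bytes = std::min(width * 4, std::min(dst_pitch, src_linesize));
  const int rows = std::min(height, dst_height);
  for (int y = 0; y < rows; ++y) {
    std::memcpy(dst + static_cast<std::size_t>(y) * dst_pitch,
                src + static_cast<std::size_t>(y) * src_linesize,
                static_cast<std::size_t>(row_bytes));
  }
}
```

On the device you would call it between `ANativeWindow_lock` and `ANativeWindow_unlockAndPost`, for example `CopyRgbaRows(static_cast<uint8_t *>(buf.bits), buf.stride, buf.height, data[0], linesize[0], width, height);`.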

The corresponding JNI function declaration:

```cpp
extern "C" JNIEXPORT void JNICALL
Java_com_example_ffplay_1plugin_FfplayPlugin_native_1start_1play(JNIEnv *env, jobject /* this */, jstring path, jobject surface);
```
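The `_1` sequences in this name come from JNI's name-mangling rules. The function name is `Java_`, then the fully qualified class name with `.` replaced by `_`, then `_`, then the method name. A literal `_` in either name is escaped as `_1`. When an `UnsatisfiedLinkError` suggests a misspelled name, it can help to generate the expected symbol.

The sketch below does that. `JniEscape` and `JniShortName` are hypothetical helper names. It handles only ASCII identifiers and ignores the long form that JNI uses for overloaded native methods.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Escapes a class or method name following the JNI short-name rules
// (ASCII-only sketch): letters and digits are kept, '.' and '/' become '_',
// '_' becomes "_1", ';' becomes "_2", '[' becomes "_3", and any other
// character becomes "_0" followed by four lowercase hex digits.
std::string JniEscape(const std::string& name) {
  std::string out;
  for (unsigned char c : name) {
    assert(c < 0x80 && "this sketch only handles ASCII names");
    if ((c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9')) {
      out += static_cast<char>(c);
    } else if (c == '.' || c == '/') {
      out += '_';
    } else if (c == '_') {
      out += "_1";
    } else if (c == ';') {
      out += "_2";
    } else if (c == '[') {
      out += "_3";
    } else {
      char buf[8];
      std::snprintf(buf, sizeof buf, "_0%04x", static_cast<unsigned>(c));
      out += buf;
    }
  }
  return out;
}

// Builds the JNI short name: "Java_" + escaped class + "_" + escaped method.
std::string JniShortName(const std::string& qualified_class, const std::string& method) {
  return "Java_" + JniEscape(qualified_class) + "_" + JniEscape(method);
}
```

For the plugin in this article, `JniShortName("com.example.ffplay_plugin.FfplayPlugin", "native_start_play")` yields exactly the function name declared above.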

II. Example

1. Decoding and playing with FFmpeg

main.dart

```dart
import 'package:flutter/material.dart';
import 'package:flutter/services.dart';

MethodChannel methodChannel = MethodChannel('ffplay_plugin');

void main() {
  runApp(MyApp());
}

class MyApp extends StatelessWidget {
  // This widget is the root of your application.
  @override
  Widget build(BuildContext context) {
    return MaterialApp(
      title: 'Flutter Demo',
      theme: ThemeData(
        primarySwatch: Colors.blue,
      ),
      home: MyHomePage(title: 'Flutter Demo Home Page'),
    );
  }
}

class MyHomePage extends StatefulWidget {
  MyHomePage({Key? key, required this.title}) : super(key: key);

  final String title;

  @override
  _MyHomePageState createState() => _MyHomePageState();
}

class _MyHomePageState extends State<MyHomePage> {
  int textureId = -1;

  Future<void> _createTexture() async {
    print('textureId = $textureId');
    // Call the native method to start playing the video
    if (textureId < 0) {
      final int? value = await methodChannel.invokeMethod<int>('startPreview', <String, dynamic>{
        'path': 'https://sf1-hscdn-tos.pstatp.com/obj/media-fe/xgplayer_doc_video/flv/xgplayer-demo-360p.flv',
      });
      if (value == null) return;
      // Update the id inside setState so the Texture widget is rebuilt
      setState(() {
        textureId = value;
        print('textureId ==== $textureId');
      });
    }
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: Text(widget.title),
      ),
      // Layout
      body: Center(
        child: Row(
          mainAxisAlignment: MainAxisAlignment.center,
          children: <Widget>[
            // Show the Texture only after a valid id has been returned
            if (textureId > -1)
              ClipRect(
                child: Container(
                  width: 640,
                  height: 360,
                  child: Texture(
                    textureId: textureId,
                  ),
                ),
              ),
          ],
        ),
      ),
      floatingActionButton: FloatingActionButton(
        onPressed: _createTexture,
        tooltip: 'createTexture',
        child: Icon(Icons.add),
      ),
    );
  }
}
```

Define a plugin. Mine is called ffplay_plugin.

FfplayPlugin.java

```java
if (call.method.equals("startPreview")) {
  // Create the texture
  TextureRegistry.SurfaceTextureEntry entry = flutterEngine.getRenderer().createSurfaceTexture();
  SurfaceTexture surfaceTexture = entry.surfaceTexture();
  Surface surface = new Surface(surfaceTexture);
  // Read the arguments
  String path = call.argument("path");
  // Call JNI to start rendering
  native_start_play(path, surface);
  // Return the textureId
  result.success(entry.id());
}

public native void native_start_play(String path, Surface surface);
```

native-lib.cpp

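```cpp
#include <jni.h>
#include <android/native_window.h>
#include <android/native_window_jni.h>
#include <algorithm>
#include <cstring>
extern "C" {
#include <libavutil/pixfmt.h>
}
// The header declaring Play (the author's FFmpeg wrapper) is not shown here.

extern "C" JNIEXPORT void JNICALL
Java_com_example_ffplay_1plugin_FfplayPlugin_native_1start_1play(
    JNIEnv *env,
    jobject /* this */, jstring path, jobject surface) {
  // Get the NativeWindow used for drawing. This acquires a reference;
  // release it with ANativeWindow_release once rendering has finished.
  ANativeWindow *a_native_window = ANativeWindow_fromSurface(env, surface);
  // Convert the Java path string into a C string
  const char *video_path = env->GetStringUTFChars(path, nullptr);
  // Create the player; Play wraps FFmpeg
  Play *play = new Play;
  // Frame callback
  play->Display = [=](unsigned char *data[8], int linesize[8], int width, int height, AVPixelFormat format) {
    // Set the size and pixel format of the NativeWindow buffers
    ANativeWindow_setBuffersGeometry(a_native_window, width, height, WINDOW_FORMAT_RGBA_8888);
    // Buffer that receives the frame
    ANativeWindow_Buffer a_native_window_buffer;
    // Lock the buffer before rendering; skip this frame if locking fails
    if (ANativeWindow_lock(a_native_window, &a_native_window_buffer, nullptr) != 0) {
      return;
    }
    uint8_t *first_window = static_cast<uint8_t *>(a_native_window_buffer.bits);
    uint8_t *src_data = data[0];
    // Bytes per destination row: stride is in pixels, RGBA uses 4 bytes per pixel
    int dst_stride = a_native_window_buffer.stride * 4;
    int src_line_size = linesize[0];
    // Copy only the visible part of each row, because the two pitches can differ
    int row_bytes = std::min(width * 4, std::min(dst_stride, src_line_size));
    // Copy the frame into the window buffer row by row
    for (int i = 0; i < a_native_window_buffer.height; i++) {
      memcpy(first_window + i * dst_stride, src_data + i * src_line_size, row_bytes);
    }
    // Unlock and post the buffer for display
    ANativeWindow_unlockAndPost(a_native_window);
  };
  // Start playback, requesting RGBA output
  play->Start(video_path, AV_PIX_FMT_RGBA);
  // Safe only if Play::Start copies the path or is done with it when it returns
  env->ReleaseStringUTFChars(path, video_path);
}
```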
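In the plugin code, `native_start_play` runs inside the method-call handler, before `result.success(entry.id())`. The article does not show `Play::Start`, so whether this matters depends on how `Start` behaves:

- If it blocks until the video ends, it blocks the platform thread, and Dart receives the `textureId` only afterwards.
- If it returns immediately but keeps using `video_path`, releasing the string right after the call leaves it with a dangling pointer.

One way to rule out both cases is to copy the path and run the blocking decode loop on a worker thread. The sketch below assumes that approach. `PlaybackThread` and `DecodeFn` are hypothetical names, and `DecodeFn` stands for whatever loop actually drives the `Display` callback.

```cpp
#include <atomic>
#include <cassert>
#include <functional>
#include <string>
#include <thread>
#include <utility>

// Blocking decode loop: receives the video path and a stop flag it should poll.
using DecodeFn = std::function<void(const std::string& path, const std::atomic<bool>& stop)>;

// Runs a blocking decode loop on its own thread so the caller returns at once.
class PlaybackThread {
 public:
  PlaybackThread() = default;
  PlaybackThread(const PlaybackThread&) = delete;
  PlaybackThread& operator=(const PlaybackThread&) = delete;
  ~PlaybackThread() { Stop(); }

  // Takes the path by value, so the caller may release its own copy
  // (for example the JNI UTF chars) as soon as Start returns.
  void Start(std::string path, DecodeFn decode) {
    assert(decode && "decode loop must be callable");
    Stop();  // finish any previous playback first
    stop_ = false;
    worker_ = std::thread([this, path = std::move(path), decode = std::move(decode)] {
      decode(path, stop_);
    });
  }

  // Asks the loop to stop and waits for the worker thread to exit.
  void Stop() {
    stop_ = true;
    if (worker_.joinable()) worker_.join();
  }

 private:
  std::atomic<bool> stop_{false};
  std::thread worker_;
};
```

With this arrangement, the JNI function would copy the path, call `ReleaseStringUTFChars` right away and start the thread, so `result.success` is reached without waiting for playback. Where the `PlaybackThread` lives, and when `Stop` is called, is left to the plugin.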

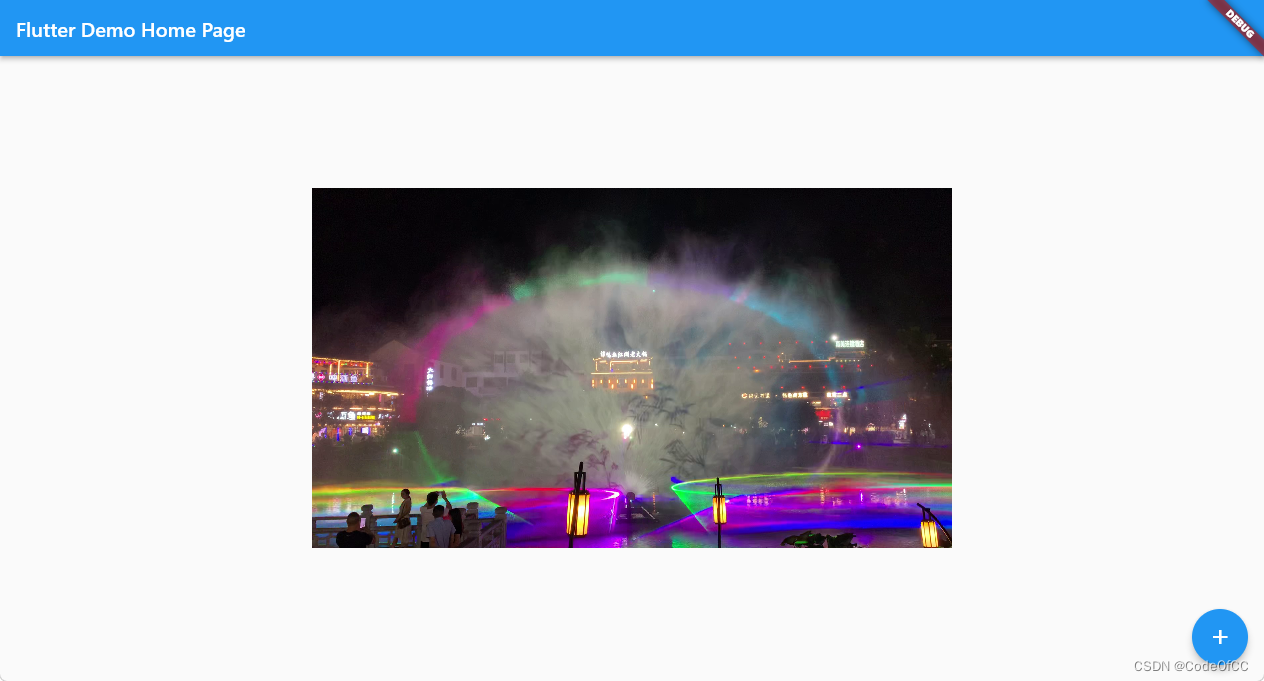

Preview of the result

Android TV, landscape orientation

Summary

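That covers today's topic. Rendering video with Flutter on Android is relatively easy, partly because plenty of material exists. However, Flutter-specific material only shows how to use Texture. It does not explain how to connect the Texture to FFmpeg. To find that link, you have to look up separately how Android renders FFmpeg output through JNI. Overall, the implementation is fairly simple and the result is acceptable.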