Spark Installation

Installing on Linux

Extract the archive

tar -zxvf spark-3.1.2-bin-hadoop3.2.tgz -C /opt/software/

Rename the directory (run this from inside /opt/software/)

mv spark-3.1.2-bin-hadoop3.2/ spark312

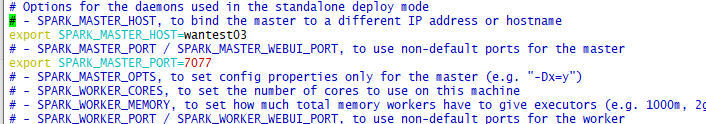
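The extract and rename steps can also be combined so the archive's top-level directory name never has to be typed by hand. This is a minimal sketch: `install_spark` is a hypothetical helper (not shipped with Spark), and it assumes the tarball has a single top-level directory, as the official Spark archives do.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): extract a Spark tarball into
# DEST_DIR and rename its top-level directory to SHORT_NAME.
install_spark() {
  local tarball="$1"     # e.g. spark-3.1.2-bin-hadoop3.2.tgz
  local dest_dir="$2"    # e.g. /opt/software
  local short_name="$3"  # e.g. spark312
  local top_dir

  # First path component of the first archive entry,
  # e.g. "spark-3.1.2-bin-hadoop3.2"
  top_dir="$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)"

  mkdir -p "$dest_dir"
  tar -zxf "$tarball" -C "$dest_dir"
  mv "$dest_dir/$top_dir" "$dest_dir/$short_name"
}
```

Usage for this guide would be `install_spark spark-3.1.2-bin-hadoop3.2.tgz /opt/software spark312`, which leaves Spark in /opt/software/spark312.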

Edit the configuration under conf/ (/opt/software/spark312/conf)

# Create spark-env.sh from the shipped template

cp spark-env.sh.template spark-env.sh

# Add the following settings to spark-env.sh

export SPARK_MASTER_HOST=wantest03

export SPARK_MASTER_PORT=7077
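If this step is scripted, it can be made safe to re-run by creating spark-env.sh only when it is missing and appending each setting only once. A minimal sketch: `configure_spark_env` is a hypothetical helper name, and it assumes it is given Spark's conf directory (here /opt/software/spark312/conf). It does not overwrite a value that is already present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create spark-env.sh from the template (if needed)
# and append the standalone master settings used in this guide.
configure_spark_env() {
  local conf_dir="$1"               # e.g. /opt/software/spark312/conf
  local master_host="$2"            # e.g. wantest03
  local master_port="${3:-7077}"    # 7077 is Spark's default master port
  local env_file="$conf_dir/spark-env.sh"

  # Copy the template only if spark-env.sh does not exist yet
  [ -f "$env_file" ] || cp "$conf_dir/spark-env.sh.template" "$env_file"

  # Append each export only if no line for that variable exists yet
  grep -q '^export SPARK_MASTER_HOST=' "$env_file" ||
    echo "export SPARK_MASTER_HOST=$master_host" >> "$env_file"
  grep -q '^export SPARK_MASTER_PORT=' "$env_file" ||
    echo "export SPARK_MASTER_PORT=$master_port" >> "$env_file"
}
```

For this guide: `configure_spark_env /opt/software/spark312/conf wantest03 7077`.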

Configure the system environment variables (append to /etc/profile; HADOOP_HOME must already be set there)

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export SPARK_HOME=/opt/software/spark312

export PATH=$SPARK_HOME/bin:$SPARK_HOME/sbin:$PATH

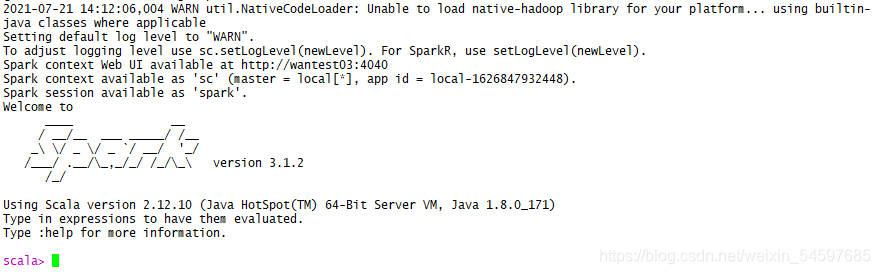

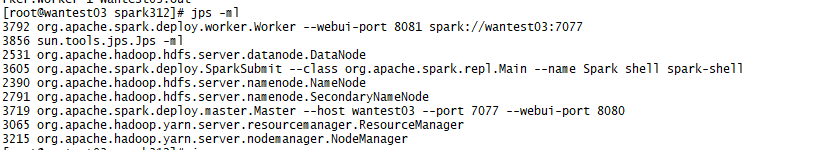
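The lines above can be appended to /etc/profile only once with a small helper. This is a sketch under assumptions: `add_spark_profile` is a hypothetical name, and HADOOP_HOME is assumed to be exported earlier in the same profile. The single-quoted lines are written literally on purpose, so `$HADOOP_HOME`, `$SPARK_HOME` and `$PATH` are expanded when the profile is sourced rather than when it is written.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the Spark environment variables to a
# profile file, skipping the write if SPARK_HOME is already defined there.
add_spark_profile() {
  local profile="$1"      # e.g. /etc/profile (needs root to edit)
  local spark_home="$2"   # e.g. /opt/software/spark312

  # Already configured: do nothing (a missing file just falls through)
  grep -q '^export SPARK_HOME=' "$profile" 2>/dev/null && return 0

  {
    echo '# Spark'
    # Single quotes keep $HADOOP_HOME / $PATH unexpanded in the file
    echo 'export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop'
    echo "export SPARK_HOME=$spark_home"
    echo 'export PATH=$SPARK_HOME/bin:$SPARK_HOME/sbin:$PATH'
  } >> "$profile"
}
```

As root, `add_spark_profile /etc/profile /opt/software/spark312` followed by `source /etc/profile` gives the same result as editing the file by hand.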

Start and test

# Reload the environment variables

source /etc/profile

# Test that the client (Spark shell) starts

spark-shell

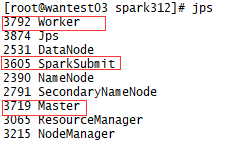

# Test starting the standalone cluster (master, then a worker)

start-master.sh

# Since Spark 3.1, start-slave.sh is a deprecated alias of start-worker.sh; either works here
start-slave.sh spark://wantest03:7077
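To keep the master URL given to the worker in sync with spark-env.sh, it can be derived from that file instead of typed by hand. A minimal sketch: `spark_master_url` is a hypothetical helper, and it falls back to 7077 (Spark's default master port) when SPARK_MASTER_PORT is not set. Once the master is up, its web UI listens on port 8080 by default (http://wantest03:8080 here), which is a quick way to check that the worker registered.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print spark://HOST:PORT from a spark-env.sh file.
spark_master_url() {
  (
    # Subshell: the sourced settings do not leak into the caller.
    # Pass an absolute path, since "." searches PATH for bare names.
    . "$1"
    echo "spark://${SPARK_MASTER_HOST:?SPARK_MASTER_HOST not set}:${SPARK_MASTER_PORT:-7077}"
  )
}
```

Usage: `start-slave.sh "$(spark_master_url "$SPARK_HOME/conf/spark-env.sh")"`.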

Installing on Windows

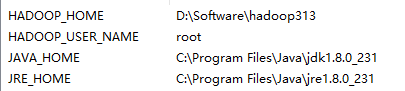

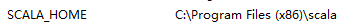

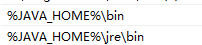

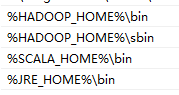

Configure the system variables

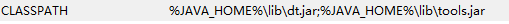

Configure the Path variable

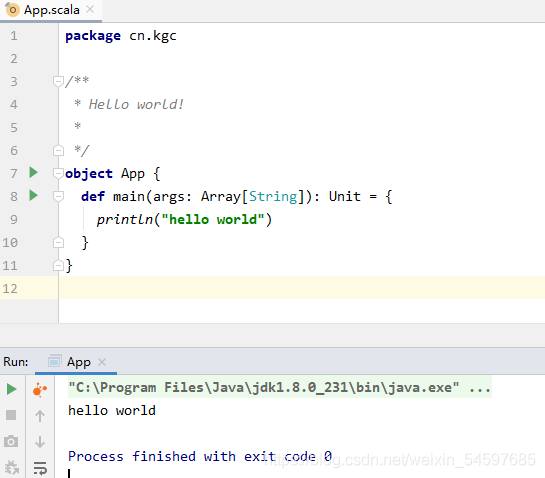

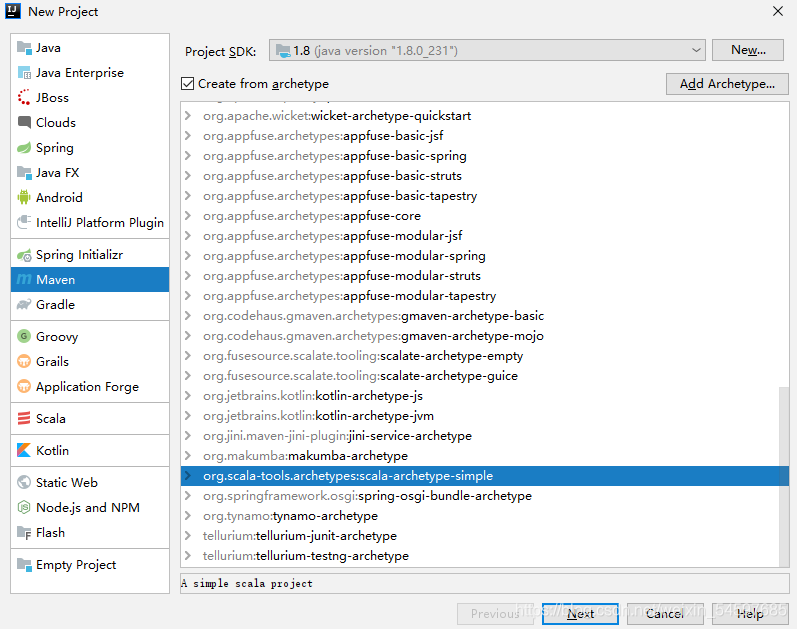

Creating an IDEA project

Create a new Maven project and select the scala-simple archetype.

Delete the test folder (src/test).

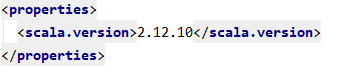

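Change the Scala version (the scala.version property in the pom) to match the local installation. Since spark-core_2.12 is used below, it must be a 2.12.x release.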

Add the dependencies

<dependencies>
    <dependency>
        <groupId>org.scala-lang</groupId>
        <artifactId>scala-library</artifactId>
        <version>${scala.version}</version>
    </dependency>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.4</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.specs</groupId>
        <artifactId>specs</artifactId>
        <version>1.2.5</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.12</artifactId>
        <version>3.1.2</version>
    </dependency>
    <dependency>
        <groupId>commons-collections</groupId>
        <artifactId>commons-collections</artifactId>
        <version>3.2.2</version>
    </dependency>
</dependencies>
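Because the Spark artifact is spark-core_2.12, the pom's scala.version has to share the same binary version (2.12); a mismatch can surface as errors such as NoSuchMethodError when the program runs. A rough check of that rule, assuming the one-element-per-line pom layout above: `check_scala_suffix` is a hypothetical helper and uses sed pattern matching, not a real XML parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that scala.version (e.g. 2.12.10) matches
# the _2.12 suffix of the spark-core artifactId in a pom.xml.
check_scala_suffix() {
  local pom="$1" scala_version spark_suffix

  # <scala.version>2.12.10</scala.version>  ->  2.12.10
  scala_version="$(sed -n 's:.*<scala.version>\(.*\)</scala.version>.*:\1:p' "$pom" | head -n 1)"
  # <artifactId>spark-core_2.12</artifactId>  ->  2.12
  spark_suffix="$(sed -n 's:.*<artifactId>spark-core_\([0-9.]*\)</artifactId>.*:\1:p' "$pom" | head -n 1)"

  # Binary version = version without its last component (2.12.10 -> 2.12)
  if [ -n "$scala_version" ] && [ "${scala_version%.*}" = "$spark_suffix" ]; then
    echo "OK: Scala $scala_version matches spark-core_$spark_suffix"
  else
    echo "MISMATCH: Scala '$scala_version' vs spark-core_$spark_suffix" >&2
    return 1
  fi
}
```

Running `check_scala_suffix pom.xml` in the project root before building catches the most common version slip.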

Test