Implementing message exchange between EMQX and Kafka with a plugin

Plugin repository: https://github.com/ULTRAKID/emqx_plugin_kafka

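1. Pull the plugin code and import it into your own repository

Clone the plugin code:

git clone https://github.com/ULTRAKID/emqx_plugin_kafka.git

Then push it to your own repository with git, or simply fork it into your own GitHub account.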

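2. Build EMQX

Clone the EMQX source (set up passwordless SSH access to GitHub first):

git clone git@github.com:emqx/emqx.git

Edit the Makefile in the EMQX root directory and add the following line:

export EMQX_EXTRA_PLUGINS = emqx_plugin_kafka

Edit the lib-extra/plugins file under the EMQX directory and add the following entry to erlang_plugins to pull in the emqx_plugin_kafka plugin. Use the address of your own repository here so that later code changes are easy to commit. This file holds Erlang terms, so any comment in it must start with %, not #.

, {emqx_plugin_kafka, {git, "git@git.talkweb.com.cn:iot3.0/cloud/iot/emqx_plugin_kafka.git", {branch, "main"}}}

Build:

make

The build can take a while, and some dependencies may fail to download when GitHub is slow or unreachable.

One workaround is to use SwitchHosts to replace the hosts file and speed up access to GitHub.

For the detailed steps, see: https://zhuanlan.zhihu.com/p/443847295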

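3. Run

Configure the Kafka address by editing the plugin configuration file emqx_plugin_kafka.conf, located in _build/emqx/rel/emqx/etc/plugins.

Note: the _build directory only exists after a build.

vim _build/emqx/rel/emqx/etc/plugins/emqx_plugin_kafka.conf

# change this to the IP of the server running your Kafka broker
kafka.host = 192.168.141.77

kafka.port = 9092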
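To make the link between these settings and the plugin code concrete, here is a minimal sketch of how the values can be read back at runtime. It assumes the settings end up in the emqx_plugin_kafka application environment under the key broker, as a property list with host and port stored as strings, which is the shape the plugin's ekaf_init function reads. The module name kafka_conf_demo and the demo/0 helper are hypothetical and only simulate the loaded configuration.

```erlang
-module(kafka_conf_demo).
-export([broker_endpoint/0, demo/0]).

%% Read the Kafka endpoint from the application environment and
%% return it as {Host, Port}, with the port converted to an integer.
broker_endpoint() ->
    {ok, Broker} = application:get_env(emqx_plugin_kafka, broker),
    Host = proplists:get_value(host, Broker),
    Port = list_to_integer(proplists:get_value(port, Broker)),
    {Host, Port}.

%% Simulate what loading emqx_plugin_kafka.conf is assumed to produce,
%% then read the endpoint back.
demo() ->
    ok = application:set_env(emqx_plugin_kafka, broker,
                             [{host, "192.168.141.77"}, {port, "9092"}]),
    broker_endpoint().
```

Calling kafka_conf_demo:demo() in an Erlang shell returns the host string together with the port as an integer.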

开启emqx日志级别为info 方便之后日志查看

vim _build/emqx/rel/emqx/etc/emqx.conf

log.level = info

运行emqx

_build/emqx/rel/emqx/bin/emqx console

此时应该没什么问题

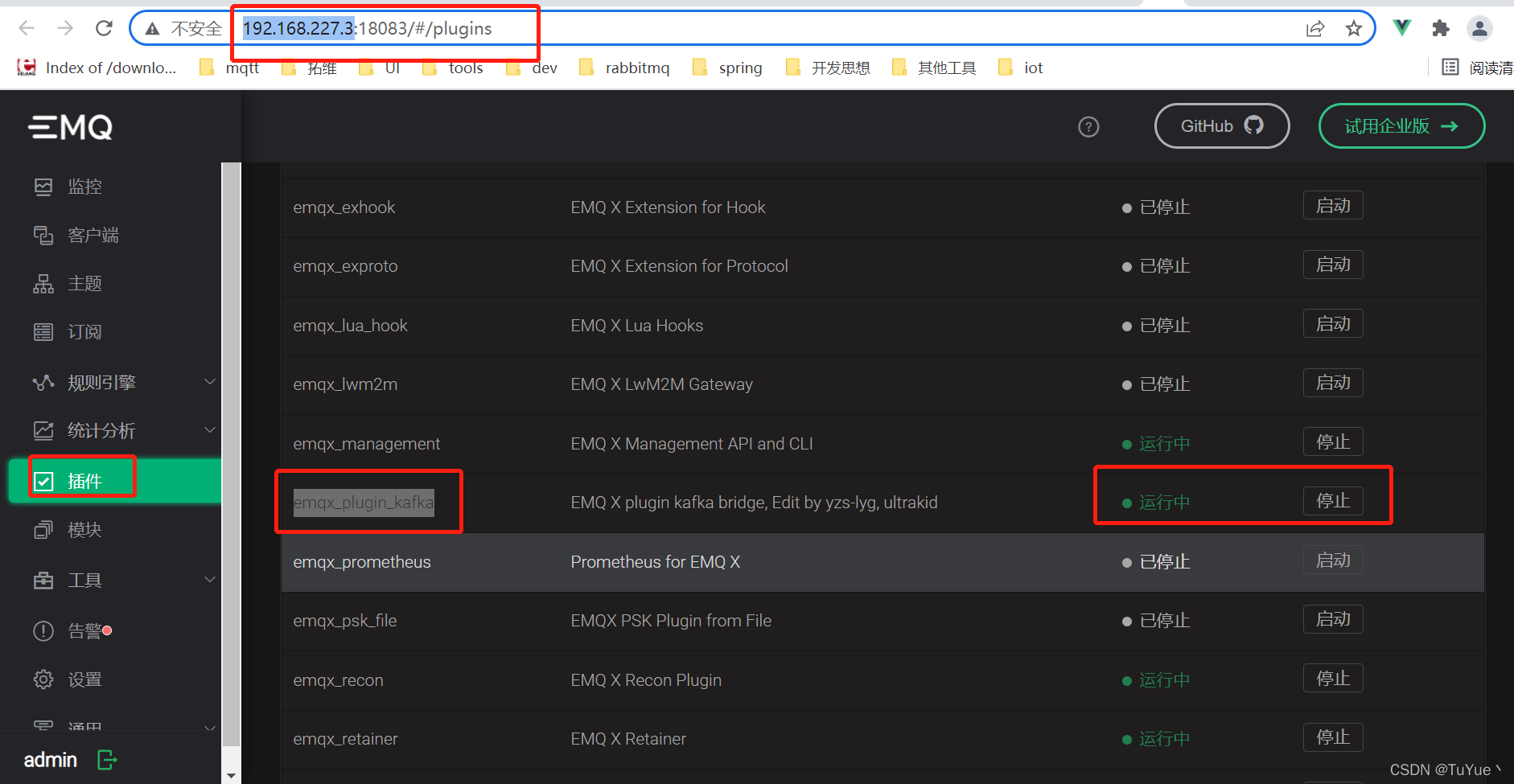

访问emqx启动服务器ip:18083,找到插件(plugins)菜单,找到插件emqx_plugin_kafka选择启动

通过MQTTX工具连接emqx,ip选择emqx安装所在服务器ip,名字随便起,就可以连接

连接成功后就可以往emqx指定topic发送消息

4、Kafka工具

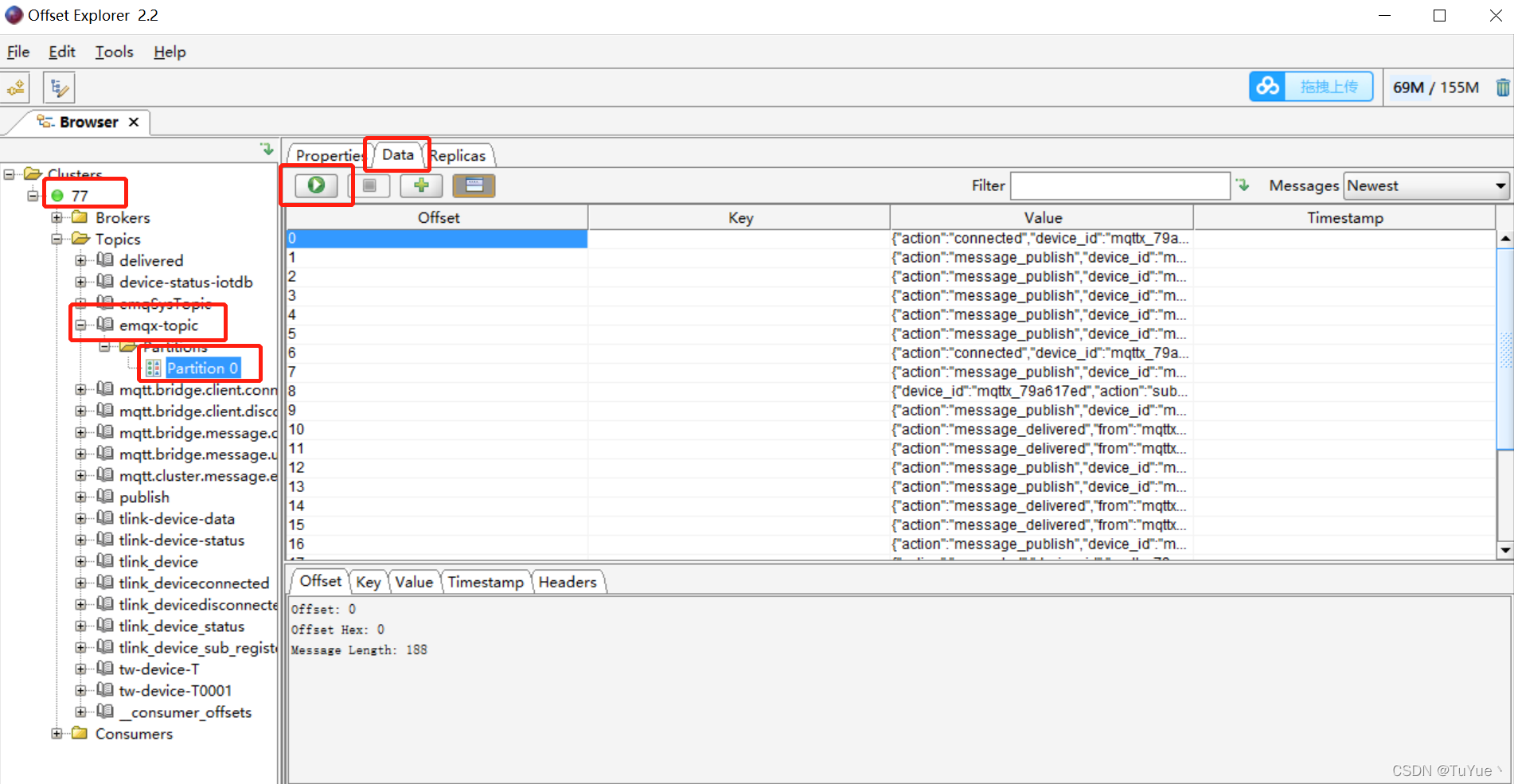

这里我们选择KafkaTools,连接到Kafka服务器所在ip的Kafka之后,因为我们还没有修改插件中Kafka默认topic,我们在topic – emqx-topic就会收到上述消息

同时emqx所在服务器也会打印info信息

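5. Modifying the plugin code

Note: the changes in this section reflect my own requirements only.

Edit the configuration file emqx_plugin_kafka.conf:

# Kafka server address; setting it here avoids editing the host in the built Kafka config file after every EMQX rebuild
kafka.host = 192.168.141.77

# Kafka topic prefix
kafka.payloadtopic = tlink_device_

In rebar.config.script, change the GitDescribe variable to master:

EMQX_MGMT_DEP = {emqx_management, {git, UrlPrefix ++ "emqx-management", "master"}},

Edit the main logic in emqx_plugin_kafka.erl.

Add a function that routes messages to different Kafka topics according to the MQTT topic: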

get_kafka_topic_produce(Topic, Message) ->
    ?LOG_INFO("[KAFKA PLUGIN]Kafka topic = ~s~n", [Topic]),
    TopicStr = binary_to_list(Topic),
    %% Only MQTT topics that start with "tlink/" are forwarded to Kafka.
    case lists:prefix("tlink/", TopicStr) of
        true ->
            %% string:str/2 returns 0 when the substring is absent.
            OtaIndex = string:str(TopicStr, "ota"),
            SubRegisterIndex = string:str(TopicStr, "sub/register"),
            SubLogin = string:str(TopicStr, "sub/login"),
            Suffix = if
                OtaIndex /= 0 -> <<"ota">>;
                SubRegisterIndex /= 0 -> <<"sub_register">>;
                SubLogin /= 0 -> <<"sub_status">>;
                true -> <<"msg">>
            end,
            %% Kafka topic = configured prefix + suffix; the MQTT topic is the key.
            TopicKafka = list_to_binary([ekaf_get_topic(), Suffix]),
            produce_kafka_payload(TopicKafka, Topic, Message);
        false ->
            ?LOG_INFO("[KAFKA PLUGIN]MQTT topic prefix is not tlink = ~s~n", [Topic])
    end,
    ok.
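The routing rule can also be tried in isolation, without EMQX or Kafka running. The following is a minimal sketch under stated assumptions: the module kafka_route_demo and its functions are hypothetical, the prefix is passed in as an argument instead of being read from the configuration, and the MQTT topic paths in the usage note are made-up examples. It uses binary:match/2 on binaries rather than the string functions of the plugin code, but applies the same rules in the same order.

```erlang
-module(kafka_route_demo).
-export([kafka_topic/2]).

%% Map an MQTT topic to a Kafka topic name.
%% Returns {ok, KafkaTopic} for topics under "tlink/", otherwise ignore.
kafka_topic(Prefix, <<"tlink/", _/binary>> = MqttTopic) ->
    Suffix = suffix(MqttTopic),
    {ok, <<Prefix/binary, Suffix/binary>>};
kafka_topic(_Prefix, _Other) ->
    ignore.

%% Rules are checked in order; the first keyword found in the topic wins.
suffix(MqttTopic) ->
    Rules = [{<<"ota">>, <<"ota">>},
             {<<"sub/register">>, <<"sub_register">>},
             {<<"sub/login">>, <<"sub_status">>}],
    first_match(MqttTopic, Rules).

%% Fall back to the generic "msg" suffix when no keyword matches.
first_match(_Topic, []) ->
    <<"msg">>;
first_match(Topic, [{Needle, Suffix} | Rest]) ->
    case binary:match(Topic, Needle) of
        nomatch -> first_match(Topic, Rest);
        _Found  -> Suffix
    end.
```

For example, kafka_route_demo:kafka_topic(<<"tlink_device_">>, <<"tlink/p1/d1/sub/login">>) yields the Kafka topic tlink_device_sub_status, while a topic outside tlink/ returns ignore. As in the plugin code, the match is a substring test, so any topic level that happens to contain "ota" is routed to the ota topic.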

The complete code is as follows:

%%--------------------------------------------------------------------
%% Copyright (c) 2015-2017 Feng Lee <feng@emqtt.io>.
%%
%% Modified by Ramez Hanna <rhanna@iotblue.net>
%%
%% Licensed under the Apache License, Version 2.0 (the "License");
%% you may not use this file except in compliance with the License.
%% You may obtain a copy of the License at
%%
%% http://www.apache.org/licenses/LICENSE-2.0
%%
%% Unless required by applicable law or agreed to in writing, software
%% distributed under the License is distributed on an "AS IS" BASIS,
%% WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
%% See the License for the specific language governing permissions and
%% limitations under the License.
%%--------------------------------------------------------------------

-module(emqx_plugin_kafka).

% -include("emqx_plugin_kafka.hrl").
% -include_lib("emqx/include/emqx.hrl").
-include("emqx.hrl").
-include_lib("kernel/include/logger.hrl").

-export([load/1, unload/0]).

%% Client Lifecycle Hooks
-export([ on_client_connect/3
        , on_client_connack/4
        , on_client_connected/3
        , on_client_disconnected/4
        , on_client_authenticate/3
        , on_client_check_acl/5
        , on_client_subscribe/4
        , on_client_unsubscribe/4
        ]).

%% Session Lifecycle Hooks
-export([ on_session_created/3
        , on_session_subscribed/4
        , on_session_unsubscribed/4
        , on_session_resumed/3
        , on_session_discarded/3
        , on_session_takeovered/3
        , on_session_terminated/4
        ]).

%% Message Pubsub Hooks
-export([ on_message_publish/2
        , on_message_delivered/3
        , on_message_acked/3
        , on_message_dropped/4
        ]).

%% Called when the plugin application starts
load(Env) ->
    ekaf_init([Env]),
    emqx:hook('client.connect',      {?MODULE, on_client_connect, [Env]}),
    emqx:hook('client.connack',      {?MODULE, on_client_connack, [Env]}),
    emqx:hook('client.connected',    {?MODULE, on_client_connected, [Env]}),
    emqx:hook('client.disconnected', {?MODULE, on_client_disconnected, [Env]}),
    emqx:hook('client.authenticate', {?MODULE, on_client_authenticate, [Env]}),
    emqx:hook('client.check_acl',    {?MODULE, on_client_check_acl, [Env]}),
    emqx:hook('client.subscribe',    {?MODULE, on_client_subscribe, [Env]}),
    emqx:hook('client.unsubscribe',  {?MODULE, on_client_unsubscribe, [Env]}),
    emqx:hook('session.created',     {?MODULE, on_session_created, [Env]}),
    emqx:hook('session.subscribed',  {?MODULE, on_session_subscribed, [Env]}),
    emqx:hook('session.unsubscribed',{?MODULE, on_session_unsubscribed, [Env]}),
    emqx:hook('session.resumed',     {?MODULE, on_session_resumed, [Env]}),
    emqx:hook('session.discarded',   {?MODULE, on_session_discarded, [Env]}),
    emqx:hook('session.takeovered',  {?MODULE, on_session_takeovered, [Env]}),
    emqx:hook('session.terminated',  {?MODULE, on_session_terminated, [Env]}),
    emqx:hook('message.publish',     {?MODULE, on_message_publish, [Env]}),
    emqx:hook('message.delivered',   {?MODULE, on_message_delivered, [Env]}),
    emqx:hook('message.acked',       {?MODULE, on_message_acked, [Env]}),
    emqx:hook('message.dropped',     {?MODULE, on_message_dropped, [Env]}).

on_client_connect(ConnInfo = #{clientid := ClientId}, Props, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Client(~s) connect, ConnInfo: ~p, Props: ~p~n",
              [ClientId, ConnInfo, Props]),
    ok.

on_client_connack(ConnInfo = #{clientid := ClientId}, Rc, Props, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Client(~s) connack, ConnInfo: ~p, Rc: ~p, Props: ~p~n",
              [ClientId, ConnInfo, Rc, Props]),
    ok.

on_client_connected(ClientInfo = #{clientid := ClientId}, ConnInfo, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Client(~s) connected, ClientInfo:~n~p~n, ConnInfo:~n~p~n",
              [ClientId, ClientInfo, ConnInfo]),
    {IpAddr, _Port} = maps:get(peername, ConnInfo),
    Action = <<"connected">>,
    Now = now_mill_secs(os:timestamp()),
    Online = 1,
    Payload = [
        {action, Action},
        {clientid, ClientId},
        {username, maps:get(username, ClientInfo)},
        {keepalive, maps:get(keepalive, ConnInfo)},
        {ipaddress, iolist_to_binary(ntoa(IpAddr))},
        {proto_name, maps:get(proto_name, ConnInfo)},
        {proto_ver, maps:get(proto_ver, ConnInfo)},
        {timestamp, Now},
        {online, Online}
    ],
    %% Online/offline events go to the "<prefix>status" Kafka topic.
    Topic = list_to_binary([ekaf_get_topic(), <<"status">>]),
    {ok, MessageBody} = emqx_json:safe_encode(Payload),
    produce_kafka_payload(Topic, Action, MessageBody),
    ok.

on_client_disconnected(ClientInfo = #{clientid := ClientId}, ReasonCode, ConnInfo, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Client(~s) disconnected due to ~p, ClientInfo:~n~p~n, ConnInfo:~n~p~n",
              [ClientId, ReasonCode, ClientInfo, ConnInfo]),
    Action = <<"disconnected">>,
    Now = now_mill_secs(os:timestamp()),
    Online = 0,
    Payload = [
        {action, Action},
        {clientid, ClientId},
        {username, maps:get(username, ClientInfo)},
        {reason, ReasonCode},
        {timestamp, Now},
        {online, Online}
    ],
    Topic = list_to_binary([ekaf_get_topic(), <<"status">>]),
    {ok, MessageBody} = emqx_json:safe_encode(Payload),
    produce_kafka_payload(Topic, Action, MessageBody),
    ok.

on_client_authenticate(_ClientInfo = #{clientid := ClientId}, Result, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Client(~s) authenticate, Result:~n~p~n", [ClientId, Result]),
    ok.

on_client_check_acl(_ClientInfo = #{clientid := ClientId}, Topic, PubSub, Result, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Client(~s) check_acl, PubSub:~p, Topic:~p, Result:~p~n",
              [ClientId, PubSub, Topic, Result]),
    ok.

%%---------------------------client subscribe start--------------------------%%
on_client_subscribe(#{clientid := ClientId}, _Properties, TopicFilters, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Client(~s) will subscribe: ~p~n", [ClientId, TopicFilters]),
    ok.
%%---------------------client subscribe stop----------------------%%

on_client_unsubscribe(#{clientid := ClientId}, _Properties, TopicFilters, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Client(~s) will unsubscribe ~p~n", [ClientId, TopicFilters]),
    ok.

%% Messages on $SYS/ topics are ignored.
on_message_dropped(#message{topic = <<"$SYS/", _/binary>>}, _By, _Reason, _Env) ->
    ok;
on_message_dropped(Message, _By = #{node := Node}, Reason, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Message dropped by node ~s due to ~s: ~s~n",
              [Node, Reason, emqx_message:format(Message)]),
    ok.

%%---------------------------message publish start--------------------------%%
on_message_publish(#message{topic = <<"$SYS/", _/binary>>}, _Env) ->
    ok;
on_message_publish(_Message, _Env) ->
    %% Forwarding on publish is disabled; messages are forwarded on delivery and ack instead.
    %%?LOG_INFO("[KAFKA PLUGIN]Client publish before produce, Message:~n~p~n",[Message]),
    %%{ok, Payload} = format_payload(Message),
    %%get_kafka_topic_produce(Message#message.topic, Payload),
    %% produce_kafka_payload(<<"publish">>, Message#message.topic, Payload),
    %%?LOG_INFO("[KAFKA PLUGIN]Client publish after produce, Payload:~n~p~n, Topic:~n~p~n",[Payload, Message#message.topic]),
    ok.
%%---------------------message publish stop----------------------%%

on_message_delivered(_ClientInfo = #{clientid := ClientId}, Message, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Message delivered to client(~s): ~s~n",
              [ClientId, emqx_message:format(Message)]),
    Topic = Message#message.topic,
    Payload = Message#message.payload,
    get_kafka_topic_produce(Topic, Payload),
    ok.

on_message_acked(_ClientInfo = #{clientid := ClientId}, Message, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Message acked by client(~s): ~s~n",
              [ClientId, emqx_message:format(Message)]),
    Topic = Message#message.topic,
    Payload = Message#message.payload,
    get_kafka_topic_produce(Topic, Payload),
    ok.

%%--------------------------------------------------------------------
%% Session Lifecycle Hooks
%%--------------------------------------------------------------------

on_session_created(#{clientid := ClientId}, SessInfo, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Session(~s) created, Session Info:~n~p~n", [ClientId, SessInfo]),
    ok.

on_session_subscribed(#{clientid := ClientId}, Topic, SubOpts, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Session(~s) subscribed ~s with subopts: ~p~n", [ClientId, Topic, SubOpts]),
    ok.

on_session_unsubscribed(#{clientid := ClientId}, Topic, Opts, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Session(~s) unsubscribed ~s with opts: ~p~n", [ClientId, Topic, Opts]),
    ok.

on_session_resumed(#{clientid := ClientId}, SessInfo, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Session(~s) resumed, Session Info:~n~p~n", [ClientId, SessInfo]),
    ok.

on_session_discarded(_ClientInfo = #{clientid := ClientId}, SessInfo, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Session(~s) is discarded. Session Info: ~p~n", [ClientId, SessInfo]),
    ok.

on_session_takeovered(_ClientInfo = #{clientid := ClientId}, SessInfo, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Session(~s) is takeovered. Session Info: ~p~n", [ClientId, SessInfo]),
    ok.

on_session_terminated(_ClientInfo = #{clientid := ClientId}, Reason, SessInfo, _Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Session(~s) is terminated due to ~p~nSession Info: ~p~n",
              [ClientId, Reason, SessInfo]),
    ok.

%% Read the broker settings from the plugin configuration and start ekaf.
ekaf_init(_Env) ->
    io:format("Init emqx plugin kafka....."),
    {ok, BrokerValues} = application:get_env(emqx_plugin_kafka, broker),
    KafkaHost = proplists:get_value(host, BrokerValues),
    ?LOG_INFO("[KAFKA PLUGIN]KafkaHost = ~s~n", [KafkaHost]),
    KafkaPort = proplists:get_value(port, BrokerValues),
    ?LOG_INFO("[KAFKA PLUGIN]KafkaPort = ~s~n", [KafkaPort]),
    KafkaPartitionStrategy = proplists:get_value(partitionstrategy, BrokerValues),
    KafkaPartitionWorkers = proplists:get_value(partitionworkers, BrokerValues),
    KafkaTopic = proplists:get_value(payloadtopic, BrokerValues),
    ?LOG_INFO("[KAFKA PLUGIN]KafkaTopic = ~s~n", [KafkaTopic]),
    application:set_env(ekaf, ekaf_bootstrap_broker, {KafkaHost, list_to_integer(KafkaPort)}),
    application:set_env(ekaf, ekaf_partition_strategy, list_to_atom(KafkaPartitionStrategy)),
    application:set_env(ekaf, ekaf_per_partition_workers, KafkaPartitionWorkers),
    application:set_env(ekaf, ekaf_bootstrap_topics, list_to_binary(KafkaTopic)),
    application:set_env(ekaf, ekaf_buffer_ttl, 10),
    application:set_env(ekaf, ekaf_max_downtime_buffer_size, 5),
    % {ok, _} = application:ensure_all_started(kafkamocker),
    {ok, _} = application:ensure_all_started(gproc),
    % {ok, _} = application:ensure_all_started(ranch),
    {ok, _} = application:ensure_all_started(ekaf).

%% Return the configured Kafka topic (used as a topic prefix after the changes above).
ekaf_get_topic() ->
    {ok, Topic} = application:get_env(ekaf, ekaf_bootstrap_topics),
    Topic.

%% Called when the plugin application stops
unload() ->
    emqx:unhook('client.connect',      {?MODULE, on_client_connect}),
    emqx:unhook('client.connack',      {?MODULE, on_client_connack}),
    emqx:unhook('client.connected',    {?MODULE, on_client_connected}),
    emqx:unhook('client.disconnected', {?MODULE, on_client_disconnected}),
    emqx:unhook('client.authenticate', {?MODULE, on_client_authenticate}),
    emqx:unhook('client.check_acl',    {?MODULE, on_client_check_acl}),
    emqx:unhook('client.subscribe',    {?MODULE, on_client_subscribe}),
    emqx:unhook('client.unsubscribe',  {?MODULE, on_client_unsubscribe}),
    emqx:unhook('session.created',     {?MODULE, on_session_created}),
    emqx:unhook('session.subscribed',  {?MODULE, on_session_subscribed}),
    emqx:unhook('session.unsubscribed',{?MODULE, on_session_unsubscribed}),
    emqx:unhook('session.resumed',     {?MODULE, on_session_resumed}),
    emqx:unhook('session.discarded',   {?MODULE, on_session_discarded}),
    emqx:unhook('session.takeovered',  {?MODULE, on_session_takeovered}),
    emqx:unhook('session.terminated',  {?MODULE, on_session_terminated}),
    emqx:unhook('message.publish',     {?MODULE, on_message_publish}),
    emqx:unhook('message.delivered',   {?MODULE, on_message_delivered}),
    emqx:unhook('message.acked',       {?MODULE, on_message_acked}),
    emqx:unhook('message.dropped',     {?MODULE, on_message_dropped}).

%% Send {Key, Payload} to the given Kafka topic asynchronously, in batches.
produce_kafka_payload(Topic, Key, Payload) ->
    %% Topic = ekaf_get_topic(),
    ?LOG_INFO("[KAFKA PLUGIN]Message = ~s~n", [Payload]),
    ekaf_lib:common_async(produce_async_batched, Topic, {Key, Payload}).

get_kafka_topic_produce(Topic, Message) ->
    ?LOG_INFO("[KAFKA PLUGIN]Kafka topic = ~s~n", [Topic]),
    TopicStr = binary_to_list(Topic),
    %% Only MQTT topics that start with "tlink/" are forwarded to Kafka.
    case lists:prefix("tlink/", TopicStr) of
        true ->
            %% string:str/2 returns 0 when the substring is absent.
            OtaIndex = string:str(TopicStr, "ota"),
            SubRegisterIndex = string:str(TopicStr, "sub/register"),
            SubLogin = string:str(TopicStr, "sub/login"),
            Suffix = if
                OtaIndex /= 0 -> <<"ota">>;
                SubRegisterIndex /= 0 -> <<"sub_register">>;
                SubLogin /= 0 -> <<"sub_status">>;
                true -> <<"msg">>
            end,
            TopicKafka = list_to_binary([ekaf_get_topic(), Suffix]),
            produce_kafka_payload(TopicKafka, Topic, Message);
        false ->
            ?LOG_INFO("[KAFKA PLUGIN]MQTT topic prefix is not tlink = ~s~n", [Topic])
    end,
    ok.

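%% Unfinished sketch of a consumer, kept commented out because it is not
%% valid Erlang. ekaf is used here only as a producer; consuming Kafka
%% messages is implemented later in this article with brod.
%%
%% consume_kafka(Topic) ->
%%     ?LOG_INFO("[KAFKA PLUGIN]Consuming kafka message"),
%%     ekaf:pick(Topic,(a,b,c) ->
%%         ?LOG_INFO("[KAFKA PLUGIN]Consuming kafka message a = ~s~n, b = ~s~n, c = ~s~n", a, b, c),
%%         Msg = emqx_message:make(ClientId, Qos, Topic, Payload),
%%         ?LOG_INFO("[KAFKA PLUGIN]Consuming kafka message = ~s~n", Msg),
%%         emqx_broker:publish(Msg).
%%     )

%% Format an IP address; IPv4-mapped IPv6 addresses are printed as IPv4.
ntoa({0, 0, 0, 0, 0, 16#ffff, AB, CD}) ->
    inet_parse:ntoa({AB bsr 8, AB rem 256, CD bsr 8, CD rem 256});
ntoa(IP) ->
    inet_parse:ntoa(IP).

%% Convert an os:timestamp() tuple to milliseconds since the Unix epoch.
now_mill_secs({MegaSecs, Secs, MicroSecs}) ->
    MegaSecs * 1000000000 + Secs * 1000 + MicroSecs div 1000.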
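The timestamp conversion is easy to get subtly wrong, so here is a minimal standalone sketch of it. An os:timestamp() tuple holds megaseconds, seconds and microseconds; to get milliseconds, the first two parts are scaled up and the microsecond part is divided by 1000. The module name ts_demo is hypothetical; erlang:system_time/1 is the standard way to get the same value directly.

```erlang
-module(ts_demo).
-export([to_millis/1, now_millis/0]).

%% Convert a {MegaSecs, Secs, MicroSecs} tuple to milliseconds
%% since the Unix epoch, dropping the sub-millisecond remainder.
to_millis({MegaSecs, Secs, MicroSecs}) ->
    (MegaSecs * 1000000 + Secs) * 1000 + MicroSecs div 1000.

%% Current time in milliseconds without going through the tuple form.
now_millis() ->
    erlang:system_time(millisecond).
```

For instance, ts_demo:to_millis({1600, 123, 456789}) evaluates to 1600000123456.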

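After changing the code, clean first, then rebuild and run:

make clean

make

See steps 2 and 3 for details.

Sometimes the modified code does not take effect. There are two ways to deal with this:

1. Edit the code directly inside the EMQX tree. The drawback is that there is no code completion.

2. Edit the code elsewhere, but then clean everything (make clean-all). The drawback is that the EMQX build has to download all dependencies again.

A practical compromise is to edit the code elsewhere and then copy the modified files over the ones in the EMQX tree.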

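------------------------------------------------------------

Major overhaul

The Kafka forwarding above uses ekaf. To also support consuming Kafka messages and forwarding them to EMQX, the plugin was later switched to brod as the Kafka client for both producing and consuming.

6. Main features

1. Forward EMQX messages to a specific Kafka topic based on the suffix of the EMQX message topic;

2. Consume the Kafka msg_down topic and forward its messages to EMQX;

3. Consume the Kafka kick_out topic and kick matching client connections off EMQX;

4. Consume the Kafka acl_clean topic and clear the ACL cache of matching client connections in EMQX.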
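The three consumer-side features come down to a dispatch on the Kafka topic name. The sketch below shows only that decision, as a pure function that returns an atom naming the action. It assumes the Kafka topics are the configured prefix (tlink_device_ in the configuration shown earlier) followed by msg_down, kick_out or acl_clean; the module name kafka_dispatch_demo and the returned atoms are hypothetical.

```erlang
-module(kafka_dispatch_demo).
-export([action/2]).

%% Decide what to do with a consumed Kafka message based on its topic.
%% Prefix and Topic are binaries.
action(Prefix, Topic) ->
    MsgDown  = <<Prefix/binary, "msg_down">>,
    KickOut  = <<Prefix/binary, "kick_out">>,
    AclClean = <<Prefix/binary, "acl_clean">>,
    %% The variables above are already bound, so each clause is an
    %% equality test against the full topic name.
    case Topic of
        MsgDown  -> forward_to_emqx;
        KickOut  -> kick_out;
        AclClean -> clean_acl_cache;
        _Other   -> unknown
    end.
```

In a real callback each atom would be replaced by the call that performs the action; keeping the decision separate makes it easy to check in an Erlang shell.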

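7. Main functions

1. brod_get_topic

Reads the Kafka topic prefix from the configuration file.

2. getPartition

Computes the Kafka partition for a given key.

3. produce_kafka_message

Sends a message through brod to the given Kafka topic, key and partition.

4. get_kafka_topic_produce

Builds the Kafka topic from the EMQX topic, then calls produce_kafka_message to write the message to Kafka.

5. kafka_consume

Consumes messages from a given set of Kafka topics; init and handle_message are its default callbacks.

6. handle_message

Dispatches on the topic name and calls the matching function: forwarding the message to EMQX, kicking out clients, or clearing client ACL caches.

7. handle_message_msg_down

Forwards a Kafka message to EMQX.

8. get_username

Extracts fields from the message body to determine the username.

9. get_client_list_by_username

Returns all clients connected under the given username.

10. kick_out

Kicks the connections of the given clients offline.

11. acl_clean

Clears the ACL cache of the connections of the given clients.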
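Of the functions above, the partition choice is the one that benefits most from a standalone illustration. The following is a minimal sketch of the idea behind getPartition: hash the key with MD5, take the last byte of the digest, and reduce it modulo the number of partitions, so that the same key always lands on the same partition. The module name partition_demo is hypothetical, and the partition count is passed in as an argument here instead of being read from the plugin configuration.

```erlang
-module(partition_demo).
-export([partition/2]).

%% Pick a partition in the range 0..PartitionCount-1 for a binary key.
partition(Key, PartitionCount) when is_binary(Key), PartitionCount > 0 ->
    %% An MD5 digest is 128 bits: skip the first 120 and keep the last byte.
    <<_:120, LastByte:8>> = crypto:hash(md5, Key),
    LastByte rem PartitionCount.
```

Because only one byte of the digest is used, this spreads keys over at most 256 partitions, which is enough for small topics but worth keeping in mind for larger ones.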

8. Main business code

%% Return the Kafka topic prefix from the plugin configuration.
brod_get_topic() ->
    {ok, Kafka} = application:get_env(?MODULE, broker),
    Topic_prefix = proplists:get_value(payloadtopic, Kafka),
    Topic_prefix.

%% Pick a partition from the last byte of the MD5 digest of the key.
getPartition(Key) ->
    {ok, Kafka} = application:get_env(?MODULE, broker),
    PartitionNum = proplists:get_value(partition, Kafka),
    <<_Fix:120, Match:8>> = crypto:hash(md5, Key),
    abs(Match) rem PartitionNum.

%% Send Message to the given Kafka topic, keyed by ClientId.
produce_kafka_message(Topic, Message, ClientId, Env) ->
    Key = iolist_to_binary(ClientId),
    Partition = getPartition(Key),
    %%JsonStr = jsx:encode(Message),
    Kafka = proplists:get_value(broker, Env),
    case proplists:get_value(is_async_producer, Kafka) of
        false ->
            %% Synchronous: wait for Kafka to acknowledge the message.
            brod:produce_sync(brod_client_1, Topic, Partition, Key, Message);
        _ ->
            %% Fire and forget: do not wait for an acknowledgement.
            brod:produce_no_ack(brod_client_1, Topic, Partition, Key, Message)
            %% brod:produce(brod_client_1, Topic, Partition, ClientId, JsonStr)
    end,
    ok.

get_kafka_topic_produce(Topic, Message, Env) ->
    ?LOG_INFO("[KAFKA PLUGIN]Kafka topic = ~s~n", [Topic]),
    TopicStr = binary_to_list(Topic),
    %% Only MQTT topics that start with "tlink/" are forwarded to Kafka.
    case lists:prefix("tlink/", TopicStr) of
        true ->
            %% string:str/2 returns 0 when the substring is absent.
            OtaIndex = string:str(TopicStr, "ota"),
            SubRegisterIndex = string:str(TopicStr, "sub/register"),
            SubLogin = string:str(TopicStr, "sub/login"),
            Suffix = if
                OtaIndex /= 0 -> <<"ota">>;
                SubRegisterIndex /= 0 -> <<"sub_register">>;
                SubLogin /= 0 -> <<"sub_status">>;
                true -> <<"msg">>
            end,
            TopicKafka = list_to_binary([brod_get_topic(), Suffix]),
            %% The MQTT topic is passed as the key, so it also drives the partition.
            produce_kafka_message(TopicKafka, Message, Topic, Env);
        false ->
            ?LOG_INFO("[KAFKA PLUGIN]MQTT topic prefix is not tlink = ~s~n", [Topic])
    end,
    ok.

%% Start a brod group subscriber for Topics; this module provides the callbacks.
kafka_consume(Topics) ->
    GroupConfig = [ {offset_commit_policy, commit_to_kafka_v2}
                  , {offset_commit_interval_seconds, 5}
                  ],
    GroupId = <<"emqx_cluster">>,
    ConsumerConfig = [{begin_offset, earliest}],
    brod:start_link_group_subscriber(brod_client_1, GroupId, Topics,
                                     GroupConfig, ConsumerConfig,
                                     _CallbackModule = ?MODULE,
                                     _CallbackInitArg = []).

%% brod group subscriber callback.
%% The #kafka_message{} record requires -include_lib("brod/include/brod.hrl").
handle_message(Topic, Partition, Message, State) ->
    #kafka_message{ offset = Offset
                  , key = Key
                  , value = Value
                  } = Message,
    error_logger:info_msg("~p ~p: offset:~w key:~s value:~s\n",
                          [self(), Partition, Offset, Key, Value]),
    %% Dispatch on the Kafka topic name.
    case Topic of
        <<"tlink_device_msg_down">> ->
            handle_message_msg_down(Key, Value);
        <<"tlink_device_kick_out">> ->
            {ok, UserName} = get_username(Value),
            {ok, ClientList} = get_client_list_by_username(UserName),
            kick_out(ClientList);
        <<"tlink_device_acl_clean">> ->
            {ok, UserName} = get_username(Value),
            {ok, ClientList} = get_client_list_by_username(UserName),
            acl_clean(ClientList);
        _ ->
            error_logger:error_msg("the kafka consume topic does not exist\n")
    end,
    error_logger:info_msg("message:~p\n", [Message]),
    {ok, ack, State}.

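%% Publish a Kafka message to EMQX: the Kafka key is the MQTT topic,
%% the Kafka value is the payload.
handle_message_msg_down(Key, Value) ->
    Topic = Key,
    Payload = Value,
    Qos = 0,
    Msg = emqx_message:make(<<"consume">>, Qos, Topic, Payload),
    emqx_broker:safe_publish(Msg),
    ok.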

get_username(Value) ->
    {ok, Term} = emqx_json:safe_decode(Value),
    Map = maps:from_list(Term),
    %% Read the fields from the message body.
    %% NOTE: all three lookups read the "username" key, which looks like a
    %% copy-paste slip; point the last two at the product-key and
    %% device-name fields of your own payload.
    MessageUserName = maps:get(<<"username">>, Map),
    MessageProductKey = maps:get(<<"username">>, Map),
    MessageDeviceName = maps:get(<<"username">>, Map),
    %% Decide the final username from whichever fields are non-empty.
    UserName = if
        byte_size(MessageUserName) /= 0 ->
            MessageUserName;
        byte_size(MessageProductKey) /= 0, byte_size(MessageDeviceName) /= 0 ->
            list_to_binary([MessageDeviceName, <<"&">>, MessageProductKey]);
        true ->
            <<"">>
    end,
    {ok, UserName}.

get_client_list_by_username(<<>>) ->
    error_logger:error_msg("the kafka consume kick out username does not exist"),
    {ok, []};
get_client_list_by_username(UserName) ->
    {ok, Client} = emqx_mgmt_api_clients:lookup(#{username => UserName}, {}),
    %% Extract the client list from the API response.
    {ok, maps:get(data, Client)}.

kick_out([]) ->
    error_logger:error_msg("the kafka consume kick out clientlist length is zero"),
    ok;
kick_out(ClientList) ->
    %% Kick out every client in the list by client ID.
    lists:foreach(fun(C) ->
                      ClientId = maps:get(clientid, C),
                      emqx_mgmt_api_clients:kickout(#{clientid => ClientId}, {})
                  end,
                  ClientList),
    ok.

acl_clean([]) ->
    error_logger:error_msg("the kafka consume acl clean clientlist length is zero"),
    ok;
acl_clean(ClientList) ->
    %% Clear the ACL cache of every client in the list by client ID.
    lists:foreach(fun(C) ->
                      ClientId = maps:get(clientid, C),
                      emqx_mgmt_api_clients:clean_acl_cache(#{clientid => ClientId}, {})
                  end,
                  ClientList),
    ok.
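The username resolution used by the kick_out and acl_clean handlers can be exercised on its own. Below is a minimal sketch of that rule as a pure function over an already decoded map: use username when it is present, otherwise combine the device name and product key as DeviceName&ProductKey, otherwise return an empty binary. The module name username_demo and the field names productKey and deviceName are assumptions made for illustration; the real payload may use different keys.

```erlang
-module(username_demo).
-export([resolve/1]).

%% Resolve the EMQX username from a decoded message body (a map with
%% binary keys). Missing fields are treated as empty binaries.
resolve(Fields) when is_map(Fields) ->
    UserName   = maps:get(<<"username">>, Fields, <<>>),
    ProductKey = maps:get(<<"productKey">>, Fields, <<>>),   %% hypothetical key
    DeviceName = maps:get(<<"deviceName">>, Fields, <<>>),   %% hypothetical key
    if
        UserName =/= <<>> ->
            UserName;
        ProductKey =/= <<>>, DeviceName =/= <<>> ->
            <<DeviceName/binary, "&", ProductKey/binary>>;
        true ->
            <<>>
    end.
```

Using maps:get/3 with a default avoids a crash when a field is absent, so a message that only carries the device name and product key still resolves to a username.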