Basic idea: build FFmpeg with MinGW (mingw32), then use CLion + MinGW to test, study, and use it.

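I tried to compile FFmpeg myself many times and it always failed. Neither the x86_64-8.1.0-release-posix-sjlj-rt_v6-rev0 toolchain nor the MSYS2 mingw64 compiler ever produced a working build, so in the end I fell back on someone else's approach to building the libraries: just run their script.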

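First, make sure a MinGW environment is installed and configured on the PC. With x86_64-8.1.0-release-posix-sjlj-rt_v6-rev0, be sure to use the sjlj variant, because that toolchain can build for both x86 and x86_64.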

See: "18. Windows 10 + CLion 2022 + MinGW: building and testing opencv4.4.0 + opencv_contrib4.4.0" (sxj731533730's blog, CSDN).

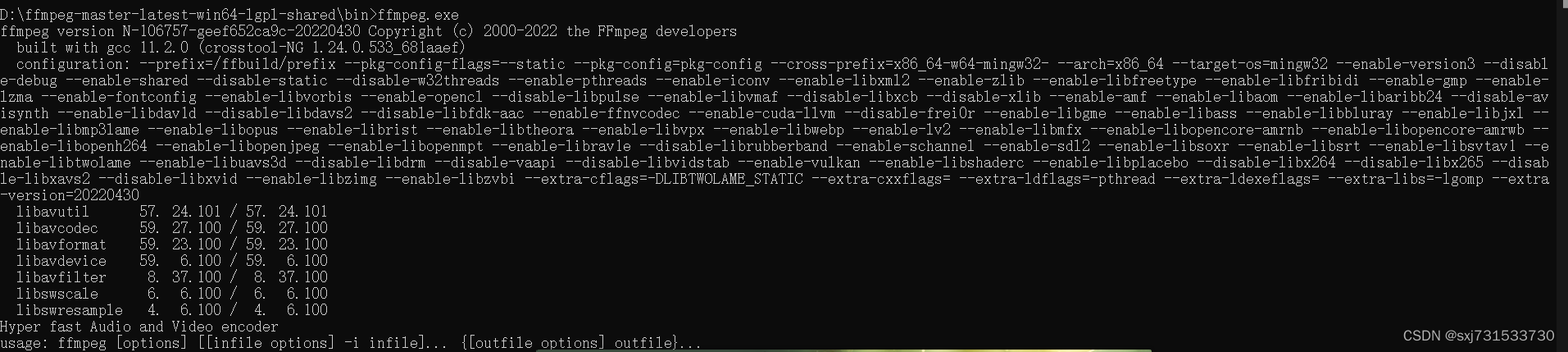

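Step one: download the prebuilt FFmpeg libraries from https://github.com/BtbN/FFmpeg-Builds/releases, specifically the package ffmpeg-master-latest-win64-lgpl-shared.zip. As the file name indicates, this is a shared build (DLLs plus .dll.a import libraries) rather than a static one. Building with the bat script provided there also works; I tried it and the build went through without problems. Alternatively, simply import the prebuilt package into the corresponding directories of the CLion project.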

Tested: both the MSYS2 compiler and x86_64-8.1.0-release-posix-sjlj-rt_v6-rev0 are supported.

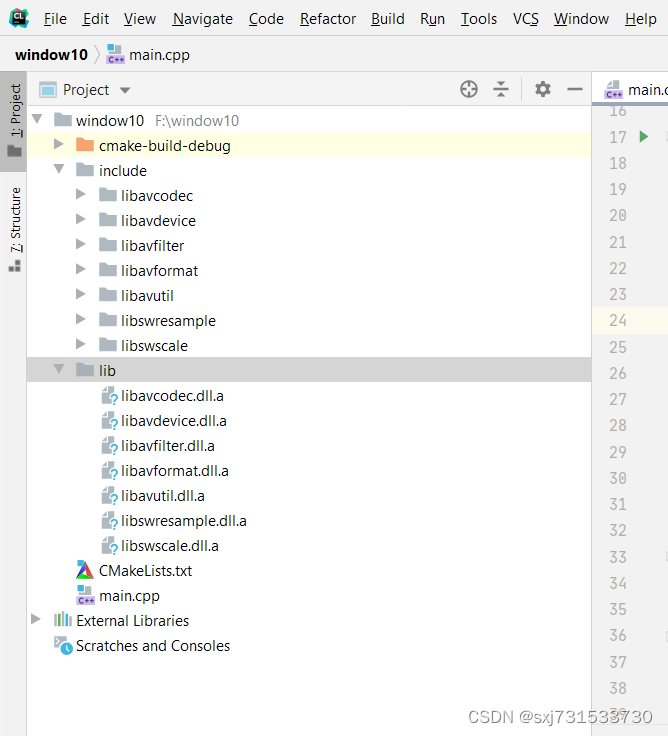

Step two: directory layout

Contents of CMakeLists.txt:

cmake_minimum_required(VERSION 3.16)
project(window11)

set(CMAKE_CXX_STANDARD 11)

include_directories(${CMAKE_SOURCE_DIR}/include)

# Change this to the location of your MinGW build of OpenCV
set(OpenCV_DIR "D:\\Opencv455\\buildMinGW")
set(CMAKE_MODULE_PATH ${CMAKE_MODULE_PATH} "${CMAKE_SOURCE_DIR}/cmake/")
find_package(OpenCV REQUIRED)
# opencv_videoio is listed explicitly because the test code uses cv::VideoCapture
set(OpenCV_LIBS opencv_core opencv_imgproc opencv_highgui opencv_imgcodecs opencv_videoio)

# FFmpeg import libraries (.dll.a) from the prebuilt package, placed in <project>/lib
add_library(libavformat STATIC IMPORTED)
set_target_properties(libavformat PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libavformat.dll.a)

add_library(libavdevice STATIC IMPORTED)
set_target_properties(libavdevice PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libavdevice.dll.a)

add_library(libavcodec STATIC IMPORTED)
set_target_properties(libavcodec PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libavcodec.dll.a)

add_library(libavfilter STATIC IMPORTED)
set_target_properties(libavfilter PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libavfilter.dll.a)

add_library(libavutil STATIC IMPORTED)
set_target_properties(libavutil PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libavutil.dll.a)

add_library(libswresample STATIC IMPORTED)
set_target_properties(libswresample PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libswresample.dll.a)

add_library(libswscale STATIC IMPORTED)
set_target_properties(libswscale PROPERTIES IMPORTED_LOCATION ${CMAKE_SOURCE_DIR}/lib/libswscale.dll.a)

add_executable(window11 main.cpp)

target_link_libraries(window11 ${OpenCV_LIBS}
        libavformat
        libavdevice
        libavcodec
        libavfilter
        libavutil
        libswresample
        libswscale

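)

Test code. Remember to start the nginx streaming media server first; see "5. Using CLion to call ffmpeg on Ubuntu: testing the development environment" (sxj731533730's blog, CSDN).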

#include <cstring>   // memset
#include <iostream>
#include <vector>

#include <opencv2/highgui.hpp>
#include <opencv2/video.hpp>
#include <opencv2/opencv.hpp>

extern "C"
{
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libavutil/imgutils.h>
#include <libswscale/swscale.h>
}

using namespace std;
using namespace cv;

int main() {
    const char* out_url = "rtmp://192.168.1.3:1935/live/livestream";

    // Initialize the network layer used by FFmpeg's network protocols
    avformat_network_init();

    // Output frame (YUV) that is fed to the encoder
    AVFrame* yuv = NULL;
    Mat frame;

    // 1. Open the USB camera with OpenCV
    VideoCapture video_ptr;
    video_ptr.open(0);
    if (!video_ptr.isOpened()) {
        cout << "open usb camera error" << endl;
        return -1;
    }
    cout << "open usb camera successful." << endl;

    int width = static_cast<int>(video_ptr.get(CAP_PROP_FRAME_WIDTH));
    int height = static_cast<int>(video_ptr.get(CAP_PROP_FRAME_HEIGHT));
    int fps = static_cast<int>(video_ptr.get(CAP_PROP_FPS));
    // If the camera reports fps as 0, fall back to 25. With fps == 0,
    // avcodec_open2 returns -22 (invalid argument).
    if (0 == fps) { fps = 25; }

    // 2. Initialize the pixel-format conversion context
    SwsContext* sws_context = NULL;
    sws_context = sws_getCachedContext(sws_context,
        width, height, AV_PIX_FMT_BGR24,    // source format
        width, height, AV_PIX_FMT_YUV420P,  // destination format
        SWS_BICUBIC,                        // scaling algorithm used when the size changes
        0, 0, 0);
    if (NULL == sws_context) {
        cout << "sws_getCachedContext error" << endl;
        return -1;
    }

    // 3. Initialize the output frame
    yuv = av_frame_alloc();
    yuv->format = AV_PIX_FMT_YUV420P;
    yuv->width = width;
    yuv->height = height;
    yuv->pts = 0;
    // Allocate the YUV buffers
    int ret_code = av_frame_get_buffer(yuv, 32);
    if (0 != ret_code) {
        cout << "yuv init fail" << endl;
        return -1;
    }

    // 4. Initialize the encoder context
    // 4.1 Find the encoder
    const AVCodec* codec = avcodec_find_encoder(AV_CODEC_ID_H264);
    if (NULL == codec) {
        cout << "Can't find h264 encoder." << endl;
        return -1;
    }

    // 4.2 Create the encoder context
    AVCodecContext* codec_context = avcodec_alloc_context3(codec);
    if (NULL == codec_context) {
        cout << "avcodec_alloc_context3 failed." << endl;
        return -1;
    }

    // 4.3 Configure the encoder parameters
    // codec_context->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
    codec_context->codec_id = codec->id;
    codec_context->thread_count = 8;
    // Target bitrate after compression: 50 KB per second (50 * 1024 * 8 bits)
    codec_context->bit_rate = 50 * 1024 * 8;
    codec_context->width = width;
    codec_context->height = height;
    codec_context->time_base = { 1, fps };
    codec_context->framerate = { fps, 1 };
    // Size of the group of pictures: one keyframe every gop_size frames
    codec_context->gop_size = 50;
    codec_context->max_b_frames = 0;
    codec_context->pix_fmt = AV_PIX_FMT_YUV420P;
    codec_context->qmin = 10;
    codec_context->qmax = 51;

    AVDictionary* codec_options = nullptr;
    // profile: (baseline | high | high10 | high422 | high444 | main)
    av_dict_set(&codec_options, "profile", "baseline", 0);
    av_dict_set(&codec_options, "preset", "superfast", 0);
    av_dict_set(&codec_options, "tune", "zerolatency", 0);

    // 4.4 Open the encoder context
    ret_code = avcodec_open2(codec_context, codec, &codec_options);
    if (0 != ret_code) {
        cout << "avcodec_open2 failed." << endl;
        return -1;
    }
    cout << "avcodec_open2 success!" << endl;

    // 5. Output muxer and video stream configuration
    // 5.1 Create the output muxer context
    // RTMP uses the FLV muxer
    AVFormatContext* format_context = nullptr;
    ret_code = avformat_alloc_output_context2(&format_context, 0, "flv", out_url);
    if (ret_code < 0) {
        cout << "avformat_alloc_output_context2 failed." << endl;
        return -1;
    }

    // 5.2 Add the video stream
    AVStream* vs = avformat_new_stream(format_context, NULL);
    if (NULL == vs) {
        cout << "avformat_new_stream failed." << endl;
        return -1;
    }
    vs->codecpar->codec_tag = 0;
    // Copy the parameters from the encoder
    avcodec_parameters_from_context(vs->codecpar, codec_context);
    av_dump_format(format_context, 0, out_url, 1);

    // Open the RTMP network output IO
    ret_code = avio_open(&format_context->pb, out_url, AVIO_FLAG_WRITE);
    if (ret_code < 0) {
        cout << "avio_open failed." << endl;
        return -1;
    }

    // Write the container header (any non-negative return value means success)
    ret_code = avformat_write_header(format_context, NULL);
    if (ret_code < 0) {
        cout << "avformat_write_header failed." << endl;
        return -1;
    }

    AVPacket pack;
    memset(&pack, 0, sizeof(pack));
    int vpts = 0;
    uint8_t* in_data[AV_NUM_DATA_POINTERS] = { 0 };
    int in_size[AV_NUM_DATA_POINTERS] = { 0 };

    for (;;) {
        // Grab a frame from the camera
        video_ptr >> frame;
        // If the frame is empty, break immediately
        if (frame.empty()) break;
        imshow("video", frame);
        waitKey(1);

        // BGR to YUV
        in_data[0] = frame.data;
        // Number of bytes in one row (width) of the image
        in_size[0] = static_cast<int>(frame.cols * frame.elemSize());
        int h = sws_scale(sws_context, in_data, in_size, 0, frame.rows,
            yuv->data, yuv->linesize);
        if (h <= 0) { continue; }

        // H.264 encoding
        yuv->pts = vpts;
        vpts++;
        ret_code = avcodec_send_frame(codec_context, yuv);
        if (0 != ret_code) { continue; }

        ret_code = avcodec_receive_packet(codec_context, &pack);
        if (0 != ret_code) {
            // No packet is available yet (for example AVERROR(EAGAIN)),
            // so feed the next frame instead of writing an empty packet
            cout << "avcodec_receive_packet continue." << endl;
            continue;
        }
        cout << "avcodec_receive_packet." << endl;

        // Push the stream: convert timestamps from the encoder time base
        // to the stream time base before writing
        pack.pts = av_rescale_q(pack.pts, codec_context->time_base, vs->time_base);
        pack.dts = av_rescale_q(pack.dts, codec_context->time_base, vs->time_base);
        pack.duration = av_rescale_q(pack.duration,
            codec_context->time_base,
            vs->time_base);
        ret_code = av_interleaved_write_frame(format_context, &pack);
        if (0 != ret_code)
        {
            cout << "pack is error" << endl;
        }
        av_packet_unref(&pack);
        frame.release();
    }

    // Finalize the stream, then release all resources
    av_write_trailer(format_context);
    av_dict_free(&codec_options);
    avcodec_free_context(&codec_context);
    av_frame_free(&yuv);
    avio_close(format_context->pb);
    avformat_free_context(format_context);
    sws_freeContext(sws_context);
    video_ptr.release();
    destroyAllWindows();
    return 0;
}

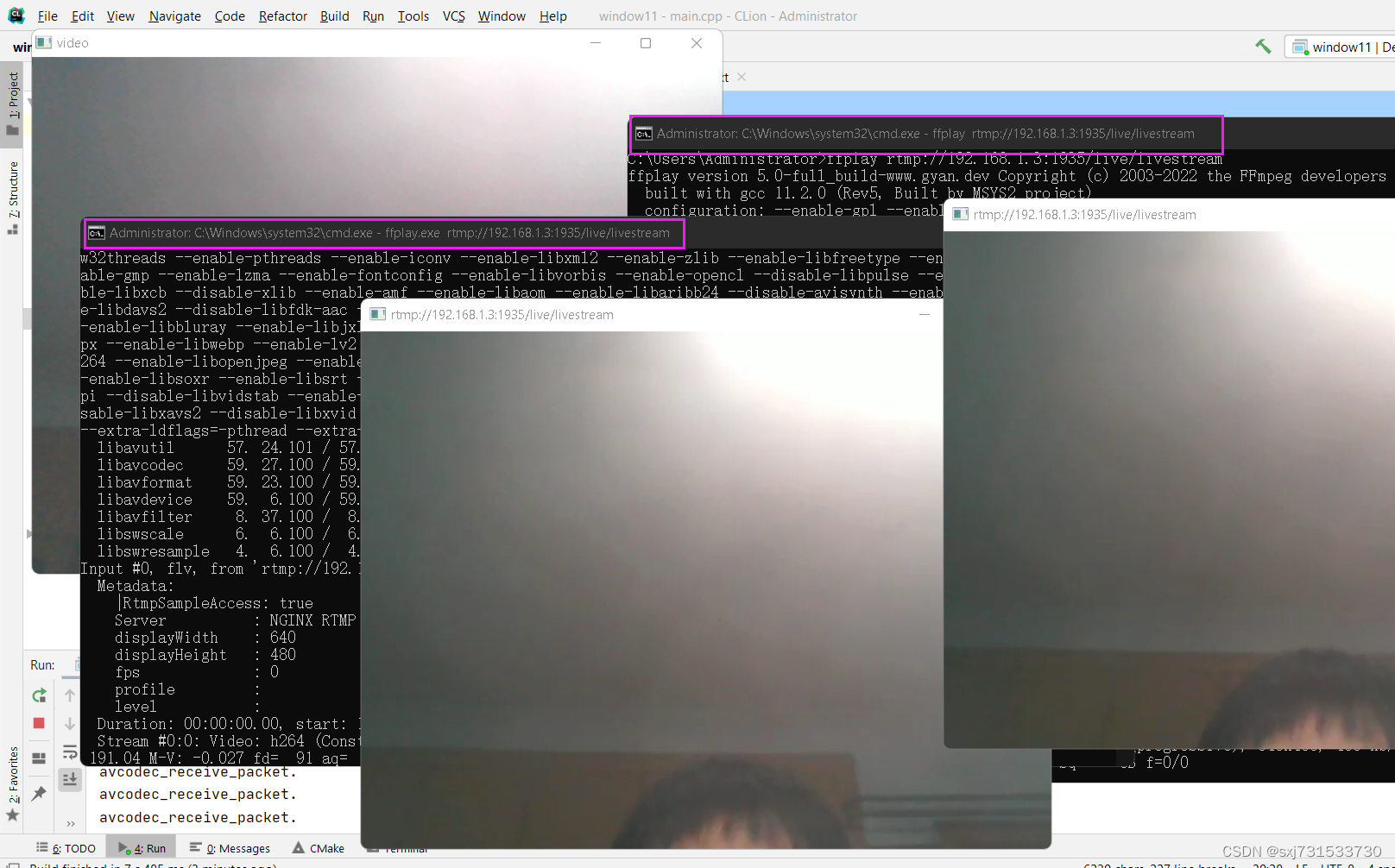

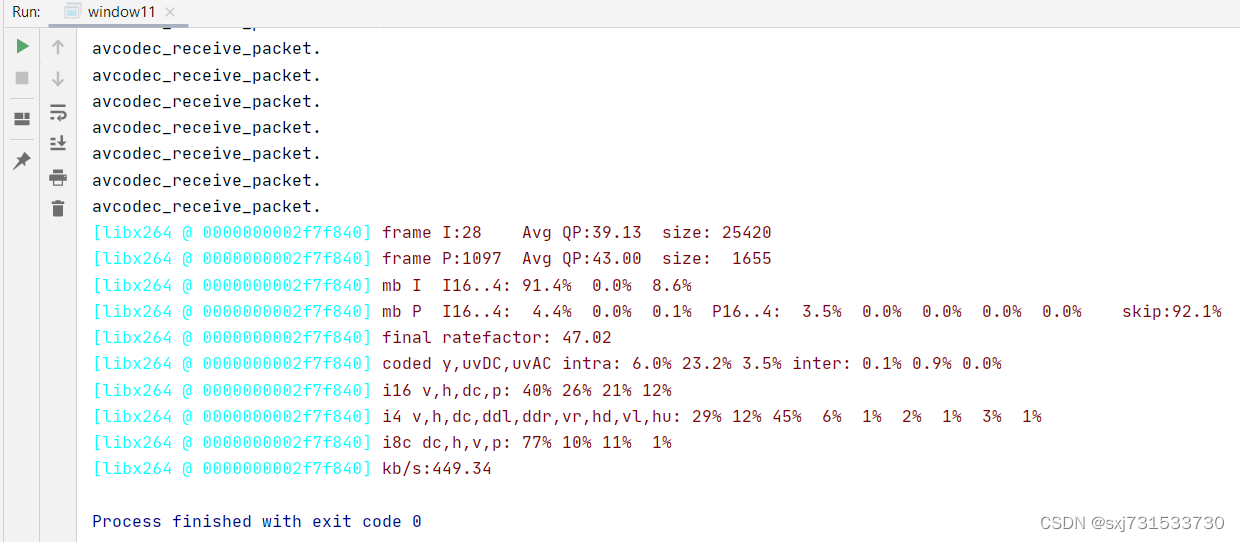

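The test went smoothly.

Pulling the stream works both with an ffplay.exe built with mingw64 and with the prebuilt ffplay.exe from the downloaded package.

Done.

With this in place, the MinGW32 compiler is quite pleasant to work with. So far it handles the deep learning frameworks ncnn, mnn, tnn, and tengine-lite; the libraries opencv, opencv-contrib, boost, curl, and nlohmann; the header-only libraries eigen, rapidjson, and spdlog; and ffmpeg, vulkan, and opencl. Those are only the ones I have used so far.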

References