
Operating system and dependencies

These steps start from a bare Ubuntu 18.04 machine.

sudo apt-get update

sudo apt-get upgrade

# Reboot the server

sudo apt-get install build-essential python3.8 python3.8-dev llvm-10 llvm-10-dev llvm-10-runtime llvm-10-tools python3-setuptools libtinfo-dev zlib1g-dev libedit-dev libxml2-dev liblapack-dev

# Download CMake

wget -c https://github.com/Kitware/CMake/releases/download/v3.22.5/cmake-3.22.5-linux-x86_64.tar.gz

tar -zxvf cmake-3.22.5-linux-x86_64.tar.gz

mkdir -p ~/.local && cp -R cmake-3.22.5-linux-x86_64/* ~/.local/

rm -rf cmake-3.22.5-linux-x86_64 cmake-3.22.5-linux-x86_64.tar.gz

# Append the following lines to the end of ~/.bashrc:

# export PATH=$HOME/.local/bin:$PATH

# export TVM_LOG_DEBUG="ir/transform.cc=1,relay/ir/transform.cc=1"

source ~/.bashrc

# The system python3 is version 3.6.9; symlink /usr/bin/python3.8 to ~/.local/bin/python3 so that it is found first on PATH

ln -s /usr/bin/python3.8 $HOME/.local/bin/python3

# Upgrade pip

python3 -m pip install --upgrade pip

# Reboot the server
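After the reboot it can help to confirm that the tools installed above are actually visible on PATH. The following is only a sketch: the helper names `missing_tools` and `python_is_at_least` are made up for illustration, and the tool list simply mirrors the packages installed in this section.

```python
import shutil
import sys

# Tools this guide expects on PATH after the setup steps above.
REQUIRED_TOOLS = ["cmake", "llvm-config-10", "python3"]


def missing_tools(names, which=shutil.which):
    """Return the tool names that `which` cannot find on PATH."""
    return [name for name in names if which(name) is None]


def python_is_at_least(required, actual=sys.version_info):
    """Return True when the interpreter version is at least `required`."""
    return tuple(actual[:2]) >= tuple(required)


if __name__ == "__main__":
    print("missing tools:", missing_tools(REQUIRED_TOOLS))
    print("python >= 3.8:", python_is_at_least((3, 8)))
```

Run it with `python3`; an empty list and `True` suggest that the PATH change and the symlink took effect.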

Download the source code

# cwd $HOME/workspace/tvm_workspace

git clone https://github.com/apache/tvm tvm

cd tvm

git submodule init

git submodule update

Build

# cwd $HOME/workspace/tvm_workspace/tvm

mkdir build

cd build

cp ../cmake/config.cmake .

# Edit the following settings in config.cmake

# set(USE_RELAY_DEBUG ON)

# set(USE_LLVM llvm-config-10)

cmake -DCMAKE_BUILD_TYPE="Debug" ..

make -j 4
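The two config.cmake settings can also be changed with a script instead of by hand. This is a minimal sketch, assuming the copied config.cmake contains lines of the form `set(USE_LLVM ...)`; the helper `set_cmake_option` is hypothetical and not part of TVM.

```python
import re


def set_cmake_option(text, key, value):
    """Return `text` with `set(<key> ...)` rewritten to `set(<key> <value>)`.

    Commented-out lines are left alone. If no matching line exists,
    a new `set(...)` line is appended at the end.
    """
    pattern = re.compile(
        r"^[ \t]*set\(\s*" + re.escape(key) + r"\s+[^)]*\)", re.MULTILINE
    )
    line = f"set({key} {value})"
    if pattern.search(text):
        # A function replacement avoids backslash interpretation in `value`.
        return pattern.sub(lambda _match: line, text)
    return text.rstrip("\n") + "\n" + line + "\n"


if __name__ == "__main__":
    sample = "set(USE_LLVM OFF)\nset(USE_RELAY_DEBUG OFF)\n"
    sample = set_cmake_option(sample, "USE_LLVM", "llvm-config-10")
    sample = set_cmake_option(sample, "USE_RELAY_DEBUG", "ON")
    print(sample)
```

To apply it to the real file, read build/config.cmake, pass its text through the function, and write the result back before running cmake.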

Configure the TVM Python module

# Add the following two environment variables to ~/.bashrc

# Set TVM_HOME to the TVM source directory

# export TVM_HOME=$HOME/workspace/tvm_workspace/tvm

# Add tvm/python to the Python module search path

# export PYTHONPATH=$TVM_HOME/python:$PYTHONPATH

source ~/.bashrc
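A quick way to confirm the two variables are set as intended is to inspect the environment from Python. The helper name `tvm_python_path_ok` below is an illustrative assumption; it only checks that PYTHONPATH contains `$TVM_HOME/python`.

```python
import os


def tvm_python_path_ok(env):
    """Check that PYTHONPATH in the mapping `env` contains $TVM_HOME/python."""
    tvm_home = env.get("TVM_HOME")
    if not tvm_home:
        return False
    expected = os.path.normpath(os.path.join(tvm_home, "python"))
    entries = env.get("PYTHONPATH", "").split(os.pathsep)
    return any(os.path.normpath(entry) == expected for entry in entries if entry)


if __name__ == "__main__":
    print("TVM python path configured:", tvm_python_path_ok(os.environ))
```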

Verify with the C++ tests

Install googletest

# cwd $HOME/workspace/tvm_workspace

git clone https://github.com/google/googletest

cd googletest

mkdir build

cd build

cmake -DBUILD_SHARED_LIBS=ON -DCMAKE_INSTALL_PREFIX=$HOME/.local ..

make -j 4

make install

Run TVM's C++ test script; if it finishes without errors, the build is working.

# cwd $HOME/workspace/tvm_workspace/tvm

./tests/scripts/task_cpp_unittest.sh
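"No errors" can be checked mechanically through the script's exit status. The sketch below is an illustration only; `command_succeeds` is a made-up helper, and it assumes the test script reports failures through a non-zero exit code.

```python
import subprocess
import sys


def command_succeeds(argv, cwd=None):
    """Run `argv` and return True when it exits with status 0."""
    completed = subprocess.run(argv, cwd=cwd)
    return completed.returncode == 0


if __name__ == "__main__":
    # Demonstrated with the current interpreter; for the real check,
    # pass ["./tests/scripts/task_cpp_unittest.sh"] and cwd set to the TVM source directory.
    print(command_succeeds([sys.executable, "-c", "raise SystemExit(0)"]))
```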

Install VS Code extensions

After connecting to the development server with the Remote SSH extension, install the following extensions:

- Python Extension Pack

- C/C++ Extension Pack

- CodeLLDB

- FFI Navigator

After installation, ignore the error reported by the FFI Navigator extension and install its Python module with the following command:

pip3 install --user git+https://github.com/tqchen/ffi-navigator.git#subdirectory=python

Then restart VS Code.
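If the extension still reports errors, one thing to check is whether the Python module is importable by the interpreter VS Code uses. This is a small sketch; the module name `ffi_navigator` is an assumption about what the package installs, and `is_importable` is a helper invented for this example.

```python
import importlib.util


def is_importable(module_name):
    """Return True when the top-level module `module_name` can be found."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # "ffi_navigator" is the assumed import name of the FFI Navigator package.
    print("ffi_navigator importable:", is_importable("ffi_navigator"))
```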

Compile and optimize a model through the Python interface

Install Python modules

# Install the required Python modules, including those needed for auto-tuning

pip3 install --user cython

pip3 install --user numpy

pip3 install --user decorator attrs tornado psutil

pip3 install --user pybind11 pythran

pip3 install --user xgboost cloudpickle ninja pytest

pip3 install --user torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cpu

pip3 install --user onnx onnxruntime

# onnx pulls in the protobuf module as a dependency

# protoc must be updated so that the two versions match

pip3 show protobuf

# Mine shows Version: 3.20.1

/usr/bin/protoc --version

# Mine shows

# Download protoc 3.20.1 from https://github.com/protocolbuffers/protobuf/releases

wget -c https://github.com/protocolbuffers/protobuf/releases/download/v3.20.1/protoc-3.20.1-linux-x86_64.zip

mkdir protoc

mv protoc-3.20.1-linux-x86_64.zip protoc/

cd protoc

unzip protoc-3.20.1-linux-x86_64.zip

cp -R bin include ~/.local/

cd ..

rm -rf protoc

pip3 uninstall onnx onnxruntime

pip3 install --user onnx onnxruntime
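The version comparison between the protobuf Python package and protoc can be scripted. The sketch below assumes that `pip3 show protobuf` prints a `Version:` line and that `protoc --version` prints something like `libprotoc 3.20.1`; `versions_match` is a hypothetical helper. Exact string equality fits the 3.20.1 case used here, but newer protobuf releases number the Python package and protoc differently, so treat it as an illustration.

```python
import re

VERSION_RE = re.compile(r"(\d+(?:\.\d+)+)")


def extract_version(text):
    """Return the first dotted version number found in `text`, or None."""
    match = VERSION_RE.search(text)
    return match.group(1) if match else None


def versions_match(pip_show_output, protoc_output):
    """Compare the `Version:` line of pip output with the protoc version."""
    pip_version = None
    for line in pip_show_output.splitlines():
        if line.startswith("Version:"):
            pip_version = extract_version(line)
    protoc_version = extract_version(protoc_output)
    return pip_version is not None and pip_version == protoc_version


if __name__ == "__main__":
    print(versions_match("Name: protobuf\nVersion: 3.20.1\n", "libprotoc 3.20.1"))
```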

Use TVM's Python API to complete the following tasks:

- Compile a pre-trained ResNet-50 v2 model for the TVM runtime.

- Run a real image through the compiled model, and interpret the output and model performance.

- Tune the model on a CPU using TVM.

- Re-compile an optimized model using the tuning data collected by TVM.

- Run the image through the optimized model, and compare the output and model performance.

The following is the content of test.py, the Python file that performs these tasks:

import onnx

from tvm.contrib.download import download_testdata

from PIL import Image

import numpy as np

import tvm.relay as relay

import tvm

from tvm.contrib import graph_executor

# ============================== Load the ONNX model ================================

model_path="/home/ubuntu/workspace/tvm_workspace/tvm/.vscode/ws/resnet50-v2-7.onnx"

onnx_model = onnx.load(model_path)

# Seed numpy's RNG to get consistent results

np.random.seed(0)

# ============================= Download, preprocess, and load the test image ==========================

img_path = "/home/ubuntu/workspace/tvm_workspace/tvm/.vscode/ws/kitten.jpg"

# Resize it to 224x224

resized_image = Image.open(img_path).resize((224, 224))

img_data = np.asarray(resized_image).astype("float32")

# Our input image is in HWC layout while ONNX expects CHW input, so convert the array

img_data = np.transpose(img_data, (2, 0, 1))

# Normalize according to the ImageNet input specification

imagenet_mean = np.array([0.485, 0.456, 0.406]).reshape((3, 1, 1))

imagenet_stddev = np.array([0.229, 0.224, 0.225]).reshape((3, 1, 1))

norm_img_data = (img_data / 255 - imagenet_mean) / imagenet_stddev

# Add the batch dimension, as we are expecting 4-dimensional input: NCHW.

img_data = np.expand_dims(norm_img_data, axis=0)

# =============================== Compile the model with Relay ==============================

target = "llvm"

# The input name may vary across model types. You can use a tool

# like Netron to check input names

input_name = "data"

shape_dict = {input_name: img_data.shape}

mod, params = relay.frontend.from_onnx(onnx_model, shape_dict)

with tvm.transform.PassContext(opt_level=3):
    lib = relay.build(mod, target=target, params=params)

dev = tvm.device(str(target), 0)

module = graph_executor.GraphModule(lib["default"](dev))

# ============================= Execute on the TVM runtime ================================

dtype = "float32"

module.set_input(input_name, img_data)

module.run()

output_shape = (1, 1000)

tvm_output = module.get_output(0, tvm.nd.empty(output_shape)).numpy()

# ============================= Collect basic performance data =================================

import timeit

timing_number = 10

timing_repeat = 10

# Per-run time in milliseconds for each repeat
unoptimized = (
    np.array(timeit.Timer(lambda: module.run()).repeat(repeat=timing_repeat, number=timing_number))
    * 1000
    / timing_number
)

unoptimized = {
    "mean": np.mean(unoptimized),
    "median": np.median(unoptimized),
    "std": np.std(unoptimized),
}

print(unoptimized)

# ============================ Postprocess the output ========================================

from scipy.special import softmax

labels_path = "/home/ubuntu/workspace/tvm_workspace/tvm/.vscode/ws/synset.txt"

with open(labels_path, "r") as f:
    labels = [l.rstrip() for l in f]

# Open the output and read the output tensor

scores = softmax(tvm_output)

scores = np.squeeze(scores)

ranks = np.argsort(scores)[::-1]

for rank in ranks[0:5]:
    print("class='%s' with probability=%f" % (labels[rank], scores[rank]))

# =================================== Tune the model ===================================

import tvm.auto_scheduler as auto_scheduler

from tvm.autotvm.tuner import XGBTuner

from tvm import autotvm

number = 10

repeat = 1

min_repeat_ms = 0 # since we're tuning on a CPU, can be set to 0

timeout = 10 # in seconds

# create a TVM runner

runner = autotvm.LocalRunner(
    number=number,
    repeat=repeat,
    timeout=timeout,
    min_repeat_ms=min_repeat_ms,
    enable_cpu_cache_flush=True,
)

# ========================= Define the search algorithm and tuning options ==============================

tuning_option = {
    "tuner": "xgb",
    "trials": 20,
    "early_stopping": 100,
    "measure_option": autotvm.measure_option(
        builder=autotvm.LocalBuilder(build_func="default"), runner=runner
    ),
    "tuning_records": "resnet-50-v2-autotuning.json",
}

# begin by extracting the tasks from the onnx model

tasks = autotvm.task.extract_from_program(mod["main"], target=target, params=params)

# Tune the extracted tasks sequentially.

for i, task in enumerate(tasks):
    prefix = "[Task %2d/%2d] " % (i + 1, len(tasks))
    tuner_obj = XGBTuner(task, loss_type="rank")
    tuner_obj.tune(
        n_trial=min(tuning_option["trials"], len(task.config_space)),
        early_stopping=tuning_option["early_stopping"],
        measure_option=tuning_option["measure_option"],
        callbacks=[
            autotvm.callback.progress_bar(tuning_option["trials"], prefix=prefix),
            autotvm.callback.log_to_file(tuning_option["tuning_records"]),
        ],
    )

# ========================= Compile the optimized model with the tuning data ================================

with autotvm.apply_history_best(tuning_option["tuning_records"]):
    with tvm.transform.PassContext(opt_level=3, config={}):
        lib = relay.build(mod, target=target, params=params)

dev = tvm.device(str(target), 0)

module = graph_executor.GraphModule(lib["default"](dev))

# =========================== Verify that the optimized model runs and produces the same result =====================

dtype = "float32"

module.set_input(input_name, img_data)

module.run()

output_shape = (1, 1000)

tvm_output = module.get_output(0, tvm.nd.empty(output_shape)).numpy()

scores = softmax(tvm_output)

scores = np.squeeze(scores)

ranks = np.argsort(scores)[::-1]

for rank in ranks[0:5]:
    print("class='%s' with probability=%f" % (labels[rank], scores[rank]))

# ============================ Compare the tuned and untuned models ===============================

timing_number = 10

timing_repeat = 10

optimized = (
    np.array(timeit.Timer(lambda: module.run()).repeat(repeat=timing_repeat, number=timing_number))
    * 1000
    / timing_number
)

optimized = {"mean": np.mean(optimized), "median": np.median(optimized), "std": np.std(optimized)}

print("optimized: %s" % (optimized))

print("unoptimized: %s" % (unoptimized))
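The two dictionaries printed at the end hold the mean, median, and standard deviation of the per-run time in milliseconds. To make the comparison explicit, a speedup factor can be derived from them. The following standard-library sketch mirrors that summary; `summarize` and `speedup` are names chosen for this example, and the sample timings are invented rather than measured.

```python
import statistics


def summarize(times_ms):
    """Summarize per-run times (ms) the same way test.py does with NumPy."""
    return {
        "mean": statistics.fmean(times_ms),
        "median": statistics.median(times_ms),
        # np.std defaults to the population standard deviation, as does pstdev.
        "std": statistics.pstdev(times_ms),
    }


def speedup(unoptimized, optimized):
    """Ratio of mean times; values above 1.0 mean the tuned model is faster."""
    return unoptimized["mean"] / optimized["mean"]


if __name__ == "__main__":
    before = summarize([10.0, 12.0, 11.0])  # made-up numbers for illustration
    after = summarize([5.0, 6.0, 5.5])
    print("speedup: %.2fx" % speedup(before, after))
```

With only 20 trials per task, as configured in this script, the tuned model is not guaranteed to be faster, so a ratio near or below 1.0 is possible.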

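Configure the project

The rest of this post assumes that you have connected to the remote server with the VS Code Remote SSH extension and opened the TVM project.

Ctrl + ` opens a terminal in VS Code.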

# cwd $HOME/workspace/tvm_workspace/tvm

mkdir -p .vscode/ws

# .vscode/ws is my working directory

# Put test.py in .vscode/ws

# Download the resnet50-v2-7.onnx model and put it in .vscode/ws

# https://media.githubusercontent.com/media/onnx/models/main/vision/classification/resnet/model/resnet50-v2-7.onnx

# Download the kitten.jpg image and put it in .vscode/ws

# https://s3.amazonaws.com/model-server/inputs/kitten.jpg

# Download synset.txt and put it in .vscode/ws

# https://s3.amazonaws.com/onnx-model-zoo/synset.txt
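The three downloads can be scripted as well. This sketch uses only the standard library and the URLs listed above; the helper names `plan_downloads` and `fetch_missing` are assumptions made for this example, and the URLs are taken as given without checking that they are still reachable.

```python
import os
import urllib.request

ASSET_URLS = [
    "https://media.githubusercontent.com/media/onnx/models/main/vision/classification/resnet/model/resnet50-v2-7.onnx",
    "https://s3.amazonaws.com/model-server/inputs/kitten.jpg",
    "https://s3.amazonaws.com/onnx-model-zoo/synset.txt",
]


def plan_downloads(ws_dir, urls=ASSET_URLS):
    """Map each URL to its destination path inside `ws_dir`."""
    return {url: os.path.join(ws_dir, url.rsplit("/", 1)[-1]) for url in urls}


def fetch_missing(ws_dir, urls=ASSET_URLS):
    """Download every asset that is not already present in `ws_dir`."""
    os.makedirs(ws_dir, exist_ok=True)
    for url, dest in plan_downloads(ws_dir, urls).items():
        if not os.path.exists(dest):
            urllib.request.urlretrieve(url, dest)


if __name__ == "__main__":
    for url, dest in plan_downloads(".vscode/ws").items():
        print(dest, "<-", url)
```

Calling `fetch_missing(".vscode/ws")` from the TVM source directory performs the actual downloads.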

.vscode/launch.json

{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Python: Current File",
            "type": "python",
            "request": "launch",
            "program": "${file}",
            "console": "integratedTerminal",
            "justMyCode": true,
            "cwd": "${workspaceFolder}/.vscode/ws"
        }
    ]
}

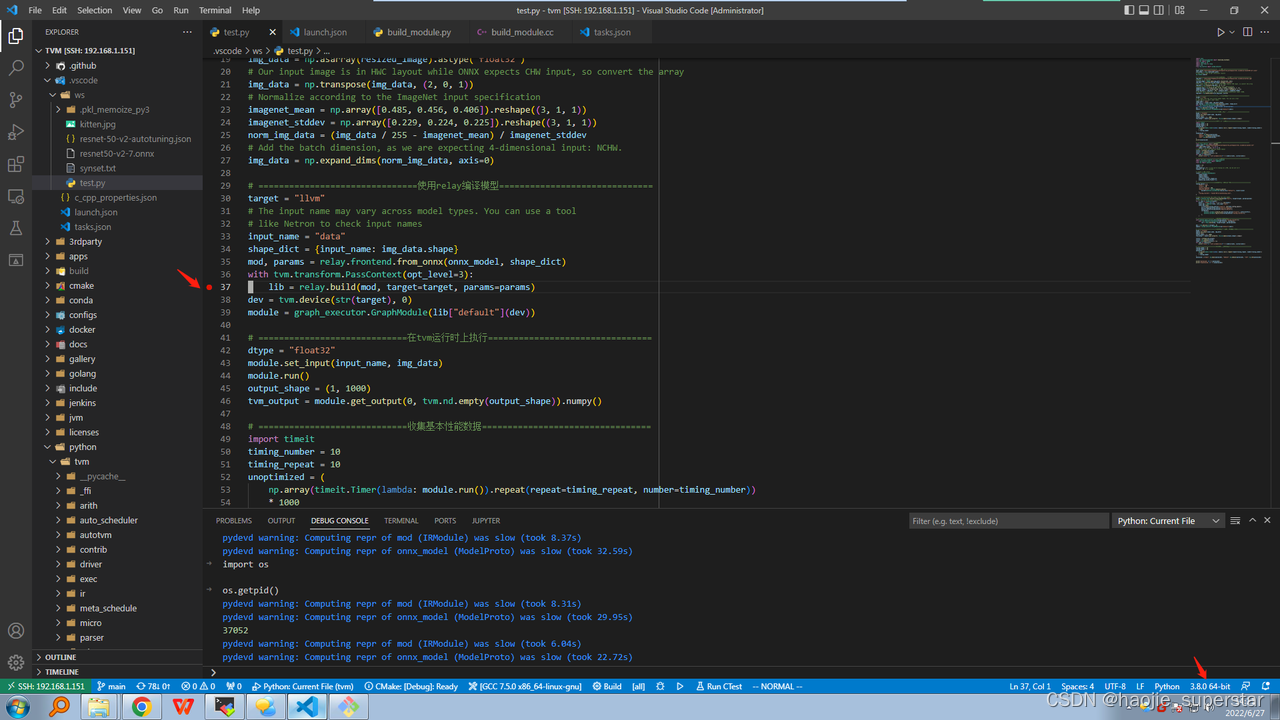

Run the project

Open test.py and press F5 to run the program.

Test jumping between Python and C++

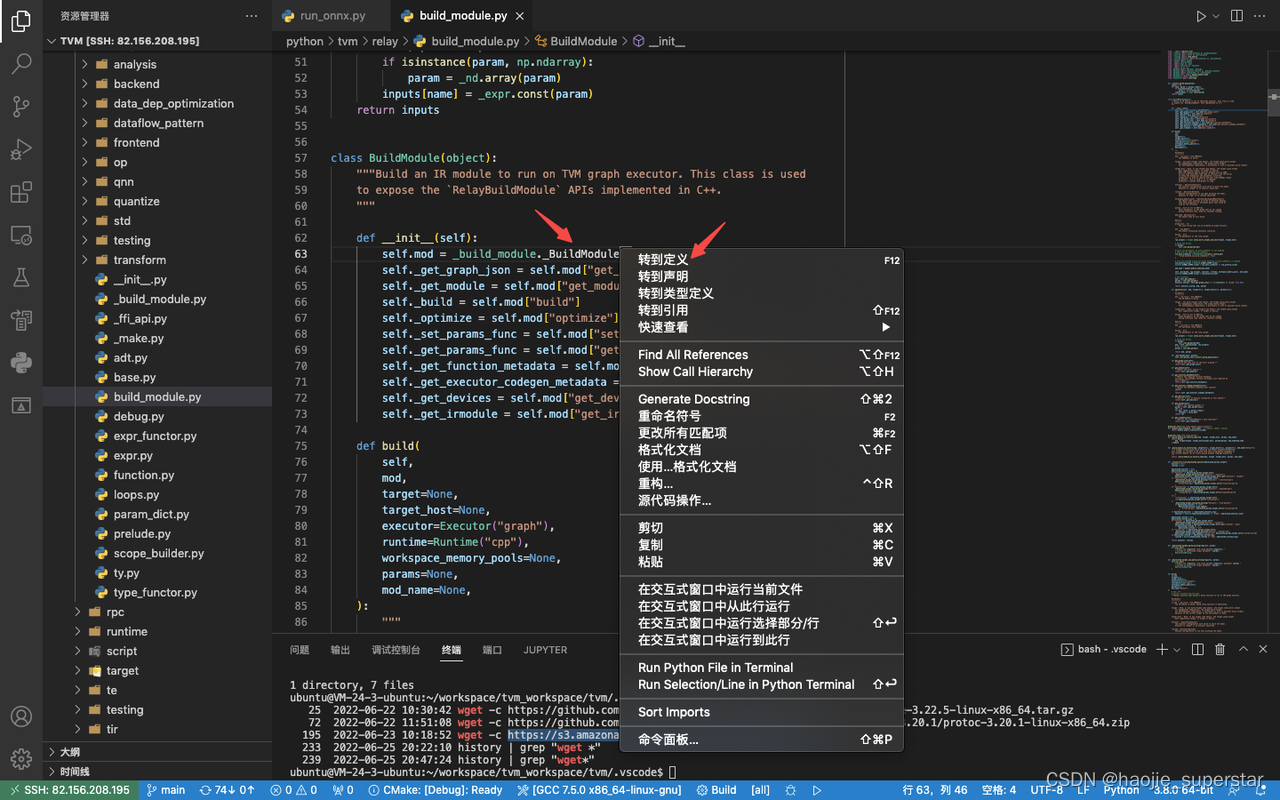

Jump from Python to C++

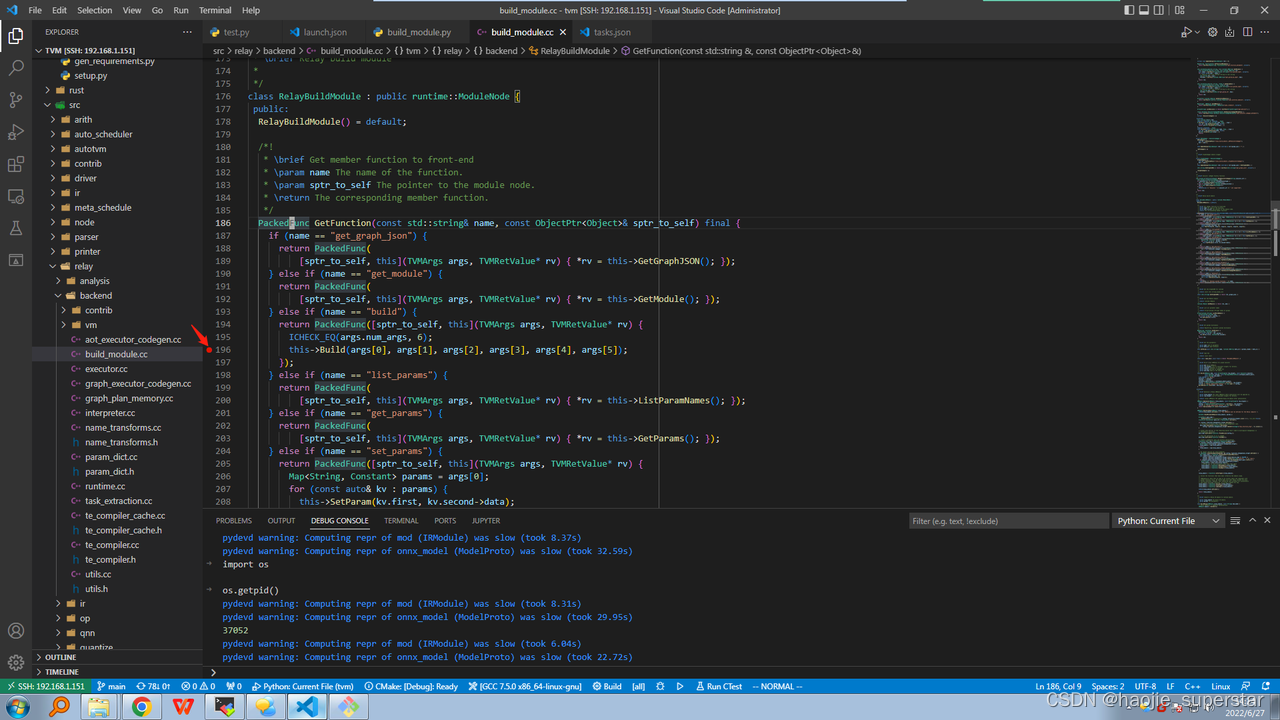

Open python/tvm/relay/build_module.py and go to the following location:

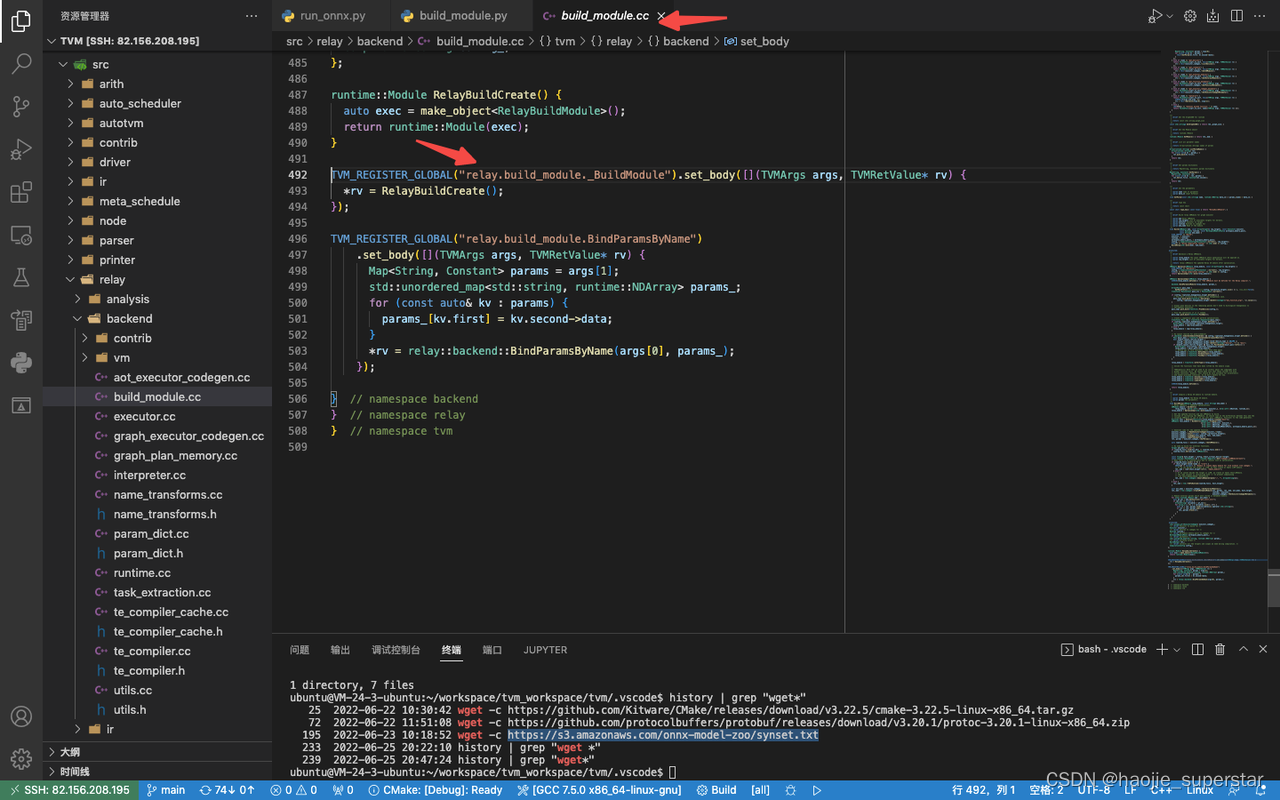

After clicking Go to Definition, the editor jumps to src/relay/backend/build_module.cc at the location below, so the jump succeeded:

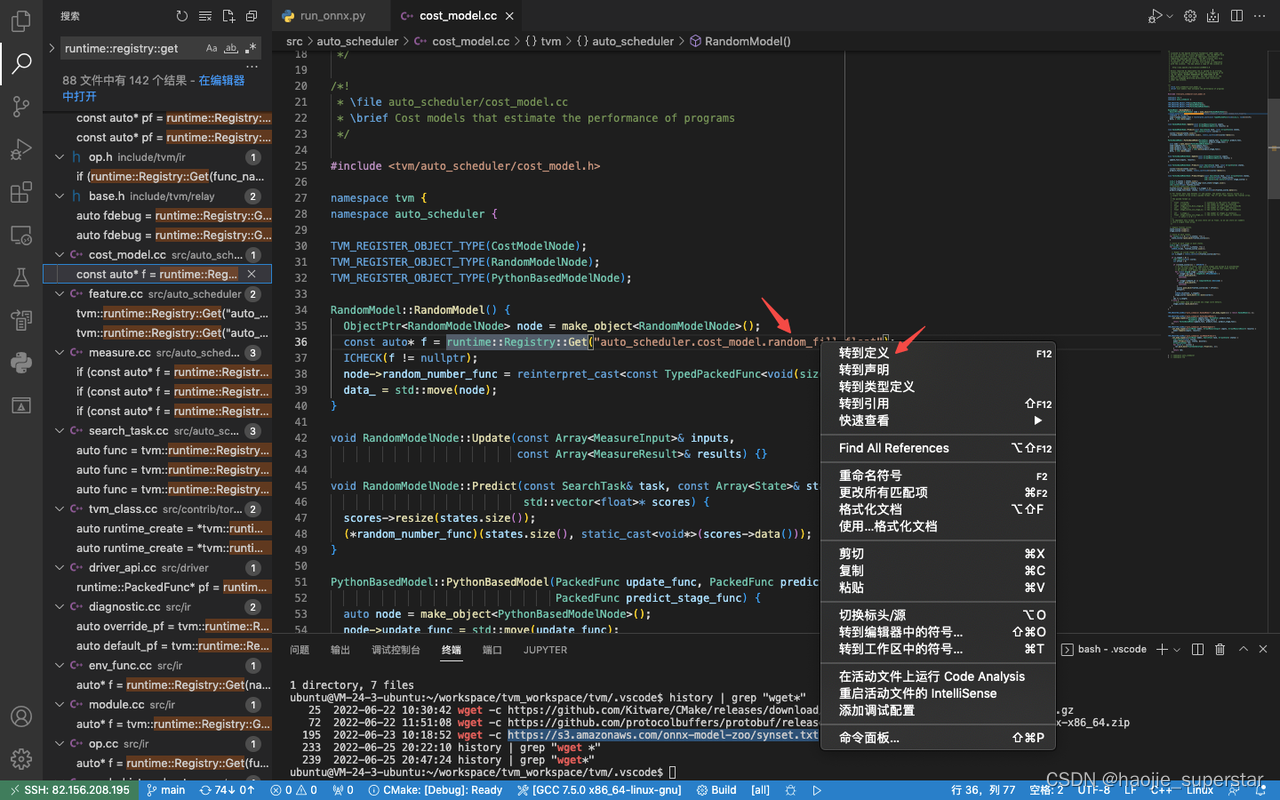

Jump from C++ to Python

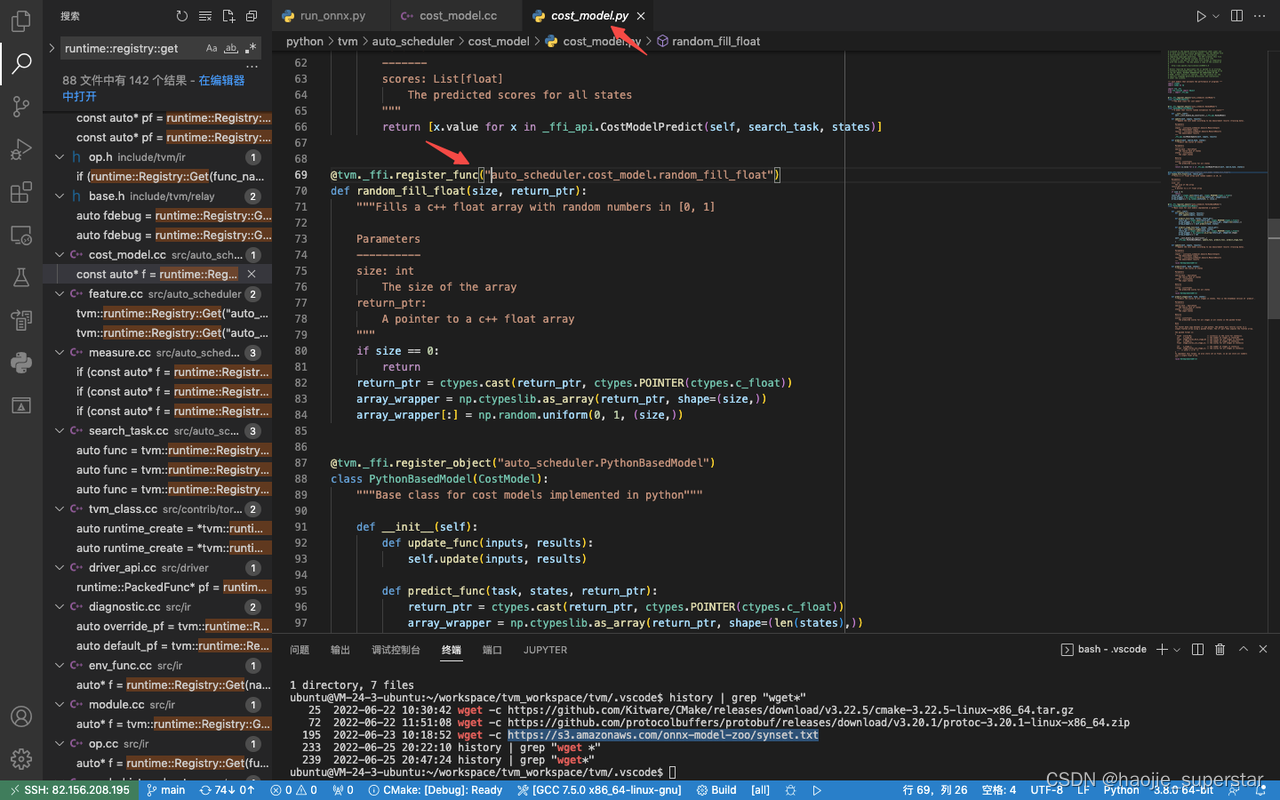

Open src/auto_scheduler/cost_model.cc and go to the following location:

After clicking Go to Definition, the editor jumps to python/tvm/auto_scheduler/cost_model/cost_model.py at the location below, so the jump succeeded:

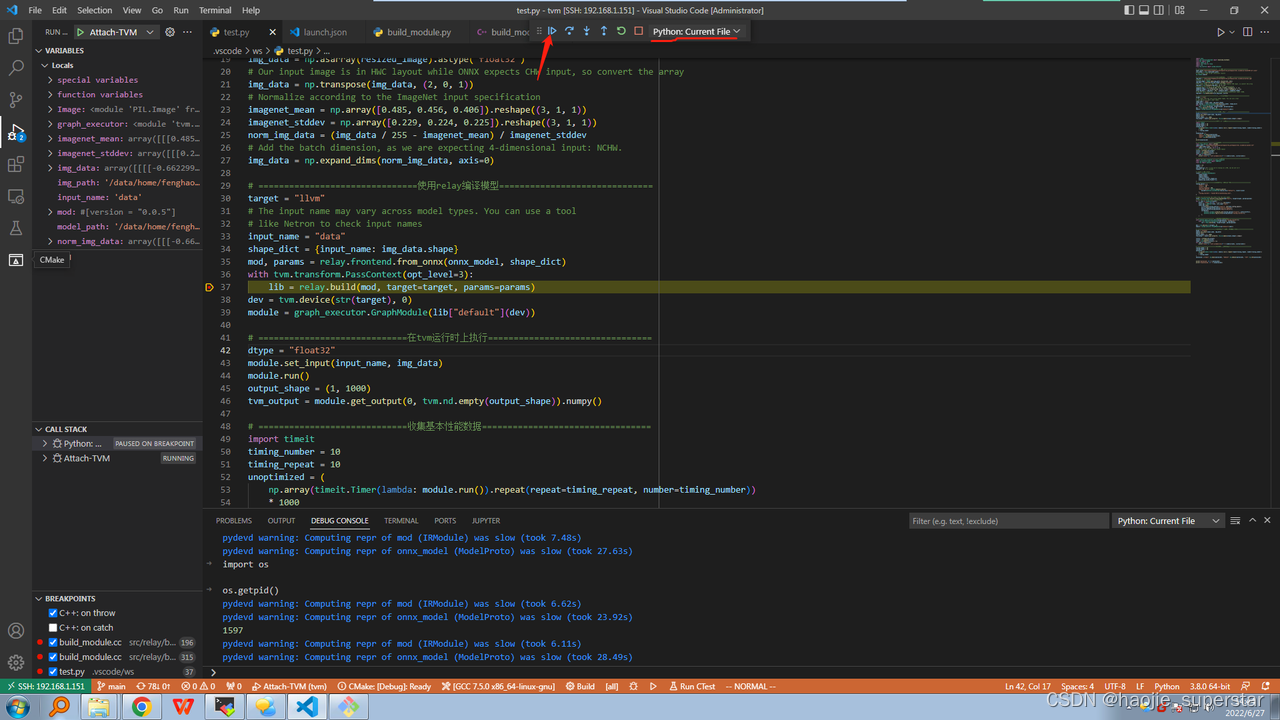

Debug test.py and hit breakpoints in the C++ code

The account needs sudo privileges.

In VS Code, Ctrl + P opens fuzzy file search.

.vscode/launch.json

{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Python: Current File",
            "type": "python",
            "request": "launch",
            "program": "${file}",
            "console": "integratedTerminal",
            "justMyCode": true,
            "cwd": "${workspaceFolder}/.vscode/ws",
            "preLaunchTask": "myShellCmd"
        },
        {
            "type": "lldb",
            "request": "attach",
            "name": "Attach-TVM",
            "pid": "${command:pickMyProcess}"
        }
    ]
}

.vscode/tasks.json

{
    "version": "2.0.0",
    "tasks": [
        {
            "label": "myShellCmd",
            "type": "shell",
            "command": "echo 0 | sudo tee /proc/sys/kernel/yama/ptrace_scope"
        }
    ]
}
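The pre-launch task writes 0 to /proc/sys/kernel/yama/ptrace_scope, which relaxes the Yama restriction so that lldb can attach to the already running Python process; this is why sudo privileges are needed. The sketch below reads the current value back. The helper names are made up for this example, and the file only exists on Linux kernels with Yama enabled.

```python
def attach_allowed(ptrace_scope_text):
    """Return True when the ptrace_scope value is 0 (classic ptrace rules)."""
    return ptrace_scope_text.strip() == "0"


def read_ptrace_scope(path="/proc/sys/kernel/yama/ptrace_scope"):
    """Return the file contents, or None if the file cannot be read."""
    try:
        with open(path) as f:
            return f.read()
    except OSError:
        return None


if __name__ == "__main__":
    value = read_ptrace_scope()
    if value is None:
        print("ptrace_scope not available on this system")
    else:
        print("attach allowed:", attach_allowed(value))
```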

.vscode/c_cpp_properties.json

{
    "configurations": [
        {
            "name": "Linux",
            "includePath": [
                "${workspaceFolder}/include"
            ],
            "defines": [],
            "compilerPath": "/usr/bin/gcc",
            "cStandard": "c11",
            "cppStandard": "gnu++14",
            "intelliSenseMode": "linux-gcc-x64",
            "configurationProvider": "ms-vscode.cmake-tools"
        }
    ],
    "version": 4
}

Debugging

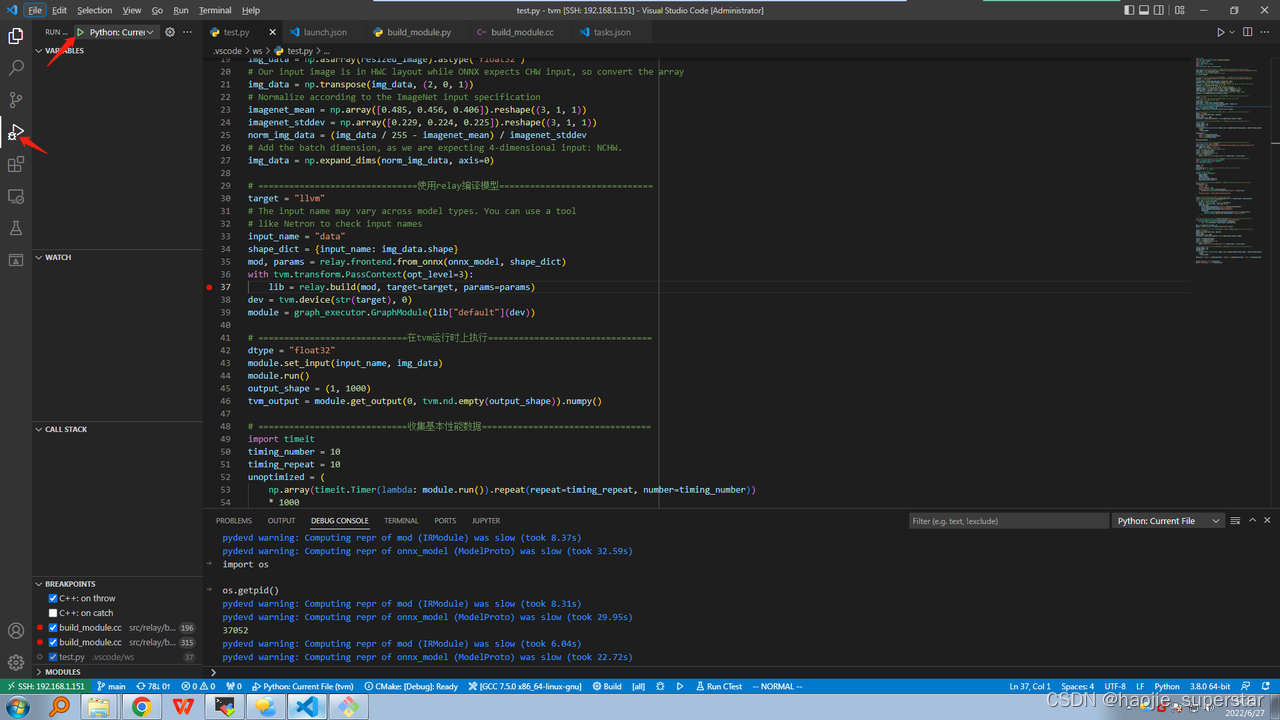

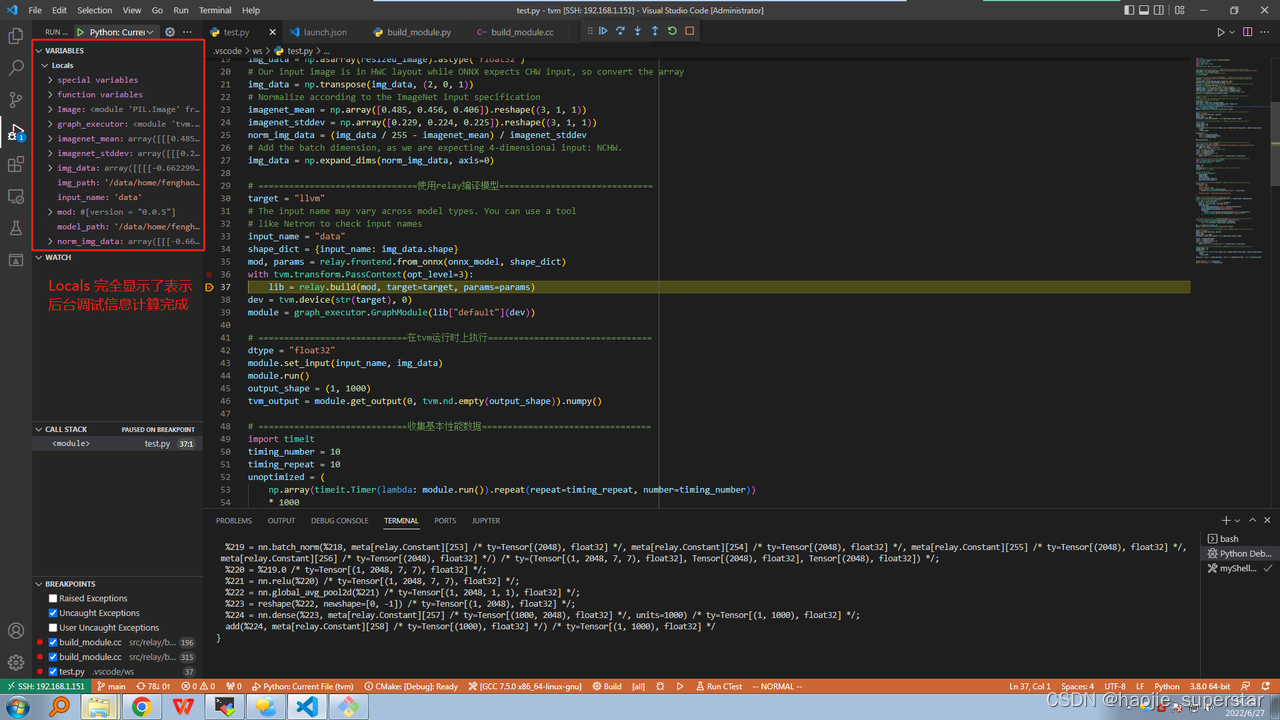

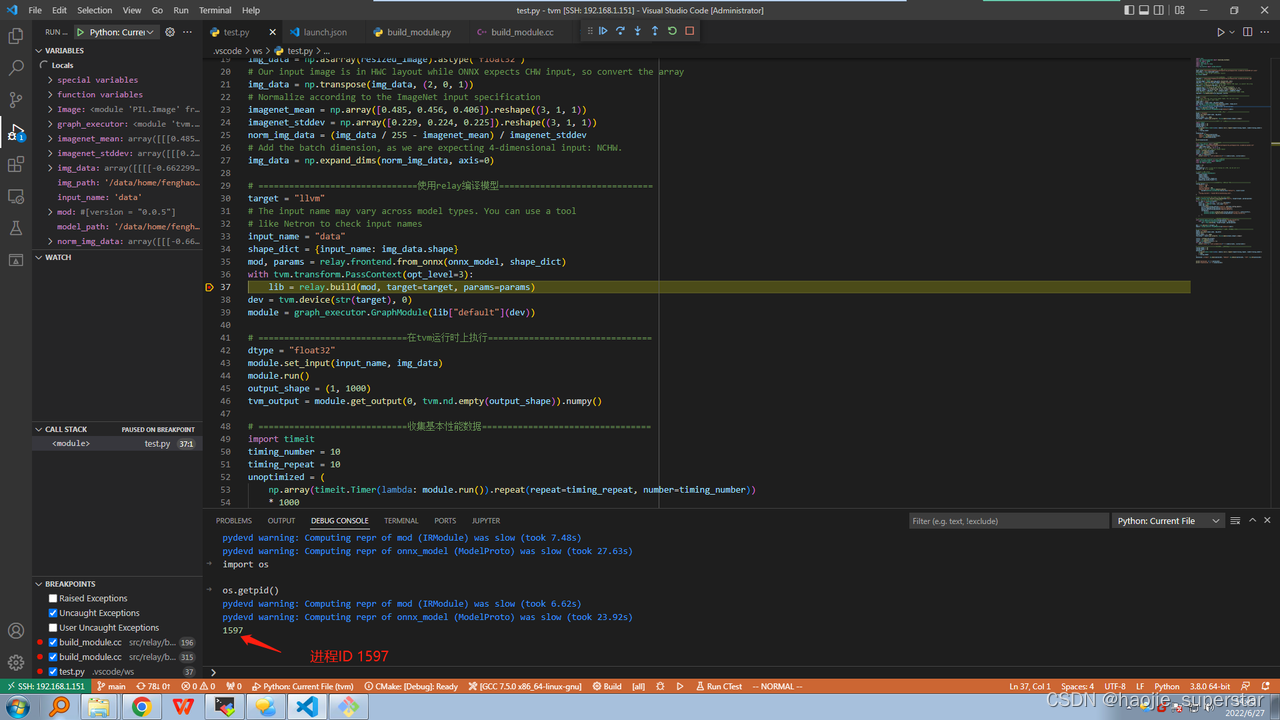

Set a breakpoint on the relay.build line in test.py:

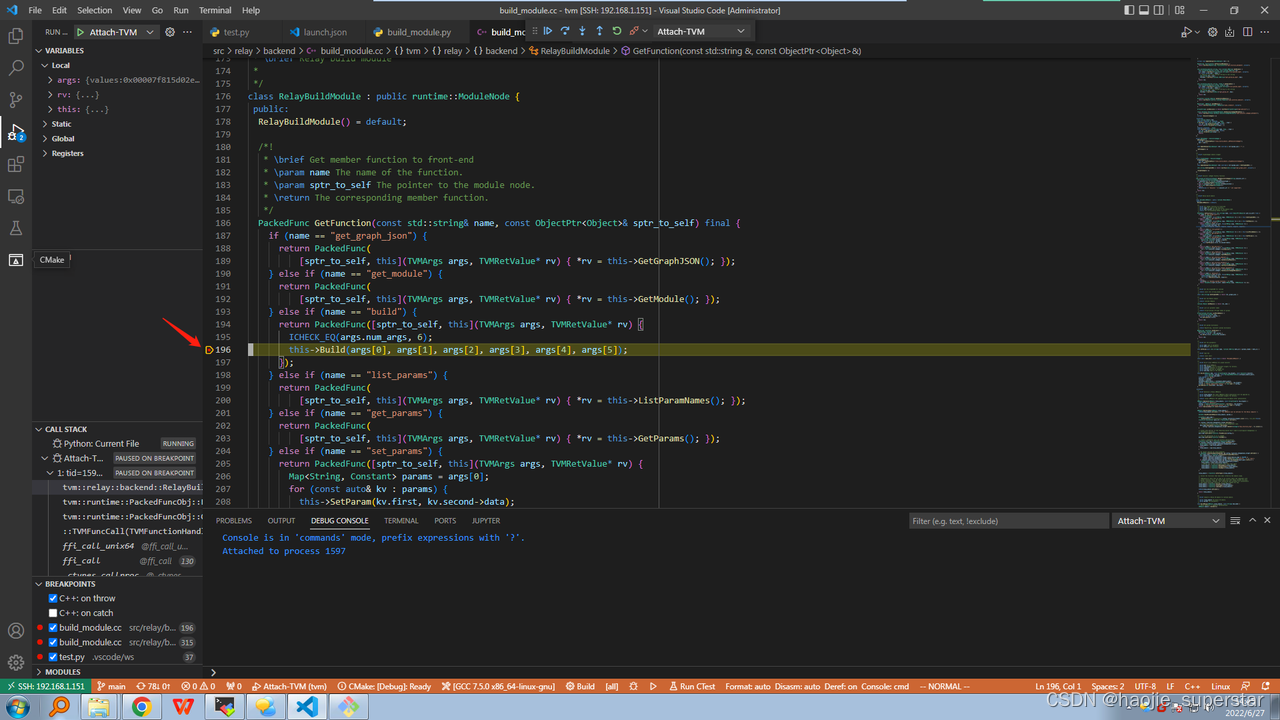

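Set a breakpoint on the build line in src/relay/backend/build_module.cc:

Switch to test.py and click the green triangle to start debugging:

The breakpoint in test.py is hit. At this point, wait for the debugger to finish computing debug information in the background: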

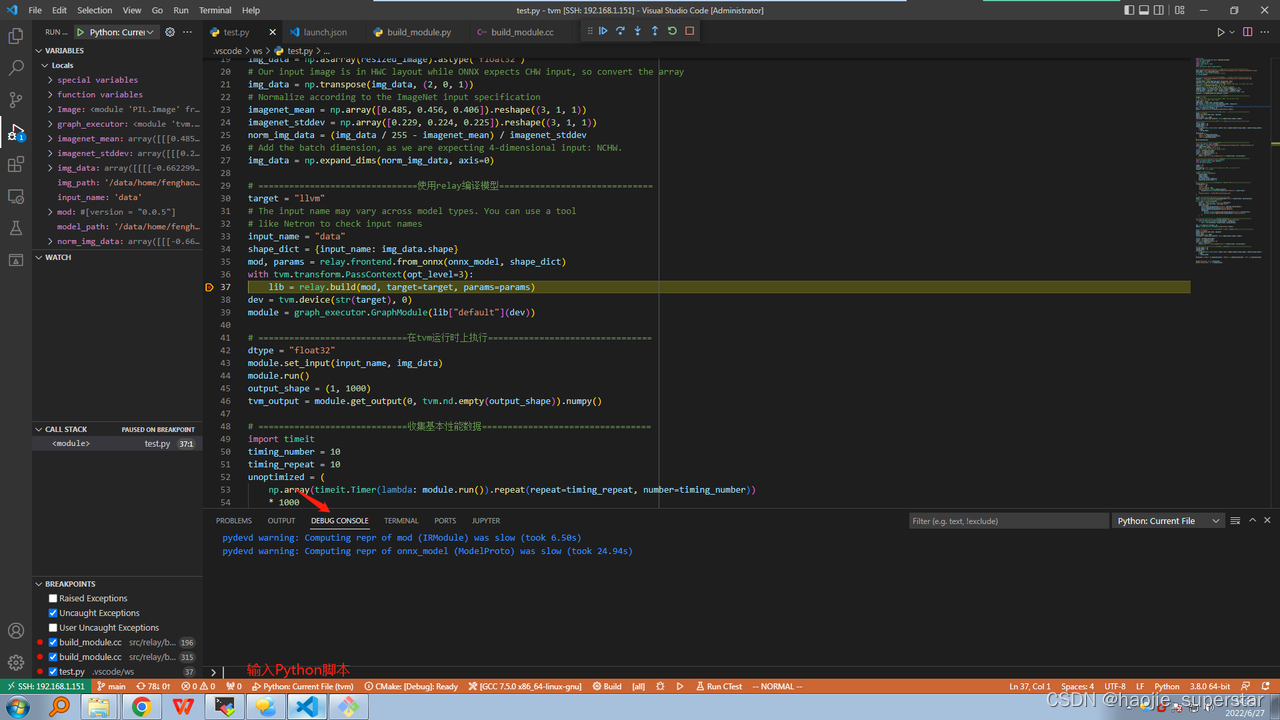

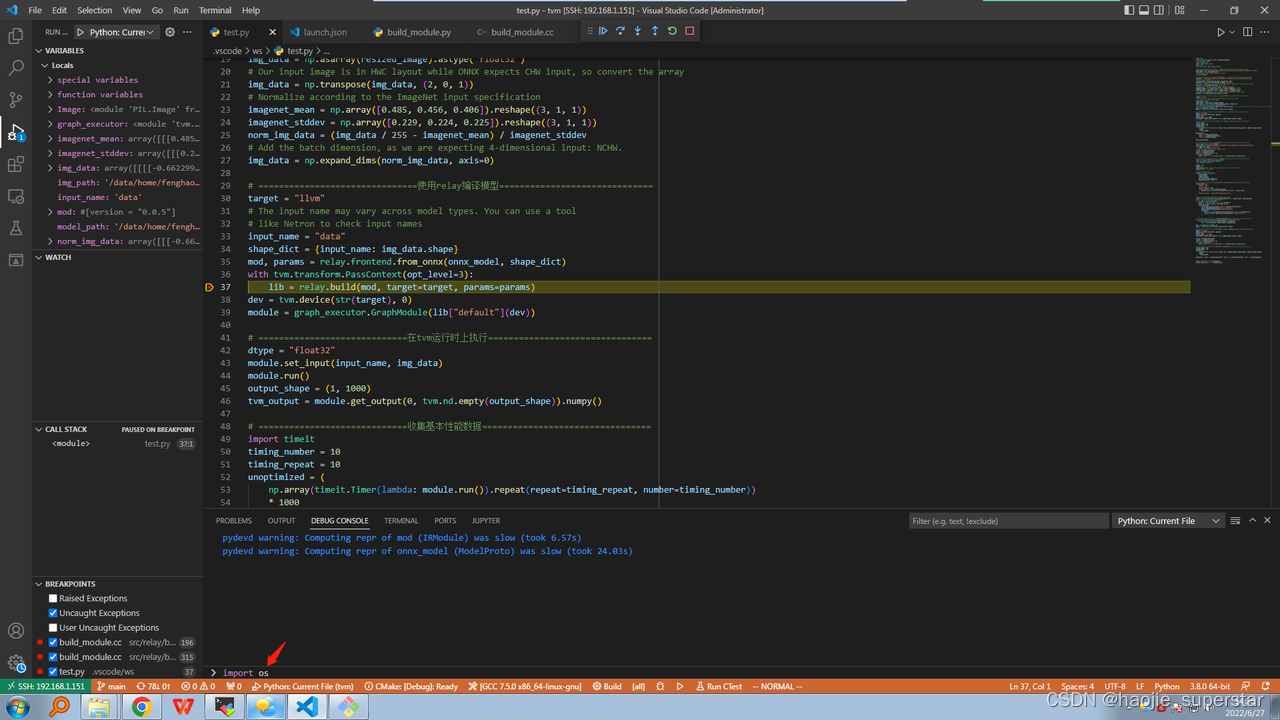

Click DEBUG CONSOLE in the output panel and use a Python snippet to get the ID of the process being debugged:

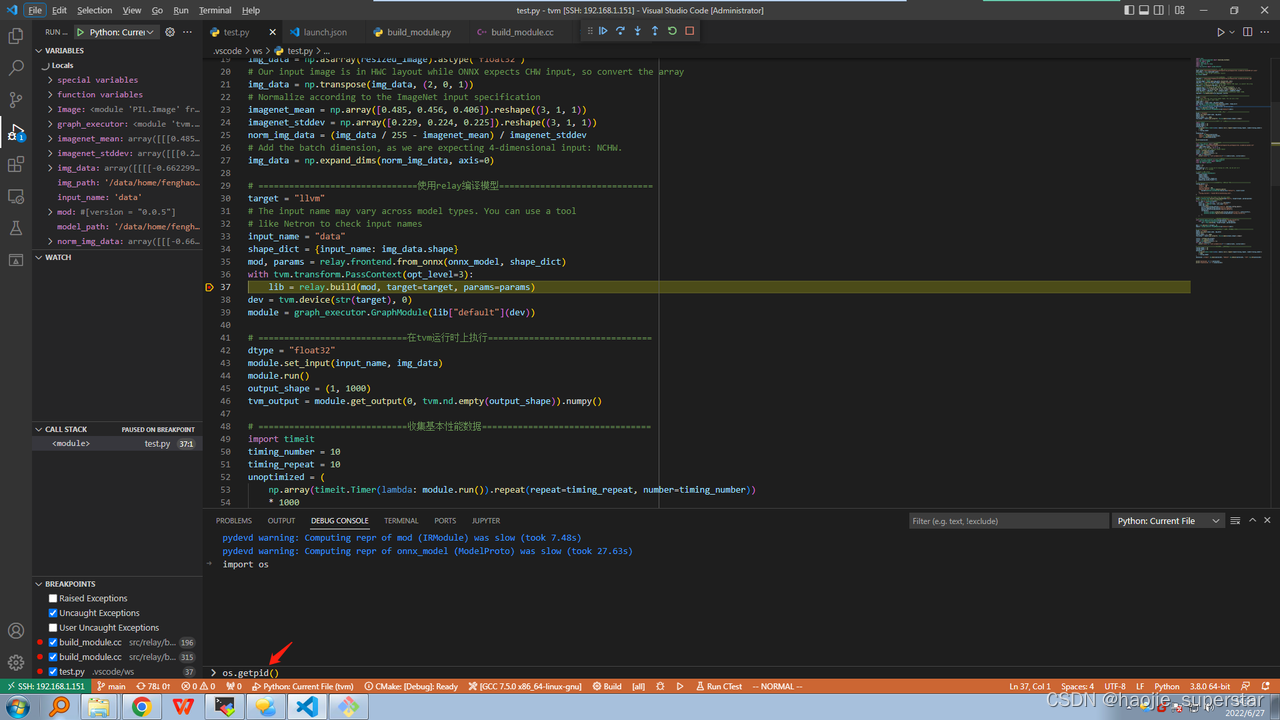

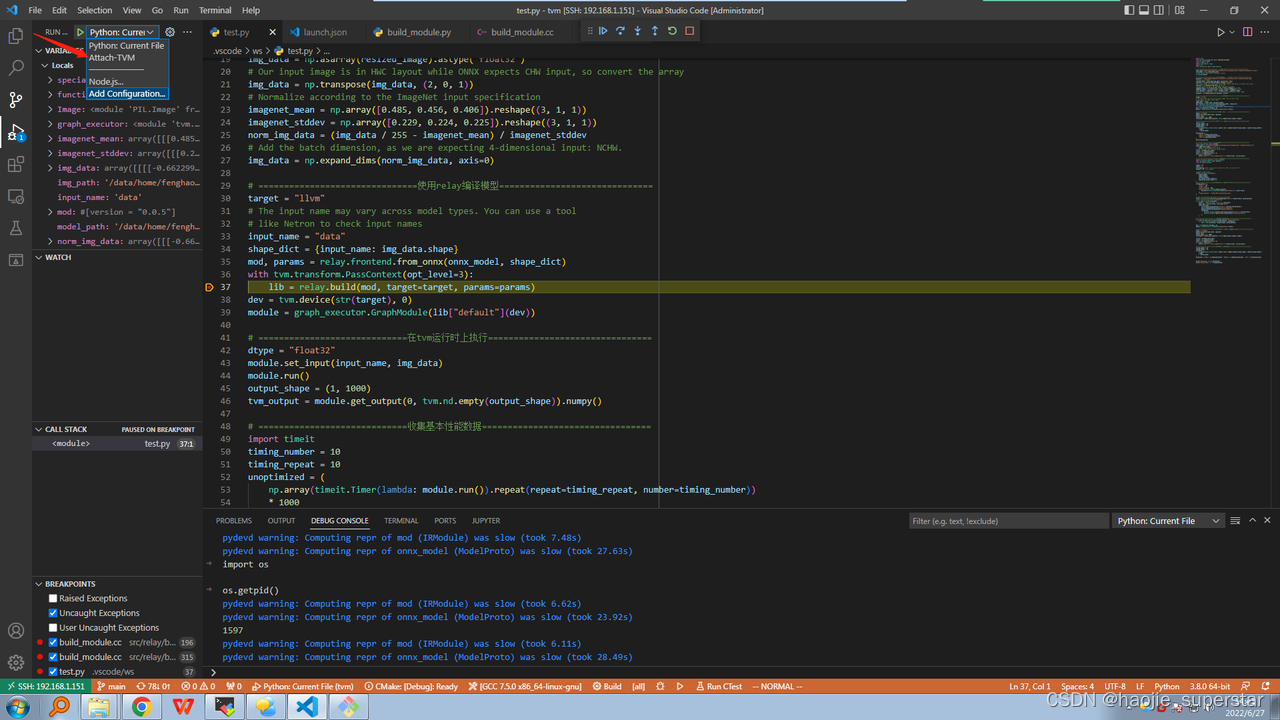
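The screenshots are not reproduced in text, so the exact snippet they show is unknown. A minimal way to get the process ID from the Debug Console, offered here as an assumption about what was typed, is:

```python
import os

# The ID of the Python process currently paused in the debugger;
# this is the number to enter when lldb asks which process to attach to.
pid = os.getpid()
print(pid)
```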

Switch to the lldb debugger:

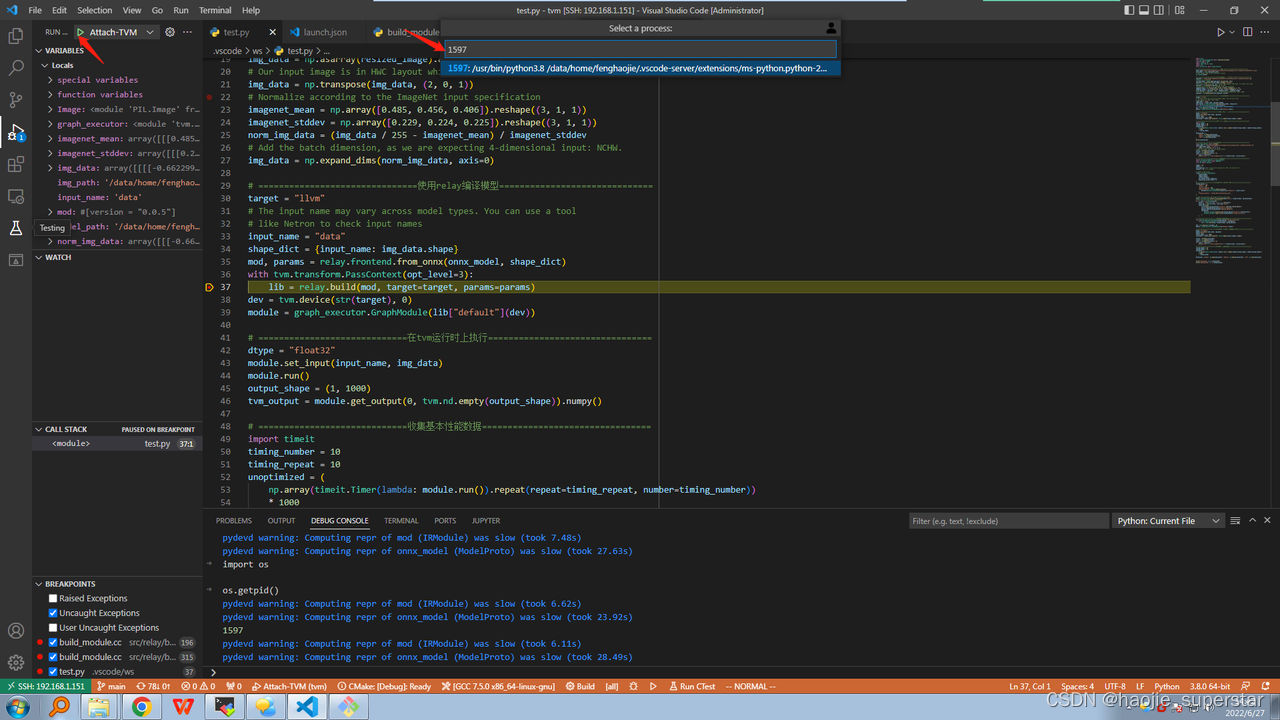

Click the green triangle to start the debugger and enter the process ID:

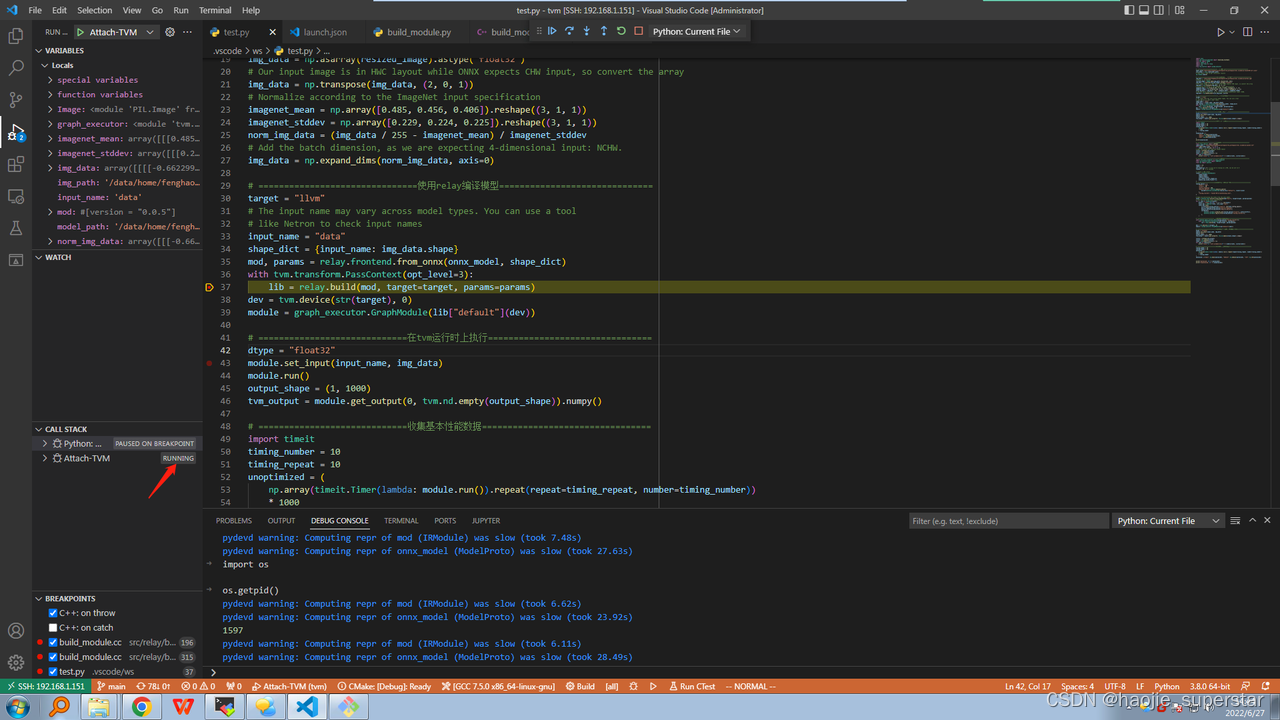

The lldb debugger is now running:

Continue running the program:

The C++ breakpoint is hit: