A vector is an n × 1 matrix.

Scalar multiplication

Matrix multiplication is really useful, since you can pack a lot of computation into just one matrix multiplication operation.
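A minimal sketch of this idea, assuming NumPy and a hypothesis of the form h(x) = θ_0 + θ_1·x. The house sizes and parameter values are made up for illustration and do not come from the course. One matrix-vector product evaluates the hypothesis for every example at once:

```python
import numpy as np

# Hypothetical house sizes and parameters, chosen only for illustration.
sizes = np.array([2104.0, 1416.0, 1534.0, 852.0])
theta = np.array([-40.0, 0.25])  # [theta_0, theta_1]

# Design matrix: a column of ones (for theta_0) next to the sizes.
X = np.column_stack([np.ones_like(sizes), sizes])

# One matrix-vector product computes h(x) for all four houses.
predictions = X.dot(theta)
print(predictions)
```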

Matrix multiplication is not commutative: in general, AB ≠ BA.

Matrix multiplication is associative: (AB)C = A(BC).
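Both properties can be checked numerically. This is a small sketch assuming NumPy; the matrices A, B and C are arbitrary examples, not taken from the course:

```python
import numpy as np

# Arbitrary 2x2 matrices used only for illustration.
A = np.array([[1, 2], [3, 4]])
B = np.array([[0, 1], [1, 0]])
C = np.array([[2, 0], [1, 3]])

# Not commutative: A.dot(B) and B.dot(A) differ for these matrices.
ab = A.dot(B)
ba = B.dot(A)
print(np.array_equal(ab, ba))

# Associative: the grouping of the products does not matter.
left = A.dot(B).dot(C)
right = A.dot(B.dot(C))
print(np.array_equal(left, right))
```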

Identity Matrix

A.dot(I) = I.dot(A) = A, where I is the identity matrix (the two identity matrices need not have the same dimensions).
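To see why the two identity matrices can differ in size, here is a short sketch assuming NumPy and an arbitrary 2 × 3 matrix A (the names I2 and I3 are just illustrative):

```python
import numpy as np

# An arbitrary 2x3 matrix, so the two identity matrices have different sizes.
A = np.array([[1, 2, 3], [4, 5, 6]])
I3 = np.eye(3, dtype=int)  # multiplies A on the right
I2 = np.eye(2, dtype=int)  # multiplies A on the left

print(np.array_equal(A.dot(I3), A))
print(np.array_equal(I2.dot(A), A))
```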

Matrix inverse
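A quick sketch of the inverse, assuming NumPy and an arbitrary invertible matrix. Only square matrices with a nonzero determinant have an inverse:

```python
import numpy as np

# An arbitrary invertible 2x2 matrix (its determinant is 10, not 0).
A = np.array([[4.0, 7.0], [2.0, 6.0]])
A_inv = np.linalg.inv(A)

# A times its inverse is the identity, up to floating-point rounding.
print(np.allclose(A.dot(A_inv), np.eye(2)))
```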


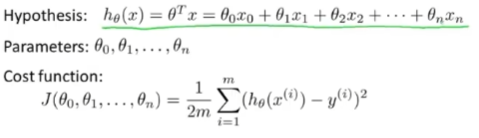

Multivariate linear regression

Linear regression that works with multiple variables, i.e. with multiple features.

Feature scaling

Idea: make sure features are on a similar scale.

The details are mostly in the course slides.
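Since the details live in the slides, the following is only a sketch of one common scheme (subtract the mean, divide by the standard deviation), assuming NumPy. The function name `scale_features` and the data are hypothetical:

```python
import numpy as np

def scale_features(X):
    """Return (X - mean) / std per column, plus the mean and std used."""
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    return (X - mu) / sigma, mu, sigma

# Hypothetical features: house size and number of bedrooms.
X = np.array([[2104.0, 5.0], [1416.0, 3.0], [1534.0, 3.0], [852.0, 2.0]])
X_scaled, mu, sigma = scale_features(X)
print(X_scaled.mean(axis=0))  # close to 0 for every feature
print(X_scaled.std(axis=0))   # close to 1 for every feature
```

The returned `mu` and `sigma` would be reused to scale any new example before making a prediction.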

If α is too small: slow convergence.

If α is too large: J(θ) may not decrease on every iteration, and may not converge.
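A toy illustration of both cases, using the made-up cost J(θ) = θ² rather than a real regression problem. The function name and the three α values are assumptions chosen to make the effect visible:

```python
def gradient_descent(alpha, steps=20, theta=1.0):
    """Minimize J(theta) = theta**2 and return the J value after each step."""
    history = []
    for _ in range(steps):
        grad = 2.0 * theta          # dJ/dtheta
        theta = theta - alpha * grad
        history.append(theta ** 2)
    return history

slow = gradient_descent(alpha=0.001)  # too small: J barely moves
good = gradient_descent(alpha=0.1)    # reasonable: J shrinks quickly
bad = gradient_descent(alpha=1.1)     # too large: J grows every step

print(slow[-1], good[-1], bad[-1])
```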

When you're applying linear regression, you don't necessarily have to use just the features x_1 and x_2 that you are given. You can also create new features yourself.

Polynomial regression

Polynomial regression reuses the machinery of multivariate linear regression.

Set the features to be the square or cube of one quantity (for example, the size of the house).

If you choose your features like this, feature scaling becomes increasingly important, because the ranges of x, x² and x³ differ greatly.
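A sketch of building such features, assuming NumPy and hypothetical house sizes. The scaling step (mean and standard deviation) is one possible choice, not necessarily the one used in the course:

```python
import numpy as np

# Hypothetical house sizes.
size = np.array([100.0, 200.0, 300.0, 400.0])

# Build polynomial features from the single input: size, size^2, size^3.
X_poly = np.column_stack([size, size ** 2, size ** 3])
print(X_poly.max(axis=0))  # ranges differ by orders of magnitude

# Scale each column so all three features end up on a similar scale.
X_scaled = (X_poly - X_poly.mean(axis=0)) / X_poly.std(axis=0)
print(np.abs(X_scaled).max(axis=0))
```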

The normal equation, for some linear regression problems, gives us a much better way to solve for the optimal value of the parameters θ.

Normal equation: a method to solve for θ analytically, in a single step with no iteration.
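The notes do not write the formula out; the standard form is θ = (XᵀX)⁻¹Xᵀy. A minimal sketch assuming NumPy and made-up data that lies exactly on a line, with `np.linalg.pinv` used as the inverse so the code still works if XᵀX happens to be singular:

```python
import numpy as np

# Hypothetical data generated exactly by y = 1 + 2 * x.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 1.0 + 2.0 * x

# Design matrix with a leading column of ones for the intercept.
X = np.column_stack([np.ones_like(x), x])

# Normal equation: theta = (X^T X)^(-1) X^T y, with no iteration.
theta = np.linalg.pinv(X.T.dot(X)).dot(X.T).dot(y)
print(theta)
```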

Use highly optimized numerical linear algebra routines to compute the inner product between two vectors. Not only is the vectorized implementation simpler, it also runs more efficiently.
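For comparison, here are both versions of the inner product side by side. This is a sketch assuming NumPy, with arbitrary example vectors:

```python
import numpy as np

theta = np.array([1.0, 2.0, 3.0])
x = np.array([4.0, 5.0, 6.0])

# Unvectorized: accumulate the inner product with an explicit loop.
total = 0.0
for j in range(len(theta)):
    total += theta[j] * x[j]

# Vectorized: a single call into an optimized linear algebra routine.
vectorized = theta.dot(x)

print(total, vectorized)
```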

Classification problems are those where the variable y that you want to predict takes discrete values.

Logistic regression algorithm: a classification algorithm.

Hypothesis representation: the function we use to represent our hypothesis when we have a classification problem.
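The notes do not spell the function out in text. The standard logistic regression hypothesis is h_θ(x) = g(θᵀx) with g(z) = 1 / (1 + e^(−z)). A sketch assuming NumPy, with hypothetical parameter values:

```python
import numpy as np

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def hypothesis(theta, x):
    """h_theta(x) = g(theta^T x), read as the estimated probability that y = 1."""
    return sigmoid(theta.dot(x))

# Hypothetical parameters and one example; x[0] = 1 is the intercept term.
theta = np.array([-3.0, 1.0, 1.0])
x = np.array([1.0, 2.0, 1.0])
print(hypothesis(theta, x))
```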


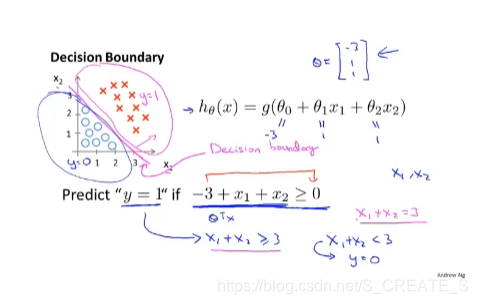

Decision boundary

This will give us a better sense of what the logistic regression hypothesis function is computing.

The decision boundary is a property of the hypothesis.

Even if we take away the data set, the decision boundary, and the regions where we predict y = 1 versus y = 0, are a property of the hypothesis and of its parameters, not a property of the data set.

As long as we are given the parameter vector θ, that defines the decision boundary.

The training set is not used to define the decision boundary; it is used to fit the parameters θ.
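To make this concrete: predicting y = 1 when h_θ(x) ≥ 0.5 is the same as predicting y = 1 when θᵀx ≥ 0, so θ alone fixes the boundary. A sketch assuming NumPy, with a hypothetical θ that gives the line x1 + x2 = 3:

```python
import numpy as np

def predict(theta, X):
    """Predict 1 where theta^T x >= 0 (h >= 0.5), otherwise 0."""
    return (X.dot(theta) >= 0).astype(int)

# Hypothetical parameters: the boundary is the line x1 + x2 = 3.
theta = np.array([-3.0, 1.0, 1.0])

# Each row is [1, x1, x2]; no training set is needed to evaluate these.
X = np.array([[1.0, 1.0, 1.0],   # x1 + x2 = 2, below the line
              [1.0, 2.0, 2.0],   # x1 + x2 = 4, above the line
              [1.0, 3.0, 0.0]])  # x1 + x2 = 3, on the line
print(predict(theta, X))
```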


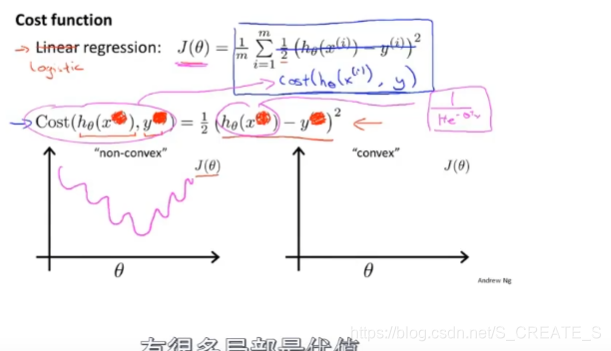

We'll talk about how to fit the parameters θ for logistic regression.

The optimization objective, or cost function, that we will use to fit the parameters.

Non-convex function: with the squared-error cost used for linear regression, J(θ) for logistic regression is non-convex, so gradient descent is not guaranteed to reach the global minimum.

The logistic regression cost function can be derived from statistics using the principle of maximum likelihood estimation.

A nice property: it is convex.
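The notes leave the formula itself to the images. As a sketch, the standard cross-entropy cost for logistic regression is J(θ) = −(1/m) Σ [y log h_θ(x) + (1 − y) log(1 − h_θ(x))]. The code below assumes NumPy and a hypothetical four-example data set:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(theta, X, y):
    """J(theta) = -(1/m) * sum(y*log(h) + (1-y)*log(1-h))."""
    h = sigmoid(X.dot(theta))
    return -np.mean(y * np.log(h) + (1 - y) * np.log(1 - h))

# Hypothetical tiny data set; the first column of X is the intercept term.
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])
y = np.array([0.0, 0.0, 1.0, 1.0])

# With theta = 0 every prediction is 0.5, so the cost is log(2).
print(cost(np.zeros(2), X, y))
```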


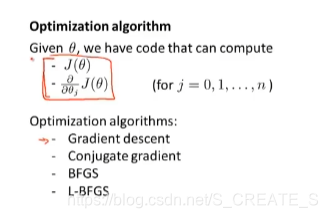

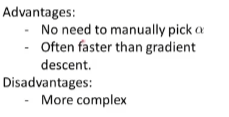

Advanced optimization algorithms and some advanced optimization concepts.

Using some of these ideas, we'll be able to get logistic regression to run much more quickly than is possible with gradient descent. This will also let the algorithm scale much better to very large machine learning problems, such as when we have a very large number of features.

Gradient descent isn't the only algorithm we can use. There are other, more advanced and more sophisticated algorithms that can optimize the cost function for us, provided we give them a way to compute these two things: J(θ) and its partial derivatives.
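One way to try this in Python, as a sketch only: it assumes SciPy is available and uses `scipy.optimize.minimize` with BFGS as a stand-in for the optimizers discussed in the course. The function name `cost_and_gradient` and the data are hypothetical:

```python
import numpy as np
from scipy.optimize import minimize

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost_and_gradient(theta, X, y):
    """Return the two things the optimizer needs: J(theta) and its gradient."""
    h = sigmoid(X.dot(theta))
    J = -np.mean(y * np.log(h) + (1 - y) * np.log(1 - h))
    grad = X.T.dot(h - y) / len(y)
    return J, grad

# Hypothetical, non-separable data so that a finite optimum exists.
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0],
              [1.0, 3.0], [1.0, 4.0], [1.0, 5.0]])
y = np.array([0.0, 0.0, 1.0, 0.0, 1.0, 1.0])

# jac=True tells minimize that the function returns (cost, gradient).
result = minimize(cost_and_gradient, np.zeros(2), args=(X, y),
                  jac=True, method="BFGS")
print(result.x, result.fun)
```

No learning rate α is chosen by hand here; the optimizer picks its own step sizes.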

We will talk about how to get logistic regression to work for multi-class classification problems.

One-versus-all classification
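A sketch of the prediction step, assuming NumPy: one binary logistic classifier per class, and the predicted class is the one whose classifier outputs the highest probability. The parameters in `all_theta` are hand-picked for illustration rather than fitted to data:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_one_vs_all(all_theta, X):
    """Pick, for each row of X, the class whose classifier is most confident."""
    probabilities = sigmoid(X.dot(all_theta.T))  # shape: (examples, classes)
    return np.argmax(probabilities, axis=1)

# Hypothetical parameters for 3 binary classifiers, one row per class.
# In practice each row would be fitted on "this class versus all the others".
all_theta = np.array([[ 1.0, -1.0, -1.0],   # class 0: x1 and x2 both small
                      [-1.0,  1.0, -1.0],   # class 1: x1 large, x2 small
                      [-1.0, -1.0,  1.0]])  # class 2: x2 large, x1 small

# Each row is [1, x1, x2].
X = np.array([[1.0, 0.0, 0.0],
              [1.0, 3.0, 0.0],
              [1.0, 0.0, 3.0]])
print(predict_one_vs_all(all_theta, X))
```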

My notes from the past few days have gotten a bit messy. Starting tomorrow I will organize them properly!