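For background on decision trees, see the earlier post "Statistical Learning (3): Decision Trees ID3, C4.5, CART".

This post demonstrates how to import the decision tree models from sklearn directly and use them to solve classification and regression problems.

For the detailed parameters of the decision tree model wrapped by sklearn, see the post "Key Parameters of the Classification Decision Tree in sklearn".

All data and code for the examples below are available here.

If this helps your learning, a star is welcome. Thanks!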

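1. Classification

As before, the handwritten digits dataset is used to demonstrate classification.

Running data_process.py in the project loads the raw data and generates train_data.npz and test_data.npz, which hold the training set and the test set respectively.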
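The loading code later in this post reads the array stored under the key arr_0 and treats the last column as the label. As a minimal sketch of that layout (the toy array below is made up, and the exact way data_process.py saves its output is an assumption inferred from that loading code), an array passed positionally to np.savez can be read back like this:

```python
import io
import numpy as np

# Hypothetical toy data: 4 samples, 3 feature columns, label in the last column.
features = np.arange(12, dtype=float).reshape(4, 3)
labels = np.array([0, 1, 1, 0], dtype=float)
table = np.column_stack([features, labels])

# np.savez stores arrays passed positionally under the keys arr_0, arr_1, ...
buffer = io.BytesIO()          # in-memory stand-in for a file such as train_data.npz
np.savez(buffer, table)
buffer.seek(0)

loaded = np.load(buffer)
x = loaded['arr_0'][:, :-1]    # every column except the last: features
y = loaded['arr_0'][:, -1]     # last column: labels

print(x.shape, y.shape)        # (4, 3) (4,)
```

The same two slicing expressions are what every classification script below uses to split features from labels.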

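1.1 Classification with C4.5 and CART trees

Load the decision tree module from sklearn.

from sklearn import tree  # import the tree module

model = tree.DecisionTreeClassifier(criterion='entropy')  # create the classification tree; 'entropy' uses information entropy as the impurity measure, 'gini' uses the Gini index

model.fit(train_x,train_y)  # train the model on the training set

predict = model.predict(test_x)  # predict on the test set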
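The short snippet above assumes train_x, train_y and test_x are already loaded. For readers who do not have the .npz files, here is a self-contained sketch that runs the same model with both criteria. It swaps in sklearn's built-in 8x8 digits dataset and a random 80/20 split, both of which are assumptions and not the data used in this post, so the scores will differ from the screenshots.

```python
from sklearn import tree
from sklearn.datasets import load_digits
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Assumption: sklearn's small digits set stands in for the post's .npz files.
digits = load_digits()
train_x, test_x, train_y, test_y = train_test_split(
    digits.data, digits.target, test_size=0.2, random_state=0)

scores = {}
for criterion in ('entropy', 'gini'):
    # Same model as above, only the impurity measure changes.
    model = tree.DecisionTreeClassifier(criterion=criterion, random_state=0)
    model.fit(train_x, train_y)
    predict = model.predict(test_x)
    scores[criterion] = accuracy_score(test_y, predict)
    print("%s accuracy_score: %.4f" % (criterion, scores[criterion]))
```

Fixing random_state makes the comparison repeatable; without it, the gap between the two criteria can change from run to run.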

Full runnable code

from sklearn import tree  # import the required modules

import numpy as np

from sklearn.metrics import accuracy_score

train_data=np.load('train_data.npz',allow_pickle=True)  # load the data

test_data=np.load('test_data.npz',allow_pickle=True)

train_x=train_data['arr_0'][:,:-1]  # training features

train_y=train_data['arr_0'][:,-1]  # training labels (last column)

test_x=test_data['arr_0'][:,:-1]

test_y=test_data['arr_0'][:,-1]

model = tree.DecisionTreeClassifier(criterion='entropy')  # create the classification tree

model.fit(train_x,train_y)  # train the model on the training set

predict = model.predict(test_x)  # predict on the test set

print("accuracy_score: %.4f" % accuracy_score(test_y,predict))

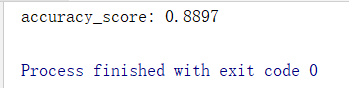

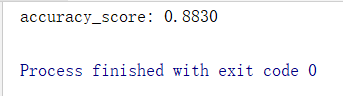

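The first image above shows the result with information entropy as the split criterion; the second shows the result with the Gini index.

On this run entropy comes out ahead, although a single run is not enough to conclude that it is better for classification in general. Loosely speaking, the model with criterion='entropy' can be viewed as a C4.5-style tree and the model with criterion='gini' as a CART tree. Strictly speaking, sklearn's DecisionTreeClassifier is an optimized CART implementation that always builds binary trees, and C4.5 proper splits on the gain ratio, so the entropy setting only approximates C4.5.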
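To make the two criteria concrete, here is a small sketch that computes both impurity measures for a list of class labels. The function names are made up for illustration; sklearn computes these internally and does not expose them under these names.

```python
import numpy as np

def entropy(labels):
    """Shannon entropy in bits: sum over classes of p * log2(1 / p)."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float((p * np.log2(1.0 / p)).sum())

def gini(labels):
    """Gini impurity: 1 minus the sum over classes of p squared."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(1.0 - (p ** 2).sum())

pure = [1, 1, 1, 1]    # a node that contains a single class
mixed = [0, 0, 1, 1]   # a node split 50/50 between two classes

print(entropy(pure), gini(pure))     # 0.0 0.0
print(entropy(mixed), gini(mixed))   # 1.0 0.5
```

Both measures are zero for a pure node and largest for an even mix, which is why a tree built with either one usually ends up with similar splits.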

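1.2 Classification with random forest

Random forest is an ensemble learning method made up of many trees. Each tree is trained on a random subset of the samples or features of the original dataset, and the predictions of all the trees on the test set are then combined into the output of the forest. The powerful XGBoost and LGB models used later are also tree ensembles that treat each tree as a weak learner, but they build the trees one after another by gradient boosting, with each new tree correcting the errors of the previous ones, instead of training them independently. XGB and LGB are much more complex than random forest, and the underlying formulas are more involved.

For the detailed random forest parameters, see the post "sklearn Parameters Explained: Random Forest".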
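The idea described above (random rows, random features, then combining the trees) can be sketched by hand with plain decision trees. This is an illustration under assumptions, not how the library is implemented: it uses sklearn's built-in digits data instead of the post's files, the tree count is arbitrary, and it combines trees by hard majority vote, whereas sklearn's RandomForestClassifier averages the trees' predicted class probabilities.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

digits = load_digits()
train_x, test_x, train_y, test_y = train_test_split(
    digits.data, digits.target, test_size=0.2, random_state=0)

rng = np.random.default_rng(0)
n_trees = 25  # illustrative value, not a tuned setting
all_votes = []
for _ in range(n_trees):
    # Bootstrap: draw as many rows as the training set has, with replacement.
    rows = rng.integers(0, len(train_x), size=len(train_x))
    # max_features='sqrt' makes each split look at a random subset of features.
    one_tree = DecisionTreeClassifier(max_features='sqrt',
                                      random_state=int(rng.integers(1_000_000)))
    one_tree.fit(train_x[rows], train_y[rows])
    all_votes.append(one_tree.predict(test_x))

votes = np.array(all_votes)  # shape: (n_trees, n_test_samples)
# Majority vote per test sample: the most frequently predicted digit wins.
forest_predict = np.array([np.bincount(col, minlength=10).argmax()
                           for col in votes.T])

single_acc = float((votes[0] == test_y).mean())
forest_acc = float((forest_predict == test_y).mean())
print("one tree: %.4f, %d-tree vote: %.4f" % (single_acc, n_trees, forest_acc))
```

Each individual tree is weaker than a tree trained on the full data, but their errors are only partly correlated, so the vote is typically much more accurate than any single member.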

Load the random forest

import numpy as np

from sklearn.metrics import accuracy_score

from sklearn.ensemble import RandomForestClassifier

train_data=np.load('train_data.npz',allow_pickle=True)  # load the data

test_data=np.load('test_data.npz',allow_pickle=True)

train_x=train_data['arr_0'][:,:-1]  # training features

train_y=train_data['arr_0'][:,-1]  # training labels (last column)

test_x=test_data['arr_0'][:,:-1]

test_y=test_data['arr_0'][:,-1]

model = RandomForestClassifier()  # create the random forest classifier with default settings

model.fit(train_x,train_y)

predict = model.predict(test_x)

print("Random forest accuracy_score: %.4f" % accuracy_score(test_y,predict))

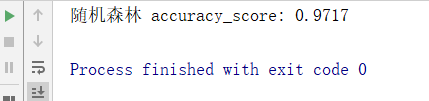

The accuracy jumps straight to 0.97, as one would hope from an ensemble model.

1.3 Classification with XGB

XGBoost is an ensemble model that is considerably more complex and more powerful than random forest.

import numpy as np

from sklearn.metrics import accuracy_score

from xgboost import XGBClassifier

train_data=np.load('train_data.npz',allow_pickle=True)  # load the data

test_data=np.load('test_data.npz',allow_pickle=True)

train_x=train_data['arr_0'][:,:-1]  # training features

train_y=train_data['arr_0'][:,-1]  # training labels (last column)

test_x=test_data['arr_0'][:,:-1]

test_y=test_data['arr_0'][:,-1]

model = XGBClassifier()  # create the XGBoost classifier with default settings

model.fit(train_x,train_y)

predict = model.predict(test_x)

print("XGB accuracy_score: %.4f" % accuracy_score(test_y,predict))

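1.4 Classification with XGBRF

XGBRF combines XGB and random forest: it is the random forest variant provided by the xgboost package.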

import numpy as np

from sklearn.metrics import accuracy_score

from xgboost import XGBRFClassifier

train_data=np.load('train_data.npz',allow_pickle=True)  # load the data

test_data=np.load('test_data.npz',allow_pickle=True)

train_x=train_data['arr_0'][:,:-1]  # training features

train_y=train_data['arr_0'][:,-1]  # training labels (last column)

test_x=test_data['arr_0'][:,:-1]

test_y=test_data['arr_0'][:,-1]

model = XGBRFClassifier()  # create the XGBoost random forest classifier

model.fit(train_x,train_y)

predict = model.predict(test_x)

print("XGBRF accuracy_score: %.4f" % accuracy_score(test_y,predict))

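1.5 Classification with LGBM

LGBM (LightGBM) is a gradient boosting framework from Microsoft that addresses some shortcomings of XGB, mainly in accuracy and training time. It is currently a very popular and powerful tree ensemble model.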

import numpy as np

from sklearn.metrics import accuracy_score

from lightgbm import LGBMClassifier

train_data=np.load('train_data.npz',allow_pickle=True)  # load the data

test_data=np.load('test_data.npz',allow_pickle=True)

train_x=train_data['arr_0'][:,:-1]  # training features

train_y=train_data['arr_0'][:,-1]  # training labels (last column)

test_x=test_data['arr_0'][:,:-1]

test_y=test_data['arr_0'][:,-1]

model = LGBMClassifier()  # create the LightGBM classifier with default settings

model.fit(train_x,train_y)

predict = model.predict(test_x)

print("LGBM accuracy_score: %.4f" % accuracy_score(test_y,predict))

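The XGB and LGBM runs above have a lot to process, so training is extremely slow. Judging from competition experience, though, both models perform very well when the dataset is large, and LGBM tends to beat XGB.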

二、回归问题

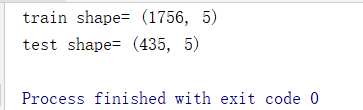

回归问题数据集我找了个简单的,写个爬虫爬了上海的天气气温数据作为训练集。详细看Python构建爬虫模型爬取天气数据。

这里我修改代码命名为weather_data.txt,爬取区间为2015到2020年。

加载处理数据的代码如下

import pandas as pd

import numpy as np

data=pd.read_csv('weather_data.txt',header=None).values  # load the data

data=data.reshape([1,len(data)])[0]  # flatten to a 1-D series

train_x = []

train_y = []

test_x = []

test_y = []

for i in range(int(len(data)*0.8)):  # first 80% of the series for training

    train_x.append(list(data[i:i+5]))  # five consecutive values as features

    train_y.append(data[i+5])  # the next value as the target

for i in range(int(len(data)*0.8),len(data)-5):  # remaining 20% for testing

    test_x.append(list(data[i:i+5]))

    test_y.append(data[i+5])

train_x=np.array(train_x)  # convert the lists to arrays

train_y=np.array(train_y)

test_x=np.array(test_x)

test_y=np.array(test_y)

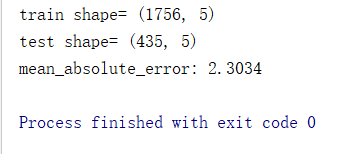

print('train shape=',train_x.shape)

print('test shape=',test_x.shape)
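The two loops above turn a one-dimensional series into samples: five consecutive values are the features and the sixth is the target. The same idea can be wrapped in a small helper. In this sketch make_windows is a hypothetical name, the series is fake data, and the 80/20 split is taken over the windows, which differs slightly from the loops above, where the split is taken over the raw points.

```python
import numpy as np

def make_windows(series, window=5):
    """Turn a 1-D series into (features, target) pairs.

    Row i of the features holds series[i:i+window]; its target is the
    next value, series[i+window].
    """
    series = np.asarray(series)
    n_samples = len(series) - window
    x = np.array([series[i:i + window] for i in range(n_samples)])
    y = series[window:]
    return x, y

# Hypothetical stand-in for the temperature column of weather_data.txt.
temps = np.arange(10, 30)            # 20 fake daily temperatures
x, y = make_windows(temps, window=5)

split = int(len(x) * 0.8)            # first 80% of the windows for training
train_x, train_y = x[:split], y[:split]
test_x, test_y = x[split:], y[split:]

print(train_x.shape, test_x.shape)   # (12, 5) (3, 5)
print(train_x[0], train_y[0])        # [10 11 12 13 14] 15
```

Keeping the split in time order, as the loops above also do, means the model is always tested on days that come after the ones it was trained on.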

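2.1 CART regression tree

For regression, the model is the CART regression tree. The code to import the model is as follows

from sklearn import tree  # import the required modules

import numpy as np

model = tree.DecisionTreeRegressor(criterion='squared_error')  # create the regression tree; 'squared_error' was called 'mse' before scikit-learn 1.0

model.fit(train_x,train_y)  # train_x and train_y come from the loading code above

predict = model.predict(test_x)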
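To show what the squared-error criterion means in practice, here is a minimal sketch of how a regression tree can choose one split on one feature: try every threshold, let each side predict its own mean, and keep the threshold with the smallest total squared error. The function name and the toy data are made up, and sklearn's real implementation is far more optimized.

```python
import numpy as np

def best_split(x, y):
    """Return (threshold, total_squared_error) of the best split on one feature."""
    order = np.argsort(x)
    x, y = x[order], y[order]
    best_threshold, best_error = None, np.inf
    for i in range(1, len(x)):
        if x[i] == x[i - 1]:
            continue  # no threshold can separate two equal values
        left, right = y[:i], y[i:]
        # Each side predicts its own mean; score the split by the summed squared error.
        error = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if error < best_error:
            best_threshold, best_error = (x[i - 1] + x[i]) / 2, error
    return best_threshold, best_error

# Hypothetical toy data with an obvious jump between x=3 and x=10.
x = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
y = np.array([5.0, 6.0, 5.5, 20.0, 21.0, 19.5])
threshold, error = best_split(x, y)
print(threshold, error)  # the threshold is 6.5, halfway between the two clusters
```

A full tree repeats this search over every feature and then recurses into the two halves until a stopping rule is reached.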

Load the data and predict with the CART tree

import pandas as pd

import numpy as np

from sklearn import tree  # import the required modules

from sklearn.metrics import mean_absolute_error

data=pd.read_csv('weather_data.txt',header=None).values  # load the data

data=data.reshape([1,len(data)])[0]  # flatten to a 1-D series

train_x = []

train_y = []

test_x = []

test_y = []

for i in range(int(len(data)*0.8)):  # first 80% of the series for training

    train_x.append(list(data[i:i+5]))  # five consecutive values as features

    train_y.append(data[i+5])  # the next value as the target

for i in range(int(len(data)*0.8),len(data)-5):  # remaining 20% for testing

    test_x.append(list(data[i:i+5]))

    test_y.append(data[i+5])

train_x=np.array(train_x)  # convert the lists to arrays

train_y=np.array(train_y)

test_x=np.array(test_x)

test_y=np.array(test_y)

print('train shape=',train_x.shape)

print('test shape=',test_x.shape)

model = tree.DecisionTreeRegressor(criterion='squared_error')  # create the regression tree; 'squared_error' was called 'mse' before scikit-learn 1.0

model.fit(train_x,train_y)

predict = model.predict(test_x)

print('mean_absolute_error: %.4f' % mean_absolute_error(test_y,predict))

The mean absolute error is 2.3034, which seems acceptable.

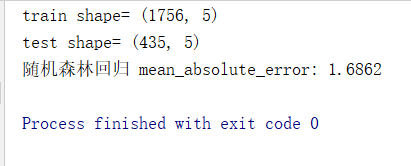
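Whether an error of this size is good depends on what a trivial forecast would score on the same data. As a hedged sketch, the code below computes the mean absolute error by hand and applies it to a persistence baseline that simply predicts the last value of each window. The numbers are invented for illustration and are not taken from weather_data.txt, and this baseline is an addition for context, not part of the original experiment.

```python
import numpy as np

def mean_absolute_error_np(y_true, y_pred):
    """Average of |y_true - y_pred|, the quantity the metric above reports."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.abs(y_true - y_pred).mean())

# Hypothetical windows: each row is five consecutive days, the target is day six.
test_x = np.array([[20, 21, 22, 21, 23],
                   [21, 22, 21, 23, 24],
                   [22, 21, 23, 24, 22]])
test_y = np.array([24, 22, 25])

# Persistence baseline: predict that tomorrow equals the last observed day.
baseline_predict = test_x[:, -1]
baseline_mae = mean_absolute_error_np(test_y, baseline_predict)
print("baseline mean_absolute_error: %.4f" % baseline_mae)  # 2.0000
```

Running the same baseline on the real test_x and test_y would show how much the tree actually gains over doing nothing clever.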

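2.2 RF: random forest regression

As mentioned earlier, a random forest combines the results of many trees, so what the forest can do depends on what each tree inside it does. In classification each tree is a classification tree, and in regression each tree is a regression tree, so random forest can handle regression problems too.

Here is the code.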

import pandas as pd

import numpy as np

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

data=pd.read_csv('weather_data.txt',header=None).values  # load the data

data=data.reshape([1,len(data)])[0]  # flatten to a 1-D series

train_x = []

train_y = []

test_x = []

test_y = []

for i in range(int(len(data)*0.8)):  # first 80% of the series for training

    train_x.append(list(data[i:i+5]))  # five consecutive values as features

    train_y.append(data[i+5])  # the next value as the target

for i in range(int(len(data)*0.8),len(data)-5):  # remaining 20% for testing

    test_x.append(list(data[i:i+5]))

    test_y.append(data[i+5])

train_x=np.array(train_x)  # convert the lists to arrays

train_y=np.array(train_y)

test_x=np.array(test_x)

test_y=np.array(test_y)

print('train shape=',train_x.shape)

print('test shape=',test_x.shape)

model = RandomForestRegressor()  # create the random forest regression model

model.fit(train_x,train_y)

predict = model.predict(test_x)

print('Random forest regression mean_absolute_error: %.4f' % mean_absolute_error(test_y,predict))

The error drops noticeably.

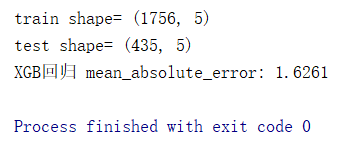
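For regression, combining the trees means averaging their outputs. The sketch below checks this on a fitted RandomForestRegressor by averaging the predictions of the individual trees in its estimators_ attribute by hand. The noisy sine series is a made-up stand-in for the temperature data, and the tree count is an arbitrary choice.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Hypothetical noisy sine series standing in for the temperature data.
rng = np.random.default_rng(0)
series = 20 + 8 * np.sin(np.arange(300) / 15.0) + rng.normal(0, 1, 300)
x = np.array([series[i:i + 5] for i in range(len(series) - 5)])
y = series[5:]

model = RandomForestRegressor(n_estimators=20, random_state=0)
model.fit(x[:200], y[:200])

forest_predict = model.predict(x[200:])
# Average the individual regression trees by hand.
tree_predicts = np.array([t.predict(x[200:]) for t in model.estimators_])
manual_average = tree_predicts.mean(axis=0)

# Compare the forest's own output with the hand-computed average.
print(np.allclose(forest_predict, manual_average))
```

Averaging smooths out the noise that each individual tree has memorized, which is one reason the forest's error is lower than that of the single CART tree.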

2.3 Regression with XGBoost

XGBoost is an ensemble regression model that is considerably more complex and more powerful than random forest.
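XGBoost belongs to the gradient boosting family, where trees are added one at a time and each new tree is fitted to what the current ensemble still gets wrong. The following is a bare-bones sketch of that loop for squared-error regression, built from small sklearn trees. It is not XGBoost's actual algorithm, which adds regularization and many engineering optimizations; the data, learning rate, depth and number of rounds are all illustrative assumptions.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Hypothetical 1-D regression problem.
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(0, 6, 200)).reshape(-1, 1)
y = np.sin(x[:, 0]) + rng.normal(0, 0.1, 200)

learning_rate = 0.3   # illustrative values, not tuned
n_rounds = 50

predict = np.full_like(y, y.mean())     # round 0: predict the mean everywhere
errors = [np.abs(y - predict).mean()]
for _ in range(n_rounds):
    residual = y - predict              # what the current ensemble still gets wrong
    weak = DecisionTreeRegressor(max_depth=2, random_state=0)
    weak.fit(x, residual)               # the new weak learner fits the residual
    predict = predict + learning_rate * weak.predict(x)
    errors.append(np.abs(y - predict).mean())

print("training MAE: %.4f -> %.4f" % (errors[0], errors[-1]))
```

Unlike the trees in a random forest, these trees cannot be trained independently, because each one depends on the predictions of all the trees before it.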

The model can be called directly as follows:

import pandas as pd

import numpy as np

from sklearn.metrics import mean_absolute_error

from xgboost import XGBRegressor

data=pd.read_csv('weather_data.txt',header=None).values  # load the data

data=data.reshape([1,len(data)])[0]  # flatten to a 1-D series

train_x = []

train_y = []

test_x = []

test_y = []

for i in range(int(len(data)*0.8)):  # first 80% of the series for training

    train_x.append(list(data[i:i+5]))  # five consecutive values as features

    train_y.append(data[i+5])  # the next value as the target

for i in range(int(len(data)*0.8),len(data)-5):  # remaining 20% for testing

    test_x.append(list(data[i:i+5]))

    test_y.append(data[i+5])

train_x=np.array(train_x)  # convert the lists to arrays

train_y=np.array(train_y)

test_x=np.array(test_x)

test_y=np.array(test_y)

print('train shape=',train_x.shape)

print('test shape=',test_x.shape)

model = XGBRegressor()  # create the XGBoost regression model

model.fit(train_x,train_y)

predict = model.predict(test_x)

print('XGB regression mean_absolute_error: %.4f' % mean_absolute_error(test_y,predict))

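The result seems to be worse than random forest regression. The next step therefore applies the random forest ensemble procedure on top of XGB, that is, training on randomly sampled rows and a random subset of the features, which gives XGBRF.

2.4 Regression with XGBRF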

import pandas as pd

import numpy as np

from sklearn.metrics import mean_absolute_error

from xgboost import XGBRFRegressor

data=pd.read_csv('weather_data.txt',header=None).values  # load the data

data=data.reshape([1,len(data)])[0]  # flatten to a 1-D series

train_x = []

train_y = []

test_x = []

test_y = []

for i in range(int(len(data)*0.8)):  # first 80% of the series for training

    train_x.append(list(data[i:i+5]))  # five consecutive values as features

    train_y.append(data[i+5])  # the next value as the target

for i in range(int(len(data)*0.8),len(data)-5):  # remaining 20% for testing

    test_x.append(list(data[i:i+5]))

    test_y.append(data[i+5])

train_x=np.array(train_x)  # convert the lists to arrays

train_y=np.array(train_y)

test_x=np.array(test_x)

test_y=np.array(test_y)

print('train shape=',train_x.shape)

print('test shape=',test_x.shape)

model = XGBRFRegressor()  # create the XGBoost random forest regression model

model.fit(train_x,train_y)

predict = model.predict(test_x)

print('XGBRF regression mean_absolute_error: %.4f' % mean_absolute_error(test_y,predict))

The effect is obvious: the error drops considerably.

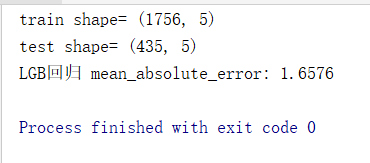

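2.5 Regression with LGBM

LGBM (LightGBM) is a gradient boosting framework from Microsoft that addresses some shortcomings of XGB, mainly in accuracy and training time. It is currently a very popular and powerful tree ensemble model.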

import pandas as pd

import numpy as np

from sklearn.metrics import mean_absolute_error

from lightgbm import LGBMRegressor

data=pd.read_csv('weather_data.txt',header=None).values  # load the data

data=data.reshape([1,len(data)])[0]  # flatten to a 1-D series

train_x = []

train_y = []

test_x = []

test_y = []

for i in range(int(len(data)*0.8)):  # first 80% of the series for training

    train_x.append(list(data[i:i+5]))  # five consecutive values as features

    train_y.append(data[i+5])  # the next value as the target

for i in range(int(len(data)*0.8),len(data)-5):  # remaining 20% for testing

    test_x.append(list(data[i:i+5]))

    test_y.append(data[i+5])

train_x=np.array(train_x)  # convert the lists to arrays

train_y=np.array(train_y)

test_x=np.array(test_x)

test_y=np.array(test_y)

print('train shape=',train_x.shape)

print('test shape=',test_x.shape)

model = LGBMRegressor()  # create the LightGBM regression model

model.fit(train_x,train_y)

predict = model.predict(test_x)

print('LGB regression mean_absolute_error: %.4f' % mean_absolute_error(test_y,predict))

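The result here is probably held back by the small amount of data, because this model's improvements specifically target how to optimize performance on large datasets. In earlier competitions, LGB did perform far better than XGB.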
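The regression scripts in this section share the same loading code and differ only in the line that creates the model, so several models can be compared in one loop. The sketch below does this for the two sklearn models only, on a made-up noisy seasonal series. The data, the split over windows, and the fixed random_state values are assumptions, so the numbers it prints say nothing about the results reported above.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.tree import DecisionTreeRegressor

# Hypothetical noisy seasonal series standing in for weather_data.txt.
rng = np.random.default_rng(0)
series = 20 + 8 * np.sin(np.arange(600) / 30.0) + rng.normal(0, 1.5, 600)
x = np.array([series[i:i + 5] for i in range(len(series) - 5)])
y = series[5:]
split = int(len(x) * 0.8)
train_x, train_y, test_x, test_y = x[:split], y[:split], x[split:], y[split:]

# XGBRegressor, XGBRFRegressor or LGBMRegressor could be added to this dict
# if the xgboost / lightgbm packages are installed.
models = {
    'CART': DecisionTreeRegressor(random_state=0),
    'random forest': RandomForestRegressor(random_state=0),
}

results = {}
for name, model in models.items():
    model.fit(train_x, train_y)
    results[name] = mean_absolute_error(test_y, model.predict(test_x))
    print('%s mean_absolute_error: %.4f' % (name, results[name]))
```

Evaluating every model on exactly the same split makes the errors directly comparable, which is harder to guarantee when each script rebuilds the data on its own.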