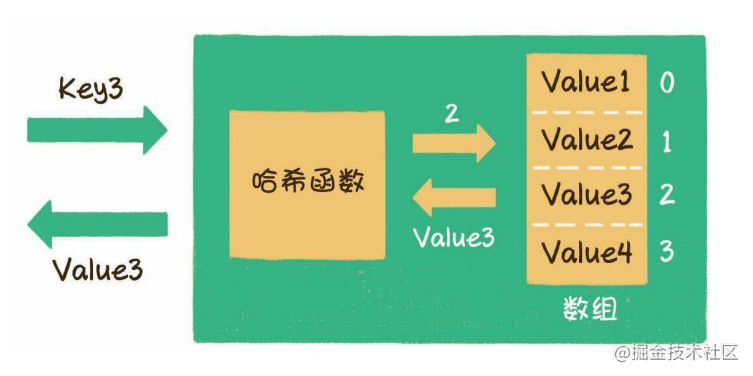

哈希表

哈希表也叫散列表,这种数据结构提供了键(Key)和值(Value)的映射关系。只要给出一个key,就可以高效查找到它所匹配的value,时间复杂度接近于O(1)。如下所示:

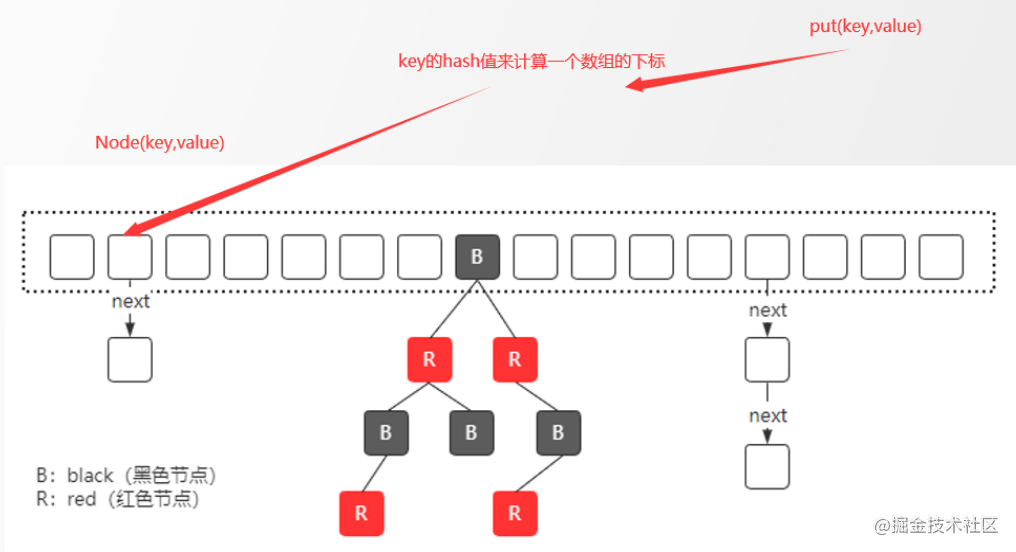

1.7和1.8的区别

主要是两方面的,一方面是1.7里加锁用的是segment分段锁,锁的粒度较大。1.8里面用的是synchronized+CAS来实现的,锁的粒度也从分段锁缩小为节点锁。

第二个是数据结构的层面,1.7里面是数组+链表,1.8里面是数组+链表+红黑树,时间复杂度O(n) -> O(logn)。 如下所示:

put()

点开put方法

public V put(K key, V value) {

return putVal(key, value, false);

}

然后再进入putVal方法

final V putVal(K key, V value, boolean onlyIfAbsent) {

if (key == null || value == null) throw new NullPointerException();

//计算key的hash值

int hash = spread(key.hashCode());

int binCount = 0;

//自旋

for (Node<K,V>[] tab = table;;) {

Node<K,V> f; int n, i, fh;

//tab为空,进行初始化

if (tab == null || (n = tab.length) == 0)

//初始化完成后,进入下一次循环

tab = initTable();

//(n - 1) & hash 计算数组下标的位置,相当于取模

else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {

//如果当前的node位置为空,直接存放到该位置,通过cas来保证原子性

if (casTabAt(tab, i, null,

new Node<K,V>(hash, key, value, null)))

break; // no lock when adding to empty bin

}

//MOVED是常量-1,如果hash值是负一,说明在扩容,去帮忙一起扩

else if ((fh = f.hash) == MOVED)

tab = helpTransfer(tab, f);

else {

V oldVal = null;

//锁住当前的node节点,避免线程安全问题

synchronized (f) {

//重新判断一遍,怕当中有没有被修改掉

if (tabAt(tab, i) == f) {

//针对链表来处理

if (fh >= 0) {

//记录链表长度

binCount = 1;

for (Node<K,V> e = f;; ++binCount) {

K ek;

//是否存在相同的key,如果存在就把里面的值覆盖

if (e.hash == hash &&

((ek = e.key) == key ||

(ek != null && key.equals(ek)))) {

oldVal = e.val;

if (!onlyIfAbsent)

e.val = value;

break;

}

Node<K,V> pred = e;

//如果不存在并且达到链表尾部了,说明是最后一个节点了,直接添加

//在尾节点后面

if ((e = e.next) == null) {

pred.next = new Node<K,V>(hash, key,

value, null);

break;

}

}

}

//针对红黑树处理

else if (f instanceof TreeBin) {

Node<K,V> p;

binCount = 2;

if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key,

value)) != null) {

oldVal = p.val;

if (!onlyIfAbsent)

p.val = value;

}

}

}

}

if (binCount != 0) {

//链表长度大于等于8的话转红黑树

if (binCount >= TREEIFY_THRESHOLD)

treeifyBin(tab, i);

if (oldVal != null)

return oldVal;

break;

}

}

}

//计数,+1,后面size()统计元素个数的时候用到

addCount(1L, binCount);

return null;

}

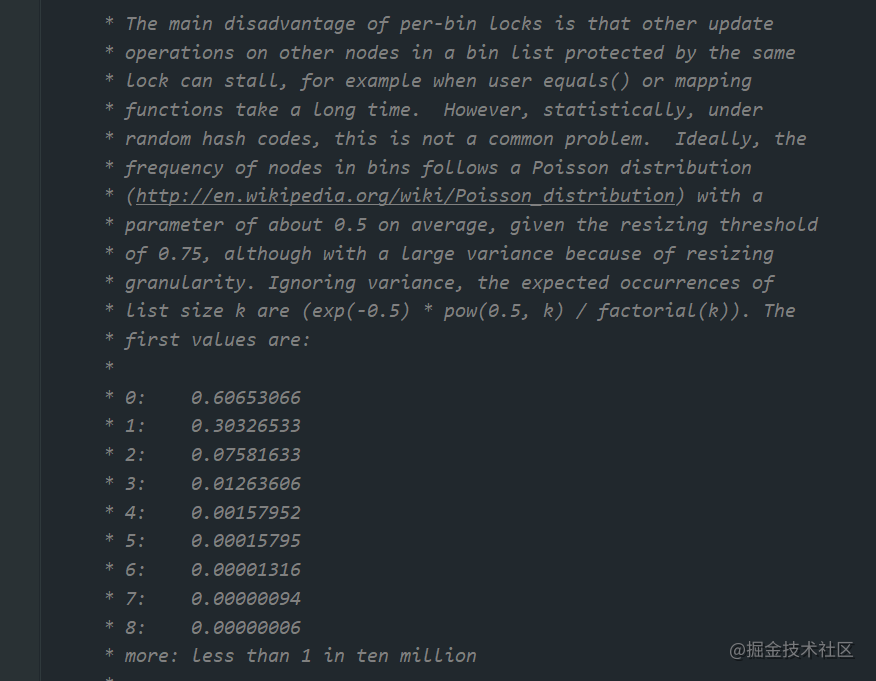

这里有一个常量TREEIFY_THRESHOLD值是8,也就是在大于等于8的时候转红黑树,为什么是8呢,我们来看下作者写的注释,意思是说hash函数计算的结果离散性好,分布的很均匀,很少会出现链表很长的情况,当长度为 8 的时候,概率仅为 0.00000006。这是一个小于千万分之一的概率,等达到了8再转也不迟。

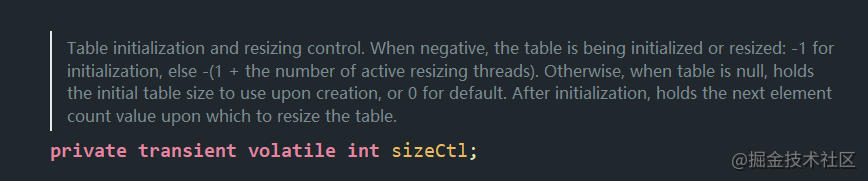

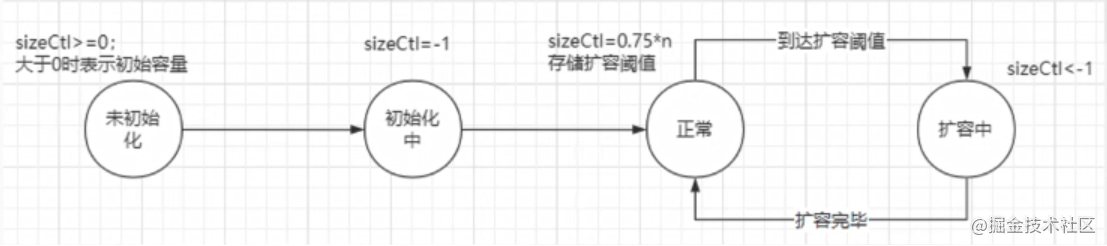

initTable()

初始化数组,首先看下sizeCtl这个变量,可以理解为一个状态机,作者在字段上面写了注释,我在下面也画了图,不理解这个字段看代码也是看不懂的。

下面是源码讲解

private final Node<K,V>[] initTable() {

Node<K,V>[] tab; int sc;

//只要tab没有初始化,就不停的循环直到初始化完成

while ((tab = table) == null || tab.length == 0) {

if ((sc = sizeCtl) < 0)

Thread.yield(); // lost initialization race; just spin

//通过cas自旋将sc的值变为-1

else if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {

try {

if ((tab = table) == null || tab.length == 0) {

//sc的值可能大于0,因为在 new ConcurrentHashMap(32)的时候可以指定大小

//如果没指定就取 DEFAULT_CAPACITY 默认值为16

int n = (sc > 0) ? sc : DEFAULT_CAPACITY;

@SuppressWarnings("unchecked")

//初始化数组,赋值

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];

table = tab = nt;

//最后sc赋的就是下一次扩容的阈值,即0.75*n

//这里用的是无符号右移两位,而不是直接写sc=0.75*n

sc = n - (n >>> 2);

}

} finally {

sizeCtl = sc;

}

break;

}

}

return tab;

}

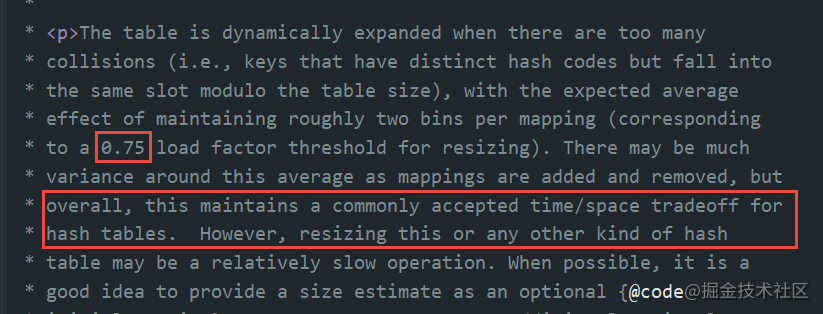

我们看到sc = n - (n >>> 2)其实就相当于sc=0.75*n,那为什么选择0.75作为扩容的阈值呢,我们还是看下作者写的注释,负载因子太小了浪费空间并且会发生更多次数的resize,太大了哈希冲突增加会导致性能不好,总的来说,这保持了一个普遍接受的时间/空间权衡。

treeifyBin()

如果链表长度大于等于8的话会进到这个方法,进来以后会判断是进行扩容还是转红黑树。

private final void treeifyBin(Node<K,V>[] tab, int index) {

Node<K,V> b; int n, sc;

if (tab != null) {

//如果数组长度小于64则进行扩容

if ((n = tab.length) < MIN_TREEIFY_CAPACITY)

tryPresize(n << 1);

//否则转红黑树

else if ((b = tabAt(tab, index)) != null && b.hash >= 0) {

//锁住节点

synchronized (b) {

if (tabAt(tab, index) == b) {

TreeNode<K,V> hd = null, tl = null;

for (Node<K,V> e = b; e != null; e = e.next) {

TreeNode<K,V> p =

new TreeNode<K,V>(e.hash, e.key, e.val,

null, null);

if ((p.prev = tl) == null)

hd = p;

else

tl.next = p;

tl = p;

}

setTabAt(tab, index, new TreeBin<K,V>(hd));

}

}

}

}

}

所以以后回答链表长度大于等于8的时候转红黑树是不严谨的,应该是链表长度大于等于8并且数组长度大于64的时候,链表才会转为红黑树。

tryPresize()

该方法是扩容方法,有以下几个注意点。

- 扩容的时候是多线程并发(允许多个线程来协助)

- 扩容大小是乘以2的扩容,32->64,64->128

- 新建一个新的数组以后,需要把老数组里的值迁移到新数组中去

private final void tryPresize(int size) {

//如果size大于最大的整数的一半,就是直接给它最大的整数,否则进行tableSizeFor计算

//tableSizeFor的作用就是返回大于输入参数且最近的2的整数次幂的数。比如10,则返回16

int c = (size >= (MAXIMUM_CAPACITY >>> 1)) ? MAXIMUM_CAPACITY :

tableSizeFor(size + (size >>> 1) + 1);

int sc;

while ((sc = sizeCtl) >= 0) {

Node<K,V>[] tab = table; int n;

//再次判断有没有初始化,没初始化的话进行初始化,和initTable()里面代码一样

//为什么要再次判断有没有初始化,因为别的地方也在调tryPresize方法

if (tab == null || (n = tab.length) == 0) {

//初始容量和扩容的目标容量,谁大选谁

n = (sc > c) ? sc : c;

if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {

try {

if (table == tab) {

@SuppressWarnings("unchecked")

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];

table = nt;

sc = n - (n >>> 2);

}

} finally {

sizeCtl = sc;

}

}

}

//如果sc比扩容容量大,或者已经是最大容量了,则不扩容,直接返回

else if (c <= sc || n >= MAXIMUM_CAPACITY)

break;

else if (tab == table) {

//生成一个扩容戳,保证当前扩容范围的唯一性

int rs = resizeStamp(n);

//第一次扩容的时候sc是大于0的,不会走这段逻辑

if (sc < 0) {

Node<K,V>[] nt;

//表示扩容结束,break掉

if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||

sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||

transferIndex <= 0)

break;

//表示没有结束,每增加一个扩容线程,则在低位+1

if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))

transfer(tab, nt);

}

//第一次走这段逻辑,第一个线程在进行扩容

else if (U.compareAndSwapInt(this, SIZECTL, sc,

(rs << RESIZE_STAMP_SHIFT) + 2))

transfer(tab, null);

}

}

}

上面的int rs = resizeStamp(n)就是生成扩容戳,大概长这样:

1000 0000 0001 1011 1010 0000 0000 0010

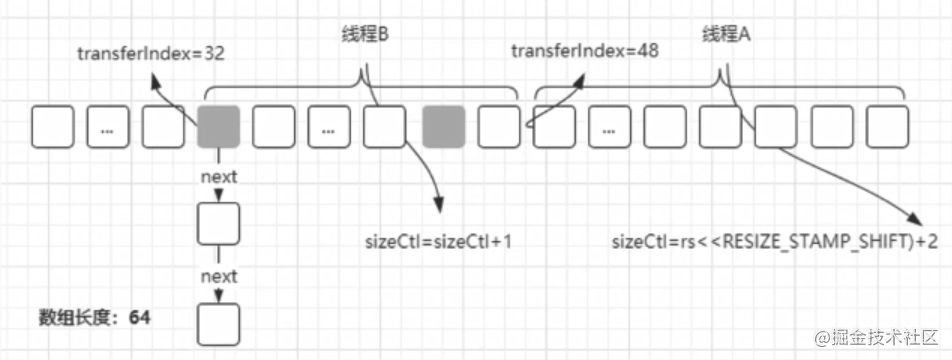

上面提到过扩容的时候是多线程帮忙扩容的,如下图:

但是具体有多少个线程参与数据的迁移工作,要保证所有的线程完成了迁移的动作,才能够表示扩容完成,所以需要有个地方来记录,记录的地方就是在sizeCtl。当第一个线程进来的时候,将扩容戳左移16位然后加2,然后赋值给sizeCtl,此时sizeCtl就变成了:

1010 0000 0000 0010 0000 0000 0000 0010

高位16表示当前的扩容标记,保证唯一性,低16位表示当前扩容的线程数量。 当再有线程进来帮忙扩容的时候低16位就不停的加1,加1…

1010 0000 0000 0010 0000 0000 0000 0011

1010 0000 0000 0010 0000 0000 0000 0100

1010 0000 0000 0010 0000 0000 0000 0101

...

transfer()

transfer是数据的迁移,首先计算当前线程的数据迁移空间,然后创建一个新的数组,最后进行数据的迁移。

- 如果是红黑树,数据迁移后,不满足红黑树的条件,则红黑树转链表

- 如果是链表,进行高低位链表迁移

private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {

int n = tab.length, stride;

//计算每个线程处理数组区间的大小,最小是16

//在上图中我们看到线程A搬运16个,线程B也是搬运16个

if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)

stride = MIN_TRANSFER_STRIDE; // subdivide range

//如果新数组为空的话,先进行初始化

if (nextTab == null) { // initiating

try {

@SuppressWarnings("unchecked")

//在原有的长度上扩大两倍

Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];

nextTab = nt;

} catch (Throwable ex) { // try to cope with OOME

sizeCtl = Integer.MAX_VALUE;

return;

}

nextTable = nextTab;

//开始迁移的索引,老数组的长度

transferIndex = n;

}

int nextn = nextTab.length;

//用来表示已经迁移完的状态,也就是说,如果某个old数组的节点完成了迁移,则需要更改成fwd。

ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);

boolean advance = true;

boolean finishing = false; // to ensure sweep before committing nextTab

//下面是一个很大的for循环,当迁移完成以后break

for (int i = 0, bound = 0;;) {

Node<K,V> f; int fh;

//这段while的作用是计算搬迁的区间,比如老数组长度是64,第一次搬迁的范围是

//[48(nextBound),63(i)],第二次是[32,47]

while (advance) {

int nextIndex, nextBound;

if (--i >= bound || finishing)

advance = false;

else if ((nextIndex = transferIndex) <= 0) {

i = -1;

advance = false;

}

else if (U.compareAndSwapInt

(this, TRANSFERINDEX, nextIndex,

nextBound = (nextIndex > stride ?

nextIndex - stride : 0))) {

bound = nextBound;

i = nextIndex - 1;

advance = false;

}

}

//是否扩容结束

if (i < 0 || i >= n || i + n >= nextn) {

int sc;

//finish的话结束掉

if (finishing) {

nextTable = null;

table = nextTab;

sizeCtl = (n << 1) - (n >>> 1);

return;

}

if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {

if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)

return;

finishing = advance = true;

i = n; // recheck before commit

}

}

//如果老数组的第i个位置是空的,说明不用搬迁

else if ((f = tabAt(tab, i)) == null)

//直接把该节点改为pwd,表示迁移完成

advance = casTabAt(tab, i, null, fwd);

//如果该节点在搬迁中了,直接进入下一段区间去搬迁

else if ((fh = f.hash) == MOVED)

advance = true; // already processed

else {

//锁住当前搬迁的节点

synchronized (f) {

if (tabAt(tab, i) == f) {

Node<K,V> ln, hn;

//迁移链表

if (fh >= 0) {

int runBit = fh & n;

Node<K,V> lastRun = f;

for (Node<K,V> p = f.next; p != null; p = p.next) {

int b = p.hash & n;

if (b != runBit) {

runBit = b;

lastRun = p;

}

}

//这里在计算高低位链

if (runBit == 0) {

ln = lastRun;

hn = null;

}

else {

hn = lastRun;

ln = null;

}

for (Node<K,V> p = f; p != lastRun; p = p.next) {

int ph = p.hash; K pk = p.key; V pv = p.val;

if ((ph & n) == 0)

ln = new Node<K,V>(ph, pk, pv, ln);

else

hn = new Node<K,V>(ph, pk, pv, hn);

}

setTabAt(nextTab, i, ln);

setTabAt(nextTab, i + n, hn);

setTabAt(tab, i, fwd);

advance = true;

}

//迁移红黑树

else if (f instanceof TreeBin) {

TreeBin<K,V> t = (TreeBin<K,V>)f;

TreeNode<K,V> lo = null, loTail = null;

TreeNode<K,V> hi = null, hiTail = null;

int lc = 0, hc = 0;

for (Node<K,V> e = t.first; e != null; e = e.next) {

int h = e.hash;

TreeNode<K,V> p = new TreeNode<K,V>

(h, e.key, e.val, null, null);

if ((h & n) == 0) {

if ((p.prev = loTail) == null)

lo = p;

else

loTail.next = p;

loTail = p;

++lc;

}

else {

if ((p.prev = hiTail) == null)

hi = p;

else

hiTail.next = p;

hiTail = p;

++hc;

}

}

ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :

(hc != 0) ? new TreeBin<K,V>(lo) : t;

hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :

(lc != 0) ? new TreeBin<K,V>(hi) : t;

setTabAt(nextTab, i, ln);

setTabAt(nextTab, i + n, hn);

setTabAt(tab, i, fwd);

advance = true;

}

}

}

}

}

}

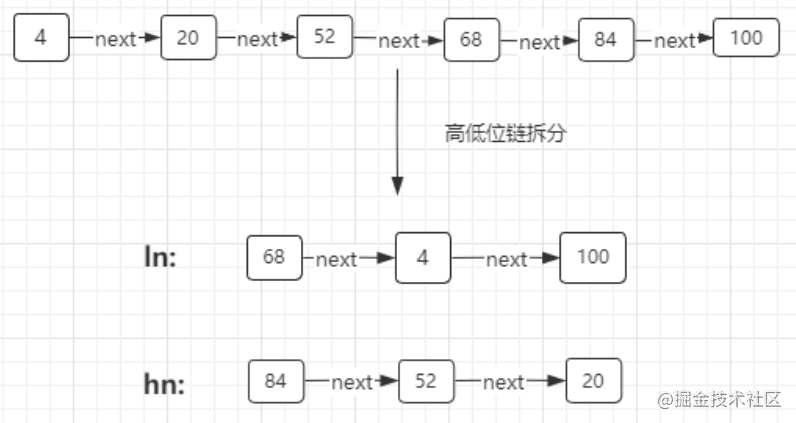

比如有key的hash值为4,20,52,68,84,100,它们在 &(n-1) 即 &15 后的值都为 4,但是在扩容以后 n-1变为了 &31,此时我们再计算一下:

4 & 31 = 4

20 & 31 = 20

52 & 31 = 20

68 & 31 = 4

84 & 31 = 20

100 & 31 = 4

也就是说4,68,100还是留在原来4的位置,20,52,84则需要迁移到新的20的位置,这个就是高低位链迁移。

需要迁移的数据放在高位链,不需要迁移的放在低位链,然后一次性把高位和低位链set到指定的新的数组下标位置。 所以我们最后在源码中看到低位链留在原来的i(4)的位置,高位链则迁移到i(4)+n(16) 20的位置。

setTabAt(nextTab, i, ln);

setTabAt(nextTab, i + n, hn);

helpTransfer()

最后回到一开始putVal方法中,当判断当前节点正常迁移的过程中,则去帮忙迁移。

//MOVED为-1

else if ((fh = f.hash) == MOVED)

tab = helpTransfer(tab, f);

这段代码和tryPresize方法里的一段代码是一样的,最终还是调用transfer方法。

final Node<K,V>[] helpTransfer(Node<K,V>[] tab, Node<K,V> f) {

Node<K,V>[] nextTab; int sc;

if (tab != null && (f instanceof ForwardingNode) &&

(nextTab = ((ForwardingNode<K,V>)f).nextTable) != null) {

int rs = resizeStamp(tab.length);

while (nextTab == nextTable && table == tab &&

(sc = sizeCtl) < 0) {

if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||

sc == rs + MAX_RESIZERS || transferIndex <= 0)

break;

if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1)) {

//最终还是调用transfer方法

transfer(tab, nextTab);

break;

}

}

return nextTab;

}

return table;

}

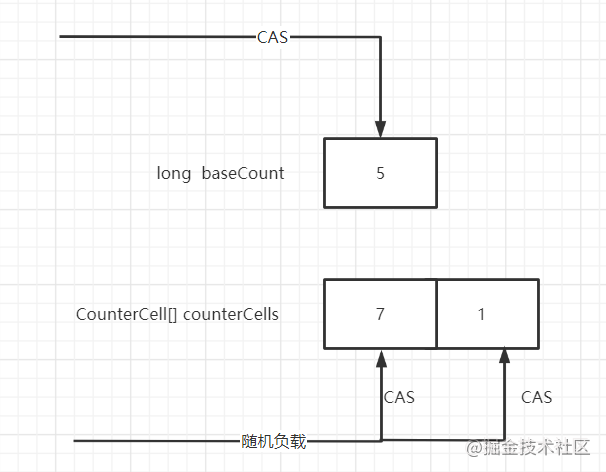

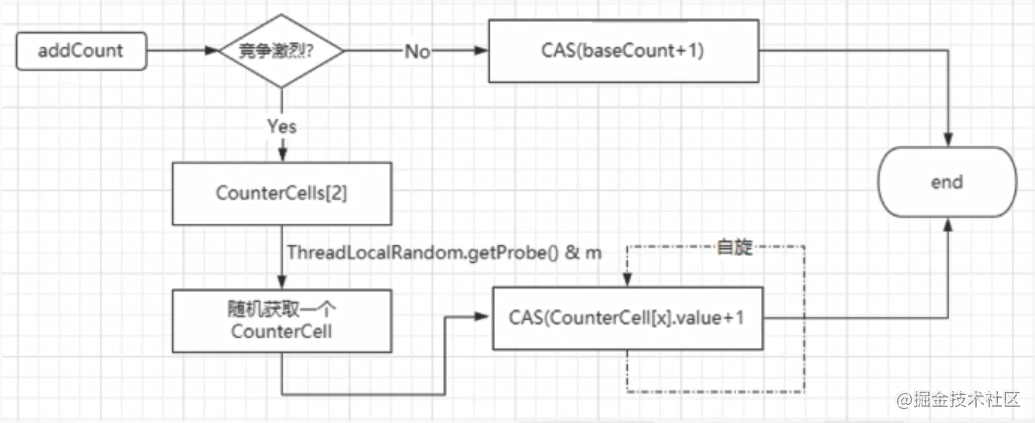

addCount()

putVal方法里面还有个方法addCount就是计数,每put一个元素就+1,最后给size()统计用。我们知道肯定不是简单的+1,因为是非线程安全的,源码里是这样做的,定义baseCount和counterCells两个变量。

private transient volatile long baseCount;

private transient volatile CounterCell[] counterCells;

- 如果竞争不激烈的情况下,直接用cas( baseCount+1)

- 如果竞争激烈的情况下,随机负载数组中的某一个值进行cas+1,这其实就是负载均衡,达到分流效果的思想。

流程图:

private final void addCount(long x, int check) {

CounterCell[] as; long b, s;

if ((as = counterCells) != null ||

//这里在尝试CAS baseCount+1,如果成功了说明没有线程在竞争

!U.compareAndSwapLong(this, BASECOUNT, b = baseCount, s = b + x)) {

CounterCell a; long v; int m;

boolean uncontended = true;

//去尝试随机counterCells数组里面的一个值进行CAS+1

if (as == null || (m = as.length - 1) < 0 ||

(a = as[ThreadLocalRandom.getProbe() & m]) == null ||

//尝试数组随机加还是失败,说明竞争很激烈了

!(uncontended =

U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))) {

//完成CounterCell的初始化以及元素的累加

fullAddCount(x, uncontended);

return;

}

if (check <= 1)

return;

s = sumCount();

}

//是否要扩容

if (check >= 0) {

Node<K,V>[] tab, nt; int n, sc;

//跟tryPresize里面的一段代码一样

while (s >= (long)(sc = sizeCtl) && (tab = table) != null &&

(n = tab.length) < MAXIMUM_CAPACITY) {

int rs = resizeStamp(n);

if (sc < 0) {

if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||

sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||

transferIndex <= 0)

break;

if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))

transfer(tab, nt);

}

else if (U.compareAndSwapInt(this, SIZECTL, sc,

(rs << RESIZE_STAMP_SHIFT) + 2))

transfer(tab, null);

s = sumCount();

}

}

}

fullAddCount()

这个就是在初始化数组,以及对数组里的值进行+1操作。

private final void fullAddCount(long x, boolean wasUncontended) {

int h;

if ((h = ThreadLocalRandom.getProbe()) == 0) {

ThreadLocalRandom.localInit(); // force initialization

h = ThreadLocalRandom.getProbe();

wasUncontended = true;

}

boolean collide = false; // True if last slot nonempty

for (;;) {

CounterCell[] as; CounterCell a; int n; long v;

//不为空,说明数组已经初始化好了

if ((as = counterCells) != null && (n = as.length) > 0) {

//如果某个位置是空的

if ((a = as[(n - 1) & h]) == null) {

if (cellsBusy == 0) { // Try to attach new Cell

//把x的值保存进去

CounterCell r = new CounterCell(x); // Optimistic create

//抢锁成功

if (cellsBusy == 0 &&

U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {

boolean created = false;

try { // Recheck under lock

//针对已经初始化的数组的某个位置,去添加一个CounterCell。

CounterCell[] rs; int m, j;

if ((rs = counterCells) != null &&

(m = rs.length) > 0 &&

rs[j = (m - 1) & h] == null) {

rs[j] = r;

created = true;

}

} finally {

cellsBusy = 0;

}

if (created)

break;

continue; // Slot is now non-empty

}

}

collide = false;

}

else if (!wasUncontended) // CAS already known to fail

wasUncontended = true; // Continue after rehash

//如果已经存在的话,把当前值+x放进去

else if (U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))

break;

else if (counterCells != as || n >= NCPU)

collide = false; // At max size or stale

else if (!collide)

collide = true;

//扩容部分

else if (cellsBusy == 0 &&

U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {

try {

if (counterCells == as) {// Expand table unless stale

//扩容一倍

CounterCell[] rs = new CounterCell[n << 1];

//遍历数组,添加到新的数组中

for (int i = 0; i < n; ++i)

rs[i] = as[i];

counterCells = rs;

}

} finally {

cellsBusy = 0;

}

collide = false;

continue; // Retry with expanded table

}

h = ThreadLocalRandom.advanceProbe(h);

}

//counterCells为空,先进行初始化

else if (cellsBusy == 0 && counterCells == as &&

//cas占位,将CELLSBUSY置为1

U.compareAndSwapInt(this, CELLSBUSY, 0, 1)) {

boolean init = false;

try { // Initialize table

if (counterCells == as) {

//初始化长度为2的数组

CounterCell[] rs = new CounterCell[2];

//把x保存到某个位置

rs[h & 1] = new CounterCell(x);

//复制给成员变量counterCells

counterCells = rs;

init = true;

}

} finally {

//释放锁

cellsBusy = 0;

}

if (init)

break;

}

//最终的情况,直接修改baseCount,保底

else if (U.compareAndSwapLong(this, BASECOUNT, v = baseCount, v + x))

break; // Fall back on using base

}

}

size()

size()方法里汇总数组+baseCount的值来完成数据累加即可。

public int size() {

long n = sumCount();

return ((n < 0L) ? 0 :

(n > (long)Integer.MAX_VALUE) ? Integer.MAX_VALUE :

(int)n);

}

final long sumCount() {

CounterCell[] as = counterCells; CounterCell a;

long sum = baseCount;

if (as != null) {

for (int i = 0; i < as.length; ++i) {

if ((a = as[i]) != null)

sum += a.value;

}

}

return sum;

}

结尾

最后向读到这里的读者说声抱歉,本人水平有限,ConcurrentHashMap有6000多行源码,其中的很多变量,很多函数方法的细节我也不是完全懂,只能理解个大概,如果有写的不对或者没写到的地方,还请多多包涵,留言评论指教!