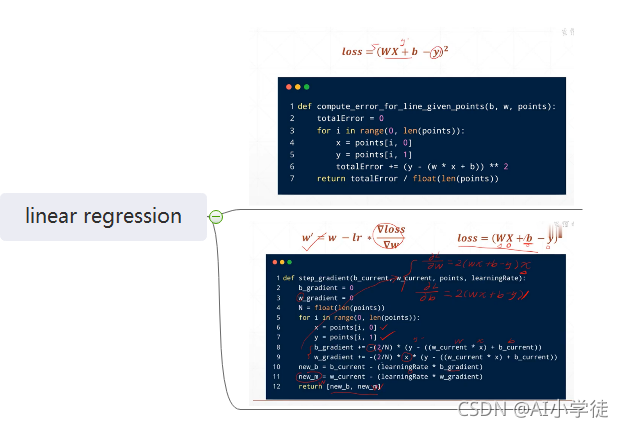

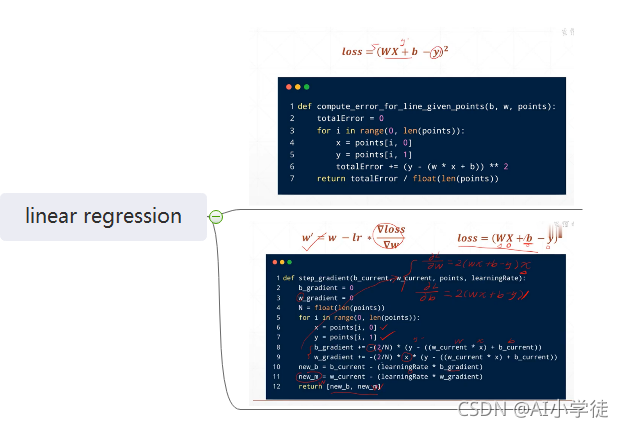

Mind map

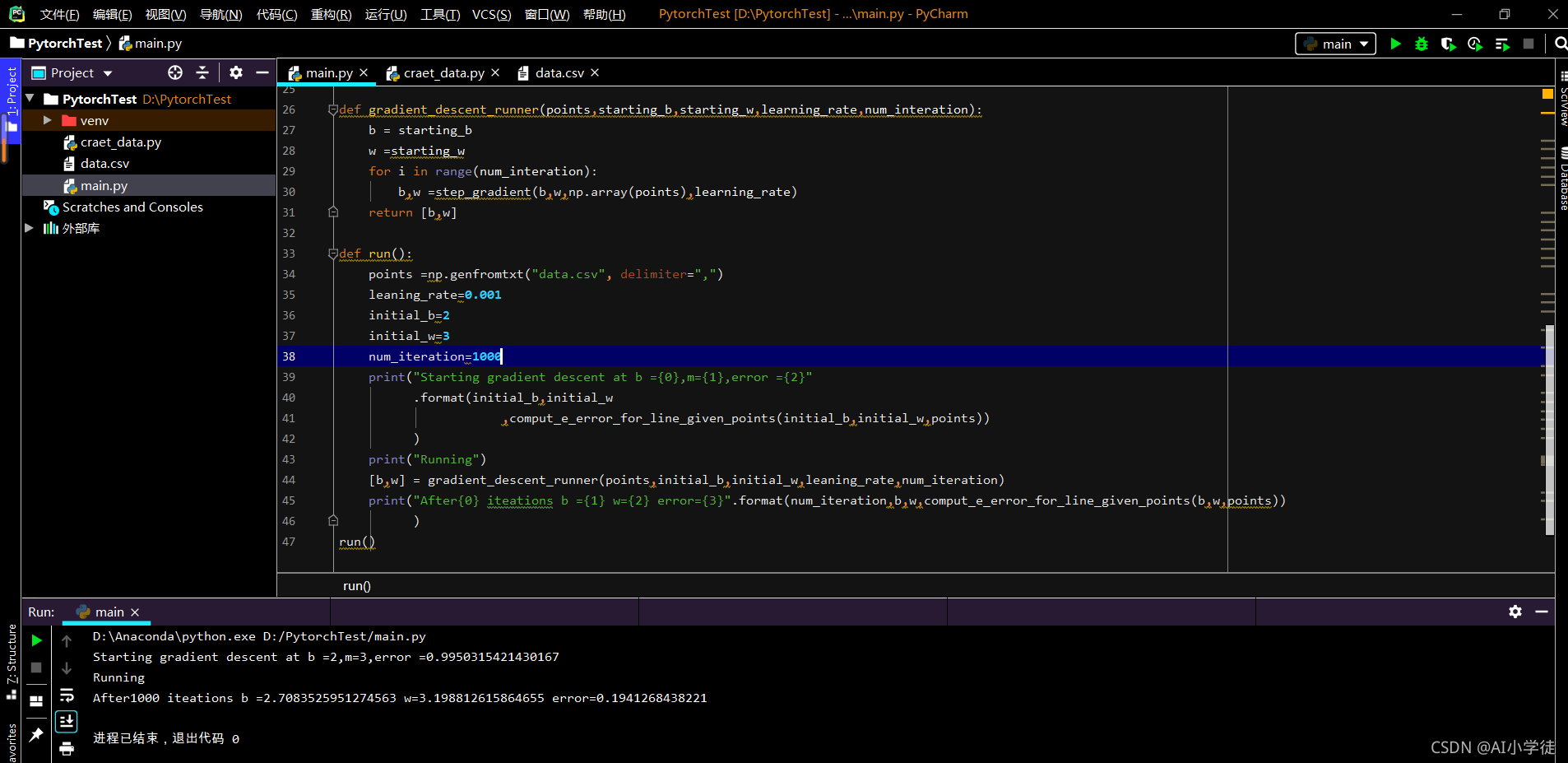

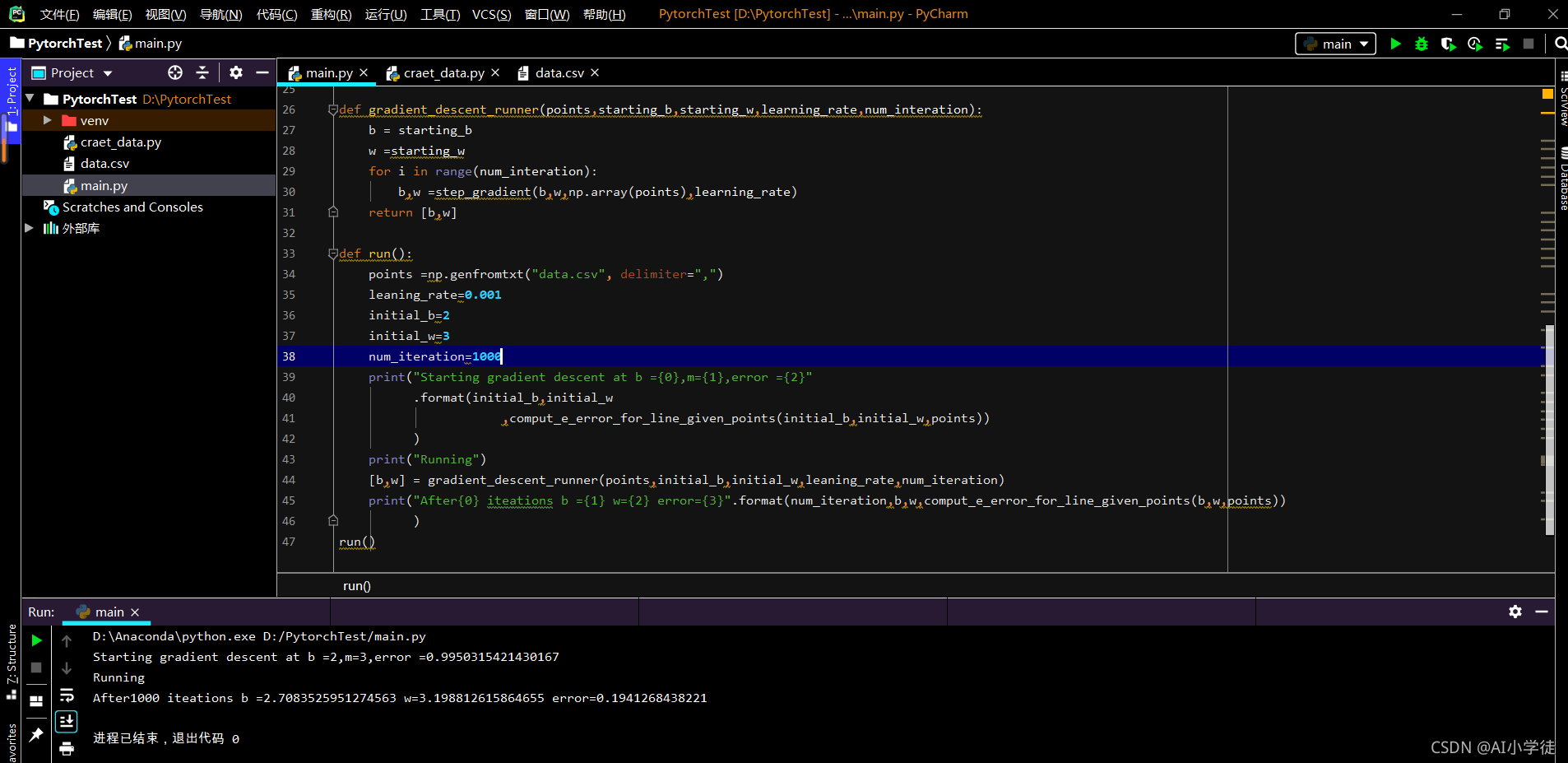

The code in main.py is as follows:

import numpy as np


def compute_error_for_line_given_points(b, w, points):
    """Return the mean squared error of the line y = w * x + b over all points."""
    total_error = 0
    for i in range(len(points)):
        x = points[i, 0]
        y = points[i, 1]
        total_error += (y - (w * x + b)) ** 2
    return total_error / float(len(points))


def step_gradient(b_current, w_current, points, learning_rate):
    """Take one gradient descent step and return the updated [b, w]."""
    b_gradient = 0
    w_gradient = 0
    n = float(len(points))
    for i in range(len(points)):
        x = points[i, 0]
        y = points[i, 1]
        # Partial derivatives of the mean squared error with respect to b and w.
        b_gradient += -(2 / n) * (y - ((w_current * x) + b_current))
        w_gradient += -(2 / n) * x * (y - ((w_current * x) + b_current))
    new_b = b_current - (learning_rate * b_gradient)
    new_w = w_current - (learning_rate * w_gradient)
    return [new_b, new_w]


def gradient_descent_runner(points, starting_b, starting_w, learning_rate, num_iterations):
    """Run num_iterations gradient steps starting from (starting_b, starting_w)."""
    b = starting_b
    w = starting_w
    points = np.array(points)
    for _ in range(num_iterations):
        b, w = step_gradient(b, w, points, learning_rate)
    return [b, w]


def run():
    # data.csv holds one "x,y" pair per line.
    points = np.genfromtxt("data.csv", delimiter=",")
    learning_rate = 0.001
    initial_b = 2
    initial_w = 3
    num_iterations = 10000
    print("Starting gradient descent at b = {0}, w = {1}, error = {2}".format(
        initial_b, initial_w,
        compute_error_for_line_given_points(initial_b, initial_w, points)))
    print("Running...")
    [b, w] = gradient_descent_runner(points, initial_b, initial_w, learning_rate, num_iterations)
    print("After {0} iterations b = {1}, w = {2}, error = {3}".format(
        num_iterations, b, w,
        compute_error_for_line_given_points(b, w, points)))


if __name__ == "__main__":
    run()
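The per-point loop in `step_gradient` can also be written with NumPy array operations. The following is a minimal sketch, not the original author's code: the names `mse`, `step_gradient_vectorized`, and `fit_line` are hypothetical, and the synthetic arrays stand in for `data.csv` under the assumption that the data follows y = 2x + 3 plus uniform noise. It uses the same mean squared error loss, whose gradients are -2 * mean(residual) for b and -2 * mean(x * residual) for w.

```python
import numpy as np


def mse(b, w, x, y):
    """Mean squared error of the line y = w * x + b."""
    return float(np.mean((y - (w * x + b)) ** 2))


def step_gradient_vectorized(b, w, x, y, learning_rate):
    """One gradient descent step using array operations instead of a loop."""
    residual = y - (w * x + b)
    b_gradient = -2.0 * np.mean(residual)
    w_gradient = -2.0 * np.mean(x * residual)
    return b - learning_rate * b_gradient, w - learning_rate * w_gradient


def fit_line(x, y, b=2.0, w=3.0, learning_rate=0.001, num_iterations=10000):
    """Repeat the vectorized step; defaults mirror the values used in run()."""
    for _ in range(num_iterations):
        b, w = step_gradient_vectorized(b, w, x, y, learning_rate)
    return b, w


# Synthetic stand-in for data.csv (assumption): y = 2x + 3 + uniform noise.
rng = np.random.default_rng(0)
x_demo = rng.random(100)
y_demo = 2 * x_demo + 3 + rng.random(100)

error_before = mse(2.0, 3.0, x_demo, y_demo)
b_fit, w_fit = fit_line(x_demo, y_demo)
error_after = mse(b_fit, w_fit, x_demo, y_demo)
print("b = {0}, w = {1}, error = {2}".format(b_fit, w_fit, error_after))
```

Each step performs the same arithmetic as the loop version; only the summation is delegated to NumPy, which tends to be faster on larger datasets.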

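The code used to generate the dataset (data.csv) is as follows. It prints one x,y pair per line, so its output must be redirected to data.csv: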

import numpy as np

for i in range(0, 100):
    xdata = np.random.random()
    random1 = np.random.random()
    # y = 2x + 3 plus uniform noise in [0, 1)
    ydata = 2 * xdata + 3 + random1
    print("{0},{1}".format(xdata, ydata))
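As an alternative to redirecting printed output, the file could be written directly. The sketch below is illustrative only: the function name `write_dataset`, the seed value, and the use of `np.savetxt` are assumptions rather than part of the original script. Because the noise is uniform on [0, 1) with mean 0.5, a line fitted to data generated this way should land near y = 2x + 3.5 rather than y = 2x + 3.

```python
import numpy as np


def write_dataset(path, num_points=100, seed=42):
    """Write num_points rows of "x,y" with y = 2x + 3 + uniform noise to path."""
    rng = np.random.default_rng(seed)  # fixed seed (assumption) for reproducibility
    x = rng.random(num_points)
    noise = rng.random(num_points)
    y = 2 * x + 3 + noise
    data = np.column_stack((x, y))
    np.savetxt(path, data, delimiter=",")
    return data


data = write_dataset("data.csv")
# Read the file back the same way main.py does.
loaded = np.genfromtxt("data.csv", delimiter=",")
print(loaded.shape)
```

Fixing the seed makes the dataset reproducible between runs; drop the `seed` argument's default if fresh random data is wanted each time.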

The results of running the code are shown below:

Sample linear regression dataset (data.csv):

0.7959268131949154,5.383253081048107

0.10792226843451991,3.5672430653843614

0.2236481182771649,4.382010400684081

0.891695496410794,5.455785790798779

0.4107177706568511,3.9167518925643465

0.7098110526762221,5.199094617324766

0.5726718092577726,4.386343890884308

0.850689677164127,4.960650345986238

0.527341989113963,4.418461070582508

0.5407383809937704,4.5627824491847955

0.9393720199081832,5.407068167027157

0.404111321556505,3.903080094784834

0.5876819809625564,4.421394423335027

0.7566599371624856,5.240679612703435

0.0031178329236888347,3.8442504171697847

0.270310921739623,3.983739867944232

0.6643683517996607,4.359459463576226

0.9527310211083514,4.968393331955517

0.2892343760050178,4.040681819521732

0.46474848712503447,4.013226297178308

0.38899395293092753,3.7954249709832015

0.8393131259276586,5.601939985957008

0.10964512586659714,3.94851985635711

0.6261757159186817,4.379742390947595

0.08565667598505655,3.606665516431419

0.2370180363736345,3.9625511283429713

0.7230523363252718,4.7348974176283365

0.7197066759784131,4.678245747524764

0.8806035990816686,5.193817064942353

0.876398017062797,5.600633869952809

0.8933393915717712,5.575496230757278

0.7068823078576177,4.778773075393766

0.709033905181841,4.732462037265902

0.8402420992227093,4.695932293253544

0.10837407805690058,3.2415365443499438

0.5631490491018708,4.373276665217919

0.4472270081739842,4.548709891719379

0.19150799074937952,3.4555041178151438

0.7821204649835846,4.833793474769088

0.5447451854388814,4.424243330219422

0.5536264693840504,4.907500684923544

0.8032002914486217,4.827199696053637

0.4244326395755429,4.540430034046199

0.6571746863360602,4.916846569671098

0.39928751719262146,3.9128596629191783

0.8461790206375631,4.715795724237515

0.48164498728976635,4.0434483299889585

0.21243940642655335,3.9350069589576737

0.5459791966500642,4.178299647642944

0.15591629833189136,4.208862653825564

0.3318545713261687,4.607069147207268

0.31337560872349335,3.681321872351009

0.8746815858444879,5.272427255091438

0.39391686710395657,4.388534391646889

0.4793015019357064,3.967322943039812

0.050359282562026375,3.15624439605456

0.4346404837996358,4.824577504350639

0.5637581740174465,4.498518989232363

0.7234594570524097,4.829171724018326

0.6588940040417601,5.0248055753643115

0.10455070414976741,3.287324564852888

0.8472013204912199,5.220390502713326

0.8993465276185247,5.292857170440293

0.25408619265199506,4.456075856474079

0.33545554644583775,4.135687745216281

0.531801937690468,4.618679650306403

0.6967017410342263,5.159739489522426

0.9323388229203458,5.0692772708558405

0.14683451371051082,3.384713814368769

0.10370090011007094,3.6740992437380076

0.9527298299437502,5.354806096815416

0.09349077824028018,3.38987042788691

0.12945204991905068,3.966301687383356

0.2935058975079232,3.8480254159268306

0.5972685377661336,4.532457919626502

0.5307169141975102,4.826574087837489

0.4265298582030739,4.50333560555219

0.27716945105123814,4.2856318500195645

0.7218085392053041,4.749430370829406

0.3926147186480382,4.027877145111341

0.860373996404969,5.146214100068526

0.9164588262130305,5.57223206392356

0.6491618151006234,4.663168027847816

0.15535218391420036,3.9910332036211984

0.3725747901005698,4.250937515531864

0.4704407827973769,4.699327725407754

0.1165160789572447,3.9365087193959853

0.8392828002905782,5.1504058711641365

0.9305998527731457,5.461888219112742

0.5657952945675231,4.549410660145753

0.8936753318690827,5.633624420799182

0.30145455997523884,3.698892542353196

0.017762394148341465,3.800492967019841

0.4850334935570817,4.95502562398627

0.9161554507745315,5.4506454086330685

0.5105198903372674,4.480026680840481

0.06874387575796892,3.318011713652211

0.8278604344296541,5.264489583300447

0.9003304092956863,5.048744821834357

0.9824348313198714,5.636684504177316
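One way to sanity-check a gradient descent result is to compare it with a closed-form least-squares fit. The sketch below is a hedged illustration, not part of the original post: it parses only the first five rows of the sample above (copied verbatim) and fits them with `np.polyfit`; the helper name `mse` is hypothetical. To check the full dataset, load data.csv with `np.genfromtxt` instead.

```python
import io

import numpy as np

# First five rows of the data.csv sample shown above.
sample_rows = """\
0.7959268131949154,5.383253081048107
0.10792226843451991,3.5672430653843614
0.2236481182771649,4.382010400684081
0.891695496410794,5.455785790798779
0.4107177706568511,3.9167518925643465
"""

sample = np.genfromtxt(io.StringIO(sample_rows), delimiter=",")
x, y = sample[:, 0], sample[:, 1]

# A degree-1 polyfit returns the coefficients as [slope, intercept].
w_ls, b_ls = np.polyfit(x, y, 1)


def mse(b, w):
    """Mean squared error of the line y = w * x + b on the sample rows."""
    return float(np.mean((y - (w * x + b)) ** 2))


print("least squares: b = {0}, w = {1}, error = {2}".format(b_ls, w_ls, mse(b_ls, w_ls)))
print("starting point: b = 2, w = 3, error = {0}".format(mse(2.0, 3.0)))
```

The least-squares line has the lowest mean squared error of any line on the same points, so the error reported by gradient descent should approach this value from above as the number of iterations grows.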
