Java实现DFA算法敏感词过滤。

一. 应用场景

模拟非法词汇自动替换成*字符,且敏感词汇支持动态调整。

效果如下,若配置了敏感词:今天,则当用户在输入:今天,天气真不错。实际应该输出:** **,天气真不错 **

二. 实现思路

- 使用MySQL作为敏感词库

- 使用DFA算法实现文字过滤

Q:什么是DFA?

DFA全称为:Deterministic Finite Automaton,即确定有穷自动机。其特征为:有一个有限状态集合和一些从一个状态通向另一个状态的边,每条边上标记有一个符号,其中一个状态是初态,某些状态是终态。但不同于不确定的有限自动机,DFA中不会有从同一状态出发的两条边标志有相同的符号。

确定:状态以及引起状态转换的事件都是可确定的,不存在“意外”。

有穷:状态以及事件的数量都是可穷举的。

DFA算法模型如下:

state_event_dict = {

"匹": {

"配": {

"算": {

"法": {

"is_end": True

},

"is_end": False

},

"关": {

"键": {

"词": {

"is_end": True

},

"is_end": False

},

"is_end": False

},

"is_end": False

},

"is_end": False

},

"信": {

"息": {

"抽": {

"取": {

"is_end": True

},

"is_end": False

},

"is_end": False

},

"is_end": False

}

}

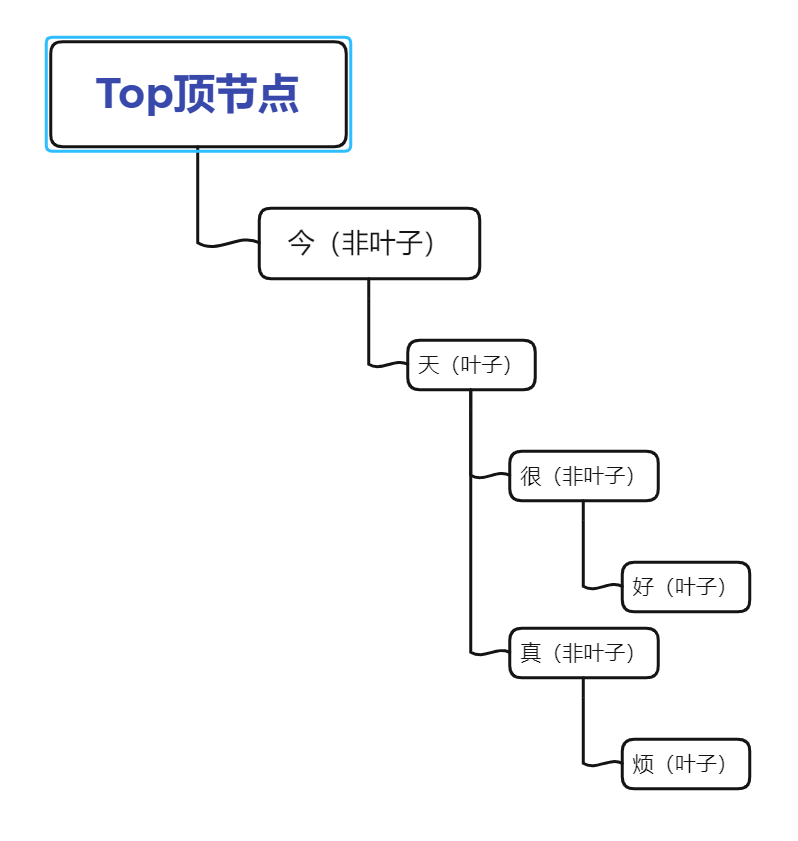

用通俗易懂的话来解释,就是将数据库中的敏感词进行建立树结构,举个例子,数据库的敏感词汇有三个,分别是:今天,今天很好,今天真烦。

建立树结构,并且标记好三个词汇的非叶子节点和叶子节点(即最后一个字符是叶子节点),并且制定好匹配规则,只有碰到叶子节点才算一次过滤:

模拟用户输入以下一句话:

我觉得今天还行。

接下来我们将这句话逐个字拆分并将每一个字代入到上面的树状结构图中。

前面三个字不在敏感词树中直接可以跳过,直到遇到了今这个字,发现匹配上了敏感词树,接下来看树状结构发现只有下一个字是天才能被捕获,继续往下走果然发现天这个字也匹配到了;

再接着走发现在树结构中天这个字的下一个字只有匹配到很或者真才能继续匹配,而用户输入的下一个字是还,第一步判断当前已经走到了叶子节点,故先将今天置为敏感词,然后将还这个字从Top顶节点中重新继续流转,发现无法匹配。

过滤结束,且当前的节点是叶子节点,故这句话仅仅被敏感词过滤了今天这两个字,最终的过滤结果应该是:

我觉得**还行

要注意的是只有完整的碰到过一次叶子节点才算一次过滤,且一句话可以被多次过滤。以上就是针对DFA算法的简单说明。

三. 源码实现

理论知识加身,接下来就该实干了。Talk is cheap, show me the code:

import java.util.HashMap;

import java.util.Map;

/**

* @author: Vainycos

* @description 敏感字树生成器

* @date: 2021/12/4 12:45

*/

public class TreeGenerator {

/**

* 将指定的词分成字构建到一棵树中。

*

* @param tree

* 字树

* @param sensitiveWord

* 敏感词词

* @return

*/

public static void addWord2Tree(Map<String, Map> tree, String sensitiveWord) {

addWord2Tree(tree, sensitiveWord, 0);

}

private static Map<String, Map> addWord2Tree(Map<String, Map> tree,

String word, int index) {

if (index == word.length()) {

tree.put(Finder.TREE_END_KEY, generateWordMap(word));

return tree;

}

String next = word.substring(index, index + 1);

Map<String, Map> subTree = tree.get(next);

if (subTree == null) {

subTree = new HashMap<String, Map>();

}

tree.put(next, addWord2Tree(subTree, word, index + 1));

return tree;

}

private static Map<String, Object> generateWordMap(String word) {

Map<String, Object> wordMap = new HashMap<String, Object>();

wordMap.put(Finder.WORD_VALUE, word);

wordMap.put(Finder.WORD_LENGTH, word.length());

return wordMap;

}

}

import lombok.extern.slf4j.Slf4j;

import java.util.HashSet;

import java.util.Iterator;

import java.util.Map;

import java.util.Set;

import java.util.concurrent.ConcurrentHashMap;

/**

*

* 敏感词过滤

*

*/

@Slf4j

@SuppressWarnings({ "rawtypes", "unchecked" })

public class Finder {

// 敏感词

private static Set<String> WORDS = new HashSet<String>();

// 由敏感词生成的字树

private static Map<String, Map> TREE = new ConcurrentHashMap<String, Map>();

// 默认敏感词分割符

public static final String DEFAULT_SEPARATOR = ",";

/* 在树当中标志一个词的结束 */

public static final String TREE_END_KEY = "^";

// 敏感词value标记

public static final String WORD_VALUE = "v";

// 敏感词长度标记

public static final String WORD_LENGTH = "l";

// 默认替换符

public static final char DEFAULT_REPLACEMENT = '*';

// 默认起始标记

public static final String DEFAULT_START_TAG = "<font color=\"red\">";

// 默认结束标记

public static final String DEFAULT_END_TAG = "</font>";

public Finder(String[] words) {

addSensitiveWords(words);

}

/**

* 删除所有敏感词

*/

public static void clearSensitiveWords() {

WORDS.clear();

TREE.clear();

}

/**

*

* 添加敏感词

*

* @author hymer

* @param words

*/

public static void addSensitiveWords(String[] words) {

if (words == null || words.length == 0) {

return;

}

check(words);

addWords(words);

}

/**

*

* 添加敏感词

*

* @author hymer

* @param words

* @param separator

*/

public static void addSensitiveWords(String words, String separator) {

if (words != null && !"".equals(words.trim())) {

check(words);

String[] sensitiveWords = words.split(separator);

addWords(sensitiveWords);

}

}

/**

*

* 添加敏感词,默认使用,分割

*

* @author hymer

* @param words

*/

public static void addSensitiveWords(String words) {

check(words);

addSensitiveWords(words, DEFAULT_SEPARATOR);

}

/**

* 删除敏感词

*

* @param words

*/

public static void removeSensitiveWords(String words) {

check(words);

removeSensitiveWords(words, DEFAULT_SEPARATOR);

}

/**

* 删除敏感词

*

* @param words

*/

public static void removeSensitiveWords(String words, String separator) {

if (words != null && !"".equals(words.trim())) {

check(words);

String[] sensitiveWords = words.split(separator);

removeWords(sensitiveWords);

}

}

/**

* 删除敏感词

*

* @param words

*/

public static void removeSensitiveWords(String[] words) {

if (words == null || words.length == 0) {

return;

}

check(words);

removeWords(words);

}

/**

*

* 找出文本中的敏感词

*

* @author hymer

* @param text

* @return

*/

public static Set<String> find(String text) {

return new TextAnalysis().analysis(TREE, text);

}

/**

* 替换文本中的敏感词

*

* @param text

* 含敏感词的文本

* @return

*/

public static String replace(String text) {

return new TextAnalysis().replace(TREE, text, DEFAULT_REPLACEMENT);

}

/**

* 替换文本中的敏感词

*

* @param text

* 含敏感词的文本

* @param replacement

* 替换字符

* @return

*/

public static String replace(String text, Character replacement) {

return new TextAnalysis().replace(TREE, text, replacement);

}

/**

*

* 过滤文本,并标记出敏感词,默认使用HTML中红色font标记

*

* @author hymer

* @param text

* @return

*/

public static String filter(String text) {

return new TextAnalysis().mark(TREE, text, DEFAULT_START_TAG, DEFAULT_END_TAG);

}

/**

*

* 过滤文本,并标记出敏感词

*

* @author hymer

* @param text

* @param startTag

* @param endTag

* @return

*/

public static String filter(String text, String startTag, String endTag) {

return new TextAnalysis().mark(TREE, text, startTag, endTag);

}

private static void check(String... words) {

for (String word : words) {

if (word != null && word.contains(TREE_END_KEY)) {

throw new RuntimeException("包含非法字符:" + TREE_END_KEY);

}

}

}

private static void addWords(String... sensitiveWords) {

for (String word : sensitiveWords) {

if (word != null && !word.trim().equals("")) {

word = word.trim();

int len = word.length();

if (len > 1024) {

throw new RuntimeException("敏感词太长[最长1024]!");

}

// 添加该词,如果未重复,则添加到tree

if (WORDS.add(word)) {

TreeGenerator.addWord2Tree(TREE, word);

}

}

}

log.info("当前敏感词数量:", WORDS.size());

}

private static void removeWords(String... sensitiveWords) {

for (String word : sensitiveWords) {

if (word != null && !word.trim().equals("")) {

word = word.trim();

WORDS.remove(word);

}

}

TREE.clear();

addWords(WORDS.toArray(new String[WORDS.size()]));

}

@SuppressWarnings("unused")

private static void printTree(Map<String, Map> wordTree, int level) {

if (wordTree == null || wordTree.isEmpty()) {

return;

}

Iterator<String> it = wordTree.keySet().iterator();

while (it.hasNext()) {

String next = it.next();

Object tmp = wordTree.get(next);

if (tmp instanceof Map) {

printTree((Map) tmp, level + 1);

}

}

}

}

以上就是关键代码,接下来我们测试一下效果:

public static void main(String[] args) {

String content = "我觉得今天还行。";

// 定义敏感词库 后续可以对接数据库

String[] sensitiveWords = {"今天", "今天很好", "今天真烦"};

// 设置敏感词库

Finder.addSensitiveWords(sensitiveWords);

// 敏感词过滤替换字符为*

String replace = Finder.replace(content, '*');

// 输出结果:我觉得**还行。

System.out.println(replace);

}

发现效果还不错。至此,一个简单的DFA算法实现敏感词过滤功能就实现了。

参考资料: