在调试蓝牙钥匙定位中,由于RSSI信号的不稳定性,蓝牙定位准确率不高,最近也在研究机器学习的算法,用决策树算法模型来测试验证蓝牙RSSI定位的精确度

1、数据

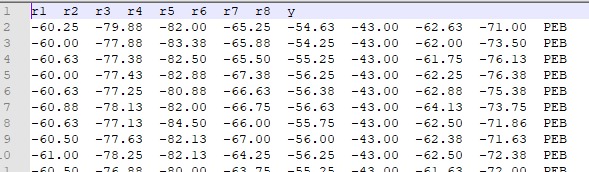

汽车上布置了8个基站,基站编号从R1到R8,y对应的采集数据的位置。

2、读取数据

#读取了两份文件的数据

def loaddata():

feature_name = ['r1','r2','r3','r4','r5','r6','r7','r8']

dataSet = []

fr = open('data/ble_rssi1.txt')

for line in fr.readlines():

lineArr = line.strip().split('\t')

inList = []

n = len(lineArr)

#listLine = [float(e) for e in lineArr[] ]

for i in range(n - 1):

inList.append(float(lineArr[i]))

inList.append(lineArr[n-1])

dataSet.append(inList)

fr = open('data/ble_rssi_android1.txt')

for line in fr.readlines():

lineArr = line.strip().split('\t')

inList = []

n = len(lineArr)

#listLine = [float(e) for e in lineArr[] ]

for i in range(n - 1):

inList.append(float(lineArr[i]))

inList.append(lineArr[n-1])

dataSet.append(inList)

return dataSet,feature_name

3 信息增益算法

3.1 计算信息熵

#计算数据集的熵

def entropy(dataSet):

#数据集条数

m = len(dataSet)

#标签不同类别的计数字典

labelCounts = {}

#循环数据集

for featVec in dataSet:

#取标签 最后一列

currentLabel = featVec[-1]

#print('currentLabel =' ,currentLabel)

#如果字典中不存在则值为0,否则值加1 (key对应的数据个数)

if currentLabel not in labelCounts.keys(): labelCounts[currentLabel] = 0

labelCounts[currentLabel] += 1

#保存最终的熵值

e = 0.0

#根据公式计算熵

for key in labelCounts:

prob = float(labelCounts[key])/m

e -= prob * log(prob,2)

return e

3.2 数据划分

#划分数据集

def splitDataSet(dataSet, axis, value):

#按轴和值划分好的数据集

retDataSet1 = []

retDataSet2 = []

#循环数据集

for featVec in dataSet:

#当前数据按轴取出的数据符合传入的value值

# print(featVec);

reducedFeatVec = featVec[:axis] #留下axis前的列

reducedFeatVec.extend(featVec[axis:])#连接上从axis开始取到最后列

#print('featVec[axis] = ' ,featVec[axis] , ' value = ' ,value)

if float(featVec[axis]) <= value:#如果符合则留下

retDataSet1.append(reducedFeatVec)

else:

retDataSet2.append(reducedFeatVec)

#print('retDataSet1 ' ,retDataSet1 , ' retDataSet2' ,retDataSet2)

return retDataSet1,retDataSet2

3.3 数据排序和离散化

#冒泡排序,降序排列

def bubbleSort(arr):

for i in range(1, len(arr)):

for j in range(0, len(arr)-i):

if arr[j] > arr[j+1]:

arr[j], arr[j + 1] = arr[j + 1], arr[j]

return arr

#数据离散化处理

def discreteFeat(featList):

featList = list(featList)

featList = bubbleSort(featList)

featVals = []

for i in range(len(featList) - 1):

value = (featList[i] + featList[i+1])/2;

featVals.append(value)

return featVals

3.4 选择最好特征和特征值

#选择最好的特征

def chooseBestFeature(dataSet):

#特征数

n = len(dataSet[0]) - 1

#计数整个数据集的熵

baseEntropy = entropy(dataSet)

#定义两个变量,最好的信息增益,最好特征

bestInfoGain = 0.0; bestFeature = -1

bestFeatureValue = 0.0;

#遍历每个特征

for i in range(n):

#获取当前特征的所有值 i = 0 即取特征第一列数据 即r1

featList = [example[i] for example in dataSet]

featList = set(featList)

# print('featList = ' ,featList)

featCount = len(featList)

if featCount > 1:

#数据离散化处理

featVals = discreteFeat(featList)

else:

featVals = featList

#当前特征的可能取值

uniqueVals = set(featVals)

#最小条件熵

minEntropy = 0.0

#定义一临时变量保存当前的条件熵

newEntropy = 0.0

#特征连续值T对应最好的划分值Ta

bestValue = 0.0

#循环每一个可能的取值

for value in uniqueVals:

#按连续值二分法进行数据集的划分

subDataSet1,subDataSet2 = splitDataSet(dataSet, i, value)

#计算条件熵(2行代码)

prob1 = len(subDataSet1)/float(len(dataSet))

prob2 = len(subDataSet2)/float(len(dataSet))

#二分类划分的条件熵,选择最小条件熵(即信息增益最大)

newEntropy = prob1 * entropy(subDataSet1) + prob2* entropy(subDataSet2)

if minEntropy == 0:

minEntropy = newEntropy

bestValue = value

if newEntropy < minEntropy:

minEntropy = newEntropy

bestValue = value

#计算信息增益

infoGain = baseEntropy - minEntropy

#保存当前最大的信息增益及对应的特征

if (infoGain > bestInfoGain):

bestInfoGain = infoGain

bestFeature = i

bestFeatureValue = bestValue

#返回最优特征

return bestFeature,bestFeatureValue,

3.5 生成决策树

global i

i = 0

#递归训练一棵树

def trainTree(dataSet,feature_name):

global i

i = i + 1

#print('dataSet ' ,i)

#print(dataSet)

#将dataSet中的数据先按行依次放入example中,然后取得example中的example[i]元素,放入列表featList中

classList = [example[-1] for example in dataSet]

#print('classList ' , i)

#print(classList)

#print('classList[0] = ' ,classList[0], ' dataSet[0] = ' ,dataSet[0])

if len(classList) <= 0:

return 'Unknow'

#所有元素的类别一样

if classList.count(classList[0]) == len(classList):

return classList[0]#所有类别都一致

if len(dataSet[0]) == 1: #数据集中只剩下一个特征

return classVote(classList)

if i > 100:

i = 0

return classVote(classList)

bestFeat,bestFeatValue = chooseBestFeature(dataSet)

bestFeatName = feature_name[bestFeat]

#print('i = ' , i , ' bestFeat = ' ,bestFeat , ' bestFeatName = ' , bestFeatName ,' bestFeatValue = ' ,bestFeatValue)

myTree = {bestFeatName:{}}

#del(labels[bestFeat])

sub_feature_name = feature_name[:];

splitDataSet1 ,splitDataSet2 = splitDataSet(dataSet, bestFeat, bestFeatValue)

#print('splitDataSet1 = ' ,splitDataSet1)

#print('splitDataSet2 = ' ,splitDataSet2)

value_greate_equal = str(bestFeatValue) + '-'

value_less = str(bestFeatValue) + '+'

#print(' mTree ' , myTree)

myTree[bestFeatName][value_greate_equal] = trainTree(splitDataSet1,sub_feature_name)

myTree[bestFeatName][value_less] = trainTree(splitDataSet2,sub_feature_name)

return myTree

3.6 训练

myDat,feature_name = loaddata()

myTree = trainTree(myDat,feature_name)

print('myTree = ' ,myTree)

4 基尼指数算法

4.1 基尼指数

#计算基尼指数Gini(D) 1- sum{pow[(Ck/D),2]}

def giniIndex(dataSet):

#数据集条数

m = len(dataSet)

#标签不同类别的计数字典

labelCounts = {}

#循环数据集

for featVec in dataSet:

#取标签 最后一列

currentLabel = featVec[-1]

#print('currentLabel =' ,currentLabel)

#如果字典中不存在则值为0,否则值加1 (key对应的数据个数)

if currentLabel not in labelCounts.keys(): labelCounts[currentLabel] = 0

labelCounts[currentLabel] += 1

#保存最终的熵值

e = 0.0

#根据公式计算熵

for key in labelCounts:

prob = float(labelCounts[key])/m

e += pow(prob,2)

e = 1 - e

return e

4.2 根据基尼指数值选择最优特征

#根据gini 选择最好的特征

def chooseBestFeatureGini(dataSet):

#特征数

n = len(dataSet[0]) - 1

#定义两个变量,最好的基尼系数,最好特征

bestGini = -1; bestFeature = -1

bestFeatureValue = 0.0;

#遍历每个特征

for i in range(n):

#获取当前特征的所有值 i = 0 即取特征第一列数据 即r1

featList = [example[i] for example in dataSet]

featList = set(featList)

featCount = len(featList)

if featCount > 1:

#数据离散化处理

featVals = discreteFeat(featList)

else:

featVals = featList

#当前特征的可能取值

uniqueVals = set(featVals)

#print('i = ' ,i , ' featList = ' , featList , ' featVals = ' , featVals , ' dataSet =' ,dataSet)

#最小条件熵

minGiniDA = -1

#给定特征A时的基尼指数

giniDA = 0.0

#特征连续值T对应最好的划分值Ta

bestValue = 0.0

#循环每一个可能的取值

for value in uniqueVals:

#按连续值二分法进行数据集的划分

subDataSet1,subDataSet2 = splitDataSet(dataSet, i, value)

#计算数据集D1,D2占比

prob1 = len(subDataSet1)/float(len(dataSet))

prob2 = len(subDataSet2)/float(len(dataSet))

#给定特征A条件下的基尼指数)

giniDA = prob1 * giniIndex(subDataSet1) + prob2* giniIndex(subDataSet2)

#print('value = ' ,value , ' i = ' ,i)

#print(' subDataSet1 =' ,subDataSet1 , ' giniIndex(subDataSet1) = ' ,giniIndex(subDataSet1))

#print(' subDataSet2 =' ,subDataSet2 , ' giniIndex(subDataSet2) = ' ,giniIndex(subDataSet2))

#选择基尼系数最小的特征和值进行划分

if minGiniDA == -1:

minGiniDA = giniDA

bestValue = value

if giniDA < minGiniDA:

minGiniDA = giniDA

bestValue = value

#选择最小基尼指数,保存当前最小的基尼指数特征和对应的特征值

if bestGini == -1:

bestGini = minGiniDA

bestFeature = i

bestFeatureValue = bestValue

if minGiniDA < bestGini:

bestGini = minGiniDA

bestFeature = i

bestFeatureValue = bestValue

#print('bestGini = ' ,bestGini , ' minGiniDA = ' ,minGiniDA , ' bestFeatureValue' ,bestFeatureValue)

#返回最优特征

return bestFeature,bestFeatureValue,

4.3 生成树

#递归训练一棵树

def trainTree(dataSet,feature_name):

global i

i = i + 1

#print('dataSet ' ,i)

#print(dataSet)

#将dataSet中的数据先按行依次放入example中,然后取得example中的example[i]元素,放入列表featList中

classList = [example[-1] for example in dataSet]

#print('classList ' , i)

#print(classList)

#print('classList[0] = ' ,classList[0], ' dataSet[0] = ' ,dataSet[0])

if len(classList) <= 0:

return 'Unknow'

#所有元素的类别一样

if classList.count(classList[0]) == len(classList):

return classList[0]#所有类别都一致

if len(dataSet[0]) == 1: #数据集中只剩下一个特征

return classVote(classList)

if i > 100:

i = 0

return classVote(classList)

bestFeat,bestFeatValue = chooseBestFeatureGini(dataSet)

bestFeatName = feature_name[bestFeat]

#print('i = ' , i , ' bestFeat = ' ,bestFeat , ' bestFeatName = ' , bestFeatName ,' bestFeatValue = ' ,bestFeatValue)

myTree = {bestFeatName:{}}

# del(labels[bestFeat])

sub_feature_name = feature_name[:];

splitDataSet1 ,splitDataSet2 = splitDataSet(dataSet, bestFeat, bestFeatValue)

#print('splitDataSet1 = ' ,splitDataSet1)

#print('splitDataSet2 = ' ,splitDataSet2)

value_greate_equal = str(bestFeatValue) + '-'

value_less = str(bestFeatValue) + '+'

#print(' mTree ' , myTree)

myTree[bestFeatName][value_greate_equal] = trainTree(splitDataSet1,sub_feature_name)

myTree[bestFeatName][value_less] = trainTree(splitDataSet2,sub_feature_name)

return myTree

5 预测

#预测

def predict(inputTree,featLabels,testVec):

firstStr = list(inputTree.keys())[0]

secondDict = inputTree[firstStr]

featIndex = featLabels.index(firstStr)

key = testVec[featIndex]

key_values = secondDict.keys()

key_value1 = list(key_values)[0]

key_value2 = list(key_values)[1]

value = str(key_value1)

value = value[0:len(value) - 1]

f_value = float(value)

if(key <= f_value):

key = key_value1

else:

key = key_value2

valueOfFeat = secondDict[key]

if isinstance(valueOfFeat, dict):

classLabel = predict(valueOfFeat, featLabels, testVec)

else: classLabel = valueOfFeat

return classLabel

6 后剪枝

#定义一个常用函数 用来求numpy array中数值等于某值的元素数量

def equalNums(x ,y):

#定义一字典,记录每个标签对应的个数

classCount = 0

#循环计数

for vote in x:

if vote == y:

classCount += 1

return classCount

#创建预剪枝决策树

#names feature_name

global j

j = 0

def createTreePrePruning(dataSet,feature_name):

global j

j = j + 1

classList = [example[-1] for example in dataSet]

if len(classList) <= 0:

return 'Unknow'

#所有元素的类别一样

elif classList.count(classList[0]) == len(classList):

return classList[0]#所有类别都一致

elif len(dataSet[0]) == 1: #数据集中只剩下一个特征

return classVote(classList)

elif j > 100:

j = 0

return classVote(classList)

bestFeat,bestFeatValue = chooseBestFeatureGini(dataSet)

bestFeatName = feature_name[bestFeat]

# 根据最优特征进行分割

sub_feature_name = feature_name[:];

splitDataSet1 ,splitDataSet2 = splitDataSet(dataSet, bestFeat, bestFeatValue)

#print('splitDataSet1 = ' ,splitDataSet1)

#print('splitDataSet2 = ' ,splitDataSet2)

# 预剪枝评估

# 划分前的分类标签的精度

labelTrainLabelPre = classVote(classList)

labelTrainRatioPre = equalNums(classList, labelTrainLabelPre) / len(classList)

#划分后 左后叶子结点的精度

classList1 = [example[-1] for example in splitDataSet1]

classList2 = [example[-1] for example in splitDataSet2]

labelTrainLabelPost1 = classVote(classList1)

labelTrainRatioPost1 = equalNums(classList1, labelTrainLabelPost1) / len(classList1)

labelTrainLabelPost2 = classVote(classList2)

labelTrainRatioPost2 = equalNums(classList2, labelTrainLabelPost2) / len(classList2)

labelTrainRatioPost = labelTrainRatioPost1 + labelTrainRatioPost2;

value_greate_equal = str(bestFeatValue) + '-'

value_less = str(bestFeatValue) + '+'

if dataSet is None:

return labelTrainLabelPre

#如果划分后的精度相比划分前的精度下降, 则直接作为叶子节点返回

elif labelTrainRatioPost < labelTrainRatioPre:

return labelTrainLabelPre

else :

#根据选取的特征名称创建树节点

decisionTree = {bestFeatName: {}}

#对最优特征的每个特征值所分的数据子集进行计算

decisionTree[bestFeatName][value_greate_equal] = createTreePrePruning(splitDataSet1,sub_feature_name)

decisionTree[bestFeatName][value_less] = createTreePrePruning(splitDataSet2,sub_feature_name)

return decisionTree

7、预测测试

xgTreePrePruning = createTreePrePruning(myDat,feature_name)

print('xgTreePrePruning = ' ,xgTreePrePruning)

#测试,计算预测准备度

from sklearn import model_selection

X_train, X_test, y_train, y_test = model_selection.train_test_split(X, y, test_size=0.3, random_state=1)

y_hat = []

n = X_test.shape[0] - 1

X_test = np.array(X_test)

y_test = np.array(y_test)

y_pre = []

predict_cnt = 0;

for i in range(n):

#y_predet = predict(myTree,feature_name,X_test[i])

y_predet = predict(xgTreePrePruning,feature_name,X_test[i])

y_pre.append(y_predet)

#print('i = ' ,i ,'y_test[i] = ' ,y_test[i] , ' y_predet =' ,y_predet)

if (str(y_predet) == str(y_test[i])):

predict_cnt = predict_cnt + 1

rate = predict_cnt/n

print('rate = ' ,rate)

参考:https://blog.csdn.net/ylhlly/article/details/93213633