Background:

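When 《深度学习推荐系统》 (*Deep Learning Recommender Systems*) introduces this part, the section is titled "Revisiting the Matrix Factorization Model from a Deep Learning Perspective". I find that title extremely apt, hahaha...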

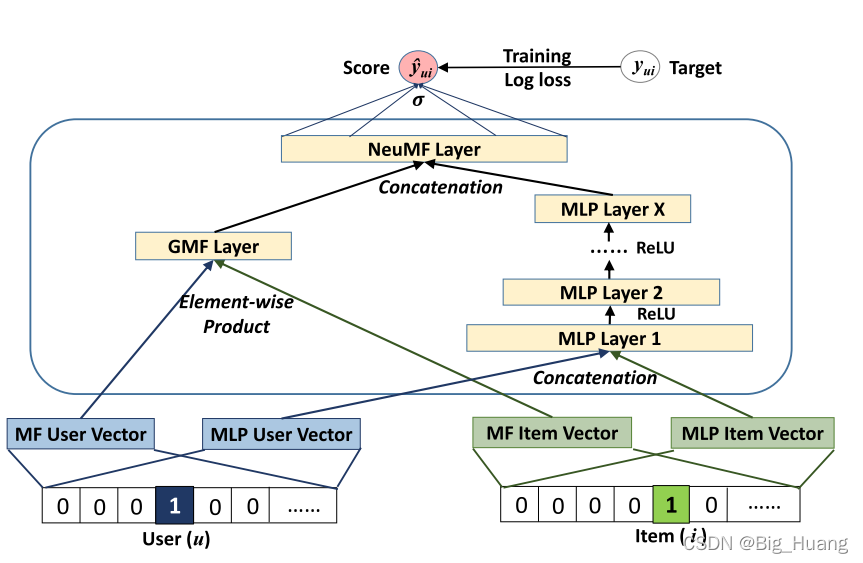

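LFM (latent factor model), one of the ways to implement CF (collaborative filtering), learns latent vectors that represent user features and item features from the user-item interaction matrix, and then determines the relationship between a user and an item (roughly, their similarity) through an inner product. There are two main parts: first, solving for the latent vectors (that is, the feature vectors describing users and items); second, computing the relationship between users and items. LFM prescribes a fairly fixed method for each part, but neither part is an explicit calculation in itself; both are ways of representing features, so each can naturally be replaced by other, similar methods.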
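The LFM scoring step can be sketched in a few lines of NumPy. This is a minimal illustration that assumes the user matrix `P` and item matrix `Q` have already been learned. Here they are random placeholders, and the sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
num_users, num_items, k = 4, 6, 3   # toy sizes; k is the latent dimension

# Placeholder latent matrices: row u of P is user u's vector, row i of Q is item i's vector.
P = rng.normal(size=(num_users, k))
Q = rng.normal(size=(num_items, k))

def lfm_score(u, i):
    """Predicted preference of user u for item i: the inner product p_u . q_i."""
    return float(P[u] @ Q[i])

# All user-item scores at once: (num_users, k) @ (k, num_items) -> (num_users, num_items)
score_matrix = P @ Q.T
print(score_matrix.shape)                                 # (4, 6)
print(np.isclose(lfm_score(2, 5), score_matrix[2, 5]))    # True
```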

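Take solving for the latent vectors, for example. Embeddings are very popular in NLP: an object is represented by a low-dimensional dense vector, and distances between embedding vectors can even capture some semantic information (for instance, words with the same part of speech lie closer together). So can users and items be encoded as embeddings like these? With a large amount of user-item preference data as input, the embeddings could learn to represent users and items with different attributes. Similar users would have nearby feature vectors, and the distance between a user and an item would reflect how much the user likes it. This is exactly what NeuralCF does here.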
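An embedding is a learned low-dimensional vector per ID, and that idea can be checked directly. Multiplying a one-hot ID vector by an embedding matrix selects one row, which is what an embedding layer computes by plain indexing. Here is a minimal NumPy sketch with a random placeholder matrix `W`:

```python
import numpy as np

rng = np.random.default_rng(0)
num_users, embed_dim = 5, 3

# Embedding table: one learnable row per user id (random placeholders here).
W = rng.normal(size=(num_users, embed_dim))

user_id = 3
one_hot = np.zeros(num_users)
one_hot[user_id] = 1.0

# Multiplying a one-hot vector by W selects exactly row `user_id` ...
via_matmul = one_hot @ W
# ... which is what an embedding layer computes directly by indexing.
via_lookup = W[user_id]

print(np.allclose(via_matmul, via_lookup))  # True
```

This is also why the data-processing code re-numbers users and items from 0: the ID is used directly as a row index into the table.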

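Or take the way the user-item similarity is computed. LFM only takes the inner product of the current user's and item's features. Could it be replaced with another operation, such as the element-wise product? Or, since a neural network can in theory approximate any function, why not build a network that crosses the features and produces the final similarity score? NeuralCF does this too.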
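Below is a minimal NumPy sketch of the two alternatives. It uses random placeholder vectors and weights, not trained values.

- GMF replaces the inner product with an element-wise product followed by a weight vector `h`. With `h` set to all ones, it reduces exactly to the inner product.
- The MLP branch concatenates the two vectors and lets a small ReLU network cross them. The hidden size of 8 is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
k = 4
p_u = rng.normal(size=k)   # user latent vector (placeholder)
q_i = rng.normal(size=k)   # item latent vector (placeholder)

# LFM / MF: inner product.
mf_score = p_u @ q_i

# GMF: element-wise product followed by a weight vector h (learnable in the real model).
# With h fixed to all ones, GMF reduces exactly to the inner product.
h = np.ones(k)
gmf_score = h @ (p_u * q_i)
print(np.isclose(mf_score, gmf_score))  # True

# MLP branch: concatenate the two vectors and let a small network cross them.
# W1, b1, w2 are random placeholders standing in for trained weights.
x = np.concatenate([p_u, q_i])            # shape (2k,)
W1, b1 = rng.normal(size=(8, 2 * k)), np.zeros(8)
w2 = rng.normal(size=8)
hidden = np.maximum(0.0, W1 @ x + b1)     # ReLU
mlp_score = w2 @ hidden

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

prob = sigmoid(mlp_score)                 # squashed into a preference probability
```

In the model code, `self.nearcf` is a `Linear` layer over the concatenated GMF and MLP vectors, and it plays a role similar to `h`.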

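One thing to note: the model's only inputs are user_id and item_id. At first I found this hard to understand. How can a model generalize from nothing more than two IDs and a binary label saying whether the user liked the item? But think about it: each user_id is associated with many item_ids, and conversely each item_id is associated with many user_ids. So these pairs already encode the users' preferences for items (which is exactly the idea behind UserCF and ItemCF).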
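Here is a toy illustration, not part of NeuralCF itself, of why bare (user_id, item_id) pairs already carry a preference signal. Users who interacted with the same items come out as similar, which is the intuition behind UserCF. Jaccard overlap is used only as a simple, assumed similarity measure.

```python
from collections import defaultdict

# Toy implicit-feedback pairs (user_id, item_id): nothing but two ids per row.
pairs = [(0, 'a'), (0, 'b'), (0, 'c'),
         (1, 'a'), (1, 'b'),
         (2, 'd'), (2, 'e')]

# Group items by user: each user id is linked to many item ids.
items_of = defaultdict(set)
for user, item in pairs:
    items_of[user].add(item)

def jaccard(u, v):
    """Overlap of two users' item sets, a simple UserCF-style similarity."""
    a, b = items_of[u], items_of[v]
    return len(a & b) / len(a | b)

print(jaccard(0, 1))  # 0.6666666666666666
print(jaccard(0, 2))  # 0.0
```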

Reproduction:

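That's the story. Normally this is where I would go through the math, but there really aren't many formulas here, and the model can be reproduced directly from the author's diagram. This post uses the ml-1m dataset. There are many ways of processing it online. The main idea is to label every rated (user, item) pair 1 and every unrated pair 0, while keeping the positive-to-negative sample ratio under control. The data-processing code here is based on code by GitHub user HeartbreakSurvivor, which does not include test-set sampling or an evaluation procedure. The original author, however, appears to provide preprocessed data and an evaluation method; see hexiangnan/neural_collaborative_filtering at commit 4aab159e81c44b062c091bdaed0ab54ac632371f (github.com):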

https://github.com/hexiangnan/neural_collaborative_filtering/tree/4aab159e81c44b062c091bdaed0ab54ac632371f
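The code below does not evaluate the model, so here is a minimal sketch of the leave-one-out protocol described in the NCF paper. For each user, the held-out latest item is ranked against 99 sampled negatives, and Hit Ratio and NDCG are computed at K=10. The data-processing code already draws such a sample into `negative_samples` but never uses it.

Assumptions in this sketch:

- `score_fn` is a hypothetical callable wrapping a trained model.
- Ties are counted in the positive item's favor.

```python
import math

def hr_ndcg_at_k(pos_score, neg_scores, k=10):
    """HR@k and NDCG@k for one user with a single held-out positive item.

    rank = number of negatives scored strictly higher than the positive
    (0-based), so ties are resolved in the positive item's favor.
    """
    rank = sum(1 for s in neg_scores if s > pos_score)
    if rank >= k:
        return 0.0, 0.0
    return 1.0, 1.0 / math.log2(rank + 2)

def evaluate(score_fn, test_pairs, negative_samples, k=10):
    """Average HR@k and NDCG@k over users.

    score_fn(user, item) -> float is a hypothetical wrapper around a trained model;
    test_pairs[n] = (user, held_out_item); negative_samples[n] = that user's sampled negatives.
    """
    hits, ndcgs = [], []
    for (user, pos_item), negs in zip(test_pairs, negative_samples):
        hr, ndcg = hr_ndcg_at_k(score_fn(user, pos_item),
                                [score_fn(user, j) for j in negs], k)
        hits.append(hr)
        ndcgs.append(ndcg)
    return sum(hits) / len(hits), sum(ndcgs) / len(ndcgs)

# Usage with a toy scorer that simply prefers smaller item ids.
def toy_score(user, item):
    return -item

test_pairs = [(0, 0), (1, 50)]
negatives = [list(range(1, 100)), [j for j in range(100) if j != 50]]
print(evaluate(toy_score, test_pairs, negatives))  # (0.5, 0.5)
```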

Data-processing code:

```python
import random
from copy import deepcopy

import numpy as np
import pandas as pd

random.seed(0)


class DataProcess(object):
    def __init__(self, filename):
        self._filename = filename
        self._loadData()
        self._preProcess()
        self._binarize(self._originalRatings)
        # De-duplicate the 'userId' / 'itemId' columns to build the user and item pools
        self._userPool = set(self._originalRatings['userId'].unique())
        self._itemPool = set(self._originalRatings['itemId'].unique())
        print("user_pool size: ", len(self._userPool))
        print("item_pool size: ", len(self._itemPool))
        self._select_Negatives(self._originalRatings)
        self._split_pool(self._preprocessRatings)

    def _loadData(self):
        # ml-1m ratings.dat uses '::' as the separator, which requires the python engine
        self._originalRatings = pd.read_csv(self._filename, sep='::', header=None,
                                            names=['uid', 'mid', 'rating', 'timestamp'],
                                            engine='python')
        return self._originalRatings

    def _preProcess(self):
        """
        Re-number users and items. The model input is effectively a one-hot vector, so ids must
        fall within the Embedding table size: the two inputs have lengths equal to the number of
        users and items, hence re-numbering from 0.
        """
        # 1. New column "userId": users numbered from 0
        user_id = self._originalRatings[['uid']].drop_duplicates().copy()
        user_id['userId'] = np.arange(len(user_id))  # 0 .. number_of_users - 1
        # Merge the new ids back into the original DataFrame on the "uid" column
        self._originalRatings = pd.merge(self._originalRatings, user_id, on=['uid'], how='left')
        # 2. Same re-numbering for items
        item_id = self._originalRatings[['mid']].drop_duplicates().copy()
        item_id['itemId'] = np.arange(len(item_id))
        self._originalRatings = pd.merge(self._originalRatings, item_id, on=['mid'], how='left')
        # Keep the columns in the order ['userId', 'itemId', 'rating', 'timestamp']
        self._originalRatings = self._originalRatings[['userId', 'itemId', 'rating', 'timestamp']]

    def _binarize(self, ratings):
        """Binarize ratings into 0 or 1 for implicit feedback."""
        ratings = deepcopy(ratings)
        # Any observed rating is a positive interaction (avoids pandas chained assignment)
        ratings['rating'] = (ratings['rating'] > 0).astype(np.float32)
        self._preprocessRatings = ratings

    def _select_Negatives(self, ratings):
        """Collect all negative items and 99 sampled negative items for each user."""
        # Build the user -> set of interacted items table
        interact_status = ratings.groupby('userId')['itemId'].apply(set).reset_index().rename(
            columns={'itemId': 'interacted_items'})
        print("interact_status: \n", interact_status)
        # Every item the user has not interacted with is treated as a negative.
        # Stored as a sorted list because random.sample() rejects sets since Python 3.11.
        interact_status['negative_items'] = interact_status['interacted_items'].apply(
            lambda x: sorted(self._itemPool - x))
        # Randomly pick 99 of those negatives (intended for test-time ranking)
        interact_status['negative_samples'] = interact_status['negative_items'].apply(
            lambda x: random.sample(x, 99))
        self._negatives = interact_status[['userId', 'negative_items', 'negative_samples']]

    def _split_pool(self, ratings):
        """Leave-one-out train/test split."""
        print("sort by timestamp descend")
        # Rank each user's interactions by timestamp, newest first
        ratings['rank_latest'] = ratings.groupby('userId')['timestamp'].rank(method='first', ascending=False)
        # Each user's most recent interaction goes to the test set
        test = ratings[ratings['rank_latest'] == 1]
        # All older interactions form the training set
        train = ratings[ratings['rank_latest'] > 1]
        # Make sure the training and test sets cover the same users
        assert train['userId'].nunique() == test['userId'].nunique()
        self.train_ratings = train[['userId', 'itemId', 'rating']]
        self.test_ratings = test[['userId', 'itemId', 'rating']]

    def sample_generator(self, num_negatives):
        # After the merge, train_ratings has columns ['userId', 'itemId', 'rating', 'negative_items']
        train_ratings = pd.merge(self.train_ratings, self._negatives[['userId', 'negative_items']], on='userId')
        # Draw num_negatives items from each user's negative pool into a new "negatives" column
        train_ratings['negatives'] = train_ratings['negative_items'].apply(lambda x: random.sample(x, num_negatives))
        # Build the model inputs: users, items and target ratings
        users, items, ratings = [], [], []
        for row in train_ratings.itertuples():
            # Positive sample: userId, itemId, target 1
            users.append(int(row.userId))
            items.append(int(row.itemId))
            ratings.append(float(row.rating))
            # num_negatives negative samples for this positive: userId, itemId, target 0
            for i in range(num_negatives):
                users.append(int(row.userId))
                items.append(int(row.negatives[i]))
                ratings.append(0.0)  # negatives are labeled 0 by construction
        return users, items, ratings
```
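`_split_pool` uses `rank(method='first', ascending=False)` to do its split. Here is the same logic on a tiny, made-up DataFrame: each user's most recent interaction becomes the test row, and everything else stays in training.

```python
import pandas as pd

ratings = pd.DataFrame({
    'userId':    [0, 0, 0, 1, 1],
    'itemId':    [10, 11, 12, 10, 13],
    'rating':    [1.0, 1.0, 1.0, 1.0, 1.0],
    'timestamp': [100, 300, 200, 50, 60],
})

# Rank 1 = each user's newest interaction; method='first' breaks ties by row order.
ratings['rank_latest'] = ratings.groupby('userId')['timestamp'].rank(method='first', ascending=False)
test = ratings[ratings['rank_latest'] == 1]
train = ratings[ratings['rank_latest'] > 1]

print(test[['userId', 'itemId']].values.tolist())   # [[0, 11], [1, 13]]
print(train[['userId', 'itemId']].values.tolist())  # [[0, 10], [0, 12], [1, 10]]
```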

Dataset download link:

ml-1m dataset, CF series (deep learning document resource), CSDN Library:

https://download.csdn.net/download/Big_Huang/85203063

Model construction and training code:

```python
import pandas
import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
from torch.utils.data import DataLoader, Dataset

from getMlData import DataProcess  # the data-processing code above, saved as getMlData.py


class NeuralCF(nn.Module):
    def __init__(self, user_nums, item_nums, mlp_layers, mf_dim):
        super().__init__()
        # Four embedding tables: separate user/item embeddings for the GMF and MLP branches
        self.mf_user_embed = nn.Embedding(user_nums, mf_dim)
        self.mf_item_embed = nn.Embedding(item_nums, mf_dim)
        # The MLP input is the concatenation of both embeddings, so each gets mlp_layers[0] // 2
        self.mlp_user_embed = nn.Embedding(user_nums, mlp_layers[0] // 2)
        self.mlp_item_embed = nn.Embedding(item_nums, mlp_layers[0] // 2)
        # MLP hidden layers: Linear followed by ReLU (applied in forward)
        self.mlp_layers = nn.ModuleList([
            nn.Linear(in_feas, out_feas) for in_feas, out_feas in zip(mlp_layers[:-1], mlp_layers[1:])
        ])
        # Last MLP Linear: its output is used directly, without ReLU
        self.mlp_last_linear = nn.Linear(mlp_layers[-1], mf_dim)
        # NeuralCF output layer over the concatenated GMF and MLP vectors
        self.nearcf = nn.Linear(2 * mf_dim, 1)
        # Sigmoid producing a CTR-style score
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        # x: LongTensor of shape (batch, 2) holding [user_id, item_id] pairs
        user_data = x[:, 0]
        item_data = x[:, 1]
        # Left branch, GMF: element-wise product of user and item embeddings
        mf_user_vec = self.mf_user_embed(user_data)
        mf_item_vec = self.mf_item_embed(item_data)
        gmf = torch.mul(mf_user_vec, mf_item_vec)
        # Right branch, MLP: concatenated embeddings through Linear + ReLU layers
        mlp_user_vec = self.mlp_user_embed(user_data)
        mlp_item_vec = self.mlp_item_embed(item_data)
        mlp = torch.cat([mlp_user_vec, mlp_item_vec], dim=-1)
        for layer in self.mlp_layers:
            mlp = F.relu(layer(mlp))
        mlp = self.mlp_last_linear(mlp)
        # Output: fuse both branches and map to a probability
        out_put = torch.cat([gmf, mlp], dim=-1)
        return self.sigmoid(self.nearcf(out_put))


class UserItemRatingDataset(Dataset):
    """Wrapper, convert input <user, item, rating> Tensor into torch Dataset."""

    def __init__(self, user_tensor, item_tensor, target_tensor):
        """
        args:
            target_tensor: torch.Tensor, the corresponding rating for <user, item> pair
        """
        self._user_tensor = user_tensor
        self._item_tensor = item_tensor
        self._target_tensor = target_tensor

    def __getitem__(self, index):
        return self._user_tensor[index], self._item_tensor[index], self._target_tensor[index]

    def __len__(self):
        return self._user_tensor.size(0)


def Construct_DataLoader(users, items, ratings, batchsize):
    assert batchsize > 0
    dataset = UserItemRatingDataset(user_tensor=torch.LongTensor(users),
                                    item_tensor=torch.LongTensor(items),
                                    target_tensor=torch.FloatTensor(ratings))  # BCELoss expects float targets
    return DataLoader(dataset, batch_size=batchsize, shuffle=True)


class TrainTask:
    def __init__(self, model, lr=0.001, use_cuda=False):
        self.__device = torch.device("cuda" if torch.cuda.is_available() and use_cuda else "cpu")
        self.__model = model.to(self.__device)
        self.__loss_fn = nn.BCELoss().to(self.__device)
        self.__optimizer = torch.optim.Adam(model.parameters(), lr=lr)
        self.train_loss = []
        self.eval_loss = []
        self.train_metric = []
        self.eval_metric = []

    def __train_one_batch(self, feas, labels):
        """Train on one batch."""
        self.__optimizer.zero_grad()
        # 1. Forward pass
        outputs = self.__model(feas)
        # 2. Loss; view(-1) keeps shape (batch,) even if the last batch has a single sample
        loss = self.__loss_fn(outputs.view(-1), labels)
        # 3. Backpropagation and parameter update
        loss.backward()
        self.__optimizer.step()
        return loss.item(), outputs

    def __train_one_epoch(self, train_dataloader, epoch_id):
        """Train for one epoch."""
        self.__model.train()
        loss_sum = 0
        batch_id = 0
        for batch_id, (user, item, target) in enumerate(train_dataloader):
            # (batch, 2) tensor of [user_id, item_id]; plain tensors replace the deprecated Variable
            feas = torch.stack([user, item], dim=1).to(self.__device)
            target = target.float().to(self.__device)
            loss, outputs = self.__train_one_batch(feas, target)
            loss_sum += loss
        self.train_loss.append(loss_sum / (batch_id + 1))
        print("Training Epoch: %d, mean loss: %.5f" % (epoch_id, loss_sum / (batch_id + 1)))

    def train(self, sampleGenerator, num_negatives, epochs, batch_size):
        for epoch in range(epochs):
            print('-' * 20 + ' Epoch {} starts '.format(epoch) + '-' * 20)
            # Negatives are re-sampled every epoch
            users, items, ratings = sampleGenerator(num_negatives=num_negatives)
            # Build the DataLoader
            train_data_loader = Construct_DataLoader(users=users, items=items, ratings=ratings,
                                                     batchsize=batch_size)
            # Train one epoch
            self.__train_one_epoch(train_data_loader, epoch_id=epoch)
            # Validation (disabled: no eval_data_loader is built yet)
            # self.__eval(eval_data_loader, epoch_id=epoch)

    def __eval(self, eval_dataloader, epoch_id):
        """Run inference once over the validation set."""
        batch_id = 0
        loss_sum = 0
        self.__model.eval()
        with torch.no_grad():
            for batch_id, (user, item, target) in enumerate(eval_dataloader):
                feas = torch.stack([user, item], dim=1).to(self.__device)
                labels = target.float().to(self.__device)
                # 1. Forward pass
                outputs = self.__model(feas)
                # 2. Loss
                loss = self.__loss_fn(outputs.view(-1), labels)
                loss_sum += loss.item()
        self.eval_loss.append(loss_sum / (batch_id + 1))
        print("Evaluate Epoch: %d, mean loss: %.5f" % (epoch_id, loss_sum / (batch_id + 1)))

    def __plot_metric(self, train_metrics, val_metrics, metric_name):
        """Plot a metric per epoch."""
        epochs = range(1, len(train_metrics) + 1)
        plt.plot(epochs, train_metrics, 'bo--', label="train_" + metric_name)
        # Plot validation values only if evaluation actually ran (otherwise lengths mismatch)
        if val_metrics:
            plt.plot(epochs, val_metrics, 'ro-', label="val_" + metric_name)
        plt.title('Training and validation ' + metric_name)
        plt.xlabel("Epochs")
        plt.ylabel(metric_name)
        plt.legend()
        plt.show()

    def plot_loss_curve(self):
        self.__plot_metric(self.train_loss, self.eval_loss, "Loss")


if __name__ == "__main__":
    # Load the data (only to count users and items)
    data = pandas.read_csv("./data/ml-1m/ratings.dat", sep='::', header=None,
                           names=['uid', 'mid', 'rating', 'timestamp'], engine='python')
    user_nums = len(data['uid'].unique())
    item_nums = len(data['mid'].unique())
    dp = DataProcess("./data/ml-1m/ratings.dat")
    # Build the model: MLP layers [20, 64, 32, 16], GMF dimension 10
    model = NeuralCF(user_nums, item_nums, [20, 64, 32, 16], 10)
    task = TrainTask(model, use_cuda=False)
    # 4 negatives per positive, 50 epochs, batch size 16
    task.train(dp.sample_generator, 4, 50, 16)
    task.plot_loss_curve()
```

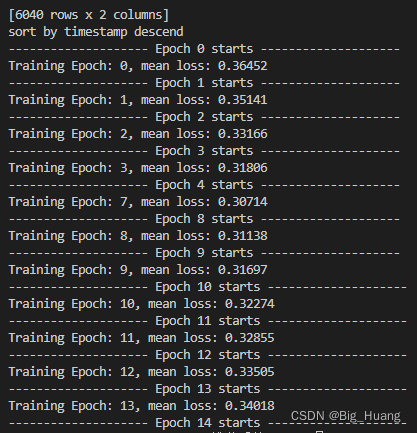

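A quick test shows that the training loss does converge, so it seems to work, although without validation this is admittedly not conclusive. Also, I'd suggest using the original author's pre-generated data, because generating samples online is a bit slow, haha.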
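Here is a back-of-the-envelope estimate of why online generation (and training) is slow. It assumes the standard ml-1m statistics (1,000,209 ratings from 6,040 users) and the settings in `__main__` (`num_negatives=4`, `batch_size=16`, 50 epochs). Leave-one-out moves one rating per user into the test set. Every remaining positive is then paired with 4 freshly sampled negatives in each epoch.

```python
import math

num_ratings = 1_000_209   # ml-1m ratings
num_users = 6_040         # ml-1m users; one rating per user is held out for testing
num_negatives = 4         # negatives per positive, as in task.train(dp.sample_generator, 4, 50, 16)
batch_size = 16
epochs = 50

train_positives = num_ratings - num_users                  # positives left for training
samples_per_epoch = train_positives * (1 + num_negatives)  # each positive plus its negatives
batches_per_epoch = math.ceil(samples_per_epoch / batch_size)

print(train_positives, samples_per_epoch, batches_per_epoch)  # 994169 4970845 310678
print(batches_per_epoch * epochs)                              # 15533900
```

Every epoch also runs the Python loop in `sample_generator` over nearly a million rows. The batch count scales inversely with `batch_size`, so a larger batch shortens each epoch roughly proportionally.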

References:

1. 《深度学习推荐系统》 (*Deep Learning Recommender Systems*)