使用selenium库和xpath抓取昆特牌官网卡组快照

1 实验目的

使用python以及一些库进行官网卡组的抓取,分目录存储卡组快照和链接。

2 实验思路

- python是脚本语言,所以我们可以使用其做一些重复性很强的工作;

- 使用selenium库,可以实例化浏览器对象在后台运行,进行页面拉取,下载视频、图片文件等;

- 使用BeautifulSoup库等可以解析DOM,获取我们所需要的内容,但是此处我们只需几个网页上按钮的信息,而且PlayGwent官网搭建的很清晰,所以直接f12读取xpath即可。

3 整体设计

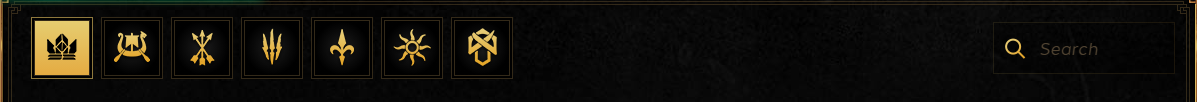

- 分 l i b r a r y library library和 f a c t i o n faction faction获取 x p a t h xpath xpath;

-

初始化 d a t e 1 , d e c k _ u r l 2 , l i b r a r i e s _ x p a t h _ d i c t 3 , f a c t i o n s _ x p a t h _ d i c t 4 date^{1}, deck\_url^{2}, libraries\_xpath\_dict^{3}, factions\_xpath\_dict^{4} date1,deck_url2,libraries_xpath_dict3,factions_xpath_dict4,1调函数即可,2是PlayGwent官网网址,3是3个library按钮所对应 n a m e ? x p a t h \textcolor{red}{name-xpath} name?xpath对组成的字典,4是 a l l all all和6个 f a c t i o n faction faction按钮所对应 n a m e ? x p a t h \textcolor{red}{name-xpath} name?xpath对组成的字典;

-

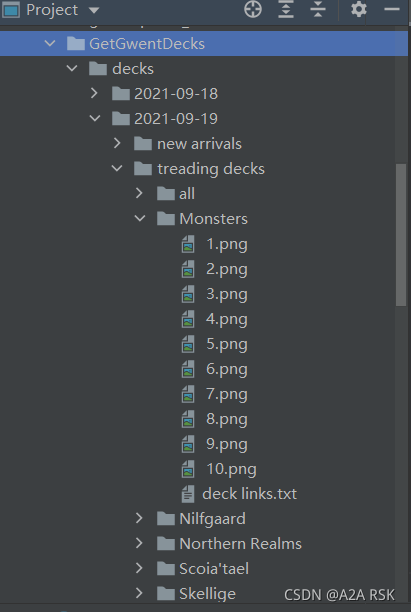

首先进行目录的创建,使用 o s os os库和 m k _ d i r s mk\_dirs mk_dirs方法分时间、 l i b r a r y library library和 f a c t i o n faction faction创建目录;

-

对于不同的 l i b r a r y library library和 f a c t i o n faction faction分别调用 g e t _ g w e n t _ d e c k s get\_gwent\_decks get_gwent_decks方法进行卡组快照和链接的抓取,传入的参数 p a g e page page还能决定每次抓几面,返回的 l i n k s links links链表传入 m k _ l i n k s _ t x t mk\_links\_txt mk_links_txt方法,生成txt文件保存链接(本步也可合并到 g e t _ g w e n t _ d e c k s get\_gwent\_decks get_gwent_decks方法中);

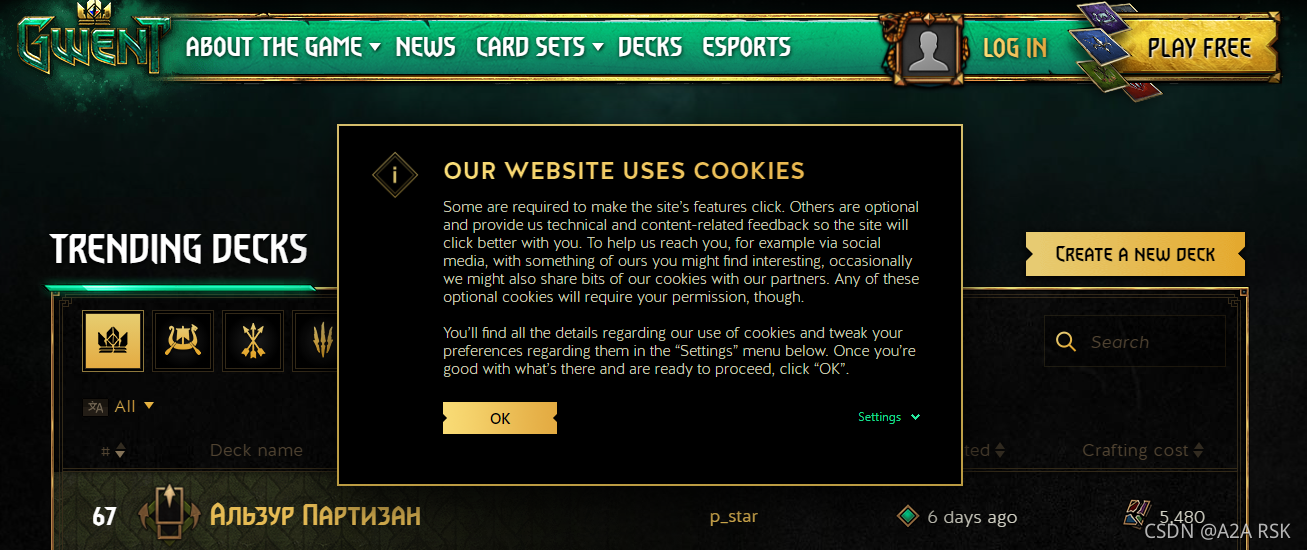

- 对于每次抓取操作,首先先进行初始化确定每个卡组的xpath?和存储为png格式时的名称和位置,接着设置 d r i v e r driver driver,添加userAgent,设置模式为headless,改变 d r i v e r driver driver的比例以截取长图(实际是在启动后设置),再浏览指定网页,点击cookies按钮,再执行选择 l i b r a r y library library和 f a c t i o n faction faction操作,紧接着进行卡组快照和链接的抓取,最后返回链接链表。

4 代码实现

# -*- coding: utf-8 -*-

# @Time : 2021-09-19 12:54

# @Author : fuchaoxin2002

# @FileName: crawler2_for_gwent_decks_chrome_edition.py

# @Version : v0.1

# @Software: PyCharm

# @Cnblogs : https://blog.csdn.net/Antonioxv

# 这个版本的selenium不支持PhamtonJS所以我就用Chrome了,可是不能像PhamtonJS那样截全图就只能缩放了,可是有些老哥的简介巨长就框不进来了

import os

import time

import datetime

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

date = str(datetime.datetime.now()).split()[0]

deck_url = 'https://www.playgwent.com/en/decks/'

libraries_xpath_dict = {

'treading decks': '//*[@id="root"]/div/div[2]/div[1]/ul/li[1]',

'new arrivals': '//*[@id="root"]/div/div[2]/div[1]/ul/li[2]'

# '*top decks': //*[@id="root"]/div/div[2]/div[1]/ul/li[3]

}

factions_xpath_dict = {

'all': '//*[@id="root"]/div/section/div[1]/div[1]/div[1]',

'Skellige': '//*[@id="root"]/div/section/div[1]/div[1]/div[2]',

'Scoia\'tael': '//*[@id="root"]/div/section/div[1]/div[1]/div[3]',

'Monsters': '//*[@id="root"]/div/section/div[1]/div[1]/div[4]',

'Northern Realms': '//*[@id="root"]/div/section/div[1]/div[1]/div[5]',

'Nilfgaard': '//*[@id="root"]/div/section/div[1]/div[1]/div[6]',

'Syndicate': '//*[@id="root"]/div/section/div[1]/div[1]/div[7]'

}

def mk_dirs(library, faction):

if not os.path.exists(fr'decks/{date}/{library}/{faction}'):

os.mkdir(fr'decks/{date}/{library}/{faction}')

return fr'decks/{date}/{library}/{faction}'

def get_gwent_decks(library, faction, page):

"""获取卡组图片与相应的links

:param library: 索引方式,由于top decks的有些卡组过于古老,故略去

:param faction: 阵营

:param page: 页面数

:return: 返回卡组links list

"""

# # initialization

links = []

element = [f'//*[@id="root"]/div/section/div[2]/div[{x}]' for x in range(2, 12)]

names = [fr'decks/{date}/{library}/{faction}/ %d.png' % x for x in range(1, (page*10+1))]

# # set driver

# add userAgent

dcap = dict(DesiredCapabilities.CHROME)

dcap["chrome.page.settings.userAgent"] = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/"

"537.36 (KHTML, like Gecko) Chrome/93.0.4577.63 Safari/537.36")

# headless

chrome_options = Options()

chrome_options.add_argument('--headless')

chrome_options.add_argument('--disable-gpu')

driver = webdriver.Chrome(desired_capabilities=dcap, service_args=['--ignore- ssl-errors=true'],

options=chrome_options)

# get deck_url

driver.implicitly_wait(20)

driver.get(url=deck_url)

driver.refresh()

# click cookies button

# //*[@id="CybotCookiebotDialogBodyButtonAccept"]

driver.find_element_by_xpath('//*[@id="CybotCookiebotDialogBodyButtonAccept"]').click()

# set driver size

scroll_width = driver.execute_script('return document.body.parentNode.scrollWidth')

scroll_height = driver.execute_script('return document.body.parentNode.scrollHeight')

# print(scroll_width, scroll_height)

driver.set_window_size(scroll_width, scroll_height)

# select library

driver.find_element_by_xpath(libraries_xpath_dict.get(library)).click()

# select faction

driver.find_element_by_xpath(factions_xpath_dict.get(faction)).click()

# select deck and save pic

for _ in range(0, 10):

try:

# select deck

driver.find_element_by_xpath(element[_]).click()

# loading time

time.sleep(2)

# current url

cur_url = str(driver.current_url)

links.append(cur_url)

# save pic

driver.save_screenshot(names[_])

# back to deck library

driver.back()

# wait

driver.implicitly_wait(20)

except IOError:

print('IOError')

# click next button

# //*[@id="root"]/div/section/div[3]/a[2]

if _ == 9 and page > 1:

page -= 1

driver.find_element_by_xpath('//*[@id="root"]/div/section/div[3]/a[2]').click()

return links

def mk_links_txt(save_path, links):

file = open(fr'{save_path}/deck links.txt', 'w')

i = 1

for link in links:

file.write(f'{i}: ' + link + '\n')

i += 1

file.close()

def main():

page = 1

libraries = ['treading decks', 'new arrivals']

factions = ['all', 'Skellige', 'Scoia\'tael', 'Monsters', 'Northern Realms', 'Nilfgaard', 'Syndicate']

mk_dirs('', '')

for library in libraries:

mk_dirs(library, '')

for faction in factions:

save_path = mk_dirs(library, faction)

links = get_gwent_decks(library, faction, page)

mk_links_txt(str(save_path), links)

if __name__ == '__main__':

main()

5 不足和缺点

一时兴起写了个小爬虫,也没有怎么改,所以还是会有一些问题。比如,我使用的这个版本的selenium不支持PhamtonJS所以我就用Chrome了,可是不能像PhamtonJS那样截全图就只能缩放了,可是有些老哥的简介巨长就框不进来了,所以可以使用一些旧版本的selenium搭配PhamtonJS实现完美的卡组快照。