Scraping every champion skin from League of Legends: Wild Rift with Python

Preface

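League of Legends: Wild Rift, the mobile version that was delayed for so long, has finally launched. I don't play the PC version of League, but I was still thrilled to see it go live. Inspired by other bloggers who scraped Honor of Kings skins, let's try scraping the Wild Rift skin images.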

Analyzing the page

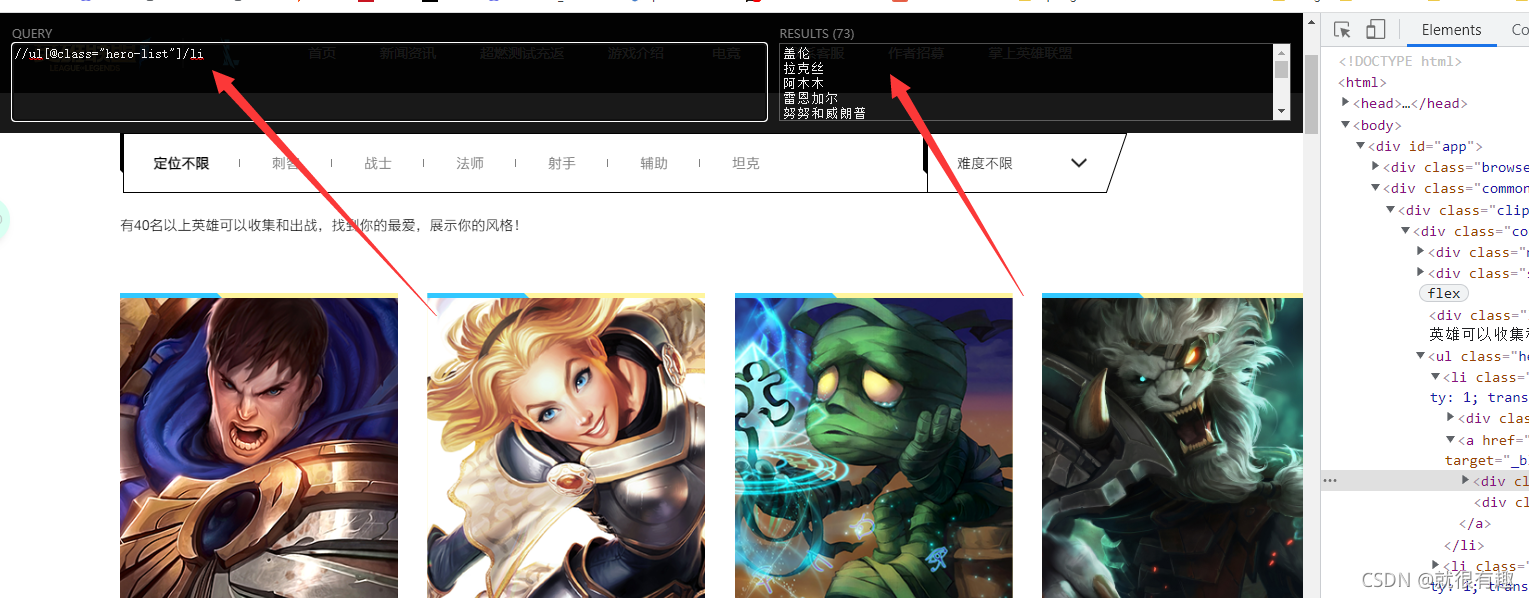

Go to the official Wild Rift website and look at how the champion list is displayed.

Every champion sits in its own li tag, so the goal is simply to collect all of those li tags. Here is the XPath: `//ul[@class="hero-list"]/li`

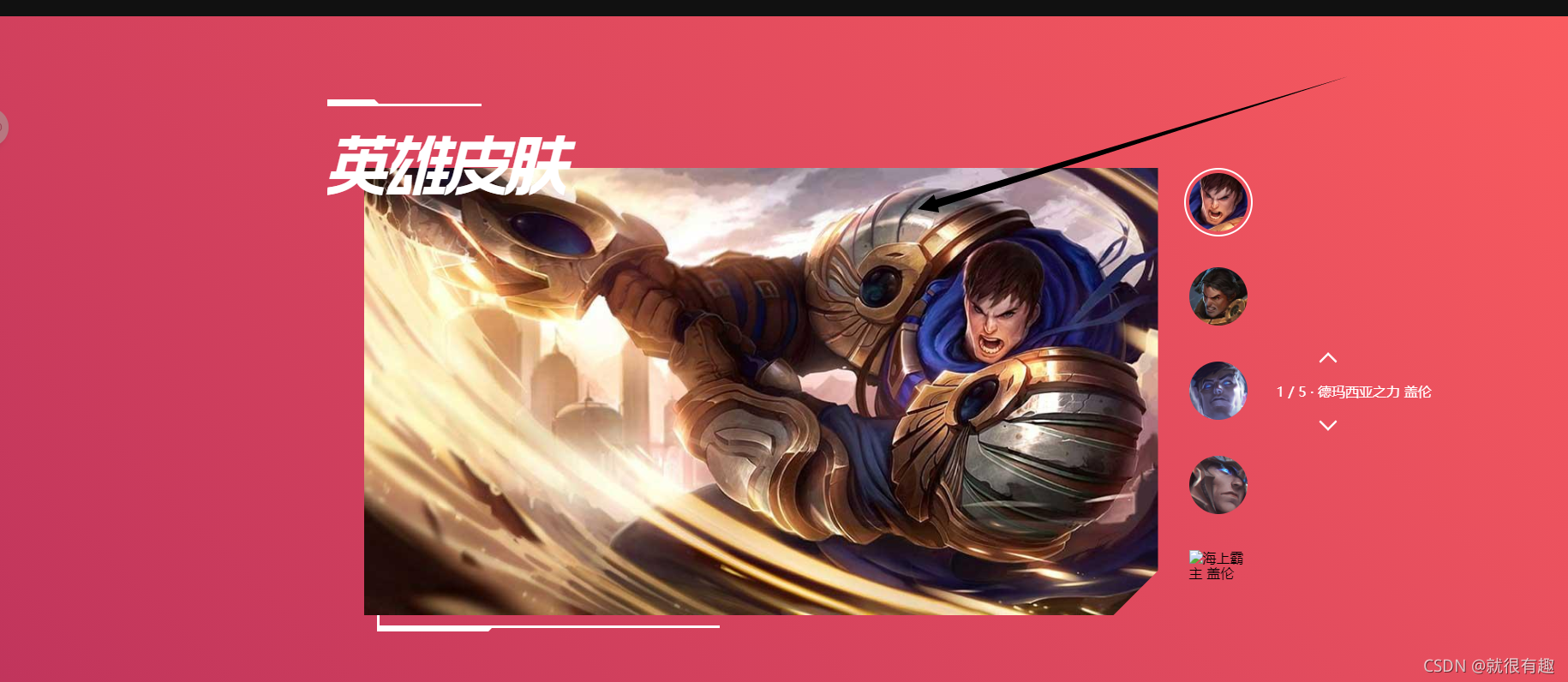
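To see what that XPath selects without opening a browser, here is a minimal sketch using the standard library's `xml.etree.ElementTree`, which supports a subset of XPath that includes `[@attr='value']` predicates. The markup is a hypothetical, simplified, well-formed fragment rather than the real page. The helper name `hero_items` is also made up for illustration. The article itself runs the same expression through Selenium.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup standing in for the champion list page
SAMPLE = """
<div>
  <ul class="hero-list">
    <li><p>Garen</p></li>
    <li><p>Ahri</p></li>
  </ul>
  <ul class="nav"><li>Home</li></ul>
</div>
"""


def hero_items(markup):
    """Return every <li> directly under <ul class="hero-list"> (hypothetical helper)."""
    root = ET.fromstring(markup.strip())
    # ElementTree's limited XPath: descendant ul with an exact class match, then its li children
    return root.findall(".//ul[@class='hero-list']/li")


names = [li.findtext("p") for li in hero_items(SAMPLE)]
print(names)
```

The `li` under `ul.nav` is not matched, because the predicate compares the whole `class` string exactly. The same is true of `@class="hero-list"` in Selenium's XPath.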

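That returns all of them directly. Next, click through to a champion's detail page. Taking Garen as an example, we land on the page below.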

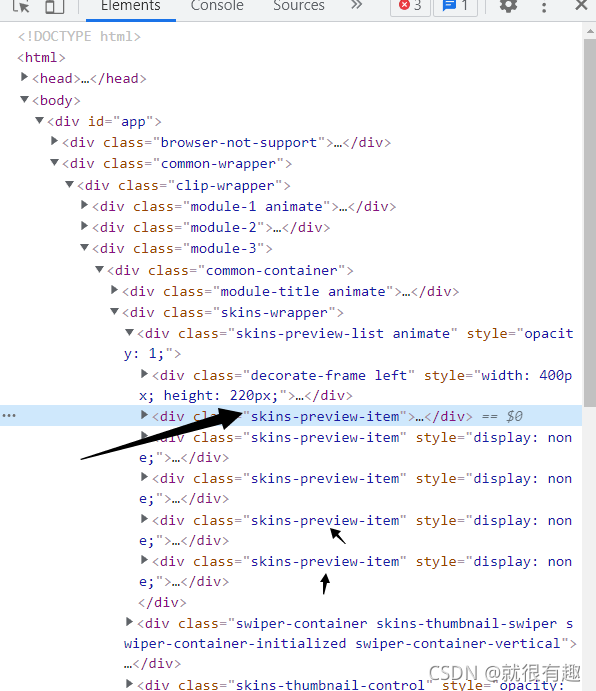

We want the images the black arrow points to. As usual, press F12 and inspect the page structure.

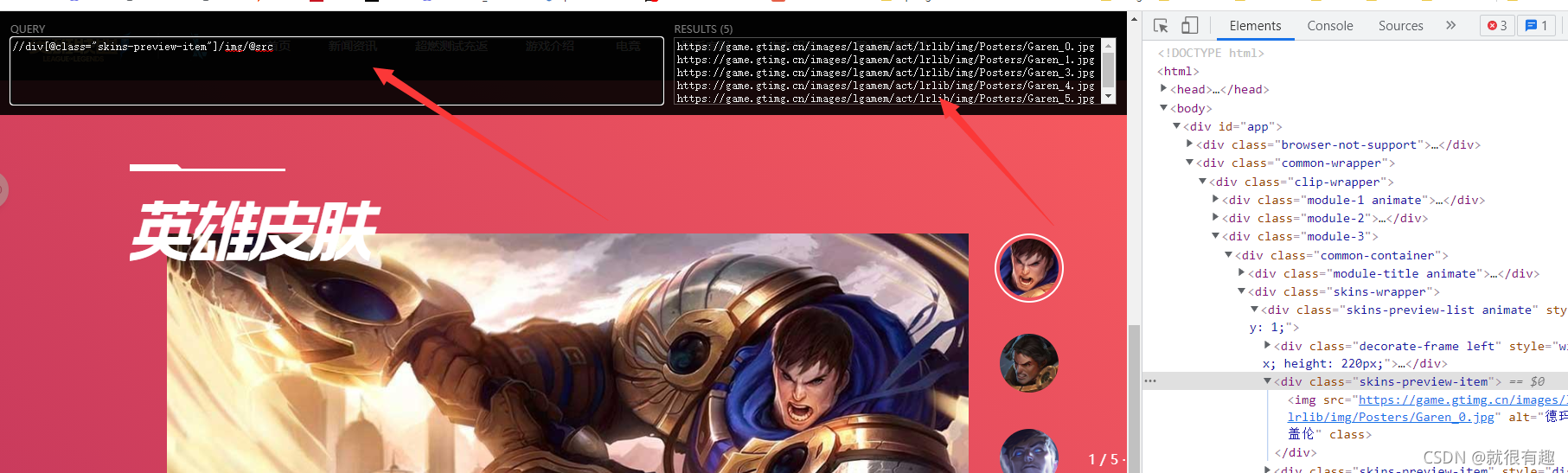

The skin images all sit inside divs with the class `skins-preview-item`, which makes this easy: we just need to grab their links.

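The XPath is `//div[@class="skins-preview-item"]/img`; each matched img element's `src` attribute is the skin image link.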
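As a browser-free illustration of pulling those links out of raw HTML, the sketch below uses the standard library's `html.parser`. It collects the `src` of each `<img>` whose nearest enclosing `<div>` has exactly `class="skins-preview-item"`. This approximates `//div[@class="skins-preview-item"]/img`; it is looser because it does not require the img to be a direct child. The class `SkinSrcParser`, the helper `skin_urls`, and the sample markup and URLs are hypothetical.

```python
from html.parser import HTMLParser


class SkinSrcParser(HTMLParser):
    """Collect img src values whose nearest enclosing <div> has
    class="skins-preview-item" (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.srcs = []
        self._div_stack = []  # one bool per open <div>: is it a skin container?

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            # exact string comparison, like @class="..." in XPath
            self._div_stack.append(attrs.get("class") == "skins-preview-item")
        elif tag == "img" and self._div_stack and self._div_stack[-1]:
            src = attrs.get("src")
            if src:
                self.srcs.append(src)

    def handle_endtag(self, tag):
        if tag == "div" and self._div_stack:
            self._div_stack.pop()


def skin_urls(markup):
    parser = SkinSrcParser()
    parser.feed(markup)
    parser.close()
    return parser.srcs


# Hypothetical markup and URLs, for illustration only
SAMPLE = """
<div class="skins">
  <div class="skins-preview-item"><img src="https://example.com/skin0.jpg"></div>
  <div class="skins-preview-item"><img src="https://example.com/skin1.jpg"></div>
  <div class="banner"><img src="https://example.com/ad.jpg"></div>
</div>
"""
print(skin_urls(SAMPLE))
```

The banner image is skipped because its div has a different class.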

That covers the whole flow; now for the fun part, the code.

The code

Download utility (`api/FileDownload.py`, imported by the full script as `from api import FileDownload`)

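```python
import os
import time
from concurrent.futures.thread import ThreadPoolExecutor  # imported but not used below

import requests


def createFolder(src):
    # exist_ok=True: don't crash when the folder already exists (e.g. on a re-run)
    os.makedirs(src, exist_ok=True)


def downloadFile(name, url):
    """Stream `url` into the file `name`, printing progress about every 2 s.
    Returns 1 on success and 0 on failure."""
    try:
        headers = {'Proxy-Connection': 'keep-alive'}
        r = requests.get(url, stream=True, headers=headers, timeout=30)
        r.raise_for_status()  # treat HTTP errors (404, 500, ...) as failures
        print("=========================")
        print(r)
        # Content-Length may be missing; 0 means "unknown size"
        length = float(r.headers.get('Content-Length', 0))
        count = 0
        count_tmp = 0
        time1 = time.time()
        with open(name, 'wb') as f:  # the file is closed even if an error occurs
            for chunk in r.iter_content(chunk_size=512):
                if chunk:
                    f.write(chunk)  # write the chunk to the file
                    count += len(chunk)  # running total of bytes written
                    # print progress roughly every two seconds
                    elapsed = time.time() - time1
                    if elapsed > 2:
                        p = count / length * 100 if length else 0.0
                        speed = (count - count_tmp) / 1024 / 1024 / elapsed
                        count_tmp = count
                        print(name + ': ' + formatFloat(p) + '%' + ' Speed: ' + formatFloat(speed) + 'M/S')
                        time1 = time.time()
        return 1
    except Exception as e:
        print("Download failed:", e)
        return 0


def formatFloat(num):
    return '{:.2f}'.format(num)


if __name__ == '__main__':
    # Quick manual test:
    # downloadFile('D://file//photo//hd.jpg',
    #              'https://browser9.qhimg.com/bdr/__85/t01753453b660de14e9.jpg')
    createFolder(r"E:\file\lol\1")
```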
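The utility imports `ThreadPoolExecutor` but never uses it, so every skin downloads one after another. If you want parallel downloads, here is one possible sketch. It assumes a download function with the same `(name, url)` signature that returns 1 or 0, as `downloadFile` does. The helper name `download_all` and the worker count are hypothetical choices, and the demo uses a stand-in function so it runs without network access.

```python
from concurrent.futures import ThreadPoolExecutor


def download_all(tasks, download, max_workers=4):
    """Run download(name, url) for each (name, url) pair on a thread pool.

    `download` is assumed to return 1 on success and 0 on failure,
    so the sum is the number of successful downloads (hypothetical helper)."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as tasks
        results = list(pool.map(lambda task: download(*task), tasks))
    return sum(results)


if __name__ == "__main__":
    # Stand-in for FileDownload.downloadFile so the demo needs no network
    def fake_download(name, url):
        return 1 if url.startswith("https://") else 0

    tasks = [("0.jpg", "https://example.com/0.jpg"), ("1.jpg", "bad-url")]
    print(download_all(tasks, fake_download))
```

In the full script, you would collect the `(path, skin.get_attribute("src"))` pairs first and then call `download_all(tasks, FileDownload.downloadFile)`. Read the `src` values before `driver.close()`, because the WebElements cannot be queried once their tab is closed. Progress lines from concurrent downloads will interleave in the console.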

Getting each champion in the champion list

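```python
from selenium.webdriver.common.by import By

# `driver` is the Chrome WebDriver created in the full code below
heros = driver.find_elements(By.XPATH, '//ul[@class="hero-list"]/li')
for hero in heros:
    # make sure we are on the champion-list tab
    driver.switch_to.window(driver.window_handles[0])
    # click through to the champion's detail page (it opens in a new tab)
    hero.click()
```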

Getting the skin links and downloading them

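```python
# `name` is the champion-name element read from the detail page (see the full code below)
skins = driver.find_elements(By.XPATH, '//div[@class="skins-preview-item"]/img')
for i, skin in enumerate(skins):
    FileDownload.downloadFile(r'E:\file\lol\{}\{}.jpg'.format(name.text, i), skin.get_attribute("src"))
```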
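The download path above is built straight from `name.text`. If a champion name ever contained a character Windows forbids in file names, both `createFolder` and the download would fail; whether any Wild Rift name actually does is an assumption, not something the article checks. Here is a small defensive sketch. The helper name `skin_path` and the `"unknown"` fallback are hypothetical.

```python
import os
import re

# Characters that Windows does not allow in file or folder names
_INVALID = re.compile(r'[\\/:*?"<>|]')


def skin_path(root, hero_name, index):
    """Build root/<hero_name>/<index>.jpg, replacing characters that are
    invalid in Windows names with '_' (hypothetical helper)."""
    safe_name = _INVALID.sub("_", hero_name).strip() or "unknown"
    return os.path.join(root, safe_name, "{}.jpg".format(index))
```

In the loop, compute `path = skin_path(r'E:\file\lol', name.text, i)` and pass `os.path.dirname(path)` to `FileDownload.createFolder`. That way the folder and the file always use the same sanitized name.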

Full code

```python
# -*- coding: utf-8 -*-
# @Time : 2021/10/22 21:43
# @Author : xiaow
# @File : lol.py
# @Software : PyCharm
import time

from selenium import webdriver
from selenium.webdriver.common.by import By

from api import FileDownload

if __name__ == '__main__':
    url = 'https://lolm.qq.com/v2/champions.html'

    # Evade the site's automation detection
    option = webdriver.ChromeOptions()
    # option.headless = True
    option.add_experimental_option('excludeSwitches', ['enable-automation'])
    option.add_experimental_option('useAutomationExtension', False)
    driver = webdriver.Chrome(options=option)
    # Hide navigator.webdriver before any page script runs
    driver.execute_cdp_cmd('Page.addScriptToEvaluateOnNewDocument', {
        'source': 'Object.defineProperty(navigator, "webdriver", {get: () => undefined})'
    })
    driver.get(url)

    # Every champion is an <li> under ul.hero-list
    heros = driver.find_elements(By.XPATH, '//ul[@class="hero-list"]/li')
    for hero in heros:
        # Always start from the champion-list tab
        driver.switch_to.window(driver.window_handles[0])
        # Clicking a champion opens its detail page in a new tab
        hero.click()
        time.sleep(3)  # crude wait for the new tab to load
        driver.switch_to.window(driver.window_handles[1])

        name = driver.find_element(By.XPATH, '//p[@class="heroName_color"]')
        print(name.text)
        FileDownload.createFolder(r'E:\file\lol\{}'.format(name.text))

        skins = driver.find_elements(By.XPATH, '//div[@class="skins-preview-item"]/img')
        for i, skin in enumerate(skins):
            FileDownload.downloadFile(r'E:\file\lol\{}\{}.jpg'.format(name.text, i),
                                      skin.get_attribute("src"))
        # Close the detail tab before moving on to the next champion
        driver.close()

    driver.quit()
```
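The full script waits a fixed `time.sleep(3)` after each click before switching to the new tab. That is too long when the tab opens quickly and too short when it doesn't. Here is a minimal polling sketch in plain Python. The name `wait_until` and its default timeout and interval are hypothetical.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.2):
    """Poll condition() until it returns a truthy value or `timeout`
    seconds pass. Returns True if the condition was met, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

After `hero.click()`, you could call `wait_until(lambda: len(driver.window_handles) > 1)` and skip or retry the champion when it returns `False`. Selenium also ships the same idea: `WebDriverWait(driver, 10).until(EC.number_of_windows_to_be(2))`, with `WebDriverWait` from `selenium.webdriver.support.ui` and `expected_conditions` imported as `EC` from `selenium.webdriver.support`. That call raises `TimeoutException` instead of returning `False`.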

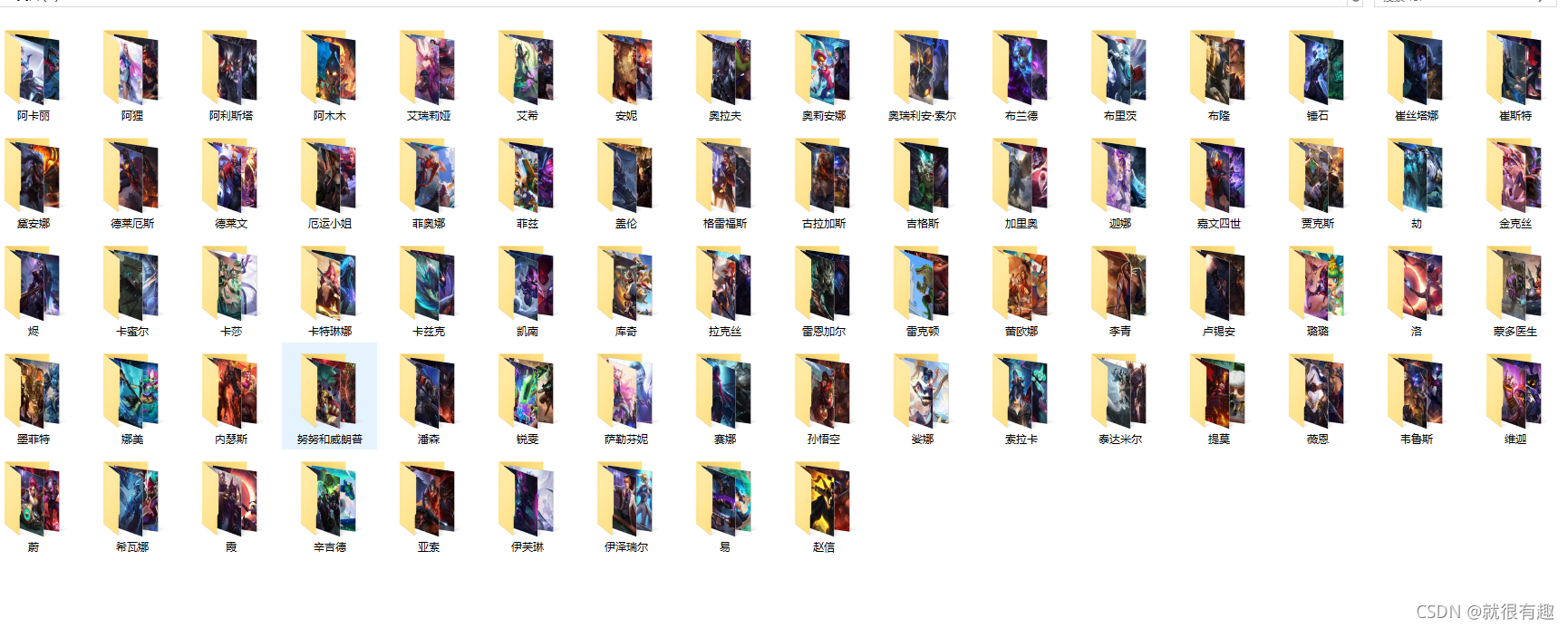

Results

Summary

The whole process is routine and simple, so give it a try yourself.

For learning purposes only; this post will be removed upon any infringement claim.
