It's been ages since I last wrote a scraper!!!

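I remembered a project I had shelved a while back: scraping job postings from Boss Zhipin (Boss直聘). I assumed it would be easy, just a matter of scraping it the usual way.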

It turned out not to be that simple.

Pitfalls to avoid:

1. Extracting with XPath only ever got me the content of a single page, so I switched to BeautifulSoup, and that worked!

2. The site redirects. Run `scrapy shell <URL>` to find the URL it redirects to, then send your request to that URL instead.

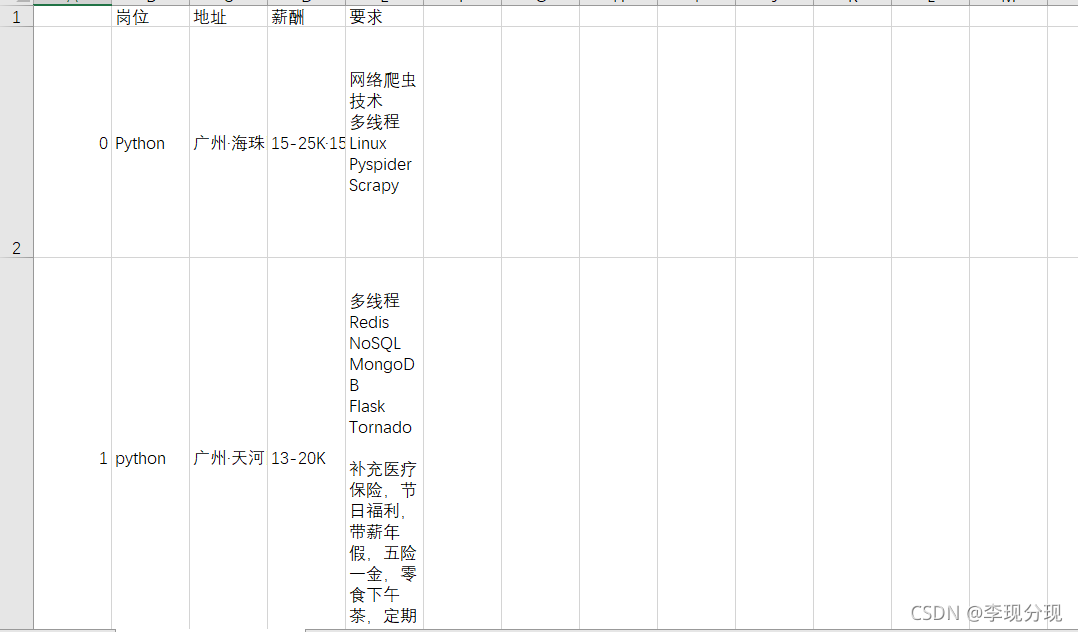

3. Save the data to a CSV file with pandas so it is easy to look through.
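You can also inspect point 2 without scrapy. The security-check URL used in the source below stores the original listing path, URL-encoded, in its `callbackUrl` query parameter. The following minimal standard-library sketch decodes that parameter and resolves it against the site. The function name `callback_target` is hypothetical. This post does not verify whether the site serves the listing when it is requested directly, without passing the check, so treat that as an assumption.

```python
from urllib.parse import parse_qs, urljoin, urlsplit


def callback_target(security_url: str) -> str:
    """Return the absolute URL encoded in a security-check page's callbackUrl.

    Hypothetical helper: assumes the redirect target is stored, URL-encoded,
    in the 'callbackUrl' query parameter, as in the URL used in this post.
    """
    # parse_qs splits the query on '&' first, then percent-decodes each value,
    # so the encoded '&' characters inside callbackUrl stay in one value
    query = parse_qs(urlsplit(security_url).query)
    callbacks = query.get('callbackUrl')
    if not callbacks:
        raise ValueError('no callbackUrl parameter found')
    # The callback is a site-relative path, so resolve it against the original URL
    return urljoin(security_url, callbacks[0])


if __name__ == '__main__':
    url = ('https://www.zhipin.com/web/common/security-check.html?seed=peCvwEBK40kvurrwEUWhtR2XZMf3I20iKfLyZA281RI%3D'
           '&name=8552bbf3&ts=1634126289717'
           '&callbackUrl=%2Fc101280100%2F%3Fquery%3DPython%26industry%3D%26position%3D%26ka%3Dhot-position-1'
           '&srcReferer=https%3A%2F%2Fwww.zhipin.com%2Fguangzhou%2F%3Fsid%3Dsem_pz_bdpc_dasou_title')
    print(callback_target(url))
```

Run as a script, this prints `https://www.zhipin.com/c101280100/?query=Python&industry=&position=&ka=hot-position-1`, which is the Python job search for Guangzhou that the security check was guarding.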

Takeaway:

I used to reach for XPath out of habit. I didn't expect BeautifulSoup to be this pleasant to use, and it makes extracting data easy too.
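Here is a minimal sketch of BeautifulSoup extraction, run on a small hand-written HTML snippet. The markup is an assumption, loosely modeled on the class names in the source below (`job-name`, `job-area`, `red`, `info-append`). The `job-primary` container class in particular is hypothetical, and the live page may differ.

The source collects each field with its own page-wide `find_all`. This sketch instead reads all four fields from each posting's container, so a posting with a missing field yields an empty string rather than shifting the columns out of line.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: class names follow the post, the 'job-primary' wrapper is assumed
SAMPLE_HTML = """
<div class="job-list">
  <div class="job-primary">
    <span class="job-name"><a href="#">Python Developer</a></span>
    <span class="job-area">Guangzhou</span>
    <span class="red">15-25K</span>
    <div class="info-append clearfix">Python, Django</div>
  </div>
  <div class="job-primary">
    <span class="job-name"><a href="#">Data Engineer</a></span>
    <span class="job-area">Guangzhou</span>
    <div class="info-append clearfix">SQL</div>
  </div>
</div>
"""


def text_of(parent, name, cls):
    """Return the stripped text of the first matching tag, or '' if it is absent."""
    tag = parent.find(name, class_=cls)
    return tag.get_text(strip=True) if tag else ''


def parse_jobs(html):
    soup = BeautifulSoup(html, 'html.parser')
    rows = []
    # One container per posting keeps the four fields of a posting together
    for card in soup.find_all('div', class_='job-primary'):
        rows.append({
            'job': text_of(card, 'span', 'job-name'),
            'area': text_of(card, 'span', 'job-area'),
            'salary': text_of(card, 'span', 'red'),
            # class_='info-append' matches any tag whose class list contains it
            'requirements': text_of(card, 'div', 'info-append'),
        })
    return rows


for row in parse_jobs(SAMPLE_HTML):
    print(row)
```

Every dict has all four keys, so `pd.DataFrame(rows)` would give aligned columns directly. The second posting simply gets an empty salary.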

Source code:

```python
import time

import pandas as pd
from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException, TimeoutException
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

# Redirect target found with scrapy shell (see point 2 above)
url='https://www.zhipin.com/web/common/security-check.html?seed=peCvwEBK40kvurrwEUWhtR2XZMf3I20iKfLyZA281RI%3D&name=8552bbf3&ts=1634126289717&callbackUrl=%2Fc101280100%2F%3Fquery%3DPython%26industry%3D%26position%3D%26ka%3Dhot-position-1&srcReferer=https%3A%2F%2Fwww.zhipin.com%2Fguangzhou%2F%3Fsid%3Dsem_pz_bdpc_dasou_title'

# driver = webdriver.Edge(r"C:\Program Files (x86)\Microsoft\Edge\Application\msedgedriver.exe")  # older Selenium versions
# driver = webdriver.PhantomJS()  # not available in Selenium 4
driver = webdriver.Chrome()
wait = WebDriverWait(driver, 10)  # wait up to 10 seconds for elements


def get_Boss():
    driver.get(url)
    jobs = []    # job titles, one entry per posting
    adders = []  # locations
    moneys = []  # salaries
    dems = []    # requirements
    pages = 3    # number of pages to scrape; adjust as needed
    try:
        for i in range(pages):
            # Parse the source of the page currently shown in the browser
            bs = BeautifulSoup(driver.page_source, 'html.parser')
            # find_all returns a list of every tag with the given class
            job = bs.find_all('span', {'class': 'job-name'})
            adder = bs.find_all('span', {'class': 'job-area'})
            money = bs.find_all('span', {'class': 'red'})
            dem = bs.find_all('div', {'class': 'info-append clearfix'})

            for j in job:
                j = j.get_text()  # text inside the tag
                print(j)
                jobs.append(j)
            for a in adder:
                adders.append(a.get_text())
            for m in money:
                moneys.append(m.get_text())
            for d in dem:
                dems.append(d.get_text())

            if i == pages - 1:
                break  # no need to click "next" after the last page
            # Click "next page" via JavaScript, then give the new page time to load
            btn = wait.until(EC.element_to_be_clickable(
                (By.CSS_SELECTOR, '#main > div > div.job-list > div.page > a.next')))
            driver.execute_script('arguments[0].click();', btn)
            time.sleep(10)
    except (NoSuchElementException, TimeoutException):
        # wait.until raises TimeoutException if the button never becomes clickable
        print('Something went wrong!')
    finally:
        # Save whatever was collected, even if a later page failed.
        # Columns: job title, location, salary, requirements.
        # pd.Series pads shorter columns with NaN instead of raising ValueError.
        f = pd.DataFrame(data={'岗位': pd.Series(jobs), '地址': pd.Series(adders),
                               '薪酬': pd.Series(moneys), '要求': pd.Series(dems)})
        f.to_csv('Boss直聘招聘信息.csv', encoding='utf-8-sig')
        driver.quit()


if __name__ == '__main__':
    get_Boss()
```

Result:

Saving to CSV with pandas is really convenient, especially for rows that contain a lot of text.

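It is powerful enough to save whole lists in one call. At first I was planning to loop over them and write each item out myself. Silly me!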
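This short sketch illustrates saving whole lists in one call. pandas builds the table from a dict of equal-length lists and writes it out in a single `to_csv` call. The sample values are made up. Two details are worth knowing:

- `encoding='utf-8-sig'` (as in the source) writes a UTF-8 byte-order mark, which helps Excel recognize the file as UTF-8 and display Chinese text correctly.
- `pd.DataFrame` raises `ValueError` when the lists have different lengths. Wrapping each list in `pd.Series` pads the shorter ones with NaN instead.

`index=False` is an optional extra here that drops the row-number column.

```python
import os
import tempfile

import pandas as pd

# Made-up sample data: one list per column, as in the scraper
jobs = ['Python Developer', 'Data Engineer']
areas = ['Guangzhou', 'Shenzhen']
salaries = ['15-25K', '20-30K']

# Equal-length lists: the whole table is built and saved in one step
df = pd.DataFrame({'job': jobs, 'area': areas, 'salary': salaries})
path = os.path.join(tempfile.mkdtemp(), 'jobs.csv')
df.to_csv(path, encoding='utf-8-sig', index=False)

with open(path, 'rb') as fh:
    print(fh.read(3))  # b'\xef\xbb\xbf', the UTF-8 byte-order mark

# Unequal lengths: a plain dict of lists raises ValueError ...
requirements = ['Python, Django']  # one entry missing
try:
    pd.DataFrame({'job': jobs, 'requirements': requirements})
except ValueError as exc:
    print('ValueError:', exc)

# ... while wrapping each list in pd.Series pads the gap with NaN
padded = pd.DataFrame({'job': pd.Series(jobs), 'requirements': pd.Series(requirements)})
print(padded)
```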