完整代码:

using Intel.RealSense;

using System;

using System.Collections;

using System.Collections.Generic;

using System.Threading;

using UnityEngine;

using UnityEngine.Events;

using UI = UnityEngine.UI;

using OpenCVForUnity.CoreModule;

using OpenCVForUnity.ImgprocModule;

using OpenCVForUnity.UtilsModule;

using OpenCVForUnity.UnityUtils;

public class RsAprilTag : MonoBehaviour

{

private static TextureFormat Convert(Format lrsFormat)

{

switch (lrsFormat)

{

case Format.Z16: return TextureFormat.R16;

case Format.Disparity16: return TextureFormat.R16;

case Format.Rgb8: return TextureFormat.RGB24;

case Format.Rgba8: return TextureFormat.RGBA32;

case Format.Bgra8: return TextureFormat.BGRA32;

case Format.Y8: return TextureFormat.Alpha8;

case Format.Y16: return TextureFormat.R16;

case Format.Raw16: return TextureFormat.R16;

case Format.Raw8: return TextureFormat.Alpha8;

case Format.Disparity32: return TextureFormat.RFloat;

case Format.Yuyv:

case Format.Bgr8:

case Format.Raw10:

case Format.Xyz32f:

case Format.Uyvy:

case Format.MotionRaw:

case Format.MotionXyz32f:

case Format.GpioRaw:

case Format.Any:

default:

throw new ArgumentException(string.Format("librealsense format: {0}, is not supported by Unity", lrsFormat));

}

}

private static int BPP(TextureFormat format)

{

switch (format)

{

case TextureFormat.ARGB32:

case TextureFormat.BGRA32:

case TextureFormat.RGBA32:

return 32;

case TextureFormat.RGB24:

return 24;

case TextureFormat.R16:

return 16;

case TextureFormat.R8:

case TextureFormat.Alpha8:

return 8;

default:

throw new ArgumentException("unsupported format {0}", format.ToString());

}

}

[SerializeField] UI.RawImage _ircamPreview = null;

public RsFrameProvider Source;

[System.Serializable]

public class TextureEvent : UnityEvent<Texture> { }

public Stream _stream;

public Format _format;

public int _streamIndex;

public FilterMode filterMode = FilterMode.Point;

protected Texture2D texture;

FrameQueue q;

Predicate<Frame> matcher;

[SerializeField] Camera cam;

[SerializeField]

Vector2Int _resolution = new Vector2Int(848, 800);

[SerializeField]

int _decimation = 4;

[SerializeField]

float _tagSize = 0.05f;

[SerializeField]

Material _tagMaterial = null;

Texture2D outputTex;

Color32[] _readBuffer;

// AprilTag detector and drawer

AprilTag.TagDetector _detector;

TagDrawer _drawer;

void Start()

{

Source.OnStart += OnStartStreaming;

Source.OnStop += OnStopStreaming;

_readBuffer = new Color32[_resolution.x * _resolution.y];

// Detector and drawer

_detector = new AprilTag.TagDetector(_resolution.x, _resolution.y, _decimation);

_drawer = new TagDrawer(_tagMaterial);

}

void OnDestroy()

{

if (texture != null)

{

Destroy(texture);

texture = null;

}

if (q != null)

{

q.Dispose();

}

_detector.Dispose();

_drawer.Dispose();

}

protected void OnStopStreaming()

{

Source.OnNewSample -= OnNewSample;

if (q != null)

{

q.Dispose();

q = null;

}

}

public void OnStartStreaming(PipelineProfile activeProfile)

{

q = new FrameQueue(1);

matcher = new Predicate<Frame>(Matches);

Source.OnNewSample += OnNewSample;

}

private bool Matches(Frame f)

{

using (var p = f.Profile)

return p.Stream == _stream && p.Format == _format && (p.Index == _streamIndex || _streamIndex == -1);

}

void OnNewSample(Frame frame)

{

try

{

if (frame.IsComposite)

{

using (var fs = frame.As<FrameSet>())

using (var f = fs.FirstOrDefault(matcher))

{

if (f != null)

q.Enqueue(f);

return;

}

}

if (!matcher(frame))

return;

using (frame)

{

q.Enqueue(frame);

}

}

catch (Exception e)

{

Debug.LogException(e);

// throw;

}

}

bool HasTextureConflict(VideoFrame vf)

{

return !texture ||

texture.width != vf.Width ||

texture.height != vf.Height ||

BPP(texture.format) != vf.BitsPerPixel;

}

protected void LateUpdate()

{

if (q != null)

{

VideoFrame frame;

if (q.PollForFrame<VideoFrame>(out frame))

using (frame)

ProcessFrame(frame);

}

}

private void ProcessFrame(VideoFrame frame)

{

if (HasTextureConflict(frame))

{

if (texture != null)

{

Destroy(texture);

}

using (var p = frame.Profile) {

bool linear = (QualitySettings.activeColorSpace != ColorSpace.Linear)

|| (p.Stream != Stream.Color && p.Stream != Stream.Infrared);

texture = new Texture2D(frame.Width, frame.Height, Convert(p.Format), false, linear)

{

wrapMode = TextureWrapMode.Clamp,

filterMode = filterMode

};

}

}

texture.LoadRawTextureData(frame.Data, frame.Stride * frame.Height);

texture.Apply();

//关键代码:利用opencv将t265的图像垂直翻转

Mat mat = new Mat (texture.height, texture.width, CvType.CV_8UC1);

Utils.texture2DToMat (texture, mat, true, 1);

//利用opencv进行水平翻转

outputTex = new Texture2D (mat.cols(), mat.rows(), TextureFormat.RGBA32, false);

Utils.matToTexture2D (mat, outputTex, true, -1);

_ircamPreview.texture = outputTex;

//Takes a few frames to get the output tex

if (outputTex == null)

{

return;

}

foreach (var tag in _detector.DetectedTags)

{

Debug.Log("ID:" + tag.ID + " 坐标:" + tag.Position + " 旋转:" + tag.Rotation);

}

//Get latest read from output texture

_readBuffer = outputTex.GetPixels32();

//Get up to date FOV. Realsense D430 horizontal FOV = 86 degrees

var fov = cam.fieldOfView * Mathf.Deg2Rad;

_detector.ProcessImage(_readBuffer, fov, _tagSize);

//Left this in, but changed so much, not sure if it works.

foreach (var tag in _detector.DetectedTags)

_drawer.Draw(tag.ID, tag.Position, tag.Rotation, _tagSize);

}

}

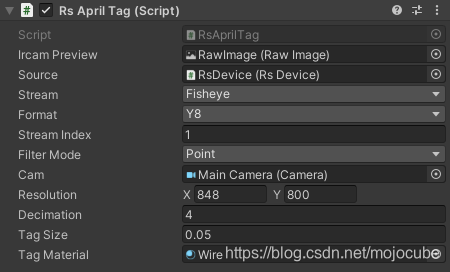

Inspector设置:

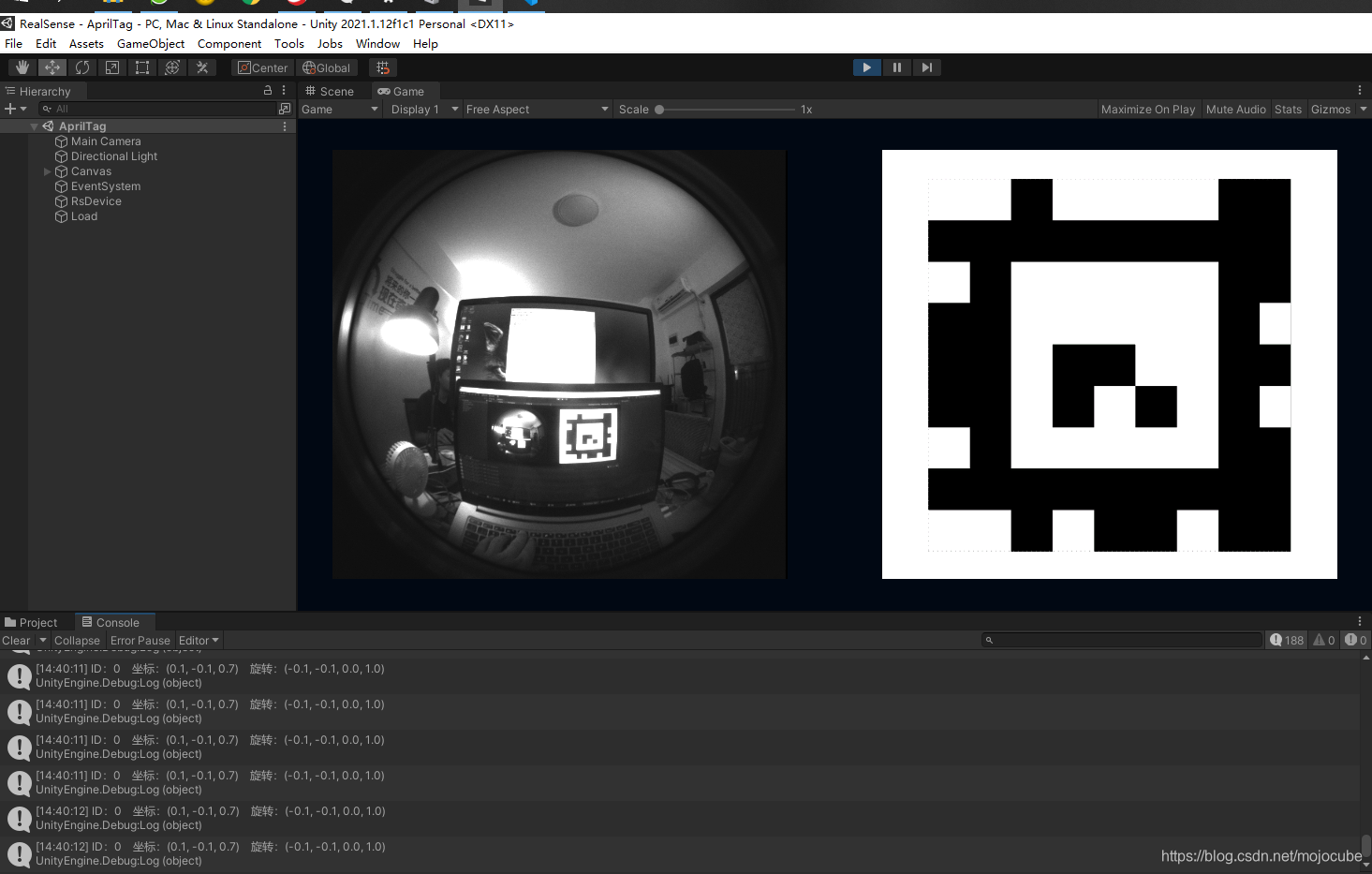

相机效果以及打印输出:

这里还没有对鱼眼镜头进行矫正,所以并不是很精确,下一步对鱼眼进行矫正。