shadertoy

Website

A site full of impressive effects written as full-screen fragment shaders.

The shaders on the site are written in GLSL.

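Porting methods:

Porting with a tool:

Tool: ShaderMan

It comes with some examples that have already been ported.

Usage: open the project, go to Window > ShaderMan, paste the code from Shadertoy's `mainImage` into it, and click Convert.

Problems: the results are not great, it can only port shaders that don't use buffer inputs/outputs, and very long shaders run into compile problems.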

Porting by hand:

This just means rewriting the GLSL code as Unity ShaderLab (Cg/HLSL).

For reference, here are Shadertoy's built-in variables:

Shader Inputs

```glsl
uniform vec3      iResolution;           // viewport resolution (in pixels)
uniform float     iTime;                 // shader playback time (in seconds)
uniform float     iTimeDelta;            // render time (in seconds)
uniform int       iFrame;                // shader playback frame
uniform float     iChannelTime[4];       // channel playback time (in seconds)
uniform vec3      iChannelResolution[4]; // channel resolution (in pixels)
uniform vec4      iMouse;                // mouse pixel coords. xy: current (if MLB down), zw: click
uniform samplerXX iChannel0…3;           // input channel. XX = 2D/Cube
uniform vec4      iDate;                 // (year, month, day, time in seconds)
uniform float     iSampleRate;           // sound sample rate (i.e., 44100)
```

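First, I recommend Feng Lele's article: a basic conversion template.

It uses macros (`#define`) to map GLSL names onto their ShaderLab/HLSL equivalents.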
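Most of these macros are plain renames, but `mod` → `fmod` is not an exact match: GLSL defines `mod(x, y) = x - y * floor(x / y)`, while HLSL's `fmod` truncates, so its result takes the sign of `x`. The two agree for positive inputs and differ for negative ones, which matters in shaders that tile space around the origin. A quick Python comparison, runnable outside Unity (`math.fmod` follows the same truncating convention as HLSL `fmod`; the function names are my own):

```python
import math


def glsl_mod(x, y):
    # GLSL definition: x - y * floor(x / y); result has the sign of y
    return x - y * math.floor(x / y)


def hlsl_fmod(x, y):
    # HLSL fmod (like C fmod / math.fmod): result has the sign of x
    return math.fmod(x, y)


if __name__ == "__main__":
    for x in (2.5, -0.25):
        print(x, glsl_mod(x, 1.0), hlsl_fmod(x, 1.0))
```

If a ported shader calls `mod` with negative values, a function-style macro such as `#define mod(x, y) ((x) - (y) * floor((x) / (y)))` reproduces the GLSL behavior.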

Here is a version of that template I modified slightly while using it:

```hlsl
Shader "ShadertoyTemplate" {
    Properties {
        iMouse ("Mouse Pos", Vector) = (100, 100, 0, 0)
        iChannel0 ("iChannel0", 2D) = "white" {}
        iChannelResolution0 ("iChannelResolution0", Vector) = (100, 100, 0, 0)
    }

    CGINCLUDE
    #include "UnityCG.cginc"
    #pragma target 3.0

    #define vec2 float2
    #define vec3 float3
    #define vec4 float4
    #define mat2 float2x2
    #define mat3 float3x3
    #define mat4 float4x4
    #define iTime _Time.y
    #define iGlobalTime _Time.y
    // GLSL mod() floors while HLSL fmod() truncates, so "#define mod fmod" differs for negative x
    #define mod(x, y) ((x) - (y) * floor((x) / (y)))
    #define mix lerp
    #define fract frac
    #define texture tex2D
    #define texture2D tex2D
    #define iResolution _ScreenParams
    // Pixel coordinates of the current fragment, like GLSL gl_FragCoord.xy
    #define gl_FragCoord ((_iParam.scrPos.xy / _iParam.scrPos.w) * _ScreenParams.xy)
    #define PI2 6.28318530718
    #define pi 3.14159265358979
    #define halfpi (pi * 0.5)
    #define oneoverpi (1.0 / pi)

    // Pixel coordinates need float: fixed may only cover about [-2, 2] on mobile
    float4 iMouse;
    sampler2D iChannel0;
    float4 iChannelResolution0;

    struct v2f {
        float4 pos : SV_POSITION;
        float4 scrPos : TEXCOORD0;
    };

    v2f vert(appdata_base v) {
        v2f o;
        o.pos = UnityObjectToClipPos(v.vertex);
        o.scrPos = ComputeScreenPos(o.pos);
        return o;
    }

    vec4 main(vec2 fragCoord);

    float4 frag(v2f _iParam) : SV_Target {
        vec2 fragCoord = gl_FragCoord;
        return main(fragCoord);
    }

    // Paste the body of Shadertoy's mainImage here and return the color instead of writing fragColor
    vec4 main(vec2 fragCoord) {
        return vec4(1, 1, 1, 1);
    }
    ENDCG

    SubShader {
        Pass {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #pragma fragmentoption ARB_precision_hint_fastest
            ENDCG
        }
    }

    FallBack Off
}
```
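The least obvious macro in the template is `gl_FragCoord`. `ComputeScreenPos` returns a position before the perspective divide; dividing by `w` gives 0..1 screen coordinates, and multiplying by `_ScreenParams.xy` turns them into pixels, which is what Shadertoy's `fragCoord` holds. Below is a Python mirror of that math, written from my reading of UnityCG.cginc (stereo rendering ignored); `flip_y` stands in for `_ProjectionParams.x`, and both function names are hypothetical:

```python
def compute_screen_pos(clip, flip_y=1.0):
    """Mirror of UnityCG's ComputeScreenPos (non-stereo path).
    clip is (x, y, z, w) in clip space; flip_y stands in for _ProjectionParams.x."""
    x, y, z, w = clip
    return (x * 0.5 + w * 0.5, y * 0.5 * flip_y + w * 0.5, z, w)


def frag_coord(scr_pos, width, height):
    """What the template's gl_FragCoord macro computes:
    perspective divide, then scale by _ScreenParams.xy (width, height)."""
    sx, sy, _, sw = scr_pos
    return (sx / sw * width, sy / sw * height)


if __name__ == "__main__":
    # The centre of clip space should land on the centre pixel of a 1920x1080 target
    print(frag_coord(compute_screen_pos((0.0, 0.0, 0.5, 1.0)), 1920, 1080))
```

The divide by `w` is why the macro divides `scrPos.xy` by `scrPos.w` per fragment instead of in the vertex shader: interpolation has to happen on the undivided values.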

Mouse binding (a script that writes the mouse position into `iMouse`):

```csharp
using UnityEngine;
using System.Collections;

// Writes the mouse position into the material's iMouse every frame:
// xy = Input.mousePosition (screen pixels), z = 1 while dragging, 0 otherwise.
public class ShaderToyHelper : MonoBehaviour {
    private Material _material = null;
    private bool _isDragging = false;

    // Use this for initialization
    void Start () {
        Renderer render = GetComponent<Renderer>();
        if (render != null) {
            _material = render.material;
        }
        _isDragging = false;
    }

    // Update is called once per frame
    void Update () {
        Vector3 mousePosition = Vector3.zero;
        if (_isDragging) {
            mousePosition = new Vector3(Input.mousePosition.x, Input.mousePosition.y, 1.0f);
        } else {
            mousePosition = new Vector3(Input.mousePosition.x, Input.mousePosition.y, 0.0f);
        }
        if (_material != null) {
            // Vector3 converts implicitly to Vector4 (w = 0)
            _material.SetVector("iMouse", mousePosition);
        }
    }

    // OnMouseDown/OnMouseUp are only sent if this GameObject has a Collider
    void OnMouseDown() {
        _isDragging = true;
    }

    void OnMouseUp() {
        _isDragging = false;
    }
}
```
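The helper above sends the live cursor position in `xy` and a pressed flag in `z`, which is enough for shaders that only test `iMouse.z > 0`. Some shaders also read the click position from `zw`. A minimal sketch of bookkeeping closer to the uniform comment ("xy: current (if MLB down), zw: click"), in Python so it can be tested outside Unity; the class is hypothetical, and real Shadertoy additionally encodes button state in the signs of `z` and `w`, which is not modelled here:

```python
class ShadertoyMouse:
    """Hypothetical, simplified iMouse bookkeeping.
    NOT modelled: Shadertoy's sign encoding of button state in z and w."""

    def __init__(self):
        self.value = (0.0, 0.0, 0.0, 0.0)
        self._was_down = False

    def update(self, x, y, button_down):
        """Call once per frame with the cursor position and left-button state."""
        cur_x, cur_y, click_x, click_y = self.value
        if button_down:
            if not self._was_down:       # pressed this frame: remember the click
                click_x, click_y = x, y
            cur_x, cur_y = x, y          # xy only follows the cursor while held
        else:
            click_x, click_y = 0.0, 0.0  # released: z == 0 reads as "not pressed"
        self._was_down = button_down
        self.value = (cur_x, cur_y, click_x, click_y)
        return self.value


if __name__ == "__main__":
    m = ShadertoyMouse()
    print(m.update(10, 20, True))   # (10, 20, 10, 20)
    print(m.update(30, 40, True))   # (30, 40, 10, 20)
    print(m.update(50, 60, False))  # (30, 40, 0.0, 0.0)
```

The same state machine would go into `Update()` of the C# helper, with `Input.GetMouseButton(0)` as `button_down`.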

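Usage: paste the code from Shadertoy's `mainImage` into the template's `main` function, replace the final `fragColor = ...;` with `return ...;`, then fix the compile errors one by one by rewriting them into statements ShaderLab accepts. (If the shader has several helper functions, paste those in as well, defined before `main` uses them.)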
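Part of this is mechanical enough to script. Below is a hypothetical Python helper (not part of ShaderMan or any other tool) that does three of the edits: it rewrites the `mainImage` signature into the template's `vec4 main(vec2 fragCoord)`, turns `fragColor = ...;` into `return ...;`, and expands single-literal constructors like `vec2(1.)` into `vec2(1., 1.)`. It assumes `fragColor` is assigned exactly once, as the last statement; anything else still has to be fixed by hand.

```python
import re

# Hypothetical porting helper; assumes fragColor is assigned once, at the end.
_LITERAL = r"[-+]?(?:\d+\.\d*|\.\d+|\d+)(?:[eE][-+]?\d+)?"


def port_main_image(src: str) -> str:
    # 1. void mainImage(out vec4 fragColor, in vec2 fragCoord) -> vec4 main(vec2 fragCoord)
    src = re.sub(
        r"void\s+mainImage\s*\(\s*out\s+vec4\s+fragColor\s*,\s*(?:in\s+)?vec2\s+fragCoord\s*\)",
        "vec4 main(vec2 fragCoord)",
        src,
    )
    # 2. fragColor = expr;  ->  return expr;  (ignores fragColor.rgb = ... and ==)
    src = re.sub(r"\bfragColor\s*=(?!=)\s*", "return ", src)

    # 3. vec2(1.) -> vec2(1., 1.); only numeric literals, so vec3(v) is left alone
    def expand(m):
        n = int(m.group(2))
        return f"{m.group(1)}({', '.join([m.group(3)] * n)})"

    return re.sub(rf"\b(vec([234]))\(\s*({_LITERAL})\s*\)", expand, src)


if __name__ == "__main__":
    glsl = ("void mainImage( out vec4 fragColor, in vec2 fragCoord )\n"
            "{\n    vec2 e = vec2(1.)/iResolution.xy;\n"
            "    fragColor = vec4(e, 0., 1.);\n}\n")
    print(port_main_image(glsl))
```

Restricting step 3 to literals is deliberate: `vec3(v)` with a vector argument is a copy, and expanding it would produce a constructor with too many components.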

Problems: this still only works for shaders without buffer inputs/outputs, and some definitions still have to be adjusted by hand.

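Porting shaders that use buffers

Refer to these two links:

Shadertoy water ripples

Implementing water ripples (Bilibili video)

The two look unrelated, but read them side by side and you have everything you need.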

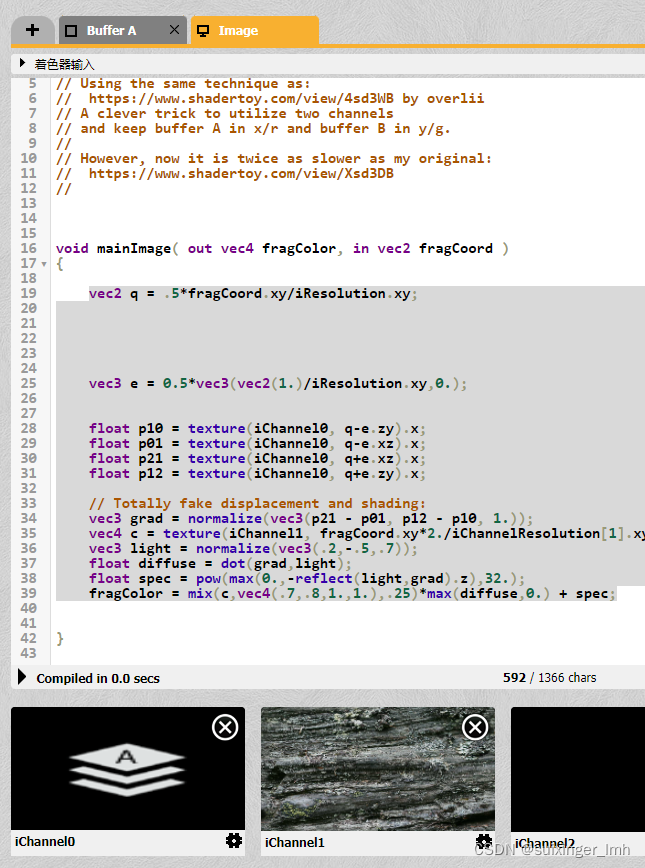

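A porting example that uses a buffer

I needed this for work. I had found the effect on Shadertoy but just could not get it ported. After being stuck for two days, I came across a video on Bilibili (the one linked above) and it opened up a whole new world. I followed the video once, then ported this shader on my own. It worked!

I recommend watching the Bilibili video above; the principle is the same.

Porting steps: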

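1. First open the water-ripple page on Shadertoy.

   It uses one buffer: Buffer A renders its result into a buffer that is bound as its own iChannel0, so every frame it reads its previous output; the Image pass then reads that same buffer through its iChannel0 and outputs the final picture.

2. Comparing this with the Bilibili video above, it turns out that iChannel0 is really just a RenderTexture, and Buffer A is just a shader that computes data into it.

   Key code: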

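```csharp
// Excerpt: bufferMat and tempRT are fields of the full script at the end of this post
public RenderTexture bufferA;

public RenderTexture CreateRT()
{
    // This RenderTextureFormat matters a lot: try different formats and the result changes
    // (ARGB32 has 8 bits per channel, ARGBFloat a 32-bit float per channel)
    RenderTexture rt = new RenderTexture(1920, 1080, 0, RenderTextureFormat.ARGBFloat);
    // RenderTexture rt = new RenderTexture(1920, 1080, 0, RenderTextureFormat.ARGB32);
    rt.Create();
    return rt;
}

// Run one step of the simulation
public void DrawAt(float x, float y, float z)
{
    // These are the two inputs Buffer A needs
    bufferMat.SetTexture("iChannel0", bufferA);
    bufferMat.SetVector("iMouse", new Vector4(x, y, z));

    // Run the Buffer A shader (bufferMat) and store the result in tempRT,
    // like Shadertoy writing into a buffer. This is the key step.
    Graphics.Blit(null, tempRT, bufferMat);

    // Swap, so the next frame reads this frame's result (ping-pong)
    RenderTexture rt = tempRT;
    tempRT = bufferA;
    bufferA = rt;
}
```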

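3. One important step: port Buffer A's `mainImage` into a Unity shader. This is easy: take a copy of the Shadertoy template above, paste all of Buffer A's `mainImage` code into the template's `main` function, and fix the errors.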

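   Some basic errors:

```hlsl
vec2(1.)/iResolution.xy  // write it out in full as vec2(1,1); the single-argument form does not compile
d *= float(iFrame>=2);   // iFrame is a Shadertoy built-in variable (the frame counter); I don't know its Unity counterpart.
                         // Deleting this line in Shadertoy and recompiling changes nothing visible, so just comment it out.
```

   These are all basic problems; with a bit of thought almost all of them can be solved.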

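- At this point Buffer A is done. From here there are two options:

  - Write a shader for `mainImage`, attach it to an object, and pass in the Buffer A RenderTexture from the previous step as its iChannel0.

  - Or, following the same method, render another RenderTexture that holds the final output.

  The two are essentially the same; it depends on how you want to use the result.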

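- Problems I ran into:

  The various resolutions involved are easy to mix up; I haven't fully worked them out myself, so be careful here.

  After finishing everything, the mouse position was off at runtime and the ripples were not perfect circles (probably related to the resolution setting in one of the steps). With the resolutions set reasonably (I'm still not sure why), maximizing the window and restoring it fixed things. (Resizing the window sometimes still causes strange behavior.)

Overall these are minor issues; the effect ports over perfectly.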
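A likely cause of the offset mouse and oval ripples, though I have not confirmed it: the RenderTextures are hard-coded to 1920×1080, while `Input.mousePosition` is in Game-view pixels. During `Graphics.Blit`, `_ScreenParams` should report the render target's size (worth verifying in your Unity version), so the shader's `fragCoord` is in RT pixels and the mouse only lines up when the window is also 1920×1080, which would fit with maximizing the window helping. Likewise, if the RT is shown in a RawImage with a different aspect ratio, the circles get stretched. A sketch of the rescaling in Python (function names are hypothetical; the same arithmetic would be applied to the arguments passed to `DrawAt`):

```python
def screen_to_rt(mouse_x, mouse_y, screen_w, screen_h, rt_w, rt_h):
    """Map Input.mousePosition (screen pixels) to RenderTexture pixels.
    Assumes the RT is stretched over the whole screen."""
    return mouse_x * rt_w / screen_w, mouse_y * rt_h / screen_h


def keeps_circles_round(screen_w, screen_h, rt_w, rt_h, tol=1e-3):
    """Ripples stay circular only if the RT is displayed at its own aspect ratio."""
    return abs(rt_w / rt_h - screen_w / screen_h) < tol


if __name__ == "__main__":
    # A 960x540 window showing a 1920x1080 RT: the mouse must be scaled by 2
    print(screen_to_rt(480, 270, 960, 540, 1920, 1080))
    print(keeps_circles_round(1280, 1024, 1920, 1080))
```

An alternative that avoids the mapping altogether is to create the RTs with `Screen.width` × `Screen.height` and recreate them when the window size changes.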

Below is the complete code: just two shaders and one script that sets up the RenderTextures.

The ported Buffer A shader:

```hlsl
// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'
Shader "CalculateBuffer" {
    Properties {
        iMouse ("Mouse Pos", Vector) = (100, 100, 0, 0)
        iChannel0 ("iChannel0", 2D) = "white" {}
        iChannelResolution0 ("iChannelResolution0", Vector) = (100, 100, 0, 0)
    }

    CGINCLUDE
    #include "UnityCG.cginc"
    #pragma target 3.0

    #define vec2 float2
    #define vec3 float3
    #define vec4 float4
    #define mat2 float2x2
    #define mat3 float3x3
    #define mat4 float4x4
    #define iTime _Time.y
    #define iGlobalTime _Time.y
    #define mod(x, y) ((x) - (y) * floor((x) / (y)))  // GLSL-style mod, unlike HLSL fmod
    #define mix lerp
    #define fract frac
    #define texture tex2D
    #define texture2D tex2D
    #define iResolution _ScreenParams
    #define gl_FragCoord ((_iParam.scrPos.xy / _iParam.scrPos.w) * _ScreenParams.xy)
    #define PI2 6.28318530718
    #define pi 3.14159265358979
    #define halfpi (pi * 0.5)
    #define oneoverpi (1.0 / pi)

    float4 iMouse;
    sampler2D iChannel0;            // this buffer's own previous frame
    float4 iChannelResolution0;

    struct v2f {
        float4 pos : SV_POSITION;
        float4 scrPos : TEXCOORD0;
    };

    v2f vert(appdata_base v) {
        v2f o;
        o.pos = UnityObjectToClipPos(v.vertex);
        o.scrPos = ComputeScreenPos(o.pos);
        return o;
    }

    vec4 main(vec2 fragCoord);

    // float4 output so the ARGBFloat target keeps full precision
    float4 frag(v2f _iParam) : SV_Target {
        vec2 fragCoord = gl_FragCoord;
        return main(fragCoord);
    }

    vec4 main(vec2 fragCoord) {
        // Only the lower-left quarter of the buffer is simulated (half resolution)
        vec2 res = iResolution.xy;
        res /= 2.;
        if (fragCoord.x > res.x || fragCoord.y > res.y)
            discard;

        vec3 e = vec3(vec2(1,1)/iResolution.xy, 0.);  // one-texel offset
        vec2 q = fragCoord.xy/iResolution.xy;          // map to 0..1 UV
        vec4 c = texture(iChannel0, q);

        float p11 = c.y;
        float p10 = texture(iChannel0, q-e.zy).x;
        float p01 = texture(iChannel0, q-e.xz).x;
        float p21 = texture(iChannel0, q+e.xz).x;
        float p12 = texture(iChannel0, q+e.zy).x;

        float d = 0.;
        if (iMouse.z > 0.)
        {
            // Mouse interaction:
            vec2 m = iMouse.xy;
            m /= 2.;
            d = smoothstep(4.5,.5,length(m - fragCoord));
        }
        else
        {
            // Simulate rain drops
            float t = iTime*2.;
            vec2 pos = fract(floor(t)*vec2(0.456665,0.708618))*res;  // was iResolution.xy
            float amp = 1.-step(.05,fract(t));
            d = -amp*smoothstep(2.5,.5,length(pos - fragCoord.xy/2.));
        }

        // The actual propagation:
        d += (-(p11-.5)*2. + (p10 + p01 + p21 + p12 - 2.));
        d *= .99; // dampening
        // d *= float(iFrame>=2); // clear the buffer at iFrame < 2
        d = d*.5 + .5;

        // Put previous state as "y" (Shadertoy: fragColor = vec4(d, c.x, 0, 0);)
        return vec4(d, c.x, 0, 0);
    }
    ENDCG

    SubShader {
        Pass {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #pragma fragmentoption ARB_precision_hint_fastest
            ENDCG
        }
    }

    FallBack Off
}
```
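To see what Buffer A actually computes, here is its propagation step rewritten as a plain CPU loop in Python. It is an illustration, not something to ship: the mouse and rain-drop injection are left out, edge texels are clamped (an assumption about the RT's wrap mode), and the two grids are swapped after each step exactly like `bufferA`/`tempRT` in `DrawAt`. Decoded (h = 2x - 1), each step computes h_new = 0.99 * (0.5 * (sum of the 4 neighbour heights) - h_prev), a discrete wave equation.

```python
# Hypothetical CPU port of the Buffer A propagation step, for illustration only.
# Each texel holds (x, y): x = current height, y = previous height,
# both encoded as h * 0.5 + 0.5, so the resting surface (h = 0) reads 0.5.

REST = (0.5, 0.5)


def make_grid(w, h):
    """A w x h buffer filled with the resting value."""
    return [[REST] * w for _ in range(h)]


def height_at(buf, i, j):
    """Channel x of texel (i, j), clamped at the edges (assumed Clamp wrap mode)."""
    rows, cols = len(buf), len(buf[0])
    i = min(max(i, 0), cols - 1)
    j = min(max(j, 0), rows - 1)
    return buf[j][i][0]


def buffer_a_step(src, dst, damping=0.99):
    """Read the previous frame from src and write the new frame into dst,
    like Graphics.Blit(null, tempRT, bufferMat) with iChannel0 = bufferA."""
    for j in range(len(src)):
        for i in range(len(src[0])):
            cx, cy = src[j][i]
            p11 = cy                          # this texel's previous height
            p10 = height_at(src, i, j - 1)    # q - e.zy (below)
            p01 = height_at(src, i - 1, j)    # q - e.xz (left)
            p21 = height_at(src, i + 1, j)    # q + e.xz (right)
            p12 = height_at(src, i, j + 1)    # q + e.zy (above)
            d = -(p11 - 0.5) * 2.0 + (p10 + p01 + p21 + p12 - 2.0)
            d *= damping                      # dampening
            dst[j][i] = (d * 0.5 + 0.5, cx)   # new height in x, old height in y


if __name__ == "__main__":
    size = 9
    buffer_a, temp_rt = make_grid(size, size), make_grid(size, size)
    buffer_a[4][4] = (1.0, 0.5)               # one "drop" in the middle
    for frame in range(3):
        buffer_a_step(buffer_a, temp_rt)
        buffer_a, temp_rt = temp_rt, buffer_a  # ping-pong swap, as in DrawAt()
    print([round(x, 3) for x, _ in buffer_a[4]])
```

The swap is the whole reason two RenderTextures are needed: a pass cannot safely read from and write to the same texture, so each frame reads one and writes the other.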

The ported `mainImage` (Image pass) shader:

```hlsl
// Upgrade NOTE: replaced 'mul(UNITY_MATRIX_MVP,*)' with 'UnityObjectToClipPos(*)'
Shader "BlenderShader" {
    Properties {
        iMouse ("Mouse Pos", Vector) = (100, 100, 0, 0)
        iChannel0 ("iChannel0", 2D) = "white" {}
        iChannel1 ("iChannel1", 2D) = "white" {}
        iChannelResolution0 ("iChannelResolution0", Vector) = (100, 100, 0, 0)
        iChannelResolution1 ("iChannelResolution1", Vector) = (100, 100, 0, 0)
    }

    CGINCLUDE
    #include "UnityCG.cginc"
    #pragma target 3.0

    #define vec2 float2
    #define vec3 float3
    #define vec4 float4
    #define mat2 float2x2
    #define mat3 float3x3
    #define mat4 float4x4
    #define iTime _Time.y
    #define iGlobalTime _Time.y
    #define mod(x, y) ((x) - (y) * floor((x) / (y)))  // GLSL-style mod, unlike HLSL fmod
    #define mix lerp
    #define fract frac
    #define texture tex2D
    #define texture2D tex2D
    #define iResolution _ScreenParams
    #define gl_FragCoord ((_iParam.scrPos.xy / _iParam.scrPos.w) * _ScreenParams.xy)
    #define PI2 6.28318530718
    #define pi 3.14159265358979
    #define halfpi (pi * 0.5)
    #define oneoverpi (1.0 / pi)

    float4 iMouse;
    sampler2D iChannel0;          // Buffer A (ripple heights)
    float4 iChannelResolution0;
    sampler2D iChannel1;          // background texture
    float4 iChannelResolution1;   // pixel size of iChannel1; the property default is only (100, 100)

    struct v2f {
        float4 pos : SV_POSITION;
        float4 scrPos : TEXCOORD0;
    };

    v2f vert(appdata_base v) {
        v2f o;
        o.pos = UnityObjectToClipPos(v.vertex);
        o.scrPos = ComputeScreenPos(o.pos);
        return o;
    }

    vec4 main(vec2 fragCoord);

    float4 frag(v2f _iParam) : SV_Target {
        vec2 fragCoord = gl_FragCoord;
        return main(fragCoord);
    }

    vec4 main(vec2 fragCoord) {
        // Buffer A only simulates its lower-left quarter, so sample at half the coordinates
        vec2 q = .5*fragCoord.xy/iResolution.xy;
        vec3 e = 0.5*vec3(vec2(1,1)/iResolution.xy,0.);
        float p10 = texture(iChannel0, q-e.zy).x;
        float p01 = texture(iChannel0, q-e.xz).x;
        float p21 = texture(iChannel0, q+e.xz).x;
        float p12 = texture(iChannel0, q+e.zy).x;

        // Totally fake displacement and shading:
        vec3 grad = normalize(vec3(p21 - p01, p12 - p10, 1.));
        vec4 c = texture(iChannel1, fragCoord.xy*2./iChannelResolution1.xy + grad.xy*.35);
        vec3 light = normalize(vec3(.2,-.5,.7));
        float diffuse = dot(grad,light);
        float spec = pow(max(0.,-reflect(light,grad).z),32.);

        // Shadertoy: fragColor = mix(c,vec4(.7,.8,1.,1.),.25)*max(diffuse,0.) + spec;
        return mix(c,vec4(.7,.8,1.,1.),.25)*max(diffuse,0.) + spec;
    }
    ENDCG

    SubShader {
        Pass {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #pragma fragmentoption ARB_precision_hint_fastest
            ENDCG
        }
    }

    FallBack Off
}
```
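The Image pass never displaces any geometry; as its own comment says, the shading is faked from the height field. The neighbour differences become a normal, which offsets the background lookup (`grad.xy * .35`) and drives a diffuse and a specular term. The same math in Python, for checking numbers outside Unity (helper names are mine; `reflect` follows the GLSL/HLSL definition I - 2*dot(N, I)*N):

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(i, n):
    """GLSL/HLSL reflect: I - 2 * dot(N, I) * N, with N normalized."""
    k = 2.0 * dot(n, i)
    return tuple(a - k * b for a, b in zip(i, n))


def shade(p10, p01, p21, p12):
    """Normal, diffuse and specular terms from the four neighbour heights."""
    grad = normalize((p21 - p01, p12 - p10, 1.0))
    light = normalize((0.2, -0.5, 0.7))
    diffuse = dot(grad, light)
    spec = max(0.0, -reflect(light, grad)[2]) ** 32
    return grad, diffuse, spec


def final_color(c, diffuse, spec):
    """mix(c, vec4(.7, .8, 1, 1), .25) * max(diffuse, 0) + spec, per channel."""
    tint = (0.7, 0.8, 1.0, 1.0)
    return tuple((a * 0.75 + t * 0.25) * max(diffuse, 0.0) + spec
                 for a, t in zip(c, tint))


if __name__ == "__main__":
    grad, diffuse, spec = shade(0.5, 0.4, 0.6, 0.5)  # surface sloping along +x
    print(grad, diffuse, spec)
    print(final_color((1.0, 1.0, 1.0, 1.0), diffuse, spec))
```

On a flat surface the normal is (0, 0, 1) and the lookup offset is zero, so the background shows through undistorted apart from the tint and lighting.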

The script that sets up the render buffers:

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.UI;

public class NewWater : MonoBehaviour
{
    // Render targets: bufferA/tempRT are the ping-pong pair for the simulation
    public RenderTexture bufferA;
    public RenderTexture tempRT;
    public Texture _Texture;          // background texture, used as iChannel1
    public RenderTexture ShowRT;      // final image
    public Shader blendShader;        // the mainImage (Image pass) shader
    public Material blendMat;
    public Shader bufferShader;       // the Buffer A shader
    public Material bufferMat;
    public Camera mainCamera;
    public RawImage image;            // UI element that displays ShowRT

    // Start is called before the first frame update
    void Start()
    {
        mainCamera = Camera.main;
        bufferA = CreateRT();
        tempRT = CreateRT();
        ShowRT = CreateRT();
        bufferMat = new Material(bufferShader);
        blendMat = new Material(blendShader);
        image.texture = ShowRT;
        // The Image shader scales its background lookup by iChannelResolution1,
        // so pass the real texture size instead of the (100, 100) property default
        if (_Texture != null)
        {
            blendMat.SetVector("iChannelResolution1", new Vector4(_Texture.width, _Texture.height, 0, 0));
        }
        // bufferA and tempRT are swapped every frame, so this material keeps whichever
        // RT was bufferA here; that RT is only written every other frame
        GetComponent<Renderer>().material.SetTexture("iChannel0", bufferA);
    }

    // Update is called once per frame
    void Update()
    {
        if (Input.GetMouseButton(0))
        {
            DrawAt(Input.mousePosition.x, Input.mousePosition.y, 1);
            // Debug.Log(Input.mousePosition.x);
            //Ray ray = mainCamera.ScreenPointToRay(Input.mousePosition);
            //RaycastHit hit;
            //if (Physics.Raycast(ray, out hit))
            //{
            //    DrawAt(Input.mousePosition.x, Input.mousePosition.y, 1);
            //    Debug.Log(Input.mousePosition.x);
            //    // DrawAt(hit.textureCoord.x, hit.textureCoord.y, 1);
            //}
        }
        else
        {
            // z = 1 keeps Buffer A in its mouse branch (a constant disturbance at (100, 100));
            // z = 0 would switch it to the rain-drop branch instead
            DrawAt(100, 100, 1);
        }
        blendMat.SetTexture("iChannel0", bufferA);
        blendMat.SetTexture("iChannel1", _Texture);
        // Run the Image (blend) shader and write the final picture into ShowRT
        Graphics.Blit(null, ShowRT, blendMat);
        //Graphics.Blit(TempRT, PrevRT);
        //RenderTexture rt = PrevRT;
        //PrevRT = CurrentRT;
        //CurrentRT = rt;
    }

    public void DrawAt(float x, float y, float z)
    {
        bufferMat.SetTexture("iChannel0", bufferA);
        bufferMat.SetVector("iMouse", new Vector4(x, y, z));
        // Run Buffer A (bufferMat) and store the result in tempRT,
        // like Shadertoy writing into a buffer
        Graphics.Blit(null, tempRT, bufferMat);
        // Ping-pong: this frame's result becomes next frame's input
        RenderTexture rt = tempRT;
        tempRT = bufferA;
        bufferA = rt;
    }

    public RenderTexture CreateRT()
    {
        RenderTexture rt = new RenderTexture(1920, 1080, 0, RenderTextureFormat.ARGBFloat);
        // RenderTexture rt = new RenderTexture(1920, 1080, 0, RenderTextureFormat.ARGB32);
        rt.Create();
        return rt;
    }
}
```
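On the `RenderTextureFormat` choice in `CreateRT`: Buffer A stores heights as `d*.5 + .5`, and from one frame to the next, away from the disturbance, the changes can be much smaller than 1/255. With `ARGB32` each channel has only 8 bits, so such changes get rounded away, while `ARGBFloat` keeps them. A Python sketch of the two round trips (the 8-bit one approximates the GPU's float-to-UNORM conversion with clamp plus round-to-nearest; function names are hypothetical):

```python
import struct


def store_argb32(v):
    """Round-trip a value through one 8-bit UNORM channel (ARGB32).
    Approximation: clamp to [0, 1], then round to the nearest of 256 steps."""
    v = min(max(v, 0.0), 1.0)
    return round(v * 255.0) / 255.0


def store_argbfloat(v):
    """Round-trip a value through one 32-bit float channel (ARGBFloat)."""
    return struct.unpack("f", struct.pack("f", v))[0]


if __name__ == "__main__":
    # Two slightly different wave heights, already encoded as d*0.5 + 0.5
    a, b = 0.5 + 0.0001, 0.5 + 0.0009
    print(store_argb32(a) == store_argb32(b))        # True: same 8-bit step
    print(store_argbfloat(a) == store_argbfloat(b))  # False: float keeps them apart
```

This is also why the buffer shader above outputs `float4` rather than `fixed4`: a high-precision target does not help if the value is already rounded before it is written.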