Preface

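Over New Year's Day I couldn't sleep and didn't want to sit around idle, so I decided to integrate Baidu AI for fun and settled on the human body analysis service of Baidu AI Cloud.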

Implementation steps

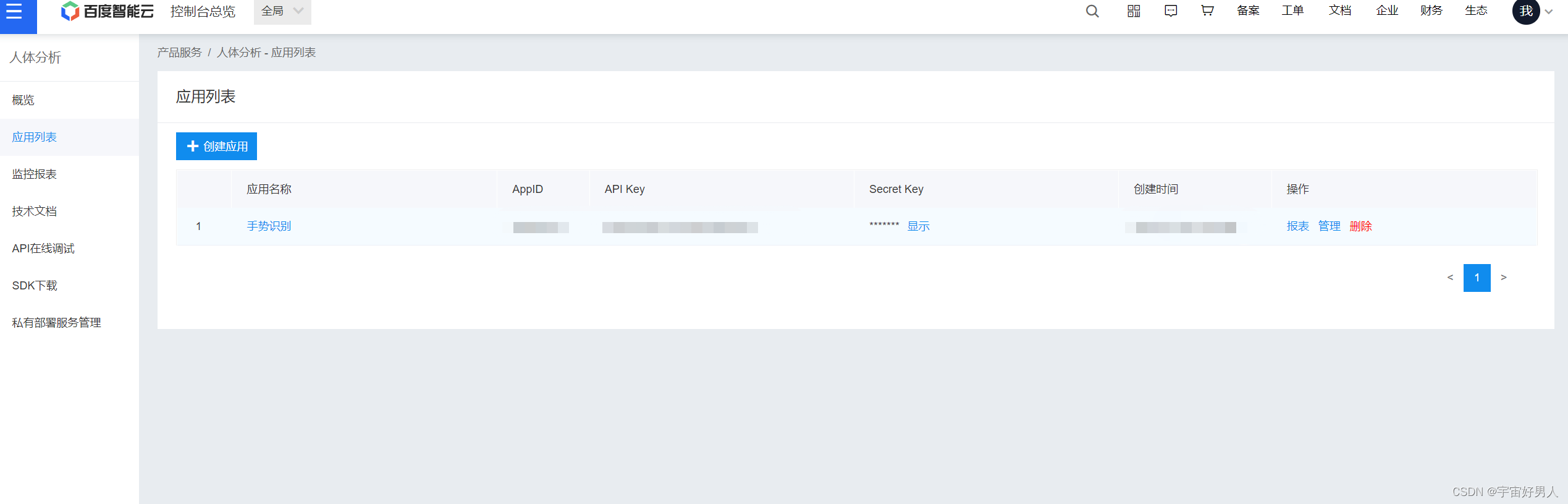

1. Create a Body Analysis application on Baidu AI Cloud to obtain your own API Key and Secret Key
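For context on what the two keys are for: Baidu AI Cloud exchanges them for an access token at its OAuth endpoint `https://aip.baidubce.com/oauth/2.0/token`, with the API Key passed as `client_id`, the Secret Key as `client_secret`, and `grant_type=client_credentials`. The SDK used later is expected to do this for you when the client is constructed, so the following is only a sketch that builds the request URL without making any network call. `BaiduAuth` and `BuildTokenUrl` are hypothetical names; the empty-key check guards against the easy mistake of leaving `api_key`/`secret_key` blank in the Inspector.

```csharp
using System;

// Hypothetical helper: builds the OAuth token request URL that the
// API Key / Secret Key are used for. It does not send the request.
public static class BaiduAuth
{
    // Baidu AI Cloud's OAuth token endpoint.
    const string TokenEndpoint = "https://aip.baidubce.com/oauth/2.0/token";

    public static string BuildTokenUrl(string apiKey, string secretKey)
    {
        // Fail early if either key was never filled in.
        if (string.IsNullOrEmpty(apiKey) || string.IsNullOrEmpty(secretKey))
            throw new ArgumentException("API Key and Secret Key must both be set.");

        // Keys are URL-escaped so unusual characters cannot break the query string.
        return TokenEndpoint
            + "?grant_type=client_credentials"
            + "&client_id=" + Uri.EscapeDataString(apiKey)
            + "&client_secret=" + Uri.EscapeDataString(secretKey);
    }
}
```

The JSON response to this request carries the `access_token` that subsequent API calls use.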

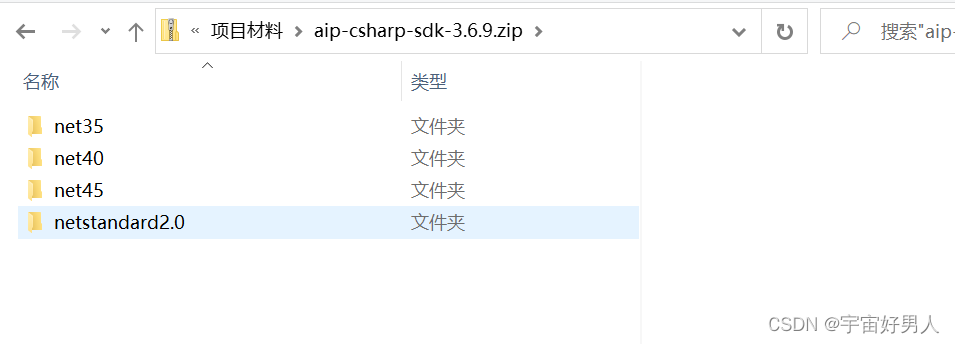

2. Download the matching SDK

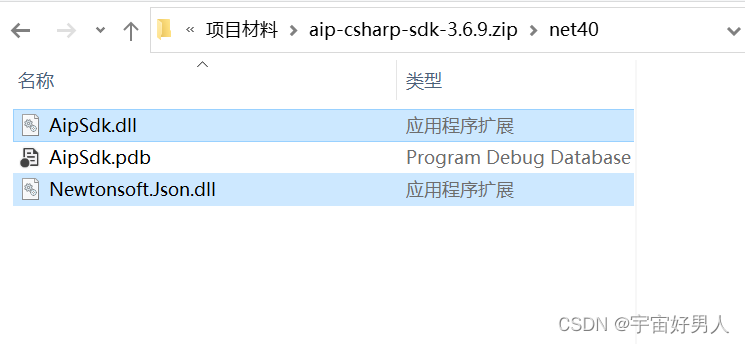

Extracting the downloaded archive gives these four folders:

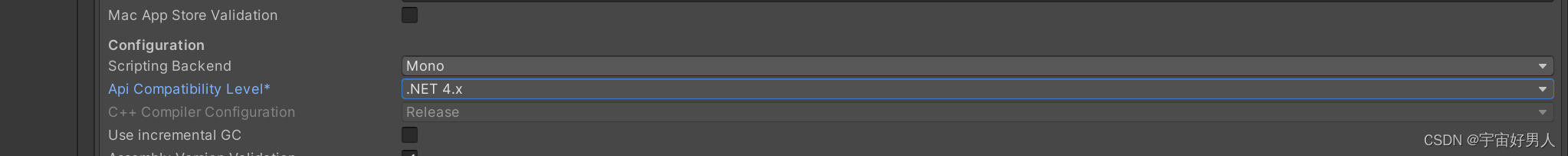

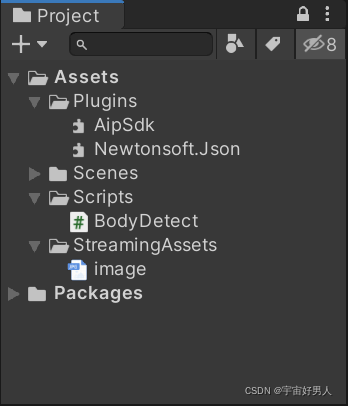

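The number at the end of each folder name is the .NET Framework version the DLLs were built for; the matching version can be selected in the Unity project's Player Settings. I am using .NET 4, so I imported the DLL files from the net40 folder into the Plugins folder of the Unity project.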

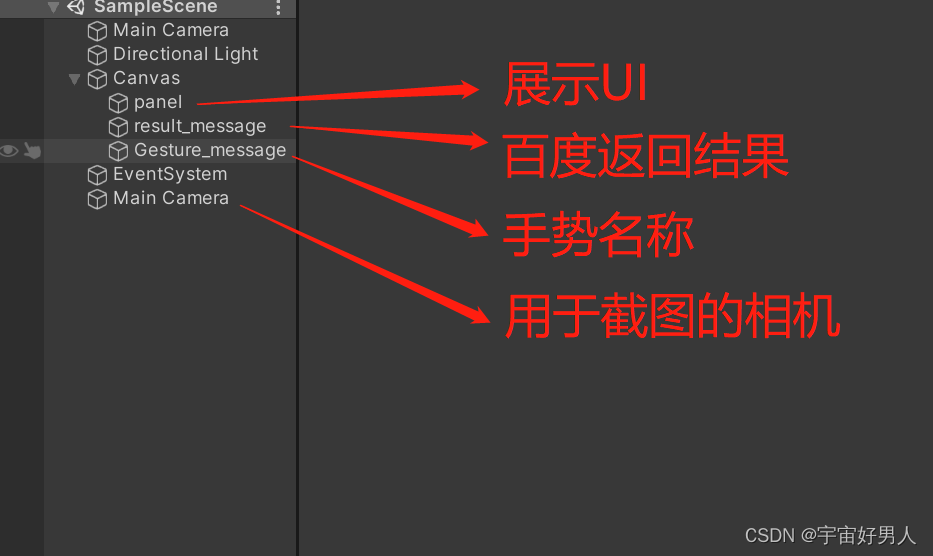

3. Create the UI used to display the results

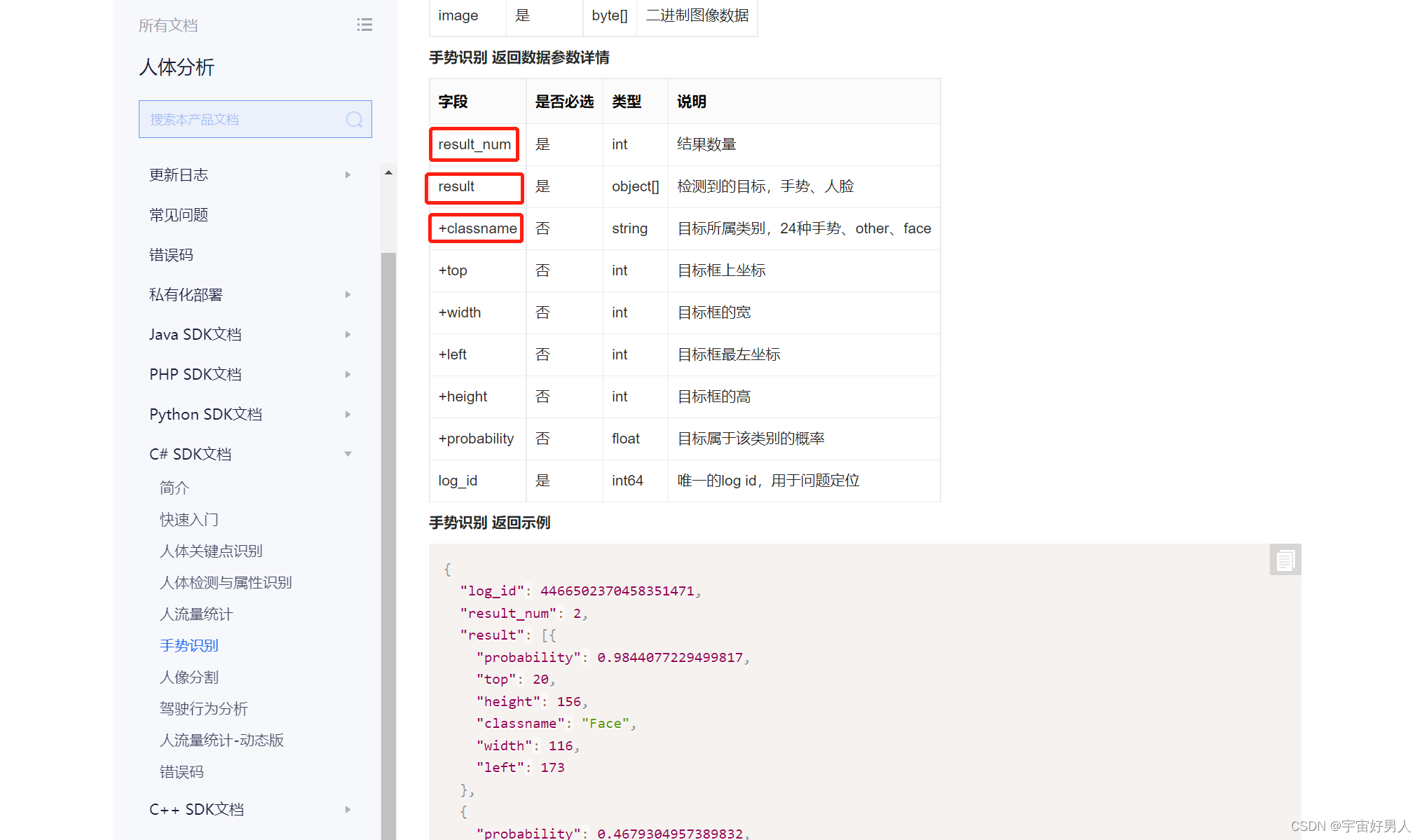

4. Read the API documentation and write the code

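Since this demo is mainly about gesture recognition, I focused on the parameters of the gesture recognition API; the ones circled above are the ones used here.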
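The gesture recognition response is JSON. Based on Baidu's documentation (check it against the current API reference), a successful response contains `log_id`, `result_num` and a `result` array whose entries carry `classname`, `probability` and the box fields `left`, `top`, `width`, `height`; an error response carries `error_code` and `error_msg` instead. Reading fields by name is more robust than splitting the response text on `,` and `:`, because it does not depend on key order or formatting. The sketch below uses System.Text.Json only so that it runs on its own under modern .NET; that library is not part of the .NET 4 class library this Unity project targets, where you would walk the Newtonsoft `JObject` returned by the SDK in the same way. `GestureParser`, `TopGesture` and the `minProbability` threshold are hypothetical, and picking the highest-probability entry is my own choice, not something the API prescribes.

```csharp
using System.Text.Json;

// Hypothetical helper: extracts the most likely gesture name from a
// gesture recognition response body.
public static class GestureParser
{
    // Returns the "classname" with the highest "probability" that is at least
    // minProbability, or null if there is no usable "result" array
    // (for example an error response) or no entry passes the threshold.
    public static string TopGesture(string json, double minProbability = 0.0)
    {
        using (JsonDocument doc = JsonDocument.Parse(json))
        {
            if (!doc.RootElement.TryGetProperty("result", out JsonElement results) ||
                results.ValueKind != JsonValueKind.Array)
                return null;

            string best = null;
            double bestProb = 0.0;
            foreach (JsonElement hit in results.EnumerateArray())
            {
                // Skip entries that lack the fields we need.
                if (!hit.TryGetProperty("classname", out JsonElement name) ||
                    !hit.TryGetProperty("probability", out JsonElement prob))
                    continue;

                double p = prob.GetDouble();
                if (p >= minProbability && (best == null || p > bestProb))
                {
                    best = name.GetString();
                    bestProb = p;
                }
            }
            return best;
        }
    }
}
```

For example, given a hand-written response with an `Ok` entry at probability 0.6 and a `Thumb_up` entry at 0.9, `TopGesture` returns `"Thumb_up"`.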

```csharp
using System.Collections;
using System.IO;
using Baidu.Aip.BodyAnalysis;
using Newtonsoft.Json.Linq; // JObject/JArray come from Newtonsoft.Json, which the SDK depends on
using UnityEngine;
using UnityEngine.UI;

public class BodyDetect : MonoBehaviour
{
    // API Key and Secret Key of your own Baidu AI Cloud application
    //public string app_id;
    public string api_key;
    public string secret_key;

    Body client;

    private string deviceName;
    private WebCamTexture webCamTex;

    public Text result_message;          // raw result returned by Baidu AI
    public Text detectedGesture_message; // name of the detected gesture

    private int Frame = 30;  // requested webcam frame rate
    public int width;        // capture size in pixels
    public int height;

    public Camera cam;       // camera used to take the screenshot
    public string imageName;

    RawImage panelRawimage;

    float timer = 0;
    float CupPictureTime = 1f; // seconds between two captures

    // Application.streamingAssetsPath may not be read in a field initializer,
    // so the path is built in Start()
    string imagepath;

    void Start()
    {
        imagepath = Path.Combine(Application.streamingAssetsPath, "image.jpg");
        Directory.CreateDirectory(Application.streamingAssetsPath); // make sure the folder exists

        // Look up the RawImage before the coroutine tries to use it
        panelRawimage = GetComponent<RawImage>();

        client = new Body(api_key, secret_key);
        client.Timeout = 60000; // request timeout in milliseconds

        StartCoroutine(OpenCamera());
    }

    void Update()
    {
        CupScreen();
    }

    /// <summary>
    /// Opens the first available webcam and shows its feed on the RawImage.
    /// </summary>
    IEnumerator OpenCamera()
    {
        yield return Application.RequestUserAuthorization(UserAuthorization.WebCam);
        if (!Application.HasUserAuthorization(UserAuthorization.WebCam))
            yield break;

        WebCamDevice[] devices = WebCamTexture.devices;
        if (devices.Length == 0)
        {
            Debug.LogWarning("No webcam found.");
            yield break;
        }

        deviceName = devices[0].name;
        webCamTex = new WebCamTexture(deviceName, width, height, Frame); // requested size and frame rate
        webCamTex.Play(); // start capturing
        panelRawimage.texture = webCamTex;
    }

    /// <summary>
    /// Once every CupPictureTime seconds, renders the camera into a texture and saves it.
    /// </summary>
    void CupScreen()
    {
        timer += Time.deltaTime;
        if (timer < CupPictureTime)
            return;
        timer = 0;

        // Depth buffer must be 0, 16 or 24 bits; 1 is not a valid value
        RenderTexture rt = new RenderTexture(width, height, 24);
        cam.targetTexture = rt;
        cam.Render();
        RenderTexture.active = rt;

        Texture2D screenShot = new Texture2D(width, height, TextureFormat.RGB24, false);
        screenShot.ReadPixels(new Rect(0, 0, width, height), 0, 0);
        screenShot.Apply();

        // Restore the camera and free the temporary render texture
        cam.targetTexture = null;
        RenderTexture.active = null;
        Destroy(rt);

        imageName = imagepath;
        SaveImage(screenShot, 0, 0, width, height);
        Destroy(screenShot); // avoid leaking one texture per capture
    }

    /// <summary>
    /// Crops a region of the texture, saves it as a JPG and sends it for recognition.
    /// </summary>
    byte[] SaveImage(Texture2D tex, int x, int y, int Width, int Height)
    {
        Color[] pixels = tex.GetPixels(x, y, Width, Height); // read the pixels in bulk
        Texture2D newTex = new Texture2D(Width, Height);
        newTex.SetPixels(pixels);
        newTex.Apply();

        byte[] bytes = newTex.EncodeToJPG();
        Destroy(newTex);

        File.WriteAllBytes(imageName, bytes); // overwrites the previous capture
        GetGestureFromBaiduAI(imageName);
        return bytes;
    }

    /// <summary>
    /// Uploads the image, then parses and displays the returned result.
    /// </summary>
    /// <param name="path">Path of the JPG file to upload</param>
    public void GetGestureFromBaiduAI(string path)
    {
        var image = File.ReadAllBytes(path);
        try
        {
            var result = client.Gesture(image); // synchronous call: blocks until the response arrives
            result_message.text = result.ToString();

            // Read "classname" from the first entry of the "result" array by key,
            // instead of splitting the JSON text on ',' and ':'
            JArray gestures = result["result"] as JArray;
            if (gestures != null && gestures.Count > 0)
                detectedGesture_message.text = (string)gestures[0]["classname"];
        }
        catch (System.Exception e)
        {
            // Log the error instead of rethrowing it out of Update()
            Debug.LogException(e);
        }
    }
}
```
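The timer in `CupScreen` decides how often a frame is captured and uploaded. Because `client.Gesture` is called synchronously from `Update`, a slow request stalls the whole frame, and the next `Time.deltaTime` can then be very large. One option is to pull the throttle into a plain class that can be tested outside Unity. `IntervalTimer` below is a hypothetical sketch of that idea, not part of the original project: it carries leftover time forward instead of resetting to zero, and after a long stall it fires once rather than on several consecutive frames.

```csharp
// Hypothetical helper: "fire once every N seconds" logic, independent of Unity.
public sealed class IntervalTimer
{
    private readonly float interval;
    private float elapsed;

    public IntervalTimer(float intervalSeconds)
    {
        if (intervalSeconds <= 0f)
            throw new System.ArgumentOutOfRangeException(nameof(intervalSeconds));
        interval = intervalSeconds;
    }

    // Call once per frame with the frame time (Time.deltaTime in Unity).
    // Returns true when a capture is due.
    public bool Tick(float deltaSeconds)
    {
        elapsed += deltaSeconds;
        if (elapsed < interval)
            return false;

        // Keep the remainder so the average rate stays at one capture per interval.
        elapsed -= interval;

        // After a long stall, drop the missed captures instead of firing
        // on several frames in a row.
        if (elapsed >= interval)
            elapsed = 0f;
        return true;
    }
}
```

In the script above, this would replace the `timer` field: create it with `new IntervalTimer(CupPictureTime)` in `Start()` and begin `CupScreen` with `if (!captureTimer.Tick(Time.deltaTime)) return;`.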

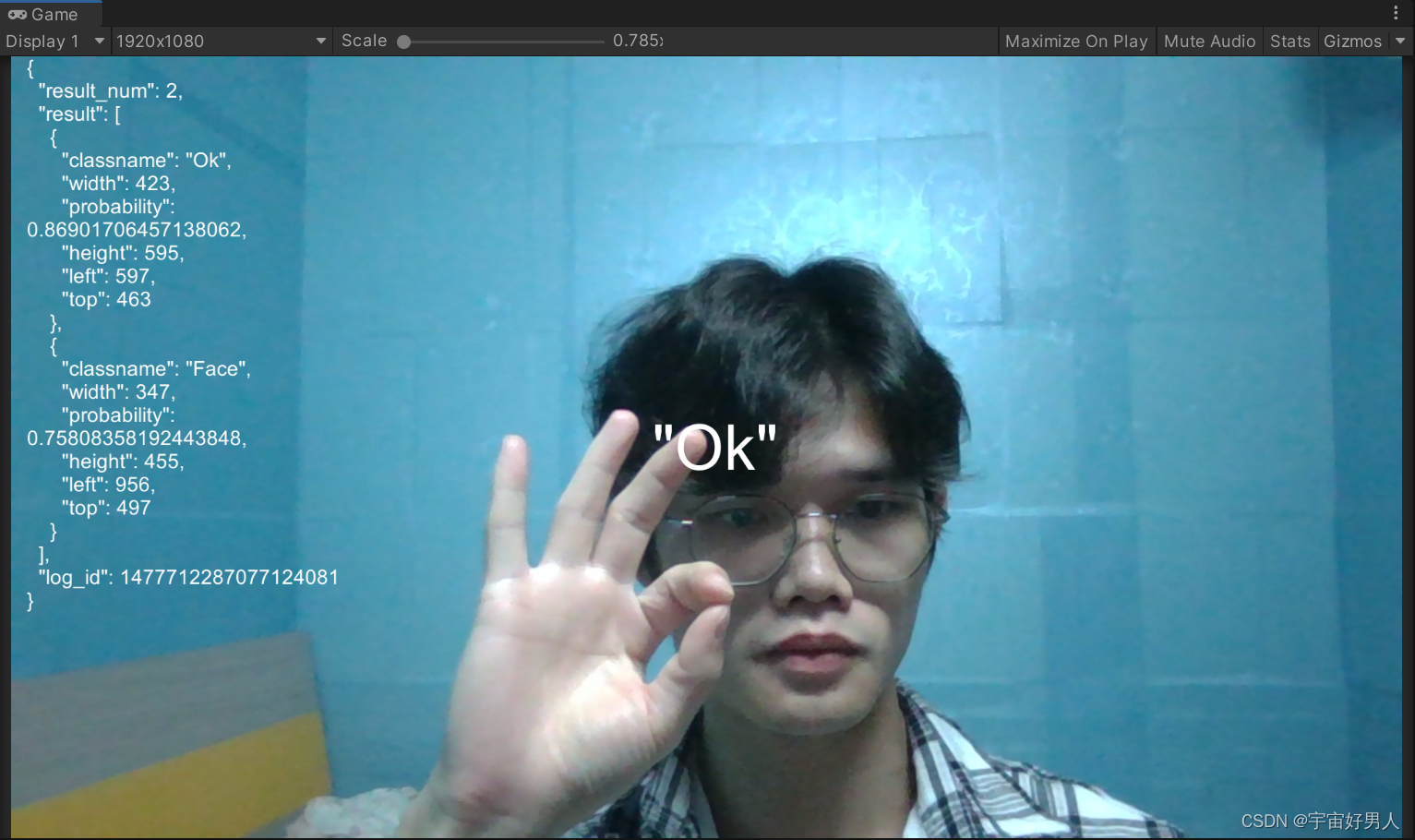

5. Assign the script's fields in the Inspector and run the scene to see the result