5 View Selection

5.1 Global View Selection

For each reference view R, global view selection seeks a set N of neighboring views that are good candidates for stereo matching in terms of scene content, appearance, and scale. In addition, the neighboring views should provide sufficient parallax with respect to R and each other in order to enable a stable match. Here we describe a scoring function designed to measure the quality of each candidate neighboring view based on these desiderata.


Suppose we have one image: which other images should we pair it with to compute its depth map? This section gives one answer.

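The authors call the image to be matched the reference view R, and they design a scoring function that rates the other images; this process is called global view selection. Scoring works as follows: rather than scoring every image in isolation, it scores each view V with respect to a candidate neighborhood N of R, i.e. a set of nearby candidate views.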

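The score is based on three criteria:

- sufficient parallax relative to the reference view

- similar scene content (and appearance)

- similar resolution (scale)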

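The first two are easy to understand. The third means the scene content should take up a similar proportion of the frame in both images; roughly, both photos were taken from a similar distance with a similar focal length. In image terms: one photo must not show only your nose, filling the whole frame, while the other shows your whole face with the nose taking up only 10% of it, because the nose would then be captured at very different resolutions in the two images. Why does this matter? As the paper explains below, SIFT is scale-invariant, so such a pair can still share many features, but stereo matching compares pixel windows of a fixed size, and windows of the same pixel size would then cover very different patches of the surface.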

To first order, the number of shared feature points reconstructed in the SfM phase is a good indicator of the compatibility of a given view V with the reference view R. Indeed, images with many shared features generally cover a similar portion of the scene. Moreover, success in SIFT matching is a good predictor that pixel-level matching will also succeed across much of the image. In particular, SIFT selects features with similar appearance, and thus images with many shared features tend to have similar appearance to each other, overall.


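Since the SfM stage has already run, we know which images share feature points. An obvious idea follows: the more feature points a view V shares with the reference view R, the better a match the pair should be, because many shared points mean the two images cover much of the same scene.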

However, the number of shared feature points is not sufficient to ensure good reconstructions. First, the views with the most shared feature points tend to be nearly collocated and as such do not provide a large enough baseline for accurate reconstruction. Second, the scale invariance of the SIFT feature detector causes images of substantially different resolutions to match well, but such resolution differences are problematic for stereo matching.


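But! If we select purely by the number of matched features, i.e. pick the views V that share the most feature points with R for depth computation, we run into trouble: those views will likely fail the parallax requirement because they look almost identical to R. In the authors' words, they tend to be nearly collocated, so they cannot provide a large enough baseline for accurate reconstruction.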

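Also! The resolution issue mentioned above: a large resolution difference between two images is no problem for SIFT feature matching, but it is a big problem for stereo matching. So choosing V only by the number of features shared with R may select a pair whose resolutions differ greatly.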

Thus, we compute a global score gR for each view V within a candidate neighborhood N (which includes R) as a weighted sum over features shared with R:

$$g_R(V) = \sum_{f \in F_V \cap F_R} w_N(f) \cdot w_s(f)$$

where FX is the set of feature points observed in view X, and the weight functions are described below.

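To encourage a good range of parallax within a neighborhood, the weight function wN(f) is defined as a product over all pairs of views in N:

$$w_N(f) = \prod_{\substack{V_i, V_j \in N,\ i \neq j \\ f \in F_{V_i} \cap F_{V_j}}} w_\alpha(f, V_i, V_j)$$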

where wα(f, Vi, Vj) = min((α/αmax)^2, 1) and α is the angle between the lines of sight from Vi and Vj to f. The function wα(f, Vi, Vj) downweights triangulation angles below αmax, which we set to 10 degrees in all of our experiments. The quadratic weight function serves to counteract the trend of greater numbers of features in common with decreasing angle. At the same time, excessively large triangulation angles are automatically discouraged by the associated scarcity of shared SIFT features.

Based on this, the authors define a scoring function that scores each view V in R's candidate neighborhood N. Note that N also contains R itself.

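On the left-hand side, gR(V) is the score of candidate view V. The f under the summation sign ranges over shared points: FV and FR are the feature sets of V and R, so f is any feature observed in both V and R.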
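A minimal Python sketch of the sum, assuming both weights are already available as functions of a feature id. The names `global_score`, `w_N` and `w_s` as callables, and the feature ids, are illustrative and not from the paper:

```python
def global_score(shared_features, w_N, w_s):
    """g_R(V): sum of w_N(f) * w_s(f) over the features f in F_V ∩ F_R.

    shared_features: iterable of feature ids observed in both V and R.
    w_N, w_s: callables returning the parallax and scale weight of a feature
    (hypothetical interfaces; how the weights are computed is described below).
    """
    return sum(w_N(f) * w_s(f) for f in shared_features)


F_V = {1, 2, 3, 4}  # hypothetical feature ids seen in V
F_R = {2, 3, 4, 5}  # hypothetical feature ids seen in R
print(global_score(F_V & F_R, lambda f: 1.0, lambda f: 1.0))  # 3.0
```

With both weights fixed at 1 the score reduces to |FV ∩ FR|, the plain shared-feature count discussed earlier; the two weights are what push the choice away from near-duplicate views and views of mismatched resolution.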

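wN rewards parallax. Despite appearances it is not computed from V and R alone: as the paper excerpt above says, it is a product over all pairs of views in N that observe f.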

Don't worry if that is unclear yet; read on. Its building block is wα(f, Vi, Vj) = min((α/αmax)^2, 1), where:

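In short, α is the angle between the lines of sight from the two views Vi and Vj to f. Using the raw angle directly is awkward because we want a weight between 0 and 1, and that is what wα provides. αmax is set by hand to 10° in all of the paper's experiments, so any pair with a triangulation angle of at least 10° gets the full weight of 1; in other words, the authors treat angles beyond 10° as bringing no further benefit to this score. The square is there "to counteract the trend of greater numbers of features in common with decreasing angle": views separated by a small angle look alike and therefore share more SIFT features, so a raw feature count favors near-duplicates. Squaring makes the weight drop quickly as the angle shrinks (5° gives 0.25 rather than the 0.5 of a linear weight), which penalizes small angles more strongly. Overly large angles need no explicit penalty: SIFT features rarely match across very wide viewpoint changes, so such pairs share few features to begin with.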
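To make α concrete, here is a small Python sketch of wα computed from 3D positions. It assumes we know the 3D point of feature f and the two camera centers (e.g. from SfM), passed as plain (x, y, z) tuples; the function name is ours:

```python
import math


def w_alpha(f, c_i, c_j, alpha_max_deg=10.0):
    """w_alpha(f, V_i, V_j) = min((alpha / alpha_max)^2, 1).

    f, c_i, c_j: 3D points (x, y, z) for the feature and the two camera centers.
    alpha is the angle between the lines of sight c_i -> f and c_j -> f.
    """
    u = [a - b for a, b in zip(f, c_i)]  # line of sight from V_i to f
    v = [a - b for a, b in zip(f, c_j)]  # line of sight from V_j to f
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_alpha = max(-1.0, min(1.0, dot / norm))
    alpha_deg = math.degrees(math.acos(cos_alpha))
    return min((alpha_deg / alpha_max_deg) ** 2, 1.0)


# f is 10 units in front of R; V is placed so that the two rays meet at 5 degrees.
f, c_R = (0.0, 0.0, 10.0), (0.0, 0.0, 0.0)
c_V = (10.0 * math.tan(math.radians(5.0)), 0.0, 0.0)
print(w_alpha(f, c_R, c_V))  # about 0.25 = (5/10)^2
```

Any pair at 10° or more returns exactly 1.0, and two cameras at the same position return 0.0.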

OK, now we know how to compute wα. So what is wN? Look at the formula again:

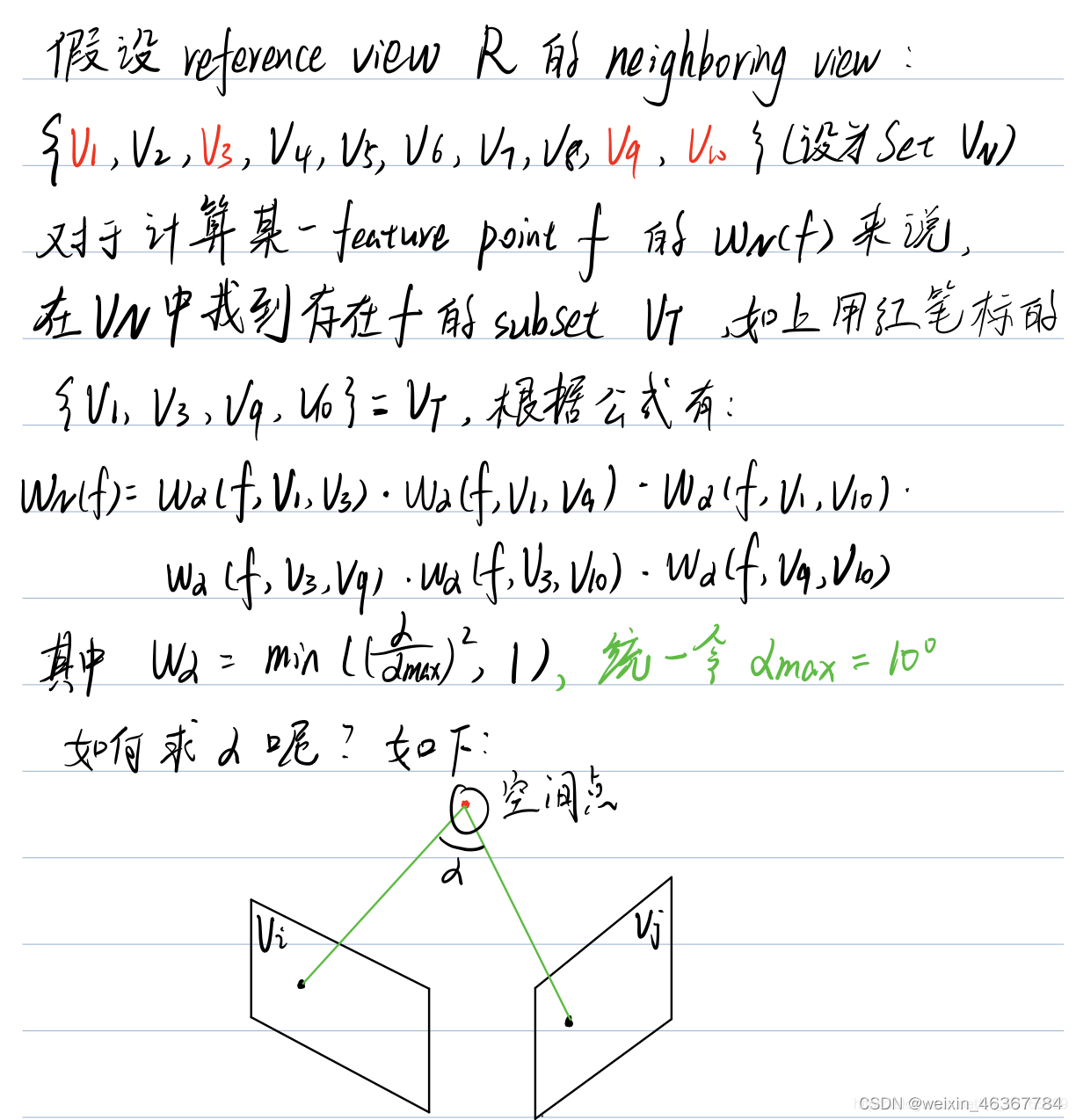

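The key is the expression under the product sign: for the shared point f, find every view in N that sees f, form all pairs of those views, compute wα for each pair, and multiply all of these wα values together to get wN.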

Another blogger gave a good example of this.

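Honestly, I don't see why it is done this way. If two of the images are very close together, doesn't the whole thing degenerate? And doesn't a point seen by more views end up with a lower score?

One possible answer: since wα ≤ 1, adding a view can only keep or lower wN(f), but it lowers it only when some pair of views seeing f is less than αmax = 10° apart; if every pair is at least 10° apart, wN(f) stays at 1 no matter how many views see f. The "degeneration" is deliberate: when a candidate V is nearly collocated with a view already in N, wN collapses for the features they share, so V scores low and the selection prefers views that add parallax with respect to R and each other, as required at the start of this section.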
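A toy Python sketch of wN that may help with the questions above. It reads "all pairs" as unordered pairs, each counted once (our reading), and to stay short it takes each pair's triangulation angle from a caller-supplied function. The camera layout is made up: the views sit on a circle around f, so the angle between two lines of sight is simply the difference of the views' azimuths.

```python
from itertools import combinations


def w_alpha(alpha_deg, alpha_max_deg=10.0):
    """w_alpha from a triangulation angle given directly in degrees."""
    return min((alpha_deg / alpha_max_deg) ** 2, 1.0)


def w_N(views_seeing_f, angle):
    """Product of w_alpha over every unordered pair of views that see f.

    views_seeing_f: the members of N (including R) in which f is observed.
    angle(vi, vj): triangulation angle at f, in degrees.
    """
    w = 1.0
    for vi, vj in combinations(views_seeing_f, 2):
        w *= w_alpha(angle(vi, vj))
    return w


# Hypothetical layout: cameras on a circle around f, azimuths in degrees.
AZIMUTH = {"R": 0.0, "A": 15.0, "B": 30.0, "C": 32.0, "D": 45.0}


def toy_angle(vi, vj):
    return abs(AZIMUTH[vi] - AZIMUTH[vj])


print(w_N(["R", "A", "B"], toy_angle))       # 1.0: every pair is >= 10 degrees apart
print(w_N(["R", "A", "B", "D"], toy_angle))  # 1.0: D adds a view without losing weight
print(w_N(["R", "A", "B", "C"], toy_angle))  # about 0.04: B and C are only 2 degrees apart
```

So seeing f from more views costs nothing as long as all pairs stay at least αmax apart; only a near-duplicate pair drags wN down.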

The weighting function ws(f) measures similarity in resolution of images R and V at feature f. To estimate the 3D sampling rate of V in the vicinity of the feature f, we compute the diameter sV(f) of a sphere centered at f whose projected diameter in V equals the pixel spacing in V. We similarly compute sR(f) for R and define the scale weight ws based on the ratio r = sR(f)/sV(f) using

$$w_s(f) = \begin{cases} 2/r & \text{if } 2 \le r \\ 1 & \text{if } 1 \le r < 2 \\ r & \text{if } r < 1 \end{cases}$$

This weight function favors views with equal or higher resolution than the reference view.

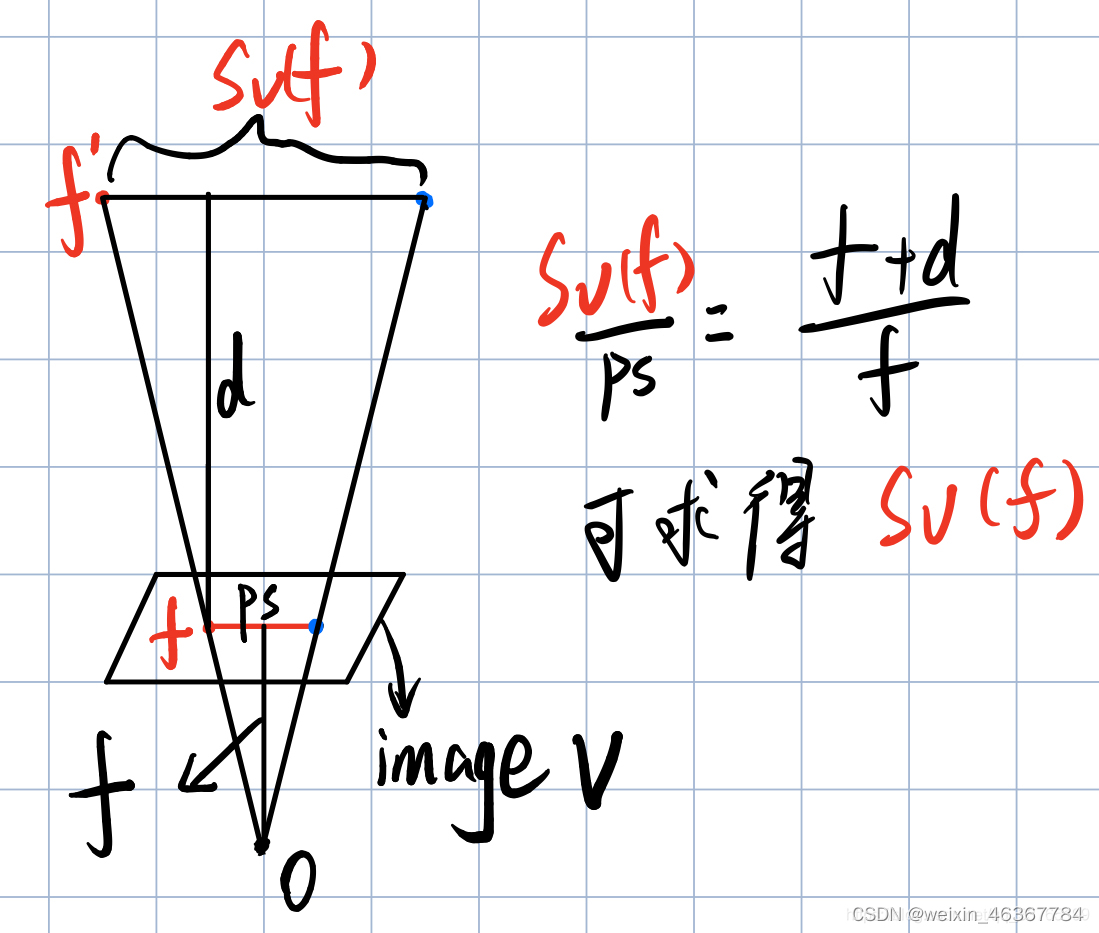

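ws (scale weight) measures how similar the resolutions of R and V are at the shared point f. Computing it requires sR(f) and sV(f), which are the same kind of quantity: sV(f) describes how finely V samples the surface at f. Intuitively, if the projection of f moves by one pixel (the pixel spacing) in the image, how far does the corresponding 3D point move in space? That distance is sV(f). Note that it is a sampling distance, so a smaller sV(f) means a higher resolution. The figure above, again from that blogger, illustrates this.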

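The red dot is the original position of f; the blue dot is where it lands after moving by one pixel in the image and projecting back (onto the sphere centered at the camera center O with radius Of). The distance between them is sV(f). sR(f) is computed the same way.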
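A rough Python sketch of sV(f), assuming an ideal pinhole camera with square pixels, a unit-length optical axis, and a focal length given in pixels (`focal_px` and the function name are ours). Under that model a small sphere of diameter D at depth z projects to about D·focal_px/z pixels, so setting this to one pixel gives D = z/focal_px. This is a first-order approximation of the paper's definition that ignores off-axis effects and lens distortion.

```python
def sampling_diameter(point, cam_center, optical_axis, focal_px):
    """Approximate s_V(f): diameter of a sphere at f whose image spans one pixel.

    Pinhole model: a sphere of diameter D at depth z projects to about
    D * focal_px / z pixels, so one pixel corresponds to D = z / focal_px.
    optical_axis must be a unit vector; focal_px is the focal length in pixels.
    """
    d = [p - c for p, c in zip(point, cam_center)]
    z = sum(a * b for a, b in zip(d, optical_axis))  # depth along the optical axis
    if z <= 0:
        raise ValueError("point is behind the camera")
    return z / focal_px


f = (0.0, 0.0, 10.0)
s_R = sampling_diameter(f, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 1000.0)  # R, 10 units away
s_V = sampling_diameter(f, (0.0, 0.0, 5.0), (0.0, 0.0, 1.0), 1000.0)  # V, 5 units away
print(s_R / s_V)  # 2.0: V samples the surface twice as finely as R
```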

Finally, let r = sR(f)/sV(f).

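With this definition, r ≥ 1 means V samples the surface at least as finely as R. The weight is 1 for 1 ≤ r < 2, i.e. when V's resolution at f is equal to R's or up to twice as high; a V coarser than R (r < 1) is penalized linearly (weight r), and a V more than twice as fine (r ≥ 2) is penalized by 2/r. My guess is that V should ideally cover R at equal or higher resolution (r ≥ 1), so that every point in R can be found in V, since the goal is still to reconstruct R; but V should not be too much finer either (r < 2), or equal-sized matching windows would again cover very different surface patches. Overall, the weight favors views with resolution equal to or higher than the reference view.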
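The piecewise weight in Python, written from the paper's definition (2/r for r ≥ 2, 1 for 1 ≤ r < 2, r for r < 1) with r = sR(f)/sV(f); `w_s` is just our name for it and the sample values are made up:

```python
def w_s(s_R, s_V):
    """Scale weight at a shared feature f, from s_R(f) and s_V(f)."""
    r = s_R / s_V       # r > 1: V samples the surface more finely than R
    if r >= 2.0:
        return 2.0 / r  # V more than twice as fine: penalized
    if r >= 1.0:
        return 1.0      # same resolution up to 2x finer: full weight
    return r            # V coarser than R: penalized linearly


for s_V in (0.02, 0.01, 0.006, 0.0025):  # hypothetical s_V(f) values; s_R(f) = 0.01
    print(s_V, w_s(0.01, s_V))           # weights 0.5, 1.0, 1.0, 0.5
```

The function is continuous at both breakpoints (r = 1 and r = 2 both give 1), so small errors in sV(f) never cause a jump in the score.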

Having defined the global score for a view V and neighborhood N, we could now find the best N of a given size (usually |N| = 10), in terms of the sum of view scores ∑_{V∈N} gR(V). For efficiency, we take a greedy approach and grow the neighborhood incrementally by iteratively adding to N the highest scoring view given the current N (which initially contains only R).

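This paragraph explains how R's best neighborhood N is built. Initially N contains only R. The objective is the sum of the scores of the views in N, ∑gR(V); instead of searching over all subsets, a greedy algorithm grows N one view at a time, each time adding the view with the highest score given the current N (N is usually limited to 10 views).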
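A sketch of the greedy growth in Python. The scoring function is passed in as `score(V, N)`, a hypothetical interface standing in for gR(V) evaluated with the current N, and we assume the size limit counts R as well (the paper only says |N| is usually 10). The toy score in the example merely rewards angular spread; it is not gR.

```python
def greedy_neighborhood(R, candidates, score, size=10):
    """Grow N greedily: start from [R], then repeatedly add the candidate with
    the highest score given the current N, until N holds `size` views
    (R included, an assumption) or no candidates remain.
    """
    N = [R]
    remaining = [V for V in candidates if V != R]
    while len(N) < size and remaining:
        best = max(remaining, key=lambda V: score(V, N))
        N.append(best)
        remaining.remove(best)
    return N


# Hypothetical azimuths (degrees) of the views around the scene.
AZIMUTH = {"R": 0.0, "A": 3.0, "B": 12.0, "C": 25.0, "D": 26.0}


def toy_score(V, N):
    """Toy stand-in for g_R: angular distance from V to its closest view in N."""
    return min(abs(AZIMUTH[V] - AZIMUTH[n]) for n in N)


print(greedy_neighborhood("R", list(AZIMUTH), toy_score, size=3))  # ['R', 'D', 'B']
```

Because the score is re-evaluated against the current N, C (1° from D) drops to the bottom once D has been picked, which mirrors how wN punishes near-duplicates of views already in N.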

Rescaling Views. Although global view selection tries to select neighboring views with compatible scale, some amount of scale mismatch is unavoidable due to variability in resolution within CPCs, and can adversely affect stereo matching. We therefore seek to adapt, through proper filtering, the scale of all views to a common, narrow range either globally or on a per-pixel basis. We chose the former to avoid varying the size of the matching window in different areas of the depth map and to improve efficiency. Our approach is to find the lowest-resolution view Vmin ∈ N relative to R, resample R to approximately match that lower resolution, and then resample images of higher resolution to match R.


I don't fully understand this part yet; I'll come back to it later.

Specifically, we estimate the resolution scale of a view V relative to R based on their shared features:

$$\mathrm{scale}_R(V) = \frac{1}{|F_V \cap F_R|} \sum_{f \in F_V \cap F_R} \frac{s_R(f)}{s_V(f)}$$

Vmin is then simply equal to argmin_{V∈N} scaleR(V). If scaleR(Vmin) is smaller than a threshold t (in our case t = 0.6, which corresponds to mapping a 5×5 reference window on a 3×3 window in the neighboring view with the lowest relative scale), we rescale the reference view so that, after rescaling, scaleR(Vmin) = t. We then rescale all neighboring views with scaleR(V) > 1.2 to match the scale of the reference view (which has possibly itself been rescaled in the previous step). Note that all rescaled versions of images are discarded when moving on to compute a depth map for the next reference view.

First comes the average resolution scale of V relative to R, scaleR(V), defined in the excerpt above.

Inside the summation is exactly the ratio r computed earlier, so the formula averages the resolution ratio over all points shared by V and R.
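The average in Python, assuming sR(f) and sV(f) have already been computed for every feature and stored in dicts keyed by feature id (a hypothetical layout; the function name is ours):

```python
def relative_scale(s_R, s_V):
    """scale_R(V): mean of s_R(f) / s_V(f) over the features shared by R and V.

    s_R, s_V: dicts mapping feature id -> sampling diameter in R and in V.
    """
    shared = s_R.keys() & s_V.keys()  # F_V ∩ F_R
    if not shared:
        raise ValueError("R and V share no features")
    return sum(s_R[f] / s_V[f] for f in shared) / len(shared)


print(relative_scale({1: 0.01, 2: 0.02}, {1: 0.02, 2: 0.04, 3: 1.0}))
# 0.5 (feature 3 is seen only in V, so it is ignored)
```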

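Next, find the view in N with the smallest scale; call that scale scaleR(Vmin). If scaleR(Vmin) < 0.6, R is rescaled (downsampled) so that afterwards scaleR(Vmin) = 0.6. Then every V with scaleR(V) > 1.2 is rescaled to match the (possibly rescaled) reference view. Note that all rescaled images are discarded when moving on to compute the depth map for the next reference view.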
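A sketch of the two thresholds in Python. It assumes that resampling an image by a factor k (new width = k × old width) multiplies its sampling diameters s(f) by 1/k, so resampling R divides every scaleR(V) by k and resampling V multiplies scaleR(V) by k. The function only returns the factors; no image is actually resampled, and the names are ours.

```python
def plan_rescaling(scales, t=0.6, upper=1.2):
    """Decide resampling factors from scale_R(V) for every neighbor V.

    scales: dict view -> scale_R(V), measured before any rescaling.
    Returns (k_R, {V: k_V}); a factor k < 1 means "downsample to k times the
    original width and height".
    """
    s_min = min(scales.values())  # scale_R(V_min)
    # Step 1: if even the coarsest neighbor is too coarse relative to R,
    # downsample R so that afterwards scale_R(V_min) == t.
    k_R = s_min / t if s_min < t else 1.0
    # Downsampling R by k_R divides every scale_R(V) by k_R.
    updated = {V: s / k_R for V, s in scales.items()}
    # Step 2: downsample neighbors that are much finer than the (possibly
    # rescaled) reference, so that their scale relative to R becomes 1.
    k_V = {V: 1.0 / s for V, s in updated.items() if s > upper}
    return k_R, k_V


k_R, k_V = plan_rescaling({"A": 0.3, "B": 1.0, "C": 0.9})
print(k_R)  # 0.5: halve R so that scale_R(A) goes from 0.3 to 0.6
print(k_V)  # B and C are now at 2.0 and 1.8 relative to R, so both get downsampled
```

Reading the 1.2 threshold against the already rescaled R follows the paper's remark that the reference "has possibly itself been rescaled in the previous step".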

I don't fully understand this paragraph either; I'll revisit it later.