Ch1

Definitions of AI

- Systems that think like humans

- Systems that think rationally

- Systems that act like humans

- Systems that act rationally

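The four definitions above vary along two dimensions:

- Thinking versus acting (thought processes and reasoning versus behavior)

- Human-centered versus rational (fidelity to human performance versus an ideal standard of rationality)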

An agent

Commonly an agent is something that acts.

Abstractly, an agent is a function from percept histories to actions:
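The notes leave the formula out. In the usual textbook notation (the symbols are an assumption here), with $\mathcal{P}$ the set of possible percepts, $\mathcal{P}^{*}$ the set of finite percept sequences, and $\mathcal{A}$ the set of actions, it can be written as:

$$
f : \mathcal{P}^{*} \rightarrow \mathcal{A}
$$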

Ch2

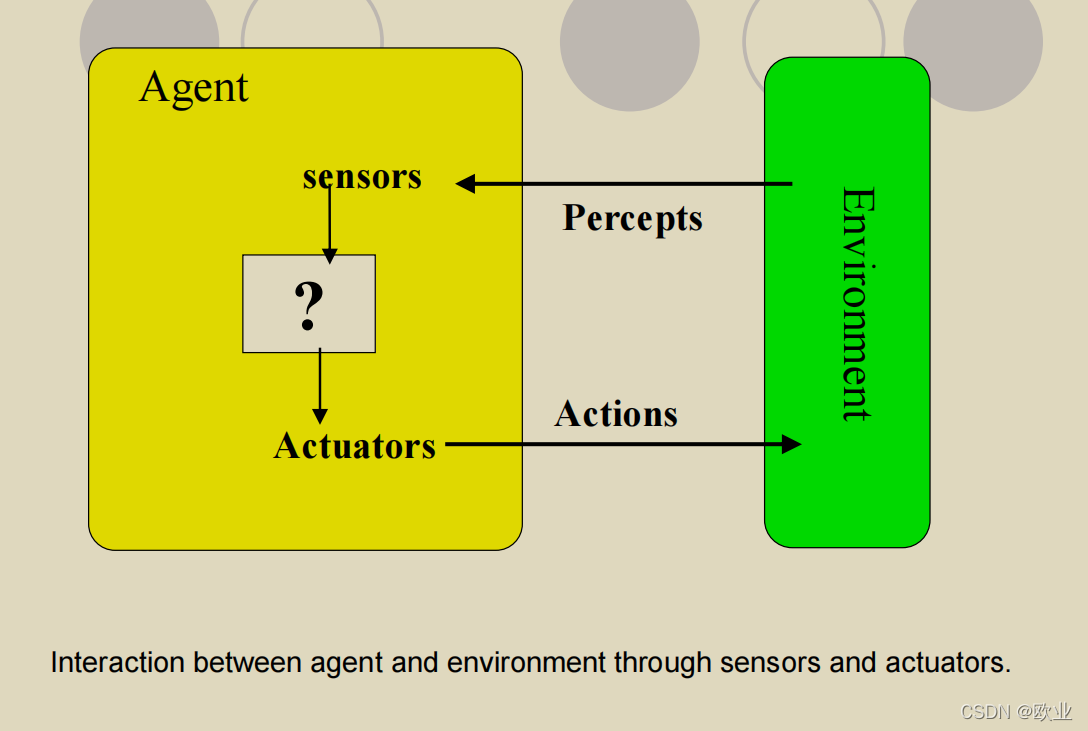

Agents and Environments

Agent

- Perceiving its environment through sensors

- Acting upon that environment through actuators

Percept

The agent’s perceptual inputs at any given instant

Percept Sequence

- The complete history of everything the agent has ever perceived

- An agent’s choice of action at any given instant can depend on the entire percept sequence observed to date

Agent Function

- Describes the behavior of an agent

- Maps any given percept sequence to an action

Agent function table

- Records only the external characterization of the agent

- An abstract mathematical description

Agent Program

- Internally, the agent function of an intelligent agent is implemented by an agent program

- A concrete implementation that runs on the agent architecture
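To make the distinction concrete, here is a minimal sketch of a table-driven agent program. It assumes a hypothetical two-square vacuum world with squares `A` and `B`; the table fragment, the percept format, and the `NoOp` fallback are illustrative choices, not part of the notes.

```python
from typing import Dict, List, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")

# Hypothetical fragment of an agent function table.
# Keys are whole percept sequences; values are actions.
AGENT_TABLE: Dict[Tuple[Percept, ...], str] = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("B", "Clean"),): "Left",
    (("B", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
}


class TableDrivenAgent:
    """Agent program that implements an agent function by table lookup."""

    def __init__(self, table: Dict[Tuple[Percept, ...], str]) -> None:
        self.table = table
        self.percepts: List[Percept] = []  # percept sequence to date

    def __call__(self, percept: Percept) -> str:
        # The program receives only the current percept ...
        self.percepts.append(percept)
        # ... but the function it implements is defined on the whole sequence.
        # Sequences missing from this small table fall back to "NoOp".
        return self.table.get(tuple(self.percepts), "NoOp")


agent = TableDrivenAgent(AGENT_TABLE)
first = agent(("A", "Dirty"))
second = agent(("A", "Clean"))
third = agent(("B", "Clean"))
```

The table is the abstract description; the class is one possible program that realizes it. A full table grows with every possible percept sequence, which is why practical agent programs compute actions instead of looking them up.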

Good Behavior: The Concept of Rationality

A complete specification of the task facing the agent includes

- The description of the environment

- The sensors and actuators of the agent

- The performance measure (The criterion for success of an agent’s behavior)

When the agent is placed in an environment

- It generates a sequence of actions according to the percepts it receives.

- This sequence of actions causes the environment to go through a sequence of states

- If the sequence is desirable, the agent performed well

Rationality depends on

- The performance measure that defines the criterion of success. For example, reward one point for each clean square at each time step. It is better to design the performance measure according to what one actually wants in the environment, rather than according to how one thinks the agent should behave

- The agent’s prior knowledge of the environment

- The actions that the agent can perform

- The agent’s percept sequence to date

Definition of a Rational Agent

For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has.

Rationality

- It is not the same as perfection

- It is to maximize expected performance

- In contrast, perfection is to maximize actual performance

Information gathering is an important part of rationality
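The difference between expected and actual performance can be sketched as follows. The street-crossing scenario, its probabilities, and its scores are made-up numbers for illustration only; `expected_performance` and `rational_action` are hypothetical helper names.

```python
from typing import Dict, List, Tuple

# Hypothetical outcome model: action -> [(probability, performance score), ...]
OutcomeModel = Dict[str, List[Tuple[float, float]]]


def expected_performance(outcomes: List[Tuple[float, float]]) -> float:
    """Probability-weighted average of the performance scores."""
    return sum(p * score for p, score in outcomes)


def rational_action(model: OutcomeModel) -> str:
    """Pick the action with the highest expected performance."""
    return max(model, key=lambda action: expected_performance(model[action]))


street_crossing: OutcomeModel = {
    # Usually fine, occasionally disastrous.
    "cross_without_looking": [(0.9, 10.0), (0.1, -100.0)],
    # Information gathering costs a little but removes the risk.
    "look_then_cross": [(1.0, 8.0)],
}

choice = rational_action(street_crossing)
```

Under these assumed numbers the rational choice is `look_then_cross`, even though crossing without looking would score higher in nine cases out of ten. A perfect agent would need to know in advance which outcome actually occurs.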

Learning

- A rational agent also needs to learn as much as possible from what it perceives

- The initial configuration of the agent may reflect some prior knowledge of the environment

- After gaining experience, this may be modified or augmented

- No learning is needed if we completely know the environment a priori, but this is not the case in reality

Successful agents split the task of computing the agent function into three periods

- When the agent is being designed

- When it is deliberating on its next action

- When it is learning from experience

The Nature of Environments

The task environment can be described by a PEAS description

- Performance

- Environment

- Actuators

- Sensors
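As a sketch, a PEAS description can be recorded as a small data structure. The `PEAS` class and the two-square vacuum world values are assumptions for illustration; only the "one point per clean square per time step" performance measure comes from the notes above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PEAS:
    """Task environment description: one list of entries per PEAS element."""
    performance: List[str]
    environment: List[str]
    actuators: List[str]
    sensors: List[str]


# Hypothetical description of a two-square vacuum world.
vacuum_world = PEAS(
    performance=["one point per clean square per time step"],
    environment=["square A", "square B", "dirt"],
    actuators=["move left", "move right", "suck"],
    sensors=["location sensor", "dirt sensor"],
)
```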

The Structure of Agents

Difference between program and function

- Agent program: takes the current percept as input

- Agent function: takes the entire percept history

Four basic types of agent program

- Simple reflex agents

- Model-based reflex agents

- Goal-based agents

- Utility-based agents

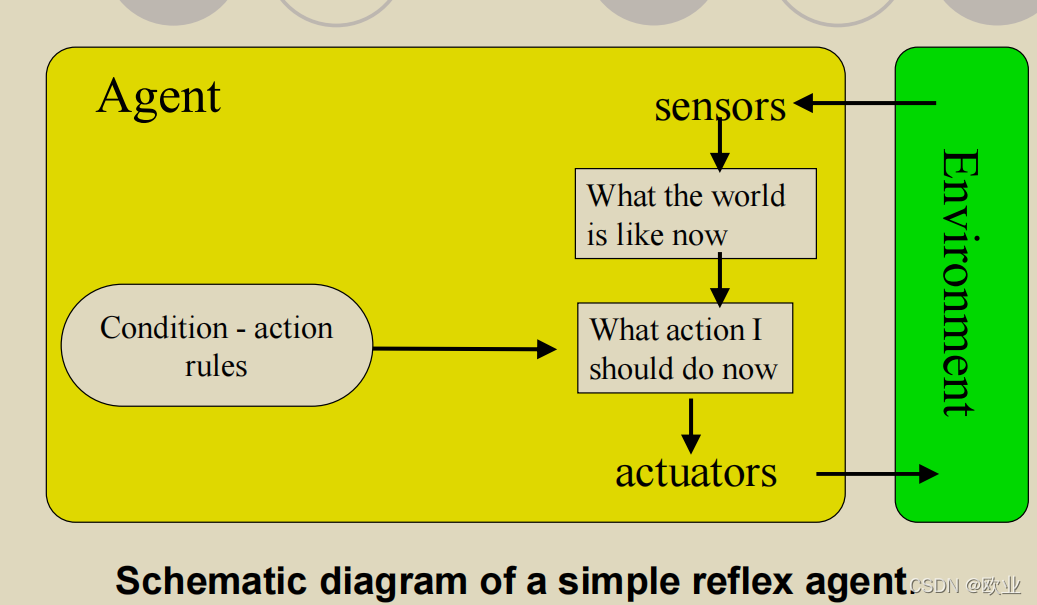

Simple reflex agents

- The agent selects an action on the basis of the current percept

- It ignores the rest of the percept history

- Simple, but of very limited intelligence

- Works only if the correct decision can be made on the basis of the current percept alone

- That is, only if the environment is fully observable

Problem:

- Infinite loops are often unavoidable in partially observable environments

- An agent may escape from infinite loops if it can randomize its actions
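The points above can be sketched with two small agent programs for a hypothetical two-square vacuum world (squares `A` and `B`). The percept format, action names, and the no-location-sensor variant are assumptions for illustration.

```python
import random
from typing import Tuple


def reflex_vacuum_agent(percept: Tuple[str, str]) -> str:
    """Condition-action rules that look only at the current percept."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


def randomized_reflex_agent(status: str, rng: random.Random) -> str:
    """Variant for an agent without a location sensor (partially observable).

    A fixed rule such as "always move Left" would loop forever once the
    agent is stuck against the left wall, so the move is chosen at random.
    """
    if status == "Dirty":
        return "Suck"
    return rng.choice(["Left", "Right"])


rng = random.Random(0)  # seeded only to make the run repeatable
moves = [randomized_reflex_agent("Clean", rng) for _ in range(20)]
```

With a location sensor the fixed rules are enough. Without it, the random choice means the agent eventually reaches the other square, at the cost of some wasted moves.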

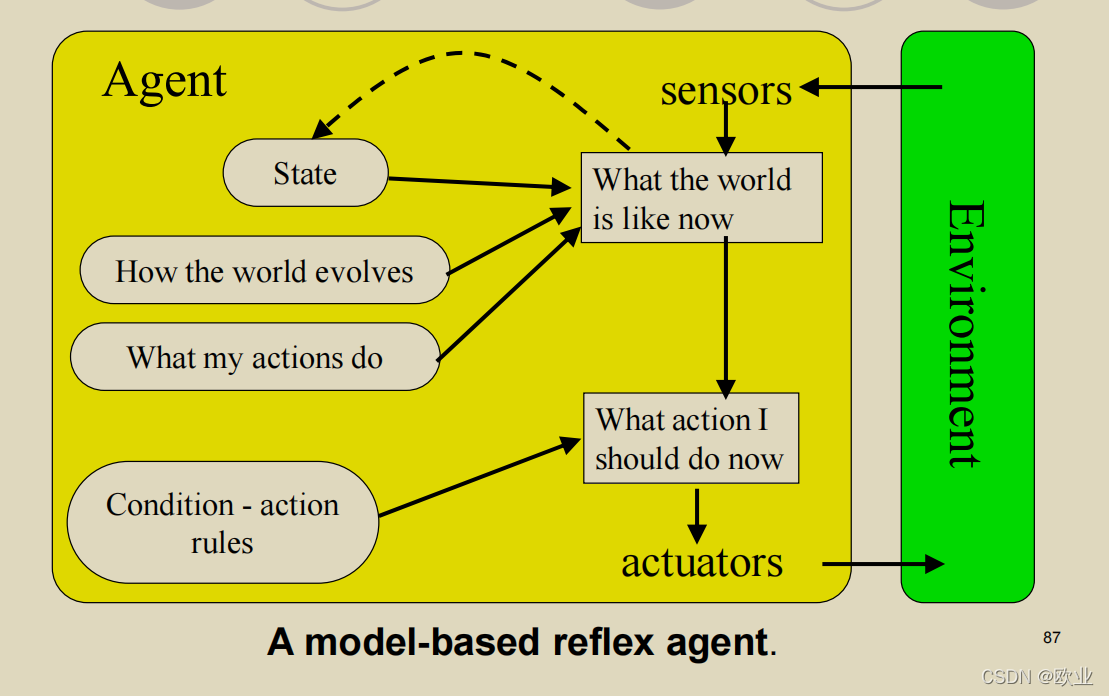

Model-based reflex agents

- The most effective way to handle partial observability is for the agent to keep track of the part of the world it cannot see now

- The agent maintains some sort of internal state that depends on the percept history

Updating internal state information as time goes by requires

- Information about how the world evolves independently of the agent

- Information about how the agent’s own actions affect the world

Model of the world

- The knowledge about “How the world works”

- May be implemented in simple Boolean circuits

- May be implemented in complete scientific theories
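A minimal sketch of this idea, again for a hypothetical two-square vacuum world. The class name, the percept format, and the assumption that dirt never reappears are all illustrative choices, not part of the notes.

```python
from typing import Dict, Optional, Tuple


class ModelBasedVacuumAgent:
    """Reflex agent that keeps an internal state built from past percepts."""

    def __init__(self) -> None:
        # Internal state: last known status of each square (None = never seen).
        self.state: Dict[str, Optional[str]] = {"A": None, "B": None}

    def __call__(self, percept: Tuple[str, str]) -> str:
        location, status = percept
        # Fold the new percept into the internal state.
        self.state[location] = status
        if status == "Dirty":
            # Model of the agent's own actions: sucking cleans this square.
            self.state[location] = "Clean"
            return "Suck"
        # Model of how the world evolves (assumption): dirt never reappears,
        # so remembered "Clean" values stay valid for unseen squares.
        if all(s == "Clean" for s in self.state.values()):
            return "NoOp"
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
actions = [
    agent(("A", "Dirty")),
    agent(("A", "Clean")),
    agent(("B", "Clean")),
]
```

On the last percept an agent that used only the current percept would have to keep moving, because it cannot tell whether the other square is clean. The internal state lets this agent stop once both squares are known to be clean.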

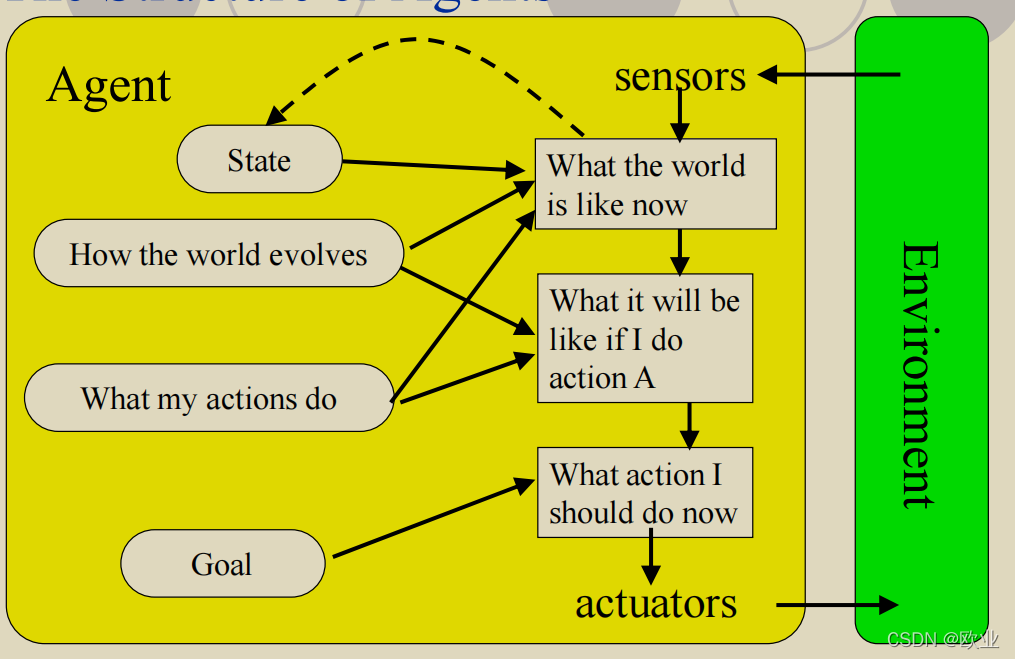

Goal-based agents

- Knowing about the current state of the environment is not always enough to decide what to do

- As well as a current state description, the agent needs some sort of goal information that describes situations that are desirable

Search and Planning are the subfields of AI devoted to finding action sequences that achieve the agent’s goal

Goal-based decision making involves consideration of the future
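As an illustration of finding an action sequence that achieves a goal, here is a small breadth-first search over a hypothetical two-square vacuum world. The state encoding, the transition function `result`, and the goal test are assumptions made for this sketch; breadth-first search is just one of many search methods.

```python
from collections import deque
from typing import List, Optional, Tuple

State = Tuple[str, bool, bool]  # (location, A is dirty, B is dirty)
ACTIONS = ("Suck", "Left", "Right")


def result(state: State, action: str) -> State:
    """Predict the state that follows from taking an action."""
    location, dirty_a, dirty_b = state
    if action == "Left":
        return ("A", dirty_a, dirty_b)
    if action == "Right":
        return ("B", dirty_a, dirty_b)
    # "Suck" cleans the square the agent is standing on.
    if location == "A":
        return (location, False, dirty_b)
    return (location, dirty_a, False)


def is_goal(state: State) -> bool:
    """Goal: both squares are clean."""
    return not state[1] and not state[2]


def plan(start: State) -> Optional[List[str]]:
    """Breadth-first search for a shortest action sequence to a goal state."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if is_goal(state):
            return actions
        for action in ACTIONS:
            nxt = result(state, action)
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None  # no action sequence reaches the goal


steps = plan(("A", True, True))
```

The agent looks ahead by simulating the effect of each action with `result` before acting, which is the "consideration of the future" that a reflex agent lacks.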

Utility-based agents

A complete specification of the utility function allows rational decisions in two kinds of cases where goals are inadequate

- When there are conflicting goals, or only some of the goals can be achieved, the utility function specifies the appropriate trade-off

- When there are several goals that the agent can aim for, none of which can be achieved with certainty, utility provides a way in which the likelihood of success can be weighed against the importance of the goals
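The second case can be sketched as an expected-utility calculation. The goals, their importance values, and the success probabilities below are made-up numbers for a hypothetical delivery robot; `expected_utility` and `best_action` are illustrative helper names.

```python
from typing import Dict

# Hypothetical importance of each goal (utility gained if it is achieved).
GOAL_UTILITY: Dict[str, float] = {
    "deliver_package": 10.0,
    "recharge_battery": 4.0,
}

# Hypothetical chance that each action achieves each goal.
SUCCESS_PROB: Dict[str, Dict[str, float]] = {
    "go_to_customer": {"deliver_package": 0.5, "recharge_battery": 0.0},
    "go_to_charger": {"deliver_package": 0.0, "recharge_battery": 0.9},
}


def expected_utility(action: str,
                     probs: Dict[str, Dict[str, float]],
                     utility: Dict[str, float]) -> float:
    """Weigh the likelihood of each goal against its importance."""
    return sum(p * utility[goal] for goal, p in probs[action].items())


def best_action(probs: Dict[str, Dict[str, float]],
                utility: Dict[str, float]) -> str:
    """Choose the action with the highest expected utility."""
    return max(probs, key=lambda a: expected_utility(a, probs, utility))


choice = best_action(SUCCESS_PROB, GOAL_UTILITY)

# If delivery becomes less likely, the less important but safer goal wins.
risky = {**SUCCESS_PROB,
         "go_to_customer": {"deliver_package": 0.3, "recharge_battery": 0.0}}
fallback = best_action(risky, GOAL_UTILITY)
```

With these assumed numbers the two goals conflict, since one action cannot serve both. The important but uncertain goal is preferred at a 0.5 chance of success and dropped at 0.3, which a plain achieved-or-not goal test could not express.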