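In the previous article we looked at updating Unity texture data in real time, but one step was left over: texture.Apply() still has to run on the render thread. That call is itself expensive and still drags down the app's FPS.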
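To put the cost in numbers: the Unity script later in this article creates a 1920×1080 RGBA32 texture, so every full update copies about 8 MB of pixels. A quick check of that arithmetic (the 30 uploads per second used below are an assumed rate, purely for illustration):

```cpp
#include <cassert>

// Bytes in one tightly packed RGBA32 frame: 4 bytes (R, G, B, A) per pixel.
constexpr long long frameBytes(long long width, long long height) {
    return width * height * 4;
}

// Bandwidth in MiB/s for a given number of full-frame uploads per second.
constexpr double uploadMiBPerSecond(long long width, long long height, int uploadsPerSecond) {
    return static_cast<double>(frameBytes(width, height)) * uploadsPerSecond / (1024.0 * 1024.0);
}

// 1920 x 1080 is the texture size used by the Unity script in this article.
static_assert(frameBytes(1920, 1080) == 8294400, "one 1080p RGBA32 frame is 8,294,400 bytes");
```

At the assumed 30 uploads per second, that is roughly 237 MiB/s of pixel data passing through the GL driver.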

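This article takes a different approach: a dedicated thread whose only job is updating the texture. It is modeled on Unity's NativeRenderingPlugin sample, and the result is a standalone Unity plugin for texture updates.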

1. EGL

On Android, EGL is the interface between OpenGL ES and the native window system. Its main functions are:

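communicating with the device's native window system;

querying the available types and configurations of drawing surfaces;

creating drawing surfaces;

synchronizing rendering between OpenGL ES and other graphics rendering APIs;

managing rendering resources such as texture maps.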

EGL hides the differences between platforms from OpenGL ES (on iOS, Apple provides its own implementation of the EGL API, called EAGL).

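The native-window APIs give access to the native window system. Through EGL you create a rendering surface (EGLSurface) and a graphics rendering context (EGLContext) that holds state; OpenGL ES then draws onto that surface.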

2. With a shared context, which resources can be shared across threads?

Shareable resources:

textures;

shaders;

programs (shader programs);

buffer-class objects, such as VBOs, EBOs, RBOs, etc.

Non-shareable resources:

FBOs, framebuffer objects (not buffer-class objects);

VAOs, vertex array objects (not buffer-class objects).

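The reason is that FBOs and VAOs are container objects: they manage other objects rather than owning storage. An FBO manages several attached buffers, and a VAO manages VBO and EBO bindings; neither holds any data of its own.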

3. Updating textures in real time from multiple threads

To update the texture in real time we rely on EGL and its shared-context feature.

Step 1: write a Unity native plugin that does the rendering. See GitHub - Unity-Technologies/NativeRenderingPlugin (C++ Rendering Plugin example for Unity): https://github.com/Unity-Technologies/NativeRenderingPlugin

Step 2: add our multithreaded processing to the plugin.
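The multithreaded part hinges on a per-frame handoff between the two GL contexts: Unity's render thread inserts a fence with glFenceSync, and the worker's shared context calls glWaitSync on it before uploading. Creating a fence every frame without ever calling glDeleteSync leaks one sync object per frame, so ownership of each fence has to be unambiguous. Below is a minimal sketch of one way to do that, checked off-device with hypothetical stand-ins (FakeSync for GLsync, FenceSlot for the shared global): the handle travels through an atomic slot, and whichever thread removes a handle from the slot is the one that deletes it.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical stand-in for a GLsync handle, so the ownership rule can be checked without a GPU.
struct FakeSync {
    int frame;
};

// Single-slot mailbox between the render thread (producer) and the worker (consumer).
// Every handle is removed from the slot exactly once, by exactly one thread,
// and that thread is responsible for deleting it.
class FenceSlot {
public:
    // Render-thread side: publish this frame's fence and get back the previous one,
    // which the worker never took; the caller deletes it (glDeleteSync in the plugin).
    FakeSync *publish(FakeSync *fence) { return slot_.exchange(fence); }

    // Worker side: take the newest fence (wait on it, then delete it),
    // or nullptr if nothing new was published since the last take.
    FakeSync *take() { return slot_.exchange(nullptr); }

private:
    std::atomic<FakeSync *> slot_{nullptr};
};
```

In a plugin, publish() would follow the render thread's glFenceSync, and take() would precede the worker's glWaitSync and glDeleteSync. A side effect of this design is that the worker uploads at most once per Unity frame instead of looping as fast as it can.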

The complete plugin code:

```cpp
// Example low level rendering Unity plugin
// Based on Unity's NativeRenderingPlugin and ARM's ThreadSync sample (see References).
#include "PlatformBase.h"
#include "RenderAPI.h"
#include "TextureRender.h"
#include "SafeQueue.h"

#include <EGL/egl.h>
#include <GLES3/gl3.h>
#include <android/log.h>
#include <assert.h>
#include <math.h>
#include <pthread.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>
#include <atomic>
#include <new>
#include <vector>
#include <jni.h>

// Logging macros, in case the project's own headers do not already provide them.
#ifndef LOG_TAG
#define LOG_TAG "libNative"
#endif
#ifndef LOGI
#define LOGD(...) __android_log_print(ANDROID_LOG_DEBUG, LOG_TAG, __VA_ARGS__)
#define LOGI(...) __android_log_print(ANDROID_LOG_INFO, LOG_TAG, __VA_ARGS__)
#define LOGE(...) __android_log_print(ANDROID_LOG_ERROR, LOG_TAG, __VA_ARGS__)
#endif

static std::atomic<float> g_Time(0.0f);  // written from C# every frame, read by the worker thread
static void *g_TextureHandle = NULL;
static int g_TextureWidth = 0;
static int g_TextureHeight = 0;
SafeQueue<TextureDataInfo> texDatas;     // frames queued by UpdateTextureDataPtr

/* Context related variables. */
EGLContext mainContext = EGL_NO_CONTEXT;
EGLDisplay mainDisplay = EGL_NO_DISPLAY;  // an EGLDisplay, not an EGLContext
EGLSurface pBufferSurface = EGL_NO_SURFACE;
EGLContext pBufferContext = EGL_NO_CONTEXT;

/* The worker never draws to its pbuffer; it only needs a surface to make its context current. */
static const EGLint pBufferAttributes[] = { EGL_WIDTH, 1, EGL_HEIGHT, 1, EGL_NONE };
/* glFenceSync/glWaitSync require OpenGL ES 3.0. */
static const EGLint contextAttributes[] = { EGL_CONTEXT_CLIENT_VERSION, 3, EGL_NONE };

/* Picks an EGLConfig, as in the ARM ThreadSync sample; not shown here (signature assumed). */
EGLConfig findConfig(EGLDisplay display, bool strictMatch, bool offscreen);

/* Secondary thread related variables. */
pthread_t secondThread;
bool threadStarted = false;
std::atomic<bool> exitThread(false);

/* Sync objects: the render thread publishes one fence per frame, the worker takes it. */
std::atomic<GLsync> mainThreadSyncObj(nullptr);
GLuint64 timeout = GL_TIMEOUT_IGNORED;

// --------------------------------------------------------------------------
// UnitySetInterfaces

static void UNITY_INTERFACE_API OnGraphicsDeviceEvent(UnityGfxDeviceEventType eventType);

static IUnityInterfaces *s_UnityInterfaces = NULL;
static IUnityGraphics *s_Graphics = NULL;

extern "C" void UNITY_INTERFACE_EXPORT UNITY_INTERFACE_API
UnityPluginLoad(IUnityInterfaces *unityInterfaces) {
    s_UnityInterfaces = unityInterfaces;
    s_Graphics = s_UnityInterfaces->Get<IUnityGraphics>();
    s_Graphics->RegisterDeviceEventCallback(OnGraphicsDeviceEvent);
#if SUPPORT_VULKAN
    if (s_Graphics->GetRenderer() == kUnityGfxRendererNull) {
        extern void RenderAPI_Vulkan_OnPluginLoad(IUnityInterfaces *);
        RenderAPI_Vulkan_OnPluginLoad(unityInterfaces);
    }
#endif // SUPPORT_VULKAN
    // Run OnGraphicsDeviceEvent(initialize) manually on plugin load
    OnGraphicsDeviceEvent(kUnityGfxDeviceEventInitialize);
}

extern "C" void UNITY_INTERFACE_EXPORT UNITY_INTERFACE_API UnityPluginUnload() {
    s_Graphics->UnregisterDeviceEventCallback(OnGraphicsDeviceEvent);
}

#if UNITY_WEBGL
typedef void (UNITY_INTERFACE_API *PluginLoadFunc)(IUnityInterfaces *unityInterfaces);
typedef void (UNITY_INTERFACE_API *PluginUnloadFunc)();
extern "C" void UnityRegisterRenderingPlugin(PluginLoadFunc loadPlugin, PluginUnloadFunc unloadPlugin);
extern "C" void UNITY_INTERFACE_EXPORT UNITY_INTERFACE_API RegisterPlugin() {
    UnityRegisterRenderingPlugin(UnityPluginLoad, UnityPluginUnload);
}
#endif

// --------------------------------------------------------------------------
// GraphicsDeviceEvent

static RenderAPI *s_CurrentAPI = NULL;
static UnityGfxRenderer s_DeviceType = kUnityGfxRendererNull;

static void UNITY_INTERFACE_API OnGraphicsDeviceEvent(UnityGfxDeviceEventType eventType) {
    // Create graphics API implementation upon initialization
    if (eventType == kUnityGfxDeviceEventInitialize) {
        assert(s_CurrentAPI == NULL);
        s_DeviceType = s_Graphics->GetRenderer();
        s_CurrentAPI = CreateRenderAPI(s_DeviceType);
    }
    // Let the implementation process the device related events
    if (s_CurrentAPI) {
        s_CurrentAPI->ProcessDeviceEvent(eventType, s_UnityInterfaces);
    }
    // Cleanup graphics API implementation upon shutdown
    if (eventType == kUnityGfxDeviceEventShutdown) {
        delete s_CurrentAPI;
        s_CurrentAPI = NULL;
        s_DeviceType = kUnityGfxRendererNull;
    }
}

// Uploads one RGBA32 frame from the worker's shared context. With the stock
// RenderAPI_OpenGLCoreES, EndModifyTexture calls glTexSubImage2D and then delete[]s
// the buffer, so callers must NOT free it again (the buffer must come from new[]).
static void ModifyTexturePixels(void *data) {
    if (!data)
        return;
    if (!g_TextureHandle || !s_CurrentAPI) {
        delete[] (unsigned char *) data;
        return;
    }
    s_CurrentAPI->EndModifyTexture(g_TextureHandle, g_TextureWidth, g_TextureHeight, g_TextureWidth * 4, data);
    glFlush();  // submit the upload so the main context can pick it up
}

/* Fallback content when no frame is queued: the "plasma" pattern from Unity's sample. */
void *animateTexture() {
    if (!s_CurrentAPI)
        return NULL;
    int textureRowPitch;
    void *textureDataPtr = s_CurrentAPI->BeginModifyTexture(g_TextureHandle, g_TextureWidth, g_TextureHeight, &textureRowPitch);
    if (!textureDataPtr)
        return NULL;
    const float t = g_Time.load() * 4.0f;
    unsigned char *dst = (unsigned char *) textureDataPtr;
    for (int y = 0; y < g_TextureHeight; ++y) {
        unsigned char *ptr = dst;
        for (int x = 0; x < g_TextureWidth; ++x) {
            // Simple "plasma effect": several combined sine waves
            int vv = int(
                (127.0f + (127.0f * sinf(x / 7.0f + t))) +
                (127.0f + (127.0f * sinf(y / 5.0f - t))) +
                (127.0f + (127.0f * sinf((x + y) / 6.0f - t))) +
                (127.0f + (127.0f * sinf(sqrtf(float(x * x + y * y)) / 4.0f - t)))
            ) / 4;
            // Write the texture pixel
            ptr[0] = vv;
            ptr[1] = vv;
            ptr[2] = vv;
            ptr[3] = vv;
            // To next pixel (our pixels are 4 bpp)
            ptr += 4;
        }
        // To next image row
        dst += textureRowPitch;
    }
    return textureDataPtr;
}

/* Secondary thread's working function. */
static void *workingFunction(void *arg) {
    (void) arg;
    EGLConfig config = findConfig(mainDisplay, true, true);
    pBufferSurface = eglCreatePbufferSurface(mainDisplay, config, pBufferAttributes);
    if (pBufferSurface == EGL_NO_SURFACE) {
        EGLint error = eglGetError();
        LOGE("eglGetError(): %i (0x%.4x)\n", (int) error, (int) error);
        LOGE("Failed to create EGL pixel buffer surface at %s:%i\n", __FILE__, __LINE__);
        return NULL;  // the ARM sample calls exit(1); inside a Unity app we only stop the worker
    }
    LOGI("PBuffer surface created successfully.\n");

    /* Unconditionally bind to OpenGL ES API. */
    eglBindAPI(EGL_OPENGL_ES_API);

    /* Share OpenGL ES objects (the texture in particular) with the main thread's rendering context. */
    pBufferContext = eglCreateContext(mainDisplay, config, mainContext, contextAttributes);
    bool ok = pBufferContext != EGL_NO_CONTEXT;
    if (!ok) {
        EGLint error = eglGetError();
        LOGE("eglGetError(): %i (0x%.4x)\n", (int) error, (int) error);
        LOGE("Failed to create EGL pBufferContext at %s:%i\n", __FILE__, __LINE__);
    } else if (eglMakeCurrent(mainDisplay, pBufferSurface, pBufferSurface, pBufferContext) != EGL_TRUE) {
        LOGE("eglMakeCurrent failed, error = 0x%x\n", eglGetError());
        ok = false;
    } else {
        LOGI("PBuffer context created and made current, sharing GLES objects with the main context.\n");
    }

    /* glWaitSync's flags must be 0 (no flags are defined yet); its timeout must be GL_TIMEOUT_IGNORED. */
    const GLbitfield flags = 0;
    while (ok && !exitThread.load()) {
        // Take ownership of the newest fence published by the render thread, if any.
        GLsync fence = mainThreadSyncObj.exchange(nullptr);
        if (fence == nullptr) {
            usleep(1000);  // nothing new yet; sleep instead of busy-spinning
            continue;
        }
        // GPU-side wait: our commands run after the main context's commands up to the fence.
        glWaitSync(fence, flags, timeout);
        glDeleteSync(fence);  // deletion is deferred by GL until the wait no longer needs it

        TextureDataInfo tempData;
        if (texDatas.Consume(tempData)) {
            ModifyTexturePixels(tempData.data);  // releases the buffer
        } else {
            ModifyTexturePixels(animateTexture());
        }
    }

    // Release the worker's EGL objects before the thread exits.
    eglMakeCurrent(mainDisplay, EGL_NO_SURFACE, EGL_NO_SURFACE, EGL_NO_CONTEXT);
    if (pBufferContext != EGL_NO_CONTEXT)
        eglDestroyContext(mainDisplay, pBufferContext);
    eglDestroySurface(mainDisplay, pBufferSurface);
    pBufferContext = EGL_NO_CONTEXT;
    pBufferSurface = EGL_NO_SURFACE;
    LOGI("Exiting secondary thread.\n");
    return NULL;
}

bool createTextureThread(void) {
    return pthread_create(&secondThread, NULL, &workingFunction, NULL) == 0;
}

// Must run on the thread where Unity's EGL context is current.
void startTextureRender() {
    if (threadStarted) {
        LOGD("thread is started!");
        return;
    }
    mainContext = eglGetCurrentContext();
    if (mainContext == EGL_NO_CONTEXT) {
        LOGE("initCL: eglGetCurrentContext() returned 'EGL_NO_CONTEXT', error = %x", eglGetError());
        return;
    }
    mainDisplay = eglGetCurrentDisplay();
    if (mainDisplay == EGL_NO_DISPLAY) {
        LOGE("initCL: eglGetCurrentDisplay() returned 'EGL_NO_DISPLAY', error = %x", eglGetError());
        return;
    }
    exitThread = false;  // reset before the worker starts, not after
    threadStarted = createTextureThread();
}

void stopTextureRender() {
    if (!threadStarted)
        return;
    exitThread = true;
    pthread_join(secondThread, NULL);  // wait until the worker has released its context
    threadStarted = false;
    TextureDataInfo tempData;
    while (texDatas.Consume(tempData)) {
        delete[] (unsigned char *) tempData.data;  // allocated with new[] in UpdateTextureDataPtr
    }
}

void addTextureData(TextureDataInfo dataInfo) {
    // Keep at most ~20 pending frames: drop the oldest one when the worker falls behind.
    if (texDatas.Size() > 20) {
        LOGD("texDatas is large");
        TextureDataInfo tempData;
        if (texDatas.Consume(tempData)) {
            delete[] (unsigned char *) tempData.data;
        }
    }
    texDatas.Produce(std::move(dataInfo));
}

// Runs on Unity's render thread (main GL context) once per frame.
void renderFrame() {
    // glFenceSync's flags must be 0 and its condition GL_SYNC_GPU_COMMANDS_COMPLETE.
    GLsync fence = glFenceSync(GL_SYNC_GPU_COMMANDS_COMPLETE, 0);
    glFlush();  // the fence must be flushed before another context waits on it
    // Publish it; if the worker never took the previous fence, delete that one here.
    GLsync previous = mainThreadSyncObj.exchange(fence);
    if (previous != nullptr)
        glDeleteSync(previous);
}

static void UNITY_INTERFACE_API OnRenderEvent(int eventID) {
    // Unknown / unsupported graphics device type? Do nothing
    if (s_CurrentAPI == NULL)
        return;
    renderFrame();
}

extern "C" void UNITY_INTERFACE_EXPORT UNITY_INTERFACE_API SetTimeFromUnity(float t) { g_Time = t; }

extern "C" void UNITY_INTERFACE_EXPORT UNITY_INTERFACE_API
StartTextureRender(void *textureHandle, int w, int h) {
    // A script calls this at initialization time: remember the texture, then start the
    // worker thread, which shares the EGL context that is current on the calling thread.
    g_TextureHandle = textureHandle;
    g_TextureWidth = w;
    g_TextureHeight = h;
    LOGI("texture width: %d, height: %d", w, h);
    startTextureRender();
}

extern "C" void UNITY_INTERFACE_EXPORT UNITY_INTERFACE_API
StopTextureRender() {
    stopTextureRender();
}

// --------------------------------------------------------------------------
// GetRenderEventFunc, an example function we export which is used to get a rendering event callback function.
extern "C" UnityRenderingEvent UNITY_INTERFACE_EXPORT UNITY_INTERFACE_API GetRenderEventFunc() {
    return OnRenderEvent;
}

extern "C" void UNITY_INTERFACE_EXPORT UNITY_INTERFACE_API
UpdateTextureDataPtr(void *data, int len) {
    // Expect one tightly packed RGBA32 frame: width * height * 4 bytes.
    if (data == NULL || len <= 0 || len < g_TextureWidth * g_TextureHeight * 4)
        return;
    unsigned char *tempData = new (std::nothrow) unsigned char[len];
    if (tempData == NULL)
        return;
    memcpy(tempData, data, len);
    TextureDataInfo dataInfo;
    dataInfo.data = tempData;
    dataInfo.len = len;
    addTextureData(dataInfo);
}
```
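The plugin includes SafeQueue.h and TextureRender.h, which the article does not show. Judging only from how the plugin calls them (Produce with an rvalue, a non-blocking Consume that returns bool, Size, and a TextureDataInfo with data and len fields), a minimal mutex-protected stand-in could look like the sketch below. It is an assumption, not the author's header.

```cpp
#include <cassert>
#include <cstddef>
#include <mutex>
#include <queue>
#include <utility>

// Hypothetical layout of the frame record queued by UpdateTextureDataPtr.
struct TextureDataInfo {
    void *data = nullptr;  // heap buffer (new[]) holding one RGBA32 frame
    int len = 0;           // buffer size in bytes
};

// Minimal thread-safe FIFO offering the three calls the plugin uses.
template <typename T>
class SafeQueue {
public:
    void Produce(T &&item) {
        std::lock_guard<std::mutex> lock(mutex_);
        queue_.push(std::move(item));
    }

    // Non-blocking: returns false immediately when the queue is empty.
    bool Consume(T &item) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (queue_.empty())
            return false;
        item = std::move(queue_.front());
        queue_.pop();
        return true;
    }

    std::size_t Size() {
        std::lock_guard<std::mutex> lock(mutex_);
        return queue_.size();
    }

private:
    std::mutex mutex_;
    std::queue<T> queue_;
};
```

Consume has to be non-blocking here because the worker falls back to the plasma pattern when no frame is queued, and stopTextureRender drains the queue in a loop until Consume returns false.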

Usage in Unity:

```csharp
using System;
using System.Runtime.InteropServices;
using UnityEngine;

public class MutilTextureRenderer : MonoBehaviour
{
    [SerializeField]
    private MeshRenderer imageRenderer;

    [SerializeField, Tooltip("Frequency of sharing screens")]
    private int frequency = 10;

    private const string mainTexPropertyName = "_MainTex";
    private Texture2D imageTexture;
    private int imageWidth = 1920;
    private int imageHeight = 1080;

    public void OnDestroy()
    {
        NDKAPI.StopTextureRender();
        if (imageTexture != null)
            DestroyImmediate(imageTexture);
    }

    // Start is called before the first frame update
    void Start()
    {
        if (imageTexture == null)
        {
            imageTexture = new Texture2D(imageWidth, imageHeight, TextureFormat.RGBA32, false, true);
            imageTexture.wrapMode = TextureWrapMode.Clamp;
            imageRenderer.material.SetTexture(mainTexPropertyName, imageTexture);
            // Pass texture pointer to the plugin
            NDKAPI.StartTextureRender(imageTexture.GetNativeTexturePtr(), imageTexture.width, imageTexture.height);
        }
    }

    // Update is called once per frame
    private void Update()
    {
        NDKAPI.SetTimeFromUnity(Time.timeSinceLevelLoad);
        GL.IssuePluginEvent(NDKAPI.GetRenderEventFunc(), 1);
    }

    public struct NDKAPI
    {
#if (UNITY_IOS || UNITY_TVOS || UNITY_WEBGL) && !UNITY_EDITOR
        [DllImport ("__Internal")]
#else
        [DllImport("RenderingPlugin")]
#endif
        public static extern IntPtr GetRenderEventFunc();

#if (UNITY_IOS || UNITY_TVOS || UNITY_WEBGL) && !UNITY_EDITOR
        [DllImport ("__Internal")]
#else
        [DllImport("RenderingPlugin")]
#endif
        public static extern void StartTextureRender(System.IntPtr texture, int w, int h);

#if (UNITY_IOS || UNITY_TVOS || UNITY_WEBGL) && !UNITY_EDITOR
        [DllImport ("__Internal")]
#else
        [DllImport("RenderingPlugin")]
#endif
        public static extern void StopTextureRender();

#if (UNITY_IOS || UNITY_TVOS || UNITY_WEBGL) && !UNITY_EDITOR
        [DllImport ("__Internal")]
#else
        [DllImport("RenderingPlugin")]
#endif
        public static extern void UpdateTextureDataPtr(System.IntPtr data, int len);

#if (UNITY_IOS || UNITY_TVOS || UNITY_WEBGL) && !UNITY_EDITOR
        [DllImport ("__Internal")]
#else
        [DllImport("RenderingPlugin")]
#endif
        public static extern void SetTimeFromUnity(float t);
    }
}
```

Result

Common problems

The following error appears:

2022/05/06 14:23:17.861 22872 22917 Error libNative initCL: eglGetCurrentContext() returned 'EGL_NO_CONTEXT', error = 3000

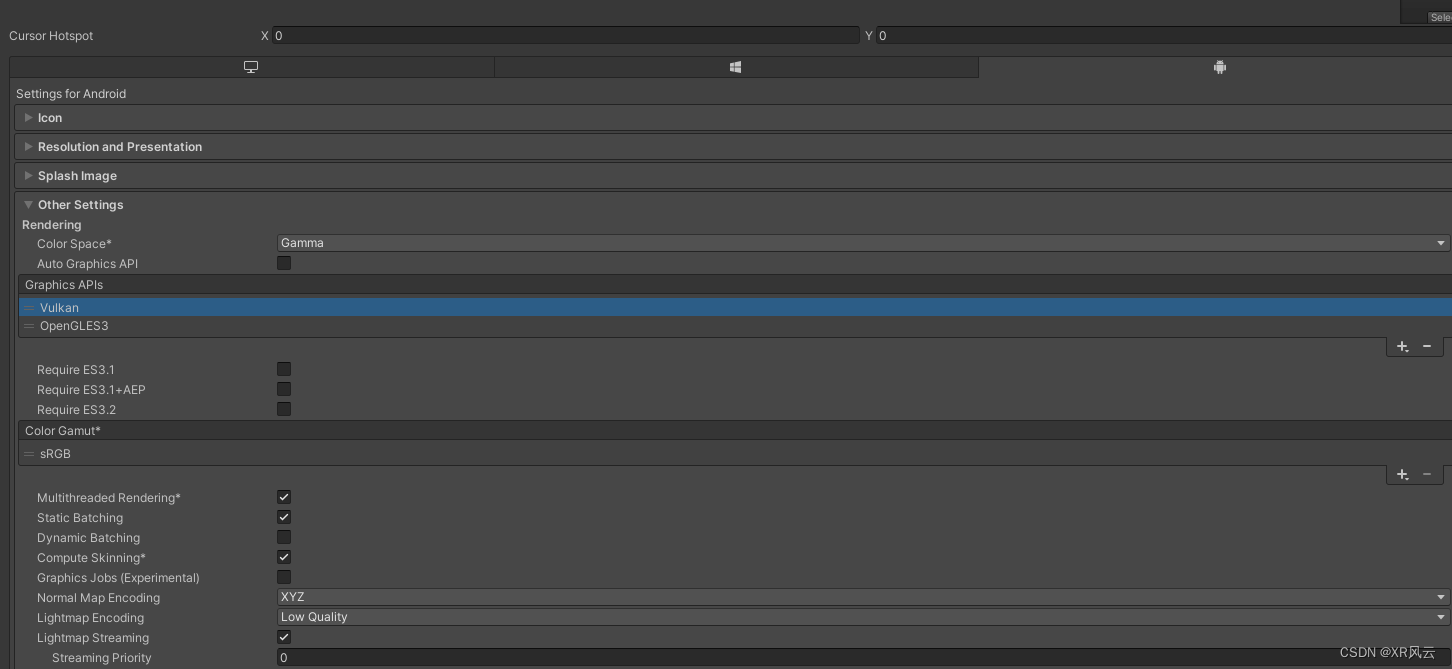

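Fix: in Unity's Player Settings, remove Vulkan from Graphics APIs and uncheck Multithreaded Rendering. The reason is that StartTextureRender, called from the script's Start(), looks up the EGL context that is current on the calling thread. With Vulkan, Unity creates no EGL context at all; with Multithreaded Rendering, Unity's GL context lives on a separate render thread instead of the script thread. Only with OpenGL ES and multithreaded rendering disabled is the context current on the thread that runs Start().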
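The error value in that log is printed with %x, so 3000 is hexadecimal 0x3000, which is EGL_SUCCESS: no EGL call actually failed, there was simply no context current on the calling thread. A small lookup helper (hypothetical, not part of the plugin above; codes as defined in the EGL 1.4 egl.h) makes such logs easier to read:

```cpp
#include <cassert>
#include <cstring>

// Maps an eglGetError() value to its symbolic name.
const char *eglErrorName(int code) {
    switch (code) {
        case 0x3000: return "EGL_SUCCESS";
        case 0x3001: return "EGL_NOT_INITIALIZED";
        case 0x3002: return "EGL_BAD_ACCESS";
        case 0x3003: return "EGL_BAD_ALLOC";
        case 0x3004: return "EGL_BAD_ATTRIBUTE";
        case 0x3005: return "EGL_BAD_CONFIG";
        case 0x3006: return "EGL_BAD_CONTEXT";
        case 0x3007: return "EGL_BAD_CURRENT_SURFACE";
        case 0x3008: return "EGL_BAD_DISPLAY";
        case 0x3009: return "EGL_BAD_MATCH";
        case 0x300A: return "EGL_BAD_NATIVE_PIXMAP";
        case 0x300B: return "EGL_BAD_NATIVE_WINDOW";
        case 0x300C: return "EGL_BAD_PARAMETER";
        case 0x300D: return "EGL_BAD_SURFACE";
        case 0x300E: return "EGL_CONTEXT_LOST";
        default:     return "unknown EGL error";
    }
}
```

In the plugin, such a helper could be passed to the LOGE calls in place of the raw %x value.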

My demo code can be downloaded from CSDN (Unity multithreaded real-time texture data update, Android platform): https://download.csdn.net/download/grace_yi/85301879

References

ARM-software/opengl-es-sdk-for-android, ThreadSync sample (ThreadSync.cpp): https://github.com/ARM-software/opengl-es-sdk-for-android/blob/4ca9fdb10bcd721bb9af19ccd9bdd95bdf34a07b/samples/advanced_samples/ThreadSync/jni/ThreadSync.cpp

Multithreading rendering with OpenGL es shared context: https://chowdera.com/2021/06/20210619181834707p.html