Learning the Unity URP Pipeline and Post-Processing

1. Prerequisites

RenderPipeline

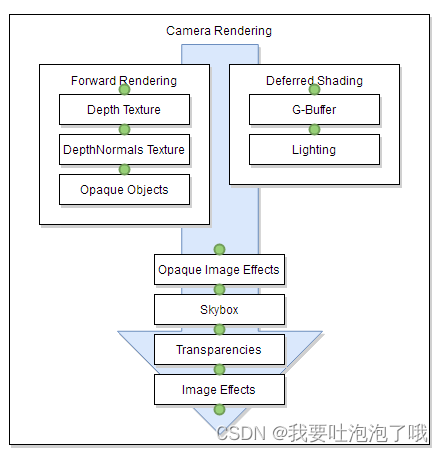

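The default (built-in) render pipeline

- Some stages are switched on and off from code. Take the Depth Texture stage: if depthTextureMode is set, Unity runs every Pass whose LightMode is ShadowCaster and stores the result in the Depth Texture.

- Other stages are controlled by the Tags { "LightMode" "RenderType" "Queue" } declared in the Shader. At render time Unity uses these tags to run each Pass at the corresponding position in the diagram, which is how transparent objects get the "render opaque first, then transparent" behavior.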
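The "opaque first, then transparent" ordering can be illustrated with a small model in plain C#. This is only a sketch and not Unity code: `QueueOrderSketch` and its members are hypothetical names, and the only Unity facts it relies on are the numeric values behind the Queue tag (Geometry = 2000, AlphaTest = 2450, Transparent = 3000) and the convention that values up to 2500 count as opaque.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Simplified model, not Unity code: each draw carries the numeric value
// of its shader "Queue" tag.
public static class QueueOrderSketch
{
    // Queue values up to 2500 are treated as opaque.
    public const int LastOpaqueQueue = 2500;

    public static bool IsOpaque(int queue) => queue <= LastOpaqueQueue;

    // Lower queue values are drawn first. OrderBy is a stable sort, so draws
    // with the same queue value keep their input order.
    public static List<string> Order(IEnumerable<(string Name, int Queue)> draws)
    {
        return draws.OrderBy(d => d.Queue).Select(d => d.Name).ToList();
    }
}
```

Calling `QueueOrderSketch.Order` on a glass object (3000), a rock (2000), and alpha-tested leaves (2450) yields rock, leaves, glass, so the glass is drawn on top of geometry that is already in the color buffer.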

Scriptable Render Pipeline (SRP)

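- SRP is Unity's customizable rendering solution and can be tailored to a project's needs. In other words, you decide the order of the rendering steps described above yourself. SRP also brings optimizations such as the SRP Batcher.

- URP is the pipeline Unity built on top of SRP to fit most use cases.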

2. The URP Rendering Flow (Code Walkthrough)

The Render function

com.unity.render-pipelines.universal@7.7.1/Runtime/UniversalRenderPipeline.cs

protected override void Render(ScriptableRenderContext renderContext, Camera[] cameras)
{
    // Fixed call: signals that rendering of the frame is about to begin
    BeginFrameRendering(renderContext, cameras);
    // Configure whether lights use linear intensity and whether the SRP Batcher is enabled
    GraphicsSettings.lightsUseLinearIntensity = (QualitySettings.activeColorSpace == ColorSpace.Linear);
    GraphicsSettings.useScriptableRenderPipelineBatching = asset.useSRPBatcher;
    // Set the default SH used when environment reflections are off, the shadow color, etc.
    SetupPerFrameShaderConstants();
    ……
    SortCameras(cameras); // Sort the cameras by camera depth
    for (int i = 0; i < cameras.Length; ++i)
    {
        var camera = cameras[i];
        ……
        if (IsGameCamera(camera))
        {
            // Iterate over every Overlay camera in the base camera's CameraStack and render them all
            RenderCameraStack(renderContext, camera);
        }
        else
        {
            BeginCameraRendering(renderContext, camera);
            ……
            // Check whether the camera is inside a post-processing Volume and, if so, trigger its effects
            UpdateVolumeFramework(camera, null);
            // Render one camera (cull, set up the renderer, execute the renderer)
            RenderSingleCamera(renderContext, camera);
            // Fixed call: signals that a camera has finished rendering
            EndCameraRendering(renderContext, camera);
        }
    }
    EndFrameRendering(renderContext, cameras);
}

- This contains the standard per-camera rendering flow, which shows up again and again:

BeginCameraRendering(context, currCamera); // Begin
UpdateVolumeFramework(currCamera, currCameraData); // Trigger post-processing if there is any
// Extract the settings from the camera's UniversalAdditionalCameraData
InitializeCameraData(baseCamera, baseCameraAdditionalData, out var baseCameraData);
// Render a single camera: cull, set up the renderer, execute the renderer
RenderSingleCamera(context, overlayCameraData, lastCamera, anyPostProcessingEnabled);
EndCameraRendering(context, currCamera); // End

The RenderSingleCamera function

The most important piece is the RenderSingleCamera function:

/// <summary>
/// Renders a single camera in three main steps: culling, setting up the renderer, and executing the renderer.
/// </summary>
/// <param name="context">Render context used to record commands during execution.</param>
/// <param name="cameraData">Camera rendering data; may contain parameters inherited from the base camera.</param>
/// <param name="anyPostProcessingEnabled">True if the camera needs post-processing, false otherwise.</param>
static void RenderSingleCamera(ScriptableRenderContext context, CameraData cameraData, bool anyPostProcessingEnabled)
{
    Camera camera = cameraData.camera;
    // Get the camera's renderer; URP uses ForwardRenderer by default
    var renderer = cameraData.renderer;
    if (renderer == null)
    {
        Debug.LogWarning(string.Format("Trying to render {0} with an invalid renderer. Camera rendering will be skipped.", camera.name));
        return;
    }
    // Get the camera's frustum culling parameters and store them in cullingParameters
    if (!camera.TryGetCullingParameters(IsStereoEnabled(camera), out var cullingParameters))
        return;
    ScriptableRenderer.current = renderer;
    bool isSceneViewCamera = cameraData.isSceneViewCamera;
    // Get a CommandBuffer from the pool to record rendering commands
    ProfilingSampler sampler = (asset.debugLevel >= PipelineDebugLevel.Profiling) ? new ProfilingSampler(camera.name): _CameraProfilingSampler;
    CommandBuffer cmd = CommandBufferPool.Get(sampler.name);
    using (new ProfilingScope(cmd, sampler))
    {
        // Reset render targets and execution state, and clear the pass queue
        renderer.Clear(cameraData.renderType);
        // Fill in cullingParameters from cameraData, including shadowDistance and so on
        renderer.SetupCullingParameters(ref cullingParameters, ref cameraData);
        context.ExecuteCommandBuffer(cmd); // Execute the recorded commands
        cmd.Clear(); // Clear the CommandBuffer
        ……
        // Compute cullResults from the culling parameters; the elements to render are later filtered from cullResults
        var cullResults = context.Cull(ref cullingParameters);
        // Initialize renderingData from cullResults and other per-frame data such as lights
        InitializeRenderingData(asset, ref cameraData, ref cullResults, anyPostProcessingEnabled, out var renderingData);
        ……
        // Based on renderingData, enqueue the passes that are needed
        renderer.Setup(context, ref renderingData);
        // Execute the render passes in the queue
        renderer.Execute(context, ref renderingData);
    }
    context.ExecuteCommandBuffer(cmd);
    CommandBufferPool.Release(cmd);
    context.Submit();
    ScriptableRenderer.current = null;
}

The InitializeRenderingData function it calls

static void InitializeRenderingData(UniversalRenderPipelineAsset settings, ref CameraData cameraData, ref CullingResults cullResults,
    bool anyPostProcessingEnabled, out RenderingData renderingData)
{
    // This part mainly decides whether shadows are needed; among additional lights, only Spot Lights support shadows
    ……
    renderingData.cullResults = cullResults;
    renderingData.cameraData = cameraData;
    InitializeLightData(settings, visibleLights, mainLightIndex, out renderingData.lightData);
    InitializeShadowData(settings, visibleLights, mainLightCastShadows, additionalLightsCastShadows && !renderingData.lightData.shadeAdditionalLightsPerVertex, out renderingData.shadowData);
    ……
}

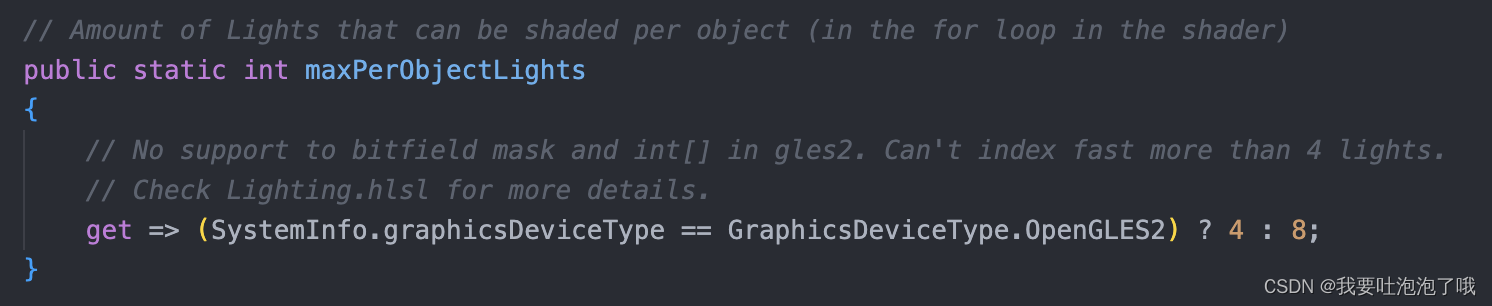

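- InitializeLightData sets the maximum number of per-object lights, maxPerObjectLights: at most four on GLES 2 and at most eight everywhere else, as shown in the figure.

- From InitializeShadowData we can see that:

  - Default lighting settings come from the pipeline asset; per-light overrides are set on the light's UniversalAdditionalLightData component.

  - Screen-space shadows are only supported on devices above GLES 2.

  - Shadow quality is determined jointly by the shadow map resolution and the number of cascades.

The ForwardRenderer class

ForwardRenderer derives from ScriptableRenderer, which maintains a list of ScriptableRenderPass objects. Before each frame is rendered, passes are added to the list; the passes are then executed to draw the image, and the list is cleared once the frame ends. The renderer's resources are serialized as ScriptableRendererData.

The core functions of ScriptableRenderer, Setup and Execute, both run every frame. Setup adds the passes to be executed to the list; Execute groups the passes in the list by rendering order and executes them.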
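The per-frame life cycle described above (enqueue in Setup, run and clear in Execute) can be sketched in plain C# without Unity. The classes below are simplified stand-ins for illustration only: `PassSketch`, `NamedPassSketch`, and `RendererSketch` are hypothetical names, not the URP types, and the pass names are just labels.

```csharp
using System;
using System.Collections.Generic;

// Simplified stand-in for ScriptableRenderPass; not the URP class.
public abstract class PassSketch
{
    public abstract void Execute(List<string> log);
}

public sealed class NamedPassSketch : PassSketch
{
    readonly string name;
    public NamedPassSketch(string name) { this.name = name; }
    // A real pass would record rendering commands; this one only logs its name.
    public override void Execute(List<string> log) { log.Add(name); }
}

// Simplified stand-in for ScriptableRenderer; not the URP class.
public sealed class RendererSketch
{
    readonly List<PassSketch> activeQueue = new List<PassSketch>();

    public int QueuedCount => activeQueue.Count;

    public void EnqueuePass(PassSketch pass) { activeQueue.Add(pass); }

    // Setup: decide, for this frame, which passes are needed.
    public void Setup(bool mainLightShadows)
    {
        if (mainLightShadows)
            EnqueuePass(new NamedPassSketch("MainLightShadowCaster"));
        EnqueuePass(new NamedPassSketch("RenderOpaqueForward"));
        EnqueuePass(new NamedPassSketch("RenderTransparentForward"));
    }

    // Execute: run every queued pass, then clear the queue for the next frame.
    public List<string> Execute()
    {
        var log = new List<string>();
        foreach (var pass in activeQueue)
            pass.Execute(log);
        activeQueue.Clear();
        return log;
    }
}
```

Because the queue is rebuilt every frame, a condition such as `mainLightShadows` can change from one frame to the next without leaving stale passes behind.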

Setup in ForwardRenderer (key)

Its main job is to add the required passes to the render queue.

public override void Setup(ScriptableRenderContext context, ref RenderingData renderingData)
{
    ……
    // 1. A camera that renders to a depth texture only enqueues the RenderFeature, opaque, skybox, and transparent passes
    bool isOffscreenDepthTexture = cameraData.targetTexture != null && cameraData.targetTexture.format == RenderTextureFormat.Depth;
    if (isOffscreenDepthTexture)
    {
        ……
        for (int i = 0; i < rendererFeatures.Count; ++i)
        {
            if(rendererFeatures[i].isActive)
                rendererFeatures[i].AddRenderPasses(this, ref renderingData);
        }
        EnqueuePass(m_RenderOpaqueForwardPass);
        EnqueuePass(m_DrawSkyboxPass);
        ……
        EnqueuePass(m_RenderTransparentForwardPass);
        return;
    }
    // Read the various parameters from cameraData, UniversalRenderPipeline.asset, and renderingData used in the checks below
    ……
    // 2. If a color texture is needed, the color buffer is m_CameraColorAttachment; if a depth texture is needed, the depth buffer is m_CameraDepthAttachment
    // Conditions that require rendering to a color texture include: MSAA, Render Scale, HDR, Post-Processing, the Opaque Texture option, a custom ScriptableRendererFeature, and so on
    if (cameraData.renderType == CameraRenderType.Base)
    {
        m_ActiveCameraColorAttachment = (createColorTexture) ? m_CameraColorAttachment : RenderTargetHandle.CameraTarget;
        m_ActiveCameraDepthAttachment = (createDepthTexture) ? m_CameraDepthAttachment : RenderTargetHandle.CameraTarget;
        ……
    }
    else
    {
        m_ActiveCameraColorAttachment = m_CameraColorAttachment;
        m_ActiveCameraDepthAttachment = m_CameraDepthAttachment;
    }
    ConfigureCameraTarget(m_ActiveCameraColorAttachment.Identifier(), m_ActiveCameraDepthAttachment.Identifier());
    // 3. Let every active custom ScriptableRendererFeature add its passes to the ScriptableRenderPass queue
    for (int i = 0; i < rendererFeatures.Count; ++i)
    {
        if(rendererFeatures[i].isActive)
            rendererFeatures[i].AddRenderPasses(this, ref renderingData);
    }
    // 4. Enqueue the built-in passes, each under its own condition
    if (mainLightShadows)
        EnqueuePass(m_MainLightShadowCasterPass);
    if (additionalLightShadows)
        EnqueuePass(m_AdditionalLightsShadowCasterPass);
    if (requiresDepthPrepass)
    {
        m_DepthPrepass.Setup(cameraTargetDescriptor, m_DepthTexture);
        EnqueuePass(m_DepthPrepass);
    }
    if (generateColorGradingLUT)
    {
        m_ColorGradingLutPass.Setup(m_ColorGradingLut);
        EnqueuePass(m_ColorGradingLutPass);
    }
    EnqueuePass(m_RenderOpaqueForwardPass);
    ……
    if (camera.clearFlags == CameraClearFlags.Skybox && (RenderSettings.skybox != null || cameraSkybox?.material != null) && !isOverlayCamera)
        EnqueuePass(m_DrawSkyboxPass);
    // If a depth texture was created we need to copy it; otherwise we could have rendered it to a renderbuffer
    if (!requiresDepthPrepass && renderingData.cameraData.requiresDepthTexture && createDepthTexture)
    {
        m_CopyDepthPass.Setup(m_ActiveCameraDepthAttachment, m_DepthTexture);
        EnqueuePass(m_CopyDepthPass);
    }
    if (renderingData.cameraData.requiresOpaqueTexture)
    {
        Downsampling downsamplingMethod = UniversalRenderPipeline.asset.opaqueDownsampling;
        m_CopyColorPass.Setup(m_ActiveCameraColorAttachment.Identifier(), m_OpaqueColor, downsamplingMethod);
        EnqueuePass(m_CopyColorPass);
    }
    ……
    if (transparentsNeedSettingsPass)
        EnqueuePass(m_TransparentSettingsPass);
    EnqueuePass(m_RenderTransparentForwardPass);
    EnqueuePass(m_OnRenderObjectCallbackPass);
    ……
    // 5. For the last camera in the stack, enqueue the passes that need a final blit
    if (lastCameraInTheStack)
    {
        // Post-processing resolves to the final target, so no final blit pass is needed
        if (applyPostProcessing)
        {
            m_PostProcessPass.Setup(……);
            EnqueuePass(m_PostProcessPass);
        }
        if (renderingData.cameraData.captureActions != null)
        {
            m_CapturePass.Setup(m_ActiveCameraColorAttachment);
            EnqueuePass(m_CapturePass);
        }
        ……
        // Run FXAA or any other post-processing that has to happen after AA
        if (applyFinalPostProcessing)
        {
            m_FinalPostProcessPass.SetupFinalPass(sourceForFinalPass);
            EnqueuePass(m_FinalPostProcessPass);
        }
        // We need final blit to resolve to screen
        if (!cameraTargetResolved)
        {
            m_FinalBlitPass.Setup(cameraTargetDescriptor, sourceForFinalPass);
            EnqueuePass(m_FinalBlitPass);
        }
    }
    else if (applyPostProcessing)
    {
        m_PostProcessPass.Setup(……);
        EnqueuePass(m_PostProcessPass);
    }
    ……
}

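As the code shows, the available passes include MainLightShadowCasterPass, AdditionalLightsShadowCasterPass, DepthPrePass, ScreenSpaceShadowResolvePass, ColorGradingLutPass, RenderOpaqueForwardPass, DrawSkyboxPass, CopyDepthPass, CopyColorPass, TransparentForwardPass, RenderObjectCallbackPass, PostProcessPass, CapturePass, FinalPostProcessPass, FinalBlitPass, and more.

- Note that, compared with the default pipeline, there are two extra steps here: CopyColor and CopyDepth. CopyColor is used to grab the color buffer for effects such as water refraction, but it cannot handle multiple layers of refraction.

Execute in ForwardRenderer (key)

Executes the passes in each queue.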

public void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
{
    ……
    // 1. Sort the passes in m_ActiveRenderPassQueue
    SortStable(m_ActiveRenderPassQueue);
    ……
    // 2. Assign each ScriptableRenderPass to a block according to the value of its renderPassEvent field
    FillBlockRanges(blockEventLimits, blockRanges);
    ……
    // Set up shader lighting constants and lighting-related keywords
    SetupLights(context, ref renderingData);
    // 3. For each render block, take all passes in the block and execute them in order (ExecuteRenderPass + submit)
    ExecuteBlock(RenderPassBlock.BeforeRendering, blockRanges, context, ref renderingData);
    ……
    // Opaque blocks...
    ExecuteBlock(RenderPassBlock.MainRenderingOpaque, blockRanges, context, ref renderingData, eyeIndex);
    // Transparent blocks...
    ExecuteBlock(RenderPassBlock.MainRenderingTransparent, blockRanges, context, ref renderingData, eyeIndex);
    // Draw Gizmos...
    DrawGizmos(context, camera, GizmoSubset.PreImageEffects);
    // In this block after rendering drawing happens, e.g, post processing, video player capture.
    ExecuteBlock(RenderPassBlock.AfterRendering, blockRanges, context, ref renderingData, eyeIndex);
    // 4. Clean up all passes, release RTs, reset render targets, and clear the pass queue
    InternalFinishRendering(context, cameraData.resolveFinalTarget);
    ……
}

Values of the RenderPassEvent enum:

public enum RenderPassEvent
{
    BeforeRendering = 0,
    BeforeRenderingShadows = 50, // 0x00000032
    AfterRenderingShadows = 100, // 0x00000064
    BeforeRenderingPrepasses = 150, // 0x00000096
    AfterRenderingPrePasses = 200, // 0x000000C8
    BeforeRenderingOpaques = 250, // 0x000000FA
    AfterRenderingOpaques = 300, // 0x0000012C
    BeforeRenderingSkybox = 350, // 0x0000015E
    AfterRenderingSkybox = 400, // 0x00000190
    BeforeRenderingTransparents = 450, // 0x000001C2
    AfterRenderingTransparents = 500, // 0x000001F4
    BeforeRenderingPostProcessing = 550, // 0x00000226
    AfterRenderingPostProcessing = 600, // 0x00000258
    AfterRendering = 1000, // 0x000003E8
}
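To make the "sort by renderPassEvent, then split into blocks" idea concrete, here is a rough model in plain C#. It is a sketch under assumptions, not the URP source: `PassBlockSketch` is a hypothetical class, passes are reduced to (name, event value) pairs, and the block limits passed to `FillBlockRanges` are supplied by the caller. The limits in the usage note (150, 300, 600, and int.MaxValue) are illustrative assumptions and may not match a given URP version.

```csharp
using System;
using System.Collections.Generic;

public static class PassBlockSketch
{
    // Stable insertion sort by event value: passes that share an event value
    // keep the order in which they were enqueued.
    public static void SortStable(List<(string Name, int Event)> passes)
    {
        for (int i = 1; i < passes.Count; ++i)
        {
            var current = passes[i];
            int j = i - 1;
            while (j >= 0 && current.Event < passes[j].Event)
            {
                passes[j + 1] = passes[j];
                j--;
            }
            passes[j + 1] = current;
        }
    }

    // Returns limits.Length + 1 boundaries; block b covers the sorted indices
    // [ranges[b], ranges[b + 1]). A pass falls into the first block whose limit
    // is greater than its event value; the last block takes everything left.
    public static int[] FillBlockRanges(List<(string Name, int Event)> sorted, int[] limits)
    {
        var ranges = new int[limits.Length + 1];
        int pass = 0;
        ranges[0] = 0;
        for (int b = 0; b < limits.Length - 1; ++b)
        {
            while (pass < sorted.Count && sorted[pass].Event < limits[b])
                pass++;
            ranges[b + 1] = pass;
        }
        ranges[limits.Length] = sorted.Count;
        return ranges;
    }
}
```

With the assumed limits { 150, 300, 600, int.MaxValue }, a pass at event 250 lands in the second block, while a custom pass at AfterRenderingOpaques (300) lands in the third block together with the transparent passes, because each limit is exclusive.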

3. Post-Processing

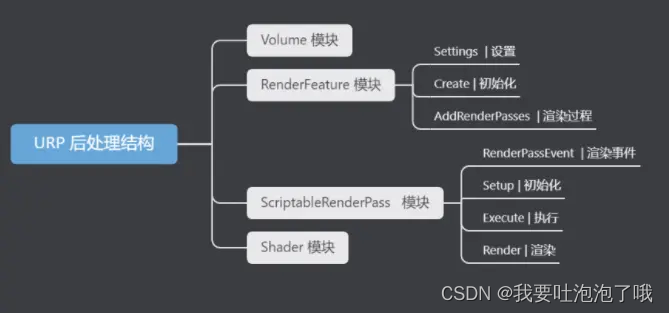

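- URP post-processing consists of four parts: the renderer (Forward Renderer), the post-processing Volume, the Pass module, and the Shader.

3.1 Post-processing with RenderFeature

- A RenderFeature extends the set of passes. It is attached to the ForwardRenderer and can insert a rendering command at a chosen point in the frame (for example, outlines after the opaque pass or a filter after the transparent pass), so ordinary full-screen post-processing can be implemented as a RenderFeature.

- Note: the OnRenderImage callback that MonoBehaviour offers in the built-in pipeline is not available in URP; use a ScriptableRenderPass to achieve the same result.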

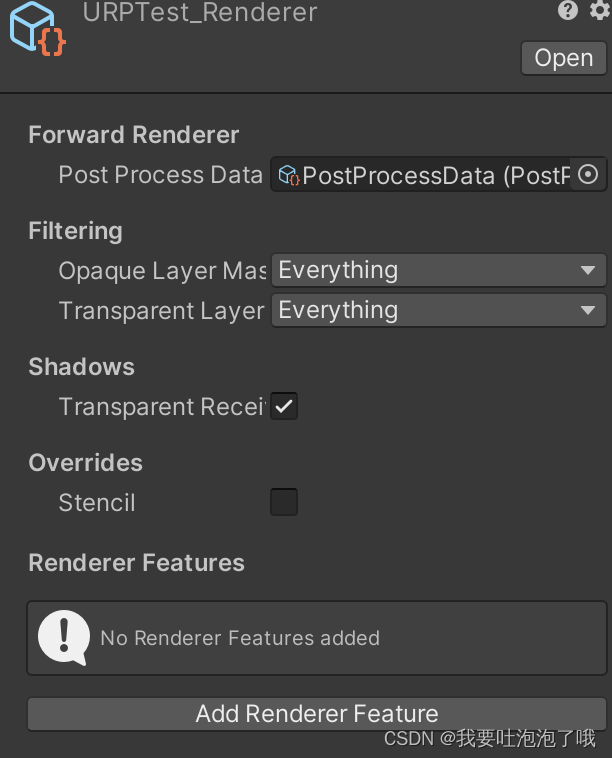

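RenderFeatures are listed in the Inspector of the URPTest_Renderer.asset file. Once the class is written, add it through "Add Renderer Feature":

Create the RenderFeature class file by following the steps shown in the figure:

- A RenderFeature post-processing class must derive from ScriptableRendererFeature; two more classes complete the basic logic:

  - A CustomRenderPass class, derived from ScriptableRenderPass, which contains the core rendering logic.

  - An XXXSettings class, used to pass parameters from the RenderFeature Inspector.

- The overall code structure looks like this: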

using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

public class XXXTest : ScriptableRendererFeature
{
    [System.Serializable]
    public class XXXSettings
    {
        // The point in the rendering flow at which this RendererFeature is inserted
        public RenderPassEvent renderPassEvent = RenderPassEvent.AfterRenderingTransparents;
        // The material used by the effect
        public Material material = null;
    }

    public class CustomRenderPass : ScriptableRenderPass
    {
        // Called before the pass executes; configure render targets, initialize state, and create temporary render textures here
        public override void Configure(CommandBuffer cmd, RenderTextureDescriptor cameraTextureDescriptor){}
        // Post-processing logic and core rendering function, roughly equivalent to OnRenderImage in the built-in pipeline
        public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData){}
        // Called after the camera has finished rendering; release the resources allocated for this pass here
        public override void FrameCleanup(CommandBuffer cmd){}
    }

    public XXXSettings settings = new XXXSettings();
    CustomRenderPass scriptablePass;

    public override void Create(){}
    public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData){}
}

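- Create() performs initialization and can hand the parameters set in the Inspector (settings) over to the CustomRenderPass:

public override void Create()
{
    scriptablePass = new CustomRenderPass();
    // Assumes CustomRenderPass exposes material and Scale fields and XXXSettings declares Scale
    scriptablePass.material = settings.material;
    scriptablePass.renderPassEvent = settings.renderPassEvent;
    scriptablePass.Scale = settings.Scale;
}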

- AddRenderPasses() adds the CustomRenderPass to the queue. It can also hand the camera's color target to the CustomRenderPass, which requires adding a Setup function to the pass.

public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)
{
    scriptablePass.Setup(renderer.cameraColorTarget);
    renderer.EnqueuePass(scriptablePass);
}

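- The core of the render pass is the Execute function, roughly equivalent to OnRenderImage in the built-in pipeline:

public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
{
    // Another way to get the camera color texture
    RenderTargetIdentifier cameraColorTexture = new RenderTargetIdentifier("_CameraColorTexture");
    // material, _Distance, _Width, tmpTex, and passint are members of the pass (not shown here)
    material.SetFloat("_Distance", _Distance);
    material.SetFloat("_Width", _Width);
    // A CommandBuffer collects a list of rendering commands to be executed later
    CommandBuffer cmd = CommandBufferPool.Get();
    // Render with the given shader pass
    cmd.Blit(cameraColorTexture, tmpTex, material,passint);
    // Finally copy the result back to the camera's cameraColorTexture
    cmd.Blit(tmpTex, cameraColorTexture);
    // Execute the commands recorded in the command buffer
    context.ExecuteCommandBuffer(cmd);
    cmd.Clear();
    // Return the command buffer to the pool
    CommandBufferPool.Release(cmd);
}

- Then pair it with the corresponding URP effect shader.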

3.2 Post-processing with Volume

3.2.1 Built-in URP post-processing

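- URP ships with many post-processing effects integrated into the Volume system. To use them, create a Volume component on a GameObject. A Global Volume applies its effects to all cameras; a Box Volume applies only inside a box-shaped region; a Sphere Volume applies only inside a spherical region; a Convex Mesh Volume uses a custom mesh as its region.

- The Volume component exposes the following parameters:

| Name | Purpose |
|---|---|
| Mode | Global: no boundary, affects every camera; Local: has a boundary and only affects cameras inside it |
| Weight | How strongly the Volume influences the scene |
| Priority | When the scene contains several Volumes, URP uses this value to decide which one to use; the Volume with the higher priority wins |
| Profile | The Profile asset that stores the data URP uses to process the Volume |

- A Volume Profile must be created to configure the effects, and the Post Processing option must be enabled on the camera for them to show up.

- The built-in URP effects are: Bloom, Channel Mixer, Chromatic Aberration, Color Adjustments, Color Curves, Depth Of Field, Film Grain, Lens Distortion, Lift Gamma Gain, Motion Blur, Panini Projection, Shadows Midtones Highlights, Split Toning, Tonemapping, Vignette, and White Balance.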
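How Weight and Priority interact can be sketched with a small model in plain C#. It reflects my understanding of the Volume framework rather than its actual code, and it is deliberately simplified: a single float parameter, global volumes only (no blend distance for local volumes), and the hypothetical names `VolumeBlendSketch` and `Blend`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class VolumeBlendSketch
{
    // Start from the default value, visit the volumes from lowest to highest
    // priority, and move the result toward each volume's override by that
    // volume's weight (clamped to 0..1). Higher priorities are applied last.
    public static float Blend(
        float defaultValue,
        IEnumerable<(float Priority, float Weight, float Value)> volumes)
    {
        float result = defaultValue;
        foreach (var v in volumes.OrderBy(v => v.Priority))
        {
            float w = Math.Min(1f, Math.Max(0f, v.Weight));
            result += (v.Value - result) * w;
        }
        return result;
    }
}
```

In this model a volume with weight 1 and the highest priority fully replaces whatever the lower-priority volumes produced, while a weight of 0.5 only moves the value halfway toward its override.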

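3.2.2 Extending Volume post-processing

- To extend post-processing through a Volume, besides the RenderFeature class and RenderPass class used above you also need a VolumeComponent class: two scripts and one shader file in total.

- VolumeComponent class: under com.unity.render-pipelines.universal@7.7.1/Runtime/Overrides you can find the property scripts of every effect that can be added to a Volume profile. Follow their style to define the parameters of the custom effect.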

using System;

namespace UnityEngine.Rendering.Universal
{
    [Serializable, VolumeComponentMenu("My Post-processing/Test")]
    public sealed class Test : VolumeComponent, IPostProcessComponent
    {
        // Only fields declared with the official parameter wrapper types show up in the Inspector
        // Tooltip: the description shown when hovering over the parameter
        [Tooltip("Strength of the bloom filter.")]
        public MinFloatParameter intensity = new MinFloatParameter(0f, 0f);
        public ClampedFloatParameter scatter = new ClampedFloatParameter(0.7f, 0f, 1f);
        public ColorParameter tint = new ColorParameter(Color.white, false, false, true);
        public BoolParameter highQualityFiltering = new BoolParameter(false);
        public TextureParameter dirtTexture = new TextureParameter(null);

        public bool IsActive() => intensity.value > 0f;
        public bool IsTileCompatible() => false;
    }
}

- Example code:

using System;
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

public class XXXTest : ScriptableRendererFeature
{
    [System.Serializable]
    public class XXXSettings
    {
        public RenderPassEvent renderPassEvent = RenderPassEvent.BeforeRenderingPostProcessing;
        public Shader shader; // The post-processing shader
    }

    public XXXSettings settings = new XXXSettings();
    CustomRenderPass scriptablePass;

    public override void Create()
    {
        this.name = "TestPass"; // Name shown in the Inspector
        // Create the pass with the event and shader chosen in the settings
        scriptablePass = new CustomRenderPass(settings.renderPassEvent, settings.shader);
    }

    public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)
    {
        scriptablePass.Setup(renderer.cameraColorTarget); // Hand the camera color target to the pass
        renderer.EnqueuePass(scriptablePass);
    }
}

public class CustomRenderPass : ScriptableRenderPass
{
    static readonly string k_RenderTag = "Test Effects"; // Name given to the command buffer
    static readonly int MainTexId = Shader.PropertyToID("_MainTex"); // Main texture property
    static readonly int TempTargetId = Shader.PropertyToID("_TempTargetColorTint"); // Temporary render target
    Test test; // The custom VolumeComponent
    Material material; // Material used by the effect
    RenderTargetIdentifier cameraColorTexture; // Current render target

    // The constructor name must match the class name
    public CustomRenderPass(RenderPassEvent evt, Shader testshader)
    {
        renderPassEvent = evt; // Where in the frame the pass runs
        var shader = testshader;
        // Report an error if no shader was assigned (== compares, = would assign)
        if (shader == null)
        {
            Debug.LogError("No shader assigned");
            return;
        }
        // Otherwise create the material from the shader
        material = CoreUtils.CreateEngineMaterial(testshader);
    }

    public void Setup(in RenderTargetIdentifier currentTarget)
    {
        this.cameraColorTexture = currentTarget;
    }

    // Post-processing logic and core rendering function, roughly equivalent to OnRenderImage in the built-in pipeline
    public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
    {
        // Make sure the material exists
        if (material == null)
        {
            Debug.LogError("Material initialization failed");
            return;
        }
        // Skip if post-processing is disabled on the camera
        if (!renderingData.cameraData.postProcessEnabled){return;}
        var stack = VolumeManager.instance.stack; // Current volume stack
        test = stack.GetComponent<Test>(); // Fetch our volume component
        if (test == null)
        {
            Debug.LogError("Failed to get the Volume component");
            return;
        }
        var cmd = CommandBufferPool.Get(k_RenderTag); // Get a named command buffer
        Render(cmd, ref renderingData); // Record the rendering commands
        context.ExecuteCommandBuffer(cmd); // Execute them
        CommandBufferPool.Release(cmd); // Return the buffer to the pool
    }

    void Render(CommandBuffer cmd, ref RenderingData renderingData)
    {
        ref var cameraData = ref renderingData.cameraData; // Camera data
        var camera = cameraData.camera;
        var source = cameraColorTexture; // Image rendered by the camera
        int destination = TempTargetId; // Temporary target
        material.SetColor("_Color", test.tint.value); // Color from the volume component
        cmd.SetGlobalTexture(MainTexId, source); // Expose the camera image to the shader
        cmd.GetTemporaryRT(destination, cameraData.camera.scaledPixelWidth, cameraData.camera.scaledPixelHeight, 0, FilterMode.Trilinear, RenderTextureFormat.Default);
        cmd.Blit(source, destination); // Copy the camera image to the temporary target
        cmd.Blit(destination, source, material, 0); // Apply the effect and write back
        cmd.ReleaseTemporaryRT(destination); // Release the temporary target
    }
}
