This post records how a Tanzu Kubernetes Cluster (TKC) exposes a Service externally as type LoadBalancer.

Lab environment

The environment is the same as before: NSX-T provides the networking for vSphere with Tanzu.

| Component | Version | Build |
|---|---|---|
| vSphere | vSphere 7.0 U2a | 17867351 |
| vCenter | VCSA 7.0.2 | 17920168 |
| NSX-T Datacenter | 3.1.2.1.0 | 17975795 |
| Tanzu | 1.3.1 | |

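IP address allocation

| Name | Description | Used in this lab | Notes |
|---|---|---|---|
| Pods | Private IPv4 address pool for workloads in the namespace; provides addresses to pods and TKG VMs | 172.211.0.0/16 | |
| Services | Service IPv4 address pool, used for K8s ClusterIP Services within the namespace | 172.96.0.0/16 | |
| Ingress | Public IPv4 address pool used to expose services outside the Supervisor Cluster, for both Tanzu Kubernetes clusters and the Supervisor Cluster, via K8s LoadBalancer Services, Ingress, and cloud-provider load balancers | 172.80.0.0/16 | |
| Egress | Public IPv4 address pool used for outbound NAT traffic from the Supervisor Cluster | 172.60.0.0/16 | |
| TKG pods | Private IP address pool inside the TKG cluster that provides pod addresses | 10.96.0.0/11 | Assigned by Antrea |
| TKG Services | ClusterIP addresses of Services inside the TKG cluster | 100.64.0.0/16 | Allocated by kube-apiserver from the cluster's service CIDR |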
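The pools in this table should not overlap. Every address that appears later in this post should also fall into exactly one of them. Python's standard `ipaddress` module can check both conditions. The sketch below only restates the CIDRs from the table, and its helper names are illustrative.

```python
import ipaddress
from itertools import combinations

# CIDRs copied from the IP address allocation table
POOLS = {
    "Pods": "172.211.0.0/16",
    "Services": "172.96.0.0/16",
    "Ingress": "172.80.0.0/16",
    "Egress": "172.60.0.0/16",
    "TKG pods": "10.96.0.0/11",
    "TKG Services": "100.64.0.0/16",
}

def overlapping_pairs(pools):
    """Return every pair of pool names whose CIDRs overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in pools.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]

def pool_of(ip, pools=POOLS):
    """Return the name of the pool containing ip, or None if no pool does."""
    addr = ipaddress.ip_address(ip)
    for name, cidr in pools.items():
        if addr in ipaddress.ip_network(cidr):
            return name
    return None

print(overlapping_pairs(POOLS))  # []
# Addresses seen later in this post: LB IP, a pod, the Cluster IP, the API endpoint
for ip in ("172.80.88.7", "10.96.0.4", "100.64.136.105", "172.211.0.71"):
    print(ip, "->", pool_of(ip))
```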

Test YAML used in the TKG cluster

Inside the TKG cluster, the following YAML creates the Deployment and the Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-kubernetes
spec:
  type: LoadBalancer
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: hello-kubernetes
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-kubernetes
spec:
  replicas: 4
  selector:
    matchLabels:
      app: hello-kubernetes
  template:
    metadata:
      labels:
        app: hello-kubernetes
    spec:
      imagePullSecrets:
      - name: harbor-registry-secret
      containers:
      - name: hello-kubernetes
        image: 172.80.88.4/ns-dev/hello-kubernetes:1.5
        ports:
        - containerPort: 8080
        env:
        - name: MESSAGE
          value: I just deployed a PodVM on the Tanzu Kubernetes Cluster!!
```
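The Service finds its pods by matching labels. Two conditions must hold for the wiring to work:

- The Service's `selector` must match the labels in the Deployment's pod template.
- `targetPort` should equal a `containerPort`.

The Python sketch below checks both conditions for the manifest above. It is illustrative only:

- The manifest is transcribed into plain dicts. The flattened `containerPorts` field is a simplification, not the Kubernetes schema.
- Only equality-based selectors are handled.
- `targetPort` is treated as a number, although Kubernetes also accepts a named port.

```python
# The relevant fields of the manifest above, transcribed into plain dicts
service = {
    "type": "LoadBalancer",
    "selector": {"app": "hello-kubernetes"},
    "ports": [{"port": 80, "targetPort": 8080}],
}
deployment = {
    "replicas": 4,
    "selector": {"matchLabels": {"app": "hello-kubernetes"}},
    "template": {
        "labels": {"app": "hello-kubernetes"},
        "containerPorts": [8080],  # simplification of spec.containers[*].ports
    },
}

def selects(selector, labels):
    """Equality-based selector: every key/value pair must appear in labels."""
    return all(labels.get(k) == v for k, v in selector.items())

def check(service, deployment):
    """Return a list of problems; an empty list means the wiring is consistent."""
    problems = []
    tmpl = deployment["template"]
    if not selects(deployment["selector"]["matchLabels"], tmpl["labels"]):
        problems.append("Deployment selector does not match its pod template labels")
    if not selects(service["selector"], tmpl["labels"]):
        problems.append("Service selector does not match the pod labels")
    for p in service["ports"]:
        if p["targetPort"] not in tmpl["containerPorts"]:
            problems.append(f"targetPort {p['targetPort']} is not a containerPort")
    return problems

print(check(service, deployment))  # []
```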

Results

Pods and Service created

```
[root@hop ~]# kubectl get pod -owide
NAME                                READY   STATUS    RESTARTS   AGE   IP          NODE                                           NOMINATED NODE   READINESS GATES
hello-kubernetes-6cd455cc47-7w5jf   1/1     Running   0          39d   10.96.4.4   tkg-cluster-01-workers-vqv9h-dfb456585-lqz4s   <none>           <none>
hello-kubernetes-6cd455cc47-jw666   1/1     Running   0          39d   10.96.0.5   tkg-cluster-01-workers-vqv9h-dfb456585-mg2g7   <none>           <none>
hello-kubernetes-6cd455cc47-q7w4b   1/1     Running   0          39d   10.96.0.4   tkg-cluster-01-workers-vqv9h-dfb456585-mg2g7   <none>           <none>
hello-kubernetes-6cd455cc47-wm4lw   1/1     Running   0          37d   10.96.1.3   tkg-cluster-01-workers-vqv9h-dfb456585-8hmz8   <none>           <none>
test-pd                             1/1     Running   0          27d   10.96.0.6   tkg-cluster-01-workers-vqv9h-dfb456585-mg2g7   <none>           <none>

[root@hop ~]# kubectl get svc
NAME               TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
hello-kubernetes   LoadBalancer   100.64.136.105   172.80.88.7   80:32762/TCP   39d
kubernetes         ClusterIP      100.64.0.1       <none>        443/TCP        64d
supervisor         ClusterIP      None             <none>        6443/TCP       64d
```

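From the output above we can see:

- The external address of the Service is 172.80.88.7.
- The internal Cluster IP of the Service is 100.64.136.105.
- The Service is exposed externally on port 80. 32762 is the NodePort that Kubernetes opens on every node, and the container itself listens on port 8080 (the targetPort).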
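The PORT(S) column packs the Service port and the NodePort into one field, `<port>:<nodePort>/<protocol>`. The targetPort 8080 does not appear there at all. In a script it is more robust to query the field directly, for example with `kubectl get svc hello-kubernetes -o jsonpath='{.spec.ports[0].nodePort}'`. If you only have the table output, a small parser like the Python sketch below can recover the numbers. It is an illustration, not part of any tool.

```python
import re

# LoadBalancer/NodePort entries look like "80:32762/TCP", ClusterIP ones like "443/TCP";
# several ports are separated by commas.
PORT_RE = re.compile(r"^(?P<port>\d+)(?::(?P<node_port>\d+))?/(?P<proto>[A-Z]+)$")

def parse_ports(column):
    """Parse a kubectl PORT(S) cell into a list of dicts."""
    result = []
    for item in column.split(","):
        m = PORT_RE.match(item.strip())
        if not m:
            raise ValueError(f"unexpected PORT(S) entry: {item!r}")
        node_port = m.group("node_port")
        result.append({
            "port": int(m.group("port")),
            "nodePort": int(node_port) if node_port else None,
            "protocol": m.group("proto"),
        })
    return result

print(parse_ports("80:32762/TCP"))  # [{'port': 80, 'nodePort': 32762, 'protocol': 'TCP'}]
print(parse_ports("443/TCP"))       # [{'port': 443, 'nodePort': None, 'protocol': 'TCP'}]
```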

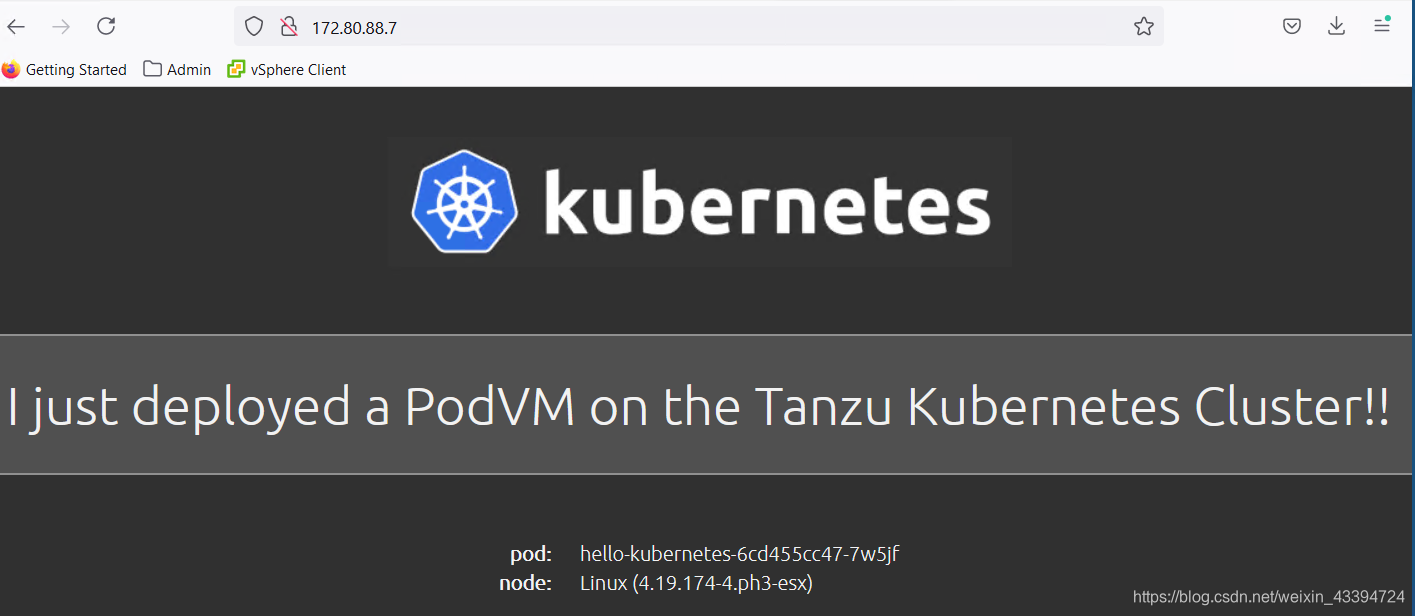

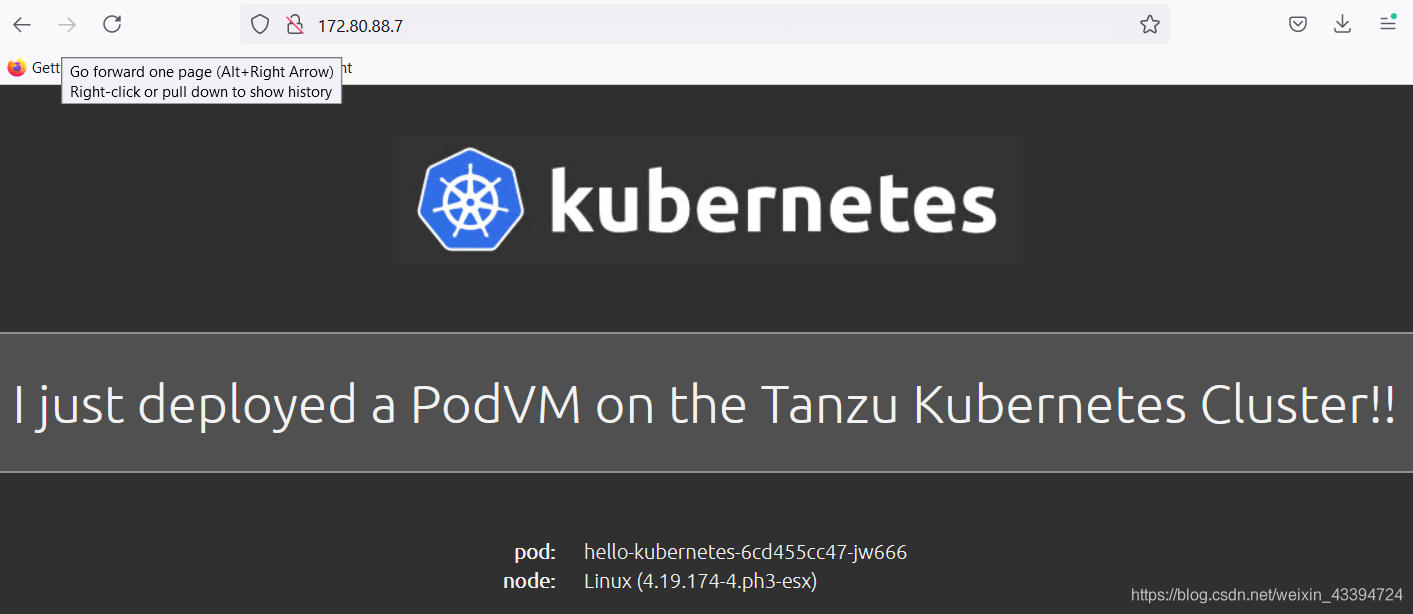

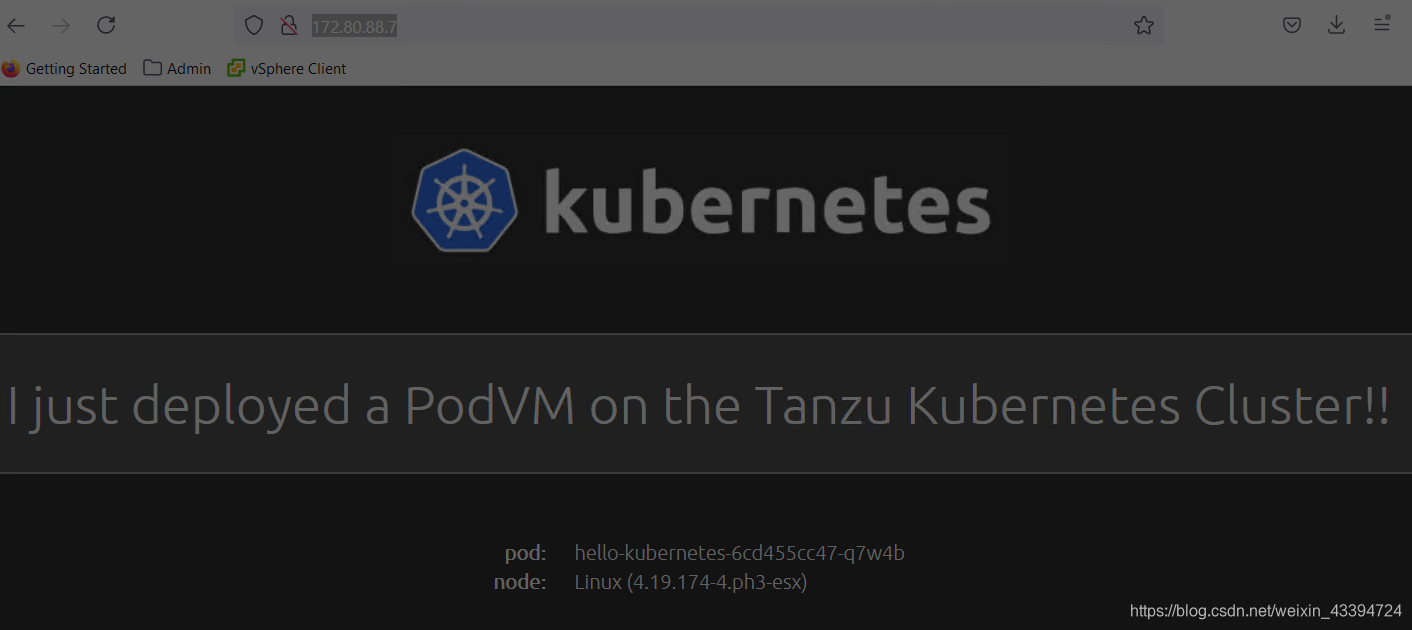

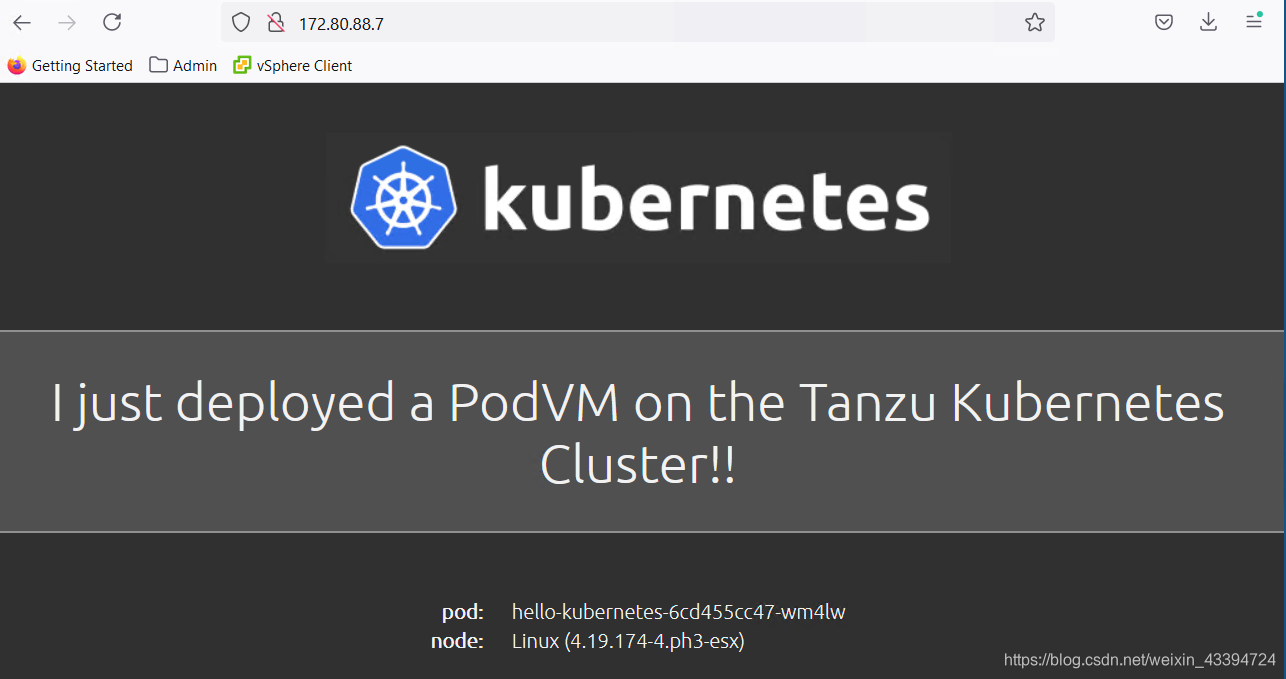

Accessing the Service from an external browser

By refreshing the page, we reached all four pods!

Observing the LB in NSX-T

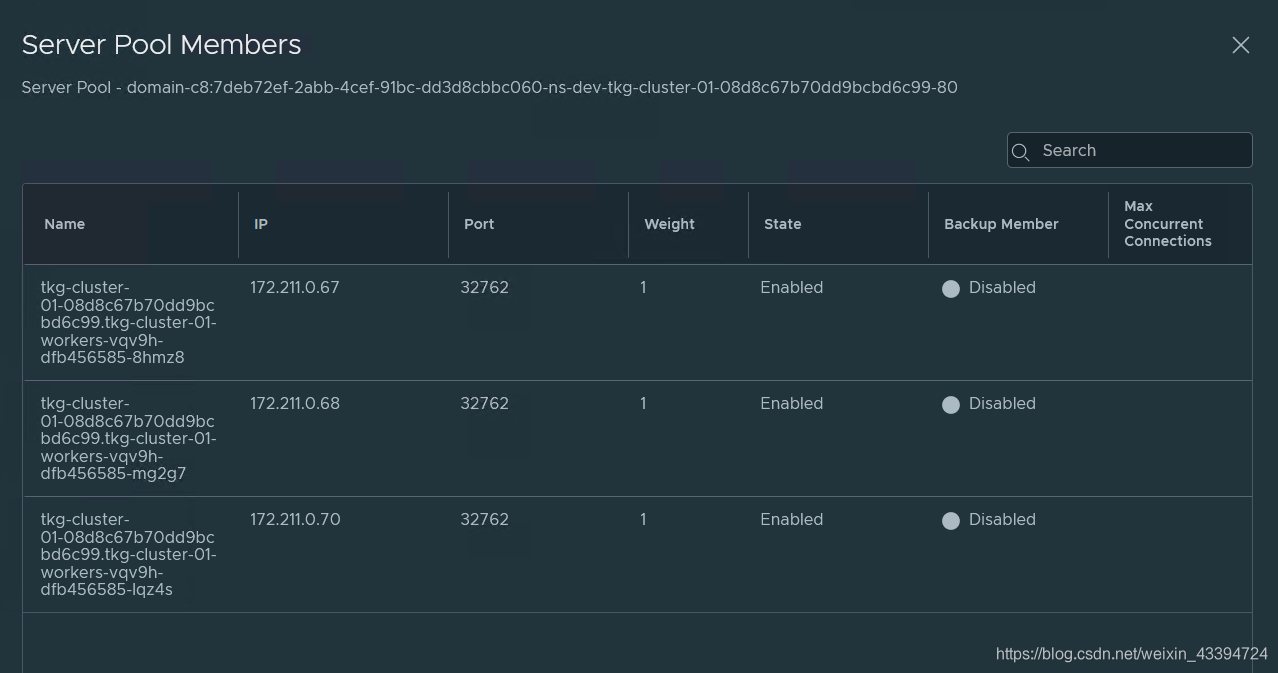

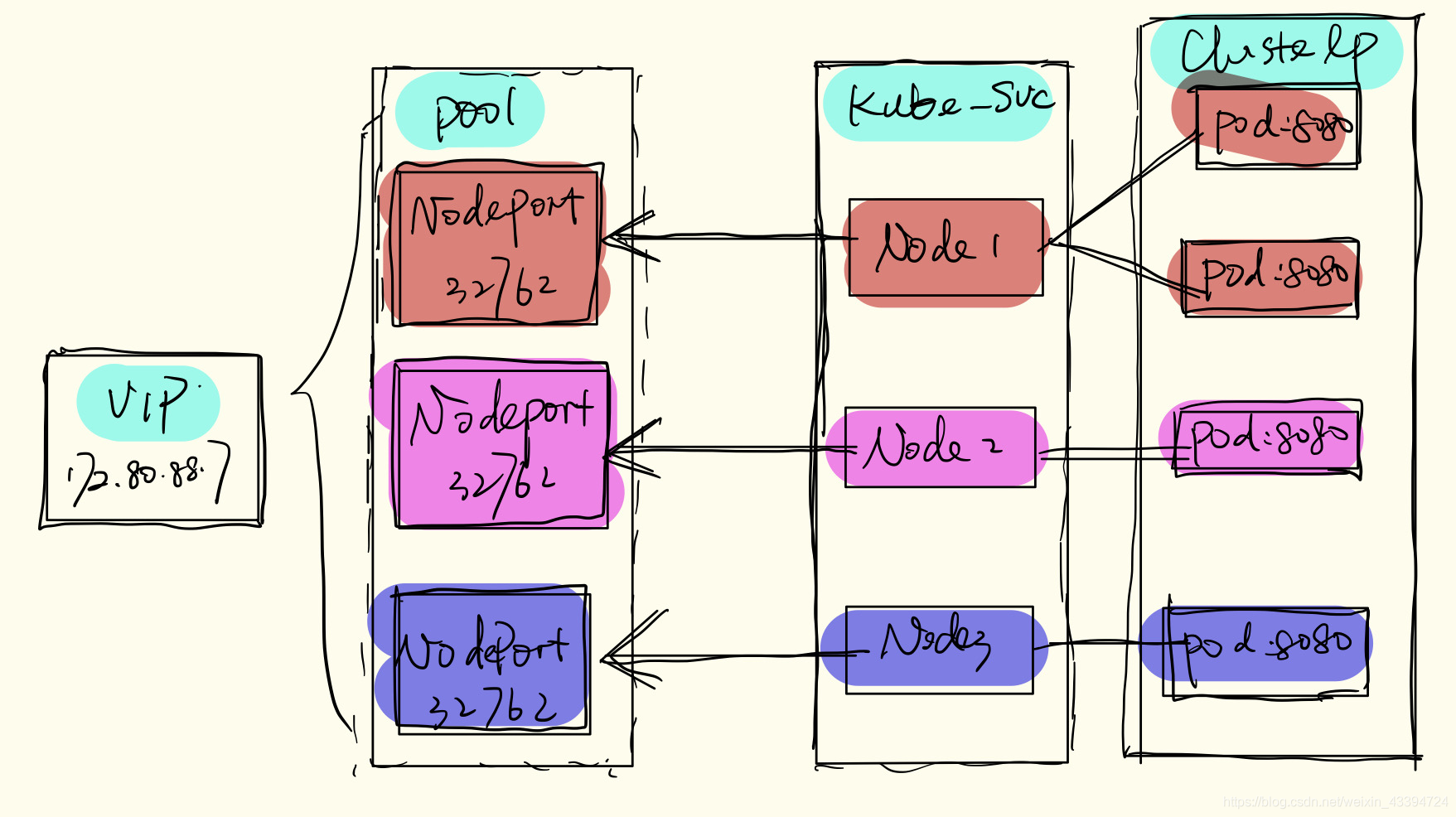

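The screenshots above show:

- From NSX-T's point of view, the service is offered at 172.80.88.7:80.
- NSX-T creates a pool for the LB whose members are the TKG node VMs, each addressed on the NodePort (32762).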
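Load balancing therefore happens in two stages:

1. NSX-T picks a node VM from the pool.
2. kube-proxy on that node picks a pod.

The Service does not set `externalTrafficPolicy`, so it defaults to `Cluster`, and the chosen pod may run on a different node. The simulation below sketches this behaviour. The node IP addresses are hypothetical placeholders. Modelling NSX-T's member selection as uniform random is also an assumption, because the pool's actual algorithm is not shown in this post.

```python
import random
from collections import Counter

# HYPOTHETICAL node VM addresses: the real ones come from the namespace
# "Pods" pool (172.211.0.0/16) and are not listed in this post.
NODE_IPS = ["172.211.0.10", "172.211.0.11", "172.211.0.12"]
NODE_PORT = 32762     # from `kubectl get svc`
TARGET_PORT = 8080    # containerPort / targetPort from the YAML
# hello-kubernetes pod IPs from `kubectl get pod -owide`
POD_IPS = ["10.96.4.4", "10.96.0.5", "10.96.0.4", "10.96.1.3"]

def pool_members(node_ips, node_port):
    """Members of the NSX-T pool behind 172.80.88.7:80: every node on the NodePort."""
    return [(ip, node_port) for ip in node_ips]

def forward(rng, members):
    """One new connection.
    Stage 1 (NSX-T): pick a pool member; uniform random is an assumption here.
    Stage 2 (kube-proxy on that node): pick any endpoint with equal probability,
    which may be a pod on another node (externalTrafficPolicy: Cluster)."""
    node = rng.choice(members)
    pod = (rng.choice(POD_IPS), TARGET_PORT)
    return node, pod

rng = random.Random(42)
members = pool_members(NODE_IPS, NODE_PORT)
hits = Counter(forward(rng, members)[1][0] for _ in range(10_000))
print(members)
print(sorted(hits))  # all four pod IPs receive traffic
```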

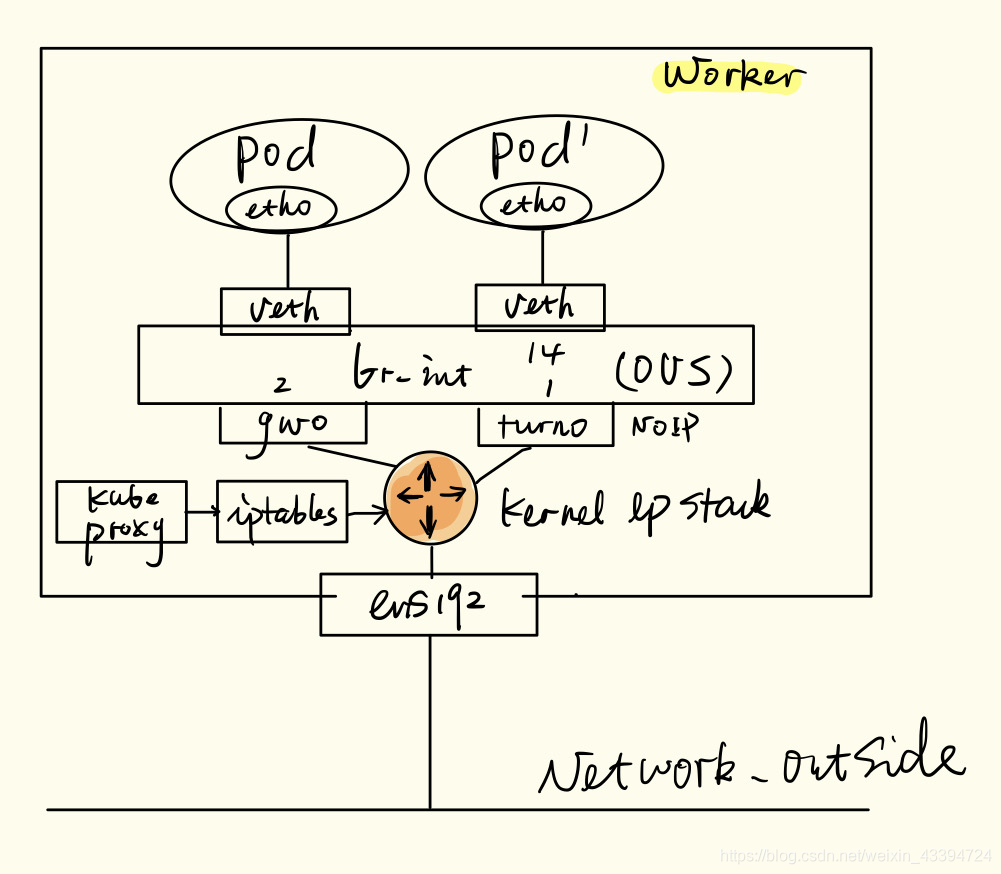

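How the Service reaches the pods

The pods are what actually serve the requests, and their networking is provided by Antrea.

Inspecting OVS

Pick the antrea-agent pod on one of the node VMs and show its OVS bridge br-int: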

```
[root@hop ~]# kubectl exec -n kube-system -it antrea-agent-62ts6 -c antrea-ovs -- ovs-ofctl show br-int
OFPT_FEATURES_REPLY (xid=0x2): dpid:00006e2c7246ef4b
n_tables:254, n_buffers:0
capabilities: FLOW_STATS TABLE_STATS PORT_STATS QUEUE_STATS ARP_MATCH_IP
actions: output enqueue set_vlan_vid set_vlan_pcp strip_vlan mod_dl_src mod_dl_dst mod_nw_src mod_nw_dst mod_nw_tos mod_tp_src mod_tp_dst
 1(antrea-tun0): addr:1a:d6:b5:98:43:23
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 2(antrea-gw0): addr:ae:d8:c3:ae:8a:a9
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 3(coredns--1de91c): addr:8e:35:ca:da:10:83
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 4(vsphere--94f0df): addr:2e:8c:0c:e7:1c:fd
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 5(hello-ku-0f40e6): addr:ba:75:2e:fd:9f:70
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 6(hello-ku-102fde): addr:4a:4d:fe:f7:3f:0d
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 7(test-pd-0ec60a): addr:b6:df:fe:3a:d6:62
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
OFPT_GET_CONFIG_REPLY (xid=0x4): frags=normal miss_send_len=0
```

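The output clearly shows which ports are attached to OVS:

- Port 1 is antrea-tun0, the tunnel port.
- Port 2 is antrea-gw0, the gateway to the node's host network.
- Ports 5 and 6 belong to the two hello-kubernetes pods on this node.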
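To map OVS port numbers to pods programmatically, one can parse the `<ofport>(<name>): addr:<mac>` lines of `ovs-ofctl show`. The sketch below does this on the port lines copied from the output above, and its helper names are illustrative. Grouping ports by name prefix relies on an observation from this output: each interface name starts with the first 8 characters of the pod name, as in `hello-ku-0f40e6`. That is an assumption about Antrea's naming, not a documented guarantee.

```python
import re

# Port lines copied from the `ovs-ofctl show br-int` output above
OFCTL_SHOW = """\
 1(antrea-tun0): addr:1a:d6:b5:98:43:23
 2(antrea-gw0): addr:ae:d8:c3:ae:8a:a9
 3(coredns--1de91c): addr:8e:35:ca:da:10:83
 4(vsphere--94f0df): addr:2e:8c:0c:e7:1c:fd
 5(hello-ku-0f40e6): addr:ba:75:2e:fd:9f:70
 6(hello-ku-102fde): addr:4a:4d:fe:f7:3f:0d
 7(test-pd-0ec60a): addr:b6:df:fe:3a:d6:62
"""

# "<ofport>(<interface name>): addr:<mac>"
PORT_LINE = re.compile(r"^\s*(\d+)\(([^)]+)\): addr:([0-9a-f:]{17})$")

def ofports(text):
    """Return {ofport number: interface name} for every port line in text."""
    ports = {}
    for line in text.splitlines():
        m = PORT_LINE.match(line)
        if m:
            ports[int(m.group(1))] = m.group(2)
    return ports

def ports_with_prefix(ports, prefix):
    """Port numbers whose interface name starts with prefix."""
    return sorted(n for n, name in ports.items() if name.startswith(prefix))

ports = ofports(OFCTL_SHOW)
print(ports[1], ports[2])                    # antrea-tun0 antrea-gw0
print(ports_with_prefix(ports, "hello-ku"))  # [5, 6]
```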

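Inspecting the iptables rules

In this cluster, Service forwarding is implemented by kube-proxy in iptables mode, while Antrea provides the pod network. We therefore look at the iptables NAT table on a node. For how to log in to a TKG node, see How to SSH to the Tanzu Kubernetes Cluster Nodes.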

```
vmware-system-user@tkg-cluster-01-workers-vqv9h-dfb456585-mg2g7 [ ~ ]$ sudo iptables -t nat -L -n
Chain PREROUTING (policy ACCEPT)
target prot opt source destination
KUBE-SERVICES all -- 0.0.0.0/0 0.0.0.0/0 /* kubernetes service portals */

Chain INPUT (policy ACCEPT)
target prot opt source destination

Chain OUTPUT (policy ACCEPT)
target prot opt source destination
KUBE-SERVICES all -- 0.0.0.0/0 0.0.0.0/0 /* kubernetes service portals */

Chain POSTROUTING (policy ACCEPT)
target prot opt source destination
KUBE-POSTROUTING all -- 0.0.0.0/0 0.0.0.0/0 /* kubernetes postrouting rules */
ANTREA-POSTROUTING all -- 0.0.0.0/0 0.0.0.0/0 /* Antrea: jump to Antrea postrouting rules */

Chain ANTREA-POSTROUTING (1 references)
target prot opt source destination
MASQUERADE all -- 10.96.0.0/24 0.0.0.0/0 /* Antrea: masquerade pod to external packets */ ! match-set ANTREA-POD-IP dst

Chain KUBE-FW-XM7DIBVJQPJ3TSDT (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes loadbalancer IP */
KUBE-SVC-XM7DIBVJQPJ3TSDT all -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes loadbalancer IP */
KUBE-MARK-DROP all -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes loadbalancer IP */

Chain KUBE-KUBELET-CANARY (0 references)
target prot opt source destination

Chain KUBE-MARK-DROP (1 references)
target prot opt source destination
MARK all -- 0.0.0.0/0 0.0.0.0/0 MARK or 0x8000

Chain KUBE-MARK-MASQ (24 references)
target prot opt source destination
MARK all -- 0.0.0.0/0 0.0.0.0/0 MARK or 0x4000

Chain KUBE-NODEPORTS (1 references)
target prot opt source destination
KUBE-MARK-MASQ tcp -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */ tcp dpt:32762
KUBE-SVC-XM7DIBVJQPJ3TSDT tcp -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */ tcp dpt:32762

Chain KUBE-POSTROUTING (1 references)
target prot opt source destination
RETURN all -- 0.0.0.0/0 0.0.0.0/0 mark match ! 0x4000/0x4000
MARK all -- 0.0.0.0/0 0.0.0.0/0 MARK xor 0x4000
MASQUERADE all -- 0.0.0.0/0 0.0.0.0/0 /* kubernetes service traffic requiring SNAT */ random-fully

Chain KUBE-PROXY-CANARY (0 references)
target prot opt source destination

Chain KUBE-SEP-4AOHSCTDEETDC334 (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.4.3 0.0.0.0/0 /* kube-system/kube-dns:dns */
DNAT udp -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */ udp to:10.96.4.3:53

Chain KUBE-SEP-7MGGW6LIQYM2AEZM (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.0.2 0.0.0.0/0 /* kube-system/kube-dns:dns */
DNAT udp -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */ udp to:10.96.0.2:53

Chain KUBE-SEP-BAEMDSCADA7MFLF6 (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 172.211.0.71 0.0.0.0/0 /* default/kubernetes:https */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* default/kubernetes:https */ tcp to:172.211.0.71:6443

Chain KUBE-SEP-C5JUJ7G3IEKY63Q2 (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.4.3 0.0.0.0/0 /* kube-system/kube-dns:dns-tcp */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns-tcp */ tcp to:10.96.4.3:53

Chain KUBE-SEP-H4R2CT6HM6EFNIJJ (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.0.5 0.0.0.0/0 /* default/hello-kubernetes */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */ tcp to:10.96.0.5:8080

Chain KUBE-SEP-HU5MDV4BUZFHSUJC (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.0.4 0.0.0.0/0 /* default/hello-kubernetes */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */ tcp to:10.96.0.4:8080

Chain KUBE-SEP-KPJ3BBKAZPHMYFTG (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.0.2 0.0.0.0/0 /* kube-system/kube-dns:metrics */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:metrics */ tcp to:10.96.0.2:9153

Chain KUBE-SEP-LIVJA523RKC6LSVI (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.5.4 0.0.0.0/0 /* vmware-system-csi/vsphere-csi-controller:syncer */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* vmware-system-csi/vsphere-csi-controller:syncer */ tcp to:10.96.5.4:2113

Chain KUBE-SEP-MH6Q7TDG4GD3GZT6 (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 172.211.0.67 0.0.0.0/0 /* kube-system/antrea */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/antrea */ tcp to:172.211.0.67:10349

Chain KUBE-SEP-N55QOV3APYFANXKA (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.5.4 0.0.0.0/0 /* vmware-system-csi/vsphere-csi-controller:ctlr */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* vmware-system-csi/vsphere-csi-controller:ctlr */ tcp to:10.96.5.4:2112

Chain KUBE-SEP-N7SD7XOQOSKLNZUL (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.4.3 0.0.0.0/0 /* kube-system/kube-dns:metrics */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:metrics */ tcp to:10.96.4.3:9153

Chain KUBE-SEP-NR26ZMIMIRVTQMOT (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.1.3 0.0.0.0/0 /* default/hello-kubernetes */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */ tcp to:10.96.1.3:8080

Chain KUBE-SEP-TJMM3PWXZKCELOTO (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.0.2 0.0.0.0/0 /* kube-system/kube-dns:dns-tcp */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns-tcp */ tcp to:10.96.0.2:53

Chain KUBE-SEP-WBYNSHOG7M44Q3HZ (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.96.4.4 0.0.0.0/0 /* default/hello-kubernetes */
DNAT tcp -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */ tcp to:10.96.4.4:8080

Chain KUBE-SERVICES (2 references)
target prot opt source destination
KUBE-MARK-MASQ tcp -- !10.96.0.0/11 100.64.0.10 /* kube-system/kube-dns:dns-tcp cluster IP */ tcp dpt:53
KUBE-SVC-ERIFXISQEP7F7OF4 tcp -- 0.0.0.0/0 100.64.0.10 /* kube-system/kube-dns:dns-tcp cluster IP */ tcp dpt:53
KUBE-MARK-MASQ tcp -- !10.96.0.0/11 100.64.0.10 /* kube-system/kube-dns:metrics cluster IP */ tcp dpt:9153
KUBE-SVC-JD5MR3NA4I4DYORP tcp -- 0.0.0.0/0 100.64.0.10 /* kube-system/kube-dns:metrics cluster IP */ tcp dpt:9153
KUBE-MARK-MASQ tcp -- !10.96.0.0/11 100.71.165.12 /* vmware-system-csi/vsphere-csi-controller:ctlr cluster IP */ tcp dpt:211
KUBE-SVC-MRLSHM6RP645F575 tcp -- 0.0.0.0/0 100.71.165.12 /* vmware-system-csi/vsphere-csi-controller:ctlr cluster IP */
KUBE-MARK-MASQ tcp -- !10.96.0.0/11 100.71.165.12 /* vmware-system-csi/vsphere-csi-controller:syncer cluster IP */ tcp dpt:2
KUBE-SVC-AAQCXBCVUR2XJIMS tcp -- 0.0.0.0/0 100.71.165.12 /* vmware-system-csi/vsphere-csi-controller:syncer cluster IP *
KUBE-MARK-MASQ tcp -- !10.96.0.0/11 100.64.136.105 /* default/hello-kubernetes cluster IP */ tcp dpt:80
KUBE-SVC-XM7DIBVJQPJ3TSDT tcp -- 0.0.0.0/0 100.64.136.105 /* default/hello-kubernetes cluster IP */ tcp dpt:80
KUBE-FW-XM7DIBVJQPJ3TSDT tcp -- 0.0.0.0/0 172.80.88.7 /* default/hello-kubernetes loadbalancer IP */ tcp dpt:80
KUBE-MARK-MASQ tcp -- !10.96.0.0/11 100.64.0.1 /* default/kubernetes:https cluster IP */ tcp dpt:443
KUBE-SVC-NPX46M4PTMTKRN6Y tcp -- 0.0.0.0/0 100.64.0.1 /* default/kubernetes:https cluster IP */ tcp dpt:443
KUBE-MARK-MASQ tcp -- !10.96.0.0/11 100.65.211.79 /* kube-system/antrea cluster IP */ tcp dpt:443
KUBE-SVC-QDWG4LJGNBTOT5ED tcp -- 0.0.0.0/0 100.65.211.79 /* kube-system/antrea cluster IP */ tcp dpt:443
KUBE-MARK-MASQ udp -- !10.96.0.0/11 100.64.0.10 /* kube-system/kube-dns:dns cluster IP */ udp dpt:53
KUBE-SVC-TCOU7JCQXEZGVUNU udp -- 0.0.0.0/0 100.64.0.10 /* kube-system/kube-dns:dns cluster IP */ udp dpt:53
KUBE-NODEPORTS all -- 0.0.0.0/0 0.0.0.0/0 /* kubernetes service nodeports; NOTE: this must be the last rule in this

Chain KUBE-SVC-AAQCXBCVUR2XJIMS (1 references)
target prot opt source destination
KUBE-SEP-LIVJA523RKC6LSVI all -- 0.0.0.0/0 0.0.0.0/0 /* vmware-system-csi/vsphere-csi-controller:syncer */

Chain KUBE-SVC-ERIFXISQEP7F7OF4 (1 references)
target prot opt source destination
KUBE-SEP-TJMM3PWXZKCELOTO all -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns-tcp */ statistic mode random probab
KUBE-SEP-C5JUJ7G3IEKY63Q2 all -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns-tcp */

Chain KUBE-SVC-JD5MR3NA4I4DYORP (1 references)
target prot opt source destination
KUBE-SEP-KPJ3BBKAZPHMYFTG all -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:metrics */ statistic mode random probab
KUBE-SEP-N7SD7XOQOSKLNZUL all -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:metrics */

Chain KUBE-SVC-MRLSHM6RP645F575 (1 references)
target prot opt source destination
KUBE-SEP-N55QOV3APYFANXKA all -- 0.0.0.0/0 0.0.0.0/0 /* vmware-system-csi/vsphere-csi-controller:ctlr */

Chain KUBE-SVC-NPX46M4PTMTKRN6Y (1 references)
target prot opt source destination
KUBE-SEP-BAEMDSCADA7MFLF6 all -- 0.0.0.0/0 0.0.0.0/0 /* default/kubernetes:https */

Chain KUBE-SVC-QDWG4LJGNBTOT5ED (1 references)
target prot opt source destination
KUBE-SEP-MH6Q7TDG4GD3GZT6 all -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/antrea */

Chain KUBE-SVC-TCOU7JCQXEZGVUNU (1 references)
target prot opt source destination
KUBE-SEP-7MGGW6LIQYM2AEZM all -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */ statistic mode random probabilit
KUBE-SEP-4AOHSCTDEETDC334 all -- 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */

Chain KUBE-SVC-XM7DIBVJQPJ3TSDT (3 references)
target prot opt source destination
KUBE-SEP-HU5MDV4BUZFHSUJC all -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */ statistic mode random probabilit
KUBE-SEP-H4R2CT6HM6EFNIJJ all -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */ statistic mode random probabilit
KUBE-SEP-NR26ZMIMIRVTQMOT all -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */ statistic mode random probabilit
KUBE-SEP-WBYNSHOG7M44Q3HZ all -- 0.0.0.0/0 0.0.0.0/0 /* default/hello-kubernetes */
```
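KUBE-MARK-MASQ does not change any addresses by itself. It only ORs 0x4000 into the packet mark. KUBE-POSTROUTING later masquerades (SNATs) packets that carry that bit, after clearing the bit again. The three KUBE-POSTROUTING rules can be restated as the Python sketch below. It models only the mark arithmetic, and the function names are illustrative.

```python
MASQ_BIT = 0x4000  # KUBE-MARK-MASQ:  MARK or 0x4000
DROP_BIT = 0x8000  # KUBE-MARK-DROP:  MARK or 0x8000

def kube_mark_masq(mark):
    """Set the masquerade bit, as the KUBE-MARK-MASQ chain does."""
    return mark | MASQ_BIT

def kube_postrouting(mark):
    """Return (new_mark, masquerade?) following the three KUBE-POSTROUTING rules."""
    if (mark & MASQ_BIT) != MASQ_BIT:  # RETURN  mark match ! 0x4000/0x4000
        return mark, False
    mark ^= MASQ_BIT                   # MARK xor 0x4000: clear the bit
    return mark, True                  # MASQUERADE ... random-fully

print(kube_postrouting(0))                  # (0, False)
print(kube_postrouting(kube_mark_masq(0)))  # (0, True)
```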

Let's pull out the entries from the output above that relate to hello-kubernetes:

- Chain KUBE-SVC-XM7DIBVJQPJ3TSDT (3 references: from KUBE-SERVICES, KUBE-FW-XM7DIBVJQPJ3TSDT and KUBE-NODEPORTS). This chain is identical in the iptables rules of every node VM.

| target | prot | opt | source | destination |
|---|---|---|---|---|
| KUBE-SEP-HU5MDV4BUZFHSUJC | all | -- | 0.0.0.0/0 | 0.0.0.0/0 |
| KUBE-SEP-H4R2CT6HM6EFNIJJ | all | -- | 0.0.0.0/0 | 0.0.0.0/0 |
| KUBE-SEP-NR26ZMIMIRVTQMOT | all | -- | 0.0.0.0/0 | 0.0.0.0/0 |
| KUBE-SEP-WBYNSHOG7M44Q3HZ | all | -- | 0.0.0.0/0 | 0.0.0.0/0 |

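The Service chain KUBE-SVC-XM7DIBVJQPJ3TSDT contains 4 targets, one per pod. The first three rules carry a `statistic mode random probability` match, whose values are cut off in the output above. The last rule has no such match, so it takes every connection that the earlier rules did not pick.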
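As far as I know, kube-proxy's iptables mode gives rule i (counting from 0) of an n-endpoint chain the probability 1/(n-i) and leaves the last rule unconditional. Each rule therefore chooses only among the endpoints that have not yet been skipped. The exact arithmetic below, using Python's `fractions` module, shows that for the 4 endpoints here this cascade gives every pod an equal 1/4 share of new connections. Treat the per-rule probabilities as an assumption about kube-proxy, not as values read from this cluster.

```python
from fractions import Fraction

def cascade_probabilities(n):
    """Per-rule match probability for n endpoints: 1/(n - i) for rule i,
    with the last rule matching unconditionally (probability 1)."""
    return [Fraction(1, n - i) for i in range(n - 1)] + [Fraction(1)]

def effective_share(probs):
    """Probability that a new connection ends up at each endpoint."""
    shares, remaining = [], Fraction(1)
    for p in probs:
        shares.append(remaining * p)  # reach this rule, then match it
        remaining *= 1 - p            # reach this rule, then fall through
    return shares

probs = cascade_probabilities(4)
print([str(p) for p in probs])                   # ['1/4', '1/3', '1/2', '1']
print([str(s) for s in effective_share(probs)])  # ['1/4', '1/4', '1/4', '1/4']
```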

Analyzing the first target, Chain KUBE-SEP-HU5MDV4BUZFHSUJC (1 references):

| target | prot | opt | source | destination | match / comment |
|---|---|---|---|---|---|
| KUBE-MARK-MASQ | all | -- | 10.96.0.4 | 0.0.0.0/0 | `/* default/hello-kubernetes */` |
| DNAT | tcp | -- | 0.0.0.0/0 | 0.0.0.0/0 | `/* default/hello-kubernetes */ tcp to:10.96.0.4:8080` |

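This target finally points to the pod that serves the request and its port, 10.96.0.4:8080. The KUBE-MARK-MASQ rule that matches source 10.96.0.4 covers hairpin traffic, where a pod reaches itself through the Service.

In the same way we find 10.96.0.5:8080. Both of these pods run on this node (mg2g7). The other two endpoints, 10.96.1.3 and 10.96.4.4, run on other nodes.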
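The KUBE-SVC chain lists all four endpoints on every node, so a connection arriving at this node may be DNATed to a pod on another node. Such traffic presumably leaves through the antrea-tun0 tunnel port, while local pods are reached through OVS ports 5 and 6. The sketch below splits the endpoints into local and remote using the node's pod subnet. This post does not state that subnet directly: it is inferred from the ANTREA-POSTROUTING MASQUERADE rule on this node (source 10.96.0.0/24), not read from the node object.

```python
import ipaddress

# hello-kubernetes endpoints and their nodes, from `kubectl get pod -owide`
PODS = {
    "10.96.4.4": "tkg-cluster-01-workers-vqv9h-dfb456585-lqz4s",
    "10.96.0.5": "tkg-cluster-01-workers-vqv9h-dfb456585-mg2g7",
    "10.96.0.4": "tkg-cluster-01-workers-vqv9h-dfb456585-mg2g7",
    "10.96.1.3": "tkg-cluster-01-workers-vqv9h-dfb456585-8hmz8",
}
# Inferred pod subnet of node mg2g7 (source of the ANTREA-POSTROUTING MASQUERADE rule)
LOCAL_POD_SUBNET = ipaddress.ip_network("10.96.0.0/24")

def split_endpoints(pods, local_subnet):
    """Return (local, remote) endpoint IPs relative to the node owning local_subnet."""
    local, remote = [], []
    for ip in pods:
        (local if ipaddress.ip_address(ip) in local_subnet else remote).append(ip)
    return sorted(local), sorted(remote)

local, remote = split_endpoints(PODS, LOCAL_POD_SUBNET)
print(local)   # ['10.96.0.4', '10.96.0.5']
print(remote)  # ['10.96.1.3', '10.96.4.4']
```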

- Cluster IP (rules in Chain KUBE-SERVICES)

| target | prot | opt | source | destination | match / comment |
|---|---|---|---|---|---|
| KUBE-MARK-MASQ | tcp | -- | !10.96.0.0/11 | 100.64.136.105 | `/* default/hello-kubernetes cluster IP */ tcp dpt:80` |
| KUBE-SVC-XM7DIBVJQPJ3TSDT | tcp | -- | 0.0.0.0/0 | 100.64.136.105 | `/* default/hello-kubernetes cluster IP */ tcp dpt:80` |
| KUBE-FW-XM7DIBVJQPJ3TSDT | tcp | -- | 0.0.0.0/0 | 172.80.88.7 | `/* default/hello-kubernetes loadbalancer IP */ tcp dpt:80` |

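This table gives the Cluster IP and the external IP together with their targets:

- Traffic to the Cluster IP 100.64.136.105:80 jumps directly to KUBE-SVC-XM7DIBVJQPJ3TSDT. The KUBE-MARK-MASQ rule in front of it applies only to sources outside the pod CIDR 10.96.0.0/11.
- Traffic to the load balancer IP 172.80.88.7:80 jumps to KUBE-FW-XM7DIBVJQPJ3TSDT. That chain marks it for masquerade and then jumps to the same KUBE-SVC chain.

None of these rules names a pod. The pod is chosen only inside KUBE-SVC-XM7DIBVJQPJ3TSDT, so all four pods take part in serving the Service. This matches what we saw in the web front end, where all four pods showed up.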

- NodePort

Chain KUBE-NODEPORTS (1 references)

| target | prot | opt | source | destination | match / comment |
|---|---|---|---|---|---|
| KUBE-MARK-MASQ | tcp | -- | 0.0.0.0/0 | 0.0.0.0/0 | `/* default/hello-kubernetes */ tcp dpt:32762` |
| KUBE-SVC-XM7DIBVJQPJ3TSDT | tcp | -- | 0.0.0.0/0 | 0.0.0.0/0 | `/* default/hello-kubernetes */ tcp dpt:32762` |

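This table links the NodePort 32762, which is the port the NSX-T pool members use, to the same target chain KUBE-SVC-XM7DIBVJQPJ3TSDT.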
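All three entry points (Cluster IP, load balancer IP and NodePort) converge on KUBE-SVC-XM7DIBVJQPJ3TSDT. The sketch below walks only the hello-kubernetes rules shown above for a TCP packet. It reports the chains visited and whether the packet was marked for masquerade. It is a simplification, not kube-proxy's logic:

- The real jump to KUBE-NODEPORTS also requires the destination to be a local address of the node. That part of the rule is cut off in the dump, and the `local_node_ips` parameter stands in for it.
- The node IP and client IP used in the example are hypothetical.

```python
import ipaddress

POD_CIDR = ipaddress.ip_network("10.96.0.0/11")  # the "!10.96.0.0/11" source match
CLUSTER_IP, LB_IP = "100.64.136.105", "172.80.88.7"
SVC_PORT, NODE_PORT = 80, 32762
SVC_CHAIN = "KUBE-SVC-XM7DIBVJQPJ3TSDT"
FW_CHAIN = "KUBE-FW-XM7DIBVJQPJ3TSDT"

def walk(src, dst, dport, local_node_ips=()):
    """Return (chains visited, marked for masquerade?) for a TCP packet,
    following only the hello-kubernetes rules from the nat table above."""
    path = ["KUBE-SERVICES"]
    if dst == CLUSTER_IP and dport == SVC_PORT:
        # KUBE-MARK-MASQ only when the source is outside the pod CIDR
        masq = ipaddress.ip_address(src) not in POD_CIDR
    elif dst == LB_IP and dport == SVC_PORT:
        path.append(FW_CHAIN)          # KUBE-FW marks every packet, then jumps on
        masq = True
    elif dst in local_node_ips and dport == NODE_PORT:
        path.append("KUBE-NODEPORTS")  # reached via the last rule of KUBE-SERVICES
        masq = True                    # KUBE-NODEPORTS marks every packet
    else:
        return path, False             # not hello-kubernetes traffic
    path.append(SVC_CHAIN)             # all three entry points end up here
    return path, masq

node_ip = "172.211.0.10"   # HYPOTHETICAL node address
client = "192.168.1.20"    # HYPOTHETICAL external client
for src, dst, port in [("10.96.0.6", CLUSTER_IP, 80), (client, LB_IP, 80),
                       (client, node_ip, NODE_PORT)]:
    print(src, "->", f"{dst}:{port}", walk(src, dst, port, local_node_ips={node_ip}))
```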

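Looking back at the Service:

```
[root@hop ~]# kubectl get svc
NAME               TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
hello-kubernetes   LoadBalancer   100.64.136.105   172.80.88.7   80:32762/TCP   39d
```

Each of these fields corresponds to entries in the iptables rules:

- CLUSTER-IP 100.64.136.105 and EXTERNAL-IP 172.80.88.7 appear in KUBE-SERVICES.
- Port 80 appears in KUBE-SERVICES.
- NodePort 32762 appears in KUBE-NODEPORTS.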

That's it.