A simple K8s cluster was set up in a local test environment, with one master node and one worker node.

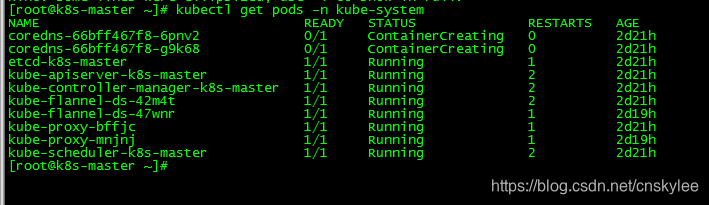

When checking the pod status, I found that both coredns pod instances were stuck in the ContainerCreating state, while the flannel pods were running normally, as shown below:

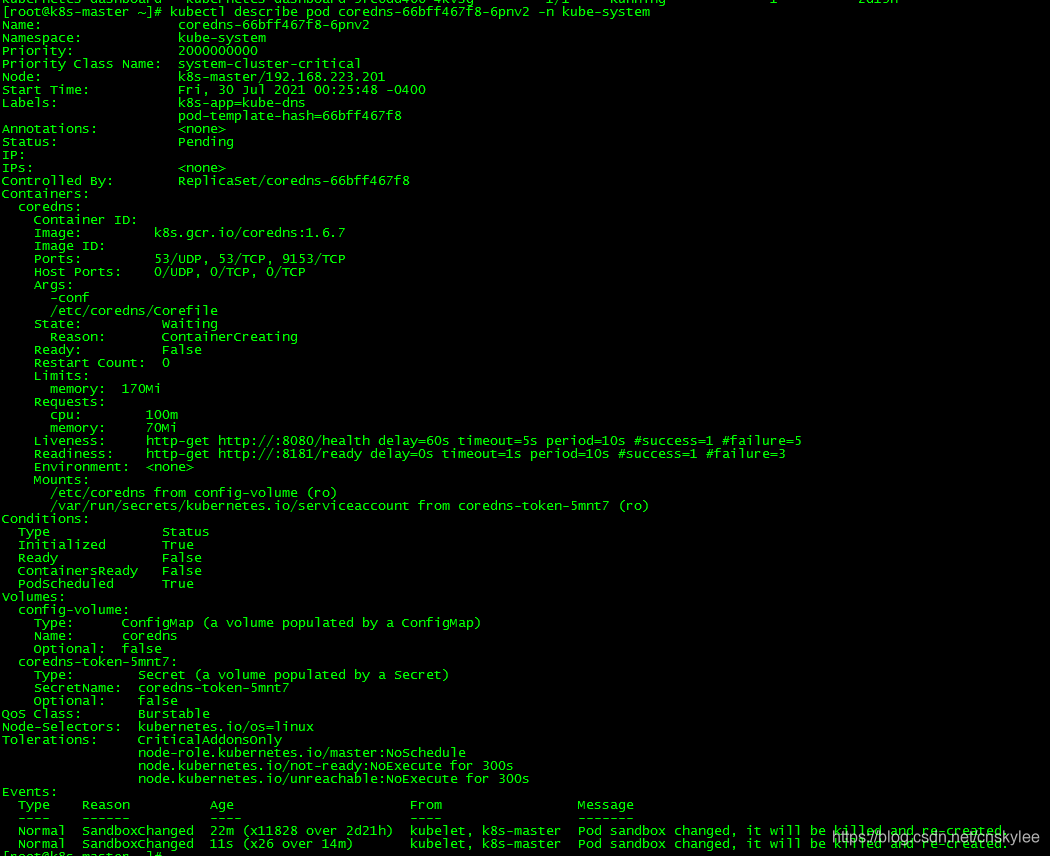

Looking at the details of one of the coredns pods showed that its status was Pending, and the Events section did not contain any obvious error.
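When the describe output is long, it can help to look at the events on their own. The sketch below defines two small helpers: `pod_events` asks the API server for the events recorded against one pod, and `warning_events` filters saved `kubectl describe pod` output down to its Warning rows. Both function names are made up for this illustration, and the pod name in the usage comment is simply the one from this example.

```shell
# Show the events recorded for a single pod, oldest first.
# $1 = namespace, $2 = pod name
pod_events() {
  ns="$1"
  pod="$2"
  kubectl get events -n "$ns" \
    --field-selector "involvedObject.kind=Pod,involvedObject.name=$pod" \
    --sort-by=.lastTimestamp
}

# Read `kubectl describe pod` output on stdin and print only the
# Warning rows that appear after the "Events:" header.
warning_events() {
  awk '/^Events:/ {in_events = 1; next} in_events && $1 == "Warning"'
}

# Usage (hypothetical invocation):
#   pod_events kube-system coredns-66bff467f8-6pnv2
#   kubectl describe pod coredns-66bff467f8-6pnv2 -n kube-system | warning_events
```

Events are only retained for a limited time, so an empty result does not necessarily mean that nothing went wrong earlier.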

Finally, on the advice of other community members, I deleted the two coredns pods that were stuck in ContainerCreating, using the following commands:

$ kubectl delete pods coredns-66bff467f8-6pnv2 -n kube-system

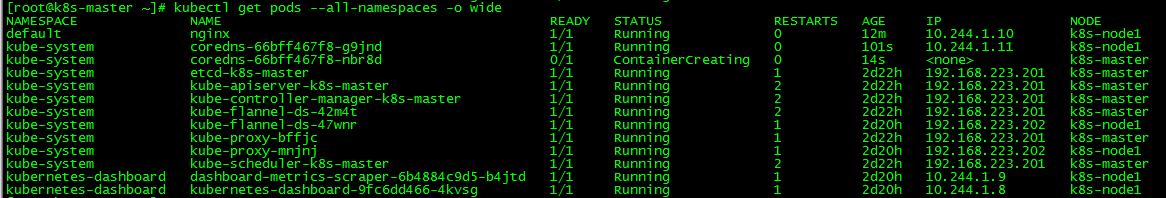

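$ kubectl delete pods coredns-66bff467f8-g9k68 -n kube-system

After deletion, the pods are recreated according to the configured replica count (2 in this example). Of the two recreated coredns pods, one reached the Running state, while the other remained in ContainerCreating.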
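The two delete commands above can also be expressed as a single label-based delete, which avoids copying the generated pod names. This is only a sketch: the function name `recreate_coredns_pods` is hypothetical, and it assumes that the label `k8s-app=kube-dns` (taken from the describe output in this post) selects only the CoreDNS pods. Note that it deletes every matching pod, including any that are already healthy.

```shell
# Delete all pods carrying the CoreDNS label so that the ReplicaSet
# recreates them, then list the replacements with their nodes.
# $1 = namespace (defaults to kube-system)
recreate_coredns_pods() {
  ns="${1:-kube-system}"
  kubectl delete pod -n "$ns" -l k8s-app=kube-dns || return 1
  kubectl get pods -n "$ns" -l k8s-app=kube-dns -o wide
}

# Usage:
#   recreate_coredns_pods kube-system
```

The `-o wide` listing includes the NODE column, which makes it easy to see where each recreated pod was placed.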

Running describe on this pod again shows that it is still failing to be created on the k8s-master node.

[root@k8s-master ~]# kubectl describe pod coredns-66bff467f8-nbr8d -n kube-system

Name: coredns-66bff467f8-nbr8d

Namespace: kube-system

Priority: 2000000000

Priority Class Name: system-cluster-critical

Node: k8s-master/192.168.223.201

Start Time: Sun, 01 Aug 2021 23:01:39 -0400

Labels: k8s-app=kube-dns

pod-template-hash=66bff467f8

Annotations: <none>

Status: Pending

IP:

IPs: <none>

Controlled By: ReplicaSet/coredns-66bff467f8

Containers:

coredns:

Container ID:

Image: k8s.gcr.io/coredns:1.6.7

Image ID:

Ports: 53/UDP, 53/TCP, 9153/TCP

Host Ports: 0/UDP, 0/TCP, 0/TCP

Args:

-conf

/etc/coredns/Corefile

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Limits:

memory: 170Mi

Requests:

cpu: 100m

memory: 70Mi

Liveness: http-get http://:8080/health delay=60s timeout=5s period=10s #success=1 #failure=5

Readiness: http-get http://:8181/ready delay=0s timeout=1s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/etc/coredns from config-volume (ro)

/var/run/secrets/kubernetes.io/serviceaccount from coredns-token-5mnt7 (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

config-volume:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: coredns

Optional: false

coredns-token-5mnt7:

Type: Secret (a volume populated by a Secret)

SecretName: coredns-token-5mnt7

Optional: false

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: CriticalAddonsOnly

node-role.kubernetes.io/master:NoSchedule

node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 108s default-scheduler Successfully assigned kube-system/coredns-66bff467f8-nbr8d to k8s-master

Warning FailedCreatePodSandBox 65s kubelet, k8s-master Failed to create pod sandbox: rpc error: code = Unknown desc = [failed to set up sandbox container "8a6c342158717335b5fc36319250c5e0ec29df7665dec76393d0bd69c5382689" network for pod "coredns-66bff467f8-nbr8d": networkPlugin cni failed to set up pod "coredns-66bff467f8-nbr8d_kube-system" network: error getting ClusterInformation: Get "https://[10.43.0.1]:443/apis/crd.projectcalico.org/v1/clusterinformations/default": dial tcp 10.43.0.1:443: connect: connection refused, failed to clean up sandbox container "8a6c342158717335b5fc36319250c5e0ec29df7665dec76393d0bd69c5382689" network for pod "coredns-66bff467f8-nbr8d": networkPlugin cni failed to teardown pod "coredns-66bff467f8-nbr8d_kube-system" network: error getting ClusterInformation: Get "https://[10.43.0.1]:443/apis/crd.projectcalico.org/v1/clusterinformations/default": dial tcp 10.43.0.1:443: connect: connection refused]

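Normal SandboxChanged 1s (x4 over 65s) kubelet, k8s-master Pod sandbox changed, it will be killed and re-created.

The node on which creation fails is still k8s-master. After some investigation, I concluded that the cause was the taint set on the k8s-master node (node-role.kubernetes.io/master:NoSchedule, which also appears in the output above), which keeps ordinary pods from being scheduled there. Strictly speaking, that entry in the pod description is listed under Tolerations, meaning this pod tolerates the taint, and the Events show that it was in fact assigned to k8s-master; the FailedCreatePodSandBox warning reports a CNI error while contacting a Calico endpoint (crd.projectcalico.org), so the network plugin configuration on k8s-master is worth checking as well. The taint itself can be queried as follows: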

[root@k8s-master ~]# kubectl describe nodes k8s-master |grep -i taint

Taints: node-role.kubernetes.io/master:NoSchedule

【Solution】
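Before changing anything, it may be worth double-checking what is actually blocking the pod on k8s-master. The helpers below are a rough sketch with hypothetical function names: the first two print the node's taints and the pod's tolerations in the same `key:effect` form so they can be compared, and the third lists the CNI configuration files on a node. The path `/etc/cni/net.d` is the usual default and is an assumption here, as is the idea that a leftover Calico file might be present; the original troubleshooting did not confirm either.

```shell
# Taints currently set on a node, one per line as key:effect.
# $1 = node name
node_taints() {
  kubectl get node "$1" \
    -o jsonpath='{range .spec.taints[*]}{.key}{":"}{.effect}{"\n"}{end}'
}

# Tolerations declared by a pod, in the same key:effect form.
# $1 = namespace, $2 = pod name
pod_tolerations() {
  kubectl get pod "$2" -n "$1" \
    -o jsonpath='{range .spec.tolerations[*]}{.key}{":"}{.effect}{"\n"}{end}'
}

# CNI config files in a directory, sorted by name.
# Run this on the node itself. $1 = directory (assumed default below).
list_cni_configs() {
  cni_dir="${1:-/etc/cni/net.d}"
  ls -1 "$cni_dir" 2>/dev/null | LC_ALL=C sort
}

# Usage:
#   node_taints k8s-master
#   pod_tolerations kube-system coredns-66bff467f8-nbr8d
#   list_cni_configs            # on k8s-master
```

When several CNI configuration files exist, the one that sorts first by name is normally the one used, so a stale file from a different network plugin could take precedence over the flannel configuration.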

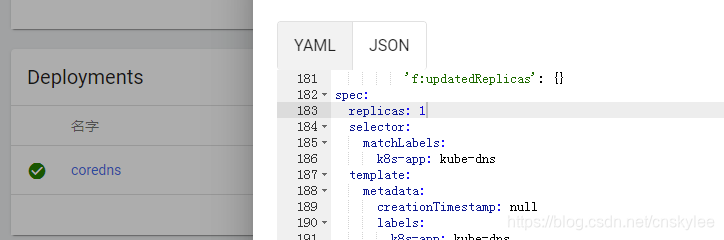

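In k8s-dashboard, change the replica count of the coredns deployment from 2 to 1. Once the change is submitted, the pod that failed to be created on the k8s-master node is removed.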
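The same change can be made from the command line instead of the dashboard. The sketch below assumes the Deployment is named `coredns` in the `kube-system` namespace, which is inferred from the ReplicaSet name `coredns-66bff467f8` shown earlier; the function name `scale_coredns` and the 120-second timeout are arbitrary choices for illustration.

```shell
# Set the CoreDNS replica count and wait for the rollout to settle.
# $1 = desired number of replicas
scale_coredns() {
  replicas="$1"
  kubectl scale deployment coredns -n kube-system --replicas="$replicas" || return 1
  kubectl rollout status deployment/coredns -n kube-system --timeout=120s
}

# Usage:
#   scale_coredns 1
```

Running a single replica leaves cluster DNS without redundancy, which may be acceptable for a local test cluster like this one but is probably not what you want elsewhere.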

A follow-up query shows that all pods are back in the Running state.

[root@k8s-master ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-66bff467f8-g9jnd 1/1 Running 0 4h44m 10.244.1.11 k8s-node1 <none> <none>

etcd-k8s-master 1/1 Running 1 3d3h 192.168.223.201 k8s-master <none> <none>

kube-apiserver-k8s-master 1/1 Running 2 3d3h 192.168.223.201 k8s-master <none> <none>

kube-controller-manager-k8s-master 1/1 Running 2 3d3h 192.168.223.201 k8s-master <none> <none>

kube-flannel-ds-42m4t 1/1 Running 2 3d3h 192.168.223.201 k8s-master <none> <none>

kube-flannel-ds-47wnr 1/1 Running 1 3d1h 192.168.223.202 k8s-node1 <none> <none>

kube-proxy-bffjc 1/1 Running 1 3d3h 192.168.223.201 k8s-master <none> <none>

kube-proxy-mnjnj 1/1 Running 1 3d1h 192.168.223.202 k8s-node1 <none> <none>

kube-scheduler-k8s-master 1/1 Running 2 3d3h 192.168.223.201 k8s-master <none> <none>

[root@k8s-master ~]#

Acknowledgements:

Many thanks to the friends from the 【Kubernetes中国1群】 group for their generous help while working through this problem!

- 飘渺丶梦境

- 獨钓寒江

- 师师