Diagram

iptables (default mode)

[root@k8s-master1 7]# iptables-save |grep nginx-nodeport

// Marking: KUBE-MARK-MASQ flags packets for masquerading (SNAT)

// Outbound traffic

-A KUBE-NODEPORTS -p tcp -m comment --comment "default/nginx-nodeport" -m tcp --dport 30000 -j KUBE-MARK-MASQ

-A KUBE-NODEPORTS -p tcp -m comment --comment "default/nginx-nodeport" -m tcp --dport 30000 -j KUBE-SVC-CGFVTWEXQTKV5QXW

-A KUBE-SEP-2WK7DTGWWQGBECK5 -s 10.244.169.140/32 -m comment --comment "default/nginx-nodeport" -j KUBE-MARK-MASQ

-A KUBE-SEP-2WK7DTGWWQGBECK5 -p tcp -m comment --comment "default/nginx-nodeport" -m tcp -j DNAT --to-destination 10.244.169.140:80

-A KUBE-SERVICES ! -s 10.244.0.0/16 -d 10.103.12.8/32 -p tcp -m comment --comment "default/nginx-nodeport cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

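// Inbound traffic

// Forwarding: traffic to cluster IP 10.103.12.8 on port 80 jumps to KUBE-SVC-CGFVTWEXQTKV5QXW, which then jumps to the endpoint chain KUBE-SEP-2WK7DTGWWQGBECK5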

-A KUBE-SERVICES -d 10.103.12.8/32 -p tcp -m comment --comment "default/nginx-nodeport cluster IP" -m tcp --dport 80 -j KUBE-SVC-CGFVTWEXQTKV5QXW

-A KUBE-SVC-CGFVTWEXQTKV5QXW -m comment --comment "default/nginx-nodeport" -j KUBE-SEP-2WK7DTGWWQGBECK5

[root@k8s-master1 7]#
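To make the jump order easier to follow, here is a minimal sketch that parses three of the rules above and walks from KUBE-SERVICES to the DNAT target. It is a simplified model, not how the kernel evaluates rules: it ignores the match conditions and the KUBE-MARK-MASQ rules, and the helper names `parse` and `walk` are made up for illustration.

```python
import shlex

# Rules copied from the iptables-save output above (cluster-IP path only).
RULES = """
-A KUBE-SERVICES -d 10.103.12.8/32 -p tcp -m comment --comment "default/nginx-nodeport cluster IP" -m tcp --dport 80 -j KUBE-SVC-CGFVTWEXQTKV5QXW
-A KUBE-SVC-CGFVTWEXQTKV5QXW -m comment --comment "default/nginx-nodeport" -j KUBE-SEP-2WK7DTGWWQGBECK5
-A KUBE-SEP-2WK7DTGWWQGBECK5 -p tcp -m comment --comment "default/nginx-nodeport" -m tcp -j DNAT --to-destination 10.244.169.140:80
"""


def parse(rules_text):
    """Map each chain name to a list of (jump target, DNAT destination or None)."""
    chains = {}
    for line in rules_text.strip().splitlines():
        tokens = shlex.split(line)  # keeps the quoted comment as one token
        chain = tokens[tokens.index("-A") + 1]
        target = tokens[tokens.index("-j") + 1]
        dest = None
        if "--to-destination" in tokens:
            dest = tokens[tokens.index("--to-destination") + 1]
        chains.setdefault(chain, []).append((target, dest))
    return chains


def walk(chains, start):
    """Follow the first rule of each chain until a DNAT rule is reached."""
    path = [start]
    chain = start
    while chain in chains:
        target, dest = chains[chain][0]
        path.append(target)
        if target == "DNAT":
            return path, dest
        chain = target
    return path, None


path, backend = walk(parse(RULES), "KUBE-SERVICES")
print(" -> ".join(path))
print("backend:", backend)
```

With a single endpoint there is only one rule per chain, so following the first rule is enough; with several replicas the KUBE-SVC chain holds one rule per endpoint.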

- Scale out

- kubectl scale deployment nginx --replicas=3

- kubectl get ep

- Inspect the rules

[root@k8s-master1 7]# iptables-save |grep KUBE-SVC-A26F6BALGETXAGKG

:KUBE-SVC-A26F6BALGETXAGKG - [0:0]

-A KUBE-NODEPORTS -p tcp -m comment --comment "default/pod-check" -m tcp --dport 32239 -j KUBE-SVC-A26F6BALGETXAGKG

-A KUBE-SERVICES -d 10.108.6.217/32 -p tcp -m comment --comment "default/pod-check cluster IP" -m tcp --dport 80 -j KUBE-SVC-A26F6BALGETXAGKG

// Three forwarding rules implement load balancing. First rule: probability 0.33333333349

-A KUBE-SVC-A26F6BALGETXAGKG -m comment --comment "default/pod-check" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-MIPE7TVXCPBIRR5B

// Probability 0.5, applied only to traffic the first rule did not take

-A KUBE-SVC-A26F6BALGETXAGKG -m comment --comment "default/pod-check" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-IU6FDGX4AGYVHQQW

// Probability 1: no statistic match, so this rule takes everything that is left

-A KUBE-SVC-A26F6BALGETXAGKG -m comment --comment "default/pod-check" -j KUBE-SEP-I57WEEY5SFOMRE2L
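The probabilities 0.333, 0.5 and 1 look uneven, but because the rules are evaluated in order, each one only sees the traffic the earlier rules passed over. The sketch below works through that arithmetic with exact fractions; `effective_shares` is a hypothetical helper name, and 1/3 and 1/2 stand in for the rounded values printed by iptables-save.

```python
from fractions import Fraction


def effective_shares(rule_probabilities):
    """Return the overall share of traffic each rule receives.

    Rules are evaluated in order; a rule only sees the traffic that every
    earlier rule declined, so its overall share is
    (traffic remaining) * (its own probability).
    """
    remaining = Fraction(1)
    shares = []
    for p in rule_probabilities:
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


# The last rule has no statistic match, so it behaves like probability 1.
shares = effective_shares([Fraction(1, 3), Fraction(1, 2), Fraction(1)])
print(shares)  # [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]
```

Each of the three endpoints therefore ends up with one third of new connections.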

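- Packets that match jump to KUBE-SEP-I57WEEY5SFOMRE2L, which DNATs them to the pod. The port here is 80; if a different port is configured, that port appears in the rule instead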

```

[root@k8s-master1 7]# iptables-save |grep KUBE-SEP-I57WEEY5SFOMRE2L

:KUBE-SEP-I57WEEY5SFOMRE2L - [0:0]

-A KUBE-SEP-I57WEEY5SFOMRE2L -s 10.244.169.191/32 -m comment --comment "default/pod-check" -j KUBE-MARK-MASQ

-A KUBE-SEP-I57WEEY5SFOMRE2L -p tcp -m comment --comment "default/pod-check" -m tcp -j DNAT --to-destination 10.244.169.191:80

-A KUBE-SVC-A26F6BALGETXAGKG -m comment --comment "default/pod-check" -j KUBE-SEP-I57WEEY5SFOMRE2L

[root@k8s-master1 7]#

```

ipvs

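- Edit the configuration

- Switching to ipvs mode on a kubeadm cluster:

- kubectl edit configmap kube-proxy -n kube-system …

- mode: "ipvs" …

- Recreate the kube-proxy pod so the change takes effect:

- kubectl delete pod kube-proxy-btz4p -n kube-system

Notes:

1. The kube-proxy configuration is stored as a ConfigMap.

2. For the change to take effect on every node, the kube-proxy pod on every node must be recreated.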

Verify

[root@k8s-master1 7]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-78d6f96c7b-4gfls 1/1 Running 1 5d20h 10.244.159.129 k8s-master1 <none> <none>

calico-node-gdcv2 1/1 Running 0 5d20h 192.168.1.12 k8s-node2 <none> <none>

calico-node-sh7cg 1/1 Running 0 5d20h 192.168.1.11 k8s-master1 <none> <none>

coredns-545d6fc579-7vj5m 1/1 Running 0 23d 10.244.159.132 k8s-master1 <none> <none>

coredns-545d6fc579-s64rm 1/1 Running 0 23d 10.244.159.134 k8s-master1 <none> <none>

etcd-k8s-master1 1/1 Running 2 23d 192.168.1.11 k8s-master1 <none> <none>

kube-apiserver-k8s-master1 1/1 Running 3 23d 192.168.1.11 k8s-master1 <none> <none>

kube-controller-manager-k8s-master1 1/1 Running 7 23d 192.168.1.11 k8s-master1 <none> <none>

kube-proxy-fp6xk 1/1 Running 1 23d 192.168.1.12 k8s-node2 <none> <none>

kube-proxy-v52q5 1/1 Running 0 23d 192.168.1.11 k8s-master1 <none> <none>

kube-scheduler-k8s-master1 1/1 Running 6 23d 192.168.1.11 k8s-master1 <none> <none>

metrics-server-589c9d4d6-n5rhx 1/1 Running 0 14d 192.168.1.12 k8s-node2 <none> <none>

[root@k8s-master1 7]# kubectl delete pod kube-proxy-fp6xk

Error from server (NotFound): pods "kube-proxy-fp6xk" not found

[root@k8s-master1 7]# kubectl delete pod kube-proxy-fp6xk -n kube-system

pod "kube-proxy-fp6xk" deleted

[root@k8s-master1 7]#

- On the node where the recreated kube-proxy pod runs (k8s-node2 here)

[root@k8s-node2 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 127.0.0.1:30000 rr

-> 10.244.159.135:80 Masq 1 0 0

-> 10.244.159.137:80 Masq 1 0 0

-> 10.244.169.140:80 Masq 1 0 0

TCP 127.0.0.1:31907 rr

-> 10.244.159.135:80 Masq 1 0 0

-> 10.244.159.137:80 Masq 1 0 0

-> 10.244.169.140:80 Masq 1 0 0

TCP 127.0.0.1:31925 rr

TCP 127.0.0.1:32239 rr

-> 10.244.169.176:80 Masq 1 0 0

-> 10.244.169.177:80 Masq 1 0 0

-> 10.244.169.191:80 Masq 1 0 0

TCP 172.17.0.1:30000 rr

-> 10.244.159.135:80 Masq 1 0 0

-> 10.244.159.137:80 Masq 1 0 0

-> 10.244.169.140:80 Masq 1 0 0


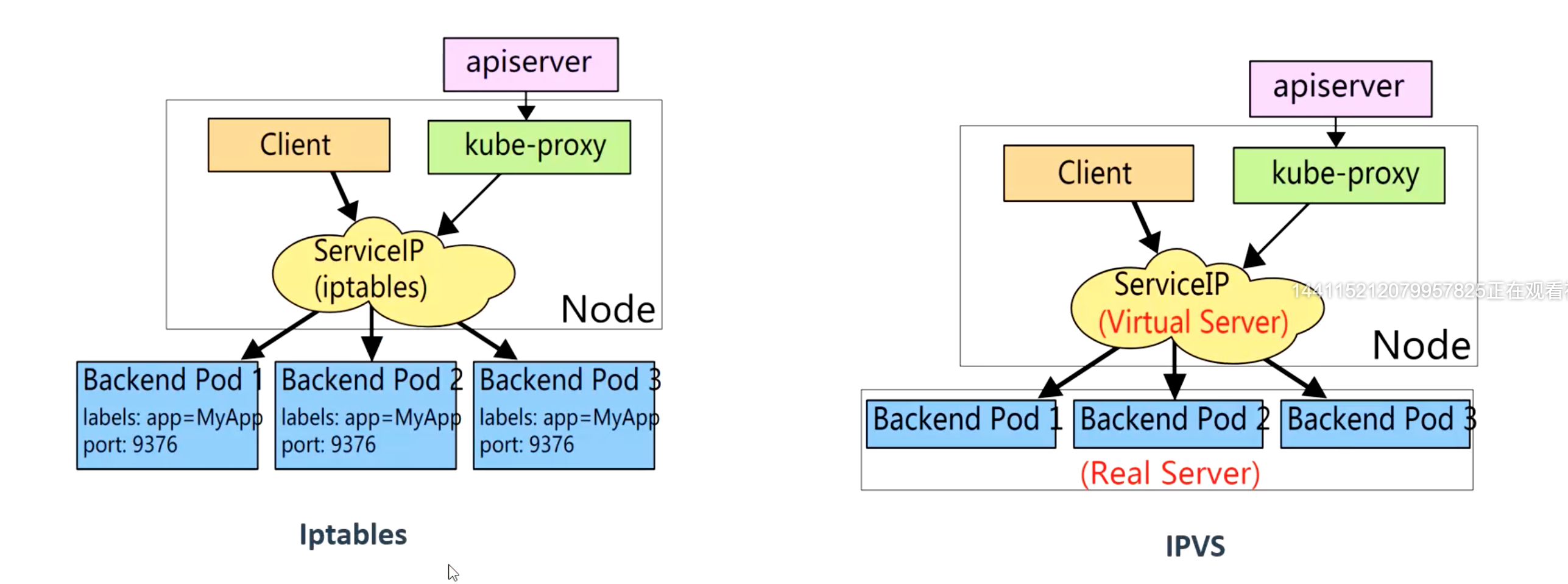

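iptables vs ipvs

- iptables

  - Flexible and powerful

  - Rules are matched and updated by traversing the list, so latency grows linearly with the number of rules

- ipvs

  - Works in kernel space and performs better

  - Rich set of scheduling algorithms

    - rr, wrr, wlc, IP hash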
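As a rough illustration of what the `rr` scheduler in the ipvsadm output means, the sketch below hands out the three backends of the 32239 virtual server in strict rotation. It is a toy model of the behaviour, assuming equal weights as shown in the output, and not the kernel implementation; `round_robin` is a name invented for this example.

```python
from itertools import cycle, islice

# Backends of the 32239 virtual server in the ipvsadm output above.
BACKENDS = ["10.244.169.176:80", "10.244.169.177:80", "10.244.169.191:80"]


def round_robin(backends, n):
    """Return the backends chosen for n consecutive new connections."""
    return list(islice(cycle(backends), n))


picks = round_robin(BACKENDS, 6)
for i, backend in enumerate(picks, start=1):
    print(i, backend)
```

The fourth connection goes back to the first backend. The weighted variants (wrr, wlc) differ in that they take the Weight and connection-count columns into account.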

CoreDNS

- Use a busybox pod to test name resolution

[root@k8s-master1 7]# kubectl run dns --image=busybox:1.28.4 -- sleep 24h

pod/dns created

[root@k8s-master1 7]# kubectl get pod

NAME

kubectl exec -it dns -- sh

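- kubectl get svc

- Look up Service names. The first lookup below fails because `kubernets` is not an existing Service name (probably a typo for `kubernetes`); the fully qualified name resolves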

/ # nslookup kubernets

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

nslookup: can't resolve 'kubernets'

/ # nslookup kube-dns.kube-system.svc.cluster.local

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kube-dns.kube-system.svc.cluster.local

Address 1: 10.96.0.10 kube-dns.kube-syst

- Access format

<service-name>.<namespace-name>.svc.cluster.local
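A small sketch of how the name is assembled from that format. `service_fqdn` is a hypothetical helper; the `default` namespace and the `cluster.local` domain are assumptions taken from the lookups above and can differ between clusters.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Build <service-name>.<namespace-name>.svc.<cluster-domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


print(service_fqdn("kube-dns", "kube-system"))  # kube-dns.kube-system.svc.cluster.local
print(service_fqdn("nginx-nodeport"))           # nginx-nodeport.default.svc.cluster.local
```

The first result matches the name that resolved to 10.96.0.10 in the nslookup session.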