1. Disguising the Crawler

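Disguise the crawler so that its requests look as if they come from a browser.

Copy your browser's "User-Agent" value (it appears among the request headers in the browser's developer tools). Then send it as a request header so the server sees a browser-like header.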
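For comparison, here is a minimal sketch of what setting the header changes. It assumes only the `requests` library. `BROWSER_UA` and `make_browser_session` are hypothetical names introduced for illustration. Nothing is sent over the network: the request is only prepared, so its outgoing headers can be inspected.

```python
import requests

# Hypothetical constant: the browser User-Agent string used in this article
BROWSER_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36")


def make_browser_session(user_agent: str = BROWSER_UA) -> requests.Session:
    """Hypothetical helper: a Session whose requests all carry a browser-like User-Agent."""
    session = requests.Session()
    session.headers.update({"User-Agent": user_agent})
    return session


# What requests sends when you set nothing: "python-requests/<version>"
default_ua = requests.utils.default_headers()["User-Agent"]

# Build (but do not send) a request to see the headers that would go out
session = make_browser_session()
prepared = session.prepare_request(requests.Request("GET", "https://example.com/"))

print(default_ua)
print(prepared.headers["User-Agent"])
```

A `Session` sets the header once and reuses it for every request, instead of passing `headers=` on each call.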


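```python
import requests

url = "https://haokan.baidu.com/v?pd=wisenatural&vid=13010513670189503165"

# Pretend to be Chrome on Windows instead of the default python-requests client
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36"
}

rep = requests.get(url, headers=headers)
print(rep.text)
rep.close()  # call close() to release the connection
```

2. Accessing Baidu Translate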

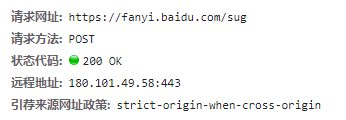

As the screenshot shows, this endpoint uses the POST method: we send data to it and receive the result in the response.
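To see what "sending data with POST" means in practice, the sketch below builds the same kind of request the translator example sends, but only prepares it and does not send it. It assumes only `requests`; the word `"dog"` is an arbitrary illustrative input. The dictionary passed as `data=` is form-encoded into the request body, and requests sets the matching `Content-Type` header.

```python
import requests

# Build the POST request without sending it
req = requests.Request("POST", "https://fanyi.baidu.com/sug", data={"kw": "dog"})
prepared = req.prepare()

print(prepared.method)                   # the HTTP method
print(prepared.headers["Content-Type"])  # how the body is encoded
print(prepared.body)                     # the form-encoded body itself
```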

import requests

while(True):

url = "https://fanyi.baidu.com/sug"

s = input("请输入你要翻译的英文单词:")

kw = {

"kw":s

}

rep = requests.post(url, data= kw)

print(rep.json())

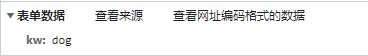

rep.close()运行结果:

3. Accessing the Douban Movie Chart

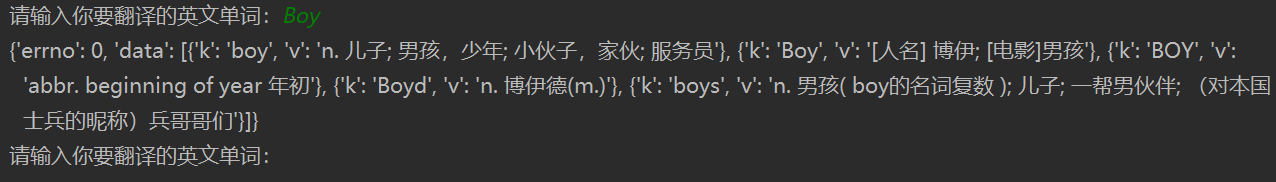

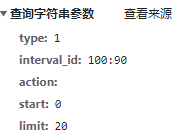

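This time we use `requests.get`. If the request URL feels too long, you can pack its query parameters into a dictionary (as shown in the image on the right). We still need to send the User-Agent header, because Douban has some anti-scraping measures 🦎.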
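The parameter dictionary is not sent as a separate object: requests URL-encodes it and appends it to the address as a query string. The sketch below assumes only `requests` and the standard library's `urllib.parse`. It prepares the request without sending it, so you can check the final URL. `params`, `decoded` and `bad` are illustrative names. It also shows why key names must be exact: a key with a stray leading space is encoded as a different parameter.

```python
import requests
from urllib.parse import parse_qs, urlsplit

URL = "https://movie.douban.com/j/chart/top_list"
params = {"type": "1", "interval_id": "100:90", "action": "", "start": "0", "limit": "20"}

# Prepare (but do not send) the GET request to see the URL requests builds
prepared = requests.Request("GET", URL, params=params).prepare()
print(prepared.url)

# Decode the query string back into a dict; keep_blank_values keeps "action"
query = parse_qs(urlsplit(prepared.url).query, keep_blank_values=True)
decoded = {key: values[0] for key, values in query.items()}

# A key with a leading space becomes a different parameter name (" start", not "start")
bad = requests.Request("GET", URL, params={" start": "0"}).prepare()
bad_keys = list(parse_qs(urlsplit(bad.url).query).keys())
print(bad.url)
```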

```python
import requests

url = "https://movie.douban.com/j/chart/top_list"

# Query parameters packed into a dict; requests appends them to the URL
dic = {
    "type": "1",
    "interval_id": "100:90",
    "action": "",
    "start": "0",
    "limit": "20",
}

# Douban has some anti-scraping checks, so send a browser User-Agent
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36"
}

rep = requests.get(url=url, params=dic, headers=headers)
print(rep.text)
rep.close()
```