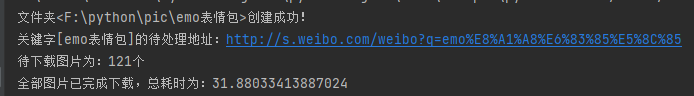

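- Suppose you enter the keyword `emo表情包` (emo sticker packs) at the Weibo home page address.

- Chinese characters have to be percent-encoded with `urllib.parse.quote`.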

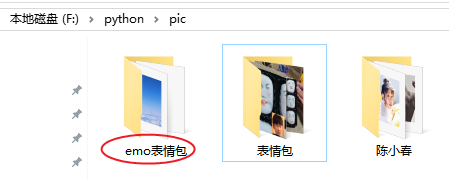
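
To make the encoding step concrete, here is a minimal sketch of how `urllib.parse.quote` turns the keyword into a URL-safe query string. The helper name `build_search_url` is made up for this example; the base URL is the one used in the script in this post.

```python
from urllib import parse

# Search URL prefix taken from the script in this post.
BASE_URL = 'http://s.weibo.com/weibo?q='


def build_search_url(keyword):
    """Percent-encode the keyword (as UTF-8) and append it to the search URL."""
    return BASE_URL + parse.quote(keyword)


if __name__ == '__main__':
    # ASCII letters pass through unchanged; each of these Chinese characters
    # becomes three %XX escapes, one per UTF-8 byte.
    print(build_search_url('emo表情包'))
    # prints http://s.weibo.com/weibo?q=emo%E8%A1%A8%E6%83%85%E5%8C%85
```

`parse.unquote` reverses the encoding, which is handy when reading such URLs back from logs.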

- The stickers are saved to a local folder of your choice.

The local run log looks like this:

The files generated locally look like this:

The code is as follows:

    import os
    import re
    import time
    from urllib import parse

    import requests
    import urllib3
    from requests.adapters import HTTPAdapter
    from selenium import webdriver

    # requests keeps connections alive by default and may not release them,
    # so send 'Connection: close' with every request.
    header = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.45 Safari/537.36',
        'Connection': 'close',
    }

    # Silence the following warning, which verify=False would otherwise trigger:
    # InsecureRequestWarning: Unverified HTTPS request is being made to host 'wx3.sinaimg.cn'. Adding certificate
    # verification is strongly advised. See: https://urllib3.readthedocs.io/en/1.26.x/advanced-usage.html#ssl-warnings
    urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

    # Raise the number of connection retries by mounting adapters with
    # max_retries on the session that performs the downloads.
    s = requests.Session()
    s.mount('http://', HTTPAdapter(max_retries=5))
    s.mount('https://', HTTPAdapter(max_retries=5))
    # requests is built on urllib3, whose HTTP connections are keep-alive by
    # default; the 'Connection: close' header asks for them to be closed.
    s.headers.update(header)

    # SSL certificate verification is skipped with verify=False:
    # s.get(url, verify=False)


    def makedir(filePath):
        """Create the target folder if it does not exist yet."""
        if not os.path.exists(filePath):
            os.makedirs(filePath)
            print('Folder <' + filePath + '> created.')
        else:
            print('Folder already exists.')


    start_time = time.time()

    # 1. Keyword to search images for
    searchKey = 'emo表情包'

    # 2. Create the folder for this keyword
    filePath = os.path.join(r'F:\python\pic', searchKey)
    makedir(filePath)

    # 3. Percent-encode the keyword (raw Chinese characters cause an error): https://s.weibo.com/weibo?q=%E8%A1%A8%E6%83%85%E5%8C%85
    url = 'http://s.weibo.com/weibo?q=' + parse.quote(searchKey)
    print('URL to process for keyword [' + searchKey + ']: ' + url)


    def getDownloadUrl():
        # 4. Drive a real browser so the page renders as it would for a user
        options = webdriver.ChromeOptions()
        options.add_experimental_option('excludeSwitches', ['enable-logging'])
        web = webdriver.Chrome(options=options)
        try:
            web.get(url)
            time.sleep(6)  # give the page some time to load its content
            wb_resp = web.page_source  # HTML source of the rendered page
        finally:
            web.quit()  # always shut the browser down when done
        return re.findall(r'img src="(?P<src>.*?)"', wb_resp)


    if __name__ == '__main__':
        download_link = getDownloadUrl()
        print('Images to download: ' + str(len(download_link)))
        for link in download_link:
            try:
                # Links without a scheme (for example ones starting with //)
                # need one before they can be requested
                if not link.startswith('http'):
                    link = 'http:' + link
                # To avoid name collisions, use the last path segment, which
                # differs per image, as the file name
                image_name = link.split('?')[0].split('/')[-1]
                # The with block closes the response even if writing fails
                with s.get(link, verify=False, timeout=10) as image_resp:
                    # Write the image bytes to a file opened in binary write mode
                    with open(os.path.join(filePath, image_name), mode='wb') as f:
                        f.write(image_resp.content)
                # time.sleep(3)
            except Exception as e:
                print(e)
        # Total elapsed time
        print('All images downloaded, total time: ' + str(time.time() - start_time))
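
The link handling inside the download loop can also be pulled out into small pure functions, which makes it easy to check without touching the network. The following is only a sketch: `normalize_link`, `image_name_from_link` and `unique_links` are hypothetical names, the sample paths are invented for illustration, and the de-duplication step is an addition that the script above does not perform.

```python
def normalize_link(link, scheme='http'):
    """Add a scheme to protocol-relative links such as //host/path."""
    if link.startswith('//'):
        return scheme + ':' + link
    return link


def image_name_from_link(link):
    """Drop the query string and keep the last path segment as the file name."""
    return link.split('?')[0].split('/')[-1]


def unique_links(links):
    """Normalize links and drop duplicates while keeping first-seen order."""
    return list(dict.fromkeys(normalize_link(link) for link in links))


if __name__ == '__main__':
    # Invented sample links; the first two point at the same image.
    sample = [
        '//wx3.sinaimg.cn/example/abc123.jpg',
        'http://wx3.sinaimg.cn/example/abc123.jpg',
        'https://wx3.sinaimg.cn/example/def456.gif?size=large',
    ]
    for link in unique_links(sample):
        print(link, '->', image_name_from_link(link))
```

Dropping duplicates before downloading avoids fetching the same file twice when the page repeats an image.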

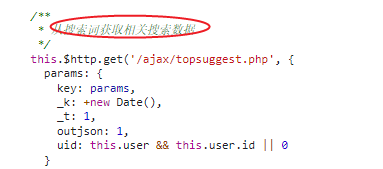
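
The regular expression in `getDownloadUrl` only matches when `src` directly follows `img` in the tag. As an alternative sketch (not what the script above does), the standard library's `html.parser` can collect `src` attributes regardless of attribute order. The class name `ImgSrcCollector`, the function `extract_img_sources` and the sample HTML are invented for this example.

```python
from html.parser import HTMLParser


class ImgSrcCollector(HTMLParser):
    """Collect the src attribute of every <img> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        # Tag and attribute names arrive lower-cased; attrs is a list of
        # (name, value) pairs. Self-closing <img/> tags end up here as well.
        if tag == 'img':
            for name, value in attrs:
                if name == 'src' and value:
                    self.sources.append(value)


def extract_img_sources(html):
    """Return all img src values found in the given HTML string, in order."""
    collector = ImgSrcCollector()
    collector.feed(html)
    collector.close()
    return collector.sources


if __name__ == '__main__':
    # Invented sample markup: src is not the first attribute of the first tag.
    page = '<div><img class="pic" src="//wx3.sinaimg.cn/example/1.jpg"><img alt="x"></div>'
    print(extract_img_sources(page))
```

In the script, the string returned by `web.page_source` would take the place of the sample markup.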

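The script above only fetches the images on the page that is currently requested. To get more, the browser developer tools (F12) show that there is one more request URL: https://s.weibo.com/ajax/topsuggest.php

It returns the IDs of bloggers related to the search term, so you could also scrape the stickers directly from those bloggers' pages.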