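In the previous post we raised the question of whether crawling Tianyancha requires proxy IPs. This time I simply dropped the proxies and used my machine's default IP, connected directly to the company WiFi rather than a phone hotspot, with a fresh Cookie. These are the tests I ran: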

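Sample 1:

1. Company office network
2. Default local IP
3. Two programs running at once, identical except for the Cookie
4. 60-second interval

Result: one program crawled 91 companies and the other 99 before the image CAPTCHA appeared.

Conclusion: 30 seconds and 60 seconds seem to make little difference.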


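Sample 2:

1. Company office network
2. Default local IP
3. Cookie from a new account
4. 10 to 15 second interval

Result: 188 companies crawled before the image CAPTCHA appeared.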


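Sample 3:

1. Company office network
2. Default local IP
3. Cookie from a new account
4. 10 to 20 second interval

Result: 137 companies crawled before the image CAPTCHA appeared.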


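Sample 4:

Same setup as Sample 3 with nothing changed, except that I solved the image CAPTCHA by hand, took the account's new Cookie, and pasted it into the code.

Result: 100 companies crawled before the image CAPTCHA appeared.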
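The intervals used in the samples above (for example 10 to 20 seconds) can be sketched as a random delay drawn from a range. This is only an illustration: the names `random_delay` and `polite_sleep` are made up for this example and do not appear in the script.

```python
import random
import time


def random_delay(low: float, high: float) -> float:
    """Return a wait time in seconds, drawn uniformly from [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    return random.uniform(low, high)


def polite_sleep(low: float, high: float) -> float:
    """Sleep for a random interval between two requests and return its length."""
    delay = random_delay(low, high)
    time.sleep(delay)
    return delay
```

Calling `polite_sleep(10, 20)` between two company pages would correspond to the interval of Sample 3.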

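A colleague suggested trying without the Cookie and without the request headers; he said he can see company information in the browser without logging in. So I tested two cases: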

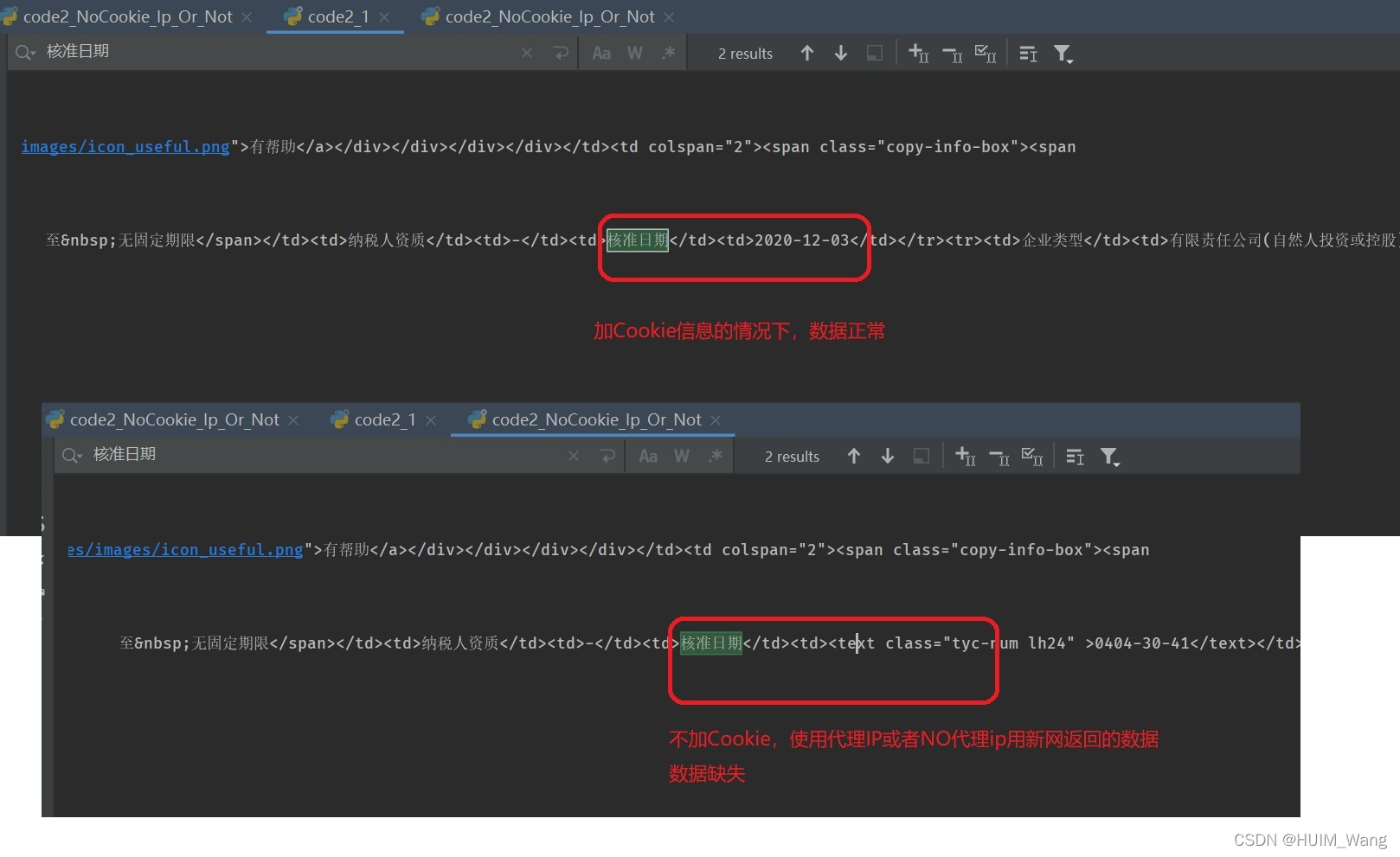

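- Case 1: no Cookie, no proxy IP, program run on a new network. Only one company's data came back, and even that was incomplete, with fields missing. Running the program again returned the login page, meaning a login was now required.

- Case 2: no Cookie, with proxy IPs. This can produce errors: the response may be JS code, the login page, and so on, possibly because some proxy IPs are unusable. When no error occurs, the result is the same as in Case 1: data with missing fields.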

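Conclusion: without a Cookie, the returned data is incomplete whether or not a proxy IP is used, as the screenshot shows.

So it is better to just add the Cookie.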
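The script in this post recognizes the login page by the text `快捷登录与短信登录` and locates the basic-information table by the id `_container_baseInfo`. A rough check for the outcomes described above could look like the sketch below. The function name and the returned labels are assumptions for illustration, and the check only tells whether the table is present at all, not whether individual fields are missing.

```python
LOGIN_MARKER = "快捷登录与短信登录"        # text the script looks for on the login page
BASE_INFO_MARKER = "_container_baseInfo"  # id of the basic-information table used in the XPath


def classify_response(html: str) -> str:
    """Roughly classify a company page response.

    Returns "login_required" if the login page came back,
    "no_base_info" if the basic-information table is absent,
    and "ok" otherwise.
    """
    if LOGIN_MARKER in html:
        return "login_required"
    if BASE_INFO_MARKER not in html:
        return "no_base_info"
    return "ok"
```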

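With the Cookie added, I then tested again with and without proxy IPs:

- Case 1: with Cookie, no proxy IP. About a hundred companies can be crawled normally before the image CAPTCHA appears. (Tip: as long as the account has not yet hit the image CAPTCHA or a login prompt, the old Cookie keeps working even if refreshing the page in the browser has updated the Cookie.)

- Case 2: with Cookie, with proxy IP. This raises the following error: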

HTTPSConnectionPool(host='www.tianyancha.com', port=443): Max retries exceeded with url: /company/602273968 (Caused by ProxyError('Cannot connect to proxy.', FileNotFoundError(2, 'No such file or directory')))

I tried many of the fixes found online; in the end the only thing that worked was turning the proxy IP off.
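The cause of this error is not established here. One thing that may be worth checking, offered as an assumption rather than a confirmed diagnosis: newer urllib3 versions treat a proxy URL that starts with `https://` as a TLS connection to the proxy itself, while many HTTP proxies expect an `http://` proxy URL even when the target site is HTTPS. The sketch below builds a `proxies` dict in that form for `requests.get(..., proxies=...)`. `build_proxies` is a hypothetical helper and the address in the usage note is a placeholder.

```python
from typing import Dict, Optional


def build_proxies(address: Optional[str]) -> Optional[Dict[str, str]]:
    """Turn "host:port" into a proxies dict; return None when no proxy is given."""
    if not address:
        return None
    host, _, port = address.rpartition(":")
    if not host or not port.isdigit():
        raise ValueError("expected 'host:port', got {!r}".format(address))
    # Assumption: the proxy speaks plain HTTP, so the proxy URL uses http://
    # for both schemes, even though the target site itself is HTTPS.
    proxy_url = "http://{}:{}".format(host, port)
    return {"http": proxy_url, "https": proxy_url}
```

For example, `build_proxies("127.0.0.1:8888")` yields a dict whose `http` and `https` entries both point at `http://127.0.0.1:8888`.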

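One more finding:

Once an account has triggered the image CAPTCHA, the CAPTCHA keeps appearing whether you log in from a different computer (a brand-new one), from a different computer on a different network (the home computer on the home network or a phone hotspot), or a day later. It only goes away after the CAPTCHA is solved by hand.

So for now I skip proxy IPs and only refresh the Cookie value, crawling a few dozen to a hundred-odd companies at a time. The source code: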

import os
import random
import sys
import time

import requests
from lxml import etree


class TianYan:
    def __init__(self, company_id, fp, cookie):
        self.fp = fp
        self.company_id = company_id
        self.url = "https://www.tianyancha.com/company/{}".format(company_id)
        self.User_agents = [

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.163 Safari/535.1",

"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50",

"Opera/9.80 (Windows NT 6.1; U; zh-cn) Presto/2.9.168 Version/11.50",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 2.0.50727; SLCC2; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; Tablet PC 2.0; .NET4.0E)",

"Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; InfoPath.3)",

"Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; GTB7.0)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)",

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)",

"Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.3 (KHTML, like Gecko) Chrome/6.0.472.33 Safari/534.3 SE 2.X MetaSr 1.0",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E)",

"Mozilla/5.0 (Windows NT 6.1) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/13.0.782.41 Safari/535.1 QQBrowser/6.9.11079.201",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E) QQBrowser/6.9.11079.201",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0)",

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.93 Safari/537.36",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60",

        ]
        # Log in to Tianyancha first, open a company data page and copy its Cookie here.
        # The Cookie must come from a session that is still logged in; if you log out
        # and back in in Chrome, the Cookie has to be updated.
        self.cookie = cookie
        self.headers = {
            'User-Agent': random.choice(self.User_agents),
            'Cookie': self.cookie,
            'Referer': "https://www.tianyancha.com/login?from=https%3A%2F%2Fwww.tianyancha.com%2Fsearch%3Fkey%3D%25E9%2583%2591%25E5%25B7%259E%25E6%2583%25A0%25E5%25B7%259E%25E6%25B1%25BD%25E8%25BD%25A6%25E8%25BF%2590%25E8%25BE%2593%25E6%259C%2589%25E9%2599%2590%25E5%2585%25AC%25E5%258F%25B8",
            'Connection': 'keep-alive',
            'Accept-Encoding': 'gzip, deflate, br',
            'Accept-Language': 'zh-CN,zh;q=0.9',
            'Host': 'www.tianyancha.com',
            'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',
            'Upgrade-Insecure-Requests': '1'
        }
        try:
            response = self.get_html()
        except Exception as e:
            print("Company {}: failed to read the page; the IP or the login Cookie may be the problem".format(self.company_id))
            # raise Exception()
            sys.exit(0)
        if "快捷登录与短信登录" in response:  # marker text of the login page
            print("Failed to crawl basic info, login required. company_id:{}".format(self.company_id))
            sys.exit(0)  # ※ terminate the program; the Cookie needs to be replaced
        # print(response)
        self.response = response
        self.tree_html = etree.HTML(response)

    def get_html(self):
        # Fetch the company page and return the decoded HTML text
        response = requests.get(self.url, headers=self.headers)
        return response.content.decode()

    def get_start_crawl(self):  # basic information
        tree_html = self.tree_html
        try:
            tr_list = tree_html.xpath('//*[@id="_container_baseInfo"]/table/tbody/tr')
            # company_name = tree_html.xpath("//div[@class='box -company-box ']/div[@class='content']/div[@class='header']/span/span/h1/text()")[0]  # company name
            company_name = tree_html.xpath("//div[@class='container company-header-block ']/div[3]/div[@class='content']/div[@class='header']/span/span/h1/text()")[0]  # company name ※ locator issue
            people_name = tr_list[0].xpath("td[2]//div[@class='humancompany']/div[@class='name']/a/text()")[0]  # legal representative
            company_status = tr_list[0].xpath("td[4]/text()")[0]  # business status
            company_start_date = tr_list[1].xpath("td[2]/text()")[0]  # date of establishment
            company_zhuce = tr_list[2].xpath("td[2]/div/text()")[0]  # registered capital
            company_shijiao = tr_list[3].xpath("td[2]/text()")[0]  # paid-in capital
            gongshanghao = tr_list[3].xpath("td[4]/text()")[0]  # business registration number
            xinyong_code = tr_list[4].xpath("td[2]/span/span/text()")[0]  # unified social credit code
            nashuirenshibiehao = tr_list[4].xpath("td[4]/span/span/text()")[0]  # taxpayer identification number
            zhuzhijigou_code = tr_list[4].xpath("td[6]/span/span/text()")[0]  # organization code
            yingyeqixian = tr_list[5].xpath('td[2]/span/text()')[0].replace(' ', '')  # business term
            people_zizi = tr_list[5].xpath('td[4]/text()')[0]  # taxpayer qualification
            check_date = tr_list[5].xpath('td[6]/text()')[0]  # approval date
            leixing = tr_list[6].xpath('td[2]/text()')[0]  # company type
            hangye = tr_list[6].xpath('td[4]/text()')[0]  # industry
            people_number = tr_list[6].xpath('td[6]/text()')[0]  # staff size
            canbaorenshu = tr_list[7].xpath('td[2]/text()')[0]  # number of insured employees
            dengjijiguan = tr_list[7].xpath('td[4]/text()')[0]  # registration authority
            old_name = tr_list[8].xpath("td[2]//span[@class='copy-info-box']/span/text()")[0]  # former name
            dizhi = tr_list[9].xpath('td[2]/span/span/span/text()')[0]  # registered address
            fanwei = tr_list[10].xpath('td[2]/span/text()')[0]  # business scope
            # Fields are written as "label:value" pairs separated by \x01
            head_content = (
                "法定代表人:{}\x01公司名:{}\x01经营状态:{}\x01成立日期:{}\x01注册资本:{}\x01实缴资本:{}\x01工商注册号:{}\x01统一信用代码:{}\x01纳税人识别号:{}\x01组织机构代码:{}"
                "\x01营业期限:{}\x01纳税人资质:{}\x01核准日期:{}\x01企业类型:{}\x01行业:{}\x01人员规模:{}\x01参保人数:{}\x01登记机关:{}\x01曾用名:{}\x01"
                "注册地址:{}\x01经营范围:{}"
            ).format(people_name, company_name, company_status, company_start_date, company_zhuce, company_shijiao,
                     gongshanghao, xinyong_code, nashuirenshibiehao, zhuzhijigou_code, yingyeqixian, people_zizi, check_date,
                     leixing, hangye, people_number, canbaorenshu, dengjijiguan, old_name, dizhi, fanwei)
            print(head_content, file=self.fp)
        except Exception as e:
            print(self.response)
            print("Company {}: failed to extract the basic information header".format(self.company_id))
            raise Exception()  # raise on purpose so the caller treats this company as failed

    def kaiting(self):
        # Court session announcements (开庭公告)
        print("开庭公告", file=self.fp)
        kt_ult = []
        tree_html = self.tree_html
        try:
            kt_list = tree_html.xpath('//*[@id="_container_announcementcourt"]/table/tbody/tr')
            for tr in kt_list:  # an empty list simply produces no rows
                tds = tr.xpath("td")
                court_order = tds[0].xpath("text()")[0]  # row number
                court_date = tds[1].xpath("text()")[0]  # hearing date
                court_num = tds[2].xpath("span/text()")[0]  # case number
                court_reason = tds[3].xpath("span/text()")[0]  # cause of action
                court_sta_list = [div.xpath("string(.)") for div in tds[4].xpath("div")]
                court_status = " ".join(court_sta_list)  # role in the case
                court_law = tds[5].xpath("span/text()")[0]  # court
                kt_ult.append("序号:{}\x01开庭日期:{}\x01案号:{}\x01案由:{}\x01案件身份:{}\x01审理法院:{}".format(
                    court_order, court_date, court_num, court_reason, court_status, court_law))
        except Exception as e:
            print("Company {}: could not parse a court session announcement row".format(self.company_id), e)
            raise Exception("")
        for one_ult in kt_ult:
            print(one_ult, file=self.fp)

    def lawsuitwhm(self):
        # Lawsuits (法律诉讼); only the company ID in the URL needs to change
        company_id = self.company_id
        print("法律诉讼", file=self.fp)
        for pg_num in range(1, 2):  # only page 1 is fetched here; use range(1, 11) to crawl 10 pages
            ss_ult = []
            # This endpoint also needs the Cookie of the logged-in data page
            ss_url = 'https://www.tianyancha.com/pagination/lawsuit.xhtml?TABLE_DIM_NAME=manageDangerous&ps=10&pn={}&id={}'.format(
                pg_num, company_id)
            ss_headers = {
                'User-Agent': random.choice(self.User_agents),
                'Cookie': self.cookie,
                'Connection': 'keep-alive',
                'Accept-Encoding': 'gzip, deflate, br',
                'Accept-Language': 'zh-CN,zh;q=0.9',
                'Host': 'www.tianyancha.com',
                'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',
            }
            response = requests.get(url=ss_url, headers=ss_headers, allow_redirects=False).content.decode()
            if "抱歉,没有找到相关信息,请更换关键词重试" in response:  # "no results" marker
                break
            ss_tree = etree.HTML(response)
            ss_list = ss_tree.xpath('//tbody/tr')
            if len(ss_list) == 0:
                break
            for tr in ss_list:
                try:
                    tds = tr.xpath("td")
                    lawsuit_order = tds[0].xpath("text()")[0]  # row number
                    lawsuit_name = tds[1].xpath("text()")[0]  # case name
                    lawsuit_reason = tds[2].xpath("span/text()")[0]  # cause of action
                    lawsuit_sta_list = [span.xpath("string(.)") for span in tds[3].xpath("div/div/div/span")]
                    lawsuit_status = "".join(lawsuit_sta_list)  # role in this case
                    lawsuit_result = tds[4].xpath("div/div/text()")[0]  # judgment result
                    lawsuit_result = lawsuit_result.replace('\n', '').replace(' ', '').replace('\r', '')
                    lawsuit_money = tds[5].xpath("span/text()")[0]  # amount involved
                    ss_ult.append("序号:{}\x01案件名称:{}\x01案由:{}\x01在本案中身份:{}\x01裁判结果:{}\x01案件金额:{}".format(
                        lawsuit_order, lawsuit_name, lawsuit_reason, lawsuit_status, lawsuit_result, lawsuit_money))
                except Exception as e:
                    print("Company {}: could not parse a lawsuit row, page {}".format(self.company_id, pg_num), e)
                    raise Exception("")
            for one_ult in ss_ult:
                print(one_ult, file=self.fp)

    def fayuangonggao(self):
        # Court notices (法院公告)
        print("法院公告", file=self.fp)
        gonggao_ult = []
        gonggao_tree = self.tree_html
        try:
            gonggao_list = gonggao_tree.xpath('//*[@id="_container_court"]/div/table/tbody/tr')
            for tr in gonggao_list:
                tds = tr.xpath("td")
                gg_order = tds[0].xpath("text()")[0]  # row number
                gg_date = tds[1].xpath("text()")[0]  # publication date
                gg_num = tds[2].xpath("text()")[0]  # case number
                gg_reason = tds[3].xpath("text()")[0]  # cause of action
                status_parts = [div.xpath("string(.)") for div in tds[4].xpath("div")]
                gg_status = "\x01".join(status_parts)  # role in the case
                gg_type = tds[5].xpath("text()")[0]  # notice type
                gg_law = tds[6].xpath("text()")[0]  # court
                gonggao_ult.append("序号:{}\x01刊登日期:{}\x01案号:{}\x01案由:{}\x01案件身份:{}\x01公告类型:{}\x01法院:{}".format(
                    gg_order, gg_date, gg_num, gg_reason, gg_status, gg_type, gg_law))
        except Exception as e:
            print("Company {}: could not parse a court notice row".format(self.company_id), e)
            raise Exception("")
        for one_ult in gonggao_ult:
            print(one_ult, file=self.fp)

    def beizhixing(self):
        # Judgment debtor records (被执行人)
        print("被执行人", file=self.fp)
        company_id = self.company_id
        for pg_num in range(1, 2):  # only page 1 is fetched here
            zhixingren_ult = []
            url = 'https://www.tianyancha.com/pagination/zhixing.xhtml?TABLE_DIM_NAME=manageDangerous&ps=10&pn={}&id={}'.format(
                pg_num, company_id)
            headers = {
                'User-Agent': random.choice(self.User_agents),
                'Cookie': self.cookie,  # must be the Cookie of the data page on this computer
                'Connection': 'keep-alive',
                'Accept-Encoding': 'gzip, deflate, br',
                'Accept-Language': 'zh-CN,zh;q=0.9',
                'Host': 'www.tianyancha.com',
                'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',
            }
            response = requests.get(url=url, headers=headers, allow_redirects=False).content.decode()
            if "抱歉,没有找到相关信息,请更换关键词重试" in response:  # "no results" marker
                break
            # print(response)
            zhixingren_tree = etree.HTML(response)
            try:
                zhixingren_list = zhixingren_tree.xpath('//tbody/tr')
            except Exception as e:
                break
            if len(zhixingren_list) == 0:
                break
            for tr in zhixingren_list:
                try:
                    tds = tr.xpath("td")
                    zhixing_order = tds[0].xpath("text()")[0]  # row number
                    zhixing_date = tds[1].xpath("text()")[0]  # filing date
                    zhixing_num = tds[2].xpath("text()")[0]  # case number
                    zhixing_money = tds[3].xpath("text()")[0]  # amount under enforcement
                    zhixing_lawer = tds[4].xpath("text()")[0]  # enforcing court
                    zhixingren_ult.append("序号:{}\x01立案日期:{}\x01案号:{}\x01执行标的:{}\x01执行法院:{}".format(
                        zhixing_order, zhixing_date, zhixing_num, zhixing_money, zhixing_lawer))
                except Exception as e:
                    print("Company {}: could not parse a judgment debtor row, page {}".format(self.company_id, pg_num), e)
                    raise Exception("")
            for elm in zhixingren_ult:
                print(elm, file=self.fp)

    def lian_message(self):
        # Case filing information (立案信息)
        print("立案信息", file=self.fp)
        lian_ult = []
        lian_tree = self.tree_html
        try:
            lian_list = lian_tree.xpath('//*[@id="_container_courtRegister"]/table/tbody/tr')
            for tr in lian_list:
                tds = tr.xpath("td")
                register_order = tds[0].xpath("text()")[0]  # row number
                register_date = tds[1].xpath("text()")[0]  # filing date
                register_num = tds[2].xpath("text()")[0]  # case number
                status_parts = [div.xpath("string(.)") for div in tds[3].xpath("div")]
                register_status = "\x01".join(status_parts)  # role in the case
                register_law = tds[4].xpath("text()")[0]  # court
                lian_ult.append("序号:{}\x01立案日期:{}\x01案号:{}\x01案件身份:{}\x01法院:{}".format(
                    register_order, register_date, register_num, register_status, register_law))
        except Exception as e:
            print("Company {}: could not parse a case filing row".format(self.company_id), e)
            raise Exception("")
        for one_ult in lian_ult:
            print(one_ult, file=self.fp)

    def xingzheng(self):
        # Administrative penalties (行政处罚)
        print("行政处罚", file=self.fp)
        company_id = self.company_id
        for pg_num in range(1, 2):  # only page 1 is fetched here
            xingzheng_ult = []
            url = 'https://www.tianyancha.com/pagination/mergePunishCount.xhtml?TABLE_DIM_NAME=manageDangerous&ps=10&pn={}&id={}'.format(
                pg_num, company_id)
            headers = {
                'User-Agent': random.choice(self.User_agents),
                'Cookie': self.cookie,  # must be the Cookie of the data page on this computer
                'Connection': 'keep-alive',
                'Accept-Encoding': 'gzip, deflate, br',
                'Accept-Language': 'zh-CN,zh;q=0.9',
                'Host': 'www.tianyancha.com',
                'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',
            }
            response = requests.get(url=url, headers=headers, allow_redirects=False).content.decode()
            if "抱歉,没有找到相关信息,请更换关键词重试" in response:  # "no results" marker
                break
            # print(response)
            xingzheng_tree = etree.HTML(response)
            try:
                xingzheng_list = xingzheng_tree.xpath('//tbody/tr')
            except Exception as e:
                break
            if len(xingzheng_list) == 0:
                break
            for tr in xingzheng_list:
                try:
                    tds = tr.xpath("td")
                    penalty_order = tds[0].xpath("text()")[0]  # row number
                    penalty_date = tds[1].xpath("text()")[0]  # penalty date
                    penalty_books = tds[2].xpath("div/text()")[0]  # decision document number
                    penalty_reason = tds[3].xpath("div/div/text()")[0]  # reason for the penalty
                    penalty_result = tds[4].xpath("div/div/text()")[0]  # penalty result
                    penalty_unit = tds[5].xpath("text()")[0]  # penalizing authority
                    penalty_source = tds[6].xpath("span/text()")[0]  # data source
                    xingzheng_ult.append(
                        "序号:{}\x01处罚日期:{}\x01决定文书号:{}\x01处罚事由:{}\x01处罚结果:{}\x01处罚单位:{}\x01数据来源:{}".format(
                            penalty_order, penalty_date, penalty_books, penalty_reason, penalty_result, penalty_unit, penalty_source))
                except Exception as e:
                    print("Company {}: could not parse an administrative penalty row, page {}".format(self.company_id, pg_num), e)
                    raise Exception("")
            for elm in xingzheng_ult:
                print(elm, file=self.fp)

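    def body_run(self):
        self.get_start_crawl()  # basic information
        self.kaiting()  # court session announcements
        self.lawsuitwhm()  # lawsuits
        self.fayuangonggao()  # court notices
        self.beizhixing()  # judgment debtor records
        self.lian_message()  # case filing information
        self.xingzheng()  # administrative penalties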

if __name__ == '__main__':
    start_time = time.time()  # start time
    # Some company IDs
    # company_list = ["500674557", "844565574", "2319114574", "2317302446", "789235759", "2964355333"]
    # company_list = ["500674557"]
    with open("zSD_company_id.txt", "r", encoding="utf-8") as f:
        content = f.readlines()
    var_list = [car_id.strip() for car_id in content]
    company_list = list(set(var_list))
    company_list.sort(key=var_list.index)  # de-duplicate without changing the original relative order
    print(len(company_list), company_list)
    company_index = 0
    now_index = 0
    # Cookie value of the logged-in session, copied from the browser
    cookie = "PASTE_YOUR_LOGGED_IN_COOKIE_HERE"
    for company_id in company_list:
        company_index += 1  # counter
        path = "TY_{}.txt".format(company_id)
        if os.path.isfile(path):  # the output file exists
            if int(os.path.getsize(path)) == 0:  # empty file: delete it
                os.remove(path)
        if os.path.isfile(path):  # this company has already been crawled
            print("TY_{}.txt pass".format(company_id))
        else:
            start2_time = time.time()
            now_index += 1
            fp = open(path, "w", encoding="utf-8")  # create the output file (or truncate an existing one)
            try:
                ty = TianYan(company_id, fp, cookie)
                ty.body_run()
            except Exception as e:  # crawling this company failed: delete the unfinished txt
                fp.close()
                os.remove(path)
                print("Company #{} - {} - failed to read part of the information, time:{}".format(company_index, company_id, time.time() - start_time))
            else:
                fp.close()
                print("No.{} - company #{} - {} - successful! it cost time:{}".format(now_index, company_index, company_id, time.time() - start2_time))
                time.sleep(10 + random.random() * 10)  # random interval of 10 to 20 seconds
    print("{}-files cost time:{}".format(len(company_list), time.time() - start_time))

That is basically it.
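One small note on the script: it de-duplicates the ID list with `set` and then re-sorts by original position via `var_list.index`. A shorter way to get the same result, sketched here, relies on dicts preserving insertion order (Python 3.7+). `dedupe_keep_order` is a name chosen for this example.

```python
def dedupe_keep_order(items):
    """Remove duplicates while keeping the first occurrence of each item in place."""
    return list(dict.fromkeys(items))


def dedupe_original(items):
    """The approach used in the script: set() followed by a sort on original position."""
    unique = list(set(items))
    unique.sort(key=items.index)
    return unique
```

Both functions return the same list; the first avoids the repeated `index` lookups, which matters only for long ID files.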

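Some further discussion:

Why use proxy IPs? Because some websites take anti-crawler measures, such as counting how many requests an IP makes per unit of time and treating it as a crawler if there are too many. But we tried proxy IPs in the steps above too, so why were we still detected? Look at it from another angle: an account crawls data on one particular network, is flagged as a crawler, and gets the image CAPTCHA. We then start over on a different LAN and log in to the same account by hand. The network has changed and so has the IP, yet the image CAPTCHA still appears. Why? My own view is that it comes down to the account's login information carried in the Cookie: between the two situations the IP differs and only the account behind the Cookie is the same. So Tianyancha very likely bases its anti-crawling on the account's login Cookie. Proxy quality may also play a part (transparent, anonymous, or high-anonymity proxies); if circumstances allow, high-anonymity proxies are the better choice.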
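To make the reasoning concrete, here is a minimal sketch of the kind of per-identity request counting described above. It is purely hypothetical and says nothing about how Tianyancha is actually implemented; the class name, the limits, and the keys are all assumptions. The point is only that if the counter is keyed by account rather than by IP, changing the IP does not reset it.

```python
from collections import defaultdict, deque


class RequestCounter:
    """Sliding-window counter: at most max_requests per window_seconds for each key."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # key -> timestamps of recent requests

    def allow(self, key: str, now: float) -> bool:
        """Record a request for key at time now; return False if it exceeds the limit."""
        hits = self._hits[key]
        # Drop timestamps that have fallen out of the window
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


# Keyed by account: the same account is limited even if its IP changes.
counter = RequestCounter(max_requests=2, window_seconds=60)
first = counter.allow("account-A", now=0)    # request sent from IP 1
second = counter.allow("account-A", now=1)   # request sent from IP 2
third = counter.allow("account-A", now=2)    # request sent from IP 3, still the same account
```

Here `first` and `second` are allowed and `third` is rejected, regardless of which IP each request came from.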

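Two more source files are attached below for testing:

Source 1: no Cookie, with or without an IP proxy (you need to supply the proxy IP address yourself):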

import os
import random
import sys
import time

import requests
from lxml import etree


class TianYan:
    def __init__(self, company_id, fp, new_ip):
        self.fp = fp
        self.company_id = company_id
        self.url = "https://www.tianyancha.com/company/{}".format(company_id)
        self.User_agents = [

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.163 Safari/535.1",

"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50",

"Opera/9.80 (Windows NT 6.1; U; zh-cn) Presto/2.9.168 Version/11.50",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 2.0.50727; SLCC2; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; Tablet PC 2.0; .NET4.0E)",

"Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; InfoPath.3)",

"Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; GTB7.0)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)",

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)",

"Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.3 (KHTML, like Gecko) Chrome/6.0.472.33 Safari/534.3 SE 2.X MetaSr 1.0",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E)",

"Mozilla/5.0 (Windows NT 6.1) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/13.0.782.41 Safari/535.1 QQBrowser/6.9.11079.201",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E) QQBrowser/6.9.11079.201",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0)",

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.93 Safari/537.36",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60",

        ]
        # No Cookie is sent in this variant
        self.headers = {
            'User-Agent': random.choice(self.User_agents),
            'Referer': "https://www.tianyancha.com/login?from=https%3A%2F%2Fwww.tianyancha.com%2Fsearch%3Fkey%3D%25E9%2583%2591%25E5%25B7%259E%25E6%2583%25A0%25E5%25B7%259E%25E6%25B1%25BD%25E8%25BD%25A6%25E8%25BF%2590%25E8%25BE%2593%25E6%259C%2589%25E9%2599%2590%25E5%2585%25AC%25E5%258F%25B8",
            'Connection': 'keep-alive',
            'Accept-Encoding': 'gzip, deflate, br',
            'Accept-Language': 'zh-CN,zh;q=0.9',
            'Host': 'www.tianyancha.com',
            'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',
            'Upgrade-Insecure-Requests': '1'
        }
        if new_ip:  # new_ip is a "host:port" string; pass an empty value to run without a proxy
            ip_host = new_ip.split(":")[0]
            ip_port = new_ip.split(":")[1]
            proxyMetas = "https://{}:{}".format(ip_host, ip_port)
            self.ip_proxies = {"https": proxyMetas}
        else:
            self.ip_proxies = None
        try:
            response = self.get_html()
        except Exception as e:
            print("Company {}: failed to read the page; the IP or the login Cookie may be the problem".format(self.company_id))
            # raise Exception()
            sys.exit(0)
        if "快捷登录与短信登录" in response:  # marker text of the login page
            print("Failed to crawl basic info, login required. company_id:{}".format(self.company_id))
            sys.exit(0)  # ※ terminate the program
        print(response)
        self.response = response
        self.tree_html = etree.HTML(response)

    def get_html(self):
        # proxies=None behaves the same as omitting the argument
        response = requests.get(self.url, headers=self.headers, proxies=self.ip_proxies)
        return response.content.decode()

    def get_start_crawl(self):  # basic information
        # The try/except wrapper is commented out in this variant, so parsing errors propagate to the caller
        tree_html = self.tree_html
        tr_list = tree_html.xpath('//*[@id="_container_baseInfo"]/table/tbody/tr')
        # company_name = tree_html.xpath("//div[@class='box -company-box ']/div[@class='content']/div[@class='header']/span/span/h1/text()")[0]  # company name
        # company_name = tree_html.xpath("//div[@class='container company-header-block ']/div[3]/div[@class='content']/div[@class='header']/span/span/h1/text()")[0]  # company name ※ locator issue
        company_name = "111"  # placeholder value; the company-name lookups above are disabled
        people_name = tr_list[0].xpath("td[2]//div[@class='humancompany']/div[@class='name']/a/text()")[0]  # legal representative
        company_status = tr_list[0].xpath("td[4]/text()")[0]  # business status
        company_start_date = tr_list[1].xpath("td[2]/text()")[0]  # date of establishment
        company_zhuce = tr_list[2].xpath("td[2]/div/text()")[0]  # registered capital
        company_shijiao = tr_list[3].xpath("td[2]/text()")[0]  # paid-in capital
        gongshanghao = tr_list[3].xpath("td[4]/text()")[0]  # business registration number
        xinyong_code = tr_list[4].xpath("td[2]/span/span/text()")[0]  # unified social credit code
        nashuirenshibiehao = tr_list[4].xpath("td[4]/span/span/text()")[0]  # taxpayer identification number
        zhuzhijigou_code = tr_list[4].xpath("td[6]/span/span/text()")[0]  # organization code
        yingyeqixian = tr_list[5].xpath('td[2]/span/text()')[0].replace(' ', '')  # business term
        people_zizi = tr_list[5].xpath('td[4]/text()')[0]  # taxpayer qualification
        check_date = tr_list[5].xpath('td[6]/text()')[0]  # approval date
        leixing = tr_list[6].xpath('td[2]/text()')[0]  # company type
        hangye = tr_list[6].xpath('td[4]/text()')[0]  # industry
        people_number = tr_list[6].xpath('td[6]/text()')[0]  # staff size
        canbaorenshu = tr_list[7].xpath('td[2]/text()')[0]  # number of insured employees
        dengjijiguan = tr_list[7].xpath('td[4]/text()')[0]  # registration authority
        old_name = tr_list[8].xpath("td[2]//span[@class='copy-info-box']/span/text()")[0]  # former name
        dizhi = tr_list[9].xpath('td[2]/span/span/span/text()')[0]  # registered address
        fanwei = tr_list[10].xpath('td[2]/span/text()')[0]  # business scope
        # Fields are written as "label:value" pairs separated by \x01
        head_content = (
            "法定代表人:{}\x01公司名:{}\x01经营状态:{}\x01成立日期:{}\x01注册资本:{}\x01实缴资本:{}\x01工商注册号:{}\x01统一信用代码:{}\x01纳税人识别号:{}\x01组织机构代码:{}"
            "\x01营业期限:{}\x01纳税人资质:{}\x01核准日期:{}\x01企业类型:{}\x01行业:{}\x01人员规模:{}\x01参保人数:{}\x01登记机关:{}\x01曾用名:{}\x01"
            "注册地址:{}\x01经营范围:{}"
        ).format(people_name, company_name, company_status, company_start_date, company_zhuce, company_shijiao,
                 gongshanghao, xinyong_code, nashuirenshibiehao, zhuzhijigou_code, yingyeqixian, people_zizi, check_date,
                 leixing, hangye, people_number, canbaorenshu, dengjijiguan, old_name, dizhi, fanwei)
        print(head_content, file=self.fp)

    def kaiting(self):
        # Court session announcements (开庭公告)
        print("开庭公告", file=self.fp)
        kt_ult = []
        tree_html = self.tree_html
        try:
            kt_list = tree_html.xpath('//*[@id="_container_announcementcourt"]/table/tbody/tr')
            for tr in kt_list:  # an empty list simply produces no rows
                tds = tr.xpath("td")
                court_order = tds[0].xpath("text()")[0]  # row number
                court_date = tds[1].xpath("text()")[0]  # hearing date
                court_num = tds[2].xpath("span/text()")[0]  # case number
                court_reason = tds[3].xpath("span/text()")[0]  # cause of action
                court_sta_list = [div.xpath("string(.)") for div in tds[4].xpath("div")]
                court_status = " ".join(court_sta_list)  # role in the case
                court_law = tds[5].xpath("span/text()")[0]  # court
                kt_ult.append("序号:{}\x01开庭日期:{}\x01案号:{}\x01案由:{}\x01案件身份:{}\x01审理法院:{}".format(
                    court_order, court_date, court_num, court_reason, court_status, court_law))
        except Exception as e:
            print("Company {}: could not parse a court session announcement row".format(self.company_id), e)
            raise Exception("")
        for one_ult in kt_ult:
            print(one_ult, file=self.fp)

    def lawsuitwhm(self):
        # Lawsuits (法律诉讼); only the company ID in the URL needs to change
        company_id = self.company_id
        print("法律诉讼", file=self.fp)
        for pg_num in range(1, 2):  # only page 1 is fetched here; use range(1, 11) to crawl 10 pages
            ss_ult = []
            ss_url = 'https://www.tianyancha.com/pagination/lawsuit.xhtml?TABLE_DIM_NAME=manageDangerous&ps=10&pn={}&id={}'.format(
                pg_num, company_id)
            # No 'Cookie' header: this variant never sets self.cookie
            ss_headers = {
                'User-Agent': random.choice(self.User_agents),
                'Connection': 'keep-alive',
                'Accept-Encoding': 'gzip, deflate, br',
                'Accept-Language': 'zh-CN,zh;q=0.9',
                'Host': 'www.tianyancha.com',
                'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',
            }
            # proxies=None behaves the same as omitting the argument
            response = requests.get(url=ss_url, headers=ss_headers, allow_redirects=False,
                                    proxies=self.ip_proxies).content.decode()
            if "抱歉,没有找到相关信息,请更换关键词重试" in response:  # "no results" marker
                break
            ss_tree = etree.HTML(response)
            ss_list = ss_tree.xpath('//tbody/tr')
            if len(ss_list) == 0:
                break
            for tr in ss_list:
                try:
                    tds = tr.xpath("td")
                    lawsuit_order = tds[0].xpath("text()")[0]  # row number
                    lawsuit_name = tds[1].xpath("text()")[0]  # case name
                    lawsuit_reason = tds[2].xpath("span/text()")[0]  # cause of action
                    lawsuit_sta_list = [span.xpath("string(.)") for span in tds[3].xpath("div/div/div/span")]
                    lawsuit_status = "".join(lawsuit_sta_list)  # role in this case
                    lawsuit_result = tds[4].xpath("div/div/text()")[0]  # judgment result
                    lawsuit_result = lawsuit_result.replace('\n', '').replace(' ', '').replace('\r', '')
                    lawsuit_money = tds[5].xpath("span/text()")[0]  # amount involved
                    ss_ult.append("序号:{}\x01案件名称:{}\x01案由:{}\x01在本案中身份:{}\x01裁判结果:{}\x01案件金额:{}".format(
                        lawsuit_order, lawsuit_name, lawsuit_reason, lawsuit_status, lawsuit_result, lawsuit_money))
                except Exception as e:
                    print("Company {}: could not parse a lawsuit row, page {}".format(self.company_id, pg_num), e)
                    raise Exception("")
            for one_ult in ss_ult:
                print(one_ult, file=self.fp)

    def fayuangonggao(self):
        # Court notices (法院公告)
        print("法院公告", file=self.fp)
        gonggao_ult = []
        gonggao_tree = self.tree_html
        try:
            gonggao_list = gonggao_tree.xpath('//*[@id="_container_court"]/div/table/tbody/tr')
            for tr in gonggao_list:
                tds = tr.xpath("td")
                gg_order = tds[0].xpath("text()")[0]  # row number
                gg_date = tds[1].xpath("text()")[0]  # publication date
                gg_num = tds[2].xpath("text()")[0]  # case number
                gg_reason = tds[3].xpath("text()")[0]  # cause of action
                status_parts = [div.xpath("string(.)") for div in tds[4].xpath("div")]
                gg_status = "\x01".join(status_parts)  # role in the case
                gg_type = tds[5].xpath("text()")[0]  # notice type
                gg_law = tds[6].xpath("text()")[0]  # court
                gonggao_ult.append("序号:{}\x01刊登日期:{}\x01案号:{}\x01案由:{}\x01案件身份:{}\x01公告类型:{}\x01法院:{}".format(
                    gg_order, gg_date, gg_num, gg_reason, gg_status, gg_type, gg_law))
        except Exception as e:
            print("Company {}: could not parse a court notice row".format(self.company_id), e)
            raise Exception("")
        for one_ult in gonggao_ult:
            print(one_ult, file=self.fp)

    def beizhixing(self):
        # Judgment debtor records (被执行人)
        print("被执行人", file=self.fp)
        company_id = self.company_id
        for pg_num in range(1, 2):  # only page 1 is fetched here
            zhixingren_ult = []
            url = 'https://www.tianyancha.com/pagination/zhixing.xhtml?TABLE_DIM_NAME=manageDangerous&ps=10&pn={}&id={}'.format(
                pg_num, company_id)
            # No 'Cookie' header: this variant never sets self.cookie
            headers = {
                'User-Agent': random.choice(self.User_agents),
                'Connection': 'keep-alive',
                'Accept-Encoding': 'gzip, deflate, br',
                'Accept-Language': 'zh-CN,zh;q=0.9',
                'Host': 'www.tianyancha.com',
                'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',
            }
            # proxies=None behaves the same as omitting the argument
            response = requests.get(url=url, headers=headers, allow_redirects=False,
                                    proxies=self.ip_proxies).content.decode()
            if "抱歉,没有找到相关信息,请更换关键词重试" in response:  # "no results" marker
                break
            # print(response)
            zhixingren_tree = etree.HTML(response)
            try:
                zhixingren_list = zhixingren_tree.xpath('//tbody/tr')
            except Exception as e:
                break
            if len(zhixingren_list) == 0:
                break
            for tr in zhixingren_list:
                try:
                    tds = tr.xpath("td")
                    zhixing_order = tds[0].xpath("text()")[0]  # row number
                    zhixing_date = tds[1].xpath("text()")[0]  # filing date
                    zhixing_num = tds[2].xpath("text()")[0]  # case number
                    zhixing_money = tds[3].xpath("text()")[0]  # amount under enforcement
                    zhixing_lawer = tds[4].xpath("text()")[0]  # enforcing court
                    zhixingren_ult.append("序号:{}\x01立案日期:{}\x01案号:{}\x01执行标的:{}\x01执行法院:{}".format(
                        zhixing_order, zhixing_date, zhixing_num, zhixing_money, zhixing_lawer))
                except Exception as e:
                    print("Company {}: could not parse a judgment debtor row, page {}".format(self.company_id, pg_num), e)
                    raise Exception("")
            for elm in zhixingren_ult:
                print(elm, file=self.fp)

def lian_message(self):

# 立案信息

print("立案信息", file=self.fp)

lian_ult = []

lian_tree = self.tree_html

try:

lian_list = lian_tree.xpath('//*[@id="_container_courtRegister"]/table/tbody/tr')

if len(lian_list) != 0:

for tr in lian_list:

tds = tr.xpath("td")

register_order = tds[0].xpath("text()")[0] # 序号

register_date = tds[1].xpath("text()")[0] # 立案日期

register_num = tds[2].xpath("text()")[0] # 案号

register_sta = tds[3].xpath("div")

register_status = []

for i in register_sta:

register_status.append(i.xpath("string(.)"))

register_status = "\x01".join(register_status) # 案件身份

register_law = tds[4].xpath("text()")[0] # 法院

lian_ult.append(

"序号:{}\x01立案日期:{}\x01案号:{}\x01案件身份:{}\x01法院:{}".format(register_order, register_date,

register_num, register_status,

register_law))

# else:

# print("公司{}-立案信息-读取结果为空".format(self.company_id))

except Exception as e:

print("公司{}此条-立案信息-信息未能解析".format(self.company_id), e)

raise Exception("")

for one_ult in lian_ult:

print(one_ult, file=self.fp)

def xingzheng(self):

# 行政处罚

print("行政处罚", file=self.fp)

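company_id = self.company_id

xingzheng_ult = [] # initialise before the loop so the final write-out never hits an undefined name when the loop breaks early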

for pg_num in range(1, 2):

url = 'https://www.tianyancha.com/pagination/mergePunishCount.xhtml?TABLE_DIM_NAME=manageDangerous&ps=10&pn={}&id={}'.format(

pg_num, company_id)

headers = {

'User-Agent': random.choice(self.User_agents), # the Cookie below must be replaced with the Cookie from the logged-in data page on your own machine

'Cookie': self.cookie,

'Connection': 'keep-alive',

'Accept-Encoding': 'gzip, deflate, br',

'Accept-Language': 'zh-CN,zh;q=0.9',

'Host': 'www.tianyancha.com',

'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',

}

if self.ip_proxies:

response = requests.get(url=url, headers=headers, allow_redirects=False,proxies=self.ip_proxies).content.decode()

else:

response = requests.get(url=url, headers=headers, allow_redirects=False).content.decode()

if "抱歉,没有找到相关信息,请更换关键词重试" in response:

break

# print(response)

xingzheng_ult = []

xingzheng_tree = etree.HTML(response)

try:

xingzheng_list = xingzheng_tree.xpath('//tbody/tr')

except Exception as e:

break

if len(xingzheng_list) != 0:

for tr in xingzheng_list:

try:

tds = tr.xpath("td")

penalty_order = tds[0].xpath("text()")[0] # 序号

penalty_date = tds[1].xpath("text()")[0] # 处罚日期

penalty_books = tds[2].xpath("div/text()")[0] # 决定文书号

penalty_reason = tds[3].xpath("div/div/text()")[0] # 处罚事由

penalty_result = tds[4].xpath("div/div/text()")[0] # 处罚结果

penalty_unit = tds[5].xpath("text()")[0] # 处罚单位

penalty_source = tds[6].xpath("span/text()")[0] # 数据来源

# print(penalty_date,penalty_books,penalty_reason,penalty_result,penalty_unit,penalty_source)

xingzheng_ult.append(

"序号:{}\x01处罚日期:{}\x01决定文书号:{}\x01处罚事由:{}\x01处罚结果:{}\x01处罚单位:{}\x01数据来源:{}".format(

penalty_order, penalty_date, penalty_books, penalty_reason, penalty_result,

penalty_unit, penalty_source))

except Exception as e:

print("公司{}-行政处罚-无法解析。第{}页".format(self.company_id, pg_num), e)

raise Exception("")

else:

break

for elm in xingzheng_ult:

print(elm, file=self.fp)

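def body_run(self):

self.get_start_crawl() # basic information

self.kaiting() # court hearing announcements

self.lawsuitwhm() # lawsuits

self.fayuangonggao() # court announcements

self.beizhixing() # judgment debtors (parties subject to enforcement)

self.lian_message() # case filing information

self.xingzheng() # administrative penalties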

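def change_ip():
    # The URL below is the proxy-IP API address I requested from 天启HTTP (tianqiip).
    # The IP quota on my account is used up, so register your own account; sign-up
    # gives you some coins that can buy IPs with different lifetimes. Paste your own
    # API link in place of this one.
    url = "http://api.tianqiip.com/getip?secret=***&type=txt&num=1&time={}&port=2".format(3)  # lifetime 3 seconds; 3, 5, 10 or 15 are accepted
    response = requests.get(url).content.decode()
    res_ip = response.strip()
    return res_ip  # the new proxy address, as "ip:port"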

if __name__ == '__main__':
    start_time = time.time()  # overall start time

    # Sample company IDs
    # company_list = ["500674557", "844565574", "2319114574", "2317302446", "789235759", "2964355333"]
    # company_list = ["500674557"]
    with open("zSD_company_id.txt", "r", encoding="utf-8") as f:
        content = f.readlines()
    var_list = [car_id.strip() for car_id in content]
    company_list = list(dict.fromkeys(var_list))  # de-duplicate while keeping the original order
    print(len(company_list), company_list)

    company_index = 0
    for company_id in company_list:
        company_index += 1  # running count
        path = "TY_{}.txt".format(company_id)
        if os.path.isfile(path) and os.path.getsize(path) == 0:  # an empty file is left over from a failed run: delete it
            os.remove(path)
        if os.path.isfile(path):  # this company has already been crawled
            print("{} pass".format(path))
        else:
            start2_time = time.time()
            new_ip = None  # do not use a proxy IP
            # new_ip = change_ip()  # use a proxy IP
            print(new_ip)
            # Mode "w" creates the file, or truncates it if it already exists
            with open(path, "w", encoding="utf-8") as fp:
                ty = TianYan(company_id, fp, new_ip)
                ty.body_run()
            print("Company #{} ({}) successful! it cost time:{}".format(company_index, company_id,
                                                                         time.time() - start2_time))
            time.sleep(10 + random.random() * 2)  # random pause of 10 to 12 seconds

    print("{}-files cost time:{}".format(len(company_list), time.time() - start_time))
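The parsing methods in the listing above index every `xpath(...)[0]` result directly, so one empty table cell raises `IndexError` and the whole company is abandoned. The following is only a sketch of one way to soften that; `first_or_default` and `format_record` are hypothetical helpers that do not appear in the original code, and the sample row is made up for illustration.

```python
FIELD_SEP = "\x01"  # the same separator the scraper writes between fields


def first_or_default(values, default=""):
    """Return the first item of an xpath() result list, or `default` if the list is empty."""
    return values[0].strip() if values else default


def format_record(pairs):
    """Join (label, value) pairs into one output line, as the scraper's format() calls do."""
    return FIELD_SEP.join("{}:{}".format(label, value) for label, value in pairs)


# Stand-ins for what tds[i].xpath("text()") might return for one table row;
# the third cell is empty, which would break a plain [0] lookup.
row = [["1"], ["2021-12-01"], []]

line = format_record([
    ("序号", first_or_default(row[0])),
    ("立案日期", first_or_default(row[1])),
    ("案号", first_or_default(row[2], default="-")),
])
print(line.replace(FIELD_SEP, " | "))
```

Whether a missing field should become a placeholder or still abort the record is a design choice; the original code deliberately raises, which keeps incomplete records out of the output files.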

Source 2: with a Cookie, with or without a proxy IP (you need to replace the Cookie and fill in your own proxy API address):

import requests

from lxml import etree

import time

import sys

import random

import os

class TianYan:

def __init__(self, company_id, fp, cookie,new_ip):

self.fp = fp

self.company_id = company_id

self.url = "https://www.tianyancha.com/company/{}".format(company_id)

self.User_agents = [

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.163 Safari/535.1",

"Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50",

"Opera/9.80 (Windows NT 6.1; U; zh-cn) Presto/2.9.168 Version/11.50",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 2.0.50727; SLCC2; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; Tablet PC 2.0; .NET4.0E)",

"Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; InfoPath.3)",

"Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; GTB7.0)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)",

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)",

"Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.3 (KHTML, like Gecko) Chrome/6.0.472.33 Safari/534.3 SE 2.X MetaSr 1.0",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E)",

"Mozilla/5.0 (Windows NT 6.1) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/13.0.782.41 Safari/535.1 QQBrowser/6.9.11079.201",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E) QQBrowser/6.9.11079.201",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0)",

"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.93 Safari/537.36",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60",

]

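# Log in to Tianyancha first, open the company data page, and copy its Cookie here (the Cookie must belong to a session that is still logged in; if you log out and back in again in Chrome, update the Cookie)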

self.cookie = cookie

self.headers = {

'User-Agent': random.choice(self.User_agents),

'Cookie': self.cookie,

'Referer': "https://www.tianyancha.com/login?from=https%3A%2F%2Fwww.tianyancha.com%2Fsearch%3Fkey%3D%25E9%2583%2591%25E5%25B7%259E%25E6%2583%25A0%25E5%25B7%259E%25E6%25B1%25BD%25E8%25BD%25A6%25E8%25BF%2590%25E8%25BE%2593%25E6%259C%2589%25E9%2599%2590%25E5%2585%25AC%25E5%258F%25B8",

'Connection': 'keep-alive',

'Accept-Encoding': 'gzip, deflate, br',

'Accept-Language': 'zh-CN,zh;q=0.9',

'Host': 'www.tianyancha.com',

'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',

'Upgrade-Insecure-Requests': '1'

}

if new_ip:

ip_host = new_ip.split(":")[0]

ip_port = new_ip.split(":")[1]

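proxyMetas = "http://{}:{}".format(ip_host, ip_port) # assumption: the provider's proxies speak plain HTTP, so the proxy URL uses http:// even for HTTPS targets; an https:// proxy URL is a common cause of "Cannot connect to proxy" errors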

self.ip_proxies = {"https":proxyMetas}

else:

self.ip_proxies=None

try:

response = self.get_html()

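except Exception as e:

print("Failed to fetch the page for company {}; the IP or the login Cookie may be the cause".format(self.company_id), e)

# raise Exception()

sys.exit(1) # non-zero exit code signals the failure

if "快捷登录与短信登录" in response: # marker text of the login page; it must stay in Chinese to match the site

print("Failed to fetch basic information - login required, company_id:{}".format(self.company_id))

sys.exit(1) # ※ stop the program

# print(response) # replace the Cookie ※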

self.response = response

self.tree_html = etree.HTML(response)

def get_html(self):

if self.ip_proxies:

response = requests.get(self.url, headers=self.headers,proxies=self.ip_proxies) # whm

else:

response = requests.get(self.url, headers=self.headers) # whm

res = response.content.decode()

return res

def get_start_crawl(self): # 基本信息

tree_html = self.tree_html

try:

tr_list = tree_html.xpath('//*[@id="_container_baseInfo"]/table/tbody/tr')

# company_name = tree_html.xpath("//div[@class='box -company-box ']/div[@class='content']/div[@class='header']/span/span/h1/text()")[0] # 公司名

company_name = tree_html.xpath(

"//div[@class='container company-header-block ']/div[3]/div[@class='content']/div[@class='header']/span/span/h1/text()")[

0] # 公司名 ※ 定位问题

people_name = tr_list[0].xpath("td[2]//div[@class='humancompany']/div[@class='name']/a/text()")[0] # 法定代表人

company_status = tr_list[0].xpath("td[4]/text()")[0] # 经营状态

company_start_date = tr_list[1].xpath("td[2]/text()")[0] # 成立日期

company_zhuce = tr_list[2].xpath("td[2]/div/text()")[0] # 注册资本

company_shijiao = tr_list[3].xpath("td[2]/text()")[0] # 实缴资本

gongshanghao = tr_list[3].xpath("td[4]/text()")[0] # 工商注册号

xinyong_code = tr_list[4].xpath("td[2]/span/span/text()")[0] # 统一信用代码

nashuirenshibiehao = tr_list[4].xpath("td[4]/span/span/text()")[0] # 纳税人识别号

zhuzhijigou_code = tr_list[4].xpath("td[6]/span/span/text()")[0] # 组织机构代码

yingyeqixian = tr_list[5].xpath('td[2]/span/text()')[0].replace(' ', '') # 营业期限

people_zizi = tr_list[5].xpath('td[4]/text()')[0] # 纳税人资质

check_date = tr_list[5].xpath('td[6]/text()')[0] # 核准日期

leixing = tr_list[6].xpath('td[2]/text()')[0] # 企业类型

hangye = tr_list[6].xpath('td[4]/text()')[0] # 行业

people_number = tr_list[6].xpath('td[6]/text()')[0] # 人员规模

canbaorenshu = tr_list[7].xpath('td[2]/text()')[0] # 参保人数

dengjijiguan = tr_list[7].xpath('td[4]/text()')[0] # 登记机关

old_name = tr_list[8].xpath("td[2]//span[@class='copy-info-box']/span/text()")[0] # 曾用名

dizhi = tr_list[9].xpath('td[2]/span/span/span/text()')[0] # 注册地址

fanwei = tr_list[10].xpath('td[2]/span/text()')[0] # 经营范围

head_content = "法定代表人:{}\x01公司名:{}\x01经营状态:{}\x01成立日期:{}\x01注册资本:{}\x01实缴资本:{}\x01工商注册号:{}\x01统一信用代码:{}\x01纳税人识别号:{}\x01组织机构代码:{}" \

"\x01营业期限:{}\x01纳税人资质:{}\x01核准日期:{}\x01企业类型:{}\x01行业:{}\x01人员规模:{}\x01参保人数:{}\x01登记机关:{}\x01曾用名:{}\x01" \

"注册地址:{}\x01经营范围:{}".format(people_name, company_name, company_status, company_start_date,

company_zhuce, company_shijiao,

gongshanghao, xinyong_code, nashuirenshibiehao, zhuzhijigou_code,

yingyeqixian, people_zizi, check_date,

leixing, hangye, people_number, canbaorenshu, dengjijiguan,

old_name, dizhi, fanwei)

print(head_content, file=self.fp)

except Exception as e:

print(self.response)

print("Failed to extract the header (basic information) for company {}".format(self.company_id), e)

raise # re-raise the original exception so the caller sees the real cause

def kaiting(self):

# 开庭公告

print("开庭公告", file=self.fp)

kt_ult = []

tree_html = self.tree_html

try:

kt_list = tree_html.xpath('//*[@id="_container_announcementcourt"]/table/tbody/tr')

if kt_list: # xpath() returns a list, so a plain emptiness check is enough

for tr in kt_list:

tds = tr.xpath("td")

court_order = tds[0].xpath("text()")[0]

court_date = tds[1].xpath("text()")[0] # 开庭日期

court_num = tds[2].xpath("span/text()")[0] # 案号

court_reason = tds[3].xpath("span/text()")[0] # 案由

court_sta = tds[4].xpath("div")

court_sta_list = []

for i in court_sta:

court_sta_list.append(i.xpath("string(.)"))

court_status = " ".join(court_sta_list) # 案件身份

court_law = tds[5].xpath("span/text()")[0] # 审理法院

kt_ult.append(

"序号:{}\x01开庭日期:{}\x01案号:{}\x01案由:{}\x01案件身份:{}\x01审理法院:{}".format(court_order, court_date,

court_num, court_reason,

court_status, court_law))

# else:

# print("公司{}-开庭公告-读取结果为空".format(self.company_id))

except Exception as e:

print("公司{}此条-开庭公告-信息未能解析".format(self.company_id), e)

raise Exception("")

for one_ult in kt_ult:

print(one_ult, file=self.fp)

def lawsuitwhm(self):

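# Only the company ID needs to be substituted

company_id = self.company_id

print("法律诉讼", file=self.fp)

for pg_num in range(1, 2): # only page 1 is fetched here; widen to range(1, 11) to crawl 10 pages of lawsuits

ss_ult = []

# The lawsuit request also needs the Cookie from the logged-in data page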

ss_url = 'https://www.tianyancha.com/pagination/lawsuit.xhtml?TABLE_DIM_NAME=manageDangerous&ps=10&pn={}&id={}'.format(

pg_num, company_id)

ss_headers = {

'User-Agent': random.choice(self.User_agents),

'Cookie': self.cookie,

'Connection': 'keep-alive',

'Accept-Encoding': 'gzip, deflate, br',

'Accept-Language': 'zh-CN,zh;q=0.9',

'Host': 'www.tianyancha.com',

'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',

}

# ss_page_status = requests.get(url=ss_url, headers=ss_headers).status_code

# print(ss_page_status)

if self.ip_proxies:

response = requests.get(url=ss_url, headers=ss_headers, allow_redirects=False,proxies=self.ip_proxies).content.decode()

else:

response = requests.get(url=ss_url, headers=ss_headers, allow_redirects=False).content.decode()

if "抱歉,没有找到相关信息,请更换关键词重试" in response:

break

ss_tree = etree.HTML(response)

ss_list = ss_tree.xpath('//tbody/tr')

if len(ss_list) != 0:

for tr in ss_list:

try:

tds = tr.xpath("td")

lawsuit_order = tds[0].xpath("text()")[0]

lawsuit_name = tds[1].xpath("text()")[0] # 案件名称

lawsuit_reason = tds[2].xpath("span/text()")[0] # 案由

lawsuit_sta = tds[3].xpath("div/div/div/span") # 在本案中身份

lawsuit_sta_list = []

for i in lawsuit_sta:

lawsuit_sta_list.append(i.xpath("string(.)"))

lawsuit_status = "".join(lawsuit_sta_list) # 在本案中身份

lawsuit_result = tds[4].xpath("div/div/text()")[0] # 裁判结果

lawsuit_result = lawsuit_result.replace('\n', '').replace(' ', '').replace('\r', '')

lawsuit_money = tds[5].xpath("span/text()")[0] # 案件金额

ss_ult.append(

"序号:{}\x01案件名称:{}\x01案由:{}\x01在本案中身份:{}\x01裁判结果:{}\x01案件金额:{}".format(lawsuit_order,

lawsuit_name,

lawsuit_reason,

lawsuit_status,

lawsuit_result,

lawsuit_money))

except Exception as e:

print("公司{}-法律诉讼-信息未能解析。第{}页".format(self.company_id, pg_num), e)

raise Exception("")

else:

break

for one_ult in ss_ult:

print(one_ult, file=self.fp)

def fayuangonggao(self):

# 法院公告

print("法院公告", file=self.fp)

gonggao_ult = []

gonggao_tree = self.tree_html

try:

gonggao_list = gonggao_tree.xpath('//*[@id="_container_court"]/div/table/tbody/tr')

if len(gonggao_list) != 0:

for tr in gonggao_list:

tds = tr.xpath("td")

gg_order = tds[0].xpath("text()")[0]

gg_date = tds[1].xpath("text()")[0] # 刊登日期

gg_num = tds[2].xpath("text()")[0] # 案号

gg_reason = tds[3].xpath("text()")[0] # 案由

e = tds[4].xpath("div")

estr = []

for i in e:

estr.append(i.xpath("string(.)"))

gg_status = "\x01".join(estr) # 案件身份

gg_type = tds[5].xpath("text()")[0] # 公告类型

gg_law = tds[6].xpath("text()")[0] # 法院

gonggao_ult.append("序号:{}\x01刊登日期:{}\x01案号:{}\x01案由:{}\x01案件身份:{}\x01公告类型:{}\x01法院:{}".format(

gg_order, gg_date, gg_num, gg_reason, gg_status, gg_type, gg_law))

# else:

# print("公司{}-法院公告-读取结果为空".format(self.company_id))

except Exception as e:

print("公司{}此条-法院公告-信息未能解析".format(self.company_id), e)

raise Exception("")

for one_ult in gonggao_ult:

print(one_ult, file=self.fp)

def beizhixing(self):

# 被执行人

print("被执行人", file=self.fp)

company_id = self.company_id

for pg_num in range(1, 2):

zhixingren_ult = []

url = 'https://www.tianyancha.com/pagination/zhixing.xhtml?TABLE_DIM_NAME=manageDangerous&ps=10&pn={}&id={}'.format(

pg_num, company_id)

headers = {

'User-Agent': random.choice(self.User_agents), # the Cookie below must be replaced with the Cookie from the logged-in data page on your own machine

'Cookie': self.cookie,

'Connection': 'keep-alive',

'Accept-Encoding': 'gzip, deflate, br',

'Accept-Language': 'zh-CN,zh;q=0.9',

'Host': 'www.tianyancha.com',

'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',

}

if self.ip_proxies:

response = requests.get(url=url, headers=headers, allow_redirects=False,proxies=self.ip_proxies).content.decode()

else:

response = requests.get(url=url, headers=headers, allow_redirects=False).content.decode()

if "抱歉,没有找到相关信息,请更换关键词重试" in response:

break

# print(response)

zhixingren_tree = etree.HTML(response)

try:

zhixingren_list = zhixingren_tree.xpath('//tbody/tr')

except Exception as e:

break

if len(zhixingren_list) != 0:

for tr in zhixingren_list:

try:

tds = tr.xpath("td")

zhixing_order = tds[0].xpath("text()")[0] # 序号

zhixing_date = tds[1].xpath("text()")[0] # 立案日期

zhixing_num = tds[2].xpath("text()")[0] # 案号

zhixing_money = tds[3].xpath("text()")[0] # 执行标的

zhixing_lawer = tds[4].xpath("text()")[0] # 执行法院

zhixingren_ult.append(

"序号:{}\x01立案日期:{}\x01案号:{}\x01执行标的:{}\x01执行法院:{}".format(zhixing_order, zhixing_date,

zhixing_num, zhixing_money,

zhixing_lawer))

except Exception as e:

print("公司{}-被执行人-信息无法解析。第{}页".format(self.company_id, pg_num), e)

raise Exception("")

else:

break

for elm in zhixingren_ult:

print(elm, file=self.fp)

def lian_message(self):

# 立案信息

print("立案信息", file=self.fp)

lian_ult = []

lian_tree = self.tree_html

try:

lian_list = lian_tree.xpath('//*[@id="_container_courtRegister"]/table/tbody/tr')

if len(lian_list) != 0:

for tr in lian_list:

tds = tr.xpath("td")

register_order = tds[0].xpath("text()")[0] # 序号

register_date = tds[1].xpath("text()")[0] # 立案日期

register_num = tds[2].xpath("text()")[0] # 案号

register_sta = tds[3].xpath("div")

register_status = []

for i in register_sta:

register_status.append(i.xpath("string(.)"))

register_status = "\x01".join(register_status) # 案件身份

register_law = tds[4].xpath("text()")[0] # 法院

lian_ult.append(

"序号:{}\x01立案日期:{}\x01案号:{}\x01案件身份:{}\x01法院:{}".format(register_order, register_date,

register_num, register_status,

register_law))

# else:

# print("公司{}-立案信息-读取结果为空".format(self.company_id))

except Exception as e:

print("公司{}此条-立案信息-信息未能解析".format(self.company_id), e)

raise Exception("")

for one_ult in lian_ult:

print(one_ult, file=self.fp)

def xingzheng(self):

# 行政处罚

print("行政处罚", file=self.fp)

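company_id = self.company_id

xingzheng_ult = [] # initialise before the loop so the final write-out never hits an undefined name when the loop breaks early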

for pg_num in range(1, 2):

url = 'https://www.tianyancha.com/pagination/mergePunishCount.xhtml?TABLE_DIM_NAME=manageDangerous&ps=10&pn={}&id={}'.format(

pg_num, company_id)

headers = {

'User-Agent': random.choice(self.User_agents), # the Cookie below must be replaced with the Cookie from the logged-in data page on your own machine

'Cookie': self.cookie,

'Connection': 'keep-alive',

'Accept-Encoding': 'gzip, deflate, br',

'Accept-Language': 'zh-CN,zh;q=0.9',

'Host': 'www.tianyancha.com',

'sec-ch-ua': '"Chromium";v="92", " Not A;Brand";v="99", "Google Chrome";v="92"',

}

if self.ip_proxies:

response = requests.get(url=url, headers=headers, allow_redirects=False,proxies=self.ip_proxies).content.decode()

else:

response = requests.get(url=url, headers=headers, allow_redirects=False).content.decode()

if "抱歉,没有找到相关信息,请更换关键词重试" in response:

break

# print(response)

xingzheng_ult = []

xingzheng_tree = etree.HTML(response)

try:

xingzheng_list = xingzheng_tree.xpath('//tbody/tr')

except Exception as e:

break

if len(xingzheng_list) != 0:

for tr in xingzheng_list:

try:

tds = tr.xpath("td")

penalty_order = tds[0].xpath("text()")[0] # 序号

penalty_date = tds[1].xpath("text()")[0] # 处罚日期

penalty_books = tds[2].xpath("div/text()")[0] # 决定文书号

penalty_reason = tds[3].xpath("div/div/text()")[0] # 处罚事由

penalty_result = tds[4].xpath("div/div/text()")[0] # 处罚结果

penalty_unit = tds[5].xpath("text()")[0] # 处罚单位

penalty_source = tds[6].xpath("span/text()")[0] # 数据来源

# print(penalty_date,penalty_books,penalty_reason,penalty_result,penalty_unit,penalty_source)

xingzheng_ult.append(

"序号:{}\x01处罚日期:{}\x01决定文书号:{}\x01处罚事由:{}\x01处罚结果:{}\x01处罚单位:{}\x01数据来源:{}".format(

penalty_order, penalty_date, penalty_books, penalty_reason, penalty_result,

penalty_unit, penalty_source))

except Exception as e:

print("公司{}-行政处罚-无法解析。第{}页".format(self.company_id, pg_num), e)

raise Exception("")

else:

break

for elm in xingzheng_ult:

print(elm, file=self.fp)

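def body_run(self):

self.get_start_crawl() # basic information

self.kaiting() # court hearing announcements

self.lawsuitwhm() # lawsuits

self.fayuangonggao() # court announcements

self.beizhixing() # judgment debtors (parties subject to enforcement)

self.lian_message() # case filing information

self.xingzheng() # administrative penalties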

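def change_ip():
    # The URL below is the proxy-IP API address I requested from 天启HTTP (tianqiip).
    # The IP quota on my account is used up, so register your own account; sign-up
    # gives you some coins that can buy IPs with different lifetimes. Paste your own
    # API link in place of this one.
    url = "http://api.tianqiip.com/getip?secret=******&type=txt&num=1&time={}&port=2".format(3)  # lifetime 3 seconds; 3, 5, 10 or 15 are accepted
    response = requests.get(url).content.decode()
    res_ip = response.strip()
    return res_ip  # the new proxy address, as "ip:port"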

if __name__ == '__main__':
    start_time = time.time()  # overall start time

    # Sample company IDs
    # company_list = ["500674557", "844565574", "2319114574", "2317302446", "789235759", "2964355333"]
    # company_list = ["500674557"]
    with open("zSD_company_id.txt", "r", encoding="utf-8") as f:
        content = f.readlines()
    var_list = [car_id.strip() for car_id in content]
    company_list = list(dict.fromkeys(var_list))  # de-duplicate while keeping the original order
    print(len(company_list), company_list)

    company_index = 0  # position in the ID list
    now_index = 0      # number of companies actually requested in this run

    # Cookie value of a logged-in session; paste the one from your own browser here
    cookie = "<Cookie copied from your logged-in Tianyancha session>"

    for company_id in company_list:
        company_index += 1  # running count
        path = "TY_{}.txt".format(company_id)
        if os.path.isfile(path) and os.path.getsize(path) == 0:  # an empty file is left over from a failed run: delete it
            os.remove(path)
        if os.path.isfile(path):  # this company has already been crawled
            print("{} pass".format(path))
            continue

        start2_time = time.time()
        try:
            now_index += 1
            new_ip = None  # do not use a proxy IP
            # new_ip = change_ip()  # use a proxy IP
            print(new_ip)
            # Mode "w" creates the file, or truncates it if it already exists
            with open(path, "w", encoding="utf-8") as fp:
                ty = TianYan(company_id, fp, cookie, new_ip)
                ty.body_run()
            print("No. {} - company #{} - {} - successful! it cost time:{}".format(
                now_index, company_index, company_id, time.time() - start2_time))
            time.sleep(10 + random.random() * 10)  # random pause of 10 to 20 seconds
        except Exception as e:
            # This company's crawl failed: delete the unfinished txt so it is retried on the next run
            if os.path.isfile(path):
                os.remove(path)
            print("Company #{} - {} - some information could not be read, time:{}".format(
                company_index, company_id, time.time() - start_time), e)

    print("{}-files cost time:{}".format(len(company_list), time.time() - start_time))
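The constructor above splits the string returned by `change_ip()` on `:` and trusts whatever comes back. As a rough sketch only, the helper below validates the `ip:port` text before building the `proxies` mapping that `requests` expects. `build_proxies` is a hypothetical name, the error text in the demo is made up, and the `http://` proxy scheme is an assumption about the provider; whether it resolves the `ProxyError` described earlier has not been verified here.

```python
def build_proxies(ip_port, scheme="http"):
    """Turn an "ip:port" string into a requests-style proxies dict.

    Raises ValueError when the text is not host:port, for example when the
    proxy API answered with an error message instead of an address.
    """
    host, sep, port = ip_port.strip().partition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError("expected 'host:port', got {!r}".format(ip_port))
    proxy_url = "{}://{}:{}".format(scheme, host, port)
    # The same proxy is used for both plain and TLS targets.
    return {"http": proxy_url, "https": proxy_url}


print(build_proxies("1.2.3.4:8080\n"))

try:
    # Made-up example of an API error body arriving instead of an address.
    build_proxies('{"code":1010,"msg":"quota exhausted"}')
except ValueError as exc:
    print("rejected:", exc)
```

With a helper like this, a request could be written as `requests.get(url, headers=headers, proxies=build_proxies(change_ip()))`, and a bad API response fails early with a readable message instead of surfacing later as a connection error.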

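That's all for now; grinding through this one was exhausting. See you next time!

Baidu Netdisk link for the zSD_company_id.txt file used in the code:

https://pan.baidu.com/s/1zDA06jJUTG7JEU1RMv03Kg extraction code: osdu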