1. ASCII (one byte per character) is a subset of UTF-8 (variable length, 1 to 4 bytes per character).
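A quick way to check the byte counts is to encode a few characters and measure the result. This is only a small sketch; the sample characters are chosen for illustration and are not from the course material.

```python
# Compare how many bytes each character takes when encoded as UTF-8.
samples = ["A", "é", "中", "😀"]
byte_lengths = {ch: len(ch.encode("utf-8")) for ch in samples}
print(byte_lengths)

# Pure-ASCII text produces identical bytes under both encodings.
ascii_text = "hello"
same_bytes = ascii_text.encode("ascii") == ascii_text.encode("utf-8")
print(same_bytes)
```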

2. After opening a URL with urllib, you can work with the response just like an ordinary file.

***Remember to call decode(): the response yields bytes, not str.
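The two notes above can be sketched roughly as follows. The helper names `decoded_lines` and `print_remote_lines` are made up for this example, and an in-memory `io.BytesIO` stands in for a real response so the snippet runs offline.

```python
import io
import urllib.request


def decoded_lines(handle):
    """Yield each line of a binary file-like object as stripped text."""
    for raw in handle:
        # Each line arrives as bytes; decode() defaults to UTF-8.
        yield raw.decode().strip()


def print_remote_lines(url):
    """Open a URL and print its lines, just like reading a local file."""
    with urllib.request.urlopen(url) as fhand:
        for line in decoded_lines(fhand):
            print(line)


# Offline stand-in for a response: BytesIO also iterates over bytes lines.
fake_response = io.BytesIO("first line\nsecond 行\n".encode("utf-8"))
lines = list(decoded_lines(fake_response))
print(lines)
```

Calling `print_remote_lines` with a real URL needs a network connection, so it is defined here but not called.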

3. Homework 3: PY4E - Python for Everybody

Problem: In this assignment you will write a Python program that expands on http://www.py4e.com/code3/urllinks.py. The program will use urllib to read the HTML from the data files below, extract the href= values from the anchor tags, scan for a tag that is in a particular position relative to the first name in the list, follow that link and repeat the process a number of times and report the last name you find.

Using re to extract the URL ended in a timeout.

Collecting all anchor tags from soup returns a list-like result, so tags[num] accesses item num directly (indices start at 0)!
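The indexing idea and the follow-and-repeat loop can be tried without a network connection. The sketch below swaps in the standard library's `html.parser` for BeautifulSoup so it runs without extra installs. `AnchorCollector`, `link_at`, `follow_links`, the `fetch` parameter and the three tiny pages are all hypothetical names and data made up for illustration.

```python
from html.parser import HTMLParser


class AnchorCollector(HTMLParser):
    """Collect the href of every <a> tag, in document order."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:
                self.hrefs.append(href)


def link_at(html, position):
    """Return the href at a 1-based position among the page's links."""
    collector = AnchorCollector()
    collector.feed(html)
    return collector.hrefs[position - 1]  # e.g. position 18 -> index 17


def follow_links(start_url, position, count, fetch):
    """Follow the link at `position` `count` times; return the final URL.

    `fetch` is a hypothetical callable mapping a URL to its HTML text.
    """
    url = start_url
    for _ in range(count):
        url = link_at(fetch(url), position)
    return url


# Tiny in-memory "web" so the sketch runs offline.
pages = {
    "a.html": '<a href="x.html">X</a><a href="b.html">B</a>',
    "b.html": '<a href="y.html">Y</a><a href="c.html">C</a>',
    "c.html": '<a href="z.html">Z</a><a href="a.html">A</a>',
}
final_url = follow_links("a.html", position=2, count=2, fetch=pages.get)
print(final_url)
```

Passing the page source in through `fetch` keeps the position-to-index conversion separate from the downloading, so the logic can be checked before it is pointed at real pages.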

    # To run this, download the BeautifulSoup zip file
    # http://www.py4e.com/code3/bs4.zip
    # and unzip it in the same directory as this file

    import urllib.request, urllib.parse, urllib.error
    from bs4 import BeautifulSoup
    import ssl
    import re

    # Ignore SSL certificate errors
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE

    count = 7  # number of times to follow a link
    url = 'http://py4e-data.dr-chuck.net/known_by_Kayam.html'

    for i in range(count):
        # Fetch the current page and parse it
        html = urllib.request.urlopen(url, context=ctx).read()
        soup = BeautifulSoup(html, 'html.parser')
        # Collect every anchor tag on the page
        tags = soup('a')
        # Earlier attempt using re, left disabled
        #url = re.findall('h.*html',tags[2].get('href', None))
        # Follow the link at index 17 (the 18th link on the page)
        url = tags[17].get('href', None)
        print(url)

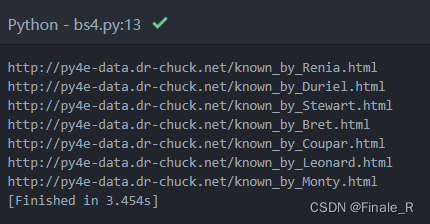

Output:

*Monty is the correct answer for my data (it is different for each student)