import os
import re

import requests
from bs4 import BeautifulSoup

# The path is case-sensitive
path = '//nas/LargeSave/高清图像数据/httpswww.pexels.comzh-tw/'

def get_image(page, count):
    # Request headers; the User-Agent is required
    headers = {
        'Accept': 'text/javascript, application/javascript, application/ecmascript, application/x-ecmascript, */*; q=0.01',
        'Accept-Language': 'zh-CN,zh;q=0.9',
        'Referer': 'https://www.pexels.com/zh-tw/',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/99.0.4844.51 Safari/537.36 Edg/99.0.1150.39',
        'Cookie': '_ga=GA1.2.953246023.1646290358; _hjSessionUser_171201=eyJpZCI6ImI4NzRmMjRiLWRlMjQtNWZlMi1hNjRkLWUyMDA2MjRmODFlYiIsImNyZWF0ZWQiOjE2NDYyOTA5MDI2NTcsImV4aXN0aW5nIjp0cnVlfQ==; locale=zh-TW; NEXT_LOCALE=zh-TW; _gid=GA1.2.572006961.1647307445; ab.storage.sessionId.5791d6db-4410-4ace-8814-12c903a548ba=%7B%22g%22%3A%223c77dd5a-d80d-5514-6e89-a515667aaba1%22%2C%22e%22%3A1647336874054%2C%22c%22%3A1647335074055%2C%22l%22%3A1647335074055%7D; ab.storage.deviceId.5791d6db-4410-4ace-8814-12c903a548ba=%7B%22g%22%3A%22b43b9c98-d28a-dfdb-1c65-c0f58a7b3689%22%2C%22c%22%3A1646290357434%2C%22l%22%3A1647335074056%7D; _gat=1; __cf_bm=MtqVbgDKWWQPM0LD5MJ2yjHVLCK1HddcW169X4OgWPQ-1647335087-0-AZcu7uQ0AlxdR8R5ANaYX7c9fCDbKBKL4XGoxTSgYCC0zon2SKUWtTvwSNBk4TyzHLrRW0TFq2RYZY42JOW2sbMjYFx3eMvD2mZc4zNwlDH9HAaGN9/HdaiOuvGR0+ClhwtVkqHRgkLtH4jt2DpznUh7yzGGojmH8CG5MBNuWrny',
    }
    proxies = {'http': 'http://127.0.0.1:18888', 'https': 'http://127.0.0.1:18888'}
    # Loop rather than recurse, so a long crawl cannot hit Python's recursion limit
    while True:
        url = f'https://www.pexels.com/zh-tw/?format=js&seed={page}&type='
        # verify=False is required as well
        res = requests.get(url, headers=headers, proxies=proxies, verify=False)
        text = res.text
        # Get img_url
        soup = BeautifulSoup(res.content, 'html.parser')
        body = soup.find('img')
        img_label = body['data-big-src']
        pattern = re.compile(r'\d+')  # regular expression: find the digits in the string
        img_id = pattern.findall(img_label)[0]
        img_url = f'https://images.pexels.com/photos/{img_id}/pexels-photo-{img_id}.jpeg'
        print(img_url)
        # Create the folder
        document_path = path + str(count)
        os.makedirs(document_path, exist_ok=True)
        pic_path = document_path + '/' + str(count) + '.jpg'  # '/' is used here to build the path
        if not os.path.exists(pic_path):
            byte = requests.get(img_url).content
            with open(pic_path, 'wb') as fp:
                fp.write(byte)
        count += 1
        # Get the next seed; stop when the response no longer contains one
        matches = re.findall(r"seed=.+?\\", text)
        if not matches:
            break
        page = matches[0].strip('\\')[5:]  # drop the trailing backslash and the leading 'seed='
        print(page)

if __name__ == '__main__':
    page = '2022-03-14%2010%3A41%3A43%20UTC'
    count = 0
    get_image(page, count)
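The starting seed in the script looks like a percent-encoded timestamp. Assuming that reading is right (it is inferred from the value itself, not from any Pexels documentation), a minimal sketch of how such a seed could be built and decoded with the standard library follows. The helper name encode_seed is hypothetical.

```python
from urllib.parse import quote, unquote


def encode_seed(timestamp: str) -> str:
    """Percent-encode a timestamp string for use as the seed query parameter.

    Hypothetical helper: it assumes the seed is simply a URL-encoded timestamp.
    """
    # safe='' so that every reserved character, including ':' and ' ', is encoded
    return quote(timestamp, safe='')


seed = encode_seed('2022-03-14 10:41:43 UTC')
print(seed)           # the encoded form used in the request URL
print(unquote(seed))  # back to the readable timestamp
```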

The site being scraped is still Pexels (免費圖庫相片 · Pexels).

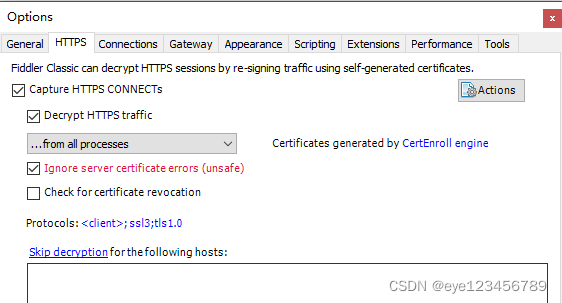

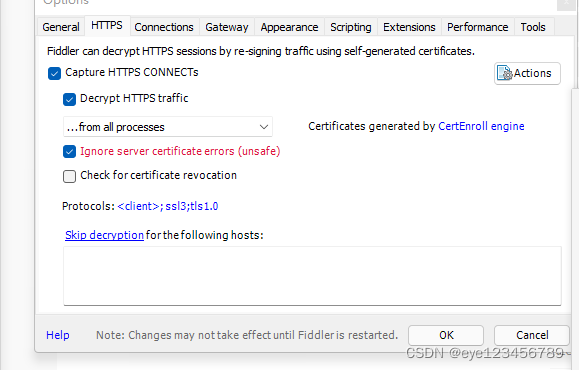

I downloaded Fiddler.

Then install its HTTPS certificate.

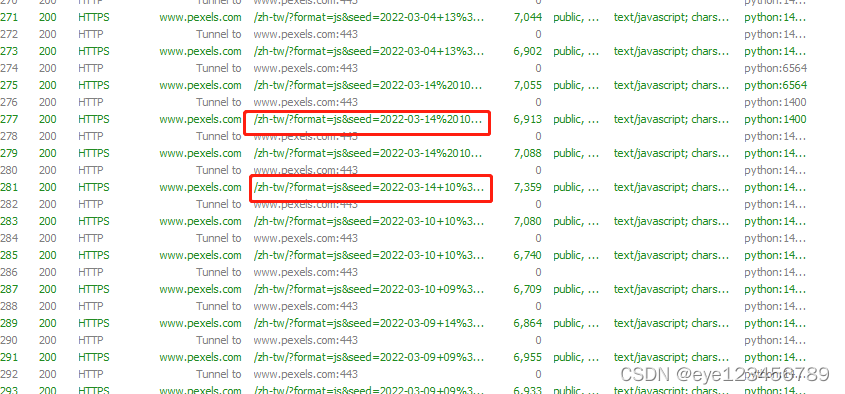

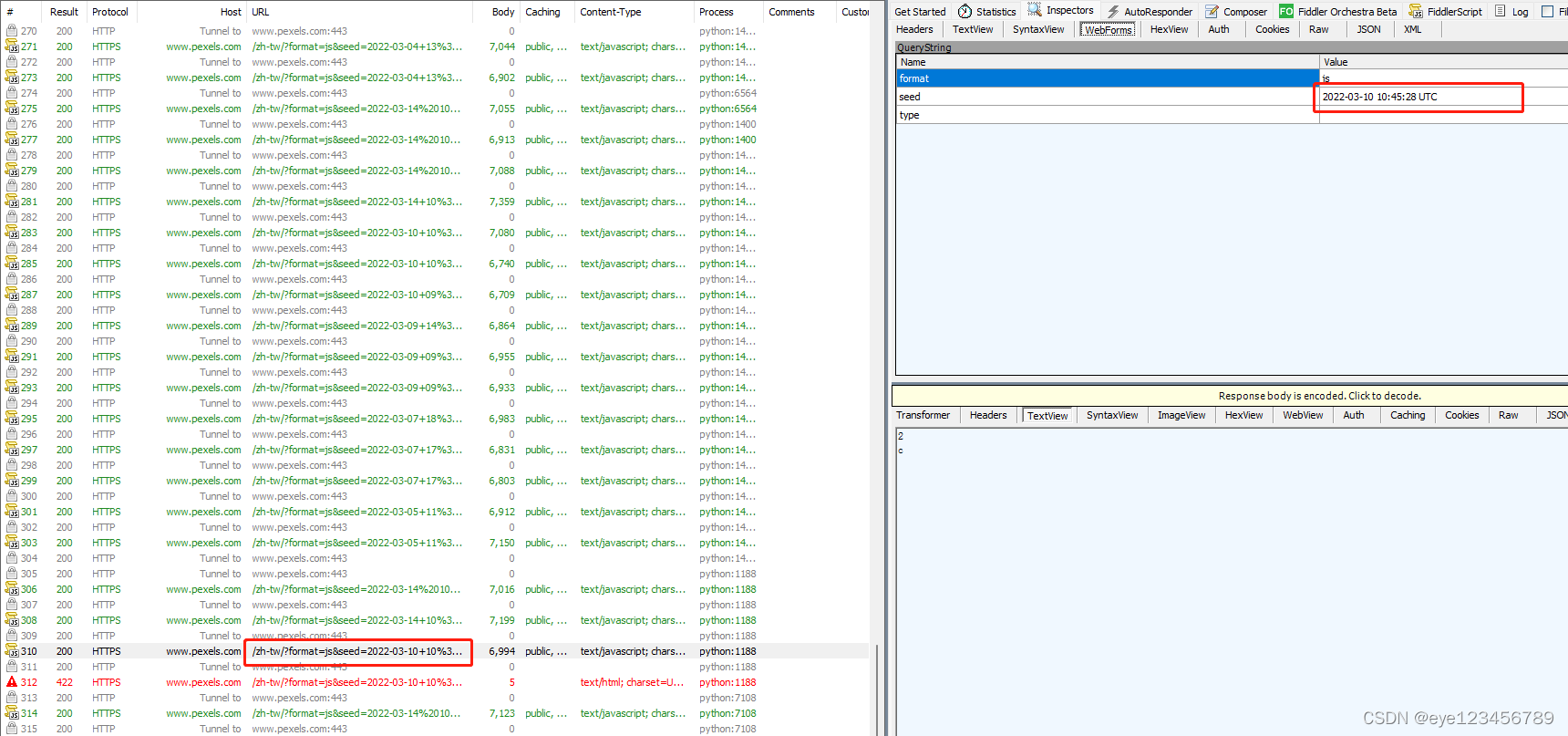

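To inspect a request and its response, click Inspectors; to see the request parameters, click WebForms. To work out which requests carry the images to scrape, note that (as a guess from experience) such content is usually loaded via AJAX, so the responses tend to come back as JavaScript. That points to the requests with format=js, visible in the figures below.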


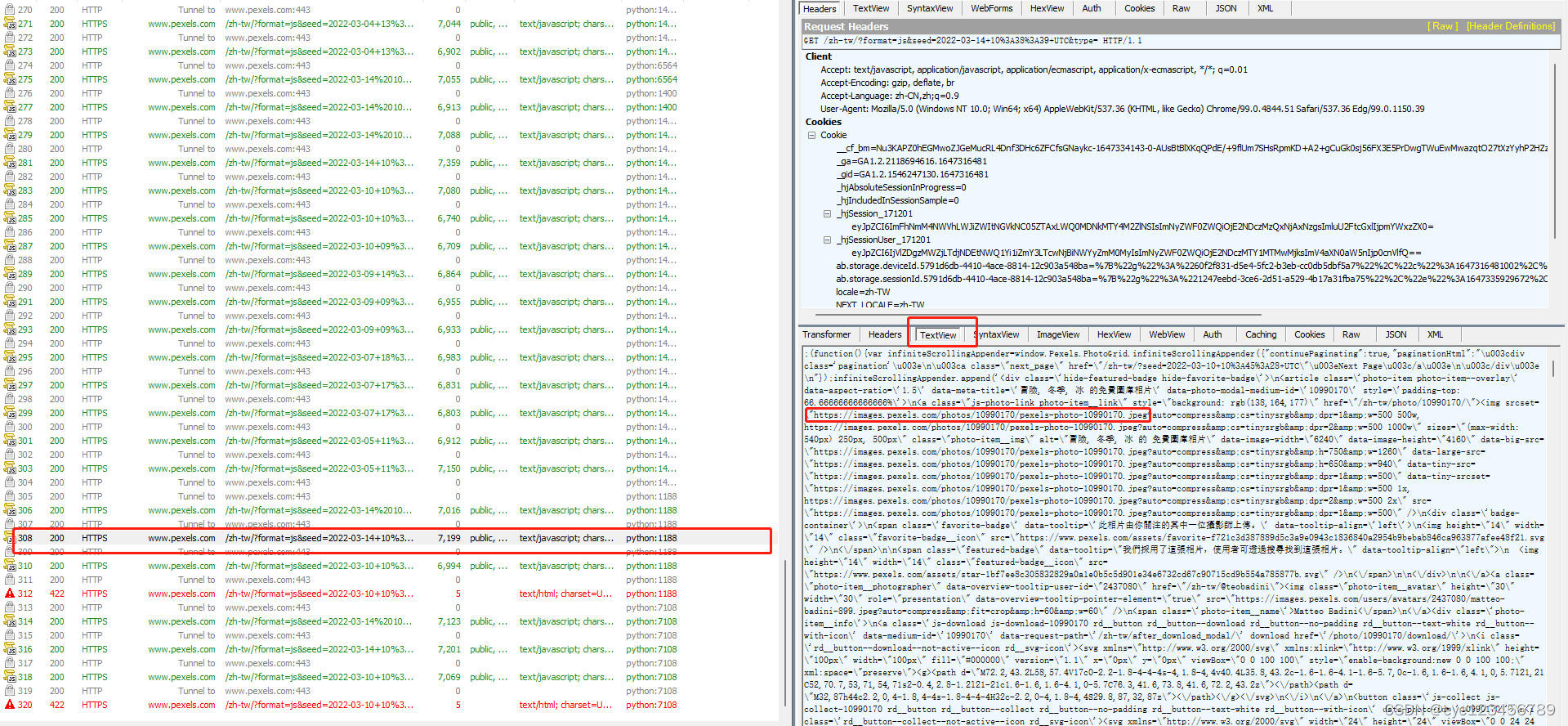

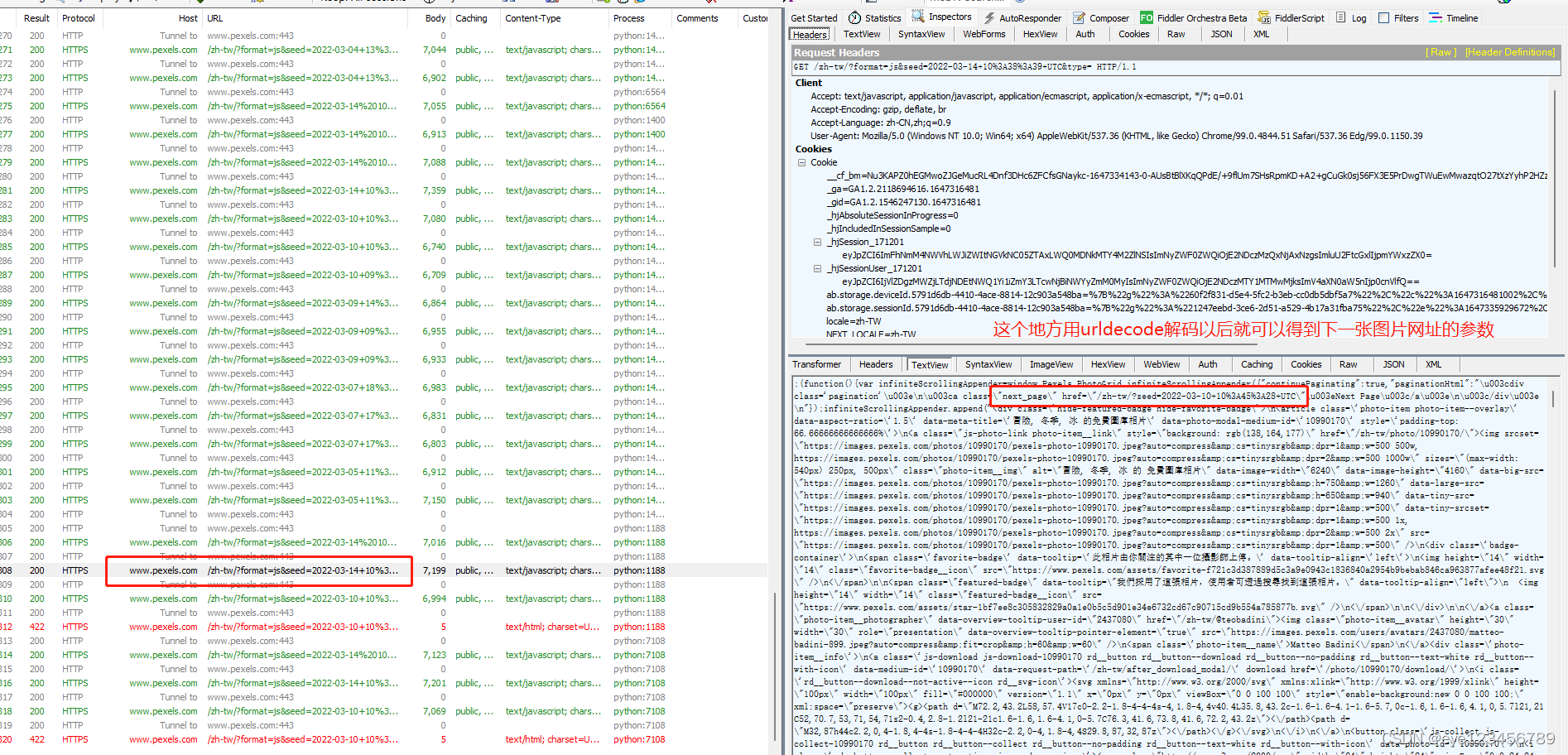
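Once the captured URLs are copied out of the session list, the same filtering can be done in code. This is only a sketch: the list of captured URLs is made up for illustration, and is_js_request is a hypothetical helper name.

```python
from urllib.parse import parse_qs, urlsplit


def is_js_request(url: str) -> bool:
    """Return True when the URL's query string contains format=js."""
    query = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return query.get('format') == ['js']


# Illustrative URLs only; a real capture would contain many more entries
captured = [
    'https://www.pexels.com/zh-tw/',
    'https://www.pexels.com/zh-tw/?format=js&seed=2022-03-14%2010%3A41%3A43%20UTC&type=',
    'https://images.pexels.com/photos/123/pexels-photo-123.jpeg',
]

candidates = [u for u in captured if is_js_request(u)]
print(candidates)
```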

Then open those requests one by one and go through the views; the img URL we are looking for shows up in TextView.
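The script turns that img URL into a full-size download link by pulling out the first run of digits and treating it as the photo id. A small standalone sketch of that step follows; the sample attribute value is made up, and full_size_url is a hypothetical helper name.

```python
import re


def full_size_url(data_big_src: str):
    """Build the full-size image URL from a data-big-src attribute value.

    Assumes, as the script does, that the first run of digits is the photo id.
    Returns None when the value contains no digits.
    """
    match = re.search(r'\d+', data_big_src)
    if match is None:
        return None
    img_id = match.group()
    return f'https://images.pexels.com/photos/{img_id}/pexels-photo-{img_id}.jpeg'


# Made-up attribute value in the shape seen in TextView
sample = 'https://images.pexels.com/photos/1234567/pexels-photo-1234567.jpeg?auto=compress&w=1200'
print(full_size_url(sample))
```

Returning None instead of indexing into an empty list keeps a single malformed response from crashing the whole crawl.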

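As for the pattern behind the next page's URL: the response text itself contains the seed= value for the next request, so the script extracts it with a regular expression and requests the same format=js URL with the new seed.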
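The seed extraction can be tried on its own. The sketch below uses the same pattern as the script, with a capture group so that no slicing is needed. The sample response fragment is invented, on the assumption that the HTML sits inside a JavaScript string with backslash-escaped quotes; next_seed is a hypothetical helper name.

```python
import re


def next_seed(js_text: str):
    """Return the seed value that follows 'seed=' up to the first backslash, or None."""
    match = re.search(r'seed=(.+?)\\', js_text)
    return match.group(1) if match else None


# Invented fragment: HTML embedded in a JavaScript string, so quotes appear as \"
sample = r'<a href=\"/zh-tw/?format=js&amp;seed=2022-03-14%2010%3A45%3A00%20UTC\">'
print(next_seed(sample))
```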
